Comparison of Human Intestinal Parasite Ova Segmentation Using Machine Learning and Deep Learning Techniques

Abstract

1. Introduction

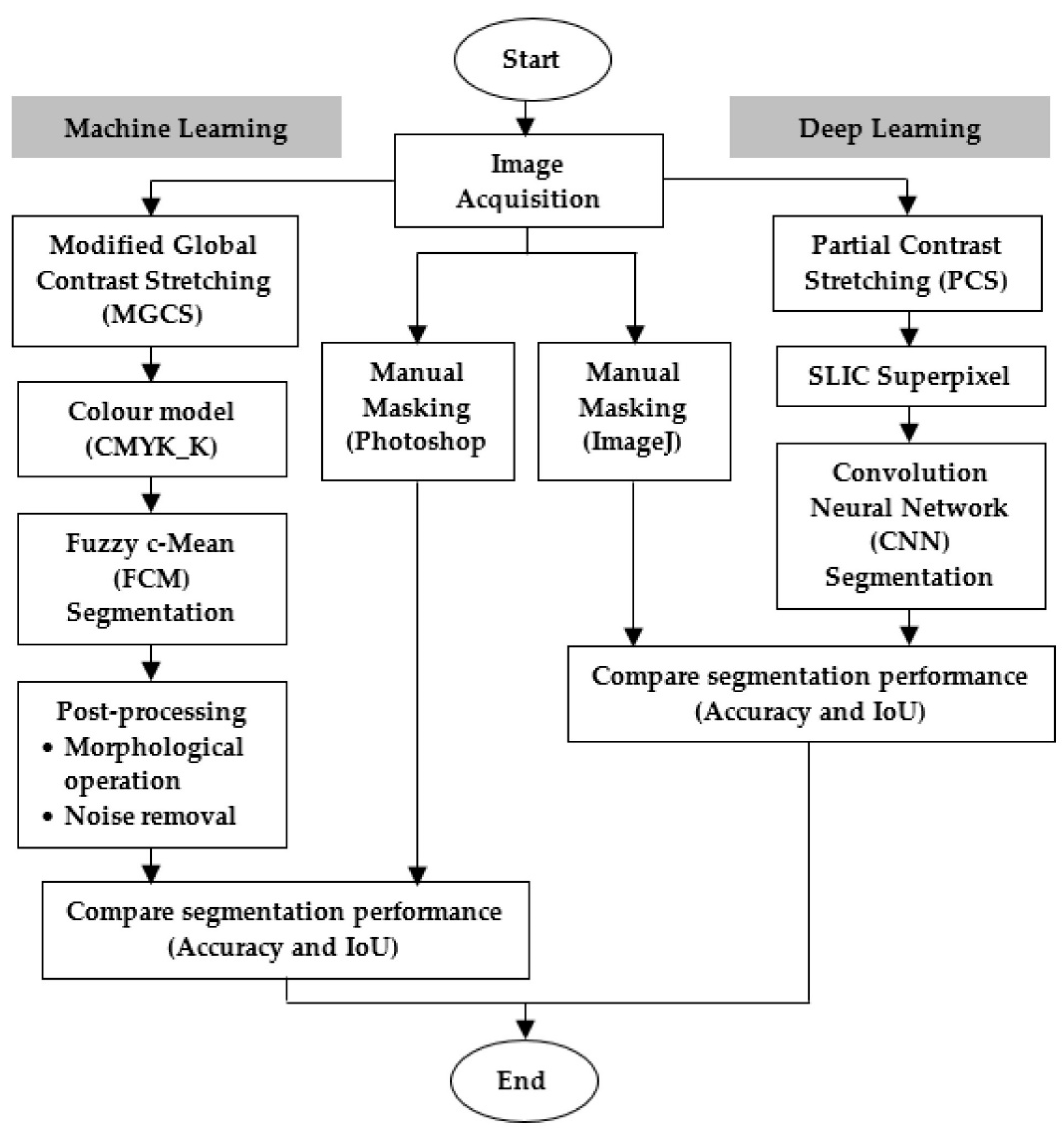

2. Materials and Methods

2.1. Image Acquisition

2.2. Machine Learning Segmentation Approach

2.2.1. Modified Global Contrast Stretching (MGCS)

2.2.2. Color Model

2.2.3. Fuzzy c-Mean (FCM) Segmentation

2.2.4. Post-Processing

2.2.5. Segmentation Performance

2.3. Deep Learning Segmentation Approach

2.3.1. Partial Contrast Stretching (PCS)

2.3.2. Simple Linear Iterative Clustering (SLIC) Superpixel

2.3.3. CNN Semantic Segmentation

2.3.4. Segmentation Performance

3. Results and Discussion

3.1. Machine Learning Segmentation

3.2. Deep Learning Segmentation

3.3. Segmentation Performances

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Image Illumination | Normal Image | Over-Exposed Image | Under-Exposed Image |
|---|---|---|---|
| Original Image |  |  |  |
| MGCS Image |  |  |  |

| Image Illumination | Normal Image | Over-Exposed Image | Under-Exposed Image |
|---|---|---|---|
| Original Image |  |  |  |
| PCS Image |  |  |  |

| Helminth Ova Species | Segmentation Approach | Technique | Accuracy (%) | IoU (%) |
|---|---|---|---|---|
| ALO | Machine learning segmentation | FCM | 98.54 | 59.82 |
| ALO | Deep learning segmentation | VGG-16 | 99.28 | 75.69 |
| ALO | Deep learning segmentation | ResNet-18 | 99.22 | 69.82 |
| ALO | Deep learning segmentation | ResNet-34 | 99.30 | 75.80 |
| EVO | Machine learning segmentation | FCM | 97.94 | 40.81 |
| EVO | Deep learning segmentation | VGG-16 | 98.89 | 49.29 |
| EVO | Deep learning segmentation | ResNet-18 | 98.80 | 54.36 |
| EVO | Deep learning segmentation | ResNet-34 | 98.86 | 55.48 |
| HWO | Machine learning segmentation | FCM | 99.48 | 73.25 |
| HWO | Deep learning segmentation | VGG-16 | 99.16 | 62.68 |
| HWO | Deep learning segmentation | ResNet-18 | 99.20 | 66.32 |
| HWO | Deep learning segmentation | ResNet-34 | 99.11 | 61.87 |
| TTO | Machine learning segmentation | FCM | 98.75 | 51.58 |
| TTO | Deep learning segmentation | VGG-16 | 99.72 | 75.09 |
| TTO | Deep learning segmentation | ResNet-18 | 99.69 | 77.06 |
| TTO | Deep learning segmentation | ResNet-34 | 99.68 | 74.33 |
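
The accuracy and IoU columns above can diverge sharply: accuracy stays near 98 to 99% while IoU ranges from about 41% to 77%. A minimal sketch of how the two metrics are commonly computed from binary masks may help explain the gap. It assumes the usual pixel-wise definitions (accuracy = (TP + TN) / all pixels, IoU = TP / (TP + FP + FN)); the exact formulas used by the authors are not shown in this section, and the names `confusion_counts`, `pixel_accuracy`, `iou`, and `make_mask` are hypothetical helpers introduced only for illustration.

```python
from typing import Sequence, Tuple

# A 2-D binary mask: 1 = ovum pixel, 0 = background (assumed encoding).
Mask = Sequence[Sequence[int]]


def confusion_counts(truth: Mask, pred: Mask) -> Tuple[int, int, int, int]:
    """Count TP, FP, FN, TN over all pixels of two same-sized masks."""
    tp = fp = fn = tn = 0
    for truth_row, pred_row in zip(truth, pred):
        for t, p in zip(truth_row, pred_row):
            if t and p:
                tp += 1
            elif p:
                fp += 1
            elif t:
                fn += 1
            else:
                tn += 1
    return tp, fp, fn, tn


def pixel_accuracy(truth: Mask, pred: Mask) -> float:
    """Percentage of pixels labelled correctly (object or background)."""
    tp, fp, fn, tn = confusion_counts(truth, pred)
    return 100.0 * (tp + tn) / (tp + fp + fn + tn)


def iou(truth: Mask, pred: Mask) -> float:
    """Intersection over union of the object class, in percent."""
    tp, fp, fn, _ = confusion_counts(truth, pred)
    union = tp + fp + fn
    # Two empty masks agree perfectly; treat that case as 100%.
    return 100.0 * tp / union if union else 100.0


def make_mask(size: int, top: int, left: int, side: int) -> list:
    """Build a size x size mask containing one filled square (toy data)."""
    return [
        [1 if top <= r < top + side and left <= c < left + side else 0
         for c in range(size)]
        for r in range(size)
    ]


# Toy example: a 2x2 object, predicted one pixel too far to the right.
truth = make_mask(10, top=4, left=4, side=2)
pred = make_mask(10, top=4, left=5, side=2)

print(f"accuracy = {pixel_accuracy(truth, pred):.2f}%")
print(f"IoU      = {iou(truth, pred):.2f}%")
```

In this toy case the object covers only 4 of 100 pixels, so the many correctly labelled background pixels keep accuracy high even though half of the predicted object is misplaced. IoU ignores true negatives, which is why it is the more discriminating of the two columns when ova occupy a small part of the image.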

| Helminth Ova Species | Ground Truth | Segmentation Image | Accuracy (%) | IoU (%) |
|---|---|---|---|---|
| ALO |  |  | 99.30 | 88.54 |
| EVO |  |  | 93.35 | 70.37 |
| HWO |  |  | 98.28 | 83.41 |
| TTO |  |  | 96.50 | 67.84 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Lim, C.C.; Khairudin, N.A.A.; Loke, S.W.; Nasir, A.S.A.; Chong, Y.F.; Mohamed, Z. Comparison of Human Intestinal Parasite Ova Segmentation Using Machine Learning and Deep Learning Techniques. Appl. Sci. 2022, 12, 7542. https://doi.org/10.3390/app12157542
