1. Introduction

Advances in machine tool technology make it possible to produce parts with tolerances as fine as ±0.0025 mm. Precision machining therefore plays a key role in fabricating many of the critical tight-tolerance components used today in the military, electronics, aerospace, and medical industries. However, the success of precision machining still depends on the appropriate choice of machining parameters. In the past, the optimal processing parameters were determined mainly by operator experience or through trial and error. Such an approach is not only time-consuming, expensive, and subjective, but also offers no guarantee of finding the optimal solution. Furthermore, the knowledge gained in this way is not easily documented and shared with others. Thus, to achieve high efficiency, high precision, and low cost, precision machining requires faster and more systematic approaches for identifying the optimal processing parameters.

Computer numerical control (CNC) machines are widely used throughout the manufacturing industry. CNC turning machines have many advantages for mass production, including high speed, high precision, excellent repeatability, minimal manual intervention, and good potential for automation.

The cutting behavior of turning is determined by two components: the toolpath and the feed rate [

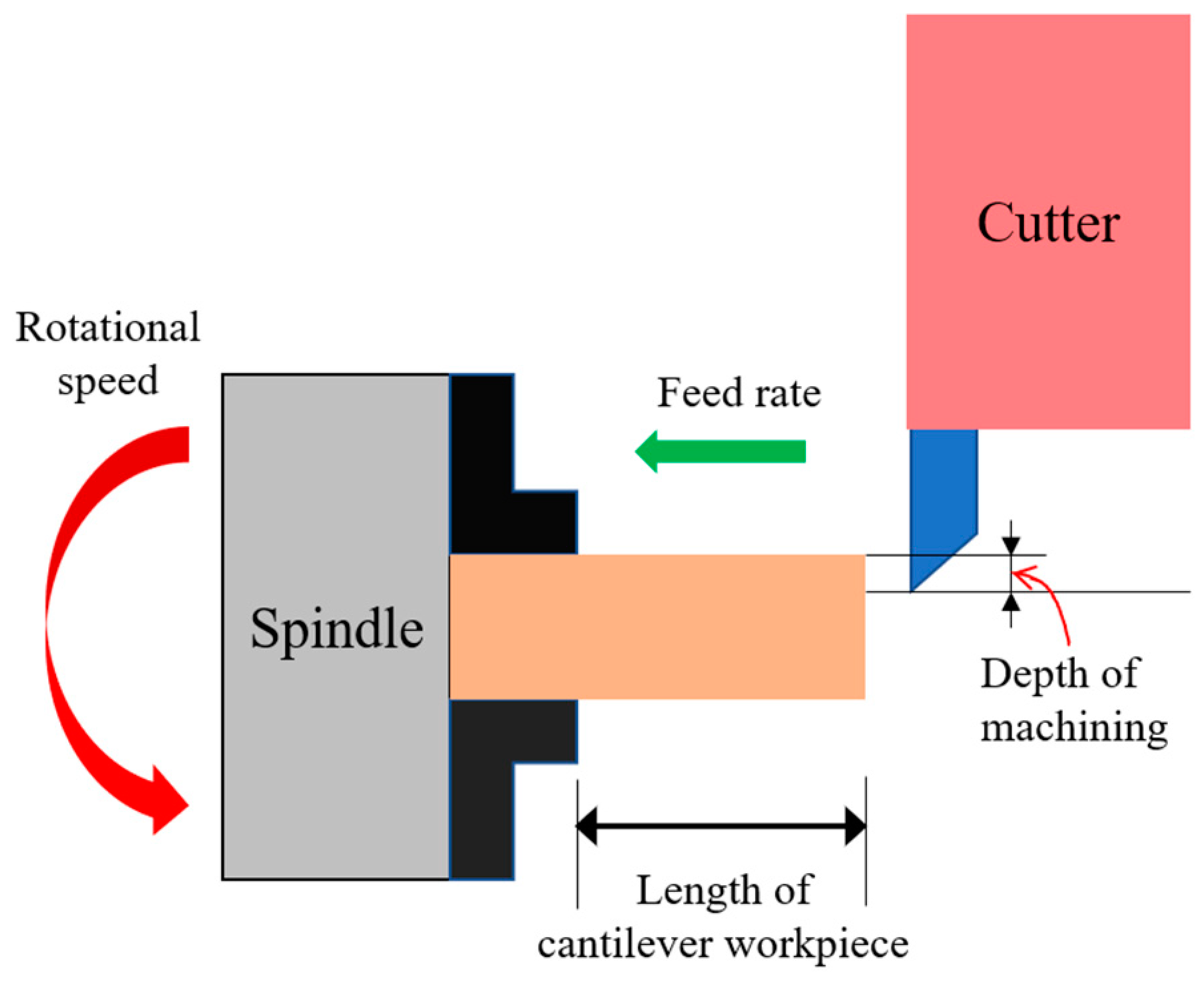

1]. The “toolpath” is the trace on the workpiece that the cutter moves along, while the “feed rate” is the speed at which the cutter moves over the surface of the workpiece. When cutting metals, the machining quality is affected by two main types of factors, namely human and environmental. In most cases, the environmental factors, e.g., the age and condition of the machine, the machining temperature, the workpiece-material properties, and so on, are difficult to control. Accordingly, in attempting to improve the quality of the CNC machining process, many studies have focused on the problem of controlling the human factors, in particular, the settings assigned by the operator or designer of the machining parameters, such as the machining depth, rotational speed, feed rate, and cantilever cutting length. The machining depth has a direct effect on the cutting force exerted on the cutter. An excessive force may cause vibration of the cutter, which not only causes tool wear, but also significantly decreases the machining quality. The rotational speed and feed rate govern the speed at which the cutter moves over the workpiece. In general, a higher rotational speed and/or feed rate increases the rate at which the material is removed from the workpiece. However, an excessive speed may increase the cutting force, while a low speed increases the contact time between the cutter and the workpiece, and may thus result in the production of scratches. Finally, an excessive cantilever cutting length reduces the stability of the cutter at the contact point between the tip and the workpiece, and may therefore degrade the precision of the machining process. Hence, it is essential that all four machining parameters be optimized in order to ensure the quality and precision of the turning process.

Su et al. [2] presented a multi-objective optimization framework based on the robust Taguchi experimental design method, grey relational analysis (GRA), and response surface methodology (RSM) for determining the optimal cutting parameters in the turning of AISI 304 austenitic stainless steel. With the optimal turning parameters, the surface roughness (Ra) was reduced by 66.90%, the material removal rate (MRR) was increased by 8.82%, and the specific energy consumption (SEC) of the turning process was reduced by 81.46%. Akkus and Yaka [3] conducted a series of experiments to determine the optimal cutting speed, feed rate, and depth of cut for the turning of Ti6Al4V titanium alloy, evaluating the turning parameters by their effects on surface roughness, vibration energy, and energy consumption. The results revealed that the machining outcome was governed mainly by the feed rate. Zhou He et al. [4] proposed a gradient boosting regression tree model tuned by a genetic algorithm (GA-GBRT), which used the cutting speed, feed rate, and depth of cut as inputs to predict the surface roughness in milling, and validated it through cutting experiments on AISI 304 stainless steel. The results showed that the GA-GBRT model could identify the processing parameters giving the highest efficiency and the best quality. Wu et al. [5] used an Elman neural network to predict the surface roughness in the milling of Inconel 718 from the cutting conditions and the vibration and current signals measured during cutting, and then applied the particle swarm optimization (PSO) algorithm to optimize the cutting parameters with the aim of maximizing the cutting efficiency. Sivalingam et al. [6] turned Hastelloy X with PVD Ti-Al-N-coated tools in different machining environments and evaluated the cutting performance in terms of the cutting force, surface roughness, and cutting temperature under different cutting conditions. They found that the moth-flame optimization (MFO) algorithm could be combined with multiple linear regression models (MLRM) to select the best processing parameters.

With the rise of artificial intelligence, this paper uses machine learning methods to predict the turning machining error. Before a machine learning model can be trained, a database of machining parameters and the corresponding machining errors must be established. Because the number of possible combinations of processing parameters is very large, efficient data collection is important. This paper therefore uses the Taguchi method to design the experiments, since its orthogonal arrays cover the parameter space in a balanced way with a small number of experimental runs.

Machine learning [7] has proven effective in solving many problems that either require extensive experimentation to obtain reliable results or involve volumes of data too large to be analyzed manually. Supervised machine learning models use a labelled dataset to learn the mapping function that most accurately describes the relationship between the input variables and the output (or outputs). Once trained, the model is evaluated on a separate testing dataset, for which it predicts the outputs. Such models have been widely applied to face detection, stock price prediction, spam detection, risk assessment, and so on. They have also been used to solve various problems in smart manufacturing. For example, Lu et al. [8] used the group method of data handling (GMDH) model to predict the surface roughness of LF21 antennas produced by micro-milling. The spindle speed and feed rate were used as the model inputs, and the surface roughness was the output. The model achieved an average relative error of 13.92% and a maximum relative error of 21.22%, and the prediction results were then used to identify the best cutting parameters. Chen et al. [9] used a back-propagation neural network (BPNN) to predict the surface roughness of a milled workpiece, with the depth of cut, feed rate, spindle speed, and milling distance as inputs. The model achieved a root-mean-square error (RMSE) of 0.008, and an analysis of the influence of the individual parameters showed that the feed rate had the greatest effect on the surface roughness. Benardos and Vosniakos [10] used an artificial neural network (ANN) trained with the Levenberg–Marquardt (LM) algorithm to predict the surface roughness in CNC face milling. In their approach, the training and testing data were obtained using the Taguchi design of experiments (DoE) method based on seven control factors, namely the depth of cut, the feed rate per tooth, the cutting speed, the engagement and the wear of the cutting tool, the cutting fluid, and the cutting force. The trained model achieved a mean square error (MSE) of just 1.86% for the surface roughness of the milled component. Cus and Zuperl [11] developed an ANN for predicting the optimal cutting conditions subject to technological, economic, and organizational constraints. Asiltürk and Çunkaş [12] combined an ANN model and a regression analysis technique to predict the surface roughness of turned AISI 1040 steel. The model was trained using three different algorithms, namely back-propagation, scaled conjugate gradient (SCG), and Levenberg–Marquardt (LM). The ANN model trained with the SCG algorithm provided a significantly better prediction accuracy than a traditional regression-based model. Pontes et al. [13] used the Taguchi DOE method to optimize the parameters of a radial basis function (RBF) network for predicting the mean surface roughness (Ra) of AISI 52100 hardened steel in hard turning. The results confirmed that using the DOE approach to obtain the training data for the RBF model was far more efficient than traditional trial-and-error methods.

The above studies focus mainly on the effects of the depth of cut, feed rate, and cutting speed on the surface roughness of machined components. However, besides surface roughness, the machining error is also extremely important, particularly for tight-tolerance components. Machine learning approaches to cutting-parameter optimization have generally relied on ANN models [11, 12]. However, such models, which have a simple structure consisting of an input layer, one or more hidden layers, and an output layer, achieve relatively poor prediction performance for machining precision. Accordingly, the present study examines the performance of three tree-based machine learning (ML) models, namely random forest, XGBoost, and decision tree, in predicting the precision of the turning process. For each model, the training data are obtained using the Taguchi DOE method [14] based on four turning parameters: the machining depth, the rotational speed, the feed rate, and the cantilever cutting length. The model with the best prediction performance is further enhanced using an oversampling technique and four different optimization algorithms, namely the genetic algorithm (GA), grey wolf (GW), PSO, and center particle swarm optimization (CPSO). Finally, the performances of the various models are evaluated and compared using the leave-one-out cross-validation technique.

As technology advances rapidly, the requirements for product quality are also increasing, and quality is closely related to the processing parameters. At present, the industry lacks a systematic method for optimizing processing parameters; most manufacturers rely on empirical rules, which are time-consuming to apply and make the underlying know-how difficult to pass on. In past research, suitable parameters were often sought by analyzing the structural characteristics of the machine or the cutting behavior, but such analyses are difficult and costly. This paper therefore proposes a parameter optimization method that combines the Taguchi method with machine learning. The Taguchi method and an oversampling technique are applied to improve the efficiency of data collection, and machine learning methods and optimization algorithms are then used to estimate the turning accuracy. The remainder of this paper is organized as follows:

Section 2 briefly describes the setup of the Taguchi DOE method employed in the present study;

Section 3 introduces the details of each of the major components of the proposed methodology, including the data preprocessing step, the ML models, the oversampling technique, and the optimization algorithms;

Section 4 presents and discusses the experimental results; and finally,

Section 5 provides some brief concluding remarks.

3. Proposed Method

In the present study, the effects of the four machining parameters on the turning precision were predicted using a supervised ML model. Most supervised-learning problems can be categorized as either “classification” or “regression” problems. Classification problems involve predicting a discrete output, such as a class label or a species. By contrast, regression problems involve predicting a continuous output, such as a stock price or the price of goods. The present study considered a regression problem, in which the aim was to predict the machining error of the turning process given the settings of the four machining parameters. The data required to train the ML model were obtained from the Taguchi DOE design described in the previous section, where the level settings for the four machining parameters are listed in Table 2.

The machining error is calculated by the following equation:

$$E = \left| D_{\text{target}} - D_{\text{measured}} \right|$$

where $D_{\text{target}}$ is the target diameter of the turned part and $D_{\text{measured}}$ is the diameter measured after the turning process.

The turning experiments were performed on a Feeler turning machine (model: FTC-10), as shown in Figure 2, using cylindrical S45C medium carbon steel specimens. The specifications of the workpiece and cutting tool are shown in Table 3.

Prior to constructing the ML model, the experimental data obtained from the Taguchi experiments were pre-processed in order to accelerate the convergence of the training process and improve the prediction accuracy. Data pre-processing was performed using min–max normalization [15]. That is, the minimum and maximum values of each variable in the dataset were mapped to 0 and 1, respectively, and all the other values were scaled linearly within the range [0, 1]:

$$x' = \frac{x - x_{\min}}{x_{\max} - x_{\min}}$$

where $x'$ is the value after normalization and $x_{\max}$, $x_{\min}$, and $x$ are the maximum, minimum, and original values, respectively. The normalization process is illustrated schematically in Figure 3.
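The normalization can be sketched in a few lines of plain Python. This is a minimal illustration, assuming the data are held as a list of rows (one row per experimental run); the function names and the example speeds are hypothetical and not taken from the experiments.

```python
def min_max_normalize(column):
    """Linearly rescale a list of numbers so that its minimum maps to 0 and its maximum to 1."""
    x_min, x_max = min(column), max(column)
    span = x_max - x_min
    if span == 0:
        # A constant column carries no information; map it to 0.0 to avoid division by zero.
        return [0.0 for _ in column]
    return [(x - x_min) / span for x in column]


def normalize_table(rows):
    """Normalize each column (variable) of a row-major table independently."""
    columns = [list(col) for col in zip(*rows)]
    scaled = [min_max_normalize(col) for col in columns]
    return [list(row) for row in zip(*scaled)]


# Hypothetical rotational-speed levels (rpm), for illustration only.
print(min_max_normalize([1000, 1500, 2000]))  # [0.0, 0.5, 1.0]
```

When normalization is combined with cross-validation, a stricter variant computes the minimum and maximum from the training samples of each fold only, so that the held-out sample does not influence the scaling.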

Following data pre-processing, the model was trained and tested using the leave-one-out cross-validation technique [16]. Briefly, one data sample was held out as the assessment (i.e., testing) set, and all the other samples were used as the training set. The process was repeated until every sample in the dataset had served as the assessment set once. As shown in Table 2, the Taguchi DOE design involved nine experimental runs. In other words, the Taguchi experiments generated nine data samples, each consisting of the values assigned to the four machining parameters and the corresponding machining error. Accordingly, the leave-one-out procedure was repeated nine times, as shown in Figure 4, with the prediction accuracy calculated each time.
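The leave-one-out loop can be sketched in plain Python as follows. The nine input rows are the standard Taguchi L9(3^4) orthogonal array with levels coded 1 to 3, which is an assumption consistent with four factors and nine runs (the actual level values are those listed in Table 2); the response values and the mean-predicting placeholder model are hypothetical and serve only to exercise the loop.

```python
from statistics import mean

# Standard Taguchi L9(3^4) orthogonal array, levels coded 1-3 (assumed layout, not Table 2 itself).
L9 = [
    [1, 1, 1, 1], [1, 2, 2, 2], [1, 3, 3, 3],
    [2, 1, 2, 3], [2, 2, 3, 1], [2, 3, 1, 2],
    [3, 1, 3, 2], [3, 2, 1, 3], [3, 3, 2, 1],
]


def leave_one_out(X, y, fit_predict):
    """Hold out each sample once, train on the rest, and predict the held-out sample.

    fit_predict(X_train, y_train, x_test) must return a single prediction.
    Returns a list of (true, predicted) pairs, one per sample.
    """
    results = []
    for i in range(len(X)):
        X_train = X[:i] + X[i + 1:]
        y_train = y[:i] + y[i + 1:]
        results.append((y[i], fit_predict(X_train, y_train, X[i])))
    return results


def mean_baseline(X_train, y_train, x_test):
    # Hypothetical placeholder model: always predict the mean training target.
    return mean(y_train)


# Hypothetical machining errors (mm) for the nine runs; NOT measured data.
y = [0.010, 0.012, 0.015, 0.011, 0.014, 0.009, 0.016, 0.013, 0.010]
pairs = leave_one_out(L9, y, mean_baseline)
mae = mean(abs(t - p) for t, p in pairs)
print(len(pairs), round(mae, 5))
```

Any of the models discussed below can be plugged in through the `fit_predict` callable; with only nine samples, every fold still trains on eight of them.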

The present study considered three ML models: random forest (RF), XGBoost (XGB), and decision tree (DT). Having evaluated the prediction performance of all three models, the model with the best performance was further improved using a synthetic minority over-sampling technique for regression with Gaussian noise (SMOGN) and four different optimization algorithms: genetic algorithm (GA), grey wolf (GW), particle swarm optimization (PSO), and center particle swarm optimization (CPSO). The prediction performances of the various models were then evaluated and compared. The details of the ML models, oversampling technique, and optimization algorithms are provided in the following sections.

3.1. Random Forest

Random forest (RF) [17] combines bagging with decision trees, as shown in Figure 5. Bagging (bootstrap aggregating) first draws multiple random samples, with replacement, from the training data, and each sample is used to train a separate decision tree; in addition, only a random subset of the features is considered at each split. Since each tree is trained on different data, the trained trees differ from one another. The outputs of the trees are then aggregated: by majority voting for classification problems, and by averaging the individual predictions for regression problems such as the present one. In this way, many weak and partially decorrelated trees are combined into a stronger model with better prediction performance.
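A compact, dependency-free sketch of the bootstrap-and-average idea follows. It is an illustration only, not the implementation used in the study; the tree depth, number of trees, feature-subset size, and the squared-error split criterion are assumptions.

```python
import random
from statistics import mean


def sse(values):
    """Sum of squared deviations from the mean."""
    m = mean(values)
    return sum((v - m) ** 2 for v in values)


def build_tree(X, y, depth, max_features, rng):
    """Grow a small regression tree; each split considers a random subset of features."""
    if depth == 0 or len(set(y)) == 1:
        return mean(y)  # leaf: mean target of the samples that reach it
    best = None  # (cost, feature, threshold)
    for f in rng.sample(range(len(X[0])), max_features):
        for t in sorted({row[f] for row in X})[:-1]:
            left = [yi for row, yi in zip(X, y) if row[f] <= t]
            right = [yi for row, yi in zip(X, y) if row[f] > t]
            cost = sse(left) + sse(right)
            if best is None or cost < best[0]:
                best = (cost, f, t)
    if best is None:  # no valid split among the sampled features
        return mean(y)
    _, f, t = best
    left = [(row, yi) for row, yi in zip(X, y) if row[f] <= t]
    right = [(row, yi) for row, yi in zip(X, y) if row[f] > t]
    return (f, t,
            build_tree([r for r, _ in left], [v for _, v in left], depth - 1, max_features, rng),
            build_tree([r for r, _ in right], [v for _, v in right], depth - 1, max_features, rng))


def predict_tree(node, x):
    while isinstance(node, tuple):  # internal node: (feature, threshold, left, right)
        f, t, left, right = node
        node = left if x[f] <= t else right
    return node


def fit_forest(X, y, n_trees=25, depth=3, max_features=2, seed=0):
    rng = random.Random(seed)
    n = len(X)
    forest = []
    for _ in range(n_trees):
        idx = [rng.randrange(n) for _ in range(n)]  # bootstrap sample, drawn with replacement
        forest.append(build_tree([X[i] for i in idx], [y[i] for i in idx], depth, max_features, rng))
    return forest


def predict_forest(forest, x):
    # Regression: average the trees' outputs (classification would use a majority vote instead).
    return mean(predict_tree(tree, x) for tree in forest)
```

For example, fitting `fit_forest([[i, 0] for i in range(10)], [0.0] * 5 + [1.0] * 5)` yields a forest whose averaged prediction is close to 0 for `[0, 0]` and close to 1 for `[9, 0]`.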

3.2. XGBoost

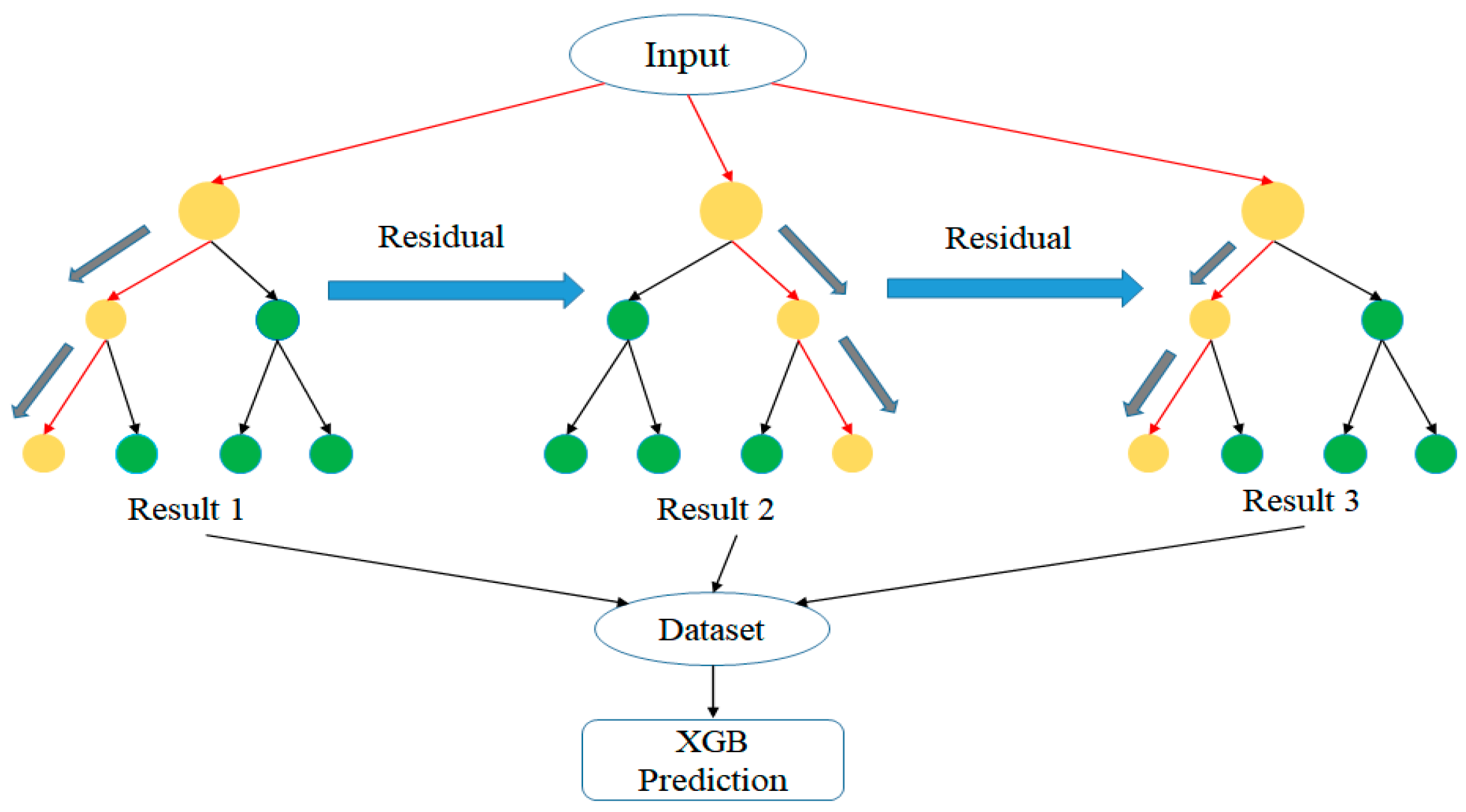

Extreme gradient boosting (XGBoost or XGB) [18] combines the strengths of bagging and boosting, as shown in Figure 6. In particular, an additive model consisting of multiple base learners (trees) is constructed. Each new tree is added to correct the residual errors of the trees built so far, thereby improving the overall strength of the model. The process continues iteratively until either no further improvement is obtained over a specified number of rounds (early stopping) or the number of trees in the XGBoost structure reaches the prescribed maximum.

In the present study, the maximum depth of the tree structure was set to 500, the number of samples to 1000, the learning rate to 0.3, and the booster to “gbtree”.
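The additive, residual-correcting idea can be illustrated with plain gradient boosting of depth-one trees (stumps) under squared-error loss. This is a deliberately simplified stand-in, not XGBoost itself: XGBoost additionally uses second-order gradient information and regularizes tree complexity. The stump depth, patience, and stopping tolerance are assumptions; only the learning rate of 0.3 mirrors the setting reported in the preceding paragraph.

```python
from statistics import mean


def fit_stump(X, r):
    """Fit a one-split regression stump to the residuals r.

    Returns (feature, threshold, left_value, right_value).
    """
    best = None
    for f in range(len(X[0])):
        for t in sorted({row[f] for row in X})[:-1]:
            left = [ri for row, ri in zip(X, r) if row[f] <= t]
            right = [ri for row, ri in zip(X, r) if row[f] > t]
            lm, rm = mean(left), mean(right)
            cost = sum((v - lm) ** 2 for v in left) + sum((v - rm) ** 2 for v in right)
            if best is None or cost < best[0]:
                best = (cost, f, t, lm, rm)
    if best is None:  # every row identical: fall back to a constant correction
        m = mean(r)
        return (0, float("inf"), m, m)
    return best[1:]


def stump_predict(stump, x):
    f, t, left_value, right_value = stump
    return left_value if x[f] <= t else right_value


def fit_boosting(X, y, max_rounds=100, learning_rate=0.3, patience=5):
    """Additive model: start from the mean, then add shrunken stumps fitted to the residuals."""
    base = mean(y)
    pred = [base] * len(y)
    stumps, best_loss, stall = [], float("inf"), 0
    for _ in range(max_rounds):
        residuals = [yi - pi for yi, pi in zip(y, pred)]  # negative gradient of the squared error
        stump = fit_stump(X, residuals)
        stumps.append(stump)
        pred = [p + learning_rate * stump_predict(stump, x) for p, x in zip(pred, X)]
        loss = mean((yi - pi) ** 2 for yi, pi in zip(y, pred))
        # Early stopping on the training loss for brevity (a validation set would normally be used).
        if loss < best_loss - 1e-12:
            best_loss, stall = loss, 0
        else:
            stall += 1
            if stall >= patience:
                break
    return base, learning_rate, stumps


def predict_boosting(model, x):
    base, learning_rate, stumps = model
    return base + learning_rate * sum(stump_predict(s, x) for s in stumps)
```

In the xgboost Python package, the reported settings correspond to the `max_depth`, `learning_rate`, and `booster` arguments of `XGBRegressor`.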

3.3. Decision Tree

The decision tree (DT) model [19] can be used to solve both classification and regression problems. In the present study, it was used to solve the regression problem of predicting the turning precision from the values of the four machining parameters. As shown in Figure 7, the DT structure consists of three types of nodes: a root node, multiple interior nodes, and several leaf (output) nodes. The root node represents the entire sample, each interior node represents a test on one feature of the dataset, and the leaf nodes represent the regression outputs. The branches between the nodes represent the outcomes (True or False) of these decision rules. Each data point is passed down the tree until it reaches a leaf node, and the prediction is the average value of the dependent variable over the training samples that fall in that leaf.

In this study, the maximum depth of the DT was set to 10, the parameter controlling the randomness of the estimator was set to 0, and the splitting strategy was set to “best” (meaning that, at each node, the tree evaluates the candidate splits and chooses the one that most reduces the prediction error, rather than the best of a set of randomly drawn splits).
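A minimal regression tree illustrating this structure (interior nodes that test one feature against a threshold, leaves that return the mean target) is sketched below in plain Python. It follows the depth limit and exhaustive “best” split search described above, but it is an illustrative assumption rather than the library implementation used in the study; the sum of squared errors is assumed as the split criterion.

```python
from statistics import mean


def sse(values):
    """Sum of squared deviations from the mean (the impurity used for regression splits)."""
    m = mean(values)
    return sum((v - m) ** 2 for v in values)


def grow(X, y, max_depth):
    """Recursively grow a regression tree using an exhaustive ('best') split search."""
    if max_depth == 0 or len(set(y)) == 1:
        return mean(y)  # leaf: average target of the training samples that reach it
    best = None  # (cost, feature, threshold)
    for f in range(len(X[0])):
        for t in sorted({row[f] for row in X})[:-1]:
            left = [yi for row, yi in zip(X, y) if row[f] <= t]
            right = [yi for row, yi in zip(X, y) if row[f] > t]
            cost = sse(left) + sse(right)
            if best is None or cost < best[0]:
                best = (cost, f, t)
    if best is None:  # all feature vectors identical: no split is possible
        return mean(y)
    _, f, t = best
    goes_left = [row[f] <= t for row in X]
    return {
        "feature": f,
        "threshold": t,
        "left": grow([r for r, g in zip(X, goes_left) if g],
                     [v for v, g in zip(y, goes_left) if g], max_depth - 1),
        "right": grow([r for r, g in zip(X, goes_left) if not g],
                      [v for v, g in zip(y, goes_left) if not g], max_depth - 1),
    }


def predict(node, x):
    """Follow the True/False branches from the root until a leaf value is reached."""
    while isinstance(node, dict):
        node = node["left"] if x[node["feature"]] <= node["threshold"] else node["right"]
    return node


tree = grow([[1], [2], [3], [4]], [1.0, 1.0, 5.0, 5.0], max_depth=10)
```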

3.4. Oversampling

As described above, each of the three models was trained and tested using leave-one-out cross-validation. The best model was then further improved using the synthetic minority over-sampling technique for regression with Gaussian noise (SMOGN) [20]. In general, the purpose of oversampling is to overcome data imbalance and/or insufficient data in the dataset. To preserve the original data characteristics, the rare but important data instances are first identified. Synthetic data with similar characteristics are then created, so that the final dataset is more balanced. When implementing the oversampling method, the sampling factor is set according to the imbalance ratio of the data. In addition, the k-NN algorithm is applied to each sample $X$ in the minority class. Specifically, the distances between the sample and all the other samples in the same minority class are calculated in order to find the $k$ minority class samples closest to $X$. One of these neighbors is then randomly selected, and a synthetic sample is generated as

$$X_{\text{new}} = X + \delta \left( \tilde{X} - X \right)$$

where $\delta$ is a random factor in the interval [0, 1] and $\tilde{X}$ is the selected minority class example (see Figure 8).
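The interpolation step, X_new = X + δ(X̃ − X), can be sketched as follows, assuming each sample is a list of numeric values with features and target stored together, so that the target is interpolated with the same δ (a simplification). Only this SMOTE-style interpolation is shown; SMOGN additionally identifies rare samples with a relevance function and decides for each pair whether to interpolate or to add Gaussian noise, neither of which is reproduced here. The function names are hypothetical.

```python
import math
import random


def k_nearest(sample, pool, k):
    """Return the k members of `pool` closest to `sample` (Euclidean distance), excluding itself."""
    others = [p for p in pool if p is not sample]
    return sorted(others, key=lambda p: math.dist(sample, p))[:k]


def synthesize(minority, n_new, k=3, seed=0):
    """Create n_new synthetic samples via X_new = X + delta * (X_tilde - X)."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        x = rng.choice(minority)                         # seed sample X
        x_tilde = rng.choice(k_nearest(x, minority, k))  # one of its k nearest neighbours
        delta = rng.random()                             # random factor in [0, 1)
        synthetic.append([xi + delta * (ti - xi) for xi, ti in zip(x, x_tilde)])
    return synthetic
```

Because each synthetic point lies on the segment between two existing minority samples, the new data stay within the region already covered by those samples.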

3.5. Model Optimization

Following the oversampling process, optimization algorithms [21] were applied to further tune the hyperparameters of the ML model. Four algorithms were considered, namely GA, GW, PSO, and CPSO. Each algorithm is described in the following.

The genetic algorithm (GA) optimizer [22] is a randomized search algorithm that imitates the processes of natural selection and reproduction. The algorithm begins by constructing an initial population of candidate solutions, each encoded as a string. A set of these candidates is taken as the initial guesses, and selection, crossover, and mutation operations are applied according to their fitness to create a new population of improved candidate solutions. The algorithm iterates in this way until the specified termination criteria are satisfied, at which point the candidate with the best fitness is decoded and taken as the optimal solution.

Figure 9 illustrates the basic workflow of the GA.
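The selection, crossover, and mutation loop can be sketched as a small binary-coded GA. The 16-bit encoding over [0, 10], binary tournament selection, single-point crossover, bit-flip mutation, elitism, and the toy objective are all illustrative assumptions rather than the configuration used in the study.

```python
import random

BITS, LOW, HIGH = 16, 0.0, 10.0  # hypothetical encoding: 16-bit strings over [0, 10]


def decode(bits):
    """Map a bit string (list of 0/1) to a real number in [LOW, HIGH]."""
    value = int("".join(map(str, bits)), 2)
    return LOW + (HIGH - LOW) * value / (2 ** BITS - 1)


def genetic_algorithm(fitness, pop_size=40, generations=100, p_cross=0.9, p_mut=0.02, seed=0):
    """Maximize fitness(x) with a simple binary-coded GA."""
    rng = random.Random(seed)

    def score(bits):
        return fitness(decode(bits))

    pop = [[rng.randint(0, 1) for _ in range(BITS)] for _ in range(pop_size)]
    for _ in range(generations):
        next_pop = [max(pop, key=score)[:]]  # elitism: carry the best candidate over unchanged
        while len(next_pop) < pop_size:
            # Selection: two binary tournaments pick the parents.
            p1 = max(rng.sample(pop, 2), key=score)
            p2 = max(rng.sample(pop, 2), key=score)
            # Crossover: single-point, applied with probability p_cross.
            if rng.random() < p_cross:
                cut = rng.randrange(1, BITS)
                child = p1[:cut] + p2[cut:]
            else:
                child = p1[:]
            # Mutation: flip each bit with probability p_mut.
            next_pop.append([1 - b if rng.random() < p_mut else b for b in child])
        pop = next_pop
    return decode(max(pop, key=score))  # decode the fittest candidate as the solution


# Hypothetical objective: maximize -(x - 3)^2, whose optimum is x = 3.
x_best = genetic_algorithm(lambda x: -(x - 3.0) ** 2)
```

In a hyperparameter-tuning setting, `fitness` would instead return a score such as the negative cross-validated prediction error for the decoded hyperparameter values.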

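The grey wolf (GW) optimizer [23] is an intelligent optimization algorithm that mimics the group hunting behavior of grey wolves and involves three main steps, namely establishing the hierarchy, encircling, and attacking. As shown in Figure 10, the three best solutions in the initial population are first designated as the alpha, beta, and delta wolves. In each iteration, the position of the prey (i.e., the optimal solution) is estimated from the positions of these three wolves, and each wolf updates its position according to

$$\vec{D} = \left| \vec{C} \cdot \vec{X}_p(t) - \vec{X}(t) \right|, \qquad \vec{X}(t+1) = \vec{X}_p(t) - \vec{A} \cdot \vec{D}$$

$$\vec{A} = 2\vec{a} \cdot \vec{r}_1 - \vec{a}, \qquad \vec{C} = 2\vec{r}_2$$

where $t$ is the current iteration; $\vec{A}$ and $\vec{C}$ are the auxiliary coefficient vectors; $\vec{X}_p$ is the position vector of the prey; and $\vec{X}$ is the current position vector of a wolf. In practice, this update is evaluated with each of the alpha, beta, and delta positions in place of $\vec{X}_p$, and the wolf moves to the average of the three resulting positions. The components of $\vec{a}$ decrease from 2 to 0 as the iterations proceed, and $\vec{r}_1$ and $\vec{r}_2$ are random vectors in the interval [0, 1]. During the hunt, when $|\vec{A}| > 1$, the wolves diverge to explore the search space. By contrast, when $|\vec{A}| < 1$, they converge and search a more localized area in order to pinpoint the prey.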
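The encircling update can be sketched in plain Python as follows: for each leader (alpha, beta, delta) a candidate position X_p − A·D is computed with D = |C·X_p − X|, A = 2a·r1 − a, and C = 2·r2, and the wolf moves to the average of the three candidates. The linear schedule for a, the box clipping, the population size, and the sphere test function are assumptions for illustration.

```python
import random


def grey_wolf(objective, dim, lower, upper, n_wolves=20, iters=100, seed=0):
    """Minimize `objective` over the box [lower, upper]^dim with the grey wolf optimizer."""
    rng = random.Random(seed)
    wolves = [[rng.uniform(lower, upper) for _ in range(dim)] for _ in range(n_wolves)]
    for t in range(iters):
        # Hierarchy: the three best wolves act as alpha, beta and delta (copied, so they stay fixed).
        alpha, beta, delta = [w[:] for w in sorted(wolves, key=objective)[:3]]
        a = 2 - 2 * t / iters  # decreases linearly from 2 toward 0
        for w in wolves:
            for d in range(dim):
                candidates = []
                for leader in (alpha, beta, delta):
                    A = 2 * a * rng.random() - a       # A = 2a*r1 - a
                    C = 2 * rng.random()               # C = 2*r2
                    D = abs(C * leader[d] - w[d])      # D = |C*X_p - X|
                    candidates.append(leader[d] - A * D)  # X_p - A*D
                # Move to the average of the three leader-guided positions, kept inside the box.
                w[d] = min(max(sum(candidates) / 3, lower), upper)
    return min(wolves, key=objective)


best = grey_wolf(lambda x: sum(v * v for v in x), dim=3, lower=-5, upper=5)
```

Early on, a is close to 2, so |A| often exceeds 1 and wolves overshoot the leaders (exploration); as a shrinks, |A| < 1 dominates and the pack closes in (exploitation).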

The particle swarm optimization (PSO) algorithm [24] represents each candidate solution as a particle within the feasible search space and gradually converges toward the optimal solution based on the individual and collective experience of all the members of the swarm. As shown in Figure 11, the optimization process involves three iterative steps: (one) assigning the initial positions of all the particles and recording the best solution among them, (two) calculating the velocity vector of each particle and moving the particle to a new position, and (three) updating the personal best solutions of the individual particles and the global best solution. The PSO update equations are

$$v_i^{k+1} = w\,v_i^{k} + c_1 r_1 \left( p_{\text{best},i} - x_i^{k} \right) + c_2 r_2 \left( g_{\text{best}} - x_i^{k} \right)$$

$$x_i^{k+1} = x_i^{k} + v_i^{k+1}$$

where $v_i^k$ is the velocity of particle $i$ in the $k$-th iteration, $w$ is the inertia weight, $c_1$ and $c_2$ are the acceleration constants, and $r_1$ and $r_2$ are random values in the interval [0, 1]. In addition, $x_i^k$ is the position of particle $i$ in the $k$-th iteration, $p_{\text{best},i}$ is the best position found so far by particle $i$, and $g_{\text{best}}$ is the best solution found by the entire swarm.
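The velocity and position updates, v ← w·v + c1·r1·(pbest − x) + c2·r2·(gbest − x) followed by x ← x + v, can be sketched as follows. The values of w, c1, and c2, the swarm size, the clipping to the search box, and the sphere test function are assumptions for illustration.

```python
import random


def pso(objective, dim, lower, upper, n_particles=20, iters=100, w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimize `objective` with the standard global-best particle swarm update."""
    rng = random.Random(seed)
    x = [[rng.uniform(lower, upper) for _ in range(dim)] for _ in range(n_particles)]
    v = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in x]                # personal best positions
    gbest = min(pbest, key=objective)[:]     # global best position
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                v[i][d] = (w * v[i][d]
                           + c1 * r1 * (pbest[i][d] - x[i][d])
                           + c2 * r2 * (gbest[d] - x[i][d]))
                x[i][d] = min(max(x[i][d] + v[i][d], lower), upper)  # move, kept inside the box
            # Update the personal best and, if it improves, the global best.
            if objective(x[i]) < objective(pbest[i]):
                pbest[i] = x[i][:]
                if objective(pbest[i]) < objective(gbest):
                    gbest = pbest[i][:]
    return gbest


best = pso(lambda p: sum(c * c for c in p), dim=3, lower=-5, upper=5)
```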

Center particle swarm optimization (CPSO) [25] is an extension of the PSO algorithm that aims to reach the optimal solution more rapidly by introducing a center particle located at the center of all the particles in the swarm (see Figure 12). The rationale for this approach is that the center of the particles tends to lie closer to the optimum than the global best solution does. Thus, in the CPSO algorithm, the position of the center particle is used in place of the global best position of the original PSO algorithm in order to improve the convergence speed. The position of the center particle is computed as

$$x_c^{k+1} = \frac{1}{N} \sum_{i=1}^{N} x_i^{k+1}$$

where $x_c^{k+1}$ is the center position in the $(k+1)$-th iteration, $N$ is the number of particles in the swarm, and $x_i^{k+1}$ is the position of the $i$-th particle in the $(k+1)$-th iteration.
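One common way of realizing the center-particle idea is sketched below: after every move, the center of the swarm, x_c = (1/N)·Σ x_i, is computed, evaluated like an ordinary particle, and takes the place of the global best whenever it scores better. This variant, the PSO coefficients, and the sphere test function are assumptions for illustration, not the exact procedure of the study.

```python
import random


def swarm_center(positions):
    """Center particle: x_c = (1/N) * sum_i x_i, computed per dimension."""
    n = len(positions)
    return [sum(p[d] for p in positions) / n for d in range(len(positions[0]))]


def center_pso(objective, dim, lower, upper, n_particles=20, iters=100,
               w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimize `objective` with PSO plus a center particle that competes for the global best."""
    rng = random.Random(seed)
    x = [[rng.uniform(lower, upper) for _ in range(dim)] for _ in range(n_particles)]
    v = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in x]
    gbest = min(pbest, key=objective)[:]
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                v[i][d] = (w * v[i][d]
                           + c1 * r1 * (pbest[i][d] - x[i][d])
                           + c2 * r2 * (gbest[d] - x[i][d]))
                x[i][d] = min(max(x[i][d] + v[i][d], lower), upper)
            if objective(x[i]) < objective(pbest[i]):
                pbest[i] = x[i][:]
        # Center particle of the updated swarm (iteration k + 1).
        center = swarm_center(x)
        # The global best is the best of all personal bests, the center, and the previous global best.
        gbest = min(pbest + [center, gbest], key=objective)[:]
    return gbest


best = center_pso(lambda p: sum(c * c for c in p), dim=3, lower=-5, upper=5)
```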

Figure 13 and Figure 14 show the training procedures for the single RF, XGBoost, and DT models and for the optimized models, respectively. As shown in Figure 13, the training process for the single models involves the following steps: (one) loading the data, (two) data normalization, (three) cross-validation (separating the training and test sets), (four) model training, (five) prediction, and (six) prediction accuracy evaluation. Similarly, the main steps in the training process for the optimized models are: (one) loading the data, (two) data normalization, (three) cross-validation, (four) oversampling, (five) model optimization, (six) model training using the optimized parameters, (seven) prediction, and (eight) prediction accuracy evaluation (see Figure 14).