Event-Related Potentials during Verbal Recognition of Naturalistic Neutral-to-Emotional Dynamic Facial Expressions

Abstract

1. Introduction

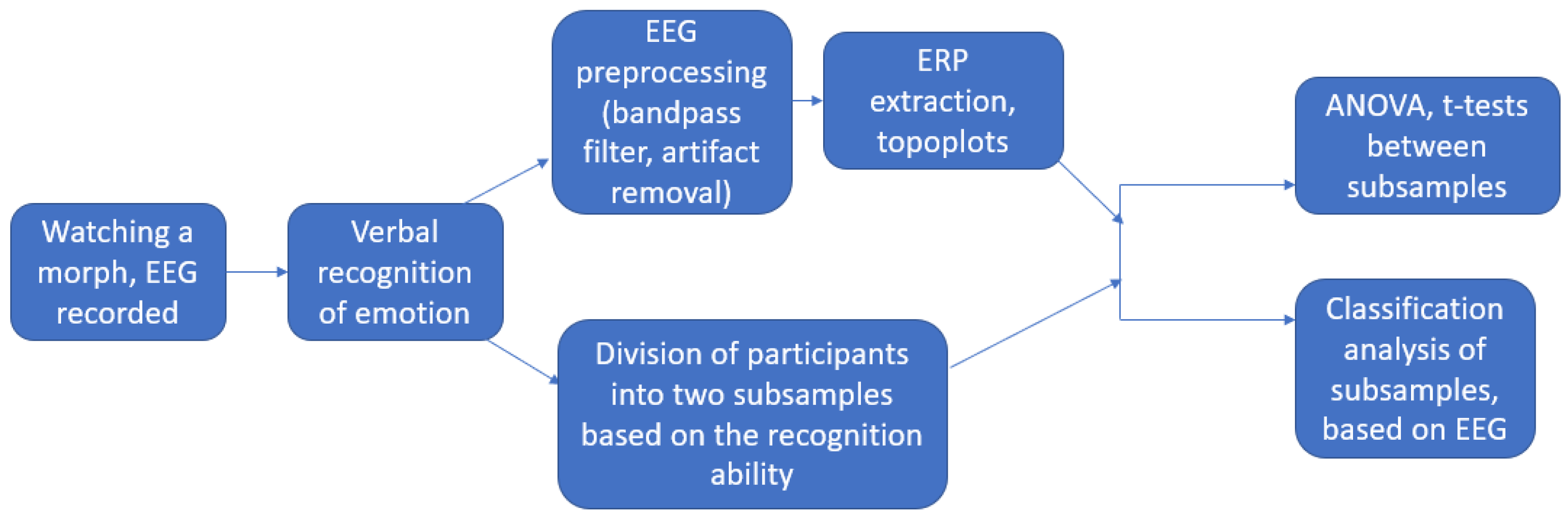

2. Methods

2.1. Participants

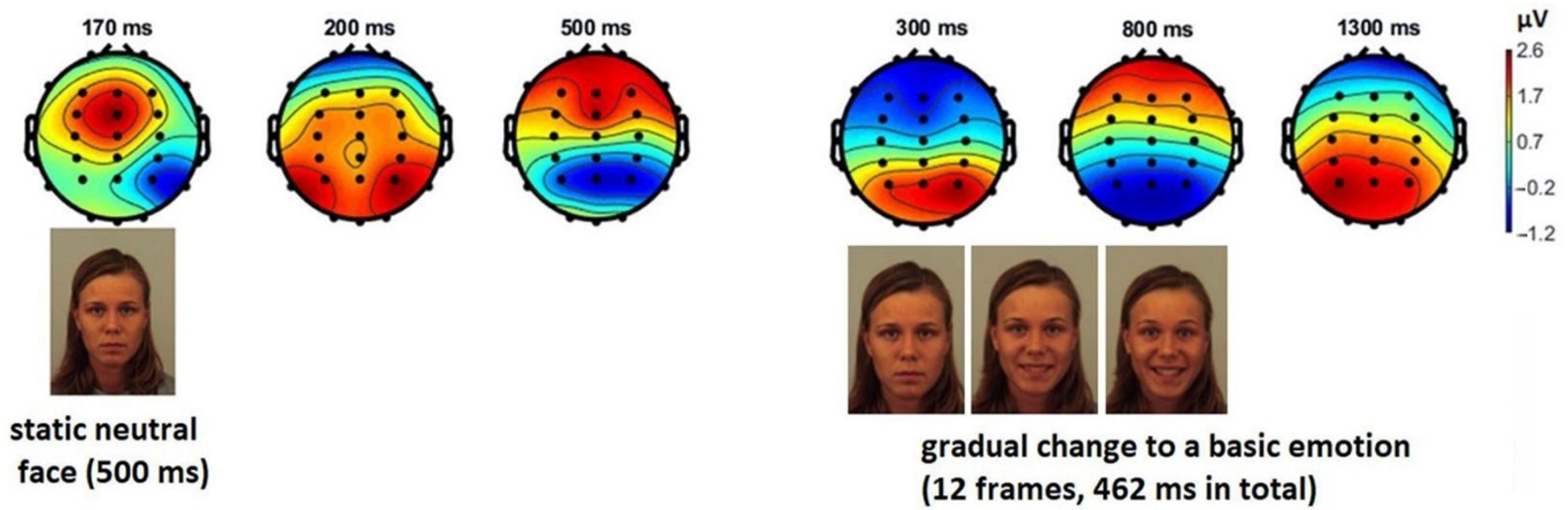

2.2. Material and Design

2.3. Data Collection and Reduction

2.4. Data Analysis

3. Results

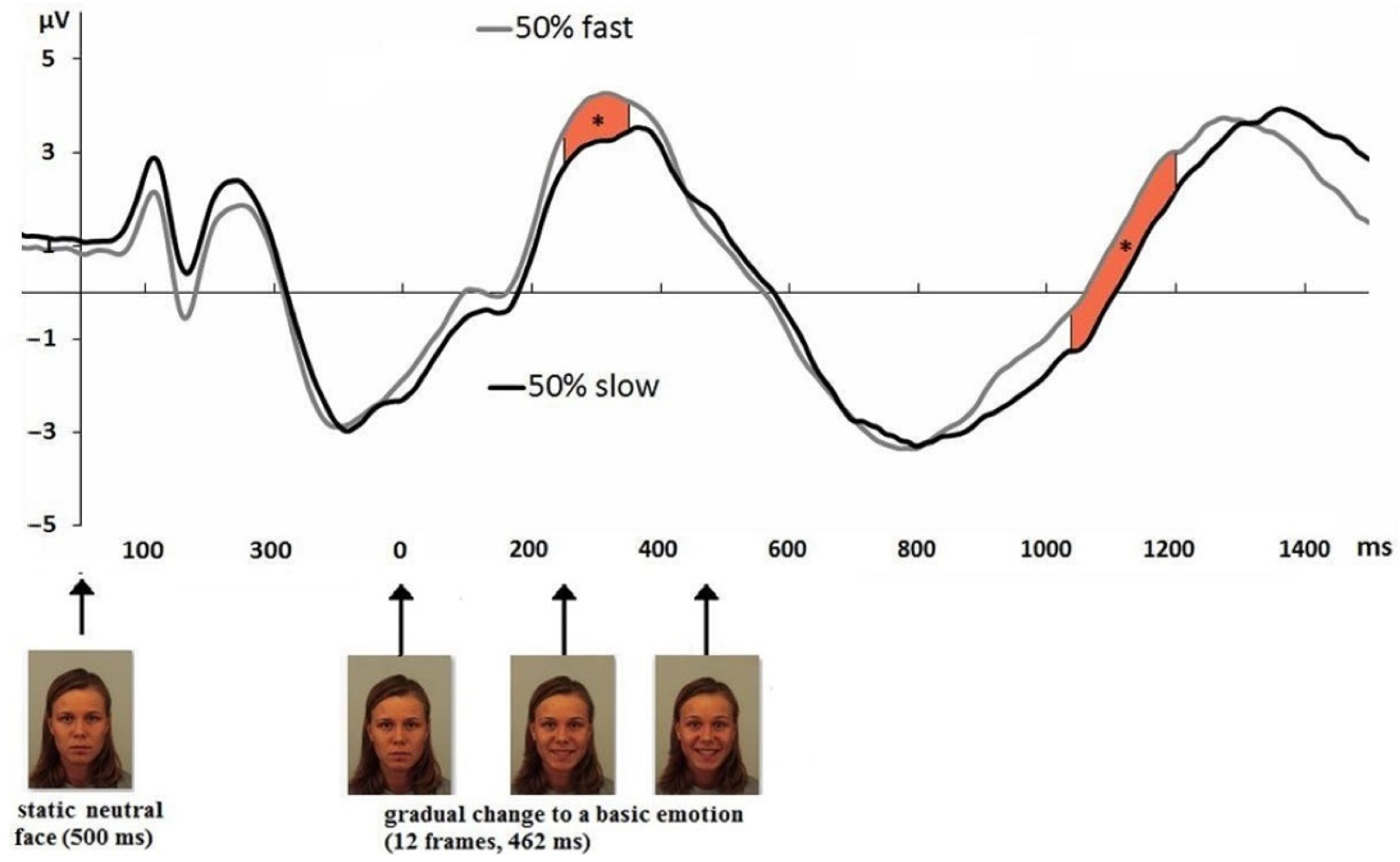

3.1. Event-Related Potentials of EEG

3.2. ERP Related to Individual Differences in Facial Emotion Recognition

3.3. Classification Analysis

4. Discussion

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | 50% with Low Accuracy: Median | 50% with Low Accuracy: IQR | 50% with High Accuracy: Median | 50% with High Accuracy: IQR | p |
|---|---|---|---|---|---|
| total | 61.8 | 58.7–65.7 | 71.7 | 70.0–74.4 | 0.001 |
| happiness | 100 | 90.9–100 | 100 | 95.4–100 | 0.017 |
| surprise | 83.3 | 72.2–91.7 | 88.2 | 76.2–94.7 | 0.084 |
| disgust | 73.9 | 66.7–83.3 | 85.0 | 77.3–90.9 | 0.001 |
| anger | 62.5 | 45.5–70.8 | 79.2 | 71.4–85.7 | 0.001 |
| sadness | 38.9 | 29.4–50.0 | 56.5 | 47.1–66.7 | 0.001 |
| fear | 17.4 | 5.9–29.2 | 27.8 | 18.2–34.8 | 0.001 |
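
The column labels above ("50% with Low Accuracy", "50% with High Accuracy") suggest that participants were divided at the median of their scores and that each half was summarised by its median and interquartile range (IQR). The following is a minimal sketch of that kind of summary, not the authors' analysis code. The participant IDs and scores are hypothetical, the helper names `median_split` and `median_iqr` are introduced here for illustration only, and the choice of the "inclusive" quartile method is an assumption.

```python
from statistics import median, quantiles


def median_split(scores):
    """Split a {participant: score} mapping into lower and upper halves."""
    ranked = sorted(scores.items(), key=lambda item: item[1])
    half = len(ranked) // 2
    # With an odd number of participants the extra one lands in the upper half.
    return dict(ranked[:half]), dict(ranked[half:])


def median_iqr(values):
    """Return (median, Q1, Q3); the quartile method is an assumption."""
    q1, _, q3 = quantiles(values, n=4, method="inclusive")
    return median(values), q1, q3


# Hypothetical recognition accuracy (%), NOT data from the study.
accuracy = {
    "p01": 58.0, "p02": 61.5, "p03": 64.0, "p04": 66.5,
    "p05": 70.0, "p06": 71.5, "p07": 73.0, "p08": 75.5,
}

low, high = median_split(accuracy)
low_summary = median_iqr(list(low.values()))
high_summary = median_iqr(list(high.values()))

for label, (med, q1, q3) in (("low", low_summary), ("high", high_summary)):
    print(f"{label}: median={med:.1f}, IQR={q1:.1f}-{q3:.1f}")
```

Different statistical packages compute quartiles slightly differently, so IQR bounds from a sketch like this need not match a published table to the last decimal.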

| | 50% with Slow RT: Median | 50% with Slow RT: IQR | 50% with Fast RT: Median | 50% with Fast RT: IQR | p |
|---|---|---|---|---|---|
| total | 1661 | 1541–1813 | 1164 | 1066–1267 | 0.001 |
| happiness | 1239 | 1107–1423 | 950 | 861–1023 | 0.001 |
| surprise | 1608 | 1466–1939 | 1179 | 1056–1325 | 0.001 |
| disgust | 1627 | 1359–1971 | 1138 | 1018–1331 | 0.001 |
| anger | 1754 | 1578–2193 | 1259 | 1116–1392 | 0.001 |
| sadness | 1950 | 1730–2238 | 1391 | 1165–1546 | 0.001 |
| fear | 1906 | 1565–2221 | 1334 | 1186–1537 | 0.001 |

| Method | Accuracy, % |
|---|---|
| K-Nearest Neighbours | 62.07 |
| Naïve Bayes Classifier | 68.97 |
| Automated Neural Network | 75.86 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kosonogov, V.; Kovsh, E.; Vorobyeva, E. Event-Related Potentials during Verbal Recognition of Naturalistic Neutral-to-Emotional Dynamic Facial Expressions. Appl. Sci. 2022, 12, 7782. https://doi.org/10.3390/app12157782
