Research on Dynamic Path Planning of Multi-AGVs Based on Reinforcement Learning

Abstract

1. Introduction

1.1. Motivation and Incitement

1.2. Literature Review and Contribution

- (1) To address dynamic obstacle avoidance and pathfinding in unknown environments for AGVs, we design an improved Q-learning path optimization algorithm based on Kohonen networks (K-L for short).

- (2) To improve path planning results, we propose a two-level path planning method, referred to as GA-KL, that combines the K-L algorithm with an improved genetic algorithm. A genetic algorithm optimized with a path smoothing factor and a node busy factor (T-GA) serves as the global planning algorithm and generates the globally optimal set of path nodes.

- (3) To accomplish dynamic scheduling of multiple AGVs effectively, we integrate the scheduling policy into global path planning: we analyze the congestion of the current working environment and combine it with a weight-based polling algorithm.

1.3. Paper Organization

2. K-L-Based Local Path Planning Algorithm

2.1. Design of Action State-Space Model

2.2. Adaptive Clustering Method Based on Kohonen Network

2.2.1. Implementation of Adaptive Clustering Method Based on Kohonen Network

- (1) The input sample is the distance between the AGV and the obstacle. Initialize the input vector and the connection weights between all input and output nodes, and set the number of neurons in the input layer and in the output layer.

- (2) Construct the Kohonen network.

- (3) Initialize the learning rate, the neighborhood, and the maximum number of iterations.

- (4) Feed in the input feature vector.

- (5) Calculate the Euclidean distance between the input vector and the weight vector of each output node, and select the output node (j*) with the minimum distance as the winner.

- (6) Adjust the connection weights of the winning neuron and of the neurons in its neighborhood.

- (7) Check whether the network has converged. If it has, exit; otherwise, return to step (4).
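The seven steps above follow the standard Kohonen self-organizing map (SOM) training loop. The Python sketch below is a minimal illustration under stated assumptions, not the authors' implementation: each input sample is assumed to be a vector of AGV-to-obstacle distances from several sensors, the output layer is a 1-D lattice with a Gaussian neighborhood, and the learning rate and neighborhood radius decay linearly. The function names `train_som` and `cluster_state`, the five-sensor layout, and all numeric values are hypothetical.

```python
import numpy as np


def train_som(samples, n_out, lr0=0.5, radius0=None, max_iter=1000, tol=1e-4, seed=0):
    """Train a 1-D Kohonen map on distance samples of shape (N, n_in)."""
    rng = np.random.default_rng(seed)
    n_in = samples.shape[1]
    # Steps (1) and (3): random initial weights, learning rate, radius, iteration cap.
    weights = rng.uniform(samples.min(), samples.max(), size=(n_out, n_in))
    radius0 = radius0 if radius0 is not None else n_out / 2
    positions = np.arange(n_out)  # coordinates of output nodes on the lattice
    for t in range(max_iter):
        frac = t / max_iter
        lr = lr0 * (1.0 - frac)                  # linearly decaying learning rate
        radius = max(radius0 * (1.0 - frac), 0.5)  # shrinking neighborhood
        old = weights.copy()
        for x in samples[rng.permutation(len(samples))]:
            # Steps (4) and (5): feed x and pick the winner j* by Euclidean distance.
            j_star = np.argmin(np.linalg.norm(weights - x, axis=1))
            # Step (6): pull the winner and its lattice neighbors toward x.
            h = np.exp(-((positions - j_star) ** 2) / (2 * radius ** 2))
            weights += lr * h[:, None] * (x - weights)
        # Step (7): stop early once the weights barely change over an epoch.
        if np.max(np.abs(weights - old)) < tol:
            break
    return weights


def cluster_state(weights, x):
    """Map a raw distance vector to a discrete state index (the winning node)."""
    return int(np.argmin(np.linalg.norm(weights - x, axis=1)))


# Hypothetical data: five sensor distances, obstacle either near (~0.5 m) or far (~3 m).
data_rng = np.random.default_rng(1)
near = data_rng.normal(0.5, 0.05, size=(50, 5))
far = data_rng.normal(3.0, 0.05, size=(50, 5))
W = train_som(np.vstack([near, far]), n_out=4, max_iter=200)
s_near = cluster_state(W, np.full(5, 0.5))
s_far = cluster_state(W, np.full(5, 3.0))
```

The point of the clustering is that a continuous distance vector collapses to one of `n_out` discrete states, so the Q-table grows with the number of output neurons rather than with a fine discretization of every sensor.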

2.2.2. Analysis of Discrete State

2.3. Exploration Strategy Based on a Self-Adapting Simulated Annealing Algorithm

2.4. Reward Function

2.5. The Flow of the K-L Algorithm

- (1) When the sensors detect an obstacle, the K-L algorithm is activated.

- (2) First, the Kohonen network outputs the state set, which records the information collected by the sensors.

- (3) Then, the algorithm establishes state–action mapping pairs by traversing the actions available in each state (for example, the AGV moving forward) and stores the pairs in the Q-table.

- (4) Next, the exploration strategy based on self-adapting simulated annealing (SSA) selects the next action based on the Q-table, and the AGV receives the reward fed back by the environment.

- (5) The AGV keeps selecting actions according to the environmental reward until it has bypassed the obstacle.
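Steps (2) to (5) amount to tabular Q-learning over discrete states, with simulated annealing deciding between exploiting and exploring. The sketch below is an assumption-laden illustration rather than the K-L algorithm itself: it uses the common Metropolis-style rule, in which a random action a_r is accepted over the greedy action a_g with probability exp((Q(s, a_r) − Q(s, a_g)) / T), and a fixed geometric cooling schedule T ← λT with λ = 0.95 (the fixed annealing rate listed in the parameter table). The paper's self-adapting temperature rule and potential-energy reward are not reproduced; `ToyCorridor`, `sa_select`, `train_q`, the placeholder rewards, and the other parameter values are hypothetical.

```python
import math
import random


def sa_select(q_row, temperature, rng):
    """Metropolis-style choice between a random action and the greedy action."""
    greedy = max(range(len(q_row)), key=lambda a: q_row[a])
    candidate = rng.randrange(len(q_row))
    delta = q_row[candidate] - q_row[greedy]  # <= 0, since greedy maximizes Q
    # Accept the random candidate with probability exp(delta / T); always if it ties.
    if delta >= 0 or rng.random() < math.exp(delta / max(temperature, 1e-8)):
        return candidate
    return greedy


class ToyCorridor:
    """Hypothetical stand-in environment: move from cell 0 to cell n - 1."""

    def __init__(self, n=6):
        self.n = n
        self.pos = 0

    def reset(self):
        self.pos = 0
        return self.pos

    def step(self, action):  # action 0 = step back, 1 = step forward
        move = 1 if action == 1 else -1
        self.pos = min(self.n - 1, max(0, self.pos + move))
        done = self.pos == self.n - 1
        reward = 1.0 if done else -0.05  # placeholder reward, not the paper's
        return self.pos, reward, done


def train_q(env, n_states, n_actions, episodes=200, alpha=0.5, gamma=0.9,
            t0=1.0, cooling=0.95, seed=0):
    """Tabular Q-learning with simulated-annealing exploration."""
    rng = random.Random(seed)
    q = [[0.0] * n_actions for _ in range(n_states)]  # Q-table of state-action pairs
    temperature = t0
    for _ in range(episodes):
        s, done = env.reset(), False
        while not done:
            a = sa_select(q[s], temperature, rng)
            s_next, r, done = env.step(a)
            target = r if done else r + gamma * max(q[s_next])
            q[s][a] += alpha * (target - q[s][a])  # standard Q-learning update
            s = s_next
        temperature *= cooling  # geometric cooling after every episode
    return q


q_table = train_q(ToyCorridor(), n_states=6, n_actions=2)
policy = [max(range(2), key=lambda a: q_table[s][a]) for s in range(5)]
```

While T is high, almost any random action is accepted, so early episodes explore broadly; as T cools, worse actions are accepted less and less often and the agent settles on the greedy policy (here, always stepping forward).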

2.6. Time Complexity Analysis of the K-L

2.7. Space Complexity Analysis of the K-L

3. Global Path Planning Algorithm Based on the Improved Genetic Algorithm

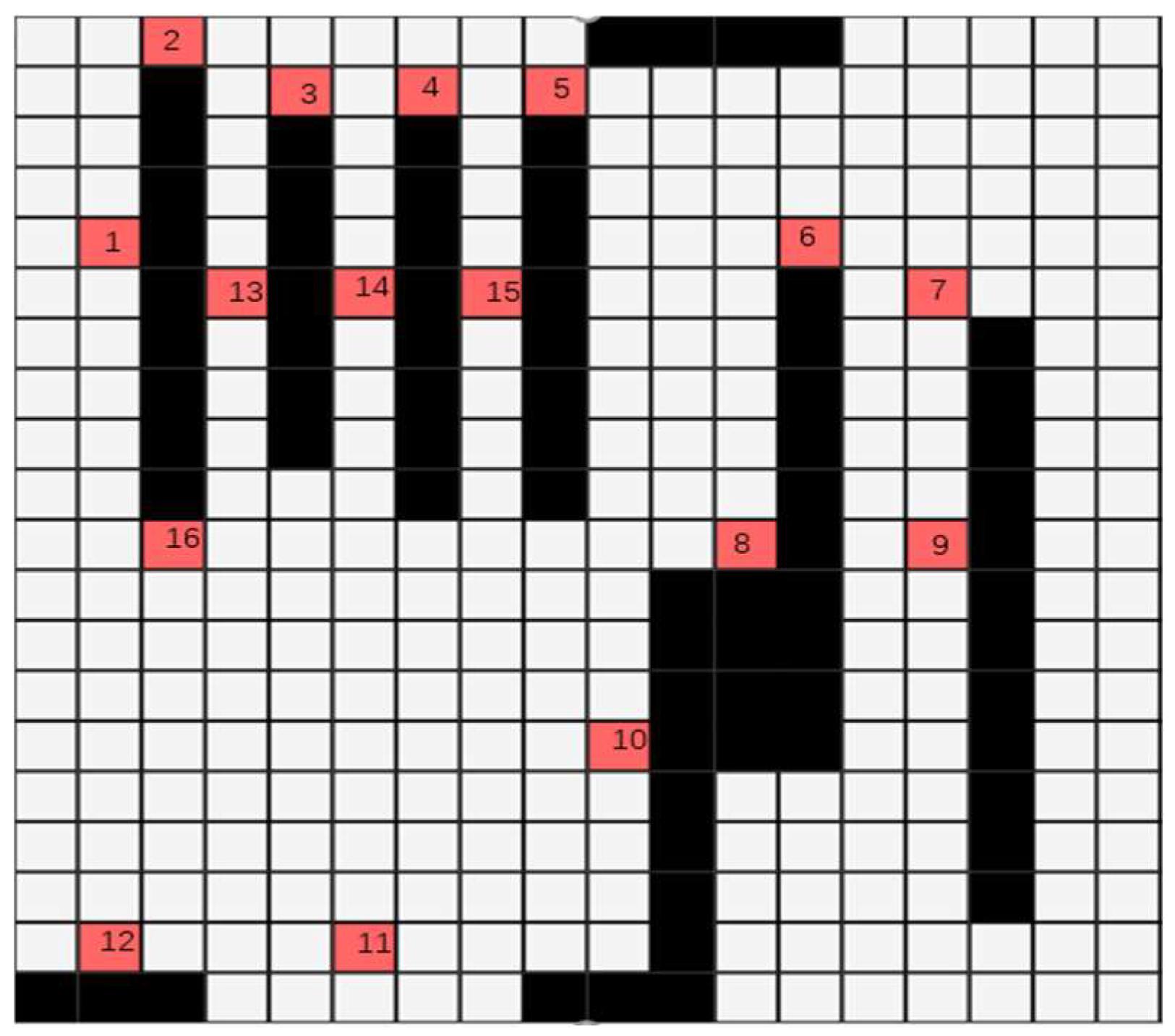

3.1. Environmental Modeling

3.2. Coding Method

3.3. Optimization of the Fitness Function

3.3.1. Path Smoothing Factor

3.3.2. Node Busy Factor

3.3.3. Weight-Based Polling Algorithm

| Algorithm 1 Weight-based smoothing polling algorithm. |
|---|
| WBSP Procedure |
| Input: number of AGVs in waiting status, set of current AGV weights, set of fixed AGV weights, and serial number of the best AGV, Best. |
| Output: Best |
| for each AGV |
| Initialize the current weight of the AGV to 0 |
| Calculate the fixed weight of the AGV |
| end for |
| , |
| while n < |
| Randomly select an AGV |
| = + |
| if < |
| n = n + 1; |
| end while |
| print Best |
| end procedure |
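Algorithm 1 keeps a current weight and a fixed weight for every AGV and adds the fixed weight to the current weight on each pass, which resembles smooth weighted round-robin scheduling (the scheme used, for example, by nginx's weighted upstream balancing). The Python sketch below implements that standard scheme under this assumption; it is not a transcription of Algorithm 1. In particular, it deterministically picks the AGV with the largest current weight and subtracts the total weight from the winner instead of using Algorithm 1's random selection step, and how the fixed weights are derived from congestion is not modeled, so the weights in the example are hypothetical.

```python
from typing import Dict, List


def smooth_weighted_round_robin(fixed: Dict[str, int], rounds: int) -> List[str]:
    """Return the AGV chosen in each of `rounds` selections."""
    current = {agv: 0 for agv in fixed}  # current weights start at 0
    total = sum(fixed.values())
    picks = []
    for _ in range(rounds):
        for agv, w in fixed.items():
            current[agv] += w  # current weight += fixed weight
        best = max(current, key=current.get)  # largest current weight wins (first on ties)
        current[best] -= total  # penalize the winner by the total weight
        picks.append(best)
    return picks


picks = smooth_weighted_round_robin({"AGV1": 5, "AGV2": 1, "AGV3": 1}, rounds=7)
# ['AGV1', 'AGV1', 'AGV2', 'AGV1', 'AGV3', 'AGV1', 'AGV1']
```

Over one cycle of seven picks, each AGV is chosen in proportion to its fixed weight, but the lower-weight AGVs are interleaved with the high-weight one instead of being served in a burst, which is what "smoothing" refers to.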

4. Two-Level Path Planning Method

- (1) The AGV's onboard SLAM system scans the working environment and builds a raster map from the acquired map information.

- (2) The T-GA algorithm first obtains the optimal set of global path nodes; the A* algorithm then generates the shortest path between consecutive nodes, with minimum path length as its objective.

- (3) Using the global set of path nodes, we divide the whole path into multiple subpaths, which yields multiple local target points.

- (4) For each subpath, the AGV's detection radar monitors for dynamic obstacles. When a dynamic obstacle appears, the K-L algorithm is enabled to avoid it and obtain the locally optimal path, again with the shortest path length as the goal; if there are no obstacles, the AGV continues along the path planned by the A* algorithm.
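Steps (2) and (3) chain A* searches between consecutive global nodes on the raster map, so each A* leg becomes one subpath whose endpoint is a local target point. The Python sketch below assumes a 4-connected occupancy grid (0 = free, 1 = occupied), unit step cost, and a Manhattan-distance heuristic; the node list stands in for T-GA output and is hypothetical, as are the names `astar` and `plan_subpaths`. The K-L obstacle-avoidance hand-off in step (4) is not modeled.

```python
import heapq
from typing import Dict, List, Optional, Tuple

Cell = Tuple[int, int]


def astar(grid: List[List[int]], start: Cell, goal: Cell) -> Optional[List[Cell]]:
    """Shortest 4-connected path on a 0/1 occupancy grid, or None if unreachable."""
    rows, cols = len(grid), len(grid[0])

    def h(c: Cell) -> int:  # admissible Manhattan-distance heuristic
        return abs(c[0] - goal[0]) + abs(c[1] - goal[1])

    open_heap = [(h(start), 0, start)]  # entries are (f = g + h, g, cell)
    g: Dict[Cell, int] = {start: 0}
    parent: Dict[Cell, Optional[Cell]] = {start: None}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:  # walk parents back to reconstruct the path
            path = []
            node: Optional[Cell] = cell
            while node is not None:
                path.append(node)
                node = parent[node]
            return path[::-1]
        if cost > g[cell]:
            continue  # stale heap entry; a cheaper route was already found
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < g.get(nxt, float("inf")):
                    g[nxt] = new_cost
                    parent[nxt] = cell
                    heapq.heappush(open_heap, (new_cost + h(nxt), new_cost, nxt))
    return None


def plan_subpaths(grid: List[List[int]], global_nodes: List[Cell]) -> List[List[Cell]]:
    """Split the route into A* subpaths between consecutive global nodes."""
    subpaths = []
    for a, b in zip(global_nodes, global_nodes[1:]):
        leg = astar(grid, a, b)
        if leg is None:
            raise ValueError(f"no path from {a} to {b}")
        subpaths.append(leg)  # leg[-1] is this subpath's local target point
    return subpaths


grid = [
    [0, 0, 0, 0, 0],
    [1, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 1],
    [0, 0, 0, 0, 0],
]
legs = plan_subpaths(grid, [(0, 0), (2, 0), (4, 4)])
```

Keeping each leg separate is what lets a local planner take over for a single subpath when a dynamic obstacle appears, and then resume at that subpath's target point without replanning the whole route.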

5. Experimental Results and Discussion

5.1. Parameter Settings

5.2. Experiment 1: Experiments to Verify the Effectiveness of the K-L Algorithm

5.3. Experiment 2: Comparison of the Convergence Time of K-L Algorithm and the Improved Q-Learning Algorithm

5.4. Experiment 3: Comparison of the Convergence Time of K-L Algorithm and Q-Learning Algorithm Based on Clustering Network Improvement

5.5. Experiment 4: Comparing the Impact of Different Exploration Strategies on the K-L Algorithm

5.6. Experiment 5: Experiments Based on GA-KL Path Planning Method for Real Environments

5.6.1. Experiments on Frontal Avoidance of Dynamic Obstacles by AGVs Based on the GA-KL Method

5.6.2. Experiments on Side Avoidance of Dynamic Obstacles by AGVs Based on the GA-KL Method

5.7. Experiment 6: GA-KL Method Stability Test in a Narrow Operating Environment

- (1) Size of the map: 20 m × 10 m.

- (2) Coordinates of starting point: (−9.50 m, 5.87 m); coordinates of target point: (6.25 m, 1.83 m).

- (3) Average speed of AGVs: 0.8 m/s.

5.8. Experiment 7: GA-KL Method Stability Test in a Highly Congested Environment

6. Conclusions

- (1) The K-L algorithm combines an exploration strategy based on an adaptive simulated annealing algorithm, Kohonen network state classification, and an improved reward function based on potential energy. It handles path planning in complex environments effectively, with fast convergence and satisfactory optimization results. Simulation and field experiments further verify its effectiveness: on average, the convergence time is reduced by about 6.3% and the AGV path length by about 4.6%.

- (2) The GA-KL method uses the T-GA algorithm for global path planning and the K-L algorithm for local path planning. T-GA is a genetic algorithm optimized with a path smoothing factor and a node busy factor; it coordinates the path planning of multiple AGVs so that they complete their tasks as quickly as possible. In addition, GA-KL integrates a scheduling policy into global path planning, which can obtain a better dynamic scheduling policy for multiple AGVs in different environments and under different congestion conditions. Finally, experiments show that, compared with other path planning methods, GA-KL reduces the total path length by 8.4% and the total path completion time by 12.6%, on average.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Number of Divisions | Number of States |
|---|---|
| 4 | 3072 |
| 5 | 9375 |
| 6 | 23,328 |
| 7 | 50,421 |
| 8 | 98,304 |
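Every entry in the state-count table above equals 3·d^5, where d is the number of divisions: for example, 3 × 4^5 = 3072 and 3 × 8^5 = 98,304. One reading consistent with this, offered only as an assumption since the text does not state it, is five distance readings, each discretized into d levels, combined with three values of one additional discrete variable. The short check below reproduces the column; `raw_state_count` and its parameter names are hypothetical.

```python
def raw_state_count(divisions: int, n_readings: int = 5, extra_states: int = 3) -> int:
    """Hypothetical decomposition: extra_states * divisions ** n_readings."""
    return extra_states * divisions ** n_readings


table = {4: 3072, 5: 9375, 6: 23328, 7: 50421, 8: 98304}
for d, expected in table.items():
    assert raw_state_count(d) == expected, (d, raw_state_count(d), expected)
```

Because the count scales as d^5, each extra division multiplies the raw state space sharply (doubling d from 4 to 8 multiplies it by 32), which illustrates how quickly a naive grid discretization of the sensor readings grows.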

| Step | Details | Space Complexity |
|---|---|---|
| 1 | Cluster states with the Kohonen network | |
| 2 | Select the next action according to the exploration strategy | |
| 3 | Update the Q-value matrix | |
| 4 | Check whether the algorithm has reached the stop condition; if not, return to step 2 | |
| 5 | Output | |

| Serial Number | Number of Passing AGVs | Number of Times AGVs Are Used |
|---|---|---|
| 1 | | |
| 2 | | |
| 3 | | |
| … | … | … |
| M | | |

| Fixed Annealing Rate | Initialization Temperature | Learning Rate | Penalty Coefficient | Penalty Value of the Reward Function | Default Reward Value of the Reward Function |
|---|---|---|---|---|---|
| 0.95 | 0.2 | 0.9 | 1 | −0.5 | |

| Average Speed | Turning Speed | Penalty Factor | Number of Nodes | Maximum Number of AGVs | Time Bonus Factor | Maximum Value of Node Busy Factor |
|---|---|---|---|---|---|---|
| 0.80 m/s | 0.35 m/s | a = 2.1 | 15 | 5 | | |

| Algorithm | Convergence Time (s) | Number of States |
|---|---|---|
| K-L | 116.58 | 1500 |
| PIRL | 178.52 | 9863 |
| HARL | 282.35 | 10,085 |

| Algorithm | Convergence Time (s) | Number of States |
|---|---|---|
| K-L | 192.86 | 1500 |
| PIRL | 255.88 | 16,144 |
| HARL | 695.35 | 44,728 |

| Method | Running Time (s) | Path Length (m) |
|---|---|---|
| GA-KL | 13.28 | 12.08 |
| GP-AP | 26.37 | 11.80 |
| BP-P | 23.12 | 11.80 |
| HARL | 17.31 | 12.64 |
| PIRL | 20.35 | 13.40 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Bai, Y.; Ding, X.; Hu, D.; Jiang, Y. Research on Dynamic Path Planning of Multi-AGVs Based on Reinforcement Learning. Appl. Sci. 2022, 12, 8166. https://doi.org/10.3390/app12168166


Bai, Yunfei, Xuefeng Ding, Dasha Hu, and Yuming Jiang. 2022. "Research on Dynamic Path Planning of Multi-AGVs Based on Reinforcement Learning" Applied Sciences 12, no. 16: 8166. https://doi.org/10.3390/app12168166
