1. Introduction

Modern electric power systems must deal with unprecedented conditions for power-quality (PQ) deterioration, such as the growing presence of distributed, renewable-energy-based generation units, or the countless high-end electronic devices loaded as either electrical equipment controllers [1] or standalone appliances. This situation has drawn researchers’ attention to PQ management activities and, specifically, to PQ indices forecasting. In particular, forecasting the PQ indices for steady-state power systems, e.g., voltage deviation, voltage unbalance, frequency deviation, or flickering, is a significant problem. A substantial amount of research focuses on implementing early-warning mechanisms able to predict PQ disturbances in the future operation of different electric power configurations [1,2,3].

Most state-of-the-art research on PQ forecasting deals with small power arrangements. The data may come from real or simulated systems that provide a basis for analyzing the impact of renewable-energy ingestion on isolated power systems. Although valuable, these experiments do not face the data-scale problem of forecasting for city- or regional-size power distribution grids. On the other hand, PQ forecasting projects that use data from large distribution grids usually employ information from only a few power quality meters (PQM). As a result, a methodology for PQ indices forecasting in big-data environments still needs to be investigated.

This article presents the methodology, architecture, and results of a short-term PQ indices-forecasting project developed in Mexico for the electric-power distribution grid of CFE (the Spanish acronym for Comisión Federal de Electricidad, Federal Board of Electric Power), the state-owned enterprise responsible for the Mexican state grid and also the primary electric-power provider in the country. The resulting system, MIRD, applies artificial-intelligence techniques (machine-learning-based time-series forecasting) to monitor the evolution of significant PQ indices. This monitoring process occurs at crucial coupling points of the distribution grid. Based on the Mexican Energy Law reform in the mid-2010s, CRE (the Spanish acronym for Comisión Reguladora de Energía, Energy Regulation Board) issued the general conditions and criteria for distributing electric power in Mexico. This regulation sets strict requirements on the quality of delivered energy in terms of detailed PQ indices intervals and values. In particular, it requires that CFE’s power distribution grid fulfill the following requirements (see Table 1):

Operation voltage. The voltage at which a quality-control node (or quality node) operates must lie within a defined interval for at least 90% of the 10-min voltage measurements in the node. This acceptance interval is 93–105% of the node’s nominal voltage. The operation voltage is calculated as the average of the three per-phase RMS voltages, as $V = (V_a + V_b + V_c)/3$.

Electric power factor. The power factor measured at a distribution circuit must be greater than or equal to 0.95 in at least 80% of a given set of circuits. The power factor is calculated as the ratio of the real power absorbed by the load to the apparent power that flows through the circuit.

Current unbalance. The three-phase current flowing into a distribution circuit must be within the required balance interval, which is 15% in at least 80% of a given set of circuits. The current unbalance is calculated as follows: (a) the per-phase current average is determined, (b) the largest per-phase current deviation from the average is determined, and (c) the current unbalance is the ratio of the largest deviation and the current average, expressed as a percentage.
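The three requirements above can be sketched in a few lines of Python. This is a minimal illustration, not CFE's or MIRD's implementation: all function names are hypothetical, and the apparent power is assumed to be $\sqrt{P^2 + Q^2}$ (sinusoidal conditions), which the text does not state explicitly.

```python
import math


def operation_voltage(v_a, v_b, v_c):
    """Average of the three per-phase RMS voltages."""
    return (v_a + v_b + v_c) / 3.0


def voltage_compliant(readings, nominal, low=0.93, high=1.05, share=0.90):
    """True if at least `share` of the readings lie within 93-105% of nominal.

    `readings` is a list of (v_a, v_b, v_c) tuples, one per 10-min measurement.
    """
    in_band = [low * nominal <= operation_voltage(*r) <= high * nominal
               for r in readings]
    return sum(in_band) / len(in_band) >= share


def power_factor(p_kw, q_kvar):
    """Real power over apparent power; apparent power assumed sqrt(P^2 + Q^2)."""
    apparent = math.hypot(p_kw, q_kvar)
    return p_kw / apparent if apparent > 0 else float("nan")


def current_unbalance(i_a, i_b, i_c):
    """Largest per-phase deviation from the average current, as a percentage."""
    avg = (i_a + i_b + i_c) / 3.0
    largest = max(abs(i - avg) for i in (i_a, i_b, i_c))
    return 100.0 * largest / avg


def share_meeting(values, predicate):
    """Fraction of circuits whose index satisfies the given requirement."""
    return sum(1 for v in values if predicate(v)) / len(values)
```

For instance, `share_meeting(pf_per_circuit, lambda pf: pf >= 0.95) >= 0.80` would express the power-factor requirement for a given set of circuits, where `pf_per_circuit` is a hypothetical list of per-circuit power factors.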

Under these regulations, CFE requires an efficient early-warning system to meet standards and avoid penalties determined by CRE. In the past, CFE’s monitoring engineers received only a weekly report listing the performance of the distribution circuits under their command. If CFE did not meet the standards for any reason, there was nothing they could do to avoid fines issued to CFE. By providing a prediction or estimation of the PQ indices for the following week, engineers can act ahead of time, fix those circuits’ operating conditions, and improve their performances in adequate time frames. If predictions are accurate and engineers take corrective actions properly, the company saves substantial money by avoiding economic penalties. Not being fined by CRE is a desirable situation, leading to the more efficient management of the distribution networks. In the end, the total cost of energy distribution lowers, which entails lower electricity costs to the consumers.

CFE manages power distribution in Mexico on the basis of 16 divisional units and 150 distribution zones (see Figure 1). The MIRD project was planned and developed inside DCO (the Spanish acronym for Division Centro Occidente, Central-West Division), one of the most innovative and efficient divisional units of CFE.

Table 2 shows power infrastructure and capacity figures for both CFE as a whole and DCO only. These numbers give a clear idea of the importance and magnitude of the DCO distribution grid. Moreover, a total of 5953 services connected to the DCO grid as distributed generation (as of 2021) reinforce the need for an adequate early-warning system for PQ monitoring. Across its 145 power substations, DCO is in charge of approximately 800 distribution circuits. Those distribution circuits are of considerable size, with active power consumption ranging from 100 kW to 8 MW and reactive power from 0 to 3 MVAR.

Every Sunday night, MIRD generates the forecast of the variables of interest of each circuit for the following week. Based on those forecasts, it computes the predicted PQ indices for that week. If there is a possibility that PQ indices will not meet the standard, MIRD sends email messages to the engineers in charge of monitoring the circuits delivering low-quality energy. Once engineers receive the message, they verify the circuits’ operating conditions and collect more information from MIRD’s web interface (or other systems that CFE has established for its day-to-day operation). Distribution engineers can then take corrective measures to prevent any incidents that would lower the circuits’ PQ indices under their responsibility. As a result, the reliability of the distribution system has increased, as well as the quality of the electric power delivered to customers.

MIRD is required to be an efficient early-warning system capable of acquiring and processing information at a big-data scale. PQMs deliver data to MIRD from approximately 700 distribution circuits and nearly 150 quality-control nodes. Each circuit contains five variables of interest and each node three (although more than 30 variables are monitored, reported, and stored in the system). This requires nearly 4000 univariate forecasting models capable of producing hourly forecasts for a one-week horizon. Additionally, as in most data-science endeavors, the data are noisy and contain outliers and missing information. It is also worth noting that, due to CFE’s data governance policies, developing our solution based on specialized services from public cloud providers was not an option. Therefore, MIRD was implemented entirely on-premises, based on a fast and resilient software architecture capable of dealing with the huge-scale and high-speed requirements posed by the problem. Additionally, fundamental components of that architecture had to take care of both challenging data-preprocessing operations (facing many types of data disturbance across the data-acquisition stage) and the urgent need to balance forecasting accuracy and speed.
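As a rough check of the model count, assume exactly 700 circuits and 150 nodes (the text gives both figures only approximately), each with its five or three variables of interest:

$$700 \times 5 + 150 \times 3 = 3500 + 450 = 3950 \approx 4000 \ \text{univariate models.}$$

With hourly forecasts over a one-week horizon (168 values per model), a weekly run under the same assumption would produce about

$$3950 \times 168 = 663{,}600 \ \text{forecast values.}$$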

In that context, the main contributions of this research are as follows:

The complete implementation of a large-scale machine-learning (ML) pipeline focused on short-term PQ indices forecasting for a regional-size, state distribution grid.

We developed our ML pipeline with the integration of modern, widely tested, open-source computing frameworks such as the big-data unified engine Apache Spark or the scientific computation library SciKit Learn.

To our knowledge, no other regional power distribution grid in Latin America has made a parallel effort in PQ indices forecasting or early-warning operations.

The rest of the article is structured as follows:

Section 2 presents an account of work found in the literature that deals with the problem of short-term PQ indices forecasting.

Section 3 describes the overall architecture of MIRD as a data pipeline centered on large-scale ML operations.

Section 4 and Section 5 present in detail the two most important components of the MIRD pipeline: data preprocessing and forecasting.

Section 6 presents examples of the results of the MIRD operation.

Finally, Section 7 presents the conclusions and lines of future work.

2. Related Work

A significant part of the early research on PQ forecasting focuses on autonomous (off-grid), renewable-energy-based, actively controlled, domestic or semi-industrial power systems loaded with multiple real devices. The importance of such isolated configurations is increasing, and the connection of local, renewable energy sources is a factor in PQ deterioration. Therefore, PQ prediction becomes key to monitoring and early-warning activities. A project developed by a team from the Technical University of Ostrava constitutes an example of this situation. The first stage of this project [

3] developed a PQ forecasting model as an integrated part of active demand side management (ADSM). The model is based on a multi-layer artificial neural networks (ANN) and produces multi-step predictions for the following steady-state PQ indices: total harmonic distortion of voltage (THDV), total harmonic distortion of current (THDC), long-term flicker severity, and power frequency. In a subsequent stage of the project [

4], the authors developed an ML classifier based on multiple algorithms, including ANN, support vector machine (SVM), decision tree (DT), AdaBoost, and random forest (RF), that outperformed their previous multi-layer ANN predictor. Moving the prediction output from continuous PQ-index values to a binary state classifier (PQ failure/not PQ failure) allowed the team to experiment at a higher data resolution for longer. By expanding the classification mechanism to a 32-class problem, the forecasting output became a vector of binary values for five major steady-state PQ indices (they added short-term flicker severity to the original problem). The project uses a random decision forest algorithm to produce the classification model. The authors use multi-objective optimization via particle swarm optimization (PSO) and Non-Dominated Sorting Genetic Algorithm II (NSGA-II) to adjust the model’s hyper-parameters. Recently, a team from the Technical University of Ostrava worked on data from an experimental off-grid platform to compare predictive performances and computation times for seven approaches to PQ indices forecasting. ANN, linear regression (LR), interaction LR, pure quadratic LR, quadratic LR, bagging DTs, and boosting DTs were used to forecast frequency, voltage, THDV, and THDC based on the history values of the system power load and weather information (solar irradiance, wind speed, air pressure, and air temperature) [

5]. The dataset used for this research comprises only two weeks of data, at 10-min resolution, for model training and only 14 values, at hourly resolution, for inference.

Weng et al. [

6] use an ANN-based PQ forecasting model to predict steady-state PQ indices at the point of common coupling (PCC). They predict voltage deviation (VD), frequency deviation, three-phase voltage unbalance (VU), and harmonic distortion using environmental variables (illumination intensity and temperature) and power load values as inputs to the model. Three neural networks with different structures compose the prediction model: back propagation (BP), radial basis function (RBF), and general regression neural networks (GRNN). Hua et al. [

7] predict voltage deviation (VD), frequency deviation, and harmonics using a hybrid deep ML model that includes convolutional neural network (CNN) and long short-term memory (LSTM) layers. They tested their model using an active distribution network simulated on the basis of the IEEE-13 node. Xu et al. [

8] also used simulated data from the IEEE-13 node to train a model for PQ indices forecasting. The model is a neural network which applies variational mode decomposition (VMD) to the power signal. The resulting power components are used as features for an LSTM-based layer that computes a predicted power signal. The difference between real and predicted power values (residuals) are passed to a one-dimension CNN which finally outputs the target PQ indices values. This architecture is tested on a single time series of 32 K values; however, this time series is further sampled before the final layer to datasets of 4500 and 500 values for training and inference, respectively.

Several projects have investigated PQ forecasting models for city- or regional-size distribution grids. The increased data and computation requirements driven by this context call for diverse approaches. Bai et al. [

2] developed a PQ prediction, early-warning, and control system for PCC between a 200 MW wind farm and the distribution network. This system includes a clustering analysis based on the dynamic time warping (DTW) distance performed over a set of historic, monitored environmental variables and PQ index values. A probability distribution is fitted for each data cluster and then used as the base for predicting PQ indices one day ahead via a Monte Carlo process. However, the dataset reported for this research comprises only 10 days of meter readings at a 10-min resolution. Song et al. [

9] predicted steady-state PQ indices (VD, frequency deviation, three-phase VU, and THDV) using cluster analysis and SVMs. The input to the model comprises calendar information (weekday and holiday indicators), environmental-condition values (temperature, humidity, atmospheric pressure, rainfall), and power load values (active power, reactive power). The experimental study extends to one year of data; however, the dataset comprises only a single 35 kV substation of the Chinese state grid. Pan et al. [

10] forecast the variables VD, THDV, and three-phase VU for a regional state grid. Their forecasting model uses phase-space reconstruction on the history of the predicted variables, least-squares SVM, and PSO. They use 2 years of readings at daily resolution for training the forecasting model; however, the dataset comprises only a single 220 kV substation.

Yong et al. [

11] predict VD using principal component analysis (PCA) for dimensionality reduction, affinity propagation (AP) clustering, and a back propagation (BP) neural network. Besides the history of predicted variables, the model accepts relevant meteorological data (e.g., temperature, dew point, humidity, air pressure, wind speed, and wind direction) as exogenous information. Datasets come from the Chinese state grid and comprise 98 days in the training dataset, and two extra days for the test set, at hourly resolution. Sun et al. [

12] forecast voltage deviation and the fifth harmonic content of voltage using ARIMA and a BP neural network. Datasets come from a state grid but are very small (only 29 readings at a 1-day resolution for the fifth harmonic and 310 readings at 1-min resolution for voltage deviation). Michalowska et al. [

13] developed a PQ forecasting model to predict the appearance of three PQ disturbance types in the Norwegian power distribution grid: earth faults, rapid voltage changes, and voltage dips. The model takes as input the history of the target variables and weather information; it uses random forests (RF) to produce a binary classifier. Datasets are relatively large, with five years of data at daily resolution. Zhang et al. [

14] forecasted THDV using a Bayesian-optimized bidirectional LSTM neural network. Datasets also come from a city grid but comprise only one week of data, at 10-min resolution, for 5 PQM.

In close line with the research projects mentioned above, we built MIRD to produce ML-based, short-term predictions (1-h resolution, 1-week ahead) for three steady-state PQ indices: power factor (PF), three-phase current unbalance (CU), and VD. The MIRD forecasting process is indirect: to forecast these PQ indices, MIRD first builds predictions for the variables required for the computation of the PQ indices, that is, the active (kW) and reactive (kVAR) components of electric power for PF, three-phase voltage for VD, and three-phase current for CU. Forecasting of active and reactive power components lies in the domain of short-term power load forecasting, covered in a vast body of literature [

15]. However, MIRD differs substantially from the research projects covered in this section in the size of the problem it deals with: eight primary variables must be forecast in the short term for 700+ substation metering devices, based on history datasets that span from several months to years. Another significant difference is that MIRD is an ML-based PQ forecasting project that lays the foundation for an extensive, tailor-made big-data architecture, which enables CFE to perform powerful and diverse analytics on multiple levels of consumer targets. Section 3 addresses considerations on this architecture design.

4. Data Preprocessing

Real-world databases are highly susceptible to noise, missing data, and outliers, among other disturbances. Examples of noise sources are measuring devices, rounding operations, and transmission mechanisms. Intermittent operation of measuring or transmission devices produces missing data. Periods of missing data can be as short as one reading or as long as several months. Outliers are out-of-range measurements. We can see them as a form of noise but, in some cases, they are authentic peaks (positive or negative) that result from non-characteristic behavior of the underlying system that produces the data. Noise, missing data, and outliers are responsible for data quality deterioration; these problems are more likely to happen when the system handles vast amounts of data (up to gigabytes). Data analytics pipelines such as MIRD must specifically deal with this problem as the lower the input data quality, the lower the quality of the results.

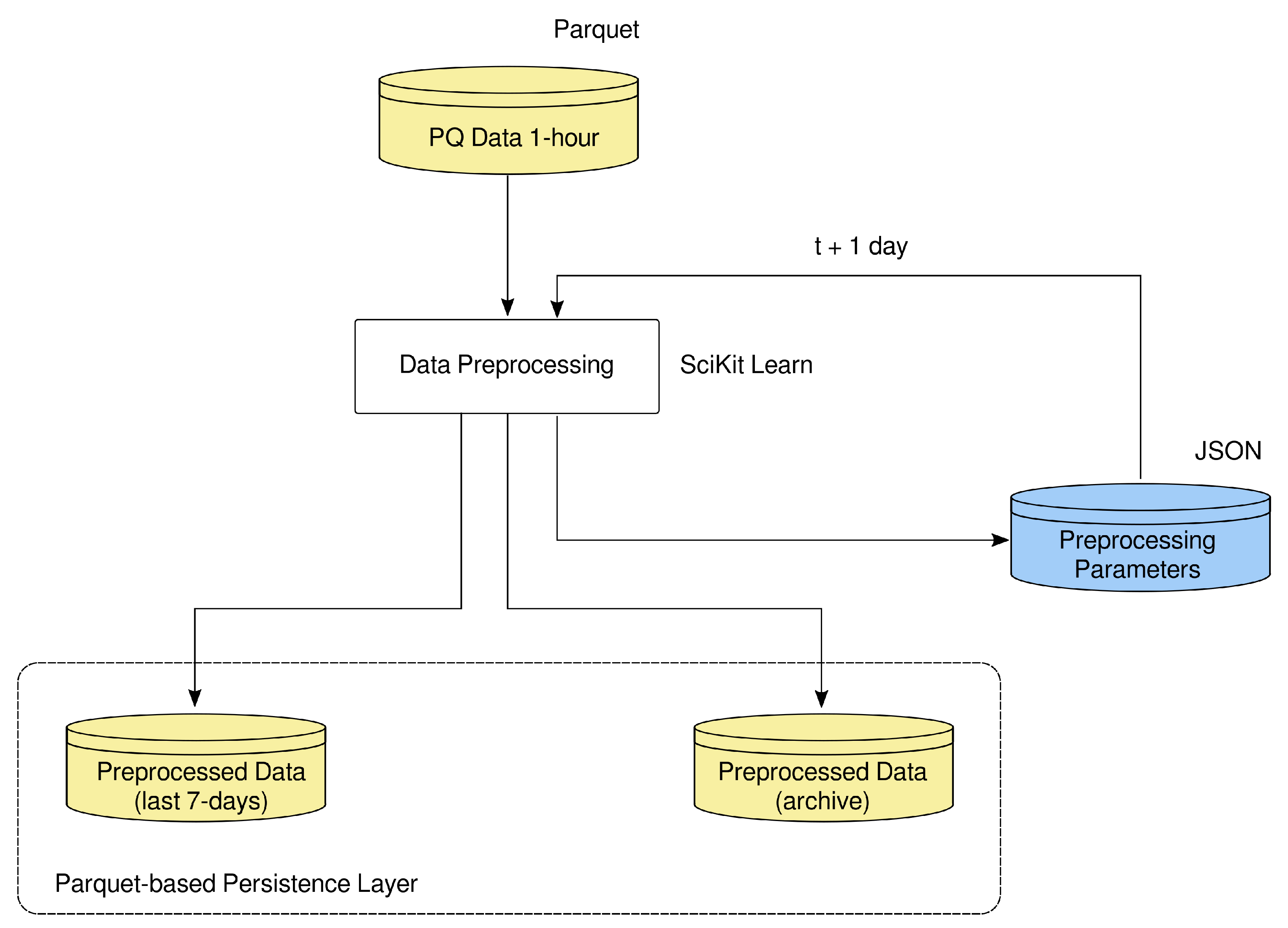

Before describing the operations of the data-preprocessing component, it is essential to understand how MIRD acquires data. CFE implemented an Internet-of-Things system across most of its assets and equipment. This system includes a PQM at the coupling point of every circuit or quality node. As previously stated, each PQM records more than 30 electrical variables at a 10-min resolution. A relational database called SIMOCE (the Spanish acronym for Sistema de Monitoreo de Calidad de la Energia, Power Quality Monitoring System) collects PQM readings, which serve CFE’s distribution engineers throughout the country. The data-ingestion component of MIRD uses SIMOCE as its data source. The data-preprocessing component then proceeds in four steps. The first step is a sub-sampling operation: PQ measurements, originally recorded at 10-min intervals, are aggregated by averaging to a 1-h resolution, which results in 168 values per week. The second step locates missing data and, where data are absent, applies an imputation process; this operation applies only when a few measurements are missing. The third step detects outliers and, if any exist, applies the same imputation process to them. In the fourth step, the preprocessed time series is persisted in the artifact-storage (Parquet) layer. The following sections explain these steps in detail.
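The sub-sampling step can be illustrated with a short, standard-library Python sketch. It is only an illustration under stated assumptions: the function name is hypothetical, and averaging whatever readings are available within an hour (rather than rejecting incomplete hours) is an assumption, not a documented MIRD rule.

```python
from collections import defaultdict
from datetime import datetime, timedelta


def hourly_mean(readings):
    """Aggregate (timestamp, value) pairs at 10-min resolution to hourly means.

    A value of None marks a missing reading. An hour with no valid reading
    maps to None so that later steps can treat it as missing data.
    """
    buckets = defaultdict(list)
    for ts, value in readings:
        hour = ts.replace(minute=0, second=0, microsecond=0)
        bucket = buckets[hour]          # creates the hour even if value is None
        if value is not None:
            bucket.append(value)
    return {hour: (sum(vals) / len(vals) if vals else None)
            for hour, vals in sorted(buckets.items())}


# One week of 10-min readings: 7 * 24 * 6 = 1008 samples -> 168 hourly values.
start = datetime(2021, 1, 4)
week = [(start + timedelta(minutes=10 * i), float(i % 6)) for i in range(1008)]
hourly = hourly_mean(week)
```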

4.1. Missing Data

Missing data result when the measuring device or the database does not record the value of the variable of interest at a given instant of the time series. Missing data can be caused by measurement, transmission, or recording problems. Douglas et al. [19] provide an example, replicated in Figure 8. The plot shows one missing observation on 13 January and three successive days (16, 17, and 18) without recorded information. In this step of data preprocessing, we count the total observations in the weekly time series. $M$ represents the missing data subset in the time series $S$. If too many observations are missing, the system does not record the time series: there is insufficient data to pass this PQM-variable combination to the next pipeline component. If only a few observations are missing, an imputation process is executed. A recovery operation uses the Incomplete Datasets archive of the data-ingestion component.

4.2. Data Imputation

Imputation is the process of filling in missing data or replacing outliers. Imputation replaces missing or erroneous values with a “likely” value based on other available information. Imputation allows MIRD to work with statistical and machine-learning techniques designed to handle complete datasets (see [19]). The imputation process replaces the missing data or outliers with the average of the last five observations. Let us denote the time series by $S$ (see Section 5.1); each missing entry, $s_t$, in the missing data or outliers set $M$, detected at time $t$, can be replaced as indicated in Equation (1),

$$s_t = \frac{1}{5} \sum_{i=1}^{5} s_{t-i} \tag{1}$$

The pipeline executes this process twice, once to perform imputation for missing data and again to impute outlier values.
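A minimal Python sketch of this imputation rule follows. The function name is hypothetical, and two behaviors are assumptions not stated in the text: already-imputed values are reused when several consecutive entries are flagged, and entries near the start of the series use however many predecessors exist.

```python
def impute_mean_of_last(series, flagged, window=5):
    """Replace each flagged position with the mean of the previous `window` values.

    `series` is a list of floats (None for missing); `flagged` is the set of
    indices to replace (missing entries or detected outliers).
    """
    out = list(series)
    for t in sorted(flagged):
        previous = [v for v in out[max(0, t - window):t] if v is not None]
        if previous:                      # leave the gap if nothing precedes it
            out[t] = sum(previous) / len(previous)
    return out


# Missing-data pass: flag the None entries, then impute them.
raw = [1.0, 2.0, 3.0, 4.0, 5.0, None, 7.0]
missing = {i for i, v in enumerate(raw) if v is None}
clean = impute_mean_of_last(raw, missing)
```

The same function can be called a second time with the indices returned by an outlier detector, mirroring the two passes described above.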

4.3. Outliers

Time-series data can be affected by isolated events, perturbations, or errors that create inconsistencies and produce unusual data that is not consistent with the general behavior of the time series; those unusual data points are called outliers. Outliers may result from external events or from measuring or recording errors (see [20]). There are two types of outliers: global and local. Figure 9 shows global (squares) and local outliers (circles). Different methods exist to detect outliers; in this work, we use the three-sigma method for detecting the global outliers and the local outlier factor (LOF) method for detecting the local ones.

4.3.1. Global Outliers

In a time series, a global outlier is one observation that deviates significantly from the rest of the data [21]. We use the three-sigma rule, which gives the percentage of values that lie within a band around the mean of a normal distribution. (2) expresses the probability of a data item lying within the $3\sigma$ band,

$$P(\mu - 3\sigma \le x \le \mu + 3\sigma) \approx 0.9973 \tag{2}$$

where $x$ is an observation of a normally distributed random variable, $\mu$ is the mean of the distribution, and $\sigma$ is the standard deviation. The three-sigma rule is a conventional heuristic: 99.7% of a normally distributed population lies within three standard deviations of the mean. Therefore, values that lie outside those limits are unlikely to be produced by the underlying process (see Figure 9) and are considered outliers.
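The three-sigma test can be sketched as follows. This is an illustrative version only: the function name is hypothetical, and estimating $\mu$ and $\sigma$ from the same series that is being screened (with the population standard deviation) is an assumption.

```python
from statistics import mean, pstdev


def three_sigma_outliers(series):
    """Return the indices of values farther than three standard deviations
    from the mean of the series."""
    mu = mean(series)
    sigma = pstdev(series)
    return [t for t, x in enumerate(series) if abs(x - mu) > 3 * sigma]


# A flat signal with a single spike at the end.
signal = [10.0] * 50 + [100.0]
spikes = three_sigma_outliers(signal)
```

Note that in very short series a single extreme value inflates $\sigma$ enough to hide itself, so the rule is more meaningful on windows of at least a few dozen samples.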

4.3.2. Local Outliers

Local outliers are more challenging to detect than global ones. We need to know the nature of the time series to find local outliers. For example: “The current temperature is 27 °C. Is it an outlier?”. It depends, for example, on the time and location. In the city of Morelia, it is an outlier on a December night; on a May day in Morelia, the same measurement is usual. Whether today’s temperature is an outlier depends on the context: the date, time, location, and possibly some other factors [21]. We used the local outlier factor (LOF) method in this situation [22]. The LOF method compares data points with the data in their local neighborhoods rather than with the global data distribution. LOF is an outlier detection technique based on the density around a point; the density around normal data must be higher than the density around outliers. LOF compares the relative density of a point against that of its neighbors. That ratio is an indicator of the degree to which objects are outliers. High-density points have many neighbors at a close distance, and low-density points have fewer. A typical distance measure is the Euclidean distance defined in (3),

$$d(p, o) = \sqrt{\sum_{i=1}^{m} (p_i - o_i)^2} \tag{3}$$

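where $m$ is the dimensionality of the data points. Let $C$ be a set of objects in an $m$-dimensional space and $p$ a query object. Let us denote $\mathrm{sort}_p(C)$ as the array of objects $o \in C$, sorted by distance to $p$. The $k$-nearest neighbor ($\mathrm{NN}_k$) of $p$ is the $k$-th element of that array, $\mathrm{NN}_k(p)$. Figure 10 shows an example in two dimensions.

The $k$-distance neighborhood represents the set of all neighbors of $p$, the center of the big circle on the left of Figure 10, that are at most as far from $p$ as $\mathrm{NN}_k(p)$; see (4).

$$N_k(p) = \{\, o \in C \setminus \{p\} : d(p, o) \le d(p, \mathrm{NN}_k(p)) \,\} \tag{4}$$

The reachability distance of $p$ and $o$ is the maximum between their distance and the $k$-distance of $o$, $d(o, \mathrm{NN}_k(o))$. See (5).

$$rd_k(p, o) = \max\{\, d(p, o),\; d(o, \mathrm{NN}_k(o)) \,\} \tag{5}$$

Local reachability density is the inverse of the average reachability distance. (6) shows the local reachability density of point $p$ within its $k$-distance neighborhood,

$$lrd_k(p) = \left( \frac{1}{|N_k(p)|} \sum_{o \in N_k(p)} rd_k(p, o) \right)^{-1} \tag{6}$$

where $|N_k(p)|$ is not necessarily $k$, since there is the possibility that several neighbors are at the same distance.

The local outlier factor (LOF) of a point $p$ is the average of the ratio of $lrd_k(o)$ and $lrd_k(p)$ for all $k$-distance neighbours $o$ of $p$. See (7).

$$LOF_k(p) = \frac{1}{|N_k(p)|} \sum_{o \in N_k(p)} \frac{lrd_k(o)}{lrd_k(p)} \tag{7}$$

We compute the LOF of every point $p \in C$ and discriminate outliers using (8).

$$LOF_k(p) \begin{cases} \approx 1 & \text{similar density to its neighbors} \\ < 1 & \text{higher density than its neighbors} \\ > 1 & \text{lower density than its neighbors (local outlier)} \end{cases} \tag{8}$$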

The first two cases indicate that the density around $p$ is similar to or higher than that of its neighbors; therefore, the data point is labeled as normal. The last case indicates that the density around $p$ is lower than that of its neighbors; therefore, the data point is labeled as a local outlier. The points that the LOF method labels as local outliers are then corrected using data imputation.
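The LOF computation described in this section can be sketched in plain Python. This is a small, quadratic-time illustration rather than the pipeline's code: the function names and the `threshold` parameter are hypothetical, duplicate points (zero reachability distance) are not handled, and a production system would more likely rely on an existing library implementation.

```python
import math


def lof_scores(points, k):
    """Local outlier factor of every point (Euclidean distance, k neighbors)."""
    n = len(points)
    if n <= k:
        raise ValueError("need more than k points")
    dist = [[math.dist(p, q) for q in points] for p in points]

    k_dist, neigh = [], []
    for i in range(n):
        others = sorted((dist[i][j], j) for j in range(n) if j != i)
        kd = others[k - 1][0]                    # distance to the k-th neighbor
        k_dist.append(kd)
        # Ties at the k-distance can make the neighborhood larger than k.
        neigh.append([j for d, j in others if d <= kd])

    def reach(i, j):
        """Reachability distance of point i with respect to neighbor j."""
        return max(dist[i][j], k_dist[j])

    # Local reachability density: inverse of the mean reachability distance.
    lrd = [len(neigh[i]) / sum(reach(i, j) for j in neigh[i]) for i in range(n)]

    # LOF: mean ratio between the neighbors' density and the point's own.
    return [sum(lrd[j] / lrd[i] for j in neigh[i]) / len(neigh[i])
            for i in range(n)]


def local_outliers(points, k, threshold=1.0):
    """Indices whose LOF exceeds `threshold` (1.0 follows the rule above;
    a slightly larger value is a common practical choice)."""
    return [i for i, s in enumerate(lof_scores(points, k)) if s > threshold]


# Four points on a unit square plus one isolated point.
cloud = [(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0), (10.0, 10.0)]
scores = lof_scores(cloud, k=2)
```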

5. Forecasting

This section describes the architecture of the forecasting models used in predicting the PQ delivered by the distribution networks of CFE-DCO. Many tests were performed using different types of models from the machine-learning area. The goal was to determine the best-suited forecasting model to solve the problem. The tested models included ARIMA, regression forests, nearest neighbors, and recurrent and feed-forward artificial neural networks. The kind of model that performed best was the multi-layer perceptron (MLP); i.e., it produced the smallest error with respect to the test set. To use an MLP to perform forecasting, we need to map the forecasting problem to a regression problem; phase space reconstruction achieved this mapping. After that, we used an MLP to solve the resulting regression problem.

5.1. Phase Space Reconstruction

A time series is an abstraction of the data required by the forecasting model. A time series is a sequence of scalar values of a specific variable sampled at discrete, equidistant times. Let $S = \{s_1, s_2, \ldots, s_t, \ldots, s_n\}$ be a time series, where $s_t$ is the value of variable $s$ at time $t$. The goal is to obtain the forecast of $\Delta n$ consecutive values, that is, $\{s_{n+1}, \ldots, s_{n+\Delta n}\}$, by employing any observation available in $S$. By using a time delay of $\tau$ and an embedding dimension $m$, it is possible to build delay vectors of the form $\mathbf{s}_t = [s_{t-(m-1)\tau}, s_{t-(m-2)\tau}, \ldots, s_{t-\tau}, s_t]$, where $m, \tau \in \mathbb{Z}^{+}$. These vectors represent a reconstruction of the $m$-dimensional phase space that defines the underlying system dynamics that produced the time series [23]. However, the forecasting results exhibit low accuracy using vectors composed only of samples from the variable of interest (i.e., the time series). For this reason, vector $\mathbf{s}_t$ includes the day of the week and the time of day (in minutes from the start of the day) as control variables. Thus, we append the day and time when the sample was taken to each vector $\mathbf{s}_t$, for every $t$. (9) shows the resulting vector,

$$\mathbf{s}_t = [s_{t-(m-1)\tau}, \ldots, s_{t-\tau}, s_t, \mathrm{day}(t), \mathrm{minute}(t)] \tag{9}$$

where $\mathrm{day}(t)$ returns the day of the week (as a real number from 0.0 to 0.8571 starting on Monday), and $\mathrm{minute}(t)$ returns the minute of the day (represented as a real number from 0.0 to 0.9993). Those quantities correspond to the day and time when the delay vector ends (i.e., when the forecast is to be produced).
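The mapping from a time series to a regression dataset can be sketched as below. The function name is hypothetical, and two choices are assumptions: the calendar features are scaled as day/7 and minute/1440 (which reproduces the 0.8571 and 0.9993 upper bounds quoted above), and the regression target is the single next sample.

```python
from datetime import datetime, timedelta


def delay_vectors(values, timestamps, m, tau=1):
    """Build (X, y) pairs from a series using delay embedding.

    Each row of X holds m lagged values ending at time t, followed by the
    scaled day of the week and minute of the day of that last sample.
    The target y is the value that follows the delay vector.
    """
    X, y = [], []
    first = (m - 1) * tau                      # earliest t with a full vector
    for t in range(first, len(values) - 1):
        lags = [values[t - (m - 1 - i) * tau] for i in range(m)]
        ts = timestamps[t]
        day = ts.weekday() / 7.0               # Monday -> 0.0, Sunday -> 0.8571
        minute = (ts.hour * 60 + ts.minute) / 1440.0
        X.append(lags + [day, minute])
        y.append(values[t + 1])
    return X, y


start = datetime(2021, 1, 4)                   # a Monday
stamps = [start + timedelta(hours=h) for h in range(10)]
series = [float(h) for h in range(10)]
X, y = delay_vectors(series, stamps, m=3, tau=1)
```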

5.2. Forecasting Model

We model the forecasting problem based on multi-layer perceptrons (MLP). An MLP is a feed-forward artificial neural network (ANN), fully connected between layers. A learning algorithm adjusts the weights and biases of the network’s neurons using a loss function and a training algorithm. The learning algorithm achieves these adjustments by exposing the network to every sample feature vector and its target. ANNs are computing systems vaguely inspired by the biological neural networks that constitute animal brains [24]. Figure 11 shows the basic structure of an artificial neuron.

A neuron $n$ can receive multiple inputs $x_1, x_2, \ldots, x_m$, which are weighted individually by $w_1, w_2, \ldots, w_m$ to make an intermediate output $z$. That is,

$$z = \sum_{i=1}^{m} w_i x_i + b$$

where $b$ represents a bias, which acts as a weight. This bias acts as an independent term in an inherently non-linear model. The output $z$ is passed to an activation function $\varphi$, which yields the output $y$ of the neuron. The neuron computes its output as

$$y = \varphi\left( \sum_{i=1}^{m} w_i x_i + b \right)$$

By piling many neurons operating in parallel, it is possible to form a layer. ANNs usually consist of one input layer of length $m$, one output layer (with one or more outputs), and several hidden layers. Those hidden layers can also have a varying number of neurons [25].
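The neuron and layer computations just described reduce to a few lines of Python. The sketch below is illustrative only: the function names are hypothetical, and the hyperbolic tangent is used as a placeholder activation, since the activation functions MIRD actually uses are listed in Table 3.

```python
import math


def neuron(inputs, weights, bias, activation=math.tanh):
    """Weighted sum of the inputs plus a bias, passed through an activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)


def layer(inputs, weight_rows, biases, activation=math.tanh):
    """A fully connected layer: one neuron per row of weights."""
    return [neuron(inputs, w, b, activation)
            for w, b in zip(weight_rows, biases)]


def mlp(inputs, layers):
    """Feed the inputs forward through a list of (weight_rows, biases) layers.

    The last layer is linear, as is usual for regression outputs.
    """
    out = inputs
    for weight_rows, biases in layers[:-1]:
        out = layer(out, weight_rows, biases)
    weight_rows, biases = layers[-1]
    return layer(out, weight_rows, biases, activation=lambda z: z)
```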

Figure 12 shows a typical ANN topology.

Generally speaking, an ANN is a universal approximator. Theorem 1 states the universal approximation theorem [26].

Theorem 1. Let $\varphi(\cdot)$ be a non-constant, bounded, and monotonically increasing continuous function. Let $I_{m_0}$ denote the $m_0$-dimensional unit hypercube $[0, 1]^{m_0}$. The space of continuous functions on $I_{m_0}$ is denoted by $C(I_{m_0})$. Then, given any function $f \in C(I_{m_0})$ and $\varepsilon > 0$, there exists an integer $m_1$ and sets of real constants $\alpha_i$, $b_i$, and $w_{ij}$, where $i = 1, \ldots, m_1$ and $j = 1, \ldots, m_0$, such that we may define

$$F(x_1, \ldots, x_{m_0}) = \sum_{i=1}^{m_1} \alpha_i \, \varphi\left( \sum_{j=1}^{m_0} w_{ij} x_j + b_i \right)$$

as an approximate realization of the function $f(\cdot)$; that is,

$$\left| F(x_1, \ldots, x_{m_0}) - f(x_1, \ldots, x_{m_0}) \right| < \varepsilon$$

for all $x_1, x_2, \ldots, x_{m_0}$ that lie in the input space.

In time-series forecasting, an ANN can identify patterns and trends in the data and adjust the weights of each neuron to match the desired output. ANNs are successful models used in time-series forecasting; research work exhibits their forecasting capabilities. Those forecasting models include applications on forecasting water demand [27], foreign exchange markets [28], market volatility [29], and more. For this work, we designed a simple MLP; Table 3 describes the components of the MLP architecture.

To produce the forecasting models, we took the last 5000 values of every time series, representing a little more than six months of data. This value was set since, after preprocessing all the time series, we found that many types of equipment had regime changes around that time. To minimize the modeling time, we implemented a simple parallel computing technique. The models were trained on a cluster server with 40 computing threads. Each thread was in charge of producing, for a given PQM, the models of the individual variables. For each variable of every PQM to forecast, we produced ten independent MLP models and kept the model that obtained the lowest forecasting error. In total, the training process produced nearly 4000 univariate forecasting models spanning all individual variables in the problem context. The following section shows the results obtained in modeling those variables and PQ indices.
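The training scheme in the paragraph above (one worker per PQM, ten independent models per variable, keep the best) can be outlined as follows. This is a structural sketch only: `train_fn` and `error_fn` are hypothetical callables standing in for the MLP training and forecasting-error routines, which are not reproduced here, and a thread pool is used for brevity.

```python
from concurrent.futures import ThreadPoolExecutor


def train_pqm(pqm_id, variables, train_fn, error_fn, restarts=10):
    """Train `restarts` models per variable of one PQM and keep the best.

    `variables` maps a variable name to its time series. `train_fn(series,
    seed)` returns a model; `error_fn(model, series)` returns its error.
    """
    best = {}
    for name, series in variables.items():
        candidates = [train_fn(series, seed) for seed in range(restarts)]
        best[name] = min(candidates, key=lambda mdl: error_fn(mdl, series))
    return pqm_id, best


def train_grid(data, train_fn, error_fn, workers=40, restarts=10):
    """Distribute the PQMs over a pool of workers, one PQM per task."""
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(train_pqm, pqm_id, variables,
                               train_fn, error_fn, restarts)
                   for pqm_id, variables in data.items()]
        return dict(f.result() for f in futures)
```

For CPU-bound training written in pure Python, a process pool would be the more natural choice than threads; the thread pool here only keeps the sketch short.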

7. Conclusions

This section discusses the general framework of applicability of the presented solution to forecasting PQ indices, emphasizing MIRD’s salient features. In a second part, this section proposes the directions for future work.

7.1. Discussion and General Conclusions

PQ indices forecasting has gained attention due to increasing PQ deterioration factors in electric power systems, such as distributed, renewable-energy-based generation units or non-linear electronic devices. Most of the recent research on PQ forecasting uses relatively small datasets. The data may come from domestic or semi-industrial, off-grid electric-power configurations or from only a few PQMs in power distribution grids; some of them even come from simulations. The state of the art disregards the big-data nature of PQ forecasting for city- or regional-size grids, focusing mainly on harmonic distortion (total harmonic distortion of voltage, THDV) at a fine-grained level of detail.

This paper presents MIRD, a large-scale ML system for short-term PQ indices forecasting implemented at a Mexican, region-sized power distribution grid. MIRD is an early-warning system that produces one-week-ahead predictions at hourly resolution for the three-phase current unbalance and the power factor of nearly 700 distribution circuits. It also forecasts the operation voltage of nearly 150 quality-control nodes. This problem accounts for more than 4000 univariate forecast models executed weekly over massive amounts of data.

MIRD operates on massive amounts of real data (sensed by IoT devices in the network), produces a large number of trained ANN-based forecasting models, and monitors the distribution network autonomously. Such a system has no precedent in the Latin-American electrical industry.

In developing the system presented in this article, we tested several forecasting models and selected the type of model that best served the forecasting goals and could be implemented on premises with the available resources. Given those conditions, we could not afford more sophisticated models such as LSTM networks, which took much longer to train without improving accuracy by much. Those facts led to the decision to use feedforward multi-layer perceptron networks as a general model that offers acceptable forecasting accuracy and is not as computationally expensive as other models.

Experimental results show that, although non-linearity in the underlying system that produces the data makes predicting reactive power (kVAR) a difficult task, MIRD’s overall predictive performance is encouraging. A total of 63% of the forecasting models produce SMAPE values under 8.33%, while 82% of the forecasting models produce SMAPE values under 16.66%. Given the uniqueness of the system we present here, we cannot compare its results against other systems; the authors did not find any such systems reported in the literature.
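To make the reported percentages concrete, the sketch below computes SMAPE for one model and the share of models whose SMAPE falls under a threshold. It assumes the common SMAPE variant whose denominator is the mean of the absolute actual and forecast values. The function names and the zero-denominator convention are assumptions made for illustration, and the sample values are not taken from the MIRD results.

```python
def smape(actual, forecast):
    """Symmetric mean absolute percentage error, in percent (0 to 200).

    Assumed variant: |F - A| / ((|A| + |F|) / 2), averaged over all points.
    A point where both values are zero contributes zero error.
    """
    terms = []
    for a, f in zip(actual, forecast):
        denom = (abs(a) + abs(f)) / 2.0
        terms.append(0.0 if denom == 0 else abs(f - a) / denom)
    return 100.0 * sum(terms) / len(terms)


def share_under(scores, threshold):
    """Percentage of models whose SMAPE is strictly below the threshold."""
    return 100.0 * sum(s < threshold for s in scores) / len(scores)


# Illustrative per-model SMAPE scores (made-up values).
scores = [5.0, 10.0, 20.0, 7.0]
print(share_under(scores, 8.33))   # share of models under the first threshold
print(share_under(scores, 16.66))  # share of models under the second threshold
```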

Most governments have issued strict PQ standards that power distribution grids must follow, so PQ forecasting becomes crucial to early-warning systems for PQ deterioration. However, to properly approach this task, it is essential to bear in mind the large-scale nature of the data when it comes to power grids that serve millions of customers. The practical value of MIRD is that, by reporting the likely behavior of each circuit and node one week in advance, it allows the distribution engineers to take corrective measures before low-quality energy reaches the users. Delivering high-quality energy allows CFE to reach world-level standards. In addition, by maintaining the energy quality within the required intervals, CFE avoids expensive fines from the Mexican regulatory organization (CRE).

7.2. Future Work

Future work on MIRD includes the following research lines:

NoSQL database implementation. The growing volume of the Parquet-based persistence layer of MIRD could eventually decrease the complete pipeline’s performance. We must perform the required research to design a new persistence layer; proven strategies in this field point to replacing our Parquet-based layer with a faster and more efficient NoSQL database [30]. For this task, we must evaluate different NoSQL databases (columnar, key-value, document-based).

Parallel implementation. The data-preprocessing and the forecasting components of MIRD use scikit-learn, which is designed to run on single-device hardware configurations. Given the massive amount of data managed by MIRD, it is advisable to distribute the scikit-learn code or migrate those components to a computing framework that runs in distributed mode. Although many programming resources can distribute scikit-learn jobs, Apache Spark, which is already the basis for the data-ingestion and the power-quality-evaluation components, is a convenient option upon which to base this upgrade.

Forecast-model enhancement. MIRD’s forecasting models rely on MLP neural networks. Recently, we started experimenting with deep-learning models for MIRD based on long short-term memory (LSTM) networks, encoder–decoder networks, and transformers, which have consistently been reported to achieve better predictive performance than MLPs [31, 32, 33]. Our results suggest adding forecasting models based on such neural-network architectures to MIRD. However, more powerful neural networks also require more computing capacity. Therefore, this research line reinforces the need for a parallel implementation.

Migration to an ML-pipeline development framework. We started the MIRD project in mid-2017 with early and preliminary prototypes and forecasting models. At that time, the availability of a fully integrated, end-to-end, production-ready framework for ML-pipeline development was still an idea. Today, many widely tested, open-source frameworks for ML-pipeline authoring are available [34, 35, 36]. Given the solid technical support and implementation expertise associated with these frameworks, it is worth analyzing a migration of the complete functionality of MIRD to one of them.