Fault Diagnosis Method for Aircraft EHA Based on FCNN and MSPSO Hyperparameter Optimization

Featured Application

Abstract

1. Introduction

2. Fusion Convolutional Neural Network (FCNN)

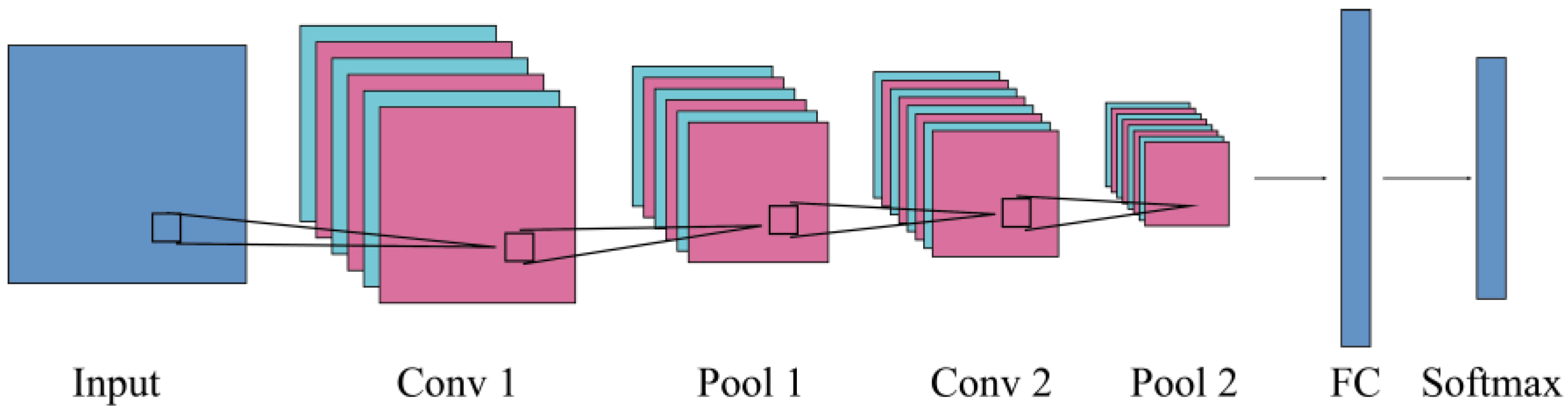

2.1. Two-Dimensional Convolutional Neural Network (2DCNN)

2.1.1. Convolutional Layer

2.1.2. Pooling Layer

2.1.3. Fully Connected Layer

2.2. One-Dimensional Convolutional Neural Network (1DCNN)

2.3. Multi-Feature Fusion Convolutional Neural Network (FCNN)

3. Multi-Strategy Hybrid Particle Swarm Optimization Algorithm (MSPSO)

3.1. Standard Particle Swarm Optimization Algorithm

3.2. Multi-Strategy Hybrid Particle Swarm Optimization Algorithm

3.2.1. Initialization Strategy Based on Homogenization and Randomization

3.2.2. Adaptive Inertia Weights and Learning Factor Strategies

3.2.3. Hybrid Variation Strategy

3.2.4. Improved Handling Method of Boundary-Crossing Particles

3.2.5. Algorithm Flow

Algorithm 1: Multi-Strategy Hybrid Particle Swarm Optimization Algorithm

| Step | Operation |
|---|---|
| 1 | Generate n particles, initialize the particle positions using the initialization strategy in Section 3.2.1, and calculate the values of pbest and gbest in the initial population. |
| 2 | while the stopping criterion is not met do |
| 3 | Adjust the inertia weight and learning factors according to Equations (12) and (13). |
| 4 | Update particle positions and velocities according to Equations (7) and (8). |
| 5 | Calculate the particle fitness values and update pbest and gbest according to Equations (9) and (10). |
| 6 | if any particle is out of bounds then |
| 7 | Use the strategy in Section 3.2.4 to bring the out-of-bounds particles back within the boundary. |
| 8 | end if |
| 9 | if the hybrid variation condition is satisfied according to Equations (14) and (15) then |
| 10 | Generate a new population according to the hybrid variation strategy of Equation (17). |
| 11 | end if |
| 12 | end while |
| 13 | Output the current optimal particle gbest. |
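
The loop structure of Algorithm 1 can be illustrated with a plain global-best PSO. The sketch below is only a stand-in under stated assumptions: it uses uniform random initialization, a linearly decreasing inertia weight, fixed learning factors, and simple clamping at the boundary, none of which are the strategies of Sections 3.2.1 to 3.2.4, and it omits the hybrid variation step. The function name `pso` and all parameter values are hypothetical.

```python
import random

def pso(fitness, bounds, n_particles=20, n_iter=100, seed=0):
    """Minimise `fitness` over the box `bounds` with a plain global-best PSO."""
    rng = random.Random(seed)
    dim = len(bounds)
    # Uniform random initialisation (stand-in for the Section 3.2.1 strategy).
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_val = [fitness(p) for p in pos]
    g = min(range(n_particles), key=pbest_val.__getitem__)
    gbest, gbest_val = pbest[g][:], pbest_val[g]

    for t in range(n_iter):
        # Linearly decreasing inertia weight (assumed schedule, not Eq. (12)).
        w = 0.9 - 0.5 * t / max(n_iter - 1, 1)
        c1 = c2 = 1.5  # fixed learning factors (assumed values)
        for i in range(n_particles):
            for d, (lo, hi) in enumerate(bounds):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                # Clamp to the box (stand-in for the Section 3.2.4 handling).
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)
            val = fitness(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val

# Toy objective: the sphere function on [-5, 5]^3.
best, best_val = pso(lambda x: sum(v * v for v in x), [(-5.0, 5.0)] * 3)
```

The sketch minimises its objective; to maximise a validation accuracy, as a hyperparameter search would, one could pass the negated accuracy as `fitness`.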

4. Fault Diagnosis Algorithm Based on MSPSO and FCNN

4.1. Structural Analysis of FCNN

4.2. Optimization of FCNN Structure Based on MSPSO

4.3. The Process of MSPSO-FCNN Fault Diagnosis Method

5. Typical Fault Simulation and Fault Data Acquisition of EHA

5.1. Overview of the EHA Principle

5.2. Typical Failure Simulation of EHA

- (1) Internal leakage of the hydraulic pump: The clearance between the plunger and the cylinder was set to 0.1 mm, 0.2 mm, 0.3 mm, and 0.4 mm to simulate different degrees of internal leakage of the hydraulic pump.

- (2) Oil mingled with air: The air content of the hydraulic oil was set to 2%, 3%, 4%, and 5% to simulate different levels of air content.

- (3) Internal leakage of the actuator cylinder: The clearance between the piston and the barrel was set to 0.1 mm, 0.2 mm, 0.3 mm, and 0.4 mm to simulate different degrees of internal leakage.

- (4) Increased friction between the actuator cylinder piston and the cylinder body: The Coulomb friction between the piston and the barrel was set to 1.1, 1.2, 1.3, and 1.4 times its value under normal conditions to simulate different degrees of friction increase.

- (5) Decreased sensor gain: The displacement feedback loop gain was set to 0.9, 0.8, 0.7, and 0.6 to simulate different levels of gain reduction.

- (6) Increased motor winding resistance: The winding resistance was set to 3.6 Ω, 4.6 Ω, 5.6 Ω, and 6.6 Ω to simulate different degrees of resistance increase.
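
The six fault types and their four severity levels listed above can be collected in a small configuration table, which makes it easy to enumerate every simulated fault condition. The dictionary keys and the helper `enumerate_cases` are hypothetical names chosen for illustration; only the numeric levels come from the list above.

```python
# Severity levels per fault type, as listed above (key names are illustrative).
FAULT_LEVELS = {
    "pump_internal_leakage_clearance_mm": [0.1, 0.2, 0.3, 0.4],
    "oil_air_content_percent": [2, 3, 4, 5],
    "cylinder_internal_leakage_clearance_mm": [0.1, 0.2, 0.3, 0.4],
    "coulomb_friction_multiplier": [1.1, 1.2, 1.3, 1.4],
    "displacement_feedback_gain": [0.9, 0.8, 0.7, 0.6],
    "motor_winding_resistance_ohm": [3.6, 4.6, 5.6, 6.6],
}

def enumerate_cases(levels):
    """Return one (fault_name, level) pair per simulated fault condition."""
    return [(name, value) for name, values in levels.items() for value in values]

cases = enumerate_cases(FAULT_LEVELS)
print(len(cases))  # 6 fault types x 4 levels
```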

5.3. Fault Sample Data Collection

5.4. GADF-Based Image Processing of Fault Sample Sets
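
A Gramian angular difference field (GADF) encodes a one-dimensional series as an image. The sketch below assumes the commonly used definition: rescale the series to [-1, 1], take the angle φ = arccos(x), and set each pixel to sin(φ_i − φ_j). It also assumes that piecewise aggregate approximation (PAA) shortens a 576-point sample to 64 points so that the image is 64 × 64; that reduction step and the function names `paa` and `gadf` are illustrative assumptions, not taken from this paper.

```python
import math

def paa(series, n_segments):
    """Piecewise aggregate approximation: mean of equal-length windows."""
    window = len(series) // n_segments
    return [sum(series[k * window:(k + 1) * window]) / window
            for k in range(n_segments)]

def gadf(series):
    """Return the GADF matrix with entries sin(phi_i - phi_j)."""
    lo, hi = min(series), max(series)
    # Rescale to [-1, 1]; a constant series maps to all zeros.
    scaled = [0.0 if hi == lo else (2 * x - hi - lo) / (hi - lo) for x in series]
    # Guard against rounding slightly outside [-1, 1] before arccos.
    phi = [math.acos(max(-1.0, min(1.0, s))) for s in scaled]
    return [[math.sin(a - b) for b in phi] for a in phi]

# Toy signal with 576 points, reduced to 64 points and encoded as 64 x 64.
signal = [math.sin(2 * math.pi * t / 576) for t in range(576)]
image = gadf(paa(signal, 64))
```

By construction the matrix is antisymmetric with a zero diagonal, which is a quick sanity check for any implementation.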

6. Typical Fault Diagnosis of EHA

- (1) Accuracy

- (2) Precision and Recall

- (3) F1 score
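
Accuracy, precision, and recall, together with the F1 score reported in the result tables, can all be derived from a multi-class confusion matrix. The sketch below assumes macro-averaging over classes and an F1 score computed from the averaged precision and recall; the averaging scheme is an assumption, and the function name `classification_metrics` is hypothetical.

```python
def classification_metrics(confusion):
    """confusion[i][j] = number of samples of true class i predicted as class j."""
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    accuracy = sum(confusion[k][k] for k in range(n)) / total
    precisions, recalls = [], []
    for k in range(n):
        tp = confusion[k][k]
        predicted_k = sum(confusion[i][k] for i in range(n))  # column sum
        actual_k = sum(confusion[k])                          # row sum
        precisions.append(tp / predicted_k if predicted_k else 0.0)
        recalls.append(tp / actual_k if actual_k else 0.0)
    precision = sum(precisions) / n  # macro-averaged (assumed)
    recall = sum(recalls) / n
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return accuracy, precision, recall, f1

# Toy two-class example (made-up counts, not data from the paper).
acc, prec, rec, f1 = classification_metrics([[8, 2], [1, 9]])
```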

6.1. Results of MSPSO Optimization of FCNN

6.2. Results and Discussion

6.3. Comparison

7. Conclusions

- (1) Compared with the 1DCNN, the 2DCNN with GADF maps as input performs better in fault diagnosis of the aircraft EHA. With MSPSO optimization, the accuracy of the 2DCNN exceeds that of the 1DCNN by up to 10.2% when diagnosing typical aircraft EHA faults.

- (2) The FCNN combines the advantages of the 1DCNN and the 2DCNN: it extracts richer fault features from GADF images while retaining the original features of the one-dimensional fault data. With MSPSO optimization, the accuracy of the FCNN is 96.86%, which is 16.5% and 5.7% higher than that of the 1DCNN and the 2DCNN, respectively.

- (3) The raw aircraft EHA data are highly nonlinear. Encoding them into images with the GADF matrix weakens the nonlinearity and reduces the noise, which makes the 2DCNN and the FCNN more robust.

- (4) When the standard PSO is used to optimize the CNN structure, the algorithm quickly falls into a local optimum. In comparison, the MSPSO proposed in this paper integrates multiple strategies, can escape from local optima, and has better ergodicity. The accuracy of the 1DCNN, 2DCNN, and FCNN under MSPSO optimization improved by 4.6%, 8.3%, and 8.6%, respectively, over PSO.

- (5) In a comprehensive comparison with the LeNet-5, GoogleNet, AlexNet, and GRU models, the proposed MSPSO-FCNN model achieves the highest test accuracy (96.86%) with a shorter training time than GoogleNet and AlexNet, which makes it well suited to aircraft EHA fault diagnosis.
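
The percentages quoted in the conclusions appear to be relative improvements, not differences in percentage points. A quick check against the accuracies in the results table is sketched below; the dictionary layout and the helper `relative_gain` are illustrative, while the accuracy values are copied from that table.

```python
# Test accuracies (%) from the PSO/MSPSO comparison table.
ACC = {
    ("PSO", "1DCNN"): 79.51, ("PSO", "2DCNN"): 84.60, ("PSO", "FCNN"): 89.20,
    ("MSPSO", "1DCNN"): 83.14, ("MSPSO", "2DCNN"): 91.64, ("MSPSO", "FCNN"): 96.86,
}

def relative_gain(new, old):
    """Relative improvement of `new` over `old`, in percent, to one decimal."""
    return round(100 * (new - old) / old, 1)

fcnn_vs_1d = relative_gain(ACC["MSPSO", "FCNN"], ACC["MSPSO", "1DCNN"])
fcnn_vs_2d = relative_gain(ACC["MSPSO", "FCNN"], ACC["MSPSO", "2DCNN"])
cnn2d_vs_1d = relative_gain(ACC["MSPSO", "2DCNN"], ACC["MSPSO", "1DCNN"])
mspso_vs_pso = [relative_gain(ACC["MSPSO", m], ACC["PSO", m])
                for m in ("1DCNN", "2DCNN", "FCNN")]
print(fcnn_vs_1d, fcnn_vs_2d, cnn2d_vs_1d, mspso_vs_pso)
```

Under this reading, the FCNN is 16.5% above the 1DCNN and 5.7% above the 2DCNN, the 2DCNN is 10.2% above the 1DCNN, and the MSPSO gains over PSO are 4.6%, 8.3%, and 8.6%.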

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Particle | Hyperparameters | Particle | Hyperparameters |
|---|---|---|---|
| Optimizer | | | |
| Learning rate | | | |
| Dropout | | | |
| Batch size | | | |

| Num | Displacement of Actuator | Velocity of Actuator | Pressure Difference of Pump | Flow of Plunger Pump | Rotation Speed of Motor | Outlet Pressure of Accumulator |
|---|---|---|---|---|---|---|
| 1 | 0.2008 | −0.0008 | −2.6600 | 20.3202 | 2423.4024 | −5.1040 |
| 2 | 0.2008 | −0.0008 | −2.6600 | 20.31987 | 2423.3584 | −5.1040 |
| … | … | … | … | … | … | … |
| 206 | −0.2139 | 2.6553 | −0.1578 | 81.1545 | 9727.8676 | −2.2610 |
| 207 | −0.1836 | 3.3891 | −0.4929 | 71.17593 | 8900.0614 | −2.8220 |
| … | … | … | … | … | … | … |
| 576 | −0.1506 | 0.0006 | 1.9900 | −17.57166 | −2010.5476 | −3.2170 |

| Particle | Searching Range | Gbest | Particle | Searching Range | Gbest |
|---|---|---|---|---|---|
| | 4–64 (step: 2) | 22 | | Sigmoid, Tanh, Relu | Relu |
| | 4–64 (step: 2) | 28 | | Sigmoid, Tanh, Relu | Sigmoid |
| | 4–64 (step: 2) | 40 | | Sigmoid, Tanh, Relu | Sigmoid |
| | 4–64 (step: 2) | 18 | | 64–1024 (step: 2) | 482 |
| | 4–64 (step: 2) | 26 | | Sigmoid, Tanh, Relu | Sigmoid |
| | 2–8 (step: 1) | 3 | | Adam, Adagrad, SGD | Adam |
| | 2–8 (step: 1) | 3 | | 0.05–1 (step: 0.02) | 0.36 |
| | 2–8 (step: 1) | 2 | | 0.2–0.8 (step: 0.1) | 0.5 |
| | 4–20 (step: 1) | 11 | | 25, 40, 50, 100, 150 | 50 |
| | 4–20 (step: 1) | 7 | | | |
| Fitness: 0.9758 | | | | | |
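
The search ranges in the table above mix stepped numeric grids (for example 4–64 with step 2) and categorical choices (for example Sigmoid, Tanh, Relu), while a PSO particle moves in a continuous space. One possible way to bridge the two is to keep each particle coordinate in [0, 1] and decode it onto the grid, as sketched below. This encoding and the helper names `decode_stepped` and `decode_choice` are assumptions for illustration, not the mapping used in the paper.

```python
def decode_stepped(u, low, high, step):
    """Map u in [0, 1] onto the grid low, low + step, ..., high."""
    n_levels = int(round((high - low) / step)) + 1
    index = min(int(u * n_levels), n_levels - 1)
    # Rounding removes floating-point noise for fractional steps.
    return round(low + index * step, 10)

def decode_choice(u, options):
    """Map u in [0, 1] onto one entry of a categorical option list."""
    index = min(int(u * len(options)), len(options) - 1)
    return options[index]

# Example decodings using ranges from the table (the u values are arbitrary).
n_filters = decode_stepped(0.3, 4, 64, 2)
dropout = decode_stepped(0.5, 0.2, 0.8, 0.1)
activation = decode_choice(0.9, ["Sigmoid", "Tanh", "Relu"])
batch_size = decode_choice(0.5, [25, 40, 50, 100, 150])
```

Equal-width bins give every grid value the same share of the continuous interval, so no hyperparameter value is favoured by the encoding itself.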

| Layers | Filter Size | Filter Number | Feature Size | Activation Function |
|---|---|---|---|---|
| Input layer | - | - | 576 × 1 × 6 | - |
| Convolutional layer 1 | 11 × 1 | 18 | 566 × 1 × 6 | Sigmoid |
| Pooling layer 1 | 2 × 1 | 18 | 283 × 1 | - |
| Convolutional layer 2 | 7 × 1 | 26 | 277 × 1 | Sigmoid |
| Pooling layer 2 | 2 × 1 | 26 | 139 × 1 | - |
| Fully connected layer | 1 × 1 | 3614 | 3614 | Sigmoid |

| Layers | Filter Size | Filter Number | Feature Size | Activation Function |
|---|---|---|---|---|
| Input layer | - | - | 64 × 64 × 6 | - |
| Convolutional layer 1 | 3 × 3 | 22 | 62 × 62 × 6 | Relu |
| Pooling layer 1 | 2 × 2 | 22 | 31 × 31 | - |
| Convolutional layer 2 | 3 × 3 | 28 | 29 × 29 | Relu |
| Pooling layer 2 | 2 × 2 | 28 | 15 × 15 | - |
| Convolutional layer 3 | 2 × 2 | 40 | 14 × 14 | Relu |
| Pooling layer 3 | 2 × 2 | 40 | 7 × 7 | - |
| Fully connected layer | 1 × 1 | 3614 | 1960 | Sigmoid |
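
The feature sizes in the two layer tables above can be reproduced with simple arithmetic. The sketch below assumes unpadded, stride-1 convolutions (output = input − kernel + 1) and pooling with window 2 that rounds up, which is what the steps 277 → 139 and 29 → 15 suggest; these conventions are inferred from the tables, and the function names are hypothetical.

```python
import math

def conv_out(size, kernel):
    """Output length of an unpadded, stride-1 convolution."""
    return size - kernel + 1

def pool_out(size, window=2):
    """Output length of pooling with the given window, rounding up."""
    return math.ceil(size / window)

def flatten_size(input_size, kernels, n_filters_last, dims):
    """Flattened feature count after alternating conv and pooling layers."""
    size = input_size
    for kernel in kernels:
        size = pool_out(conv_out(size, kernel))
    return size ** dims * n_filters_last

# 1D branch: 576 -> 566 -> 283 -> 277 -> 139, with 26 filters in the last layer.
flat_1d = flatten_size(576, [11, 7], 26, dims=1)
# 2D branch: 64 -> 62 -> 31 -> 29 -> 15 -> 14 -> 7, with 40 filters.
flat_2d = flatten_size(64, [3, 3, 2], 40, dims=2)
print(flat_1d, flat_2d)
```

The two results match the feature sizes of the fully connected layers listed in the tables (3614 and 1960).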

| Optimization Method | Basic Structure of CNN | Acc (%) | P (%) | F1 (%) |
|---|---|---|---|---|
| PSO | 1DCNN | 79.51 | 79.78 | 79.57 |
| PSO | 2DCNN | 84.60 | 83.41 | 83.93 |
| PSO | FCNN | 89.20 | 84.69 | 86.82 |
| MSPSO | 1DCNN | 83.14 | 91.64 | 87.14 |
| MSPSO | 2DCNN | 91.64 | 89.30 | 90.39 |
| MSPSO | FCNN | 96.86 | 96.95 | 96.88 |

| Method | Acc (%), Case 1 | Acc (%), Case 2 |
|---|---|---|
| MSPSO-1DCNN | 88.58 | 83.14 |
| MSPSO-2DCNN | 92.47 | 91.64 |
| MSPSO-FCNN | 97.21 | 96.86 |

| Model | Acc (%) | P (%) | F1 (%) | Training Time (s) | Test Time (s) |
|---|---|---|---|---|---|
| MSPSO-LeNet-5 | 85.71 | 84.94 | 85.32 | 288.6 | 0.78 |
| MSPSO-GoogleNet | 95.47 | 91.98 | 93.69 | 573.2 | 1.11 |
| MSPSO-AlexNet | 91.64 | 91.29 | 91.46 | 449.5 | 0.98 |
| MSPSO-GRU | 84.94 | 89.96 | 87.38 | 350.6 | 0.65 |
| MSPSO-FCNN | 96.86 | 96.95 | 96.88 | 412.5 | 0.81 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, X.; Li, Y.; Cao, Y.; Duan, S.; Wang, X.; Zhao, Z. Fault Diagnosis Method for Aircraft EHA Based on FCNN and MSPSO Hyperparameter Optimization. Appl. Sci. 2022, 12, 8562. https://doi.org/10.3390/app12178562
