The Progress on Lung Computed Tomography Imaging Signs: A Review

Abstract

1. Introduction

2. Preliminary

2.1. Lung CT Public Database

2.2. Evaluation Criteria

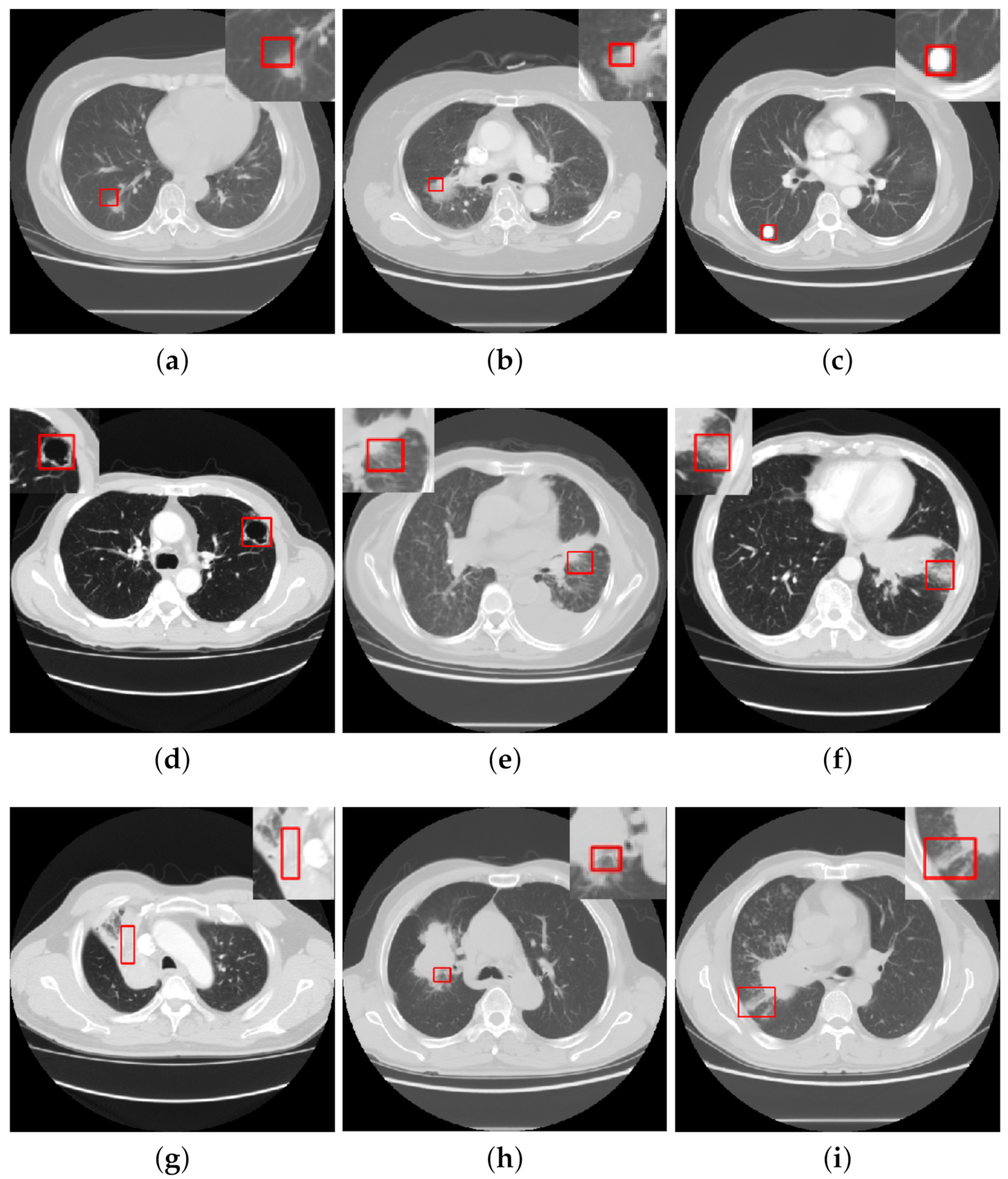

2.3. The Overview Framework for Detection of CISs

3. Detection of CISs Based on Traditional Machine Learning Methods

3.1. Candidate Lesion Segmentation

3.2. Feature Extraction

3.3. Feature Classification

4. Detection of CISs Based on Deep Learning Methods

4.1. Classification

4.1.1. Fully-Supervised Classification

4.1.2. Semi-Supervised Learning in Classification

4.2. Image Retrieval

5. Discussion

- CISs classification. Current work achieves above 90% in one or more of the three assessment metrics: sensitivity, specificity, and accuracy. In terms of datasets, earlier work on sign classification mostly depended on the publicly accessible LISS dataset, although some studies also classified CISs successfully on private data [20,24]. The classification results cannot be compared directly because the datasets and the CISs studied differ, but classification performance shows an overall upward trend, with deep learning methods outperforming traditional machine learning methods for both single-sign and multi-sign classification.

- CISs segmentation. Segmentation of lesions is essential to improve the performance of sign classification and detection. The major approaches are threshold and morphological methods. Because lung lesions contain additional blood vessels, threshold-based lesion segmentation tends to produce a large number of false positive areas and is mainly used to segment larger structures, such as the lung parenchyma. Morphological methods can effectively remove blood vessels and are mainly applied to the segmentation of small lesion areas.

- CISs detection. Despite great progress, most present approaches focus on CISs classification, and little research addresses object detection of CISs, even though lesion detection is of greater interest in medical imaging than classification. This may be for the following reasons. Object detection networks are much more complex than classification networks and require data of higher quantity and quality. In addition, there is a wide variety of signs of lung lesions, and because the differences between signs are small, it is very difficult to accurately detect and quantify them.
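
The contrast drawn in the segmentation item above, where thresholding keeps thin bright structures such as vessels while morphological filtering removes them, can be illustrated on a toy example. The following is a minimal sketch in pure Python, not the method of any cited work: the fixed threshold level, the 3x3 structuring element, and the helper names (`threshold`, `erode`, `dilate`, `opening`, `toy_slice`) are all assumptions made for illustration.

```python
def threshold(image, level):
    """Binary mask: 1 where the intensity is at or above `level`, else 0."""
    return [[1 if px >= level else 0 for px in row] for row in image]


def _neighbourhood(mask, y, x):
    """Yield the 3x3 neighbourhood of (y, x); pixels outside the image count as 0."""
    h, w = len(mask), len(mask[0])
    for dy in (-1, 0, 1):
        for dx in (-1, 0, 1):
            yy, xx = y + dy, x + dx
            yield mask[yy][xx] if 0 <= yy < h and 0 <= xx < w else 0


def erode(mask):
    """Keep a pixel only if its whole 3x3 neighbourhood is foreground."""
    return [[int(all(_neighbourhood(mask, y, x))) for x in range(len(mask[0]))]
            for y in range(len(mask))]


def dilate(mask):
    """Set a pixel if any pixel in its 3x3 neighbourhood is foreground."""
    return [[int(any(_neighbourhood(mask, y, x))) for x in range(len(mask[0]))]
            for y in range(len(mask))]


def opening(mask):
    """Morphological opening: erosion followed by dilation."""
    return dilate(erode(mask))


def toy_slice():
    """Hypothetical 7x7 'slice': a 3x3 bright blob plus a one-pixel-wide bright line."""
    img = [[0] * 7 for _ in range(7)]
    for y in range(1, 4):
        for x in range(1, 4):
            img[y][x] = 200      # compact lesion-like blob
    for x in range(7):
        img[5][x] = 180          # thin vessel-like line
    return img


if __name__ == "__main__":
    raw = threshold(toy_slice(), level=100)
    cleaned = opening(raw)
    for row in cleaned:
        print("".join("#" if v else "." for v in row))
```

In this toy case the threshold alone marks both the blob and the line as foreground, while the opening keeps the blob and discards the line because no pixel of the line has a fully bright 3x3 neighbourhood. Real CT data would need a data-driven threshold and a structuring element matched to vessel width.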

6. Conclusions

- Create sign-oriented datasets. The publicly accessible sign database LISS comprises only 271 cases, which is far from the quantity of data necessary to train deep learning networks. Therefore, building a larger-scale sign-oriented lung CT annotated dataset is vital for the development of lung CT sign recognition, especially for research on sign identification based on deep learning methods.

- Relieve the dependency on manually labeled data. Semi-supervised learning (SSL) is a potential strategy that needs to receive more attention. Since SSL has achieved enormous success in the field of natural images, applying its recent advances to medical imaging problems appears to be a viable strategy. There is a lack of literature on semi-supervised CISs detection. It would be worthwhile to transfer semi-supervised approaches from natural images to medical images and to innovate on this basis.

- Design a sign-based assisted diagnosis system for lung diseases that physicians can use to assess symptoms and diagnose lung abnormalities, taking real clinical demands as a reference to encourage in-depth research and development of lung sign detection tasks.
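
To make the semi-supervised recommendation above more concrete, the sketch below shows self-training with pseudo-labels, one common SSL family. It is an illustration under stated assumptions rather than a method from the reviewed literature: the nearest-centroid classifier, the distance-margin confidence rule, and the names `centroids`, `predict_with_margin`, and `self_train` are all hypothetical, and real CIS features would come from an image model rather than 2D points.

```python
import math


def centroids(points, labels):
    """Mean feature vector per class."""
    sums = {}
    for p, y in zip(points, labels):
        total, count = sums.get(y, ([0.0] * len(p), 0))
        sums[y] = ([a + b for a, b in zip(total, p)], count + 1)
    return {y: [v / n for v in total] for y, (total, n) in sums.items()}


def predict_with_margin(cents, p):
    """Return the nearest class and the distance gap to the runner-up class.

    Assumes at least two classes; a larger gap means a more confident prediction.
    """
    ranked = sorted((math.dist(p, c), y) for y, c in cents.items())
    (d_best, y_best), (d_second, _) = ranked[0], ranked[1]
    return y_best, d_second - d_best


def self_train(labeled, labels, unlabeled, margin=1.0, rounds=5):
    """Repeatedly add confidently predicted unlabeled points as pseudo-labels."""
    points, ys = list(labeled), list(labels)
    pool = list(unlabeled)
    for _ in range(rounds):
        cents = centroids(points, ys)
        confident, rest = [], []
        for p in pool:
            y, gap = predict_with_margin(cents, p)
            (confident if gap >= margin else rest).append((p, y))
        if not confident:
            break                          # nothing new to learn from
        for p, y in confident:
            points.append(p)
            ys.append(y)
        pool = [p for p, _ in rest]
    return centroids(points, ys), pool


if __name__ == "__main__":
    model, leftover = self_train(
        labeled=[(0.0, 0.0), (10.0, 10.0)],
        labels=[0, 1],
        unlabeled=[(1.0, 1.0), (9.0, 9.0), (5.0, 5.0)],
    )
    print(model, leftover)
```

The design choice worth noting is the confidence gate: ambiguous samples stay in the unlabeled pool instead of being forced into a class, which limits the propagation of wrong pseudo-labels.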

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Sung, H.; Ferlay, J.; Siegel, R.L.; Laversanne, M.; Soerjomataram, I.; Jemal, A.; Bray, F. Global cancer statistics 2020: GLOBOCAN estimates of incidence and mortality worldwide for 36 cancers in 185 countries. CA Cancer J. Clin. 2021, 71, 209–249.

- Kian, W.; Zemel, M.; Levitas, D.; Alguayn, W.; Remilah, A.A.; Rahman, N.A.; Peled, N. Lung cancer screening: A critical appraisal. Curr. Opin. Oncol. 2022, 34, 36–43.

- Hong, B.Y. Diagnostic value of CT for single tuberculosis cavity and canserous cavity. Chin. J. CT MRI 2021, 19, 139.

- Xia, X.; Wang, C.J.; Wang, Y. The diagnostic value of deep lobulation and short burrs in cavity lung cancer. Chin. J. Med. Clin. 2021, 21, 2469–2470.

- Yu, Y.; Zhang, Y.; Zhang, F.; Fu, Y.C.; Xu, J.R.; Wu, H.W. Value of CT signs in determining the invasiveness of lung adenocarcinoma manifesting as pGGN. Int. J. Med. Radiol. 2021, 43, 639–643.

- Armato, S.G., III; McLennan, G.; Bidaut, L.; McNitt-Gray, M.F.; Meyer, C.R.; Reeves, A.P.; Clarke, L.P. The lung image database consortium (LIDC) and image database resource initiative (IDRI): A completed reference database of lung nodules on CT scans. Med. Phys. 2011, 38, 915–931.

- Setio, A.A.A.; Traverso, A.; De Bel, T.; Berens, M.S.; Van Den Bogaard, C.; Cerello, P.; Jacobs, C. Validation, comparison, and combination of algorithms for automatic detection of pulmonary nodules in computed tomography images: The LUNA16 challenge. Med. Image Anal. 2017, 42, 1–13.

- Van Ginneken, B.; Armato, S.G., III; de Hoop, B.; van Amelsvoort-van de Vorst, S.; Duindam, T.; Niemeijer, M.; Prokop, M. Comparing and combining algorithms for computer-aided detection of pulmonary nodules in computed tomography scans: The ANODE09 study. Med. Image Anal. 2010, 14, 707–722.

- Dolejsi, M.; Kybic, J.; Polovincak, M.; Tuma, S. The lung time: Annotated lung nodule dataset and nodule detection framework. In Proceedings of the Medical Imaging 2009: Computer-Aided Diagnosis, Lake Buena Vista, FL, USA, 10–12 February 2009; pp. 538–545.

- Depeursinge, A.; Vargas, A.; Platon, A.; Geissbuhler, A.; Poletti, P.A.; Müller, H. Building a reference multimedia database for interstitial lung diseases. Comput. Med. Imaging Graph. 2012, 36, 227–238.

- Han, G.; Liu, X.; Han, F.; Santika, I.N.T.; Zhao, Y.; Zhao, X.; Zhou, C. The LISS—A public database of common imaging signs of lung diseases for computer-aided detection and diagnosis research and medical education. IEEE Trans. Biomed. Eng. 2014, 62, 648–656.

- Guo, K.; Liu, X.; Soomro, N.Q.; Liu, Y. A novel 2D ground-glass opacity detection method through local-to-global multilevel thresholding for segmentation and minimum bayes risk learning for classification. J. Med. Imaging Health Inform. 2016, 6, 1193–1201.

- Huang, S.; Liu, X.; Han, G.; Zhao, X.; Zhao, Y.; Zhou, C. 3D GGO candidate extraction in lung CT images using multilevel thresholding on supervoxels. In Proceedings of the Medical Imaging: Computer-Aided Diagnosis, Houston, TX, USA, 12–15 February 2018; p. 1057533.

- Dhara, A.K.; Mukhopadhyay, S.; Saha, P.; Garg, M.; Khandelwal, N. Differential geometry-based techniques for characterization of boundary roughness of pulmonary nodules in CT images. Int. J. Comput. Assist. Radiol. Surg. 2016, 11, 337–349.

- Cui, W.; Wang, Y. A Lung Calcification Detection Method through Improved Two-dimensional OTSU and the combined features. In Proceedings of the IEEE International Conference on Artificial Intelligence and Computer Applications, Dalian, China, 29–31 March 2019; pp. 369–373.

- Xiao, H.G.; Ran, Z.Q.; Huang, J.F.; Ren, H.J.; Liu, C.; Zhang, B.L.; Zhang, B.L.; Dang, J. Research progress in lung parenchyma segmentation based on computed tomography. J. Biomed. Eng. 2021, 38, 379–386.

- Farheen, F.; Shamil, M.S.; Ibtehaz, N.; Rahman, M.S. Revisiting segmentation of lung tumors from CT images. Comput. Biol. Med. 2022, 144, 105385.

- Zhao, X.; Zhang, P.; Song, F.; Fan, G.; Sun, Y.; Wang, Y.; Tian, Z.; Zhang, L.; Zhang, G. D2A U-Net: Automatic segmentation of COVID-19 CT slices based on dual attention and hybrid dilated convolution. Comput. Biol. Med. 2021, 135, 104526.

- Fan, D.P.; Zhou, T.; Ji, G.P.; Zhou, Y.; Chen, G.; Fu, H.; Shao, L. Inf-net: Automatic covid-19 lung infection segmentation from ct images. IEEE Trans. Med. Imaging 2020, 39, 2626–2637.

- Zheng, B.; Liu, Y.; Zhu, Y.; Yu, F.; Jiang, T.; Yang, D.; Xu, T. MSD-Net: Multi-scale discriminative network for COVID-19 lung infection segmentation on CT. IEEE Access 2020, 8, 185786–185795.

- Song, L.; Liu, X.; Ma, L.; Zhou, C.; Zhao, X.; Zhao, Y. Using HOG-LBP features and MMP learning to recognize imaging signs of lung lesions. In Proceedings of the 25th IEEE International Symposium on Computer-Based Medical Systems (CBMS), Rome, Italy, 20–22 June 2012; pp. 1–4.

- Kashif, M.; Raja, G.; Shaukat, F. An Efficient Content-Based Image Retrieval System for the Diagnosis of Lung Diseases. J. Digit. Imaging 2020, 33, 971–987.

- Ma, L.; Liu, X.; Gao, Y.; Zhao, Y.; Zhao, X.; Zhou, C. A new method of content based medical image retrieval and its applications to CT imaging sign retrieval. J. Biomed. Inform. 2017, 66, 148–158.

- Liu, X.; Ma, L.; Song, L.; Zhao, Y.; Zhao, X.; Zhou, C. Recognizing common CT imaging signs of lung diseases through a new feature selection method based on Fisher criterion and genetic optimization. IEEE J. Biomed. Health Inform. 2014, 19, 635–647.

- Sun, S.S.; Ren, H.Z.; Kang, Y.; Zhao, H. Pulmonary nodules detection based on genetic algorithm and support vector machine. J. Syst. Simul. 2011, 23, 497–501.

- Ma, L.; Liu, X.; Fei, B. Learning with distribution of optimized features for recognizing common CT imaging signs of lung diseases. Phys. Med. Biol. 2016, 62, 612.

- Ma, L.; Liu, X.; Song, L.; Zhao, C.; Zhao, X.; Zhou, Y. A new classifier fusion method based on historical and on-line classification reliability for recognizing common CT imaging signs of lung diseases. Comput. Med. Imaging Graph. 2015, 40, 39–48.

- Han, G.; Liu, X.; Zhang, H.; Zheng, G.; Liu, W. Hybrid resampling and multi-feature fusion for automatic recognition of cavity imaging sign in lung ct. Future Gener. Comput. Syst. 2019, 99, 558–570.

- Shen, R.; Cheng, I.; Basu, A. A hybrid knowledge-guided detection technique for screening of infectious pulmonary tuberculosis from chest radiographs. IEEE Trans. Biomed. Eng. 2010, 57, 2646–2656.

- Xu, T.; Cheng, I.; Long, R.; Mandal, M. Novel coarse-to-fine dual scale technique for tuberculosis cavity detection in chest radiographs. Eurasip J. Image Video Process. 2013, 2013, 3.

- Han, G.; Liu, X.; Zheng, G.; Wang, M.; Huang, S. Automatic recognition of 3D GGO CT imaging signs through the fusion of hybrid resampling and layer-wise fine-tuning CNNs. Med. Biol. Eng. Comput. 2018, 56, 2201–2212.

- Shin, H.C.; Roth, H.R.; Gao, M.; Lu, L.; Xu, Z.; Nogues, I.; Summers, R.M. Deep convolutional neural networks for computer-aided detection: CNN architectures, dataset characteristics and transfer learning. IEEE Trans. Med. Imaging 2016, 35, 1285–1298.

- Song, Y.; Cai, W.; Huang, H.; Zhou, Y.; Feng, D.D.; Wang, Y.; Chen, M. Large margin local estimate with applications to medical image classification. IEEE Trans. Med. Imaging 2015, 34, 1362–1377.

- Zheng, G.; Han, G.; Soomro, N.Q. An inception module CNN classifiers fusion method on pulmonary nodule diagnosis by signs. Tsinghua Sci. Technol. 2019, 25, 368–383.

- Frid-Adar, M.; Klang, E.; Amitai, M.; Goldberger, J.; Greenspan, H. Synthetic data augmentation using GAN for improved liver lesion classification. In Proceedings of the 15th IEEE International Symposium on Biomedical Imaging, Washington, DC, USA, 4–7 April 2018; pp. 289–293.

- Zhuang, F.Z.; Qi, Z.Y.; Duan, K.Y.; Xi, D.B.; Zhu, Y.C.; Zhu, H.S.; Xiong, H.; He, Q. A comprehensive survey on transfer learning. Proc. IEEE 2021, 109, 43–76.

- Gui, J.; Sun, Z.; Wen, Y.; Tao, D.; Ye, J. A review on generative adversarial networks: Algorithms, theory, and applications. IEEE Trans. Knowl. Data Eng. 2021, 1.

- He, G. Lung ct imaging sign classification through deep learning on small data. arXiv 2019, arXiv:1903.00183.

- Zheng, G.; Han, G.; Soomro, N.Q.; Ma, L.; Zhang, F.; Zhao, Y.; Zhou, C. A novel computer-aided diagnosis scheme on small annotated set: G2C-CAD. BioMed Res. Int. 2019, 2019, 6425963.

- Zhao, J.J.; Pan, L.; Zhao, P.F.; Tang, X.X. Medical sign recognition of lung nodules based on image retrieval with semantic features and supervised hashing. J. Comput. Sci. Technol. 2017, 32, 457–469.

- Zhang, Y.N.; Zhao, L.J.; Wu, W.; Geng, X.; Hou, G.J. Alternate optimization of 3D pulmonary nodules retrieval based on medical signs. J. Taiyuan Univ. Technol. 2022, 53, 8.

- Nora, Y.; Ayman, A.; Sahar, M.; Hany, H. An Intelligent CBMIR System for Detection and Localization of Lung Diseases. Int. J. Adv. Res. 2021, 9, 651–660.

- Ma, L.; Liu, X.; Fei, B. A multi-level similarity measure for the retrieval of the common CT imaging signs of lung diseases. Med. Biol. Eng. Comput. 2020, 58, 1015–1029.

| Dataset | CT Scans | Type | Number of Classes | Object | Purpose of Dataset |
|---|---|---|---|---|---|
| LIDC-IDRI | 1018 | DICOM | 9 | Lesion | Lesion classification and detection. |
| LUNA16 | 888 | MHD | 9 | Lesion | Lesion detection algorithm development. |
| ANODE09 | 55 | DICOM | 4 | Lesion | Evaluation of lesion detection algorithms. |
| ILDs | 108 | DICOM | 13 | Lung diseases | Classification of interstitial lung disease. |
| Lung TIME | 157 | DICOM | 4 | Lesion | Lesion classification and detection. |
| LISS | 271 | DICOM | 9 | Sign | CISs classification and detection. |

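| Indicator | Definition | Description |
|---|---|---|
| Sensitivity | True positive rate. | $\mathrm{TP}/(\mathrm{TP}+\mathrm{FN})$, where TP, FN, TN, and FP count true positives, false negatives, true negatives, and false positives. |
| Specificity | True negative rate. | $\mathrm{TN}/(\mathrm{TN}+\mathrm{FP})$ |
| Accuracy | Proportion of correctly classified samples to total samples. | $(\mathrm{TP}+\mathrm{TN})/(\mathrm{TP}+\mathrm{TN}+\mathrm{FP}+\mathrm{FN})$ |
| Precision | Proportion of true positive samples to all samples predicted positive. | $\mathrm{TP}/(\mathrm{TP}+\mathrm{FP})$ |
| F1-score | Harmonic mean of precision and sensitivity. | $2 \cdot \mathrm{Precision} \cdot \mathrm{Sensitivity}/(\mathrm{Precision}+\mathrm{Sensitivity})$ |
| ROC | TPR versus FPR curve. | $\mathrm{TPR}=\mathrm{TP}/(\mathrm{TP}+\mathrm{FN})$, $\mathrm{FPR}=\mathrm{FP}/(\mathrm{FP}+\mathrm{TN})$. |
| AUC | Total area under the ROC curve. | Area under curve (AUC). |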
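
The indicators in the table above can be computed directly from binary labels and predictions. The following is a minimal sketch using the standard textbook definitions. The names `ConfusionCounts`, `confusion_counts`, `summary_metrics`, and `roc_auc` are illustrative assumptions, and the AUC is computed through its rank interpretation (the probability that a random positive scores above a random negative) rather than by integrating a plotted curve.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    tp: int
    fp: int
    tn: int
    fn: int


def confusion_counts(y_true, y_pred):
    """Count TP, FP, TN, FN for binary labels (1 = positive, 0 = negative)."""
    tp = fp = tn = fn = 0
    for t, p in zip(y_true, y_pred):
        if t == 1 and p == 1:
            tp += 1
        elif t == 0 and p == 1:
            fp += 1
        elif t == 0 and p == 0:
            tn += 1
        else:
            fn += 1
    return ConfusionCounts(tp, fp, tn, fn)


def _ratio(num, den):
    """Safe division: returns 0.0 when the denominator is zero."""
    return num / den if den else 0.0


def summary_metrics(c):
    """Sensitivity, specificity, accuracy, precision, and F1 from the counts."""
    sensitivity = _ratio(c.tp, c.tp + c.fn)
    specificity = _ratio(c.tn, c.tn + c.fp)
    accuracy = _ratio(c.tp + c.tn, c.tp + c.fp + c.tn + c.fn)
    precision = _ratio(c.tp, c.tp + c.fp)
    f1 = _ratio(2 * precision * sensitivity, precision + sensitivity)
    return {
        "sensitivity": sensitivity,
        "specificity": specificity,
        "accuracy": accuracy,
        "precision": precision,
        "f1": f1,
    }


def roc_auc(y_true, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly; ties count 0.5."""
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative sample")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    y_true = [1, 1, 1, 0, 0, 0, 0, 1]
    y_pred = [1, 1, 0, 0, 0, 1, 0, 1]
    scores = [0.9, 0.8, 0.4, 0.3, 0.2, 0.6, 0.1, 0.7]
    print(summary_metrics(confusion_counts(y_true, y_pred)))
    print(roc_auc(y_true, scores))
```

Because sensitivity and specificity are computed per class, a multi-class CIS classifier would need these values calculated one sign at a time (one-vs-rest) and then averaged, which is one reason reported figures are hard to compare across studies.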

| Author | Year | Dataset | Sensitivity (%) | Specificity (%) | Accuracy (%) | Others | AUC | Task | Method Type |
|---|---|---|---|---|---|---|---|---|---|
| Guo et al. | 2016 | LISS | 100 | 33.13 | - | - | - | GGO segment | Threshold |
| Huang et al. | 2018 | LISS | 100 | - | - | - | - | GGO segment | Threshold |
| Cui et al. | 2019 | LISS | 56.37 | 92.29 | 89.89 | - | - | Calcification segment | Morphology |
| Song et al. | 2015 | ILDs | 70 | - | - | F1-score: 0.80 | - | ILDs classification | Deep learning |
| Shin et al. | 2016 | ILDs; Others | 70 | - | - | F1-score: 0.74 | - | Lymph node and ILDs classification | Deep learning |
| Han et al. | 2018 | LIDC-IDRI | 96.64 | 71.43 | 82.51 | F1-score: 0.83 | - | GGO classification | Deep learning |
| Han et al. | 2019 | LISS; LIDC-IDRI | 85 | 59 | 59 | - | - | Cavity classification | Deep learning |
| Song et al. | 2012 | Private | 91.8 | 98.5 | 98 | - | - | Nine CISs classification | Machine learning |
| Liu et al. | 2014 | LISS | 70.2 | 97.2 | 80.26 | - | - | Nine CISs classification | Machine learning |
| Liu et al. | 2015 | Private | 70.4 | 96.84 | 76.79 | - | - | Nine CISs classification | Machine learning |
| Ma et al. | 2016 | Private | 97.65 | 99.03 | 91.96 | - | - | Nine CISs classification | Machine learning |
| He et al. | 2019 | LISS | 92.73 | 99 | 91.83 | - | - | Nine CISs classification | Deep learning |
| Zheng et al. | 2019 | LISS; LIDC-IDRI | 82.22 | 93.17 | 88.67 | - | - | Five CISs classification | Deep learning |
| Zheng et al. | 2019 | LISS; LIDC-IDRI | - | - | - | - | Mean AUC: 0.9184 | Five CISs classification | Deep learning |
| Ma et al. | 2017 | LISS | - | - | - | mAcc: 77.49% | 0.4854 | Nine CISs retrieval | Machine learning |
| Zhao et al. | 2017 | LISS; LIDC-IDRI | - | - | 93.52 | - | - | Six CISs retrieval | Deep learning |
| Kashif et al. | 2020 | LISS | - | - | - | mAcc: 70% | 0.58 | Nine CISs retrieval | Machine learning |
| Ma et al. | 2020 | Private | - | - | 80 | - | - | Nine CISs retrieval | Machine learning |
| Zhang et al. | 2021 | LIDC-IDRI; Private | - | - | 94.83 | - | - | Nine CISs retrieval | Deep learning |
| Yahia et al. | 2021 | LISS | - | - | - | mAcc: 92% | - | Nine CISs retrieval | Deep learning |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xiao, H.; Li, Y.; Jiang, B.; Xia, Q.; Wei, Y.; Li, H. The Progress on Lung Computed Tomography Imaging Signs: A Review. Appl. Sci. 2022, 12, 9367. https://doi.org/10.3390/app12189367
