Multiple Object Tracking in Robotic Applications: Trends and Challenges

Abstract

1. Introduction

1.1. Challenges

1.2. Related Work

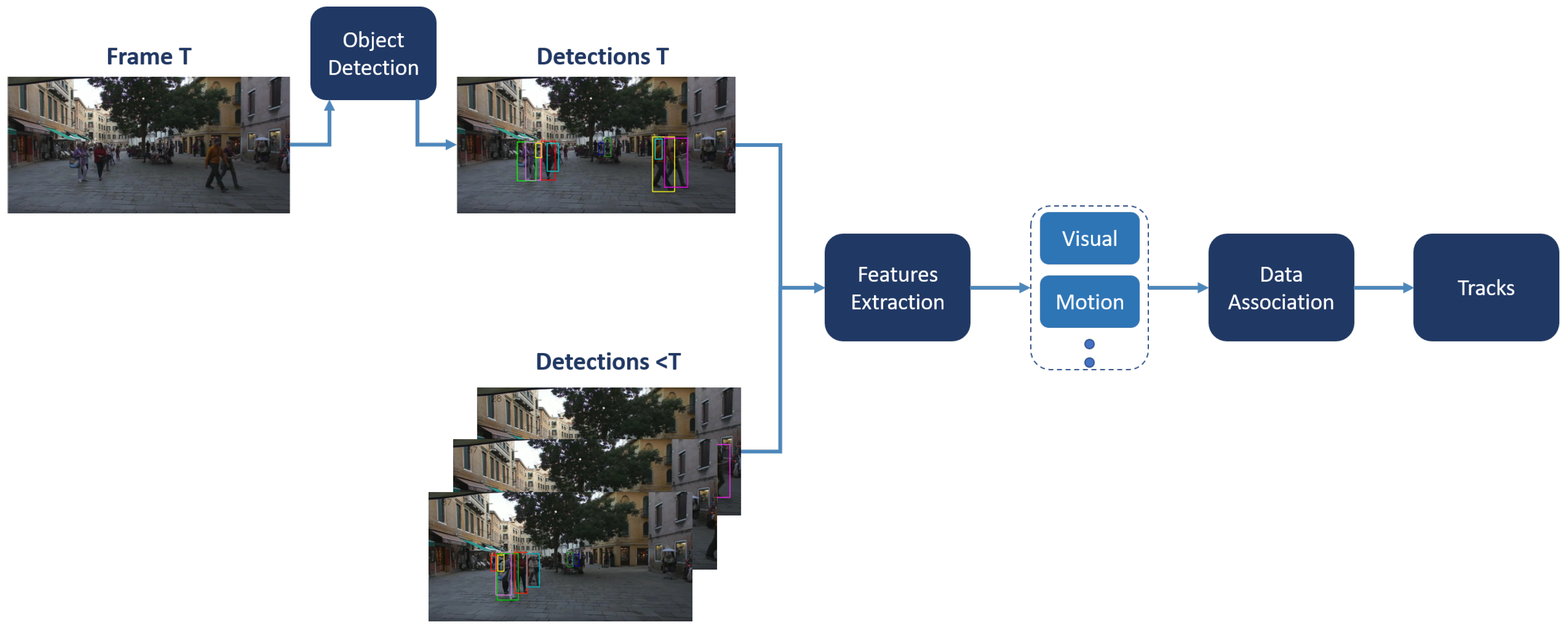

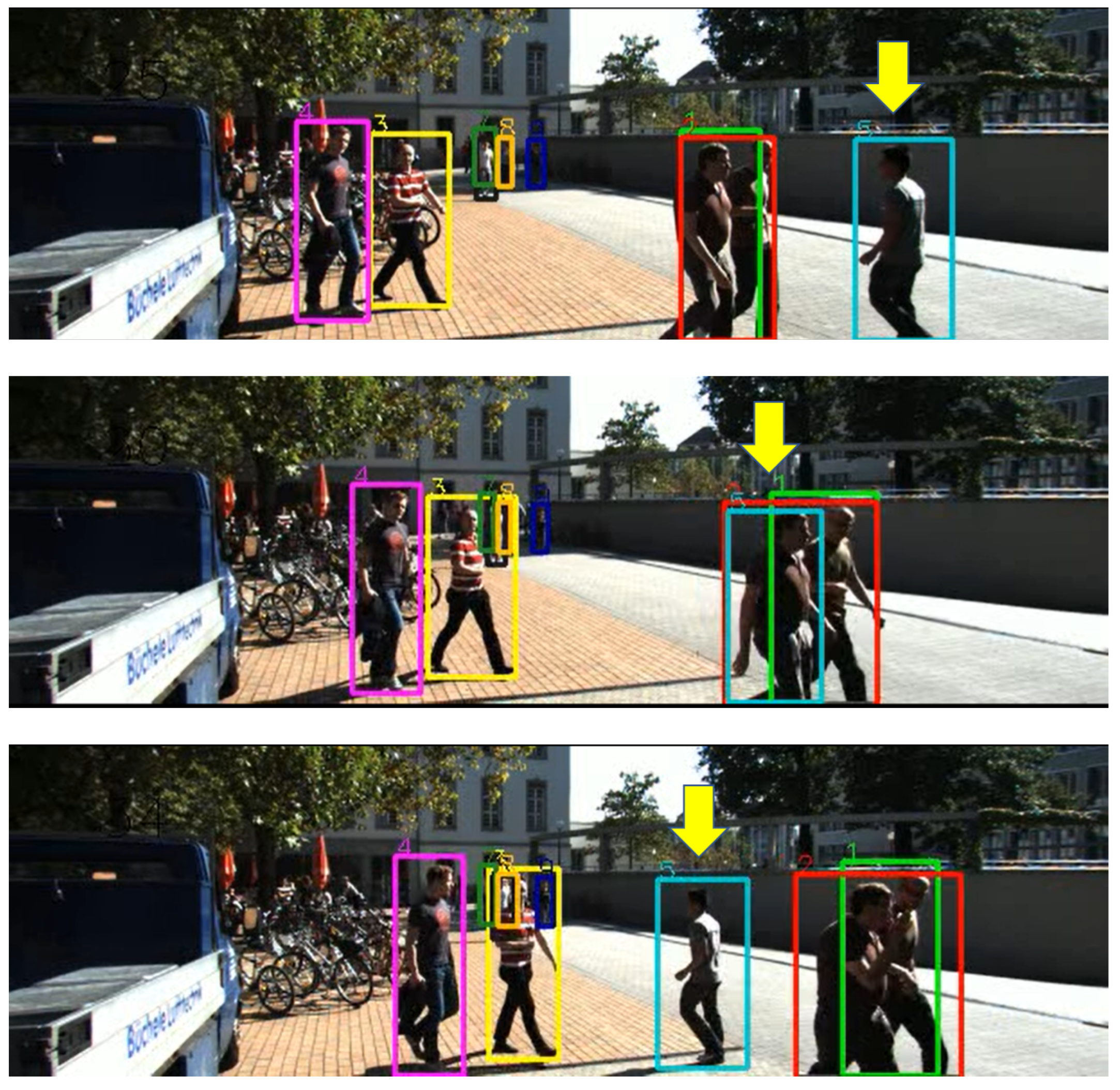

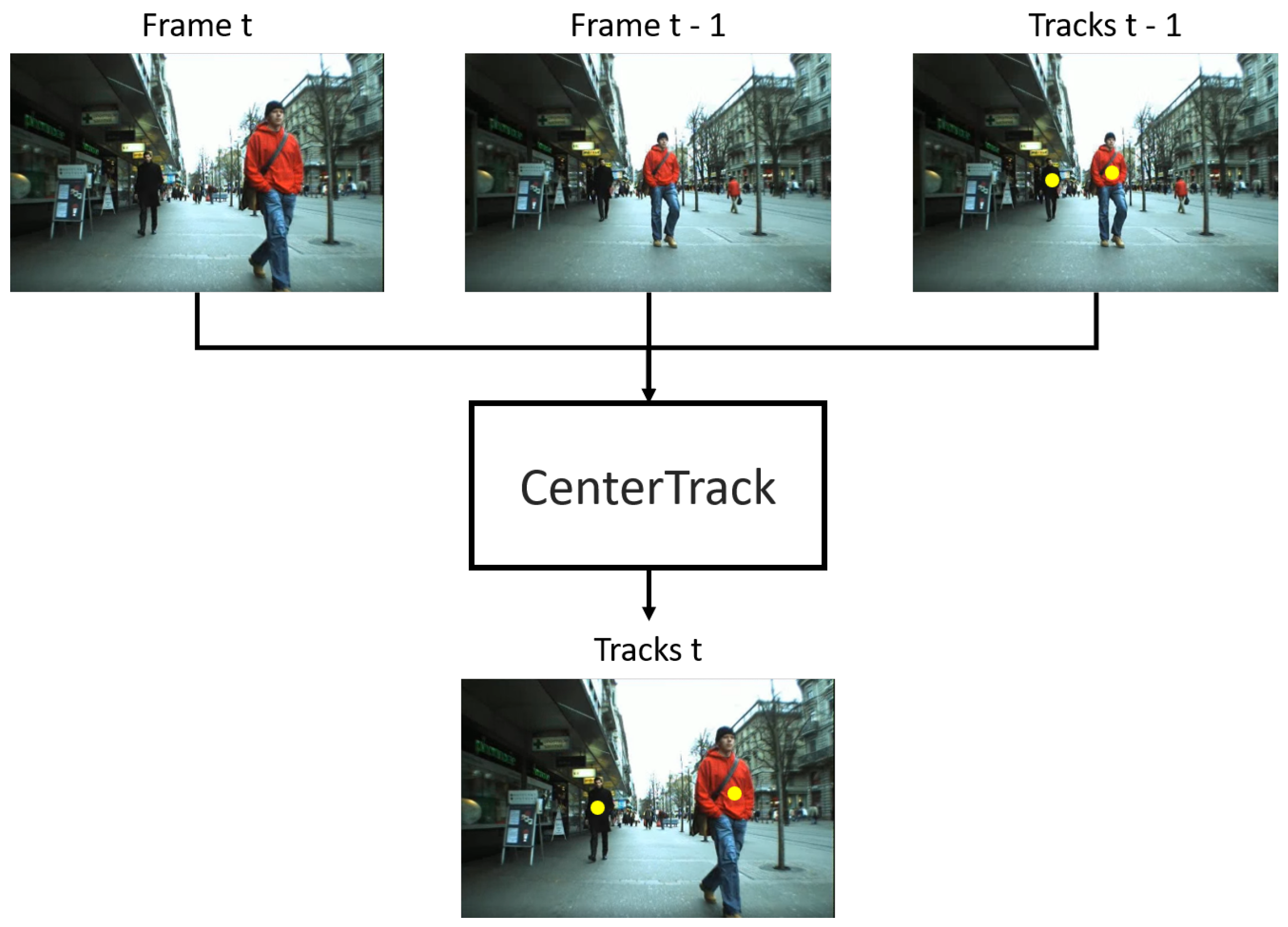

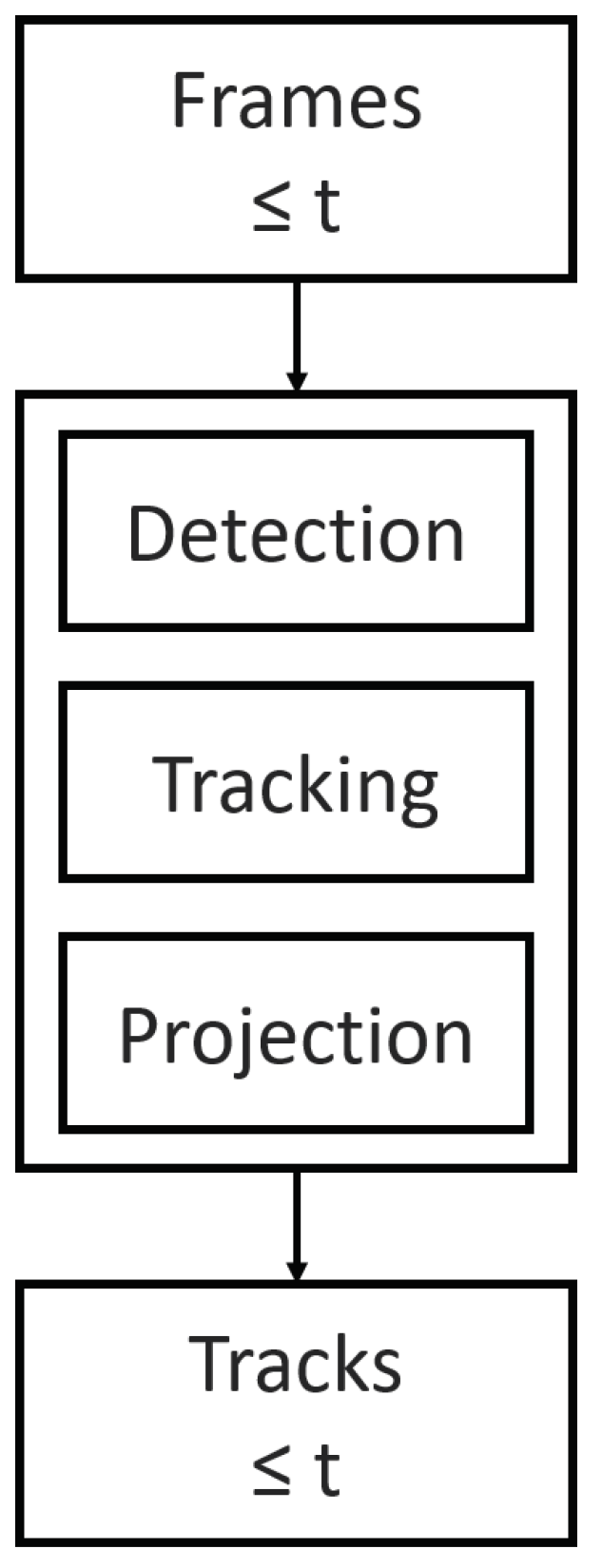

2. MOT Techniques

3. MOT Benchmark Datasets and Evaluation Metrics

3.1. Benchmark Datasets

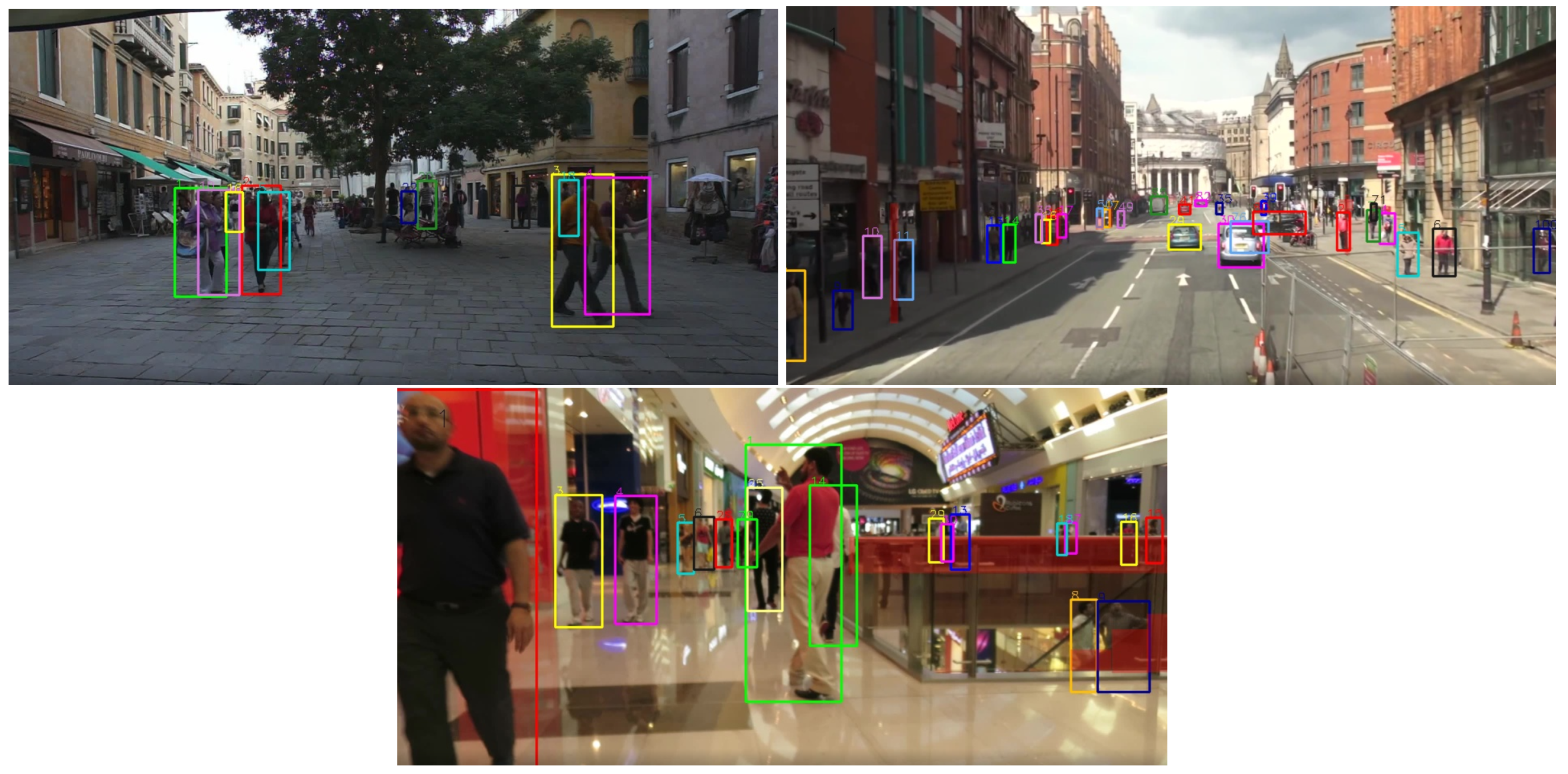

- MOTChallenge: The best-known datasets in this collection are MOT15 [19], MOT16 [92], MOT17 [92], and MOT20 [93]. MOT20 is the most recent addition and, to our knowledge, has not yet become a standard evaluation benchmark in the research community. The MOT datasets combine sequences taken from existing sets, such as PETS and TownCenter, with new sequences unique to the benchmark. Examples of the included sequences are listed in Table 4, which shows how much MOT15 and MOT16 vary in camera platform, viewpoint, density, and weather. This variety makes the collection useful for training and testing on both static and dynamic backgrounds, and for both 2D and 3D tracking. An evaluation tool is provided with the set to compute the standard tracking metrics, including accuracy (MOTA), precision (MOTP), and speed (FPS). Ground-truth samples are shown in Figure 10.
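As a simplified illustration of what such an evaluation tool reports, the sketch below aggregates per-frame error counts into the two headline CLEAR MOT scores: MOTA = 1 - (sum of FN + FP + IDSW) / (sum of ground-truth objects), which can become negative, and MOTP computed as the mean overlap (IoU) of matched pairs, the overlap-based convention behind the "MOTP ↑" column in the evaluation tables. It assumes the hard part, matching ground truth to hypotheses in each frame (typically with an IoU threshold of 0.5), has already been done. The `FrameStats` record and its field names are hypothetical and are not the official devkit's API.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class FrameStats:
    """Hypothetical per-frame summary produced after GT-to-hypothesis matching."""
    num_gt: int            # ground-truth objects present in the frame
    false_negatives: int   # ground-truth objects left unmatched
    false_positives: int   # hypotheses left unmatched
    id_switches: int       # matched ground-truth objects whose track ID changed
    match_ious: List[float] = field(default_factory=list)  # IoU of each matched pair


def mota(frames: List[FrameStats]) -> float:
    """MOTA = 1 - sum(FN + FP + IDSW) / sum(GT); negative when errors exceed GT."""
    total_gt = sum(f.num_gt for f in frames)
    errors = sum(f.false_negatives + f.false_positives + f.id_switches for f in frames)
    return 1.0 - errors / total_gt


def motp(frames: List[FrameStats]) -> float:
    """Overlap-based MOTP: mean IoU over all matched pairs (higher is better)."""
    ious = [iou for f in frames for iou in f.match_ious]
    return sum(ious) / len(ious)


# Two toy frames with four ground-truth objects each.
frames = [
    FrameStats(num_gt=4, false_negatives=1, false_positives=0, id_switches=0,
               match_ious=[0.8, 0.7, 0.9]),
    FrameStats(num_gt=4, false_negatives=0, false_positives=1, id_switches=1,
               match_ious=[0.6, 0.8, 0.7, 0.9]),
]
print(f"MOTA = {mota(frames):.3f}")  # 1 - 3/8 = 0.625
print(f"MOTP = {motp(frames):.3f}")
```

Separating matching from scoring mirrors how the benchmark tools are usually organized: the same per-frame counts can then feed other metrics (MT, ML, Frag) without re-running the matching.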

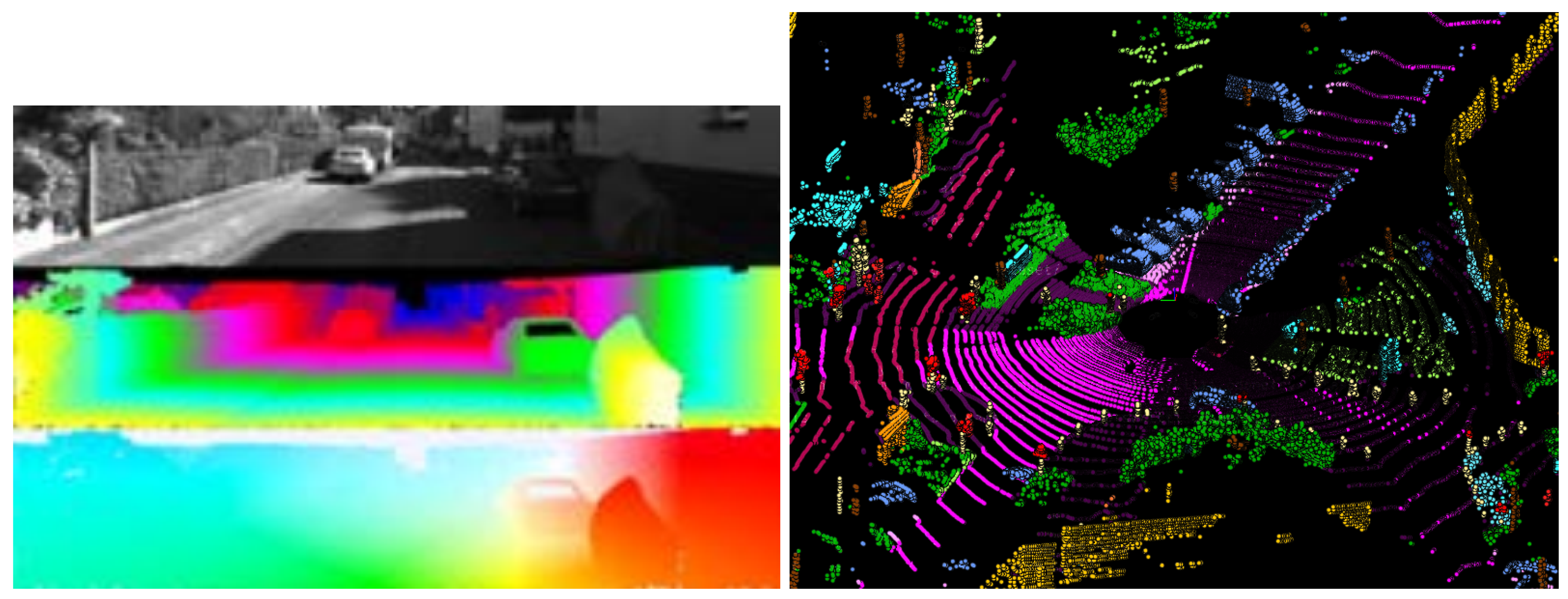

- KITTI [94]: This dataset was created specifically for autonomous driving research. It was recorded from a car driven through the streets with multiple sensors mounted on it. The set includes point-cloud data collected by LIDAR and RGB video sequences captured by monocular cameras, and it has been used in many studies of 2D and 3D multiple object tracking. Samples of the point-cloud and RGB data in the KITTI dataset are shown in Figure 11.
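For readers who want to inspect such point clouds directly: the LiDAR sweeps in KITTI's raw and tracking releases are, to our understanding, stored as flat binary files of little-endian 32-bit floats with four values per point (x, y, z, reflectance). Assuming that layout, a standard-library-only reader might look like the sketch below. The function name and the synthetic demo file are illustrative; with NumPy available, `np.fromfile(path, dtype=np.float32).reshape(-1, 4)` is the usual shortcut.

```python
import os
import struct
import tempfile
from typing import List, Tuple

Point = Tuple[float, float, float, float]  # (x, y, z, reflectance)


def read_velodyne_bin(path: str) -> List[Point]:
    """Read a KITTI-style LiDAR sweep: consecutive little-endian float32 quadruples."""
    with open(path, "rb") as fh:
        data = fh.read()
    if len(data) % 16 != 0:  # 4 floats x 4 bytes per point
        raise ValueError(f"{path}: size {len(data)} is not a multiple of 16 bytes")
    return list(struct.iter_unpack("<4f", data))


# Self-contained demo: write two synthetic points, then read them back.
points_in = [(1.0, 2.0, -1.5, 0.25), (10.5, -3.0, 0.5, 0.75)]
with tempfile.NamedTemporaryFile(suffix=".bin", delete=False) as tmp:
    for p in points_in:
        tmp.write(struct.pack("<4f", *p))
    tmp_path = tmp.name

points_out = read_velodyne_bin(tmp_path)
os.remove(tmp_path)
print(len(points_out), points_out[0])  # 2 (1.0, 2.0, -1.5, 0.25)
```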

- UA-DETRAC [96,97,98]: The dataset consists of video sequences captured by static cameras overlooking streets in different cities. Its large number of labeled vehicles supports training and testing of static-background multiple object tracking for surveillance and autonomous-driving applications. Samples of the UA-DETRAC dataset under different illumination conditions are shown in Figure 12.

3.2. Evaluation Metrics

4. Evaluation and Discussion

5. Current Research Challenges

6. Future Work

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| MOT | Multiple Object Tracking |
| LIDAR | Light Detection and Ranging |
| KITTI | Karlsruhe Institute of Technology and Toyota Technological Institute |
| UA-DETRAC | University at Albany DEtection and TRACking |
| SLAM | Simultaneous Localization and Mapping |
| ROI | Region of Interest |
| IoU | Intersection over Union |
| HOTA | Higher Order Tracking Accuracy |
| SLAMMOT | Simultaneous Localization and Mapping and Multiple Object Tracking |
| RTU | Recurrent Tracking Unit |
| LSTM | Long Short-Term Memory |
| ECO | Efficient Convolution Operators |
| PCA | Principal Component Analysis |
| LDAE | Lightweight and Deep Appearance Embedding |
| DLA | Deep Layer Aggregation |
| GCD | Global Context Disentangling |
| GTE | Guided Transformer Encoder |
| DETR | DEtection TRansformer |
| PCB | Part-based Convolutional Baseline |

References

- Runz, M.; Buffier, M.; Agapito, L. MaskFusion: Real-Time Recognition, Tracking and Reconstruction of Multiple Moving Objects. In Proceedings of the 2018 IEEE International Symposium on Mixed and Augmented Reality, ISMAR 2018, Munich, Germany, 16–20 October 2018; pp. 10–20.

- Zhou, Q.; Zhao, S.; Li, H.; Lu, R.; Wu, C. Adaptive Neural Network Tracking Control for Robotic Manipulators with Dead Zone. IEEE Trans. Neural Netw. Learn. Syst. 2019, 30, 3611–3620.

- Yu, C.; Liu, Z.; Liu, X.; Xie, F.; Yang, Y.; Wei, Q.; Fei, Q. DS-SLAM: A Semantic Visual SLAM towards Dynamic Environments. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Madrid, Spain, 1–5 October 2018; pp. 1168–1174.

- Fehr, L.; Langbein, W.E.; Skaar, S.B. Adequacy of power wheelchair control interfaces for persons with severe disabilities: A clinical survey. J. Rehabil. Res. Dev. 2000, 37, 353–360.

- Simpson, R. Smart wheelchairs: A literature review. J. Rehabil. Res. Dev. 2005, 42, 423–436.

- Martins, M.M.; Santos, C.P.; Frizera-Neto, A.; Ceres, R. Assistive mobility devices focusing on Smart Walkers: Classification and review. Robot. Auton. Syst. 2012, 60, 548–562.

- Khan, M.Q.; Lee, S. A comprehensive survey of driving monitoring and assistance systems. Sensors 2019, 19, 2574.

- Van Brummelen, J.; O’Brien, M.; Gruyer, D.; Najjaran, H. Autonomous vehicle perception: The technology of today and tomorrow. Transp. Res. Part C Emerg. Technol. 2018, 89, 384–406.

- Pham, H.X.; La, H.M.; Feil-Seifer, D.; Deans, M.C. A distributed control framework of multiple unmanned aerial vehicles for dynamic wildfire tracking. IEEE Trans. Syst. Man Cybern. Syst. 2020, 50, 1537–1548.

- Cai, Z.; Wang, L.; Zhao, J.; Wu, K.; Wang, Y. Virtual target guidance-based distributed model predictive control for formation control of multiple UAVs. Chin. J. Aeronaut. 2020, 33, 1037–1056.

- Huang, Y.; Liu, W.; Li, B.; Yang, Y.; Xiao, B. Finite-time formation tracking control with collision avoidance for quadrotor UAVs. J. Frankl. Inst. 2020, 357, 4034–4058.

- Luo, W.; Xing, J.; Milan, A.; Zhang, X.; Liu, W.; Kim, T. Multiple object tracking: A literature review. Artif. Intell. 2021, 293, 103448.

- Ciaparrone, G.; Luque Sánchez, F.; Tabik, S.; Troiano, L.; Tagliaferri, R.; Herrera, F. Deep learning in video multi-object tracking: A survey. Neurocomputing 2020, 381, 61–88.

- Xu, Y.; Zhou, X.; Chen, S.; Li, F. Deep learning for multiple object tracking: A survey. IET Comput. Vis. 2019, 13, 411–419.

- Irvine, J.M.; Wood, R.J.; Reed, D.; Lepanto, J. Video image quality analysis for enhancing tracker performance. In Proceedings of the 2013 IEEE Applied Imagery Pattern Recognition Workshop (AIPR), Washington, DC, USA, 23–25 October 2013; pp. 1–9.

- Meng, L.; Yang, X. A Survey of Object Tracking Algorithms. Zidonghua Xuebao/Acta Autom. Sin. 2019, 45, 1244–1260.

- Jain, V.; Wu, Q.; Grover, S.; Sidana, K.; Chaudhary, D.G.; Myint, S.; Hua, Q. Generating Bird’s Eye View from Egocentric RGB Videos. Wirel. Commun. Mob. Comput. 2021, 2021, 7479473.

- Yeong, D.J.; Velasco-Hernandez, G.; Barry, J.; Walsh, J. Sensor and Sensor Fusion Technology in Autonomous Vehicles: A Review. Sensors 2021, 21, 2140.

- Leal-Taixé, L.; Milan, A.; Reid, I.; Roth, S.; Schindler, K. MOTChallenge 2015: Towards a Benchmark for Multi-Target Tracking. arXiv 2015, arXiv:1504.01942.

- Xu, Z.; Rong, Z.; Wu, Y. A survey: Which features are required for dynamic visual simultaneous localization and mapping? Vis. Comput. Ind. Biomed. Art 2021, 4, 20.

- Grigorescu, S.; Trasnea, B.; Cocias, T.; Macesanu, G. A survey of deep learning techniques for autonomous driving. J. Field Robot. 2020, 37, 362–386.

- Janai, J.; Güney, F.; Behl, A.; Geiger, A. Computer vision for autonomous vehicles. Found. Trends Comput. Graph. Vis. 2020, 12, 1–308.

- Datondji, S.R.E.; Dupuis, Y.; Subirats, P.; Vasseur, P. A Survey of Vision-Based Traffic Monitoring of Road Intersections. IEEE Trans. Intell. Transp. Syst. 2016, 17, 2681–2698.

- Buch, N.; Velastin, S.A.; Orwell, J. A review of computer vision techniques for the analysis of urban traffic. IEEE Trans. Intell. Transp. Syst. 2011, 12, 920–939.

- Otto, A.; Agatz, N.; Campbell, J.; Golden, B.; Pesch, E. Optimization approaches for civil applications of unmanned aerial vehicles (UAVs) or aerial drones: A survey. Networks 2018, 72, 411–458.

- Shakhatreh, H.; Sawalmeh, A.H.; Al-Fuqaha, A.; Dou, Z.; Almaita, E.; Khalil, I.; Othman, N.S.; Khreishah, A.; Guizani, M. Unmanned Aerial Vehicles (UAVs): A Survey on Civil Applications and Key Research Challenges. IEEE Access 2019, 7, 48572–48634.

- Badue, C.; Guidolini, R.; Carneiro, R.V.; Azevedo, P.; Cardoso, V.B.; Forechi, A.; Jesus, L.; Berriel, R.; Paixão, T.M.; Mutz, F.; et al. Self-driving cars: A survey. Expert Syst. Appl. 2021, 165, 113816.

- Wang, Z.; Wu, Y.; Niu, Q. Multi-Sensor Fusion in Automated Driving: A Survey. IEEE Access 2020, 8, 2847–2868.

- Elhousni, M.; Huang, X. A Survey on 3D LiDAR Localization for Autonomous Vehicles. In Proceedings of the IEEE Intelligent Vehicles Symposium, Las Vegas, NV, USA, 9 October–13 November 2020; pp. 1879–1884.

- Fritsch, J.; Kühnl, T.; Geiger, A. A new performance measure and evaluation benchmark for road detection algorithms. In Proceedings of the 16th International IEEE Conference on Intelligent Transportation Systems (ITSC 2013), The Hague, The Netherlands, 6–9 October 2013; pp. 1693–1700.

- Sadeghian, A.; Alahi, A.; Savarese, S. Tracking the Untrackable: Learning to Track Multiple Cues with Long-Term Dependencies. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 300–311.

- Xiang, J.; Zhang, G.; Hou, J. Online Multi-Object Tracking Based on Feature Representation and Bayesian Filtering Within a Deep Learning Architecture. IEEE Access 2019, 7, 27923–27935.

- Karunasekera, H.; Wang, H.; Zhang, H. Multiple Object Tracking With Attention to Appearance, Structure, Motion and Size. IEEE Access 2019, 7, 104423–104434.

- Mahmoudi, N.; Ahadi, S.M.; Mohammad, R. Multi-target tracking using CNN-based features: CNNMTT. Multimed. Tools Appl. 2019, 78, 7077–7096.

- Zhou, Q.; Zhong, B.; Zhang, Y.; Li, J.; Fu, Y. Deep Alignment Network Based Multi-Person Tracking With Occlusion and Motion Reasoning. IEEE Trans. Multimed. 2019, 21, 1183–1194.

- Zhao, D.; Fu, H.; Xiao, L.; Wu, T.; Dai, B. Multi-Object Tracking with Correlation Filter for Autonomous Vehicle. Sensors 2018, 18, 2004.

- Keuper, M.; Tang, S.; Andres, B.; Brox, T.; Schiele, B. Motion Segmentation & Multiple Object Tracking by Correlation Co-Clustering. IEEE Trans. Pattern Anal. Mach. Intell. 2020, 42, 140–153.

- Fang, K.; Xiang, Y.; Li, X.; Savarese, S. Recurrent Autoregressive Networks for Online Multi-Object Tracking. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, NV, USA, 12–15 March 2018.

- Chu, P.; Fan, H.; Tan, C.C.; Ling, H. Online Multi-Object Tracking with Instance-Aware Tracker and Dynamic Model Refreshment. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa, HI, USA, 7–11 January 2019.

- Zhu, J.; Yang, H.; Liu, N.; Kim, M.; Zhang, W.; Yang, M.H. Online Multi-Object Tracking with Dual Matching Attention Networks. arXiv 2019, arXiv:1902.00749.

- Zhou, Z.; Xing, J.; Zhang, M.; Hu, W. Online Multi-Target Tracking with Tensor-Based High-Order Graph Matching. In Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; pp. 1809–1814.

- Sun, S.; Akhtar, N.; Song, H.; Mian, A.; Shah, M. Deep Affinity Network for Multiple Object Tracking. arXiv 2018, arXiv:1810.11780.

- Peng, J.; Wang, C.; Wan, F.; Wu, Y.; Wang, Y.; Tai, Y.; Wang, C.; Li, J.; Huang, F.; Fu, Y. Chained-Tracker: Chaining Paired Attentive Regression Results for End-to-End Joint Multiple-Object Detection and Tracking. arXiv 2020, arXiv:2007.14557.

- Wang, G.; Wang, Y.; Zhang, H.; Gu, R.; Hwang, J.N. Exploit the Connectivity: Multi-Object Tracking with TrackletNet. arXiv 2018, arXiv:1811.07258.

- Lan, L.; Wang, X.; Zhang, S.; Tao, D.; Gao, W.; Huang, T.S. Interacting Tracklets for Multi-Object Tracking. IEEE Trans. Image Process. 2018, 27, 4585–4597.

- Zhou, X.; Koltun, V.; Krähenbühl, P. Tracking Objects as Points. arXiv 2020, arXiv:2004.01177.

- Chu, P.; Ling, H. FAMNet: Joint Learning of Feature, Affinity and Multi-dimensional Assignment for Online Multiple Object Tracking. arXiv 2019, arXiv:1904.04989.

- Chen, L.; Ai, H.; Shang, C.; Zhuang, Z.; Bai, B. Online multi-object tracking with convolutional neural networks. In Proceedings of the 2017 IEEE International Conference on Image Processing (ICIP), Beijing, China, 17–20 September 2017; pp. 645–649.

- Yoon, K.; Kim, D.Y.; Yoon, Y.C.; Jeon, M. Data Association for Multi-Object Tracking via Deep Neural Networks. Sensors 2019, 19, 559.

- Xu, B.; Liang, D.; Li, L.; Quan, R.; Zhang, M. An Effectively Finite-Tailed Updating for Multiple Object Tracking in Crowd Scenes. Appl. Sci. 2022, 12, 1061.

- Ye, L.; Li, W.; Zheng, L.; Zeng, Y. Lightweight and Deep Appearance Embedding for Multiple Object Tracking. IET Comput. Vis. 2022, 16, 489–503.

- Wang, F.; Luo, L.; Zhu, E.; Wang, S.; Long, J. Multi-object Tracking with a Hierarchical Single-branch Network. CoRR 2021, abs/2101.01984. Available online: http://xxx.lanl.gov/abs/2101.01984 (accessed on 7 September 2022).

- Yu, E.; Li, Z.; Han, S.; Wang, H. RelationTrack: Relation-aware Multiple Object Tracking with Decoupled Representation. CoRR 2021, abs/2105.04322. Available online: http://xxx.lanl.gov/abs/2105.04322 (accessed on 7 September 2022).

- Wang, S.; Sheng, H.; Yang, D.; Zhang, Y.; Wu, Y.; Wang, S. Extendable Multiple Nodes Recurrent Tracking Framework with RTU++. IEEE Trans. Image Process. 2022, 31, 5257–5271.

- Gao, Y.; Gu, X.; Gao, Q.; Hou, R.; Hou, Y. TdmTracker: Multi-Object Tracker Guided by Trajectory Distribution Map. Electronics 2022, 11, 1010.

- Nasseri, M.H.; Babaee, M.; Moradi, H.; Hosseini, R. Fast Online and Relational Tracking. arXiv 2022, arXiv:2208.03659.

- Zhao, Z.; Wu, Z.; Zhuang, Y.; Li, B.; Jia, J. Tracking Objects as Pixel-wise Distributions. arXiv 2022, arXiv:2207.05518.

- Aharon, N.; Orfaig, R.; Bobrovsky, B.Z. BoT-SORT: Robust Associations Multi-Pedestrian Tracking. arXiv 2022, arXiv:2206.14651.

- Seidenschwarz, J.; Brasó, G.; Elezi, I.; Leal-Taixé, L. Simple Cues Lead to a Strong Multi-Object Tracker. arXiv 2022, arXiv:2206.04656.

- Dai, P.; Feng, Y.; Weng, R.; Zhang, C. Joint Spatial-Temporal and Appearance Modeling with Transformer for Multiple Object Tracking. arXiv 2022, arXiv:2205.15495.

- Zhang, Y.; Wang, C.; Wang, X.; Zeng, W.; Liu, W. Robust Multi-Object Tracking by Marginal Inference. arXiv 2022, arXiv:2208.03727.

- Hyun, J.; Kang, M.; Wee, D.; Yeung, D.Y. Detection Recovery in Online Multi-Object Tracking with Sparse Graph Tracker. arXiv 2022, arXiv:2205.00968.

- Chen, M.; Liao, Y.; Liu, S.; Wang, F.; Hwang, J.N. TR-MOT: Multi-Object Tracking by Reference. arXiv 2022, arXiv:2203.16621.

- Cao, J.; Weng, X.; Khirodkar, R.; Pang, J.; Kitani, K. Observation-Centric SORT: Rethinking SORT for Robust Multi-Object Tracking. arXiv 2022, arXiv:2203.1662.

- Wan, J.; Zhang, H.; Zhang, J.; Ding, Y.; Yang, Y.; Li, Y.; Li, X. DSRRTracker: Dynamic Search Region Refinement for Attention-based Siamese Multi-Object Tracking. arXiv 2022, arXiv:2203.10729.

- Du, Y.; Song, Y.; Yang, B.; Zhao, Y. StrongSORT: Make DeepSORT Great Again. arXiv 2022, arXiv:2202.13514.

- Zhang, Y.; Sun, P.; Jiang, Y.; Yu, D.; Weng, F.; Yuan, Z.; Luo, P.; Liu, W.; Wang, X. ByteTrack: Multi-Object Tracking by Associating Every Detection Box. arXiv 2021, arXiv:2110.06864.

- Bochinski, E.; Senst, T.; Sikora, T. Extending IOU Based Multi-Object Tracking by Visual Information. In Proceedings of the 2018 15th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Auckland, New Zealand, 27–30 November 2018; pp. 1–6.

- Hou, X.; Wang, Y.; Chau, L.P. Vehicle Tracking Using Deep SORT with Low Confidence Track Filtering. In Proceedings of the 2019 16th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Taipei, Taiwan, 18–21 September 2019; pp. 1–6.

- Scheidegger, S.; Benjaminsson, J.; Rosenberg, E.; Krishnan, A.; Granstrom, K. Mono-Camera 3D Multi-Object Tracking Using Deep Learning Detections and PMBM Filtering. arXiv 2018, arXiv:1802.09975.

- Hu, H.N.; Cai, Q.Z.; Wang, D.; Lin, J.; Sun, M.; Kraehenbuehl, P.; Darrell, T.; Yu, F. Joint Monocular 3D Vehicle Detection and Tracking. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019; pp. 5389–5398.

- Kutschbach, T.; Bochinski, E.; Eiselein, V.; Sikora, T. Sequential sensor fusion combining probability hypothesis density and kernelized correlation filters for multi-object tracking in video data. In Proceedings of the 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Lecce, Italy, 29 August–1 September 2017; pp. 1–5.

- Lowe, D. Distinctive Image Features from Scale-Invariant Keypoints. Int. J. Comput. Vis. 2004, 60, 91.

- Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05), San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 886–893.

- Ojala, T.; Pietikäinen, M.; Harwood, D. A comparative study of texture measures with classification based on featured distributions. Pattern Recognit. 1996, 29, 51–59.

- Ahonen, T.; Hadid, A.; Pietikainen, M. Face Description with Local Binary Patterns: Application to Face Recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 2037–2041.

- Kuhn, H.W. The Hungarian method for the assignment problem. Nav. Res. Logist. Q. 1955, 2, 83–97.

- Bochinski, E.; Eiselein, V.; Sikora, T. High-Speed tracking-by-detection without using image information. In Proceedings of the 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Lecce, Italy, 29 August–1 September 2017; pp. 1–6.

- Zhou, X.; Wang, D.; Krähenbühl, P. Objects as Points. arXiv 2019, arXiv:1904.07850.

- Dimitriou, N.; Stavropoulos, G.; Moustakas, K.; Tzovaras, D. Multiple object tracking based on motion segmentation of point trajectories. In Proceedings of the 2016 13th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Colorado Springs, CO, USA, 23–26 August 2016; pp. 200–206.

- Cai, J.; Wang, Y.; Zhang, H.; Hsu, H.; Ma, C.; Hwang, J. IA-MOT: Instance-Aware Multi-Object Tracking with Motion Consistency. CoRR 2020, abs/2006.13458. Available online: http://xxx.lanl.gov/abs/2006.13458 (accessed on 7 September 2022).

- Yan, B.; Jiang, Y.; Sun, P.; Wang, D.; Yuan, Z.; Luo, P.; Lu, H. Towards Grand Unification of Object Tracking. arXiv 2022, arXiv:2207.07078.

- Yang, F.; Chang, X.; Dang, C.; Zheng, Z.; Sakti, S.; Nakamura, S.; Wu, Y. ReMOTS: Self-Supervised Refining Multi-Object Tracking and Segmentation. CoRR 2020, abs/2007.03200. Available online: http://xxx.lanl.gov/abs/2007.03200 (accessed on 7 September 2022).

- Voigtlaender, P.; Krause, M.; Osep, A.; Luiten, J.; Sekar, B.B.G.; Geiger, A.; Leibe, B. MOTS: Multi-Object Tracking and Segmentation. arXiv 2019, arXiv:1902.03604.

- Simon, M.; Amende, K.; Kraus, A.; Honer, J.; Sämann, T.; Kaulbersch, H.; Milz, S.; Gross, H.M. Complexer-YOLO: Real-Time 3D Object Detection and Tracking on Semantic Point Clouds. arXiv 2019, arXiv:1904.07537.

- Zhang, W.; Zhou, H.; Sun, S.; Wang, Z.; Shi, J.; Loy, C.C. Robust Multi-Modality Multi-Object Tracking. arXiv 2019, arXiv:1909.03850.

- Frossard, D.; Urtasun, R. End-to-end Learning of Multi-sensor 3D Tracking by Detection. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 635–642.

- Weng, X.; Wang, Y.; Man, Y.; Kitani, K. GNN3DMOT: Graph Neural Network for 3D Multi-Object Tracking with Multi-Feature Learning. arXiv 2020, arXiv:2006.07327.

- Sualeh, M.; Kim, G.W. Visual-LiDAR Based 3D Object Detection and Tracking for Embedded Systems. IEEE Access 2020, 8, 156285–156298.

- Shenoi, A.; Patel, M.; Gwak, J.; Goebel, P.; Sadeghian, A.; Rezatofighi, H.; Martín-Martín, R.; Savarese, S. JRMOT: A Real-Time 3D Multi-Object Tracker and a New Large-Scale Dataset. arXiv 2020, arXiv:2002.08397.

- Paszke, A.; Chaurasia, A.; Kim, S.; Culurciello, E. ENet: A Deep Neural Network Architecture for Real-Time Semantic Segmentation. CoRR 2016, abs/1606.02147. Available online: http://xxx.lanl.gov/abs/1606.02147 (accessed on 7 September 2022).

- Milan, A.; Leal-Taixé, L.; Reid, I.; Roth, S.; Schindler, K. MOT16: A Benchmark for Multi-Object Tracking. arXiv 2016, arXiv:1603.00831.

- Dendorfer, P.; Rezatofighi, H.; Milan, A.; Shi, J.; Cremers, D.; Reid, I.D.; Roth, S.; Schindler, K.; Leal-Taixé, L. MOT20: A benchmark for multi object tracking in crowded scenes. CoRR 2020, abs/2003.09003. Available online: http://xxx.lanl.gov/abs/2003.09003 (accessed on 7 September 2022).

- Geiger, A.; Lenz, P.; Urtasun, R. Are we ready for Autonomous Driving? The KITTI Vision Benchmark Suite. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Providence, RI, USA, 16–21 June 2012; pp. 3354–3361.

- Behley, J.; Garbade, M.; Milioto, A.; Quenzel, J.; Behnke, S.; Stachniss, C.; Gall, J. SemanticKITTI: A Dataset for Semantic Scene Understanding of LiDAR Sequences. In Proceedings of the IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Korea, 27 October–2 November 2019.

- Wen, L.; Du, D.; Cai, Z.; Lei, Z.; Chang, M.; Qi, H.; Lim, J.; Yang, M.; Lyu, S. UA-DETRAC: A New Benchmark and Protocol for Multi-Object Detection and Tracking. Comput. Vis. Image Underst. 2020, 193, 102907.

- Lyu, S.; Chang, M.C.; Du, D.; Li, W.; Wei, Y.; Del Coco, M.; Carcagnì, P.; Schumann, A.; Munjal, B.; Choi, D.H.; et al. UA-DETRAC 2018: Report of AVSS2018 & IWT4S challenge on advanced traffic monitoring. In Proceedings of the 2018 15th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Auckland, New Zealand, 27–30 November 2018; pp. 1–6.

- Lyu, S.; Chang, M.C.; Du, D.; Wen, L.; Qi, H.; Li, Y.; Wei, Y.; Ke, L.; Hu, T.; Del Coco, M.; et al. UA-DETRAC 2017: Report of AVSS2017 & IWT4S Challenge on Advanced Traffic Monitoring. In Proceedings of the 2017 14th IEEE International Conference on Advanced Video and Signal Based Surveillance (AVSS), Lecce, Italy, 29 August–1 September 2017; pp. 1–7.

- Luiten, J.; Osep, A.; Dendorfer, P.; Torr, P.H.S.; Geiger, A.; Leal-Taixé, L.; Leibe, B. HOTA: A Higher Order Metric for Evaluating Multi-Object Tracking. CoRR 2020, abs/2009.07736. Available online: http://xxx.lanl.gov/abs/2009.07736 (accessed on 7 September 2022).

- Henschel, R.; Leal-Taixé, L.; Cremers, D.; Rosenhahn, B. Fusion of Head and Full-Body Detectors for Multi-Object Tracking. arXiv 2017, arXiv:1705.08314.

- Henschel, R.; Zou, Y.; Rosenhahn, B. Multiple People Tracking Using Body and Joint Detections. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (CVPRW), Long Beach, CA, USA, 16–17 June 2019; pp. 770–779.

- Weng, X.; Kitani, K. A Baseline for 3D Multi-Object Tracking. CoRR 2019, abs/1907.03961. Available online: http://xxx.lanl.gov/abs/1907.03961 (accessed on 7 September 2022).

- Gloudemans, D.; Work, D.B. Localization-Based Tracking. CoRR 2021, abs/2104.05823. Available online: http://xxx.lanl.gov/abs/2104.05823 (accessed on 7 September 2022).

- Sun, S.; Akhtar, N.; Song, X.; Song, H.; Mian, A.; Shah, M. Simultaneous Detection and Tracking with Motion Modelling for Multiple Object Tracking. CoRR 2020, abs/2008.08826. Available online: http://xxx.lanl.gov/abs/2008.08826 (accessed on 7 September 2022).

- Luiten, J.; Fischer, T.; Leibe, B. Track to Reconstruct and Reconstruct to Track. IEEE Robot. Autom. Lett. 2020, 5, 1803–1810.

- Wang, S.; Sun, Y.; Liu, C.; Liu, M. PointTrackNet: An End-to-End Network For 3-D Object Detection and Tracking From Point Clouds. arXiv 2020, arXiv:2002.11559.

- Wang, L.; Zhang, X.; Qin, W.; Li, X.; Yang, L.; Li, Z.; Zhu, L.; Wang, H.; Li, J.; Liu, H. CAMO-MOT: Combined Appearance-Motion Optimization for 3D Multi-Object Tracking with Camera-LiDAR Fusion. arXiv 2022, arXiv:2209.02540.

- Sun, Y.; Zhao, Y.; Wang, S. Multiple Traffic Target Tracking with Spatial-Temporal Affinity Network. Comput. Intell. Neurosci. 2022, 2022, 9693767.

- Messoussi, O.; de Magalhaes, F.G.; Lamarre, F.; Perreault, F.; Sogoba, I.; Bilodeau, G.; Nicolescu, G. Vehicle Detection and Tracking from Surveillance Cameras in Urban Scenes. CoRR 2021, abs/2109.12414. Available online: http://xxx.lanl.gov/abs/2109.12414 (accessed on 7 September 2022).

- Wang, G.; Gu, R.; Liu, Z.; Hu, W.; Song, M.; Hwang, J. Track without Appearance: Learn Box and Tracklet Embedding with Local and Global Motion Patterns for Vehicle Tracking. CoRR 2021, abs/2108.06029. Available online: http://xxx.lanl.gov/abs/2108.06029 (accessed on 7 September 2022).

| Review | Year | Evaluation Datasets |
|---|---|---|
| Ciaparrone et al. [13] | 2019 | MOT 15, 16, 17 |
| Xu et al. [14] | 2019 | MOT 15, 16 |
| Luo et al. [12] | 2022 | PETS2009-S2L1 |
| Ours | 2022 | KITTI, MOT 15, 16, 17, 20, and UA-DETRAC |

| Tracker | Appearance Cue | Motion Cue | Data Association | Mode | Occlusion Handling |

|---|---|---|---|---|---|

| ine Keuper et al. [37] | - | Optical flow | Correlation Co-Clustering | Online | Point trajectories |

| Fang et al. [38] | fc8 layer of the inception network | Relative center coordinates | Conditional Probability | Online | Track history |

| Xiang et al. [32] | VGG-16 | LSTM network | Metric Learning | Online | Track history |

| Chu et al. [39] | CNN | - | Reinforcement Learning | Online | SVM Classifier |

| Zhu et al. [40] | ECO | - | Dual Matching Attention Networks | Online | Spatial attention network |

| Zhou et al. [41] | ResNet50 | Linear Model | Siamese Networks | Online | Track history |

| Sun et al. [42] | VGG-like network | - | Affinity estimator | - | Track history |

| Peng et al. [43] | ResNet-50 + FPN | - | Prediction Network | Online | Track history |

| Wang et al. [44] | FaceNet | - | Multi-Scale TrackletNet | - | Track history |

| Mahmoudi et al. [34] | CNN | Relative mean velocity and position | Hungarian Algorithm | Online | Track history |

| Zhou et al. [35] | ResNet-50 | Kalman Filter | Hungarian Algorithm | Online | Track history |

| Lan et al. [45] | Decoder | Relative position | Unary Potential | Near-online | Track history |

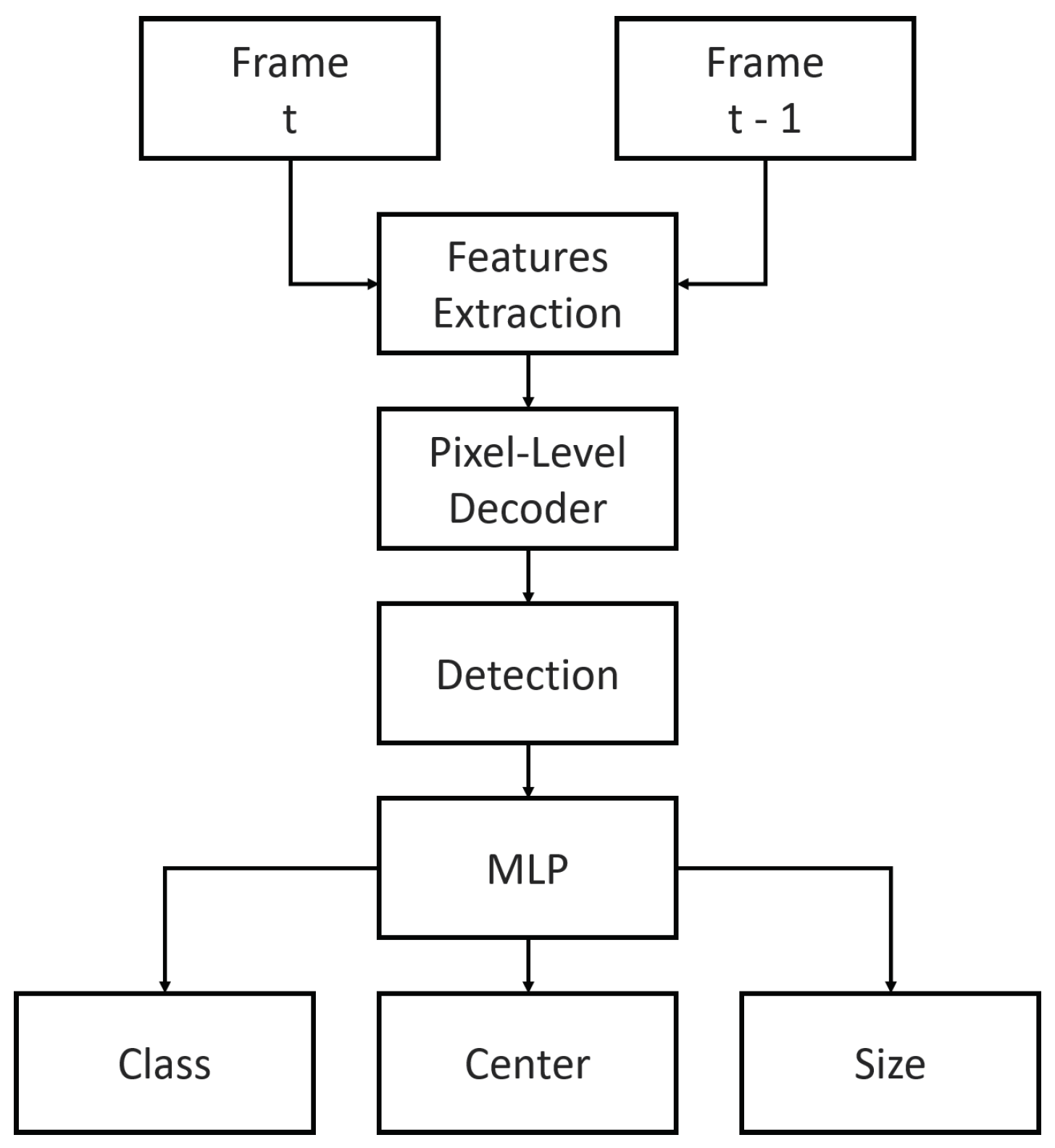

| Zhou et al. [46] | - | CenterTrack | 2D displacement prediction | Online | Track history |

| Karunasekera et al. [33] | Matching histograms | Relative position | Hungarian Algorithm | Online | Grid Structure + Track history |

| Chu et al. [47] | Siamese-style network | - | R1TA Power Iteration layer | Online | Track history |

| ine Zhao et al. [36] | CNN + PCA + Correlation Filter | - | Hungarian Algorithm | Online | APCE + IoU |

| Chen et al. [48] | Faster-RCNN (VGG16) | - | Category Classifier | Online | Track history |

| Sadeghian et al. [31] | VGG16 + LSTM network | LSTM network | RNN | Online | Track history |

| Yoon et al. [49] | Encoder | - | Decoder | Online | Track history |

| Xu et al. [50] | FairMOT | Kalman Filter | Hungarian Algorithm | Online | Track history |

| Ye et al. [51] | LDAE | Kalman Filter | Cosine Distance | Online | Track History |

| Wang et al. [52] | Faster-RCNN (ResNet50) | Kalman Filter | Faster RCNN (ResNet50) | Online | Track history |

| Yu et al. [53] | DLA-34 + GCD + GTE | - | Hungarian Algorithm | Online | Track history |

| Wang et al. [54] | PCB | Kalman Filter | RTU++ | Online | Track history |

| Gao et al. [55] | ResNet34 | - | Depth wise Cross Correlation | Online | Track history |

| Nasseri et al. [56] | - | Kalman Filter | Normalized IoU | Online | Track history |

| Zhao et al. [57] | Transformer + Pixel Decoder | Kalman Filter | Cosine Distance | Online | Track history |

| Aharon et al. [58] | FastReID’s SBS-50 | Kalman Filter | Cosine Distance | Online | Track history |

| Seidenschwarz et al. [59] | ResNet50 | Linear Model | Histogram Distance | Online | Track history |

| dai et al. [60] | CNN | - | Hungarian Algorithm | Online | Track history |

| Zhang et al. [61] | FairMOT | Kalman Filter | IoU + Hungarian Algorithm | Online | Track history |

| Hyun et al. [62] | FairMOT | - | Sparse Graph + Hungarian | Online | Track history |

| ine Chen et al. [63] | DETR Network | - | Hungarian Algorithm | Online | Track history |

| Cao et al. [64] | - | Kalman Filter + non-linear model + Smoothing | Observation Centric Recovery | Online | Track history |

| Wan et al. [65] | YOLOx | - | Self and Cross Attention Layers | Online | Track history |

| Du et al. [66] | - | NST Kalman Filter + ResNet50 | AFLink | Online | Track history |

| Zhang et al. [67] | ResNet50 | Kalman Filter | Hungarian Algorithm | Online | Track history |
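Many trackers in the table above associate detections with existing tracks by building a cost matrix from IoU (or an appearance distance) and solving the resulting assignment problem with the Hungarian algorithm [77]. The sketch below illustrates that step under simplifying assumptions: boxes are `(x1, y1, x2, y2)` tuples with positive area, the cost is `1 - IoU`, and assigned pairs whose IoU falls below a hypothetical `min_iou` gate are rejected afterwards. To stay dependency-free, it finds the optimal assignment by exhaustive search over permutations, which yields the same minimum-cost matching as the Hungarian algorithm but is only practical for a handful of boxes; real trackers would call a polynomial-time solver such as SciPy's `linear_sum_assignment`.

```python
from itertools import permutations
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2) with x2 > x1, y2 > y1


def iou(a: Box, b: Box) -> float:
    """Intersection over Union of two axis-aligned boxes."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return inter / (area_a + area_b - inter)


def associate(tracks: Sequence[Box], dets: Sequence[Box], min_iou: float = 0.3):
    """Minimum-cost (1 - IoU) one-to-one matching, found by brute force.

    Returns (matches, unmatched_tracks, unmatched_dets). Exponential in the
    number of boxes: this illustrates the assignment step only.
    """
    cost = [[1.0 - iou(t, d) for d in dets] for t in tracks]
    n, m = len(tracks), len(dets)
    best, best_pairs = float("inf"), []
    if n <= m:
        for perm in permutations(range(m), n):  # track i -> detection perm[i]
            pairs = list(enumerate(perm))
            total = sum(cost[i][j] for i, j in pairs)
            if total < best:
                best, best_pairs = total, pairs
    else:
        for perm in permutations(range(n), m):  # detection j -> track perm[j]
            pairs = [(i, j) for j, i in enumerate(perm)]
            total = sum(cost[i][j] for i, j in pairs)
            if total < best:
                best, best_pairs = total, pairs
    # Gate: drop assigned pairs whose overlap is too small to be the same object.
    matches = [(i, j) for i, j in best_pairs if 1.0 - cost[i][j] >= min_iou]
    matched_t = {i for i, _ in matches}
    matched_d = {j for _, j in matches}
    unmatched_t = [i for i in range(n) if i not in matched_t]
    unmatched_d = [j for j in range(m) if j not in matched_d]
    return matches, unmatched_t, unmatched_d


tracks = [(0, 0, 10, 10), (20, 20, 30, 30)]
dets = [(21, 21, 31, 31), (1, 1, 11, 11), (50, 50, 60, 60)]
print(associate(tracks, dets))  # ([(0, 1), (1, 0)], [], [2])
```

In a full tracking-by-detection loop, unmatched detections would typically start new tracks and unmatched tracks would be kept alive for a few frames before deletion; those policies vary between the trackers listed and are not shown here.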

| Tracker | RGB Camera | Point Cloud LIDAR | Data Fusion | Target Tracking |

|---|---|---|---|---|

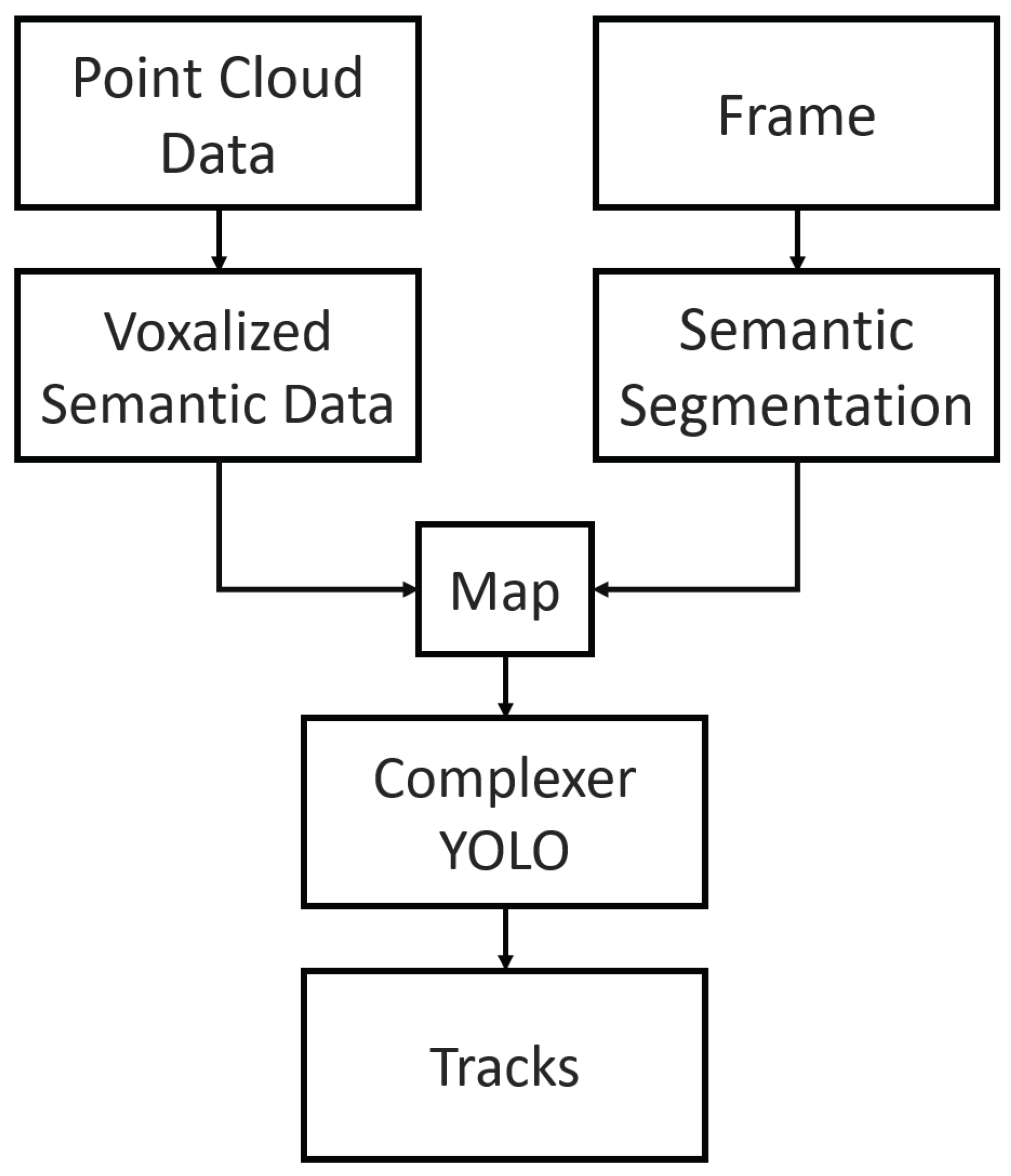

| ine Simon et al. [85] | Enet | 3D Voxel Quantization | Complex-YOLO | LMB RFS |

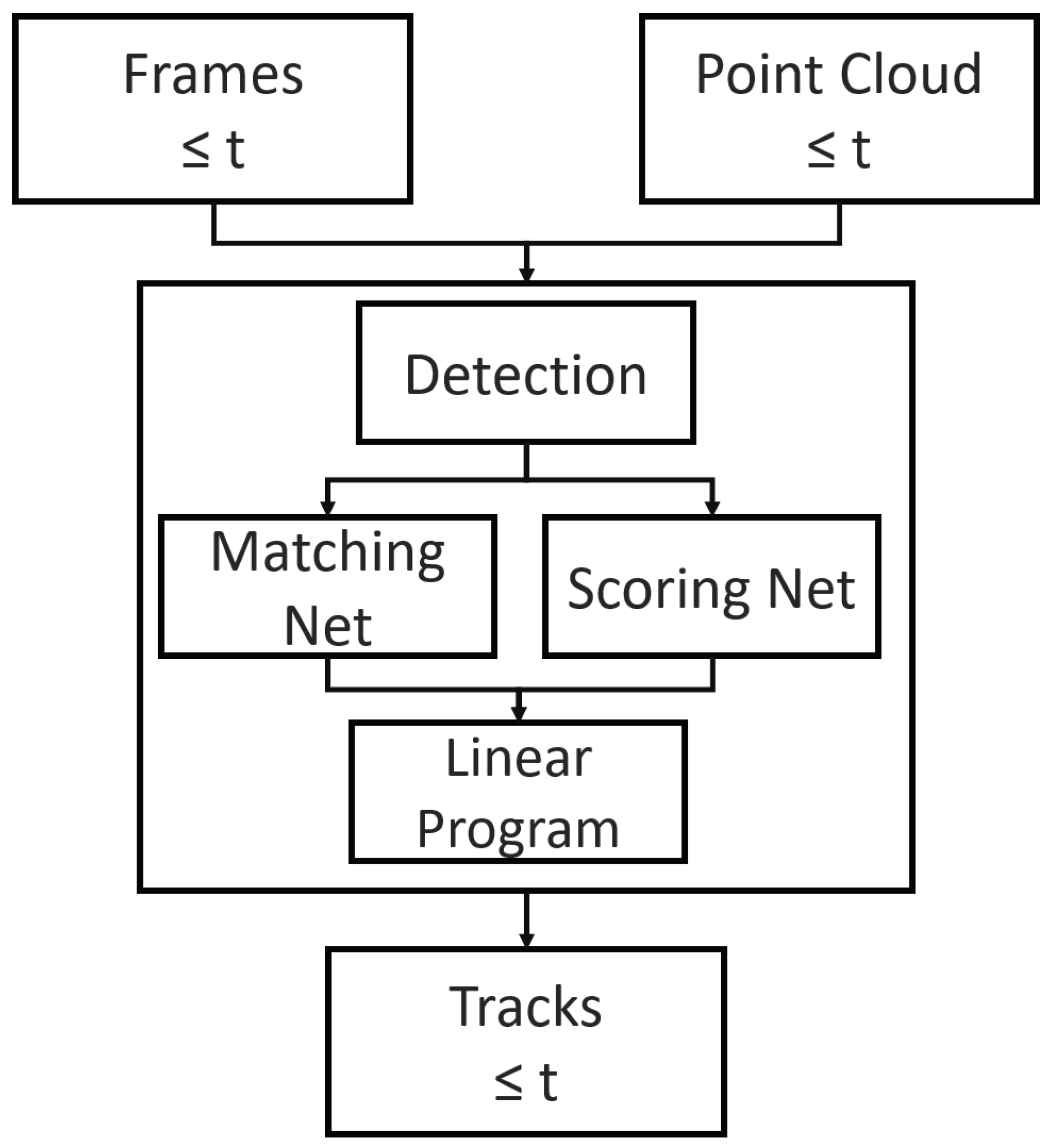

| Zhang et al. [86] | VGG-16 | PointNet | Point-wise convolution + Start and end estimator | Linear Programming |

| Frossard et al. [87] | MV3D | MV3D | MV3D | Linear Programming |

| Hu et al. [71] | Faster R-CNN | 34-layer DLA-up | Monocular 3D estimation | LSTM Network |

| Weng et al. [88] | ResNet | PointNet | Addition | Graph Neural Network |

| Sualeh et al. [89] | YOLOv3 | IMM-UKF-JPDAF | 3D projection | Munkres Association |

| Shenoi et al. [90] | Mask RCNN | F-PointNet | 3-layered fully connected network | JPDA |
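Several fusion pipelines in the table above relate the camera and the LIDAR geometrically, as in the "3D projection" entry. One common form of such fusion is to project LIDAR points into the image so that they can be associated with 2D detections. The minimal pinhole-camera sketch below assumes the points are already expressed in the camera frame (z pointing forward) and uses a hypothetical intrinsic matrix `K`; a real pipeline would first apply the dataset's LIDAR-to-camera extrinsic calibration, which is omitted here. `project_point` and `points_in_box` are illustrative names, not code from any cited tracker.

```python
from typing import List, Optional, Sequence, Tuple

Matrix3 = Sequence[Sequence[float]]
Point3 = Tuple[float, float, float]


def project_point(K: Matrix3, p_cam: Point3) -> Optional[Tuple[float, float]]:
    """Pinhole projection of a camera-frame 3D point to pixel coordinates (u, v).

    Returns None for points at or behind the camera (z <= 0).
    """
    x, y, z = p_cam
    if z <= 0:
        return None
    # Homogeneous image coordinates: [u*w, v*w, w]^T = K @ [x, y, z]^T
    uw = K[0][0] * x + K[0][1] * y + K[0][2] * z
    vw = K[1][0] * x + K[1][1] * y + K[1][2] * z
    w = K[2][0] * x + K[2][1] * y + K[2][2] * z
    return uw / w, vw / w


def points_in_box(K: Matrix3, points: Sequence[Point3],
                  box: Tuple[float, float, float, float]) -> List[Point3]:
    """Keep camera-frame points whose projection falls inside a 2D box (x1, y1, x2, y2)."""
    x1, y1, x2, y2 = box
    kept = []
    for p in points:
        uv = project_point(K, p)
        if uv is not None and x1 <= uv[0] <= x2 and y1 <= uv[1] <= y2:
            kept.append(p)
    return kept


# Hypothetical intrinsics: focal length 700 px, principal point (640, 360).
K = [[700.0, 0.0, 640.0],
     [0.0, 700.0, 360.0],
     [0.0, 0.0, 1.0]]
print(project_point(K, (1.0, 0.5, 10.0)))  # (710.0, 395.0)
```

The points kept for a given 2D box form a frustum-shaped subset of the cloud, which a 3D estimator can then process; this is the general idea behind frustum-based fusion, although each tracker in the table implements it differently.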

| Dataset | Sequence | Length (frames) | Tracks | FPS | Platform | Viewpoint | Density (targets/frame) | Weather |
|---|---|---|---|---|---|---|---|---|
| MOT 2015 | TUD-Crossing | 201 | 13 | 25 | static | horizontal | 5.5 | cloudy |
| | PETS09-S2L2 | 436 | 42 | 7 | static | high | 22.1 | cloudy |
| | ETH-Jelmoli | 440 | 45 | 14 | moving | low | 5.8 | sunny |
| | KITTI-16 | 209 | 17 | 10 | static | horizontal | 8.1 | sunny |
| MOT 2016 | MOT16-01 | 450 | 23 | 30 | static | horizontal | 14.2 | cloudy |
| | MOT16-03 | 1500 | 148 | 30 | static | high | 69.7 | night |
| | MOT16-06 | 1194 | 221 | 14 | moving | low | 9.7 | sunny |
| | MOT16-12 | 900 | 86 | 30 | moving | horizontal | 9.2 | indoor |

| Dataset | Tracker | MOTA ↑ | MOTP ↑ | IDF1 ↑ | MT ↑ | ML ↓ | FP ↓ | FN ↓ | IDS ↓ | Frag ↓ | Hz ↑ | HOTA ↑ | Protocol |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| MOT15 | Chen et al. [48] | 53.0 | 75.5 | 29.1 | 20.2 | 5159 | 22,984 | 708 | 1476 | | | | Private |
| | Chen et al. [48] | 38.5 | 72.6 | 8.7 | 37.4 | 4005 | 33,204 | 586 | 1263 | | | | Public |
| | Sadeghian et al. [31] | 37.6 | 71.7 | 15.8 | 26.8 | 7933 | 29,397 | 1026 | 2024 | 1 | | | Public |
| | Keuper et al. [37] | 35.6 | 45.1 | 23.2 | 39.3 | 10,580 | 28,508 | 457 | 969 | | | | Private |
| | Fang et al. [38] | 56.5 | 73.0 | 61.3 | 45.1 | 14.6 | 9386 | 16,921 | 428 | 1364 | 5.1 | | Public |
| | Xiang et al. [32] | 37.1 | 72.5 | 28.4 | 12.6 | 39.7 | 8305 | 29,732 | 580 | 1193 | 1.0 | | Private |
| | Chu et al. [39] | 38.9 | 70.6 | 44.5 | 16.6 | 31.5 | 7321 | 29,501 | 720 | 1440 | 0.3 | | Public |
| | Sun et al. [42] | 38.3 | 71.1 | 45.6 | 17.6 | 41.2 | 1290 | 2700 | 1648 | 1515 | 6.3 | | Public |
| | Zhou et al. [46] | 30.5 | 71.2 | 1.1 | 7.6 | 41.2 | 6534 | 35,284 | 879 | 2208 | 5.9 | | Private |
| | Dimitriou et al. [80] | 24.2 | 70.2 | 7.1 | 49.2 | 6400 | 39,659 | 529 | 1034 | | | | Private |
| MOT16 | Yoon et al. [49] | 22.5 | 70.9 | 25.9 | 6.4 | 61.9 | 7346 | 39,092 | 1159 | 1538 | 172.8 | | Public |
| | Sadeghian et al. [31] | 47.2 | 75.8 | 14.0 | 41.6 | 2681 | 92,856 | 774 | 1675 | 1.0 | | | Public |
| | Keuper et al. [37] | 47.1 | 52.3 | 20.4 | 46.9 | 6703 | 89,368 | 370 | 598 | | | | Private |
| | Fang et al. [38] | 63 | 78.8 | 39.9 | 22.1 | 13,663 | 53,248 | 482 | 1251 | | | | Public |
| | Xiang et al. [32] | 48.3 | 76.7 | 15.4 | 40.1 | 2706 | 91,047 | 543 | 0.5 | | | | Private |
| | Zhu et al. [40] | 46.1 | 73.8 | 54.8 | 17.4 | 42.7 | 7909 | 89,874 | 532 | 1616 | 0.3 | | Private |
| | Zhou et al. [41] | 64.8 | 78.6 | 73.5 | 40.6 | 22.0 | 13,470 | 49,927 | 794 | 1050 | 18.2 | | Private |
| | Chu et al. [39] | 48.8 | 75.7 | 47.2 | 15.8 | 38.1 | 5875 | 86,567 | 906 | 1116 | 0.1 | | Public |
| | Peng et al. [43] | 67.6 | 78.4 | 57.2 | 32.9 | 23.1 | 8934 | 48,305 | 1897 | 34.4 | | | Private |
| | Henschel et al. [100] | 47.8 | 47.8 | 19.1 | 38.2 | 8886 | 85,487 | 852 | | | | | Private |
| | Wang et al. [44] | 49.2 | 56.1 | 17.3 | 40.3 | 8400 | 83,702 | 882 | | | | | Public |
| | Mahmoudi et al. [34] | 65.2 | 78.4 | 32.4 | 21.3 | 6578 | 55,896 | 946 | 2283 | 11.2 | | | Public |
| | Zhou et al. [35] | 40.8 | 74.4 | 13.7 | 38.3 | 15,143 | 91,792 | 1051 | 2210 | 6.5 | | | Private |
| | Lan et al. [45] | 45.4 | 74.4 | 18.1 | 38.7 | 13,407 | 85,547 | 600 | 930 | | | | Public |
| | Xu et al. [50] | 74.4 | 73.7 | 46.1 | 15.2 | 60.2 | | | | | | | Private |
| | Ye et al. [51] | 62.8 | 79.4 | 63.1 | 34.4 | 17.2 | 12,463 | 54,648 | 701 | 983 | | | Private |
| | Wang et al. [52] | 50.4 | 47.5 | 18,370 | 69,800 | 1826 | | | | | | | Private |
| | Yu et al. [53] | 75.6 | 80.9 | 75.8 | 43.1 | 21.5 | 9786 | 34,214 | 448 | 61.7 | | | Private |
| | Dai et al. [60] | 63.8 | 70.6 | 30.3 | 30.6 | 7412 | 57,975 | 629 | 11.2 | 54.7 | | | Private |
| | Hyun et al. [62] | 76.7 | 73.1 | 49.1 | 10.7 | 10,689 | 30,428 | 1420 | | | | | Private |
| MOT17 | Keuper et al. [37] | 51.2 | 20.7 | 37.4 | 24,986 | 248,328 | 1851 | 2991 | | | | | Private |
| | Zhu et al. [40] | 48.2 | 75.9 | 55.7 | 19.3 | 38.3 | 26,218 | 263,608 | 2194 | 5378 | 11.4 | | Private |
| | Sun et al. [42] | 52.4 | 53.9 | 76.9 | 49.5 | 21.4 | 25,423 | 234,592 | 8431 | 14,797 | 6.3 | | Public |
| | Peng et al. [43] | 66.6 | 78.2 | 57.4 | 32.2 | 24.2 | 22,284 | 160,491 | 5529 | 34.4 | | | Private |
| | Henschel et al. [100] | 51.3 | 47.6 | 21.4 | 35.2 | 24,101 | 247,921 | 2648 | | | | | Private |
| | Wang et al. [44] | 51.9 | 58.0 | 23.5 | 35.5 | 37,311 | 231,658 | 2917 | | | | | Public |
| | Henschel et al. [101] | 52.6 | 50.8 | 19.7 | 35.8 | 31,572 | 232,572 | 3050 | 3792 | | | | Private |
| | Zhou et al. [46] | 61.5 | 59.6 | 26.4 | 31.9 | 14,076 | 200,672 | 2583 | | | | | Private |
| | Karunasekera et al. [33] | 46.9 | 76.1 | 16.9 | 36.3 | 4478 | 17.1 | | | | | | Private |
| | Xu et al. [50] | 73.1 | 73.0 | 45 | 16.8 | 59.7 | | | | | | | Private |
| | Ye et al. [51] | 61.7 | 79.3 | 62.0 | 32.5 | 20.1 | 32,863 | 181,035 | 1809 | 3168 | | | Private |
| | Yu et al. [53] | 73.8 | 81.0 | 74.7 | 41.7 | 23.2 | 27,999 | 118,623 | 1374 | 61.0 | | | Private |
| | Wang et al. [54] | 79.5 | 79.1 | 29,508 | 84,618 | 1302 | 2046 | 24.7 | 63.9 | | | | Private |
| | Gao et al. [55] | 70.2 | 65.5 | 43.1 | 15.4 | 30,367 | 115,986 | 3265 | 10.7 | | | | Private |
| | Nasseri et al. [56] | 80.4 | 77.7 | 28,887 | 79,329 | 2325 | 4689 | 63.3 | | | | | Private |
| | Zhao et al. [57] | 81.2 | 78.1 | 54.5 | 13.2 | 17,281 | 86,861 | 1893 | | | | | Private |
| | Aharon et al. [58] | 80.5 | 80.2 | 22,521 | 86,037 | 1212 | 4.5 | 65.0 | | | | | Private |
| | Seidenschwarz et al. [59] | 61.7 | 64.2 | 26.3 | 32.2 | 1639 | 50.9 | | | | | | Private |
| | Dai et al. [60] | 63.0 | 69.9 | 30.4 | 30.6 | 23,022 | 183,659 | 1842 | 10.8 | 54.6 | | | Private |
| | Zhang et al. [61] | 62.1 | 65.0 | 24,052 | 188,264 | 1768 | | | | | | | Public |
| | Zhang et al. [61] | 77.3 | 75.9 | 45,030 | 79,716 | 3255 | | | | | | | Private |
| | Hyun et al. [62] | 76.5 | 73.6 | 47.6 | 12.7 | 29,808 | 99,510 | 3369 | | | | | Private |
| | Chen et al. [63] | 76.5 | 72.6 | 59.7 | | | | | | | | | Private |
| | Cao et al. [64] | 78.0 | 77.5 | 15,100 | 108,000 | 1950 | 2040 | 63.2 | | | | | Private |
| | Wan et al. [65] | 67.2 | 66.5 | 38.3 | 20.5 | 17,875 | 164,032 | 2896 | | | | | Public |
| | Wan et al. [65] | 75.6 | 76.4 | 48.8 | 16.2 | 26,983 | 108,186 | 2394 | | | | | Private |
| | Du et al. [66] | 79.6 | 79.5 | 1194 | 7.1 | 64.4 | | | | | | | Private |
| | Zhang et al. [67] | 80.3 | 77.3 | 25,491 | 83,721 | 2196 | 29.6 | 63.1 | | | | | Private |
| MOT20 | Xu et al. [50] | 59.7 | 67.8 | 66.5 | 7.7 | 54.0 | | | | | | | Private |
| | Ye et al. [51] | 54.9 | 79.1 | 59.1 | 36.2 | 23.5 | 19,953 | 211,392 | 1630 | 2455 | | | Private |
| | Wang et al. [52] | 51.5 | 44.5 | 38,223 | 208,616 | 4055 | | | | | | | Private |
| | Yu et al. [53] | 67.2 | 79.2 | 70.5 | 62.2 | 8.9 | 61,134 | 104,597 | 4243 | 56.5 | | | Private |
| | Wang et al. [54] | 76.5 | 76.8 | 19,247 | 101,290 | 971 | 1190 | 15.7 | 62.8 | | | | Private |
| | Nasseri et al. [56] | 76.8 | 76.4 | 27,106 | 91,740 | 1446 | 3053 | 61.4 | | | | | Private |
| | Zhao et al. [57] | 78.1 | 76.4 | 70.5 | 7.4 | 25,413 | 86,510 | 1332 | | | | | Private |
| | Aharon et al. [58] | 77.8 | 77.5 | 24,638 | 88,863 | 1257 | 2.4 | 63.3 | | | | | Private |
| | Seidenschwarz et al. [59] | 52.7 | 55.0 | 29.1 | 26.8 | 1437 | 43.4 | | | | | | Private |
| | Dai et al. [60] | 60.1 | 66.7 | 46.5 | 17.8 | 37,657 | 165,866 | 2926 | 4.0 | 51.7 | | | Private |
| | Zhang et al. [61] | 55.6 | 65.0 | 12,297 | 216,986 | 480 | | | | | | | Public |
| | Zhang et al. [61] | 66.3 | 67.7 | 41,538 | 130,072 | 2715 | | | | | | | Private |
| | Hyun et al. [62] | 72.8 | 70.5 | 64.3 | 12.8 | 25,165 | 112,897 | 2649 | | | | | Private |
| | Chen et al. [63] | 67.1 | 59.1 | 50.4 | | | | | | | | | Private |
| | Cao et al. [64] | 75.5 | 75.9 | 18,000 | 108,000 | 913 | 1198 | 63.2 | | | | | Private |
| | Wan et al. [65] | 70.4 | 71.9 | 65.1 | 9.6 | 48,343 | 101,034 | 3739 | | | | | Private |
| | Du et al. [66] | 73.8 | 77.0 | 25,491 | 83,721 | 2196 | 1.4 | 62.6 | | | | | Private |
| | Zhang et al. [67] | 77.8 | 75.2 | 26,249 | 87,594 | 1223 | 17.5 | 61.3 | | | | | Private |
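
The MOTA and IDF1 columns above summarize the same kinds of errors that the FP, FN, and IDS columns count. As a hedged illustration, the sketch below uses the standard CLEAR MOT definition MOTA = 1 - (FN + FP + IDS) / GT, with all counts summed over every frame, and the identity-based IDF1 = 2 * IDTP / (2 * IDTP + IDFP + IDFN). The function names and the input totals are hypothetical and do not reproduce any row of the table; in particular, the ground-truth count GT is not reported there, so MOTA cannot be recomputed from the table alone.

```python
def mota(fn: int, fp: int, ids: int, num_gt: int) -> float:
    """CLEAR MOT accuracy: 1 - (FN + FP + IDS) / GT, counts summed over all frames.

    The value can be negative when the tracker makes more errors than
    there are ground-truth objects.
    """
    if num_gt <= 0:
        raise ValueError("num_gt must be positive")
    return 1.0 - (fn + fp + ids) / num_gt


def idf1(idtp: int, idfp: int, idfn: int) -> float:
    """Identity F1 score: 2 * IDTP / (2 * IDTP + IDFP + IDFN)."""
    denom = 2 * idtp + idfp + idfn
    return 2 * idtp / denom if denom else 0.0


# Hypothetical totals, for illustration only.
print(f"MOTA = {100 * mota(fn=10, fp=5, ids=5, num_gt=100):.1f}%")  # MOTA = 80.0%
print(f"IDF1 = {100 * idf1(idtp=80, idfp=20, idfn=20):.1f}%")  # IDF1 = 80.0%
```

Because MOTA weights every error type equally and ignores how long identities are preserved, two trackers with similar MOTA can differ sharply in IDF1, which is why both columns are reported.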

| Tracker | MOTA ↑ | MOTP ↑ | MT ↑ | ML ↓ | FP ↓ | FN ↓ | IDS ↓ | Frag ↓ | Type |
|---|---|---|---|---|---|---|---|---|---|
| Chu et al. [47] | 77.1 | 78.8 | 51.4 | 8.9 | 760 | 6998 | 123 | | Car |
| Simon et al. [85] | 75.7 | 78.5 | 58.0 | 5.1 | | | | | All |
| Zhang et al. [86] | 84.8 | 85.2 | 73.2 | 2.77 | 711 | 4243 | 284 | 753 | All |
| Scheidegger et al. [70] | 80.4 | 81.3 | 62.8 | 6.2 | 121 | 613 | | | Car |
| Frossard et al. [87] | 76.15 | 83.42 | 60.0 | 8.31 | 296 | 868 | | | All |
| Hu et al. [71] | 84.5 | 85.6 | 73.4 | 2.8 | 705 | 4242 | | | All |
| Weng et al. [88] | 82.2 | 84.1 | 64.9 | 6 | 142 | 416 | | | Car |
| Zhao et al. [36] | 71.3 | 81.8 | 48.3 | 5.9 | | | | | All |
| Weng et al. [102] | 86.2 | 78.43 | 0 | 15 | | | | | Car |
| Weng et al. [102] | 70.9 | | | | | | | | Pedestrian |
| Weng et al. [102] | 84.9 | | | | | | | | Cyclist |
| Luiten et al. [105] | 84.8 | 681 | 4260 | 275 | | | | | Car |
| Wang et al. [106] | 68.2 | 76.6 | 60.6 | 12.3 | 111 | 725 | | | All |
| Sualeh et al. [89] | 78.10 | 79.3 | 70.3 | 9.1 | 21 | 111 | | | Car |
| Sualeh et al. [89] | 46.1 | 67.6 | 30.3 | 38.7 | 57 | 500 | | | Pedestrian |
| Sualeh et al. [89] | 70.9 | 77.6 | 71.4 | 14.3 | 3 | 24 | | | Cyclist |
| Shenoi et al. [90] | 85.7 | 85.5 | 98 | | | | | | Car |
| Shenoi et al. [90] | 46.0 | 72.6 | 395 | | | | | | Pedestrian |
| Zhou et al. [46] | 89.4 | 85.1 | 82.3 | 2.3 | 116 | 744 | | | All |
| Karunasekera et al. [33] | 85.0 | 85.5 | 74.3 | 2.8 | 301 | | | | All |
| Zhao et al. [57] | 91.2 | 86.5 | 2.3 | | | | | | Car |
| Zhao et al. [57] | 67.7 | 49.1 | 14.5 | | | | | | Pedestrian |
| Aharon et al. [58] | 90.3 | 250 | 280 | | | | | | Car |
| Aharon et al. [58] | 65.1 | 204 | 609 | | | | | | Pedestrian |
| Wang et al. [107] | 90.4 | 85.0 | 84.6 | 7.38 | 2322 | 962 | | | Car |
| Wang et al. [107] | 52.2 | 64.5 | 35.4 | 25.4 | 1112 | 2560 | | | Pedestrian |
| Sun et al. [108] | 86.9 | 85.7 | 83.1 | 2.9 | 271 | 254 | | | Car |

| Dataset | Tracker | PR-MOTA ↑ | PR-MOTP ↑ | PR-MT ↑ | PR-ML ↓ | PR-FP ↓ | PR-FN ↓ | PR-IDS ↓ | PR-FRAG ↓ |
|---|---|---|---|---|---|---|---|---|---|
| UA-DETRAC | Bochinski et al. [68] | 30.7 | 37 | 32 | 22.6 | 18,046 | 179,191 | 363 | 1123 |
| | Kutschbach et al. [72] | 14.5 | 36 | 14 | 18.1 | 38,597 | 174,043 | 799 | 1607 |
| | Hou et al. [69] | 30.3 | 36.3 | 30.2 | 21.0 | 20,263 | 179,317 | 389 | 1260 |
| | Sun et al. [42] | 20.2 | 26.3 | 14.5 | 18.1 | 9747 | 135,978 | | |
| | Chu et al. [47] | 19.8 | 36.7 | 17.1 | 18.2 | 14,989 | 164,433 | 617 | |
| | Gloudemans et al. [103] | 46.4 | 69.5 | 41.1 | 16.3 | | | | |
| | Sun et al. [104] | 12.2 | 10.8 | 14.9 | 36,355 | 192,289 | 228 | 674 | |
| | Messoussi et al. [109] | 31.2 | 50.9 | 28.1 | 18.5 | 6036 | 170,700 | 252 | |
| | Wang et al. [110] | 22.5 | 35.2 | 15.5 | 10.1 | 1563 | 3186 | | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Gad, A.; Basmaji, T.; Yaghi, M.; Alheeh, H.; Alkhedher, M.; Ghazal, M. Multiple Object Tracking in Robotic Applications: Trends and Challenges. Appl. Sci. 2022, 12, 9408. https://doi.org/10.3390/app12199408
