1. Introduction

Misinformation is low-cost, wide-spreading, and large-scale replicable on social media, which has significantly influenced democracy, justice, and public trust [

1]. Especially with the support of new multimedia technology, such as short videos on social media, misinformation spread has transcended countries and languages. Research indicates that the proportion of false claims is only approximately 1% of total news dissemination on all platforms, while the percentage of false claims is almost more than 6% of total tweets on social media [

2]. Human fact-checking is of high-quality but is time-consuming, it refers to specifically confronting false information with a deceptive purpose, such as offensive news, political deception, and dramatic online rumors around the world. The absence of professional auditors, particularly who understand minority languages, further increases the challenge of multilingual fact-checking. Thus, multilingual automated fact-checking has become urgent to research, including the release of multilingual fact-checking datasets and inventing computational approaches.

Multilingual fact-checking is a special fact-checking task that aims to leverage related evidence from a multilingual text corpus to verify a multilingual textual claim.

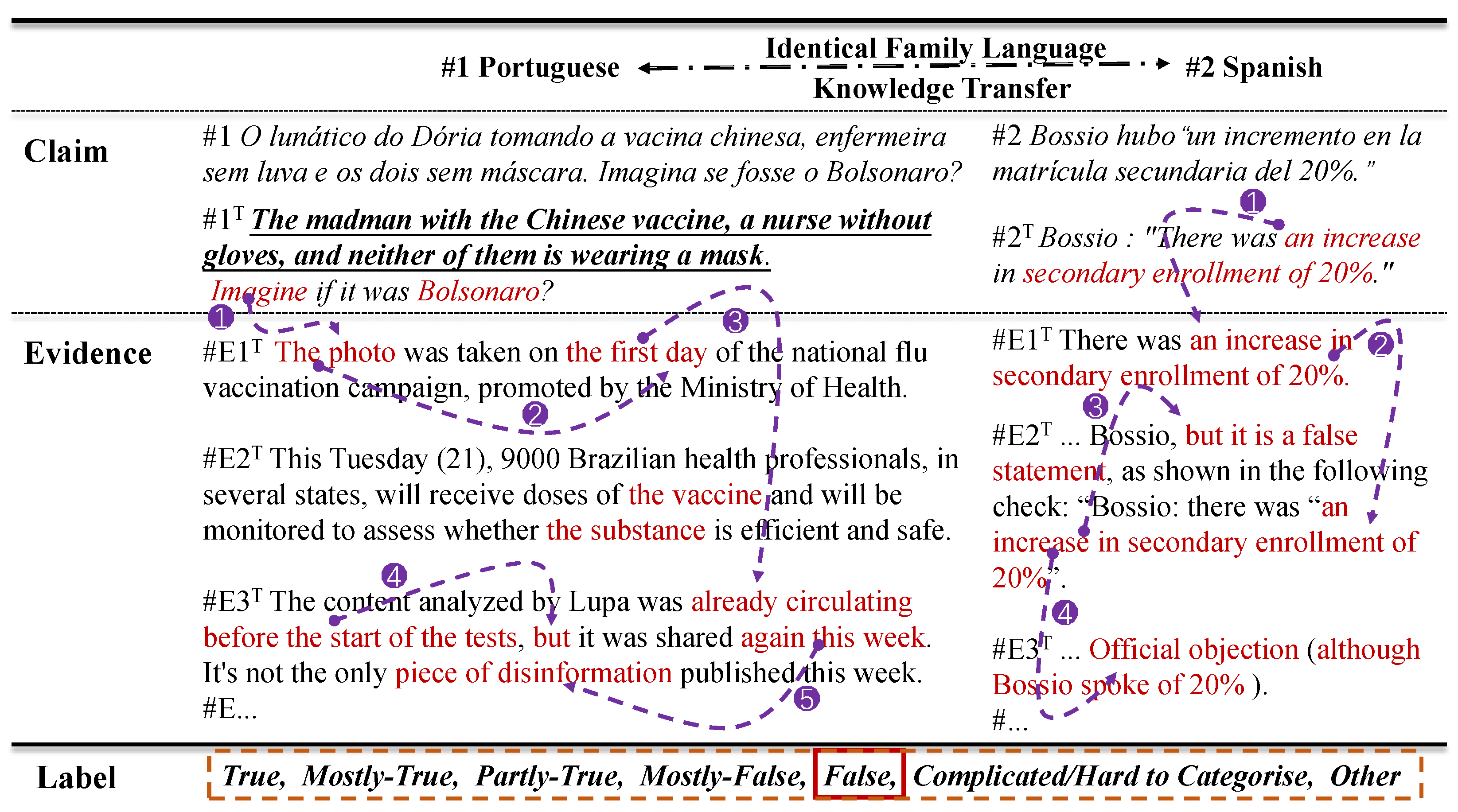

Figure 1 for example, shows two such samples from Portuguese and German. In the special task, how to effectively retrieve, align, integrate, infer, and verify is the major challenge and still remains an open question, especially leveraging resources from different languages in multilingual scenes.

In previous studies, a possible approach for multilingual verification was to utilize translation systems (e.g., Google Translator) for news verification based on multilingual evidence, which hypothesized that fake news tended to be less widespread across languages as compared to authoritative news [

3]. However, the threshold value of the modeling pipeline needed to be set manually. In consequence, the end-to-end frameworks based on multilingual fine-tuning transformers were designed to encode multilingual claims and evidence for fact-checking. Schwarz, S et al. [

4] proposed the EMET framework based on a convolutional neural network to classify the reliability of messages posted on social media platforms. Shahi and Nandini [

5] built a BERT classifier to detect the false class and other fact-check articles. Roy, A. and Ekbal, A. [

6] next proposed an automated multi-modal content approach based on the multilingual fact verification system, which automated the task of fact-verifying websites, and provided evidence for each judgment. Nevertheless, they merely utilized simple evidence combination methods such as concatenating the evidence or just dealing with each evidence–claim pair.

Going further, in addition to considering the use of pre-trained models to achieve multilingual semantics, another promising direction is to distill knowledge from high-resource to low-resource languages. Kazemi, A. et al. [

3] adopted the teacher–student framework to train a multilingual embedding model based on the XLM-R model, in which a high-quality teacher model is adopted to promote the learning ability of a student model. With the expansion of language categories, a potentially troublesome problem in fine-grained multilingual fact-checking in this way is that the teacher model has maybe never seen the statements expressed in a minority language. Thus, the intensive research of few-shot or zero-shot multilingual fact-checking has been subject to considerable discussion. Martín, A. et al. [

7] proposed FaceTeR-Check architecture for semi-automated fact-checking, which enables several modules to evaluate semantic similarity to calculate natural language inference and to retrieve information from online social networks. The multilingual fact-checking tool could verify new claims, extract related evidence, and track the evolution. Lee, N. et al. [

8] leveraged an evidence-conditioned perplexity score from the masked language model for claim verification, which is an unsupervised method. In the fine-gained scenario, Gupta, A. and Srikumar, V. [

9] determined the veracity of a claim by adopting an attention-based evidence aggregator that used a multilingual transformer-based model, and concatenated the additional metadata of a claim to augment the model.

The unified models based on pre-training and fine-tuning are the mainstream fact-checking paradigm, for example, designing the evidence-conditioned perplexity function or evidence aggregator based on the attention mechanism for fact verification, which is mainly trained for learning representation patterns and joint probability distributions of factual claims and pieces of evidence. The methods indicated a feasible inference idea; the core of this is that with deep semantic understanding from multilingual pre-training models, a downstream classifier can be progressively fine-tuned based on a training dataset in a certain scale. In consequence, sufficient evidence of aggregation and metadata supplementation for fact verification contributes to strengthening the authenticity inference calculation. However, in the scenario of few-shot or zero-shot samples across domains and languages, the learning perception ability of the previous models is extremely limited. The main reasons are as follows: On the one hand, due to the lack of large-scale data driving, the model cannot be quickly and finely fine-tuned to the downstream tasks, and the potential of pre-training models is desired to be further stimulated. On the other hand, the existing coarse-grained evidence aggregation mechanism, which requires more fine-grained evidence chains, cannot provide acceptable evidence. Last, but not the least, the previous models have not considered leveraging the consistency of the same language family in the fact statement representation paradigm to deal with the correlation learning mechanism of the lifting model, thus, strengthening the transfer learning ability of the model in few-/zero-shot scenarios.

To deal with the above issues, we propose the Joint Prompt and Evidence Inference Network (henceforth, PEINet) framework for multilingual fact-checking in the zero-shot scene, which is inspired by the human cognitive paradigm. When the human brain judges whether a fact is true or false, the first decision-making consciousness is to take aim at the claim or title. If there is an excessively exaggerated or obviously outrageous expression in the statement, we can directly determine that it is false. Of course, for an obscure and complicated statement, next it is necessary to retrieve relevant evidence and extract reasonable clues, so as to generate final judgment. Furthermore, in the face of fact-checking in terra incognita, relevance cognition plays an important role in the brain learning mechanism. In general, claims in a similar-expression paradigm may have consistent authenticity, and similar fact statements described in the same language family may share the like expression paradigms.

In order to better clarify the mechanism, we take a concrete example, as shown in

Figure 1. Through comprehensive comparison and observation of examples #1 and #2, we discover that the claim in #1 obviously contains exaggerated and deliberate contents that are highlighted with roughening and underlining in the figure, which evidently is false from logical empiricism. Furthermore, the claim in #2 is relatively objective. Next, as shown in the purple dotted lines and order numbers in circles in the figure, the fine-grained clue chains of evidence in red font explored from the claim and retrieved evidence can verify that the claim is clearly false. Further consideration is given to the fact that Portuguese and Spanish belong to the common language family ‘Indo-Aryan: Romance’. By strengthening the interaction of different claims in the same language family, the model can transfer knowledge between the claims. In other words, even if the model only is trained with the Portuguese claims, the Spanish claims in the test can be verified by the model. Specifically, in PEINet, we first design a language family-shared embedding layer to strengthen the model for the inter-semantic understanding between different claims belonging to the same language family. Then, we design a co-interactive cognitive inference layer, in which a prompt-based claim verification module is responsible for directly judging the claim, and the fine-grained evidence aggregating module takes the chance of inferring the deep-seated evidence clues. Finally, to achieve a unified cognitive architecture, the multi-task learning mechanism is adopted for building the affine classification layer. The PEINet model, as a novel algorithm, can be adapted to the multilingual fact-checking social media platform to automatically identify and filter misinformation, fake news, political deception, online rumors, etc. For the information published by the social media platforms, the system automatically submits it to the algorithm for review. Then, the algorithm can verify the claim and choose whether to block it or strictly review it manually according to the classification label. In particular, when a piece of misinformation is refuted, and is deliberately repackaged in another language, the algorithm can replace manual audits efficiently and greatly relieve the pressure on auditors.

Experiments on the challenging real-world datasets X-Fact demonstrate that our model outperforms several state-of-the-art scores in in-domain, out-of-domain, and zero-shot tests in terms of the macro-F1 evaluation metric. Moreover, the comprehensive experimental analysis confirms the validity and the reasonableness of our model. To sum up, our main contributions are as follows:

We propose PEINet, a novel framework inspired by the human cognitive decision paradigm to verify multilingual claims in out-of-domain and zero-shot scenarios.

We explore that the shared encoding mechanism via language family metadata augmentation strengthens authenticity-dependent learning between cross-language claims. Moreover, we investigate the multilingual fact-checking model that integrates prompt-based judgment and further fine-grained evidence aggregation inferences for the final claim verification based on the multi-task learning mechanism.

We perform exhaustive empirical evaluation and ablation studies to demonstrate our model’s effectiveness, especially in zero-shot scenarios, and further discuss the potential optimizable issues.

3. Methods

In this section, we introduce our PEINet framework for zero-shot multilingual fact-checking, which is the main contribution of this paper.

Figure 2 shows the framework, which mainly introduces three components: (1) An LF-aware shared encoding layer (details in

Section 3.2), which enhances authenticity-dependent learning between multilingual samples by encoding the language family metadata. (2) A co-interactive cognitive inference layer (details in

Section 3.3), in which the prompt-based claim verification module verifies whether the claim is true or false depending on the claim contents. Furthermore, the fine-grained evidence aggregating module obtains recursively valuable evidence to verify the claim again. (3) A unified affine classification layer (details in

Section 3.4), which leverages the multi-task learning mechanism to achieve the final classification uniformly.

3.1. Problem Formulation

First, we describe the mathematical modeling of the multilingual fact-checking problem. For a dataset , in which and , there are n samples and m labels. Giving, arbitrarily, an input , which includes a claim , the corresponding metadata , and N pieces of retrieved evidence , the model output is the truthfulness label of the claim . We aim to train the model that can fit a more accurate function so that the predict label is more probable to be consistent with the true label .

3.2. LF-Aware Shared Encoding Layer

We employ mBERT [

38] as the shared encoder by extracting the [CLS] embedding token as the representation, where the [CLS] is a special classification token and the last hidden state of mBERT corresponding to this token (h[CLS]).

Specifically, the claim and retrieved evidences are, respectively, fed into mBERT for obtaining the corresponding semantic embedding vectors. Notably, the feature of language family (LF) is extracted beforehand from the metadata for augmenting the cross-language comprehension ability. The form of the LF feature is a string template modified by special characters [unused*], e.g., m = “[unused1], ar, [unused1], [unused2], Afro-Asiatic, [unused2].”, where the [unused1] and [unused2] are also the special tokens for fixed mark. Considering there are abnormal characters in the claims and evidence, we also replace these invalid with a null character. Subsequently, the new input is contacted as follows:

Next, the input is re-encoded as follows:

3.3. Co-Interactive Cognitive Inference Layer

3.3.1. Prompt-Based Claim Verification Module

As we mentioned before, the prompt is well developed for few-/zero-shot classification tasks such as multi-class fact verification and other natural language inferences [

39]. Considering that most auto-generating prompts cannot achieve a comparable performance, we design task-specific manual prompts for fact-checking [

40,

41]. In this section, we introduce the details of the prompt-based claim verification module, and illustrate how to adapt the prompt-tuning for fact-checking inferences.

As a cloze-style task for tuning PLMs, there are template and a set of label words formally. For each input instance x, the template is designed to map x to the prompt input . Notably, the template defines whether the position of input tokens is needed to be adjusted and whether to pad other additional tokens.

Since the task contains seven classification labels, as described in detail in

Section 4.1, at least two parameters are required to be placed into the template for filling the label words. Specifically, the template is designed as:

where the

X is the content strings.

Accordingly, the template maps the input of claim sequence

to

, which can be formalized as Equation (

4):

where the symbol + only represents string concatenation.

Then, we use the mBERT to encode all tokens of the input sequence into corresponding embedding vectors

. Next, by inputting

into mBERT, the embedding vectors

in hidden layers are generated based on the feed-forward calculation. Given

, we calculate the probability that the token

v is the cloze answer of the masked position, as shown in Equation (

5):

where

is the embedding vector of the token

v in the mBERT.

Further, we bridge the mapping relationship between the set of labels and the set of words, which is expressed as the affine function in Equation (

6):

As mentioned above, the prompt template contains multiple [MASK] tokens, and the label probability is, thus, considered with all masked positions to make final predictions. The formula is Equation (

7):

where

n is the number of masked positions in

, and

is to map the class

y to the set of label words

for the

j-th masked position

. The

corresponding to the maximum probability value is the prediction label.

Furthermore, the optimization objective in the prompt-based module is to minimize the loss function in Equation (

8):

3.3.2. Fine-Grained Evidence Aggregating Module

For the mentioned input, we feed the claim

and the retrieved evidence

into mBERT to achieve the corresponding representation. This is shown as follows:

Note that the embedding vectors of the claim and evidences are generated from the final hidden state of the special token [CLS]. Next, we extract the potential graph representations of the evidence nodes in the fine-gained evidence aggregating network, where information passing is guided by the noteworthy elements in the claim.

Semi-supervised graph networks have been used in the past to capture hidden information for a classification task [

42,

43]. Inspired by the previous studies, we propose a Recursive Graph Attention Network (RGAT) for evidence aggregating and reasoning.

In order to bridge information gaps between different pieces of evidence, a fully connected graph neural network is firstly constructed, where each network node represents one piece of evidence. In addition, every node is on a self-loop, and mainly considers the mining of fine-grained information and the flowing interaction of information from itself. Then, the three-layer recurrent network that deductively generates connected evidence chains is formulated for evidence reasoning. Specifically, the hidden states of nodes at layer

l in the network are obtained as learning feature representations, as shown in Equation (

10):

where

and

F are the number of node features. The initial hidden state of each evidence node

is initialized by the evidence presentation:

.

As far as the correlation coefficients between a node

i and its neighbor

is concerned, we compute the coefficients as Equation (

11):

where

denotes the neighbor set of node

i,

and

are the weight matrix, and the operation

indicates the concatenation of different variables.

Next, the coefficients

are normalized with the softmax function, as shown in Equation (

12):

Finally, through the recursive interactive fusion of evidence nodes layer by layer, the feature representation vector for node

i at layer

l is estimated as Equation (

13):

Following this, the final hidden states of evidence nodes from stacking

T layers are extracted as the component embedding representation of the evidence reasoning, which is seen in Equation (

14):

As the next important step, we construct the evidence attention aggregator to gather the fine-grained information from the final embedding representation of the evidence reasoning. Specifically, what information is aggregated preferentially depends on how important it is to the claim, which is measured based on the multi-head attention mechanism [

44], as shown in Equation (

15):

In Equation (

15), the

is calculated by Equation (

16):

where distinct parameter matrices

,

are learned for each head

and the extra parameter matrix

projects the concatenation of the

head outputs (each in

) to the output space

. In the multi-head setting, we call

the dimension of each head and

the total dimension of the query/key space.

Once the final state

is obtained, we employ the multilayer perceptron to get the prediction

in Equation (

17):

where

and

are parameters, and

C is the number of prediction labels.

Next, the cross-entropy is used as a loss function for optimizing the RGAT model, as shown in Equation (

18):

3.4. Unified Affine Classification Layer

To combine the prompt-based claim verification module and the fine-grained evidence aggregation module uniformly for generating the final decision, we design the unified affine classification layer, which chiefly integrates the classification labels from the different module’s output. In other words, the last layer aims to strengthen the joint learning between multi-tasks for final efficiency and accurate predictions.

On the whole, the multi-task learning target is considered as the model optimizing problem concerning multiple objectives. Specifically, the naive approach to combining multi-objective losses would be to perform a weighted linear sum of the above losses for each module task, as shown in Equation (

19):

where the

and

are, respectively, the abstract expression of the loss function

and

,

,

, and

.

However, there are a series of issues employing the naive calculation method. Namely, model performance is extremely sensitive to weight values. These weight hyper-parameters are time-consuming to tune for the best performance, and are increasingly difficult with large models. Thus, we derive the unified loss function based on the previous work that proposed a principled approach to multi-task deep learning [

45]—to learn optimal task weightings using ideas from probabilistic modeling based on the epistemic uncertainty and aleatoric uncertainty. The core calculation formula is shown in Equation (

20):

where

and

are the relative weight of the losses

and

adaptively, and the

and

are, respectively, the abstract expression of the weight parameters

and

. On the other hand, in order to facilitate the modeling calculation, the

and

are, respectively, the approximate expression of the weight parameters

and

.

More concretely, the final optimization objective can be seen as minimizing the loss function with respect to and learning the weight of in the losses and . As indicates that the noise parameter for the label increases, the weight of decreases. In other words, as the noise decreases, the weight of the respective objective increases. This last objective can be seen as learning the relative weights of the losses for each output. Large scale values will decrease the contribution of , whereas small will increase its contribution, and similarly for of .

3.5. Procedure

In this section, we focus on revealing the model construction and optimization of Algorithm 1 in the training process, which not only includes the input and output data, but also the pre-processing and running process.

| Algorithm 1: The process of training PEINet. |

![Applsci 12 09688 i001]() |

For better intuitive understanding, the professional function namespace of the Pytorch framework is employed in the pseudocode. As a whole, the inference forecasting of the development and test process can be executed directly based on the model trained by the algorithm.

4. Experiments and Results

4.1. Datasets and Evaluation Metrics

We conduct our experiments on the large-scale benchmark dataset X-FACT, which includes 31,189 short claims labeled for veracity by expert fact-checkers and covers 25 typically diverse languages across 12 language families. According to the different rating scales for categorization, the label set is divided into seven labels by fact-checkers: True, Mostly True, Partly True, Mostly False, False, Complicated/Hard to Categorise, and Other.

Table 1 shows the statistics of the dataset. Following the evaluation criteria of previous studies, we likewise report average accuracy, macro F1 scores, and standard deviations in the experience with different random seeds.

The dataset, shown in the

Appendix A.1, provides a challenging multilingual fact-checking evaluation benchmark that measures in-domain classification, out-of-domain generalization, and zero-shot capabilities of the multilingual models. More specifically, the Test1 set contains fact-checks from the same languages and sources as the training set. Second, the out-of-domain test set (Test2) consists of claims from the same languages as the training set but are from a different fact-checking website source. The third test set (Test3) is the zero-shot set, which seeks to measure the cross-lingual transfer abilities of fact-checking systems. Test3 accommodates claims from the new language not contained in the training set. In general, the dataset is specially created to evaluate the various performances of the models that not only solve the difficulty of these multilingual fact-checking sets (Test1), but also fit both source-specific patterns (Test2) and language-specific patterns (Test3).

4.2. Setting

The core details of the model’s relevant parameters are provided for better evaluation and reproducibility.

Firstly, the hyper-parameter settings of the model architecture are as follows: In the shared encoding layer, the embedding layer of 50 dimensions is randomly initialized to encode the language family feature. For claim-evidence input pairs, all retrieved evidences related to the claim are inputted into the model, totaling five pieces. To maintain uniform input length, the input maximum sequence length is 360 due to constraints on the GPUs memory, where parts longer than the maximum length are truncated, and the shorter sequences are zero-padded. Next, we used the mBART-base model for all of our experiments. This model has 12 layers each with a hidden size of 768, the number of attention heads equal to 12, and the value for dropout in the hidden layer is 0.2. The total number of parameters in this model is 125 million. In the recursive graph attention network, we attempt to stack 0–3 recursive layers and analyze the effect of different layer numbers. Furthermore, the number of multi-attention heads is set to 0, 8, and 16, respectively, for evaluating the evidence aggregating performance. In the classification layer, the dynamic weights are randomly initialized and gradually optimized in iteration. Furthermore, the class label number is 7, which is consistent with the number of task categories.

Next, regarding the training of the model, we optimize the model via the AdamW optimizer for the best performance, in which the initial learning rate is 1.95 × 10, and the learning rate warm-up steps are set to 10% of the total training steps. In addition, the maximum batch size is 8 and the train epoch number is 10. Once each epoch training is completed, we evaluate the model performance and save the model once. All our models were run with four random seeds (seed = [1; 2; 3; 4]).

Lastly, for the experimental environment, all algorithm codes are developed based on the PyTorch framework, which is run on the NVIDIA GeForce RTX 3090 machine (24 GB VRAM) with a GPU accelerator. Furthermore, the average training time of our model is about 160 min. The experiments can be sped up with distributed data-parallel training via multiple GPUs on multiple computing machines.

4.3. Overall Performance

We compare our methods with the following several models, including top-performing models reported with the X-FACT dataset [

9], and homogeneous models retrieved on the same dataset [

42].

Majority. The model directly generates classification results based on the majority label of the dataset distribution, i.e., Mostly False.

Claim-Only. The model is only inputted with the textual claim, basically treating the task as a multi-class problem.

Attn-EA. The procedure is emulated by developing an attention-based evidence aggregation model that operates on evidence documents retrieved from web searches with the claim using Google.

Graph-EA. The framework for claim verification utilizes the BERT sentence encoder, the evidence reasoning network, and an evidence aggregator to encode, propagate, and aggregate information from multiple pieces of evidence.

Common and Different Aspects. In addition to the Majority model, which is calculated based on statistical methods, other models are designed based on the common architecture of a deep neural network, which leverages the mbert model to encode the claims and pieces of evidence. The core distinction between other models is the downstream network structure of the basic semantic encoder. Furthermore, the different network modules can be modeled to extract different features, which determines the final performance superiority of multilingual claim verification.

Metadata Augmenting. As far as metadata augmenting is concerned, to strengthen the model performance, the above models mainly adopt the scheme whereby the input is increased with additional metadata, and is represented as a sequence of the key-value form. In detail, the metadata includes the language, website name, claimant, claim date, and review date. If a certain field is not available for a claim, the value is set by none. Unlike the previous scheme, we only embed the metadata of language and language family for augmenting performances.

Table 2 reports the performance of our model and other models under the same evaluation conditions. Compared with the above models, PEINet has achieved state-of-the-art scores. Firstly, by vertically comparing model performance in training, validation, and testing, it can be seen that our model has no over-fitting. Next, by comparing the performance of the model on Test1 horizontally, the PEINet model outperforms the baseline models in the in-domain test, which demonstrates that the model has superior performance in multilingual automated fact-checking. Furthermore, our model significantly also outperforms previous methods not only on the Test2 set, but also on the Test3 set in further analysis, which means that our model has a better performance in both out-of-domain generalization, and zero-shot capabilities. The overall performance advantage of the model suggests that our motivation of the human fact-checking cognitive paradigm is valid and reasonable. In a separate analysis of the degree of performance superiority in three different test sets, our model performs best in Test3, which further demonstrates the advantages of the model in few-/zero-shot scenarios.

4.4. Ablation Study

To investigate the effect of each component in PEINet, a concise summary of ablation analysis by removing different modules of our model is shown in

Table 3. We employ ‘-UnifiedLossLayer’, ‘-FEAModule’, ‘-PCVModule’, and ‘-LFEncoderLayer’, which, respectively, represents: unified multi-task loss function, fine-grained evidence aggregating module, prompt-based claim verification module, and LF-shared encoding module. Individually, ‘-UnifiedLossLayer’ represents that the multi-task learning mechanism is not adopted, and both

and

are 0.5. Next, ‘-FEAModule’ means that the recursive graph attention network is replaced by the three-layer fully connected neural network. Then, ‘-PCVModule’ indicates that the prompt learning template is not employed, and the corresponding module is remodeled based on the claim embedding the special token [CLS]. Last, ‘-LFEncoder’ represents that the language family feature is not encoded, and only other mete-data features are adopted to strengthen the model.

The empirical results show that first, the removal of different components suffers different degrees of performance degradation. Second, the performance of the model without the fine-grained evidence aggregating module is subjected to a large reduction, which indicates that it is necessary to leverage the recursive graph attention between the claims and pieces of evidence to verify the claim, thus, contributing to boosting the final performance. Finally, without language family embedding, the model performance uniformly declined to a certain extent in each test scenario, which fully embodies the key role of language family metadata augmentation.

4.5. Effectiveness of the Prompt Template

To verify the impact of different designed prompts, we manually design language-special and sequence-special contrast experiments. Therein, for language-special setting, except taking English as the prompt language, the Portuguese and Indonesian language are selected as representative languages, which belong to the top proportions of claim language. Furthermore, to evaluate whether there is an effective contribution of the language-special prompt, we also adopt the null prompt method, which simply concatenates inputs and the [MASK] tokens. As

Table 4 shows, language-special prompts in correspondence with the source language of claim do not perform best in our experiments, and the null prompt also limits the performance. On the contrary, we observe that the language of prompts close to the source language of the claim label can effectively boost the model performance. The main reason is that on one head, the English prompt templates are better in line with the claim label. On the other head, errors may exist in the translated verbalizer. For a sequence-special setting, the experimental results suggest that template T1 in typical narration outperforms template T2 in a flashback.

4.6. Effectiveness of RGAT Parameters Setting

Next, we focus on the effect of the different parameters in the RGAT module. In our observation, from the results in

Table 5, the 8-head attention aggregator model with two recursive layers achieves the best results, which indicates that claims from the obscure and complicated subset require multi-step evidence chain propagation.

We further analyze the results from different angles. From the perspective of model layer numbers, the performance of a model with three recursive layers is inferior to that of a model with two recursive layers, which suggests that the model is overfitting with the increase in layers. On the other head, when analyzing the parameters of the attention heads, the results show that the performance of the 16-head attention aggregators is overall lower than that of the 8-head models, which suggests that the multi-head attention mechanism recreates the role of information aggregation, and the redundancy errors are gradually introduced with the increase in multi-attention heads. Regarding the whole analysis, the results demonstrate the ability of our model to verify the claims with recursive excavation and aggregation of evidence.

4.7. Case Study and Error Analysis

In this section, we focus on the effect of error propagating based on experimental findings. We select representative samples from the train, dev, and test sets, which may reflect the prominent problem that PEINet confronts.

Through our analysis findings of the typical error cases, the retrieved evidence—a set of homogeneous snippets to classify the claim—may be the primary factor leading to the misjudgment of the model, which is exacerbated by space-limited abbreviation writing. For example, in

Figure 3, to verify the claim “Wandering outside the home? Fire shoot in Malaysia with Drone.”, the pieces of evidence retrieved are almost indistinguishable–-the #Evi1 and #Evi5 are almost similar, and the #Evi2, #Evi3, and #Evi4 are same. More critically, the evidence snippets are like replications of the claim, which cannot provide sufficient information to classify the claim. This result may be explained by the fact that the new title of partial search snippets is mainly extracted as evidence, whereby information is insufficient. Next, through the evidence source link provided in the dataset, we further find the truth evidence from the full text of the evidence web pages. As shown in

Figure 3, the core part of the evidence that determines the veracity of the claim is briefly listed in the table. A comparison of the retrieval evidence and truth evidence confirms that the claim verification component can not make the right inference with insufficient evidence.

To explore the effect of this issue, we test our models on an evidence-enhanced dev set, in which we add the multi-hop ground truth evidence chain using the full text of the evidence pages. The experimental results show that augmenting evidence significantly improves model performance, which further is in agreement with the idea that fine-grained evidence aggregating and reasoning can contribute to verifying the claim. In addition, the error case analysis in this study also enhances an understanding that leveraging prompt learning to directly verify the claim possibly is a better idea for fact-checking the retrieval evidence to verify whether the claim is true or false or is similar to the claim. Hypothetically, this means it is worthwhile to design a better evidence retrieval pipeline, and the models are able to ingest large documents (web pages); thus, their performance increase could have been much more significant, which remains to be our focus in future research.

5. Discussion

In this section, we will discuss results by answering a series of research questions.

Do the different pre-trained multilingual models make wide differences? We emphatically analyze the performance of different pre-trained multilingual models employed in the framework.

Table 6 shows that the model based on mBERT outperforms other similar models. With comparative analysis, the XLM-based model’s performance is close to that of the mBERT-based model, and the DistilBERT-based model’s overall performance is relatively poor, which is limited to performing direct zero-shot tasks due to the learning mechanism. The results suggest that similar large-scale pre-trained multilingual models can almost achieve the equivalent semantic understanding of the same claims’ input, so the core of fact-checking is still an evidence-aware inference.

What are the values of the learned relative weights of the losses in multi-task learning? We evaluate the performance of the PEINet model with different loss function calculation frameworks, where different methods correspond to different task weights. In the single-task learning framework, the task weights are set in advance. Under the multi-task learning framework, the relative weights of the losses are automatically generated through iterative training. The results of the contrastive analysis are presented in

Table 7, which clearly illustrates the benefit of multi-task learning. Interestingly, what stands out in the table is that the task weight of the fine-grained evidence aggregation loss is higher than that of the prompt loss, which suggests that the verification of most multilingual claims would depend on the evidence chain excavation.

Can we improve performance with data augmentation of English corpus? A performance comparison is conducted by augmenting the dataset with 12,311 English claims and retrieved evidences from PolitiFact corpus. Average Micro F1 scores (and standard deviations) of the models are reported over four random runs in

Table 8. The result that English data augmentation degrades the models’ performances is somewhat counter-intuitive. A possible cause proposed by the dataset publishers is that augmenting data almost comes from the political field, and cross-domain tasks increase the difficulty of multilingual fact-checking, especially in the out-of-domain and zero-shot scene. Another significant factor in our observation is that the evaluation criteria between X-Fact dataset and PolitiFact dataset is inconsistent. However, with English data augmentation, different models have different levels of performance degradation, among which, the performance of PEINet is relatively more stable. The results further support the idea that language family embedding strengthens the model transfer learning in the zero-shot scene.