An Adaptive Dynamic Multi-Template Correlation Filter for Robust Object Tracking

Abstract

1. Introduction

2. Related Work

- The template of the tracked object must capture sufficient characteristics of the target object;

- The template must be insensitive to illumination variations;

- The template must comprise more than one set;

- The template must be dynamically updatable.
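The four requirements above can be illustrated with a small template bank. The sketch below is hypothetical and is not the ADMTCF algorithm. It uses zero-mean normalized cross-correlation (NCC) as a simpler stand-in for a learned correlation filter, and the names `TemplateBank`, `max_templates`, `add_threshold`, `rate` and the first-in-first-out eviction policy are assumptions made for illustration. NCC does not change when a patch's intensities are scaled by a positive gain and shifted by an offset, which addresses the illumination requirement. Keeping several templates and adding or blending them online addresses the last two requirements.

```python
import math
from typing import List, Optional, Tuple

Patch = List[float]  # flattened grayscale patch (assumed representation)


def ncc(a: Patch, b: Patch) -> float:
    """Zero-mean normalized cross-correlation of two equal-size patches, in [-1, 1]."""
    if len(a) != len(b) or not a:
        raise ValueError("patches must be non-empty and the same size")
    ma = sum(a) / len(a)
    mb = sum(b) / len(b)
    da = [x - ma for x in a]
    db = [y - mb for y in b]
    num = sum(x * y for x, y in zip(da, db))
    den = math.sqrt(sum(x * x for x in da) * sum(y * y for y in db))
    return num / den if den > 0 else 0.0  # flat patches carry no structure


class TemplateBank:
    """Hypothetical multi-template store that is updated online."""

    def __init__(self, max_templates: int = 5, add_threshold: float = 0.8, rate: float = 0.1):
        self.templates: List[Patch] = []
        self.max_templates = max_templates  # assumed cap on stored templates
        self.add_threshold = add_threshold  # assumed: below this score, store a new template
        self.rate = rate                    # assumed linear-interpolation update rate

    def best_match(self, patch: Patch) -> Tuple[Optional[int], float]:
        """Return (index, score) of the stored template most similar to `patch`."""
        best_i, best_s = None, -1.0
        for i, t in enumerate(self.templates):
            s = ncc(t, patch)
            if s > best_s:
                best_i, best_s = i, s
        return best_i, best_s

    def update(self, patch: Patch) -> None:
        """Add `patch` as a new template if nothing matches well; otherwise blend it in."""
        i, s = self.best_match(patch)
        if i is None or s < self.add_threshold:
            if len(self.templates) >= self.max_templates:
                self.templates.pop(0)  # evict the oldest template (simple FIFO policy)
            self.templates.append(list(patch))
        else:
            t = self.templates[i]
            self.templates[i] = [(1 - self.rate) * x + self.rate * y for x, y in zip(t, patch)]
```

For example, after `bank.update([10.0, 20.0, 30.0, 40.0])`, a brightened copy such as `[2 * v + 50 for v in ...]` still matches template 0 with a score of 1.0, while a patch with a different intensity pattern is stored as an additional template.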

3. Methods

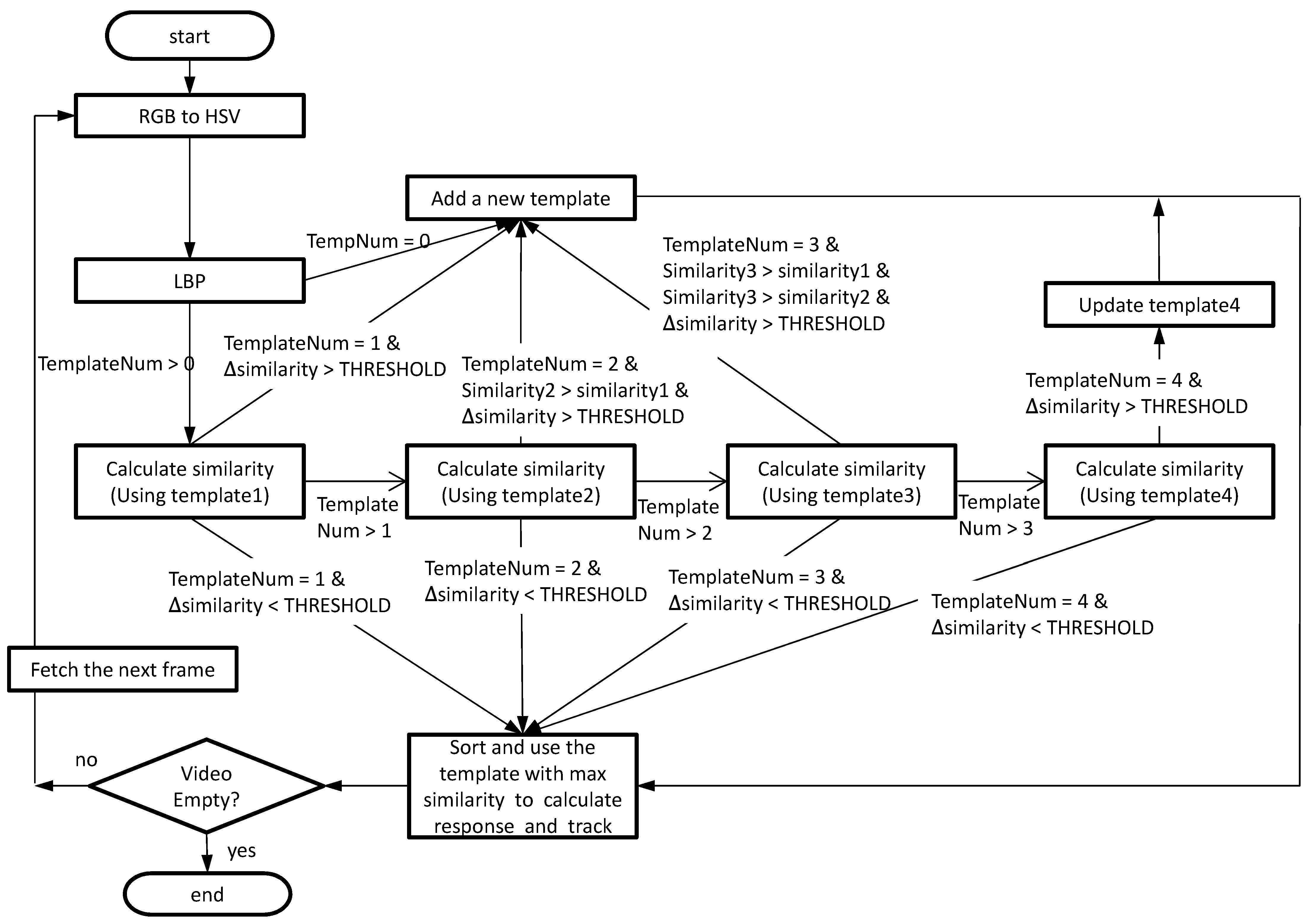

3.1. The Process of the ADMTCF

3.1.1. HSV Color Space and LBP Encoding
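HSV separates chromatic information (hue and saturation) from brightness (value). The basic local binary pattern (LBP) operator of Ojala et al. encodes only whether each neighbour is at least as bright as the centre pixel. For these reasons, both representations are less sensitive to illumination changes than raw RGB intensities. The following Python sketch is a generic illustration of these two building blocks and does not reproduce the ADMTCF feature pipeline. The 3×3 neighbourhood, clockwise bit order starting at the top-left neighbour, the 256-bin histogram, and the helper names are assumptions.

```python
import colorsys
from typing import List, Sequence, Tuple

# Clockwise 8-neighbourhood offsets (dy, dx), starting at the top-left pixel (assumed order).
NEIGHBOURS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]


def lbp_codes(gray: Sequence[Sequence[float]]) -> List[List[int]]:
    """Basic 3x3 LBP: one 8-bit code per interior pixel (border pixels are skipped)."""
    h, w = len(gray), len(gray[0])
    codes = []
    for y in range(1, h - 1):
        row = []
        for x in range(1, w - 1):
            c = gray[y][x]
            code = 0
            for bit, (dy, dx) in enumerate(NEIGHBOURS):
                if gray[y + dy][x + dx] >= c:  # only the sign of the difference is kept
                    code |= 1 << bit
            row.append(code)
        codes.append(row)
    return codes


def lbp_histogram(gray: Sequence[Sequence[float]]) -> List[int]:
    """256-bin histogram of LBP codes, usable as a texture descriptor."""
    hist = [0] * 256
    for row in lbp_codes(gray):
        for code in row:
            hist[code] += 1
    return hist


def hue_saturation(rgb: Tuple[float, float, float]) -> Tuple[float, float]:
    """Hue and saturation of an RGB triple with components in [0, 1]."""
    h, s, _v = colorsys.rgb_to_hsv(*rgb)
    return h, s
```

Because any monotonically increasing intensity change (for example `1.5 * p + 20`) preserves the ordering between each neighbour and its centre, the LBP codes of a patch and its brightened copy are identical. Likewise, scaling all three RGB components by the same positive factor changes only the HSV value channel, leaving hue and saturation unchanged.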

3.1.2. The Strategy for Adding and Updating Templates

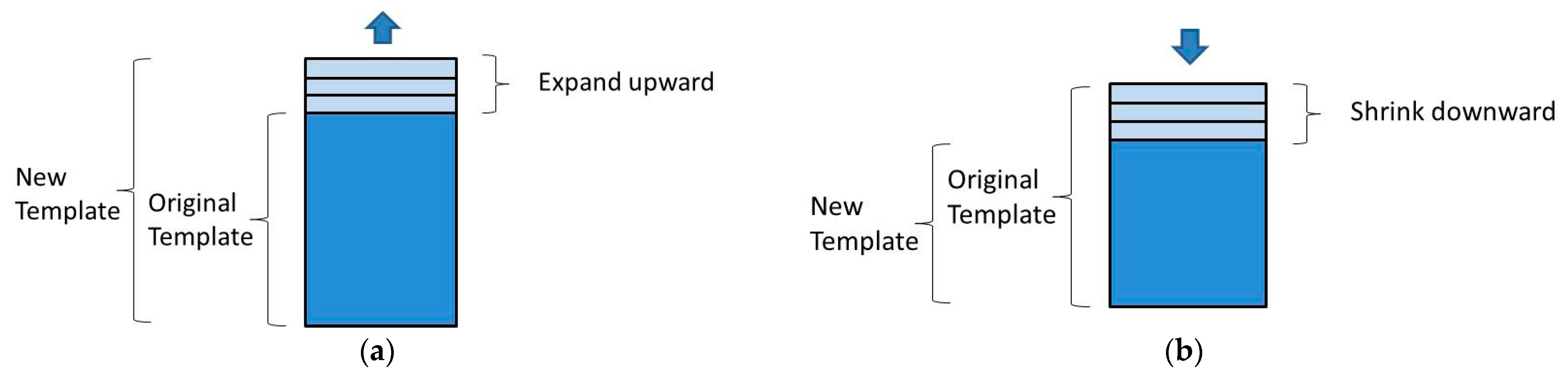

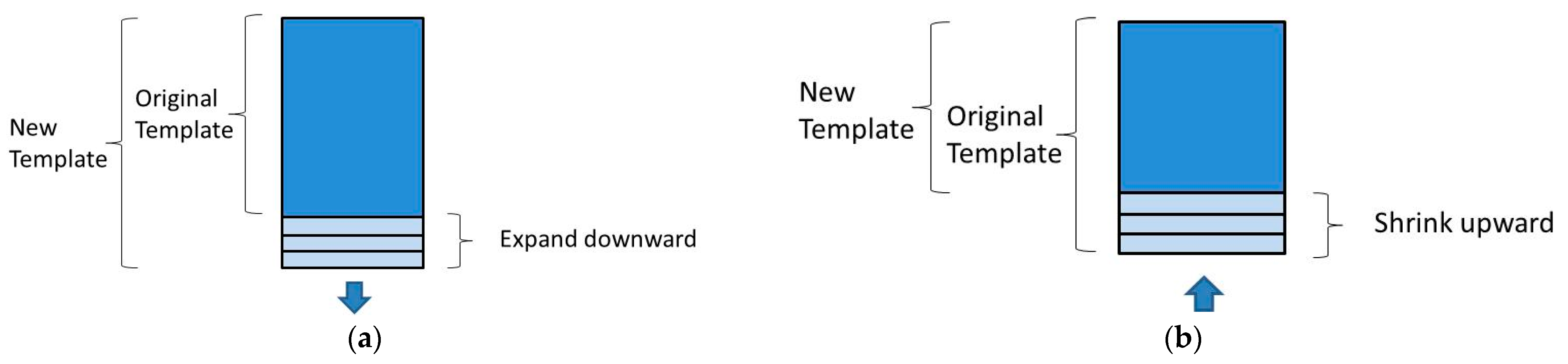

3.1.3. The Dynamic Change of the Adaptive Template’s Size

3.1.4. The Mechanism of Multi-Template with Adaptive Size Change for the Process of Tracking

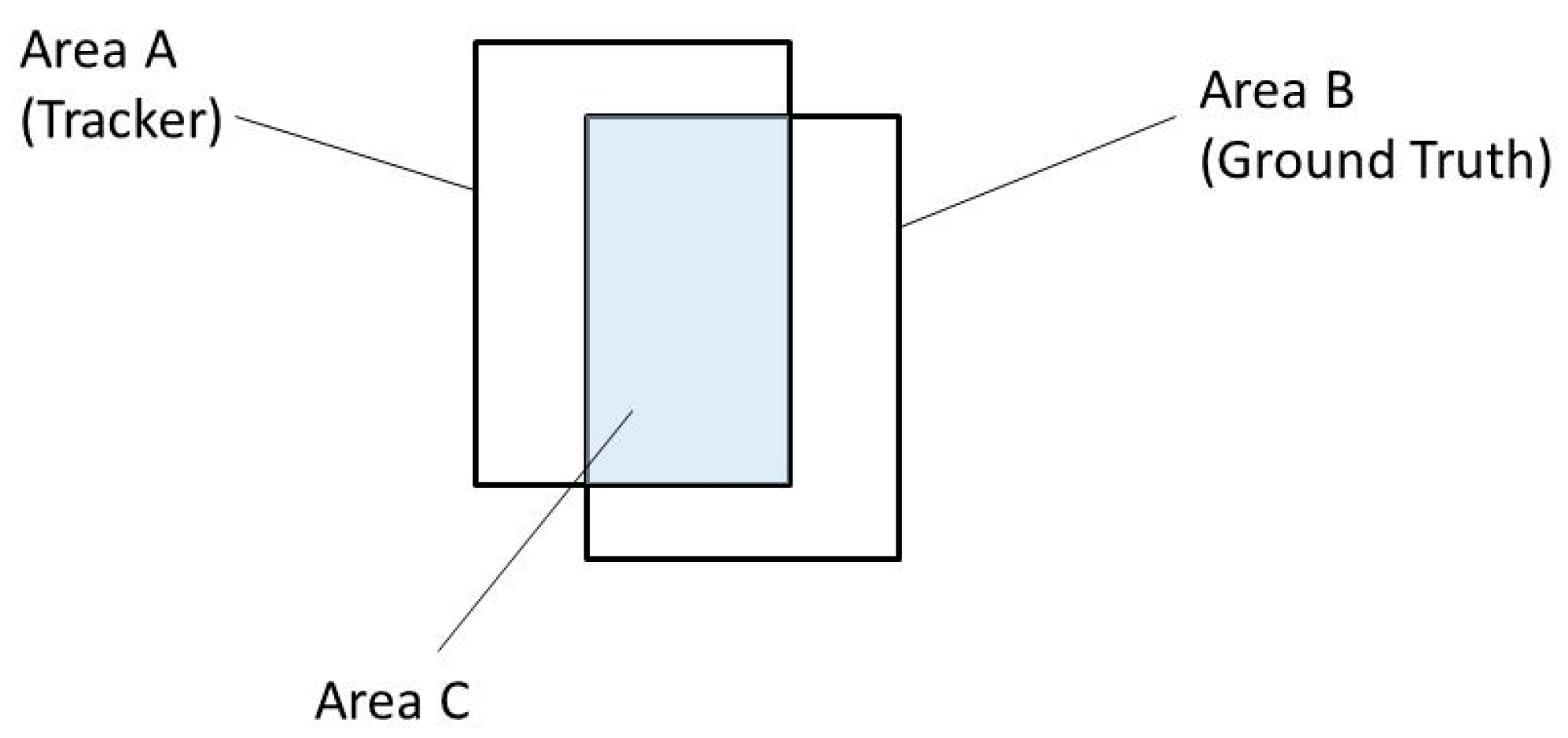

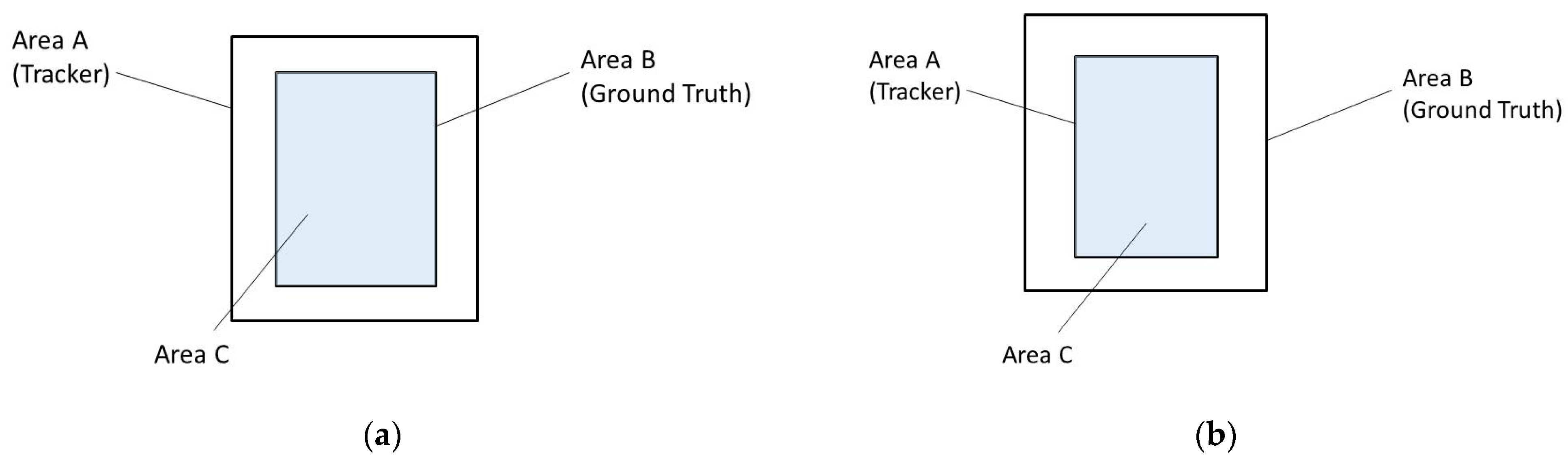

3.2. Evaluation of the Tracking Performance

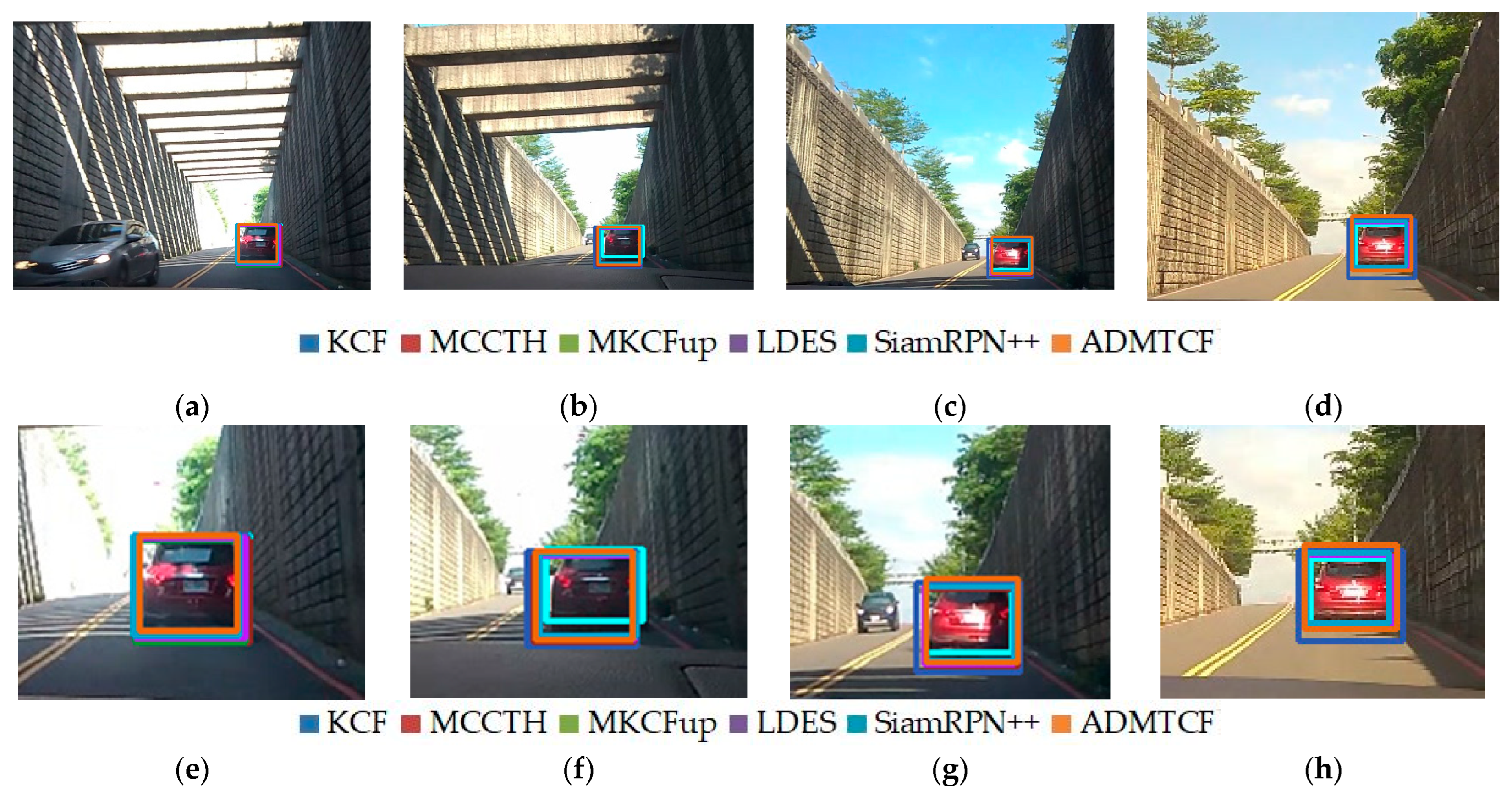

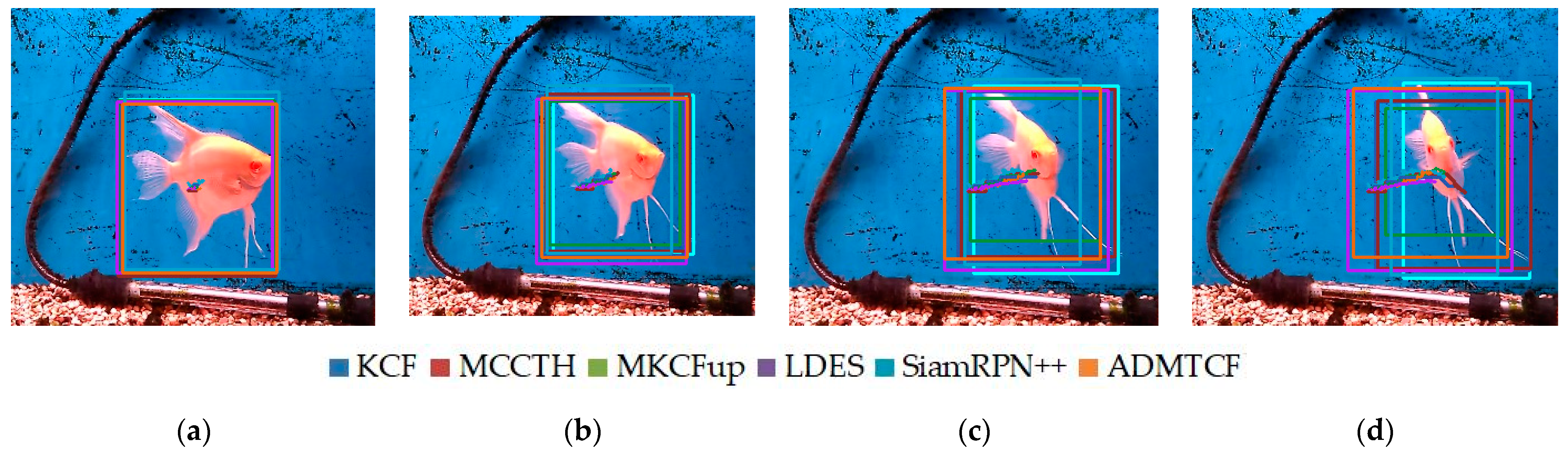

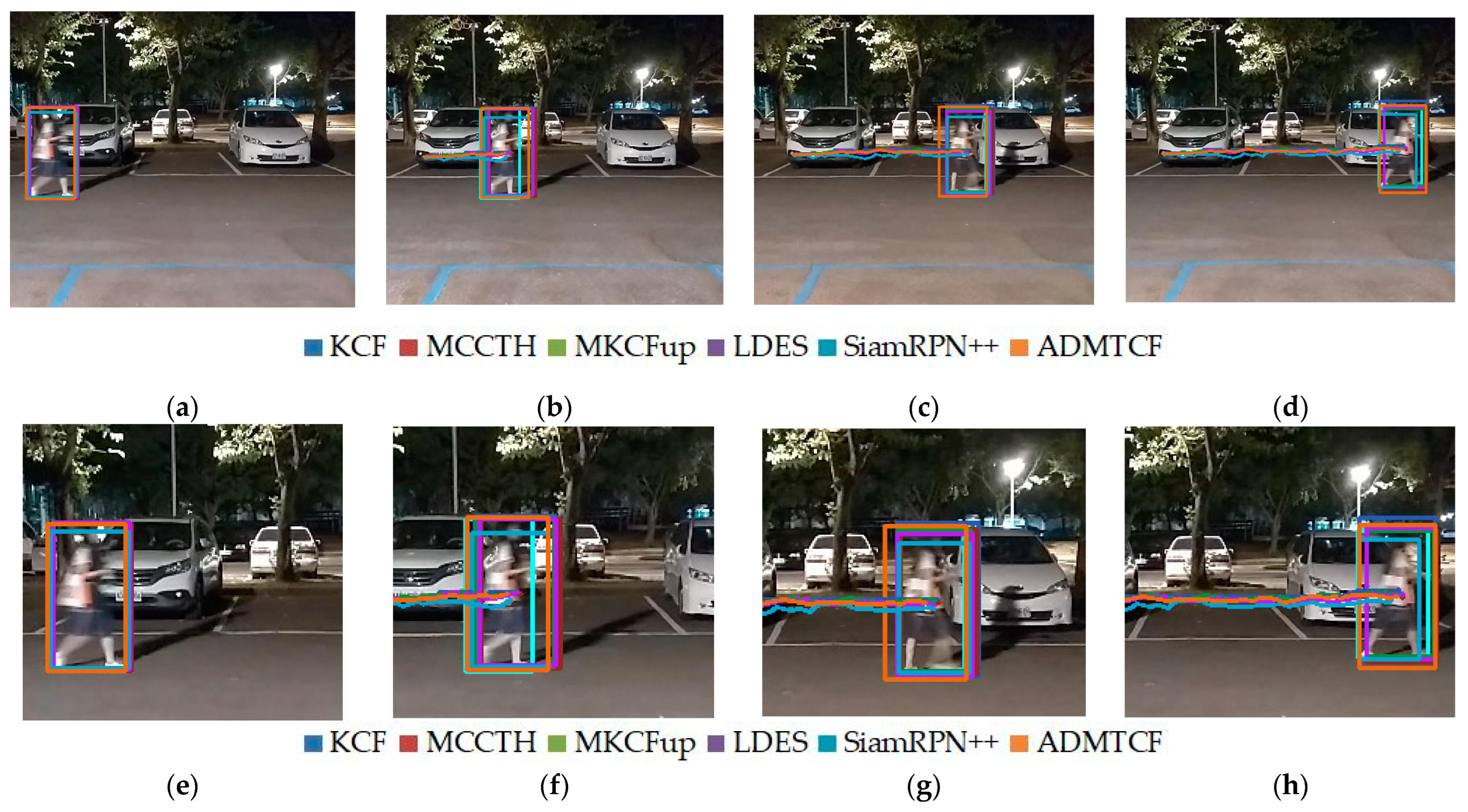

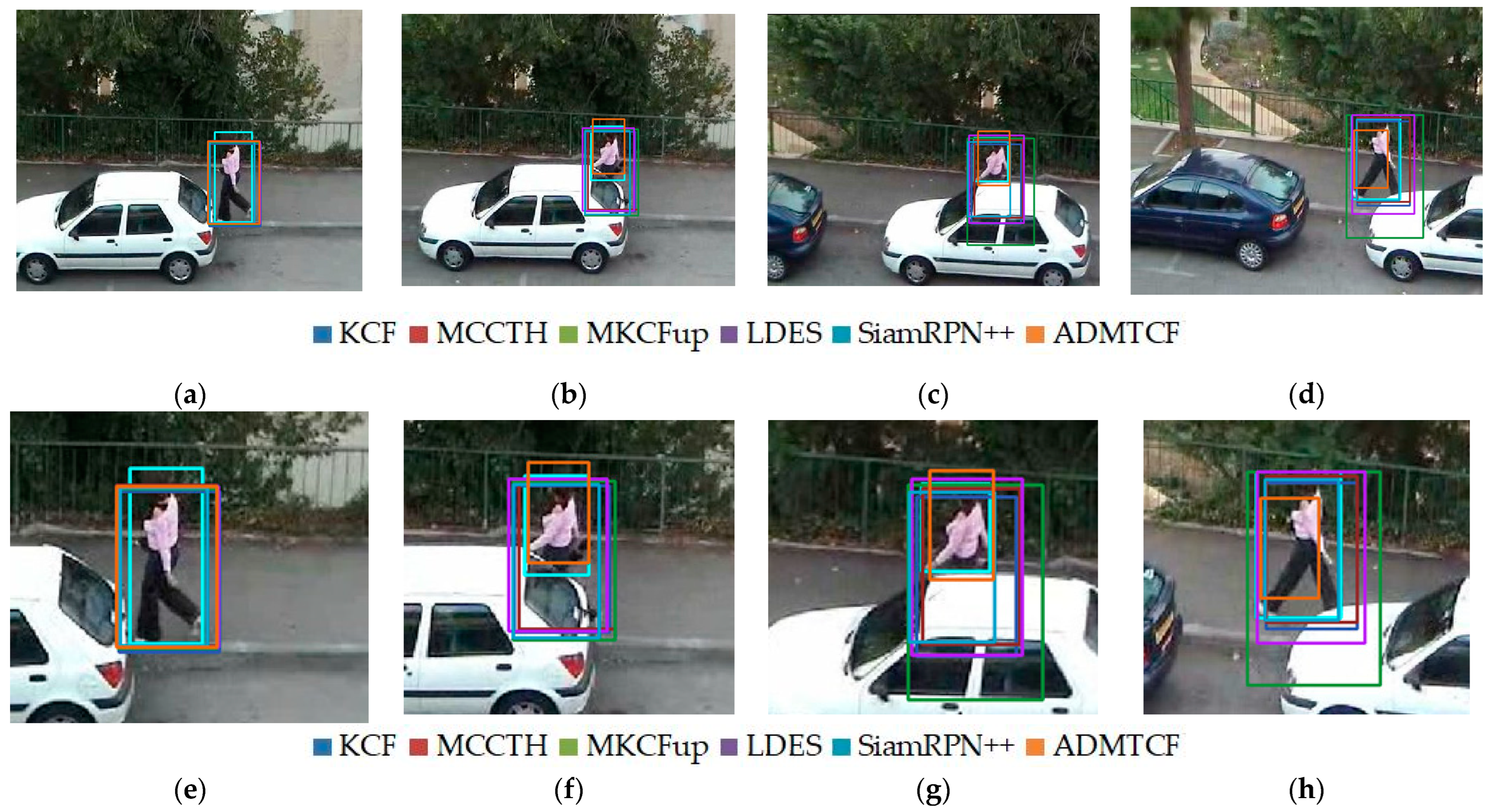
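Single-object tracking benchmarks commonly report a precision score based on the centre location error between predicted and ground-truth boxes (often at a 20-pixel threshold), a success score based on their intersection over union (IoU), and processing speed in frames per second. The Python sketch below computes these quantities under those assumed definitions. It does not claim that they are the exact definitions behind the result tables. Boxes are assumed to be `(x, y, w, h)` tuples, and the 21 evenly spaced IoU thresholds are an assumption.

```python
import math
from typing import Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x, y, w, h): top-left corner plus size


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ax, ay, aw, ah = a
    bx, by, bw, bh = b
    iw = max(0.0, min(ax + aw, bx + bw) - max(ax, bx))
    ih = max(0.0, min(ay + ah, by + bh) - max(ay, by))
    inter = iw * ih
    union = aw * ah + bw * bh - inter
    return inter / union if union > 0 else 0.0


def center_error(a: Box, b: Box) -> float:
    """Euclidean distance between the two box centres, in pixels."""
    return math.hypot((a[0] + a[2] / 2) - (b[0] + b[2] / 2),
                      (a[1] + a[3] / 2) - (b[1] + b[3] / 2))


def precision(pred: Sequence[Box], gt: Sequence[Box], threshold: float = 20.0) -> float:
    """Fraction of frames whose centre error is within `threshold` pixels."""
    hits = sum(center_error(p, g) <= threshold for p, g in zip(pred, gt))
    return hits / len(gt)


def success_auc(pred: Sequence[Box], gt: Sequence[Box], steps: int = 21) -> float:
    """Mean success rate over `steps` evenly spaced IoU thresholds in [0, 1]."""
    overlaps = [iou(p, g) for p, g in zip(pred, gt)]
    thresholds = [i / (steps - 1) for i in range(steps)]
    rates = [sum(o > t for o in overlaps) / len(overlaps) for t in thresholds]
    return sum(rates) / len(rates)


def fps(n_frames: int, seconds: float) -> float:
    """Processing speed: frames handled per second of wall-clock time."""
    return n_frames / seconds
```

Using a strict `>` comparison means that even a perfect tracker scores slightly below 1 on the success measure (20/21 with 21 thresholds), because no overlap exceeds the threshold of 1.0.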

4. Results

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

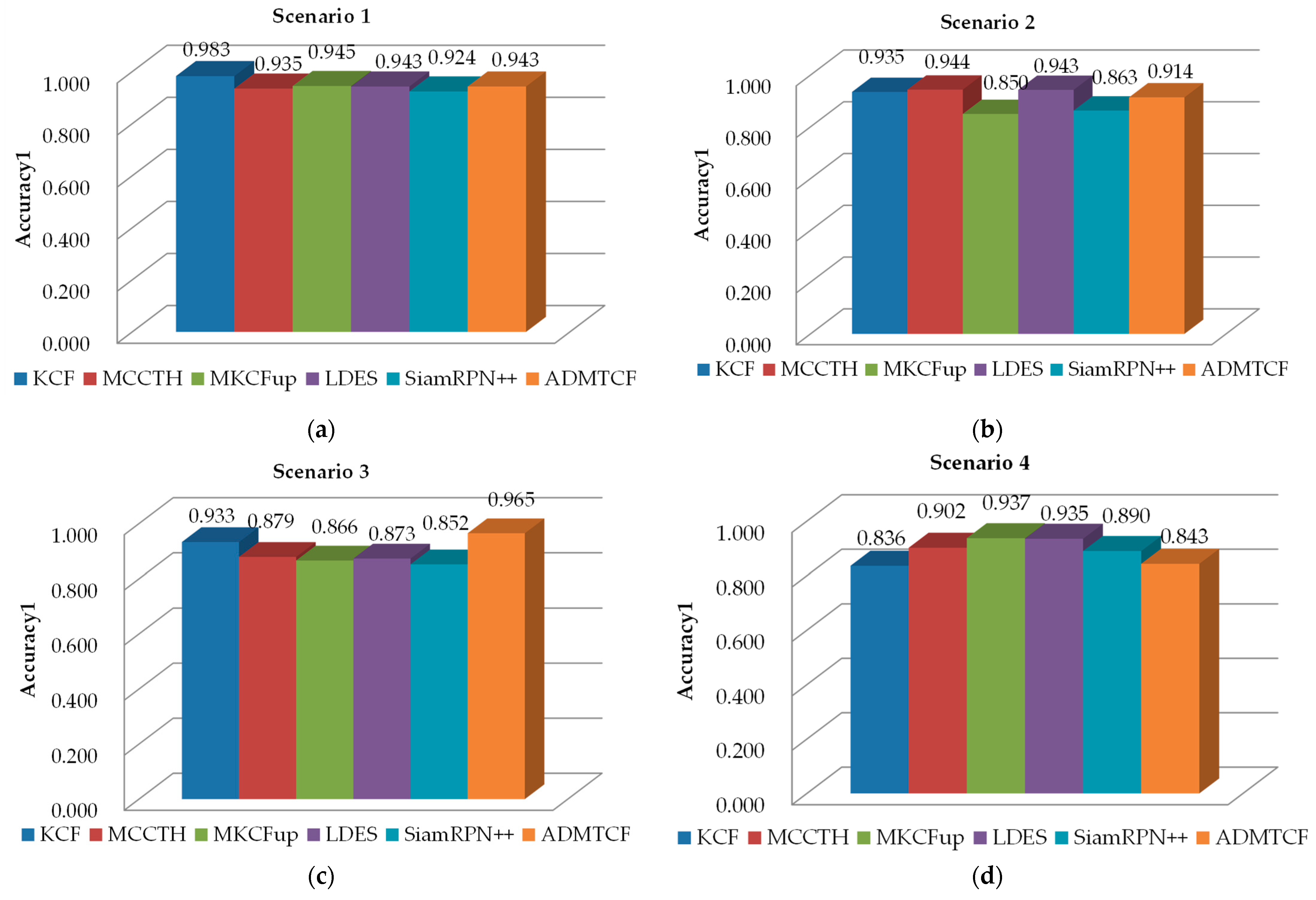

| Scenario | Ours | KCF | MCCTH | MKCFup | LDES | SiamRPN++ |
|---|---|---|---|---|---|---|
| Scenario 1 | 0.943 | 0.983 * | 0.935 | 0.945 | 0.943 | 0.924 |
| Scenario 2 | 0.914 | 0.935 | 0.944 * | 0.850 | 0.943 | 0.863 |
| Scenario 3 | 0.965 * | 0.933 | 0.879 | 0.866 | 0.873 | 0.852 |
| Scenario 4 | 0.843 | 0.836 | 0.902 | 0.937 * | 0.935 | 0.890 |

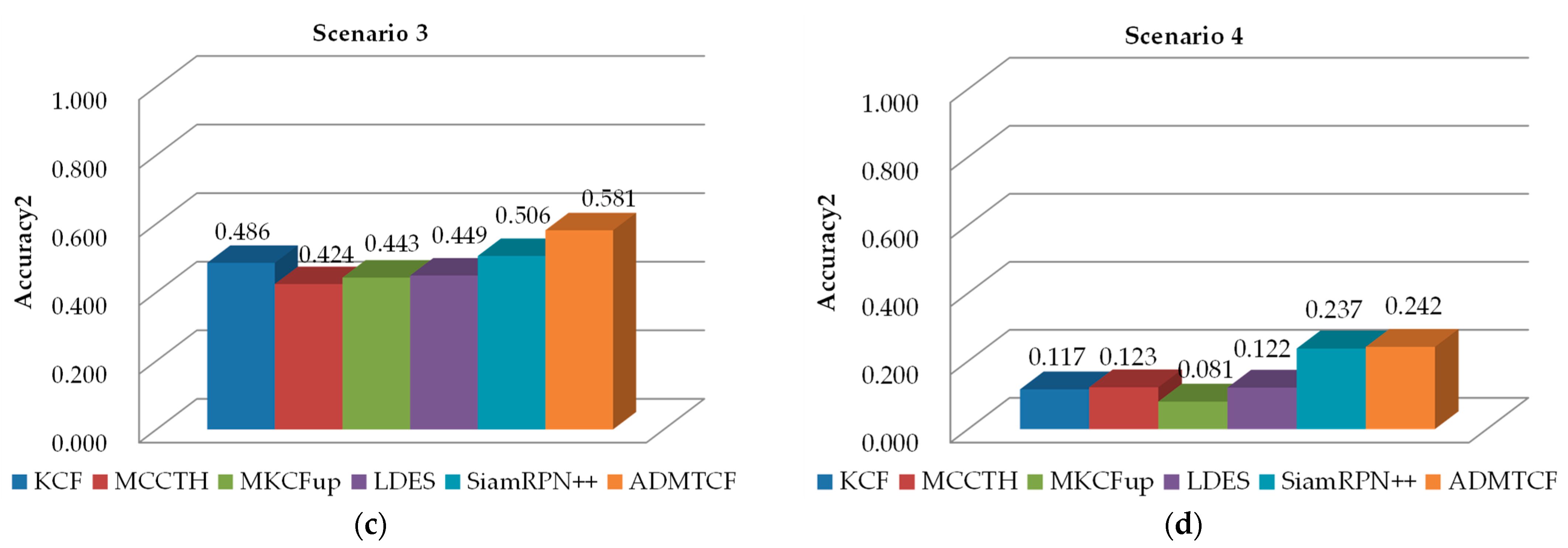

| Scenario | Ours | KCF | MCCTH | MKCFup | LDES | SiamRPN++ |
|---|---|---|---|---|---|---|
| Scenario 1 | 0.593 | 0.456 | 0.614 | 0.672 | 0.649 | 0.690 * |
| Scenario 2 | 0.730 | 0.793 | 0.815 * | 0.675 | 0.754 | 0.637 |
| Scenario 3 | 0.581 * | 0.486 | 0.424 | 0.443 | 0.449 | 0.506 |
| Scenario 4 | 0.242 * | 0.117 | 0.123 | 0.081 | 0.122 | 0.237 |

| Scenario | Ours | KCF | MCCTH | MKCFup | LDES | SiamRPN++ |
|---|---|---|---|---|---|---|
| Scenarios 1 + 2 + 3 + 4 | 0.916 | 0.922 | 0.915 | 0.900 | 0.924 * | 0.882 |

| Scenario | Ours | KCF | MCCTH | MKCFup | LDES | SiamRPN++ |
|---|---|---|---|---|---|---|
| Scenarios 1 + 2 + 3 + 4 | 0.537 * | 0.463 | 0.494 | 0.468 | 0.494 | 0.518 |

| Speed (fps) | Ours | KCF | MCCTH | MKCFup | LDES | SiamRPN++ |
|---|---|---|---|---|---|---|
| Scenario 1 | 7.37 | 9.31 * | 2.56 | 5.14 | 1.41 | 0.07 |
| Scenario 2 | 3.28 * | 1.62 | 2.13 | 1.46 | 0.22 | 0.06 |
| Scenario 3 | 3.24 | 3.54 * | 1.9 | 3.04 | 0.67 | 0.07 |
| Scenario 4 | 4.28 * | 2.11 | 2.66 | 2.19 | 0.36 | 0.04 |

| Speed (fps) | Ours | KCF | MCCTH | MKCFup | LDES | SiamRPN++ |
|---|---|---|---|---|---|---|
| Scenario 1 | 33.77 * | 12.67 | 6.58 | 11.54 | 2.29 | 0.52 |
| Scenario 2 | 13.9 * | 4.4 | 5.93 | 4.25 | 0.93 | 0.11 |
| Scenario 3 | 14.12 * | 7.01 | 4.75 | 6.59 | 1.35 | 0.27 |
| Scenario 4 | 18.57 * | 5.99 | 6.61 | 5.66 | 3.35 | 0.41 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Hung, K.-C.; Lin, S.-F. An Adaptive Dynamic Multi-Template Correlation Filter for Robust Object Tracking. Appl. Sci. 2022, 12, 10221. https://doi.org/10.3390/app122010221
