Deep Collaborative Recommendation Algorithm Based on Attention Mechanism

Abstract

1. Introduction

- We propose a ranking recommendation model, DACR, that fuses a multilayer perceptron (MLP) with traditional matrix factorization (MF). It learns both the low-rank user–item relationship and the mapping function from user–item representations to matching scores, so that interactions are modeled from different perspectives.

- We integrate an attention mechanism to capture the hidden information in implicit feedback.

- We investigate, through detailed experiments, how the dimension of the prediction vectors and the negative sampling ratio affect personalized ranking performance.

- We conduct extensive experiments on three public datasets (MovieLens 1M, Lastfm, and AMusic) to demonstrate the effectiveness of the DACR model, and we discuss possible directions for improvement.
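The contributions above describe DACR as a combination of an MF-style branch, an MLP branch, and an attention mechanism. The following is a hypothetical pure-Python sketch of that general idea only; it does not reproduce the paper's Equations (1)–(6). The class name `FusedScorer`, the dimension-wise softmax attention over the element-wise product, the single ReLU layer, and fusion by concatenation followed by a sigmoid unit are all assumptions made for illustration.

```python
import math
import random

def relu(v):
    return [max(0.0, x) for x in v]

def softmax(v):
    m = max(v)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in v]
    s = sum(exps)
    return [e / s for e in exps]

def matvec(W, v):
    # W is a list of rows; returns the matrix-vector product W @ v
    return [sum(w * x for w, x in zip(row, v)) for row in W]

def rand_matrix(rows, cols, rng, scale=0.1):
    # Gaussian initialization of parameters
    return [[rng.gauss(0.0, scale) for _ in range(cols)] for _ in range(rows)]

class FusedScorer:
    """Hypothetical two-branch scorer (assumption, not the DACR equations)."""

    def __init__(self, n_users, n_items, dim=8, seed=0):
        rng = random.Random(seed)
        self.P = rand_matrix(n_users, dim, rng)      # user latent vectors
        self.Q = rand_matrix(n_items, dim, rng)      # item latent vectors
        self.W_att = rand_matrix(dim, dim, rng)      # assumed attention projection
        self.W_mlp = rand_matrix(dim, 2 * dim, rng)  # assumed single MLP layer
        self.h = [rng.gauss(0.0, 0.1) for _ in range(2 * dim)]  # fusion weights

    def predict(self, u, i):
        p, q = self.P[u], self.Q[i]
        # MF-style branch: element-wise product models the low-rank relation
        mf = [a * b for a, b in zip(p, q)]
        # Assumed attention: softmax weights over the dimensions of the MF vector
        alpha = softmax(matvec(self.W_att, mf))
        mf_att = [a * x for a, x in zip(alpha, mf)]
        # MLP branch: matching function over the concatenated embeddings (p + q is list concatenation)
        mlp = relu(matvec(self.W_mlp, p + q))
        # Fusion: concatenate both predictive vectors, then one sigmoid output unit
        z = mf_att + mlp
        logit = sum(w * x for w, x in zip(self.h, z))
        return 1.0 / (1.0 + math.exp(-logit))
```

For example, `FusedScorer(5, 7).predict(0, 3)` returns an untrained interaction probability in (0, 1). Keeping the two branches separate until the final layer mirrors the idea of learning "from different perspectives" before fusing.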

2. Related Work

2.1. Collaborative Filtering with Implicit Feedback

2.2. Collaborative Filtering Based on Representation Learning

2.3. Collaborative Filtering Based on Matching Function Learning

2.4. Attention Mechanism in Recommendation System

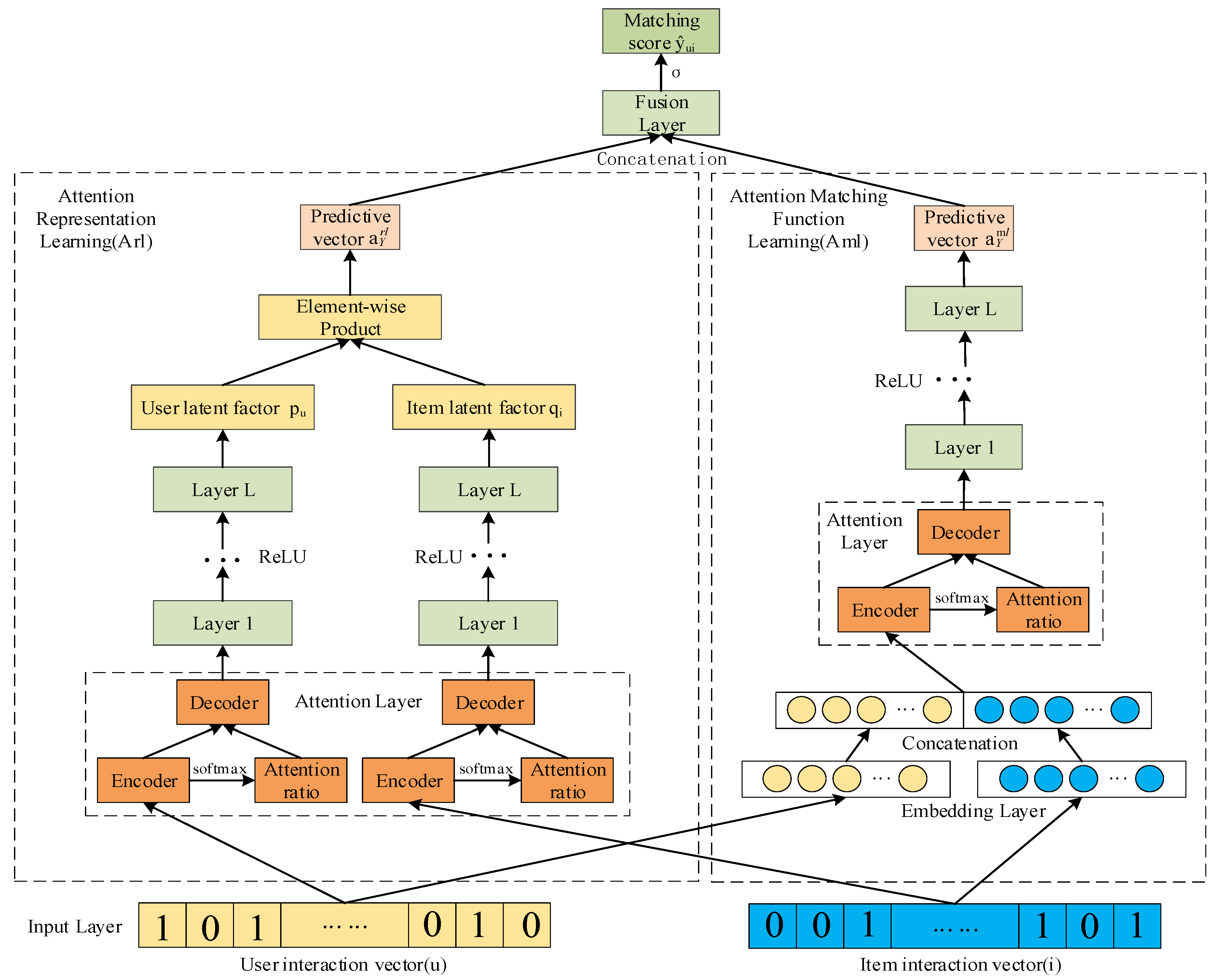

3. Proposed DACR Model

3.1. General Process

3.2. Attention Representation Learning

3.3. Matching Function Learning

3.4. Fusion

3.5. Other Details

3.5.1. Input Data of DACR

3.5.2. Processing Input Data

3.5.3. Loss Function

3.5.4. Training of DACR

| Algorithm 1. The training of DACR |
|---|
| Input: Y: user–item interaction matrix; the set of all observed interactions in Y; the set of sampled unobserved interactions; n: number of epochs. |
| Output: θ: the model parameters. |
| Randomly initialize the model parameters θ from a Gaussian distribution. |
| For epoch = 1 to n do |
| For each (u, i) in the observed and sampled unobserved interactions do |
| Calculate the predictive vector given by Equations (1)–(3). |
| Calculate the predictive vector given by Equations (4) and (5). |
| Calculate the predicted interaction by Equation (6). |
| End for |
| Compute the loss by Equation (10). |
| Optimize θ to minimize the loss. |
| End for |
| Return θ |
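The control flow of Algorithm 1 can be sketched in pure Python as below. To keep the gradients short, the two DACR predictive vectors and the fusion of Equation (6) are replaced by a plain dot-product scorer, and the loss of Equation (10) is assumed to be binary cross-entropy over observed interactions and sampled negatives. The names `train` and `sample_negatives` and the parameter `ratio` (negatives drawn per observed interaction, i.e. the negative sampling ratio) are hypothetical.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sample_negatives(observed, n_items, ratio, rng):
    """Draw `ratio` unobserved items per observed (u, i) pair, with replacement."""
    seen = {}
    for u, i in observed:
        seen.setdefault(u, set()).add(i)
    negatives = []
    for u, _ in observed:
        candidates = [j for j in range(n_items) if j not in seen[u]]
        if not candidates:  # user interacted with every item: nothing to sample
            continue
        for _ in range(ratio):
            negatives.append((u, rng.choice(candidates)))
    return negatives

def train(observed, n_users, n_items, dim=8, ratio=4, epochs=10, lr=0.05, seed=0):
    rng = random.Random(seed)
    # Randomly initialize theta from a Gaussian distribution (as in Algorithm 1)
    P = [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(n_users)]
    Q = [[rng.gauss(0.0, 0.1) for _ in range(dim)] for _ in range(n_items)]
    eps = 1e-7
    losses = []
    for _ in range(epochs):
        # Observed interactions (label 1) plus freshly sampled unobserved ones (label 0)
        data = [(u, i, 1.0) for u, i in observed]
        data += [(u, j, 0.0) for u, j in sample_negatives(observed, n_items, ratio, rng)]
        rng.shuffle(data)
        total = 0.0
        for u, i, y in data:
            # Stand-in scorer: dot product + sigmoid (DACR would fuse two predictive vectors here)
            y_hat = sigmoid(sum(a * b for a, b in zip(P[u], Q[i])))
            y_hat = min(max(y_hat, eps), 1.0 - eps)
            # Assumed loss: binary cross-entropy for one (u, i) pair
            total -= y * math.log(y_hat) + (1.0 - y) * math.log(1.0 - y_hat)
            g = y_hat - y  # gradient of BCE with respect to the logit
            for k in range(dim):
                pu, qi = P[u][k], Q[i][k]
                P[u][k] -= lr * g * qi
                Q[i][k] -= lr * g * pu
        losses.append(total / len(data))  # mean loss over the epoch
    return P, Q, losses
```

This sketch updates θ after every pair (plain SGD); a mini-batch or full-batch update after the inner loop is an equally valid reading of the "Optimize" step. Increasing `ratio` shifts the training set toward negatives, which is the trade-off the negative sampling experiments examine.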

4. Experiment

4.1. Experimental Settings

4.1.1. Datasets

4.1.2. Comparison Methods

4.1.3. Parameter Settings

4.1.4. Evaluation Protocols

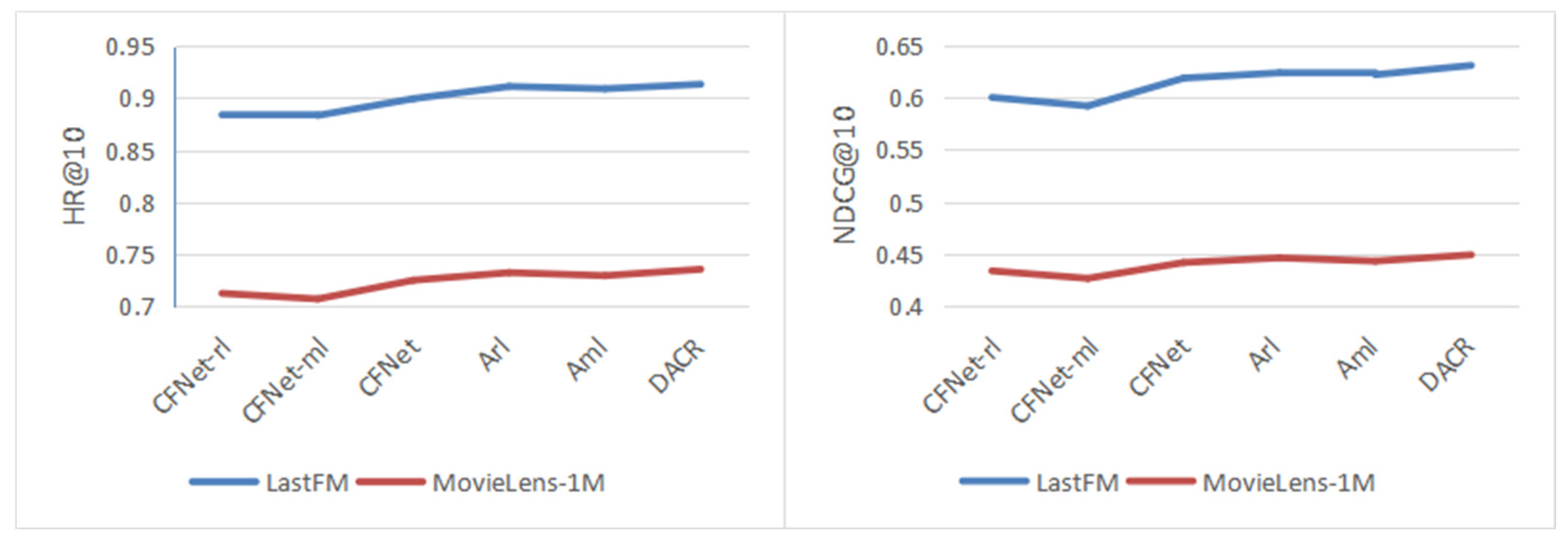

4.2. Overall Performance (RQ1)

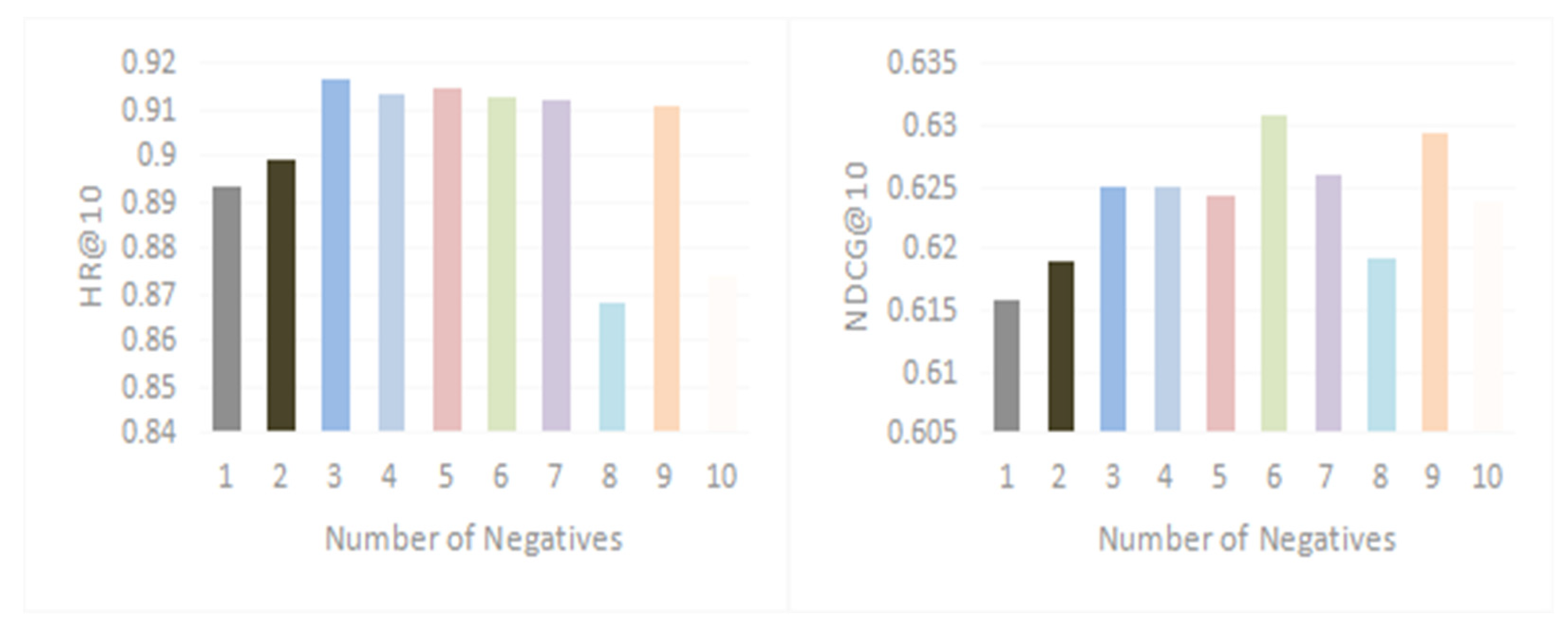

4.3. Impact of the Negative Sampling Ratio (RQ2)

4.4. Impact of the Dimension of Prediction Vectors (RQ3)

4.5. Impact of Different Modules (RQ4)

5. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Statistic | MovieLens 1M | Lastfm | AMusic |
|---|---|---|---|
| Number of users | 6040 | 1741 | 1776 |
| Number of items | 3706 | 2665 | 12,929 |
| Number of ratings | 1,000,209 | 69,149 | 46,087 |
| Sparsity | 0.9553 | 0.9851 | 0.9980 |
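The sparsity row follows from the other three rows as 1 − ratings / (users × items), i.e. the fraction of empty cells in the user–item matrix. A short check using only the numbers in the table:

```python
# Dataset statistics copied from the table above: (users, items, ratings)
stats = {
    "MovieLens 1M": (6040, 3706, 1_000_209),
    "Lastfm": (1741, 2665, 69_149),
    "AMusic": (1776, 12_929, 46_087),
}

def sparsity(n_users, n_items, n_ratings):
    """Fraction of the user-item matrix with no observed interaction."""
    return 1.0 - n_ratings / (n_users * n_items)

for name, (users, items, ratings) in stats.items():
    print(f"{name}: {sparsity(users, items, ratings):.4f}")
# MovieLens 1M: 0.9553
# Lastfm: 0.9851
# AMusic: 0.9980
```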

| Method | ml-1m HR@10 | ml-1m NDCG@10 | Lastfm HR@10 | Lastfm NDCG@10 | AMusic HR@10 | AMusic NDCG@10 |
|---|---|---|---|---|---|---|
| ItemPop | 0.4535 | 0.2542 | 0.6628 | 0.3862 | 0.2483 | 0.1304 |
| ItemKNN | 0.6624 | 0.3905 | 0.8673 | 0.5617 | 0.3510 | 0.1989 |
| BPR | 0.6725 | 0.3908 | 0.6249 | 0.3466 | - | - |
| DMF | 0.6565 | 0.3761 | 0.8702 | 0.5804 | 0.3744 | 0.2149 |
| DeepCF | 0.7220 | 0.4378 | 0.8995 | 0.6186 | 0.4116 | 0.2601 |
| DeepUCF+a | 0.7264 | 0.4420 | 0.9014 | 0.6193 | - | - |
| Ours (DACR) | 0.7352 | 0.4485 | 0.9133 | 0.6308 | 0.4189 | 0.2564 |
| Improvement over best baseline (absolute) | 0.88% | 0.65% | 1.19% | 1.15% | 0.73% | −0.37% |
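The Improvement row is the absolute difference between DACR and the strongest baseline in each column, in percentage points (for example, 0.7352 − 0.7264 = 0.0088 for ml-1m HR@10). The sketch below reproduces that row from the table and also shows how HR@10 and NDCG@10 are commonly computed for one user when a single held-out positive item is ranked (a leave-one-out protocol). That protocol and the helper names `hit_ratio_at_k`, `ndcg_at_k`, and `improvement_row` are assumptions for illustration, not details confirmed by this section.

```python
import math

def hit_ratio_at_k(ranked_items, target, k=10):
    """1.0 if the held-out item appears in the top-k list, else 0.0."""
    return 1.0 if target in ranked_items[:k] else 0.0

def ndcg_at_k(ranked_items, target, k=10):
    """With one relevant item, IDCG = 1, so NDCG = 1 / log2(position + 2)."""
    top = ranked_items[:k]
    if target not in top:
        return 0.0
    return 1.0 / math.log2(top.index(target) + 2)  # position is 0-based

# Columns: ml-1m HR, ml-1m NDCG, Lastfm HR, Lastfm NDCG, AMusic HR, AMusic NDCG
baselines = {
    "ItemPop": [0.4535, 0.2542, 0.6628, 0.3862, 0.2483, 0.1304],
    "ItemKNN": [0.6624, 0.3905, 0.8673, 0.5617, 0.3510, 0.1989],
    "BPR": [0.6725, 0.3908, 0.6249, 0.3466, None, None],
    "DMF": [0.6565, 0.3761, 0.8702, 0.5804, 0.3744, 0.2149],
    "DeepCF": [0.7220, 0.4378, 0.8995, 0.6186, 0.4116, 0.2601],
    "DeepUCF+a": [0.7264, 0.4420, 0.9014, 0.6193, None, None],
}
dacr = [0.7352, 0.4485, 0.9133, 0.6308, 0.4189, 0.2564]

def improvement_row(baselines, ours):
    row = []
    for col, value in enumerate(ours):
        # Best reported baseline in this column ("-" entries are skipped)
        best = max(r[col] for r in baselines.values() if r[col] is not None)
        row.append(round(100.0 * (value - best), 2))  # percentage points
    return row

print(improvement_row(baselines, dacr))
# [0.88, 0.65, 1.19, 1.15, 0.73, -0.37]
```

Note that a relative improvement would give larger numbers (0.0088 / 0.7264 is about 1.2% for ml-1m HR@10), so reading the row as an absolute gap matters when comparing against other papers.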

| Metric (MovieLens 1M) | Dimension 8 | Dimension 16 | Dimension 32 | Dimension 64 |
|---|---|---|---|---|
| HR@10 | 0.6925 | 0.7087 | 0.7262 | 0.7352 |
| NDCG@10 | 0.4032 | 0.4174 | 0.4391 | 0.4485 |

| Metric (Lastfm) | Dimension 8 | Dimension 16 | Dimension 32 | Dimension 64 |
|---|---|---|---|---|
| HR@10 | 0.8852 | 0.8895 | 0.8975 | 0.9133 |
| NDCG@10 | 0.6072 | 0.6134 | 0.6167 | 0.6308 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cui, C.; Qin, J.; Ren, Q. Deep Collaborative Recommendation Algorithm Based on Attention Mechanism. Appl. Sci. 2022, 12, 10594. https://doi.org/10.3390/app122010594
