Hyperparameter Tuned Deep Autoencoder Model for Road Classification Model in Intelligent Transportation Systems

Abstract

1. Introduction

2. Literature Review

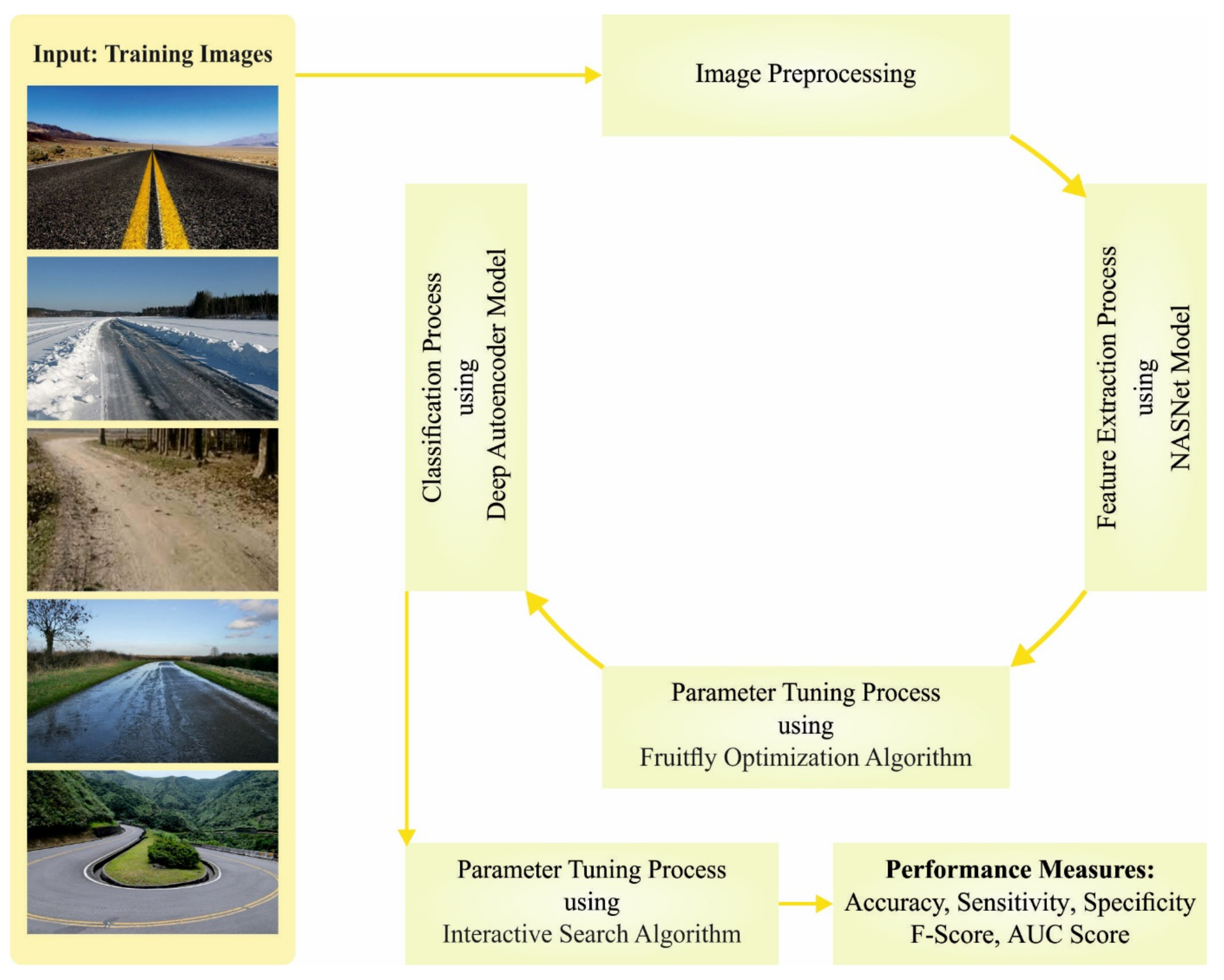

3. Materials and Methods

3.1. Feature Extraction

3.2. Road Classification Using DAE

3.3. Hyperparameter Tuning

4. Performance Evaluation

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Class | No. of Samples |
|---|---|
| Dry | 2,500 |
| Ice | 2,500 |
| Rough | 2,500 |
| Wet | 2,500 |
| Curvy | 2,500 |
| Total No. of Samples | 12,500 |
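The dataset table gives 2,500 samples per class, and the results are reported for a 70% training / 30% testing split. The following Python sketch turns those percentages into subset sizes. It assumes the split is stratified (each class divided 70/30 on its own), which the source does not state; `stratified_split_sizes` is a hypothetical helper, not the authors' code.

```python
"""Subset sizes for a 70/30 split of the road-condition dataset (illustrative sketch)."""

# Per-class sample counts from the dataset table.
CLASS_COUNTS = {"Dry": 2500, "Ice": 2500, "Rough": 2500, "Wet": 2500, "Curvy": 2500}


def stratified_split_sizes(class_counts, train_percent=70):
    """Return {class: (n_train, n_test)}, splitting each class separately.

    Assumption: stratified split. Integer arithmetic avoids float rounding,
    and any remainder of a class goes to the test subset.
    """
    sizes = {}
    for label, n in class_counts.items():
        n_train = n * train_percent // 100
        sizes[label] = (n_train, n - n_train)
    return sizes


sizes = stratified_split_sizes(CLASS_COUNTS)
total_train = sum(n_train for n_train, _ in sizes.values())
total_test = sum(n_test for _, n_test in sizes.values())
print(sizes["Dry"], total_train, total_test)
```

Under this assumption each class contributes 1,750 training and 750 testing samples, i.e. 8,750 and 3,750 samples overall.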

Entire Dataset

| Class | Accuracy (%) | Sensitivity (%) | Specificity (%) | F-Score (%) | AUC Score (%) |
|---|---|---|---|---|---|
| Dry | 99.22 | 98.20 | 99.48 | 98.06 | 98.84 |
| Ice | 99.61 | 98.80 | 99.81 | 99.02 | 99.30 |
| Rough | 99.50 | 98.76 | 99.68 | 98.74 | 99.22 |
| Wet | 98.97 | 96.64 | 99.55 | 97.40 | 98.09 |
| Curvy | 99.17 | 98.76 | 99.27 | 97.94 | 99.02 |
| Average | 99.29 | 98.23 | 99.56 | 98.23 | 98.89 |
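Each per-class row is a one-vs-rest figure: the named class is treated as positive and the other four as negative. The source does not give its formulas, so the following Python sketch uses the standard definitions, computed from a multi-class confusion matrix. One observation supports the AUC definition used here: in every per-class row, the AUC Score equals (Sensitivity + Specificity) / 2 to within rounding (e.g. Dry, entire dataset: (98.20 + 99.48) / 2 = 98.84), which is the area under a ROC curve built from a single operating point. The function names and the toy matrix are hypothetical, for illustration only.

```python
def _ratio(numerator, denominator):
    """Safe division: return 0.0 when the denominator is zero."""
    return numerator / denominator if denominator else 0.0


def one_vs_rest_metrics(confusion, labels):
    """Per-class metrics in percent from a square multi-class confusion matrix.

    confusion[i][j] is the number of samples whose true class is labels[i]
    and whose predicted class is labels[j].
    """
    total = sum(sum(row) for row in confusion)
    metrics = {}
    for k, label in enumerate(labels):
        tp = confusion[k][k]
        fn = sum(confusion[k]) - tp                 # true class k, predicted otherwise
        fp = sum(row[k] for row in confusion) - tp  # predicted k, true class otherwise
        tn = total - tp - fn - fp                   # all remaining samples
        sensitivity = _ratio(tp, tp + fn)           # recall / true positive rate
        specificity = _ratio(tn, tn + fp)           # true negative rate
        precision = _ratio(tp, tp + fp)
        f_score = _ratio(2 * precision * sensitivity, precision + sensitivity)
        metrics[label] = {
            "accuracy": 100 * _ratio(tp + tn, total),
            "sensitivity": 100 * sensitivity,
            "specificity": 100 * specificity,
            "f_score": 100 * f_score,
            # Area under the ROC curve through the single point (FPR, TPR),
            # joined to (0, 0) and (1, 1): equals (TPR + TNR) / 2.
            "auc": 100 * (sensitivity + specificity) / 2,
        }
    return metrics


# Hypothetical 3-class confusion matrix (not the paper's data).
toy = [[8, 1, 1],
       [0, 9, 1],
       [1, 0, 9]]
result = one_vs_rest_metrics(toy, ["class_a", "class_b", "class_c"])
print(result["class_a"])
```

Because every class counts as "negative" in four of the five one-vs-rest problems, specificity and accuracy tend to sit above sensitivity, which matches the pattern in the tables.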

Training Phase (70%)

| Class | Accuracy (%) | Sensitivity (%) | Specificity (%) | F-Score (%) | AUC Score (%) |
|---|---|---|---|---|---|
| Dry | 99.21 | 98.02 | 99.51 | 98.05 | 98.77 |
| Ice | 99.59 | 98.75 | 99.80 | 98.97 | 99.27 |
| Rough | 99.46 | 98.57 | 99.69 | 98.65 | 99.13 |
| Wet | 99.01 | 97.01 | 99.50 | 97.49 | 98.26 |
| Curvy | 99.19 | 98.80 | 99.29 | 97.98 | 99.04 |
| Average | 99.29 | 98.23 | 99.56 | 98.23 | 98.89 |

Testing Phase (30%)

| Class | Accuracy (%) | Sensitivity (%) | Specificity (%) | F-Score (%) | AUC Score (%) |
|---|---|---|---|---|---|
| Dry | 99.25 | 98.64 | 99.40 | 98.10 | 99.02 |
| Ice | 99.65 | 98.93 | 99.83 | 99.13 | 99.38 |
| Rough | 99.57 | 99.21 | 99.67 | 98.94 | 99.44 |
| Wet | 98.88 | 95.78 | 99.67 | 97.19 | 97.72 |
| Curvy | 99.12 | 98.68 | 99.23 | 97.84 | 98.96 |
| Average | 99.30 | 98.25 | 99.56 | 98.24 | 98.90 |
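The Average rows can be checked against the per-class rows. The Python sketch below transcribes the Testing Phase values, computes the unweighted (macro) average of each column, and measures how far the AUC column is from (Sensitivity + Specificity) / 2. Averaging the rounded per-class values reproduces the reported averages to within 0.01; accuracy comes out at 99.29 against the reported 99.30, presumably because the authors averaged unrounded values (an assumption). The helper names are hypothetical.

```python
# Testing-phase values transcribed from the table:
# class -> (accuracy, sensitivity, specificity, f_score, auc), all in %.
TESTING = {
    "Dry":   (99.25, 98.64, 99.40, 98.10, 99.02),
    "Ice":   (99.65, 98.93, 99.83, 99.13, 99.38),
    "Rough": (99.57, 99.21, 99.67, 98.94, 99.44),
    "Wet":   (98.88, 95.78, 99.67, 97.19, 97.72),
    "Curvy": (99.12, 98.68, 99.23, 97.84, 98.96),
}
REPORTED_AVERAGE = (99.30, 98.25, 99.56, 98.24, 98.90)


def macro_average(rows):
    """Unweighted mean of each column over all classes."""
    columns = zip(*rows.values())
    return tuple(sum(col) / len(rows) for col in columns)


def max_auc_gap(rows):
    """Largest |AUC - (sensitivity + specificity) / 2| over all classes."""
    return max(abs(auc - (sens + spec) / 2)
               for _, sens, spec, _, auc in rows.values())


avg = macro_average(TESTING)
print([round(v, 3) for v in avg])
print(round(max_auc_gap(TESTING), 3))
```

An unweighted average is the natural choice here because, under a stratified split, every class has the same number of test samples.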

| Method | Accuracy (%) | Sensitivity (%) | Specificity (%) | F1-Score (%) |
|---|---|---|---|---|
| MODAE-RCM | 99.30 | 98.25 | 99.56 | 98.24 |
| SGD | 74.11 | 73.46 | 93.46 | 71.13 |
| RMSProp | 97.65 | 97.51 | 99.15 | 97.28 |
| Adagrad | 79.99 | 80.51 | 95.07 | 80.86 |
| Adam | 98.62 | 98.09 | 98.57 | 97.89 |
| Adadelta | 71.37 | 71.39 | 92.51 | 71.51 |
| Adamax | 95.39 | 96.27 | 98.59 | 95.87 |
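MODAE-RCM leads on all four metrics in the comparison table, but the size of the lead varies by metric. The short Python sketch below, with values transcribed from the table, finds the strongest competing row for each metric and the margin in percentage points. The source table gives no further detail on how the baseline rows were configured, so the sketch treats them only as table rows; `margins_over_best_baseline` is a hypothetical helper.

```python
# Values (%) transcribed from the comparison table:
# method -> (accuracy, sensitivity, specificity, f1_score).
COMPARISON = {
    "MODAE-RCM": (99.30, 98.25, 99.56, 98.24),
    "SGD":       (74.11, 73.46, 93.46, 71.13),
    "RMSProp":   (97.65, 97.51, 99.15, 97.28),
    "Adagrad":   (79.99, 80.51, 95.07, 80.86),
    "Adam":      (98.62, 98.09, 98.57, 97.89),
    "Adadelta":  (71.37, 71.39, 92.51, 71.51),
    "Adamax":    (95.39, 96.27, 98.59, 95.87),
}
METRICS = ("accuracy", "sensitivity", "specificity", "f1_score")


def margins_over_best_baseline(table, proposed="MODAE-RCM"):
    """Per metric: (best competing method, proposed minus best, in points)."""
    baselines = {name: vals for name, vals in table.items() if name != proposed}
    result = {}
    for i, metric in enumerate(METRICS):
        best = max(baselines, key=lambda name: baselines[name][i])
        result[metric] = (best, round(table[proposed][i] - baselines[best][i], 2))
    return result


for metric, (best, margin) in margins_over_best_baseline(COMPARISON).items():
    print(f"{metric}: +{margin} points over {best}")
```

The narrowest lead is in sensitivity (98.25 versus 98.09 for Adam), and the strongest competitor on specificity is RMSProp rather than Adam.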

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Ahmed Hamza, M.; Alqahtani, H.; Elkamchouchi, D.H.; Alshahrani, H.; Alzahrani, J.S.; Maray, M.; Ahmed Elfaki, M.; Aziz, A.S.A. Hyperparameter Tuned Deep Autoencoder Model for Road Classification Model in Intelligent Transportation Systems. Appl. Sci. 2022, 12, 10605. https://doi.org/10.3390/app122010605

Ahmed Hamza M, Alqahtani H, Elkamchouchi DH, Alshahrani H, Alzahrani JS, Maray M, Ahmed Elfaki M, Aziz ASA. Hyperparameter Tuned Deep Autoencoder Model for Road Classification Model in Intelligent Transportation Systems. Applied Sciences. 2022; 12(20):10605. https://doi.org/10.3390/app122010605

Chicago/Turabian Style: Ahmed Hamza, Manar, Hamed Alqahtani, Dalia H. Elkamchouchi, Hussain Alshahrani, Jaber S. Alzahrani, Mohammed Maray, Mohamed Ahmed Elfaki, and Amira Sayed A. Aziz. 2022. "Hyperparameter Tuned Deep Autoencoder Model for Road Classification Model in Intelligent Transportation Systems." Applied Sciences 12, no. 20: 10605. https://doi.org/10.3390/app122010605