On the Privacy–Utility Trade-Off in Differentially Private Hierarchical Text Classification

Abstract

1. Introduction

- Empirically quantifying and comparing the privacy–utility trade-off for three widely used HTC ANN architectures on three reference datasets. In particular, we consider Bag of Words (BoW), Convolutional Neural Networks (CNNs) and Transformer-based architectures.

- Connecting DP privacy guarantees to MI attack performance for HTC ANNs. In contrast to the adversary considered by DP, the MI adversary represents an ML-specific threat model.

- Recommending HTC model architectures and privacy parameters for the practitioner based on the privacy–utility trade-off under DP and MI.
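As a minimal sketch of how a DP guarantee can be translated into a ceiling on MI attack performance (not necessarily the bound derived in this paper), the snippet below uses the standard hypothesis-testing view of (ε, δ)-DP: any membership test must satisfy TPR ≤ e^ε · FPR + δ and 1 − FPR ≤ e^ε · (1 − TPR) + δ. Maximizing TPR − FPR under both constraints caps the membership advantage at (e^ε − 1 + 2δ)/(e^ε + 1). The looser bound e^ε − 1 for pure ε-DP from Yeom et al. is included for comparison. All function names are illustrative assumptions.

```python
import math


def advantage_bound_yeom(epsilon: float) -> float:
    """Membership advantage bound e^eps - 1 for pure eps-DP (Yeom et al.), capped at 1."""
    return min(1.0, math.expm1(epsilon))


def advantage_bound_tight(epsilon: float, delta: float = 0.0) -> float:
    """Largest TPR - FPR allowed by TPR <= e^eps * FPR + delta and
    1 - FPR <= e^eps * (1 - TPR) + delta, capped at 1."""
    e = math.exp(epsilon)
    return min(1.0, (e - 1.0 + 2.0 * delta) / (e + 1.0))


def max_tpr_at_fpr(epsilon: float, fpr: float, delta: float = 0.0) -> float:
    """Highest TPR any membership test can reach at a given FPR under (eps, delta)-DP."""
    e = math.exp(epsilon)
    # Both DP constraints bound TPR from above; take the stricter one.
    return min(1.0, e * fpr + delta, 1.0 - (1.0 - fpr - delta) / e)


if __name__ == "__main__":
    for eps in (0.1, 1.0, 3.0, 10.0):
        print(f"eps={eps}: Yeom {advantage_bound_yeom(eps):.3f}, "
              f"tight {advantage_bound_tight(eps):.3f}, "
              f"TPR@FPR=1% {max_tpr_at_fpr(eps, 0.01):.3f}")
```

For pure ε-DP the tighter bound reduces to tanh(ε/2), so it stays informative at values of ε where e^ε − 1 already exceeds 1 and becomes vacuous.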

2. Preliminaries

2.1. Differential Privacy

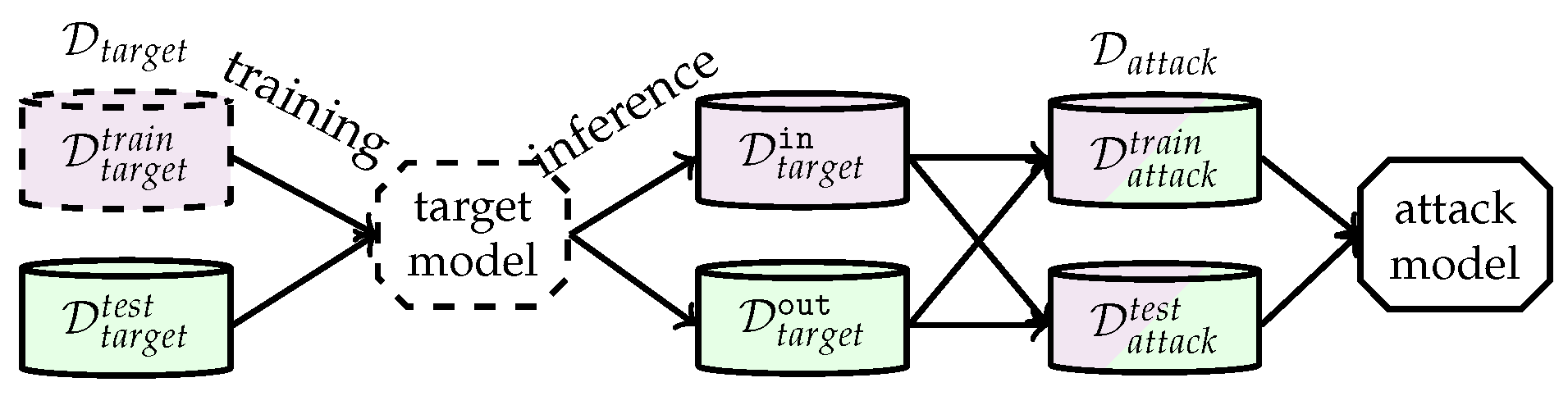

2.2. Membership Inference

2.3. Hierarchical Text Classification

2.4. Embeddings

3. Related Work

4. Quantifying Utility and Privacy in HTC

4.1. HTC Model Architectures

4.2. Utility Metrics

4.3. Privacy Metrics and Bounds

5. Reference Datasets

6. Experimental Setup

7. Evaluation

7.1. Empirical Privacy and Utility

7.2. Drivers for Attack Performance

7.2.1. HTC-Specific Attack Model Features

7.2.2. Reduced Target Model Generalization

8. Discussion

9. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ANN | Artificial Neural Network |
| AUC | Area under the ROC Curve |
| BERT | Bidirectional Encoder Representations from Transformers |
| BoW | Bag of Words |
| CNN | Convolutional Neural Network |
| DAG | Directed Acyclic Graph |
| DP | Differential Privacy |
| FPR | False Positive Rate |
| HTC | Hierarchical Text Classification |
| LCA | Lowest Common Ancestor |
| LCL | Local Classifier per Level |
| LCPN | Local Classifier per Parent Node |
| LCN | Local Classifier per Node |
| MI | Membership Inference |
| NLP | Natural Language Processing |
| RCV1 | Reuters Corpus Volume 1 |
| RDP | Rényi Differential Privacy |
| RNN | Recurrent Neural Network |
| ROC | Receiver Operating Characteristic |
| TPR | True Positive Rate |

Appendix A. Additional Figures

References

- Hariri, R.; Fredericks, E.; Bowers, K. Uncertainty in big data analytics: Survey, opportunities, and challenges. J. Big Data 2019, 6, 44.

- Taylor, C. What’s the Big Deal with Unstructured Data? 2013. Wired. Available online: https://www.wired.com/insights/2013/09/whats-the-big-deal-with-unstructured-data/ (accessed on 6 April 2022).

- Mao, Y.; Tian, J.; Han, J.; Ren, X. Hierarchical Text Classification with Reinforced Label Assignment. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Hong Kong, China, 3–7 November 2019; pp. 445–455.

- Qu, B.; Cong, G.; Li, C.; Sun, A.; Chen, H. An evaluation of classification models for question topic categorization. J. Am. Soc. Inf. Sci. Technol. 2012, 63, 889–903.

- Agrawal, R.; Gupta, A.; Prabhu, Y.; Varma, M. Multi-label learning with millions of labels: Recommending advertiser bid phrases for web pages. In Proceedings of the International Conference on World Wide Web, Rio de Janeiro, Brazil, 13–17 May 2013; pp. 13–24.

- Peng, S.; You, R.; Wang, H.; Zhai, C.; Mamitsuka, H.; Zhu, S. DeepMeSH: Deep semantic representation for improving large-scale MeSH indexing. Bioinformatics 2016, 32, i70–i79.

- Shokri, R.; Stronati, M.; Song, C.; Shmatikov, V. Membership Inference Attacks against Machine Learning Models. In Proceedings of the IEEE Symposium on Security and Privacy, San Jose, CA, USA, 22–26 May 2017; pp. 3–18.

- Nasr, M.; Shokri, R.; Houmansadr, A. Comprehensive Privacy Analysis of Deep Learning: Passive and Active White-Box Inference Attacks against Centralized and Federated Learning. In Proceedings of the 2019 IEEE Symposium on Security and Privacy, San Francisco, CA, USA, 19–23 May 2019; pp. 739–753.

- Zhang, C.; Bengio, S.; Hardt, M.; Recht, B.; Vinyals, O. Understanding Deep Learning Requires Rethinking Generalization. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

- Carlini, N.; Liu, C.; Erlingsson, Ú.; Kos, J.; Song, D. The Secret Sharer: Evaluating and Testing Unintended Memorization in Neural Networks. In Proceedings of the USENIX Security Symposium, Santa Clara, CA, USA, 14–16 August 2019; pp. 267–284.

- Dwork, C. Differential Privacy. In Proceedings of the International Colloquium on Automata, Languages and Programming, Venice, Italy, 10–14 July 2006; pp. 1–12.

- Abadi, M.; Chu, A.; Goodfellow, I.; McMahan, H.B.; Mironov, I.; Talwar, K.; Zhang, L. Deep Learning with Differential Privacy. In Proceedings of the ACM SIGSAC Conference on Computer and Communications Security, Vienna, Austria, 24–28 October 2016; pp. 308–318.

- Hayes, J.; Melis, L.; Danezis, G.; De Cristofaro, E. LOGAN: Membership Inference Attacks Against Generative Models. In Proceedings on Privacy Enhancing Technologies; De Gruyter: Berlin, Germany, 2019.

- Bagdasaryan, E.; Poursaeed, O.; Shmatikov, V. Differential Privacy Has Disparate Impact on Model Accuracy. In Proceedings of the Advances in Neural Information Processing Systems 32 (NeurIPS 2019), Vancouver, BC, Canada, 8–14 December 2019.

- Rahman, A.; Rahman, T.; Laganiere, R.; Mohammed, N.; Wang, Y. Membership Inference Attack against Differentially Private Deep Learning Model. Trans. Data Priv. 2018, 11, 61–79.

- Jayaraman, B.; Evans, D. Evaluating Differentially Private Machine Learning in Practice. In Proceedings of the USENIX Security Symposium, Santa Clara, CA, USA, 14–16 August 2019; pp. 1895–1912.

- Bernau, D.; Grassal, P.W.; Robl, J.; Kerschbaum, F. Assessing Differentially Private Deep Learning with Membership Inference. arXiv 2020, arXiv:1912.11328.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is All You Need. In Proceedings of the International Conference on Neural Information Processing Systems, Long Beach, CA, USA, 4–9 December 2017; pp. 6000–6010.

- Dwork, C.; Roth, A. The Algorithmic Foundations of Differential Privacy. In Foundations and Trends in Theoretical Computer Science; Now Publishers: Norwell, MA, USA, 2014.

- Mironov, I. Rényi Differential Privacy. In Proceedings of the Computer Security Foundations Symposium, Santa Barbara, CA, USA, 21–25 August 2017; pp. 263–275.

- van Erven, T.; Harremoës, P. Rényi Divergence and Majorization. In Proceedings of the Symposium on Information Theory, Austin, TX, USA, 13–18 June 2010; pp. 1335–1339.

- Manning, C.; Schütze, H. Foundations of Statistical Natural Language Processing; MIT Press: Cambridge, MA, USA, 1999; Chapter 16: Text Categorization.

- Murphy, G. The Big Book of Concepts; MIT Press: Cambridge, MA, USA, 2004.

- Goyal, P.; Pandey, S.; Jain, K. Deep Learning for Natural Language Processing; Apress: New York, NY, USA, 2018.

- Mikolov, T.; Chen, K.; Corrado, G.; Dean, J. Efficient Estimation of Word Representations in Vector Space. In Proceedings of the International Conference on Learning Representations, Scottsdale, AZ, USA, 2–4 May 2013.

- Pennington, J.; Socher, R.; Manning, C. GloVe: Global Vectors for Word Representation. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Doha, Qatar, 25–29 October 2014; pp. 1532–1543.

- Stein, R.A.; Jaques, P.A.; Valiati, J.F. An Analysis of Hierarchical Text Classification Using Word Embeddings. Inf. Sci. 2019, 471, 216–232.

- Vu, X.S.; Tran, S.N.; Jiang, L. dpUGC: Learn Differentially Private Representation for User Generated Contents. In Proceedings of the International Conference on Computational Linguistics and Intelligent Text Processing, La Rochelle, France, 9 April 2019.

- Fernandes, N.; Dras, M.; McIver, A. Generalised Differential Privacy for Text Document Processing. In Proceedings of the Conference on Principles of Security and Trust, Prague, Czech Republic, 6–11 April 2019; pp. 123–148.

- Weggenmann, B.; Kerschbaum, F. SynTF: Synthetic and Differentially Private Term Frequency Vectors for Privacy-Preserving Text Mining. In Proceedings of the International ACM SIGIR Conference on Research and Development in Information Retrieval, Ann Arbor, MI, USA, 8–12 July 2018; pp. 305–314.

- Misra, V. Black Box Attacks on Transformer Language Models. In Proceedings of the Debugging Machine Learning Models, Workshop during the International Conference on Learning Representations, New Orleans, LA, USA, 6 May 2019.

- Yeom, S.; Giacomelli, I.; Fredrikson, M.; Jha, S. Privacy Risk in Machine Learning: Analyzing the Connection to Overfitting. In Proceedings of the Computer Security Foundations Symposium, Oxford, UK, 9–12 July 2018; pp. 268–282.

- Humphries, T.; Rafuse, M.; Tulloch, L.; Oya, S.; Goldberg, I.; Kerschbaum, F. Differentially Private Learning Does Not Bound Membership Inference. arXiv 2020, arXiv:2010.12112.

- Babbar, R.; Partalas, I.; Gaussier, E.; Amini, M.R. On Flat versus Hierarchical Classification in Large-Scale Taxonomies. In Proceedings of the Advances in Neural Information Processing Systems 26 (NIPS 2013), Lake Tahoe, NV, USA, 5–10 December 2013.

- Joulin, A.; Grave, E.; Bojanowski, P.; Mikolov, T. Bag of Tricks for Efficient Text Classification. In Proceedings of the Conference of the European Chapter of the Association for Computational Linguistics, Valencia, Spain, 3–7 April 2017; pp. 427–431.

- Kowsari, K.; Jafari Meimandi, K.; Heidarysafa, M.; Mendu, S.; Barnes, L.; Brown, D. Text Classification Algorithms: A Survey. Information 2019, 10, 150.

- Kalchbrenner, N.; Grefenstette, E.; Blunsom, P. A Convolutional Neural Network for Modelling Sentences. In Proceedings of the Annual Meeting of the Association for Computational Linguistics, Baltimore, MD, USA, 23–25 June 2014; pp. 655–665.

- Collobert, R.; Weston, J.; Bottou, L.; Karlen, M.; Kavukcuoglu, K.; Kuksa, P. Natural language processing (almost) from scratch. J. Mach. Learn. Res. 2011, 12, 2493–2537.

- Kim, Y. Convolutional Neural Networks for Sentence Classification. In Proceedings of the Conference on Empirical Methods in Natural Language Processing, Doha, Qatar, 25–29 October 2014; pp. 1746–1751.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the Conference of the North American Chapter of the Association for Computational Linguistics, Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

- Wolf, T.; Debut, L.; Sanh, V.; Chaumond, J.; Delangue, C. HuggingFace’s Transformers: State-of-the-Art Natural Language Processing. arXiv 2019, arXiv:1910.03771.

- Silla, C.; Freitas, A. A Survey of Hierarchical Classification across Different Application Domains. Data Min. Knowl. Discov. 2011, 22, 31–72.

- Kosmopoulos, A.; Partalas, I.; Gaussier, E.; Paliouras, G.; Androutsopoulos, I. Evaluation Measures for Hierarchical Classification: A Unified View and Novel Approaches. Data Min. Knowl. Discov. 2015, 29, 820–865.

- Lee, J.; Clifton, C. Differential Identifiability. In Proceedings of the International Conference on Knowledge Discovery and Data Mining, Beijing, China, 12–16 August 2012.

- Bernau, D.; Eibl, G.; Grassal, P.; Keller, H.; Kerschbaum, F. Quantifying identifiability to choose and audit epsilon in differentially private deep learning. Proc. VLDB Endow. 2021, 14, 3335–3347.

- Fawcett, T. An introduction to ROC analysis. Pattern Recognit. Lett. 2006, 27, 861–874.

- Nissim, K.; Raskhodnikova, S.; Smith, A. Smooth Sensitivity and Sampling in Private Data Analysis. In Proceedings of the Symposium on Theory of Computing, San Diego, CA, USA, 11–13 June 2007; pp. 75–84.

- Lewis, D.D.; Yang, Y.; Rose, T.G.; Li, F. RCV1: A New Benchmark Collection for Text Categorization Research. J. Mach. Learn. Res. 2004, 5, 361–397.

- Lehmann, J.; Isele, R.; Jakob, M.; Jentzsch, A.; Kontokostas, D.; Mendes, P.N.; Hellmann, S.; Morsey, M.; van Kleef, P.; Auer, S.; et al. DBpedia—A Large-Scale, Multilingual Knowledge Base Extracted from Wikipedia. Semant. Web 2015, 6, 167–195.

- Yang, Z.; Dai, Z.; Yang, Y.; Carbonell, J.; Salakhutdinov, R.; Le, Q.V. XLNet: Generalized Autoregressive Pretraining for Language Understanding. In Proceedings of the Advances in Neural Information Processing Systems 32 (NeurIPS 2019), Vancouver, BC, Canada, 8–14 December 2019.

- Minaee, S.; Kalchbrenner, N.; Cambria, E.; Nikzad, N.; Chenaghlu, M.; Gao, J. Deep Learning Based Text Classification: A Comprehensive Review. arXiv 2020, arXiv:2004.03705.

- McMahan, H.B.; Andrew, G.; Erlingsson, U.; Chien, S.; Mironov, I.; Papernot, N.; Kairouz, P. A General Approach to Adding Differential Privacy to Iterative Training Procedures. In Proceedings of the Privacy Preserving Machine Learning, Workshop during the Conference on Neural Information Processing Systems, Montréal, QC, Canada, 2–8 December 2018.

- Ezen-Can, A. A Comparison of LSTM and BERT for Small Corpus. arXiv 2020, arXiv:2009.05451.

- Neal, B.; Mittal, S.; Baratin, A.; Tantia, V.; Scicluna, M.; Lacoste-Julien, S.; Mitliagkas, I. A Modern Take on the Bias-Variance Tradeoff in Neural Networks. arXiv 2019, arXiv:1810.08591.

- Papernot, N.; Thakurta, A.; Song, S.; Chien, S.; Erlingsson, Ú. Tempered Sigmoid Activations for Deep Learning with Differential Privacy. arXiv 2020, arXiv:2007.14191.

| Hierarchy Level | Dataset | Classes | Assigned Products |
|---|---|---|---|
| Level 1 | BestBuy | 19 | 51,390 |
| | DBPedia | 9 | 337,739 |
| | Reuters | 4 | 804,427 |
| Level 2 | BestBuy | 164 | 50,837 |
| | DBPedia | 70 | 337,739 |
| | Reuters | 55 | 779,714 |
| Level 3 | BestBuy | 612 | 44,949 |
| | DBPedia | 219 | 337,739 |
| | Reuters | 43 | 406,961 |
| Level 4 | BestBuy | 771 | 26,138 |
| Level 5 | BestBuy | 198 | 5640 |
| Level 6 | BestBuy | 23 | 346 |
| Level 7 | BestBuy | 1 | 1 |

| | | BestBuy | | | Reuters | | | DBPedia | | |
|---|---|---|---|---|---|---|---|---|---|---|
| | | BoW | CNN | Transformer | BoW | CNN | Transformer | BoW | CNN | Transformer |
| learning rate | Orig. | | | | | | | | | |
| | DP | | | | | | | | | |
| batch size | Orig. | 32 | 32 | 32 | 32 | 32 | 32 | 32 | 32 | 32 |
| | DP | 64 | 64 | 64 | 64 | 64 | 32 | 64 | 64 | 32 |
| | DP | | | | | | | | | |
| microbatch size | DP | 1 | 1 | 1 | 1 | 1 | 4 | 1 | 1 | 4 |
| | | 30,253 | 33,731 | 5902 | 2048 | 1091 | 21,597 | 4317 | 6414 | 24,741 |
| Training records | | 41,625 | | | 651,585 | | | 240,942 | | |
| Validation records | | 4626 | | | 72,399 | | | 36,003 | | |
| Test records | | 5139 | | | 80,443 | | | 60,794 | | |
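The DP rows of the table above specify a batch size and a microbatch size. As a hedged illustration of how these two parameters interact, the NumPy sketch below performs one DP-SGD update roughly following Abadi et al. with the microbatching of McMahan et al.: the batch is split into microbatches, each microbatch's averaged gradient is clipped to L2 norm `clip_norm`, the clipped gradients are summed, Gaussian noise with standard deviation `noise_multiplier * clip_norm` is added, and the result is divided by the number of microbatches. A microbatch size of 1 therefore corresponds to per-example clipping. The toy logistic-regression gradient, the function names, and the values of `lr`, `clip_norm`, and `noise_multiplier` are assumptions for illustration, not the paper's implementation or settings.

```python
import numpy as np


def per_example_grads(w, X, y):
    """Per-example gradients of the logistic loss (toy model, assumption)."""
    p = 1.0 / (1.0 + np.exp(-X @ w))          # predicted probabilities, shape (n,)
    return (p - y)[:, None] * X                # shape (n, d)


def dp_sgd_step(w, X, y, lr, clip_norm, noise_multiplier, microbatch_size, rng):
    """One DP-SGD update with microbatch-level clipping and Gaussian noise."""
    n = X.shape[0]
    assert n % microbatch_size == 0, "batch size must be divisible by microbatch size"
    num_microbatches = n // microbatch_size
    grads = per_example_grads(w, X, y)
    # Average gradients within each microbatch: shape (num_microbatches, d).
    mb_grads = grads.reshape(num_microbatches, microbatch_size, -1).mean(axis=1)
    # Scale each microbatch gradient down so its L2 norm is at most clip_norm.
    norms = np.linalg.norm(mb_grads, axis=1, keepdims=True)
    clipped = mb_grads * np.minimum(1.0, clip_norm / np.maximum(norms, 1e-12))
    # The sum has sensitivity clip_norm, so the noise scale is calibrated to it.
    noise = rng.normal(0.0, noise_multiplier * clip_norm, size=w.shape)
    noisy_grad = (clipped.sum(axis=0) + noise) / num_microbatches
    return w - lr * noisy_grad


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.normal(size=(64, 5))
    y = (X[:, 0] > 0).astype(float)
    w = np.zeros(5)
    # Batch size 64 with microbatch size 1, as in most DP columns of the table.
    for _ in range(100):
        w = dp_sgd_step(w, X, y, lr=0.1, clip_norm=1.0,
                        noise_multiplier=1.1, microbatch_size=1, rng=rng)
    print(w)
```

Larger microbatches (for example 4, as listed for the Transformer models) reduce the number of clipping operations per batch, but the noise is then averaged over fewer microbatches, so each update is relatively noisier.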

| | | | | | |
|---|---|---|---|---|---|
| | 14 epochs | 50 epochs | 100 epochs | 30 epochs | 30 epochs |
| Loss | | | | | |
| Loss | | | | | |
| Gap | | | | | |
| Loss Ratio | 160 | 187 | | | |
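The table above compares losses, a gap, and a loss ratio, presumably in support of Section 7.2.2 on reduced target model generalization. Assuming that the gap is the mean test loss minus the mean training loss and that the loss ratio is test loss over training loss (the exact definitions are not recoverable from the table), the sketch below computes both and evaluates a simple loss-threshold MI attack in the spirit of Yeom et al., where a low loss is taken as evidence of membership. The attack AUC is the normalized Mann-Whitney statistic: the probability that a random member has a lower loss than a random non-member, with ties counted as one half. The function names and the toy losses are hypothetical.

```python
from statistics import mean


def generalization_gap(train_losses, test_losses):
    """Mean test loss minus mean training loss (assumed definition)."""
    return mean(test_losses) - mean(train_losses)


def loss_ratio(train_losses, test_losses):
    """Mean test loss divided by mean training loss (assumed definition)."""
    return mean(test_losses) / mean(train_losses)


def loss_threshold_auc(member_losses, nonmember_losses):
    """AUC of the attack that predicts 'member' when the loss is low.

    Equals P(member loss < non-member loss) + 0.5 * P(tie), i.e. the
    Mann-Whitney U statistic divided by the number of pairs.
    """
    wins = 0.0
    for m in member_losses:
        for n in nonmember_losses:
            if m < n:
                wins += 1.0
            elif m == n:
                wins += 0.5
    return wins / (len(member_losses) * len(nonmember_losses))


def attack_at_threshold(member_losses, nonmember_losses, threshold):
    """TPR and FPR when every record with loss <= threshold is flagged as a member."""
    tpr = sum(loss <= threshold for loss in member_losses) / len(member_losses)
    fpr = sum(loss <= threshold for loss in nonmember_losses) / len(nonmember_losses)
    return tpr, fpr


if __name__ == "__main__":
    train = [0.05, 0.10, 0.20, 0.90]   # toy losses of training records (members)
    test = [0.15, 0.60, 0.80, 1.20]    # toy losses of held-out records (non-members)
    print(generalization_gap(train, test), loss_ratio(train, test))
    print(loss_threshold_auc(train, test), attack_at_threshold(train, test, 0.2))
```

In practice the members would be training records of the target model and the non-members held-out records; an AUC near 0.5 means the attack does no better than random guessing, and a larger gap between training and test losses typically pushes it upward.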

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wunderlich, D.; Bernau, D.; Aldà, F.; Parra-Arnau, J.; Strufe, T. On the Privacy–Utility Trade-Off in Differentially Private Hierarchical Text Classification. Appl. Sci. 2022, 12, 11177. https://doi.org/10.3390/app122111177
