Abstract

This paper first studies the generalization ability of the convolutional layer as a feature mapper (CFM) for extracting image features and the classification ability of the multilayer perception (MLP) in a CNN. Then, a novel generalized hybrid probability convolutional neural network (GHP-CNN) is proposed to solve abstract feature classification with an unknown distribution form. To measure the generalization ability of the CFM, a new index is defined and the positive correlation between it and the CFM is researched. Generally, a fully trained CFM can extract features that are beneficial to classification, regardless of whether the data participate in training the CFM. In the CNN, the fully connected layer in the MLP is not always optimal, and the extracted abstract feature has an unknown distribution. Thus, an improved classifier called the structure-optimized probabilistic neural network (SOPNN) is used for abstract feature classification in the GHP-CNN. In the SOPNN, the separability information is not lost in the normalization process, and the final classification surface is close to the optimal classification surface under the Bayesian criterion. The proposed GHP-CNN utilizes the generalization ability of the CFM and the classification ability of the SOPNN. Experiments show that the proposed network has better classification ability than the existing hybrid neural networks.

1. Introduction

The effect of pattern classification is mainly determined by classification features and classifiers. For an image classification task, the classification model needs to extract image features that are easy to distinguish, and then design a suitable classifier that matches the features. Traditional artificial feature extraction methods have achieved good results, such as the local binary pattern (LBP) [,], the speeded-up robust features (SURF) [,] and the scale-invariant features (SIFT) [,]. However, these features are single features without any hierarchical structure, sometimes lacking good generalization abilities. In recent years, the convolutional neural network (CNN) has attracted the extensive attention of scholars in many fields [,,,], because CNNs can extract various shallow features and deep features of images.

Based on the MLP training algorithm, LeCun et al. [] designed a convolutional neural network called LeNet-5, which was an early classic convolutional neural network model. It was mainly used for handwritten digit recognition on checks. With a gradual increase in the number of convolutional layers, the CNN’s feature extraction classification abilities are becoming stronger and stronger. Similarly, graph convolution networks (Graph CNNs) have good feature extraction ability [,,], because a Graph CNN is also very effective for non-Euclidean data, and is relatively more complex. In this paper, we only discuss CNNs. Krizhevsky [] proposed the extended deep convolutional neural network AlexNet, which used the ReLU function instead of the saturated nonlinear function tanh. It also used the dropout technique to avoid overfitting in the training process. In 2014, Google proposed a convolutional neural network with more than 20 layers, GoogLeNet [], to resolve the gradient disappearance and two loss functions were designed at different depths of the network. The VGG [] network model proposed by Oxford University adopted a layer-by-layer training method. The trained VGG network showed a good ability to extract image features. The residual network ResNet proposed in [] allowed the original input information to be passed directly to the subsequent network layer by adding connected channels directly. ResNet could train hundreds or even thousands of layers while achieving a good classification accuracy. In general, the above various types of convolutional neural networks have achieved good results in different scenarios, mainly because the convolutional layer of a CNN can extract abstract features with good separability. However, because the distribution of these abstract features is unpredictable, designing a suitable classifier for these features is a problem worthy of further study.

The above CNNs can extract all kinds of image features that are good for image classification, but the MLP classifiers in CNNs sometimes fall into local optimal [,,,,,,,,]. To solve this problem, Niu et al. [] proposed using a pretrained CNN as the feature extractor to extract the hierarchical features of an image, and then using an SVM as the classifier. The beginning of the method mainly focused on the binary classification problem. When applied to multiclass classification problems, it mainly adopted multiple two-classifier voting methods. In a multiple-two-classification problem, if a sample has the highest number of votes in two different categories, the SVM divides the sample into either category, which may lead to classification errors. Duan et al. [] proposed using the pretrained CNN as a feature extractor to extract facial features, and then using an extreme learning machine (ELM) as a classifier. However, it was generally necessary to reset the number of hidden nodes when using ELM classifiers on different dataset. Tien et al. [] used depth features and artificial features to merge into new hybrid features, and then used an SVM as a classifier. The new hybrid features combined the characteristics of deep features and artificial features and had a high separability. Guo et al. [] proposed a multinetwork feature fusion structure that used merged features to classify features. Merged features have more information than a single feature, which is beneficial in classification tasks. Although studies have shown that convolutional neural networks have a good ability to extract image features and have good classification capabilities, training a deep convolutional neural network takes a long time and requires a large quantity of data. When a new dataset needs to be classified, the network needs to be retrained.

The above literature review shows that the convolutional layer as a CFM, which is fully trained by a multicategory source dataset, can extract image features that are beneficial to the classification, but there are no studies on the relationship between the generalization ability of the CFM-extracted features, the number of source dataset and target dataset categories. In this paper, the trained CNN on the multiclass source dataset does not need retraining. It directly extracts image features from new target tasks and then cascades the appropriate classifier. This can not only use the ability of the CNN convolutional layer to extract image features, but also makes use of the advantages of different classifiers.

The main contributions of this paper include the following three points. First, the general rule between the generalization ability of the CNN’s convolutional layer as a CFM, and the number of categories of the source dataset and target dataset are summarized. Then, the interpretability of the generalization ability of the CFM is given. Second, a new GHP-CNN is proposed to solve the problem of a target image classification. The GHP-CNN mainly includes a CFM that extracts the features of the target set and a classifier called the structure-optimized probability neural network (SOPNN). The SOPNN classifier is not restricted by the distribution form of the target feature. The GHP-CNN avoids the problem of MLP classifiers in CNN networks easily falling into local optima and improves the classification ability.

The organizational structure of the rest of this paper is as follows. In Section 2, the generalization ability of the CFM to extract target image features is studied. In Section 3, a structure-optimized probabilistic neural network classifier is designed. In Section 4, the GHP-CNN is proposed, and its principle and characteristics are analyzed. Section 6 presents the analytical and experimental results. The last section summarizes the conclusions of the study. Some important symbols used in this article are shown in Table 1.

Table 1.

Important notations and variables used in this paper.

2. Research on the Generalization Ability of the CNN Feature Mapper (CFM) to Extract Image Features

In this paper, the dataset for training the CNN is called the source dataset, and the dataset for testing the CFM generalization ability is called the target dataset. The number of sample categories in is and the number of sample categories in is . In general, the sample categories in the source dataset and the target dataset are not the same. This paper comprehensively studies the relationship between category reduction, unknown category expansion and the generalization ability of CNNs to extract target image features. In this paper, the category reduction refers to the source dataset containing the target dataset (). An unknown category extension means that there is no sample that is common to both the source dataset and the target dataset (). The network used in this section was VGG-16 [] (stride =1, padding = 1, MaxPooling), and the dataset used was CIFAR-100 [].

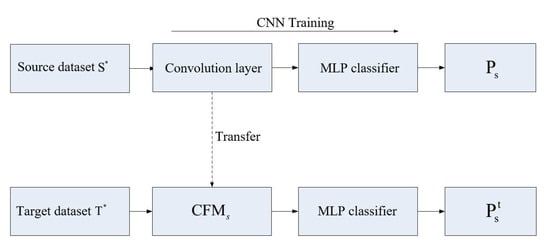

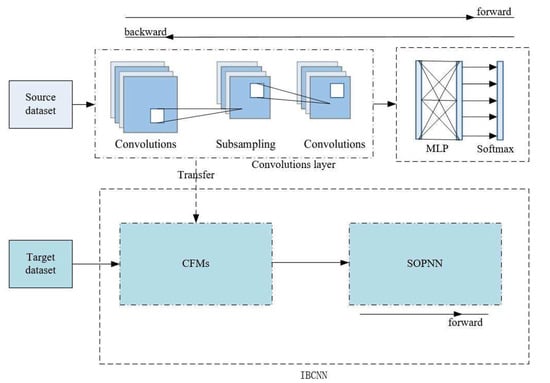

The convolution neural network trained on the source dataset can be simply divided into two parts: the convolutional layer used to extract the feature and the MLP layer as the classifier. The convolutional network is fully trained on the source dataset until it converged, and the convolutional layer migrates as the CFM of the target set. As shown in Figure 1, after the source dataset is trained by the convolutional network and the MLP layer, the corresponding classification accuracy is obtained, the feature mapper corresponding to the source dataset of the s class is , and the target dataset of the t class is passed through the . The features are extracted, and then classification training is performed with the MLP classifier, and the corresponding accuracy is obtained

Figure 1.

The experimental structure diagram of generalization ability of feature mapper.

For the different affiliations between the source dataset and the target dataset , and to make the experiment statistically valid, when training multiple , s different categories of the source dataset and t categories of the target dataset were selected, where each s and t category samples satisfied the uniform distribution in the CIFAR-100 dataset. Multiple source datasets were selected, where . When , multiple target datasets were selected, where .

For each t-class target dataset, there are an average classification accuracy and a classification accuracy of the s-class source dataset corresponding to the . Comparisons between the magnitudes of and show the generalization ability of the in the t-class target dataset.

The size of can measure the strength of the ’s generalization ability. That is, the greater the value of is, the stronger the generalization ability of the . When , the can extract features with better separability for the t-class targets and can obtain a higher accuracy than under subsequent classifiers. That is, a trained by the s-class source dataset has a better generalization ability for a t-class target dataset . When , the fails to extract features with better separability in the t-class target dataset, which results in a lower accuracy than the original under subsequent classifiers. The corresponding classification accuracy can be obtained by changing the membership relationship between and , and the generalization ability of the under different conditions can be further reflected by increasing or decreasing the magnitude of the classification accuracy compared with the original accuracy.

2.1. Analysis of the Generalization Ability of the Feature Mapper to the Target Set after Category Reduction ()

When , the trained on multiclass source dataset extracts the features of target dataset and trains the classification by the MLP classifier. In the CIFAR-100 dataset, we selected the source dataset with the number of categories for s () to be fully trained with the VGG-16 algorithm, we obtained different original precisions and the corresponding 15 s, and then randomly extracted the target dataset with the number of categories t () from the source dataset . The features of these target dataset were extracted by the , and then the training classification was performed by the MLP classifier. To make the results more universal, the target dataset of each category are randomly extracted eight times. The classification accuracy was counted each time, and the final result was the average value of the multiple classification results, where t represented the number of categories in the target dataset , and s represented the number of categories in the source dataset .

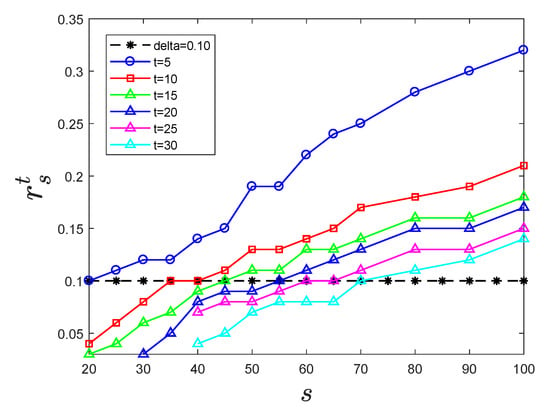

As shown in Figure 2, it can be seen that for the determined t categories from target dataset , the value of was positively correlated with s: as the corresponding s of the increased, increased monotonously. That is, for a certain target dataset with t categories, the larger the value of s was, the better the generalization ability of the trained by the source dataset . For a trained on the determined s source dataset , the value of was negatively correlated with and with the number of t categories in the target dataset; decreased monotonously as t increased. That is, for a that determined the number of categories, a target dataset with a smaller number of t had a better the generalization ability than a target dataset with larger values of t. When was a certain value, that is, after the feature was extracted under the for t different categories, and the magnitude of the classification promotion was the same, the number of for different values of t corresponding to s was different.

Figure 2.

The accuracy increase of different t unknown subclasses compared with the original accuracy.

2.2. Analysis of the Generalization Ability of the Feature Mapper to Unknown Target Categories ()

When , the trained on a multiclass source dataset extracts the features of target dataset and the features are used for training the MLP classifier. In the CIFAR-100 dataset, 90 categories were randomly selected as a candidate source dataset, and the remaining 10 categories were used as a candidate target dataset. The sample sets with number of categories s () were selected from the 90 candidate source dataset to be fully trained by VGG-16, and the relatively best classification results and corresponding 14 were obtained and randomly selected from the 10 candidate dataset. The unknown target dataset with a number of categories t () was selected, and the features of the randomly selected unknown target dataset were extracted by the CFM and then trained by the MLP classifier. To make the results more universal, each number of unknown sample subsets was extracted three times, the classification results were counted each time, and the final result was the average of three results.

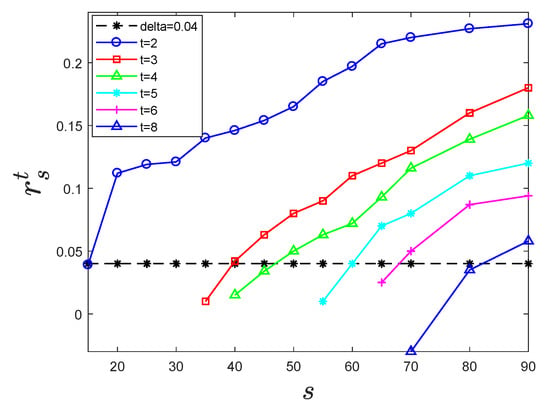

As shown in Figure 3, for the determined t category target dataset , the value of was positively correlated with s; as the corresponding s of the increased, increased monotonously. That is, for a certain t category target dataset, the larger s was, the better the generalization ability of the trained by the source dataset . For the trained from the determined s category source dataset , the value of was negatively correlated with the number of t categories in the target dataset ; as t increased, decreased monotonously. That is, for a that determined the number of categories, the generalization ability of the target dataset with a smaller t was better. When was a certain value, that is, after the feature was extracted under the for different t categories from the target dataset, and the magnitude of the classification promotion was the same, then the number of for t categories corresponding to s categories was different.

Figure 3.

The accuracy increase of different t unknown subclasses compared with the original accuracy.

2.3. Analysis of the CFM Generalization Ability

Through the above experiments, which were from different angles, the generalization ability of CFM was analyzed in a large number of ways. From the analysis, the generalization ability of a trained CNN as a CFM was related to the number of categories in the source dataset(t). The more categories in the source dataset were trained by the CFM, the better the generalization ability of the CFM, and the closer it was to global optimum characteristics.

In the above experiments, the features extracted by the CFM were input to the MLP for intensive training, which could be regarded as a fine-tuning of the MLP classifier after parameter migration. Although the network after fine-tuning the MLP classifier also had a better classification accuracy, it might also fall into a local optimum, and the internal function mapping relationship would be difficult to explain. Since the image features extracted by are generally abstract, the feature distribution is unknown. The classifier based on the Bayesian probability density is not restricted by the distribution of target features, and the final classification surface is close to the optimal classification surface under the Bayesian criterion. We design an optimized Bayesian classifier in the next section.

3. SOPNN Classifier in the GHP-CNN

3.1. The Overall Structure of the SOPNN Classifier

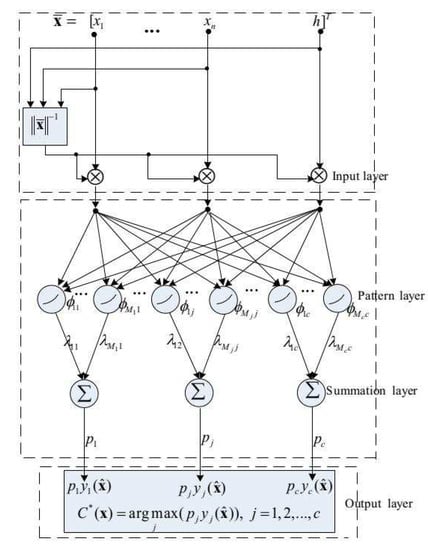

As shown in Figure 4, the SOPNN classifier consists of four layers. The first layer is the input layer for receiving the input feature vectors. A traditional PNN adopts a unit hypersphere normalization for the pattern vectors and input samples, which ensures that the distance between different vectors in a network can be expressed in a simple inner product form. That is, the squared distance between and can be expressed as if and are normalized. Note that such a simple inner form can be easily realized with a neuron structure. However, with a hypersphere normalization, all sample points that are collinear with the sphere center overlap. To avoid the separability problem in the hypersphere normalization process, the SOPNN used the unit hyperspherical crown normalization from reference [], where and are n-dimensional features of the target image extracted by the CFM. h is the additional dimension.

Figure 4.

The overall structure of the SOPNN classifier.

The second layer is the pattern layer, and the pattern nodes in this layer receives the vector from the input layer. Let a pattern node be the mth center in the jth class (). Its output can be given by

where denotes the kernel width, denotes the number of pattern nodes in the jth class and the total number of pattern nodes is M. That is, .

The third layer is the summation layer, which includes c neurons and one neuron per class. Each neuron calculates a conditional probability density function for each class using the following weighted summation form.

where denotes the prior distribution information of the training samples of the mth center in the jth class and satisfies .

The fourth layer is the output layer, which makes decisions according to the following equation.

where c is the class number and is the predicted class of . denotes the prior probability of the jth class. Obviously, all the parameters have an interpretable physical meaning in our classifier.

3.2. The Hidden Parameter Optimization in the SOPNN Using the EM Algorithm

The EM algorithm solves the parameter estimation problem using iterative methods in various probability models. Each iteration consists of two steps, called the step and the step. The step uses the existing estimated parameters and the observed data to calculate the expectation of the hidden parameters. The step uses the expectation obtained in the step and maximum likelihood estimation to estimate the hidden parameter.

Let a set X that is used for training the SOPNN contain L samples from c classes, and let the jth class contain training samples. That is, . With a hyperspherical crown mapping, X can be projected as . According to the independence of the sample, the corresponding log-likelihood function can be obtained:

Using the EM algorithm to solve for the maximum value of the likelihood function, the following iterative formulas can be obtained:

If the initial pattern node and kernel width are known, we can obtain the corresponding parameter value in Equation (4) by using the above iterative formulas. The termination criterion of the EM algorithm is that the absolute value of the difference between the hidden parameter of the th order and the hidden parameter of the th order is less than a given positive , where .

There are three differences between the used SOPNN classifier in this paper and the traditional probability neural network (PNN) classifier. First, the hyperspherical crown normalization method in the SOPNN is a map without separable information loss, and the hypersphere normalization in a traditional PNN is a map with separable information loss. The conclusion that the hyperspherical crown normalization is superior to the traditional hypersphere normalization in terms of separability was proven in []. Second, when the training set is large, the number of pattern nodes in the traditional PNN is equal to the number of training samples, which leads to a complex network structure. In this paper, the kernel coverage method based on the potential function in [] is used to adaptively generate pattern nodes, which makes the number of pattern nodes far fewer than the number of training samples. This ensures that the network structure of the SOPNN is simpler than that of the traditional PNN. Third, the SOPNN assigns a local intraclass weight coefficient to each pattern node, which reflects the prior distribution information of the local region of the pattern node. Therefore, the probability density estimation in the SOPNN is more accurate than in the traditional PNN, and its classification surface can better approximate the optimal classification surface under the Bayesian criterion. The hidden parameters and in the SOPNN can be optimized by using the expectation maximization algorithm.

4. GHP-CNN Neural Network

Structure and Characteristics of the GHP-CNN

The network structure of the GHP-CNN in this paper is shown in Figure 5. The GHP-CNN mainly consists of three steps in the training process. First, the CNN is adequately trained with the source data. The source data information is propagated forward and backward until the CNN converges. Second, the parameters of the convolutional layer can be obtained in the first step of training, and the convolutional layer with fixed parameters is transferred as a separate to extract the image features of the training samples in the target set. Third, the SOPNN is trained on the target dataset. The image features of the target training set are input into the SOPNN for training, and a well-trained SOPNN classifier can be obtained. SOPNN training only requires forward propagation once and does not require backpropagation. After completing the above three steps, we obtain the trained GHP-CNN network. If the test image in the target dataset is input directly into the CFM, then the SOPNN classifier in the GHP-CNN can be used for target classification. The proposed GHP-CNN has the following three characteristics.

Figure 5.

The network structure of GHP-CNN.

First, the GHP-CNN makes full use of the generalization ability and the parameter transferability of the CFM to extract image features. It only needs to transfer the convolutional layer as a CFM, and we do not need to transfer the entire CNN network.

Second, there is no need to retrain the CNN on the target set, only the SOPNN needs to be trained. The GHP-CNN can still be trained and work well even if the target dataset is small. Since training the SOPNN does not require backpropagation, the efficiency of training the SOPNN on the target set is significantly better than that of retraining the CNN.

Third, the distribution of the features extracted by the CFM is unknown. The SOPNN classifier in the GHP-CNN is based on probability density estimation and it is not limited by the distribution of the extracted features. Moreover, the interface of the SOPNN is close to the optimal classification surface under the Bayesian criterion, and there is no local optimal problem with the MLP classifier in the CNN.

Given the target set and initial network. The main steps of the GHP-CNN learning algorithm can be summarized as follows:

Step 1. According to the relationship between , s and t (as shown in Figure 2 and Figure 3), we can determine the number of categories in the source dataset and train various CFMs with different generality abilities.

Step 2. The CFM trained in the source dataset are transferred to extract the features of the target training set with the determined category number.

Step 3. The extracted features in step 2 are input into the SOPNN and are normalized by a hyperspherical crown normalization mapping. The appropriate parameter h should guarantee that the normalized data have the optimal separability under the Fisher criterion.

Step 4. The initial pattern node set of the SOPNN is generated by the adaptive kernel coverage method []. Because the number of generated pattern nodes is far less than the number of training samples, the SOPNN classifier with the optimized structure is obtained. A potential value is used to measure the distribution density of samples in the sample space, and the sample with the highest potential value is selected as the pattern node each time. Then, the potential value of the selected pattern region is iteratively eliminated, and the remaining samples are reordered according to the potential value.

Step 5. The parameters of the SOPNN are optimized by the expectation maximization method (Equations (7)–(10)), and then the well-trained GHP-CNN is obtained.

Step 6. The extracted features of the target test set are input into the SOPNN classifier in the GHP-CNN for classification.

5. Experiment and Results

5.1. Research on the GHP-CNN’s Classification Capabilities after Category Reduction

Through the analysis of the CFM’s generalization ability in the second part, the more source dataset categories used for training the CFM, the better the generalization ability. In the experiment, we used the CIFAR-100 and Caltech-256 datasets and the VGG and ResNet networks. After fully training VGG-16 with the CIFAR-100 dataset, the average accuracy of the 100-class test was 69.58%, and the fully trained convolutional layer was used as the CFM. Four, six, eight, twelve and sixteen subcategories were randomly selected from the CIFAR-100 data, and the corresponding classification accuracy of the subclasses was obtained after training with VGG-16. The features of these subclasses were extracted by the CFM and trained and tested with hybrid networks such as VGG-16-E, CNN-PNN, CNN-SVM, CNN-ELM and GHP-CNN. VGG-16-E is the result of training the MLP using the CFM in VGG-16. The results are shown in Table 2. After fully training ResNet-152 with Caltech-256, ResNet-152-E was the result of training the MLP using the CFM in ResNet-152. The results are shown in Table 3.

Table 2.

Classification accuracy corresponding to different algorithms after category reduction with the CIFAR-100 dataset.

Table 3.

Classification accuracy corresponding to different algorithms after category reduction with the Caltech-256 dataset.

In Table 2, when , the average classification accuracy of the corresponding 100 classes was determined, but for each different subclass t, the corresponding classification accuracy was different. When the number of source data categories was determined (), the corresponding CFMs could extract different categories of target features () and have significantly improved classification effects in different classifiers. Overall, the CFM trained by a multiclass dataset could extract features with a high separability and had a good generalization ability of feature mapping. When subclasses were reclassified, the results of the comparison algorithm were significantly higher than the original accuracy. Our GHP-CNN method had the highest classification accuracy. In Table 3, when , the average classification accuracy of the corresponding 256 classes was determined. When subclasses were reclassified, the results of the comparison algorithm were significantly higher than the original accuracy. Our GHP-CNN method had the highest classification accuracy.

5.2. Research on the Classification Ability of the GHP-CNN with Unknown Categories

The CFM trained by the multiclass dataset extracted the features of unknown classes and verified the classification performance of the VGG-16-E, the CNN-PNN, the CNN-SVM, the CNN-ELM and the GHP-CNN classifiers. Ninety categories were randomly selected from the CIFAR-100 dataset as a candidate source dataset for training the CFM, and the remaining 10 categories were used as the unknown target dataset. The classification accuracies for the unknown categories are shown in Table 4, when we trained ResNet-152 with Caltech-256. The classification accuracies for the unknown categories are shown in Table 5.

Table 4.

Classification accuracy corresponding to different algorithms after the unknown categories with the CIFAR-100 dataset.

Table 5.

Classification accuracy corresponding to different algorithms after the unknown categories with the Caltech-256 dataset.

In Table 4, the trained from the multiclass source dataset also had a good generalization ability for unknown subclasses. The accuracy of the proposed GHP-CNN was significantly higher than that of the comparison algorithms. In Table 5, the accuracy of the proposed GHP-CNN was significantly higher than that of the comparison algorithms.

5.3. Result Analysis

In the comparison algorithm, the MLP classifier performed intensive training on the mapped subclass features, and the accuracy was higher than the original accuracy. However, because the MLP classifier could easily fall into a local optimum during the training process, the overall performance was not good, and the CNN-PNN, CNN-SVM, CNN-ELM and GHP-CNN classifiers had better results. The GHP-CNN avoided some loss of separability information caused by the hypersphere normalization in the traditional PNN and retained the optimal classification surface under the Bayesian criterion. The classification ability of the GHP-CNN was obviously better than the other algorithms. In addition, the accuracy of the classification of unknown categories did not reach the classification accuracy of subclasses, because the generalization ability of the mapping of unknown subclass features was not as good as the generalization ability of the mapping of subcategory features. The separability of mapping features was not good enough, and the features of different categories were mutually integrated.

5.4. Parameter Analysis

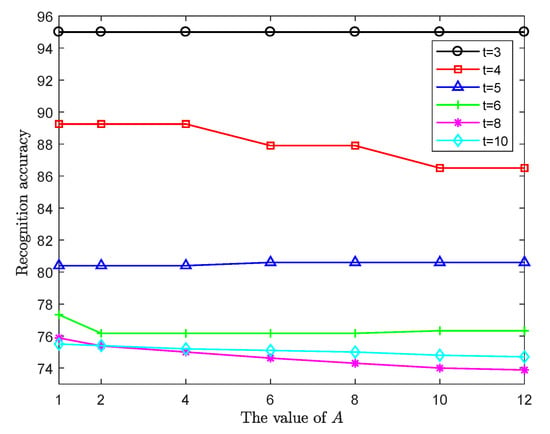

In the experiment of the generalizability to unknown categories, the parameter A of a potential function [] in the GHP-CNN represents the distance weighting factor between the vectors. By adjusting this parameter, the potential value between the two vectors can be changed. When the potential value between two vectors is assumed to be a fixed value, the larger the distance between the two vectors, the smaller A is. When the distance between two vectors is assumed to be a fixed value, the larger A is, the smaller the potential value between the sample vectors. Figure 6 shows that when parameter A changed in a small range, the change in classification accuracy was relatively stable, which showed that the algorithm had a good robustness to parameter A.

Figure 6.

Effect of parameter A on classification accuracy of GHP-CNN.

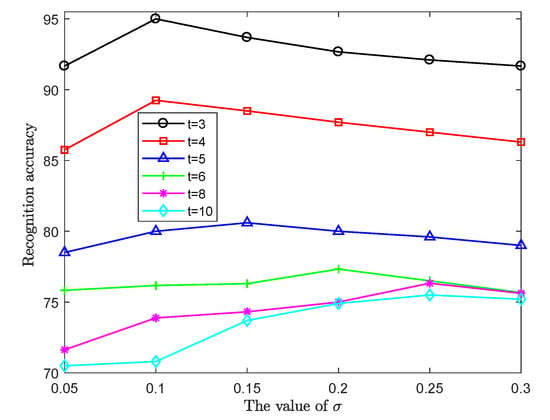

The size of the initial kernel width affects the coverage of pattern nodes of the GHP-CNN, thereby affecting the number of mode layer nodes. If the value of is too small, then the coverage region of the generated pattern node does not contain other sample points, and the PNN classifier is close to the nearest neighbor classifier, which is equivalent to using a training sample to represent a pattern node, and the structure of the network pattern layer cannot be simplified in this case. If the value of is too large, the selected pattern node cannot reflect the real sample distribution and may increase the number of heterogeneous samples in the kernel, which seriously affects the classification accuracy. In the algorithm of this paper, the input sample was a vector after the hyperspherical mapping, so that could be kept in a small range for proper selection. As shown in Figure 7, when was between 0.05 and 0.25, the GHP-CNN algorithm could achieve a relatively good classification accuracy.

Figure 7.

Effect of parameter on classification accuracy of GHP-CNN.

Note that different h values lead to differences in the separability of the normalized samples. We can select h according to the following separability criterion.

where and denote the between-class scatter matrix and total scatter matrix of the normalized training samples, respectively. However, as a new dimension, the value of h should be matched with the other dimensions. Therefore, the initial value of h can be selected as follows.

where . denotes the volume of an n-dimension hypercube for the target sample space, and det () measures the volume of an n-dimension superellipsoid of the sample scatter for the target set. We compute the two volumes differently because the actual distribution shape of the features of the target set is generally irregular.

To ensure fairness in the comparison, all algorithms were tested using the same CPU. The unit of time consumed was in seconds. As shown in Table 6, the computational efficiency of the CNN-ELM and CNN-SVM algorithms was significantly higher than that of the other algorithms. The VGG-16-E algorithm took the most time because the node parameters of the MLP layer were much larger than in the other classifiers. The time required for the GHP-CNN algorithm proposed in this paper was much lower than the VGG-16-E test time.

Table 6.

Comparison of test time for each algorithm.

6. Conclusions

In this paper, the generalization ability of a CFM to extract image features was studied and a novel quantization index for the CFM was defined. The analysis showed that the trained CFM could obtain abstract features with good separability. Since the distribution of the extracted features was unknown and the MLP classifier in the fully connected layer sometimes fell into a local optimum, an improved classifier named the structure-optimized probabilistic neural network (SOPNN) was used for various abstract feature classifications. Utilizing the characteristics of the CFM and the classification ability of the SOPNN, a novel generalized hybrid probability convolutional neural network (GHP-CNN) was proposed. Experiments showed that, for the same abstract features, the proposed GHP-CNN was superior to the existing hybrid neural networks in terms of classification ability.

Author Contributions

Conceptualization, writing—original draft preparation, W.Z. and J.Z.; methodology, H.F. and J.Z.; validation, H.F. and J.Z. and H.W.; formal analysis, H.W.; investigation, Y.X.; writing—review and editing, visualization, W.Z. and H.F.; supervision, project administration, funding acquisition, H.W. and J.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the PHD Research Foundation of Gannan Normal University (grant no. BSJJ202261); the National Natural Science Foundation of China (grant no. 62276146); the Open Foundation of Engineering Research Center of Big Data Application in Private Health Medicine, Fujian Province University (grant no. MKF202201); the Fujian Provincial Natural Science Foundation Projects (grant no. 2022J011171).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. The data are not publicly available due to copyright.

Conflicts of Interest

The authors declare no conflict of interest.

Sample Availability

Not applicable.

References

- Ahonen, T.; Hadid, A.; Pietikainen, M. Face description with local binary patterns: Application to face recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2006, 28, 2037–2041. [Google Scholar] [CrossRef] [PubMed]

- Satpathy, A.; Jiang, X.; Eng, H.L. LBP-based edge-texture features for object recognition. IEEE Trans. Image Process. 2014, 23, 1953–1964. [Google Scholar] [CrossRef] [PubMed]

- Bay, H.; Ess, A.; Tuytelaars, T.; Van Gool, L. Speeded-up robust features (SURF). Comput. Vis. Image Underst. 2008, 110, 346–359. [Google Scholar] [CrossRef]

- Li, J.; Zhang, Y. Learning surf cascade for fast and accurate object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013; pp. 3468–3475. [Google Scholar]

- Dellinger, F.; Delon, J.; Gousseau, Y.; Michel, J.; Tupin, F. SAR-SIFT: A SIFT-like algorithm for SAR images. IEEE Trans. Geosci. Remote Sens. 2015, 53, 453–466. [Google Scholar] [CrossRef]

- Zheng, L.; Yang, Y.; Tian, Q. SIFT meets CNN: A decade survey of instance retrieval. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 1224–1244. [Google Scholar] [CrossRef]

- Song, L.; Liu, J.; Qian, B.; Sun, M.; Yang, K.; Sun, M.; Abbas, S. A deep multi-modal CNN for multi-instance multi-label image classification. IEEE Trans. Image Process. 2018, 27, 6025–6038. [Google Scholar] [CrossRef]

- Ferrari, C.; Lisanti, G.; Berretti, S.; Del Bimbo, A. Investigating nuisances in DCNN-based face recognition. IEEE Trans. Image Process. 2018, 27, 5638–5651. [Google Scholar] [CrossRef]

- Yin, X.; Liu, X. Multi-task convolutional neural network for pose-invariant face recognition. IEEE Trans. Image Process. 2018, 27, 964–975. [Google Scholar] [CrossRef]

- Basu, T.; Menzer, O.; Ward, J.; SenGupta, I. A Novel Implementation of Siamese Type Neural Networks in Predicting Rare Fluctuations in Financial Time Series. Risks 2022, 10, 39. [Google Scholar] [CrossRef]

- LeCun, Y.; Bottou, L.; Bengio, Y.; Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 1998, 86, 2278–2324. [Google Scholar] [CrossRef]

- Rama-Maneiro, E.; Vidal, J.C.; Lama, M. Embedding Graph Convolutional Networks in Recurrent Neural Networks for Predictive Monitoring. arXiv 2021, arXiv:2112.09641. [Google Scholar]

- Nassar, M.; Wang, X.; Tumer, E. Fully Convolutional Graph Neural Networks using Bipartite Graph Convolutions. ICLR 2020. [Google Scholar]

- Hong, Y.; Liu, Y.; Yang, S.; Zhang, K.; Wen, A.; Hu, J. Improving graph convolutional networks based on relation-aware attention for end-to-end relation extraction. IEEE Access 2020, 8, 51315–51323. [Google Scholar] [CrossRef]

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2012; pp. 1097–1105. [Google Scholar]

- Szegedy, C.; Liu, W.; Jia, Y.; Sermanet, P.; Reed, S.; Anguelov, D.; Erhan, D.; Vanhoucke, V.; Rabinovich, A. Going deeper with convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–9. [Google Scholar]

- Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556. [Google Scholar]

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778. [Google Scholar]

- Niu, X.X.; Suen, C.Y. A novel hybrid CNN–SVM classifier for recognizing handwritten digits. Pattern Recognit. 2012, 45, 1318–1325. [Google Scholar] [CrossRef]

- Duan, M.; Li, K.; Yang, C.; Li, K. A hybrid deep learning CNN–ELM for age and gender classification. Neurocomputing 2018, 275, 448–461. [Google Scholar] [CrossRef]

- Fu, M.y.; Liu, F.y.; Yang, Y.; Wang, M.l. Background pixels mutation detection and Hu invariant moments based traffic signs detection on autonomous vehicles. In Proceedings of the 33rd Chinese Control Conference, Nanjing, China, 28–30 July 2014; pp. 670–674. [Google Scholar]

- Pan, S.J.; Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359. [Google Scholar] [CrossRef]

- Blitzer, J.; Crammer, K.; Kulesza, A.; Pereira, F.; Wortman, J. Learning bounds for domain adaptation. In Advances in Neural Information Processing Systems; MIT Press: Cambridge, MA, USA, 2008; pp. 129–136. [Google Scholar]

- Liu, F.; Lin, G.; Shen, C. CRF learning with CNN features for image segmentation. Pattern Recognit. 2015, 48, 2983–2992. [Google Scholar] [CrossRef]

- Xie, G.S.; Zhang, X.Y.; Yan, S.; Liu, C.L. Hybrid CNN and dictionary-based models for scene recognition and domain adaptation. IEEE Trans. Circuits Syst. Video Technol. 2015, 27, 1263–1274. [Google Scholar] [CrossRef]

- Nguyen, D.T.; Pham, T.D.; Baek, N.R.; Park, K.R. Combining deep and handcrafted image features for presentation attack detection in face recognition systems using visible-light camera sensors. Sensors 2018, 18, 699. [Google Scholar] [CrossRef]

- Guo, Z.; Chen, Q.; Wu, G.; Xu, Y.; Shibasaki, R.; Shao, X. Village building identification based on ensemble convolutional neural networks. Sensors 2017, 17, 2487. [Google Scholar] [CrossRef] [PubMed]

- Krizhevsky, A.; Hinton, G. Learning Multiple Layers of Features from Tiny Images. Master’s Thesis, University of Toronto, Toronto, ON, Canada, 2009. [Google Scholar]

- Pei, J.; Fan, H.; Pu, L. Range space super spherical cap discriminant analysis. Neurocomputing 2017, 244, 112–122. [Google Scholar] [CrossRef]

- Wen, H.; Xie, W.; Pei, J.; Guan, L. An incremental learning algorithm for the hybrid RBF-BP network classifier. EURASIP J. Adv. Signal Process. 2016, 2016, 57. [Google Scholar] [CrossRef]

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).