A Neuro-Symbolic Classifier with Optimized Satisfiability for Monitoring Security Alerts in Network Traffic

Abstract

1. Introduction

Related Work

2. Materials and Methods

3. Results Overview

Algorithm 1. The generic hybrid solution

4. Discussions Alongside Numerical Experiments

4.1. Classifier Based on Protocol Type and Status of the Connection

1. The level of satisfiability of the Knowledge Base of the training data.

2. The level of satisfiability of the Knowledge Base of the test data.

3. The training accuracy (computed with the Hamming loss function).

4. The test accuracy (computed the same way on the test samples).

5. The level of satisfiability of a formula . (every connection is not a connection and vice-versa)

6. The level of satisfiability of a formula . (every connection is not a one and vice-versa)

7. The level of satisfiability of a formula . (every connection is not a one and vice-versa)
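The Hamming-loss-based accuracy in the list above can be illustrated with a minimal sketch. It assumes a multi-label encoding in which each connection carries one 0/1 flag per protocol type and per connection status; the function names and the toy data are hypothetical and are not taken from the original implementation.

```python
from typing import Sequence


def hamming_loss(y_true: Sequence[Sequence[int]],
                 y_pred: Sequence[Sequence[int]]) -> float:
    """Fraction of individual label slots where prediction and truth differ."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must contain the same number of samples")
    total = 0
    wrong = 0
    for true_row, pred_row in zip(y_true, y_pred):
        if len(true_row) != len(pred_row):
            raise ValueError("every sample must have the same number of labels")
        for t, p in zip(true_row, pred_row):
            total += 1
            wrong += int(t != p)
    if total == 0:
        raise ValueError("cannot compute a loss on empty input")
    return wrong / total


def hamming_accuracy(y_true: Sequence[Sequence[int]],
                     y_pred: Sequence[Sequence[int]]) -> float:
    """Accuracy derived from the Hamming loss: 1 - loss."""
    return 1.0 - hamming_loss(y_true, y_pred)


# Toy example (assumed label layout: [TCP, ICMP, UDP, SF]).
truth = [[1, 0, 0, 1], [0, 1, 0, 0]]
predicted = [[1, 0, 0, 0], [0, 1, 0, 0]]
print(hamming_accuracy(truth, predicted))  # 0.875 (one wrong slot out of eight)
```

Unlike exact-match accuracy, this measure gives partial credit when a sample has the protocol right and the status wrong, which suits a classifier that predicts both at once.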

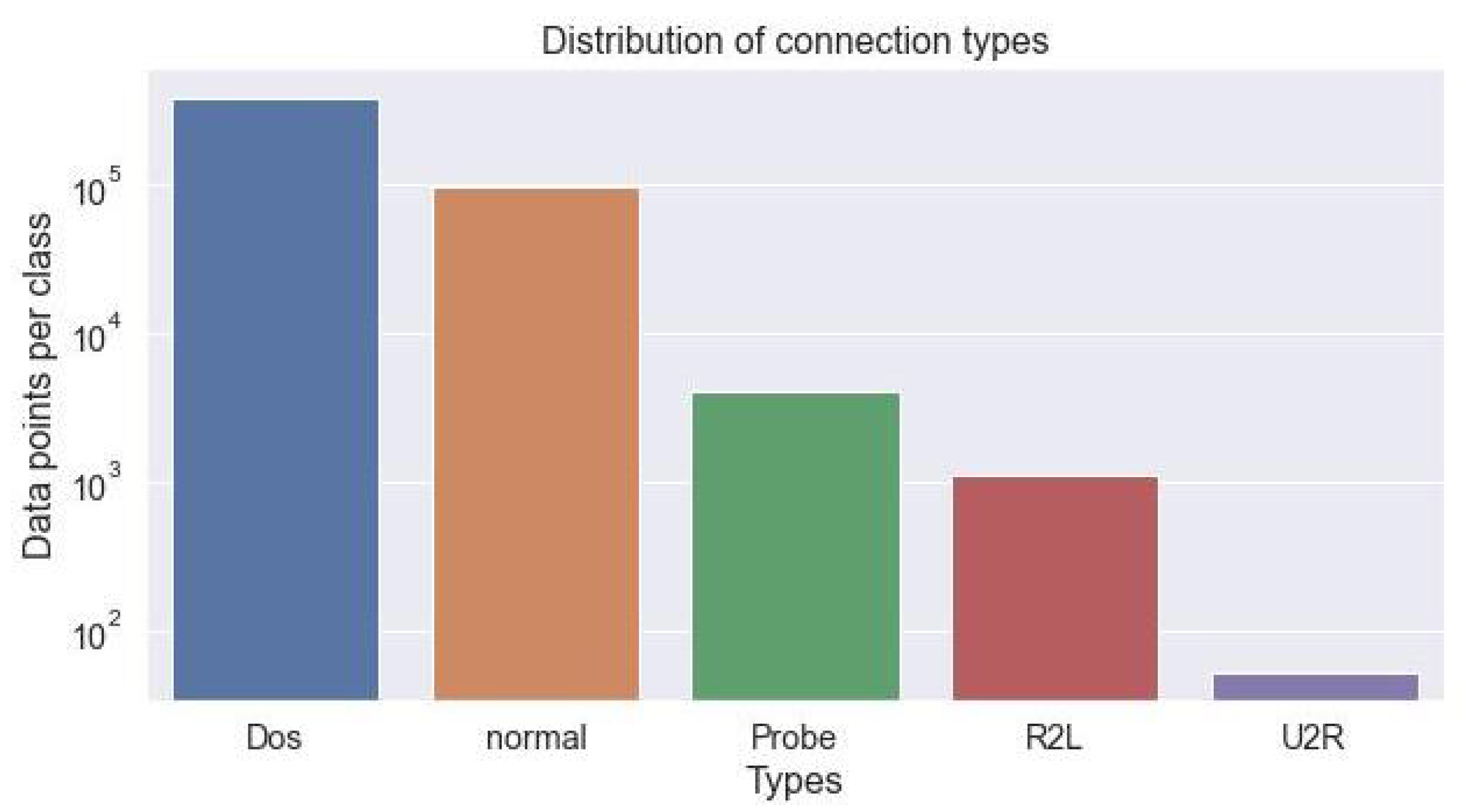

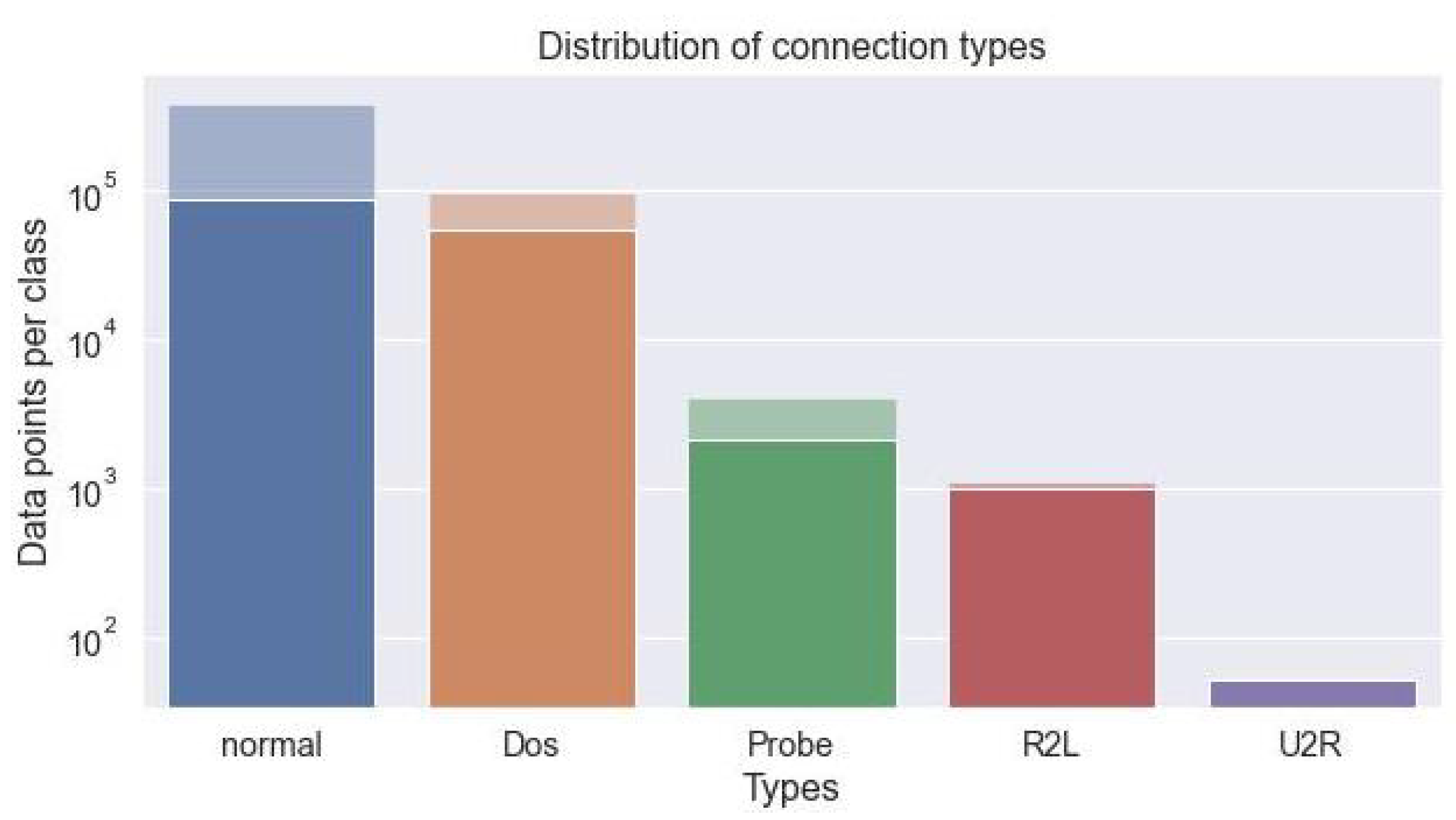

4.2. LTN-Based Intrusion Detection in Network Traffic

- All the non-attacks should have label normal;

- All the DOS attacks should have label DOS;

- All the probe attacks should have label probe;

- All the R2L attacks should have label R2L;

- All the U2R attacks should have label U2R;

- If an example x is labelled as normal, it cannot be labelled as DOS too;

- If an example x is labelled as normal, it cannot be labelled as probe too;

- If an example x is labelled as normal, it cannot be labelled as R2L too;

- and so forth...
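How such axioms turn into a single satisfiability level can be sketched in plain Python. This is an illustration under stated assumptions, not the authors' code and not the Logic Tensor Networks library API: the universal quantifier is approximated by the p-mean-error aggregator with p = 2, conjunction by the product t-norm, and negation by 1 - a, which are common choices in the LTN literature. All function names here are hypothetical.

```python
from typing import Iterable, List, Sequence

CLASSES = ("normal", "DOS", "probe", "R2L", "U2R")


def forall(truths: Iterable[float], p: float = 2.0) -> float:
    """Fuzzy 'for all' as the p-mean error: 1 - (mean((1 - a)^p))^(1/p)."""
    values = list(truths)
    if not values:
        return 1.0  # a universal statement over an empty domain holds vacuously
    mean_error = sum((1.0 - a) ** p for a in values) / len(values)
    return 1.0 - mean_error ** (1.0 / p)


def fuzzy_not(a: float) -> float:
    """Standard fuzzy negation."""
    return 1.0 - a


def fuzzy_and(a: float, b: float) -> float:
    """Product t-norm."""
    return a * b


def knowledge_base_sat(probs: Sequence[Sequence[float]],
                       labels: Sequence[str],
                       p: float = 2.0) -> float:
    """Aggregate truth value of the class axioms and the exclusion axioms.

    probs[i][k] is the classifier's degree of belief that sample i has
    class CLASSES[k]; labels[i] is the ground-truth class of sample i.
    """
    axioms: List[float] = []
    # "All the examples of class c should have label c."
    for k, cls in enumerate(CLASSES):
        members = [row[k] for row, y in zip(probs, labels) if y == cls]
        if members:
            axioms.append(forall(members, p))
    # "If x is labelled a, it cannot be labelled b too", written as
    # not(a(x) and b(x)). With the product t-norm this equals the
    # Reichenbach implication a(x) -> not b(x), i.e. 1 - a*b.
    for i in range(len(CLASSES)):
        for j in range(i + 1, len(CLASSES)):
            axioms.append(
                forall((fuzzy_not(fuzzy_and(row[i], row[j])) for row in probs), p)
            )
    # Aggregating the axioms with the same operator is an assumption.
    return forall(axioms, p)


confident = [[1.0, 0.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0, 0.0]]
hesitant = [[0.6, 0.4, 0.0, 0.0, 0.0], [0.3, 0.7, 0.0, 0.0, 0.0]]
truth = ["normal", "DOS"]
print(knowledge_base_sat(confident, truth))
print(knowledge_base_sat(hesitant, truth))
```

In a trainable setting, the quantity returned by `knowledge_base_sat` would be maximized with respect to the classifier's parameters; the sketch only evaluates it for fixed predictions.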

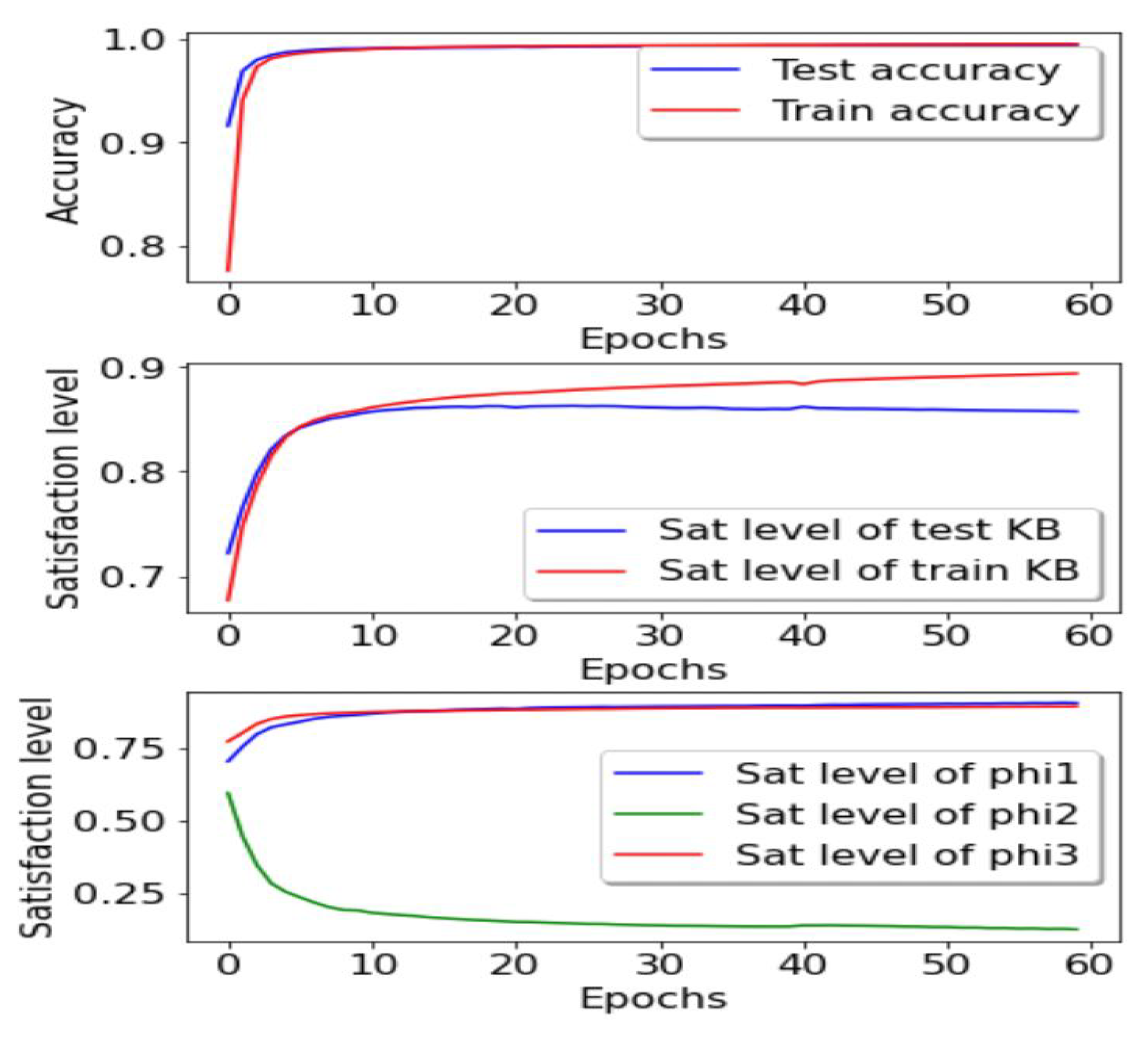

1. The level of satisfiability of the Knowledge Base of the training data.

2. The level of satisfiability of the Knowledge Base of the test data.

3. The training accuracy (calculated as the fraction of the labels that are correctly predicted).

4. The test accuracy (computed the same way on the test samples).

5. The level of satisfiability of a formula we expect to be true. (every connection cannot be a connection and vice-versa)

6. The level of satisfiability of a formula we expect to be false. (every connection is also a one)

7. The level of satisfiability of a formula we expect to be false. (every connection has a connection status)
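The accuracy used in this list, the fraction of labels that are correctly predicted, can be sketched as follows. The example assumes that the predicted label of a sample is the class with the highest output score; the helper names and the toy scores are hypothetical.

```python
from typing import List, Sequence


def predict_labels(scores: Sequence[Sequence[float]],
                   classes: Sequence[str]) -> List[str]:
    """Pick, for every sample, the class with the highest score."""
    predictions = []
    for row in scores:
        if len(row) != len(classes):
            raise ValueError("each score row needs one entry per class")
        best = max(range(len(row)), key=lambda k: row[k])
        predictions.append(classes[best])
    return predictions


def accuracy(y_true: Sequence[str], y_pred: Sequence[str]) -> float:
    """Fraction of samples whose predicted label equals the true label."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


classes = ("normal", "DOS")
scores = [[0.9, 0.1], [0.2, 0.8], [0.6, 0.4]]
truth = ["normal", "DOS", "DOS"]
predicted = predict_labels(scores, classes)  # ['normal', 'DOS', 'normal']
print(accuracy(truth, predicted))  # two of three labels are correct
```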

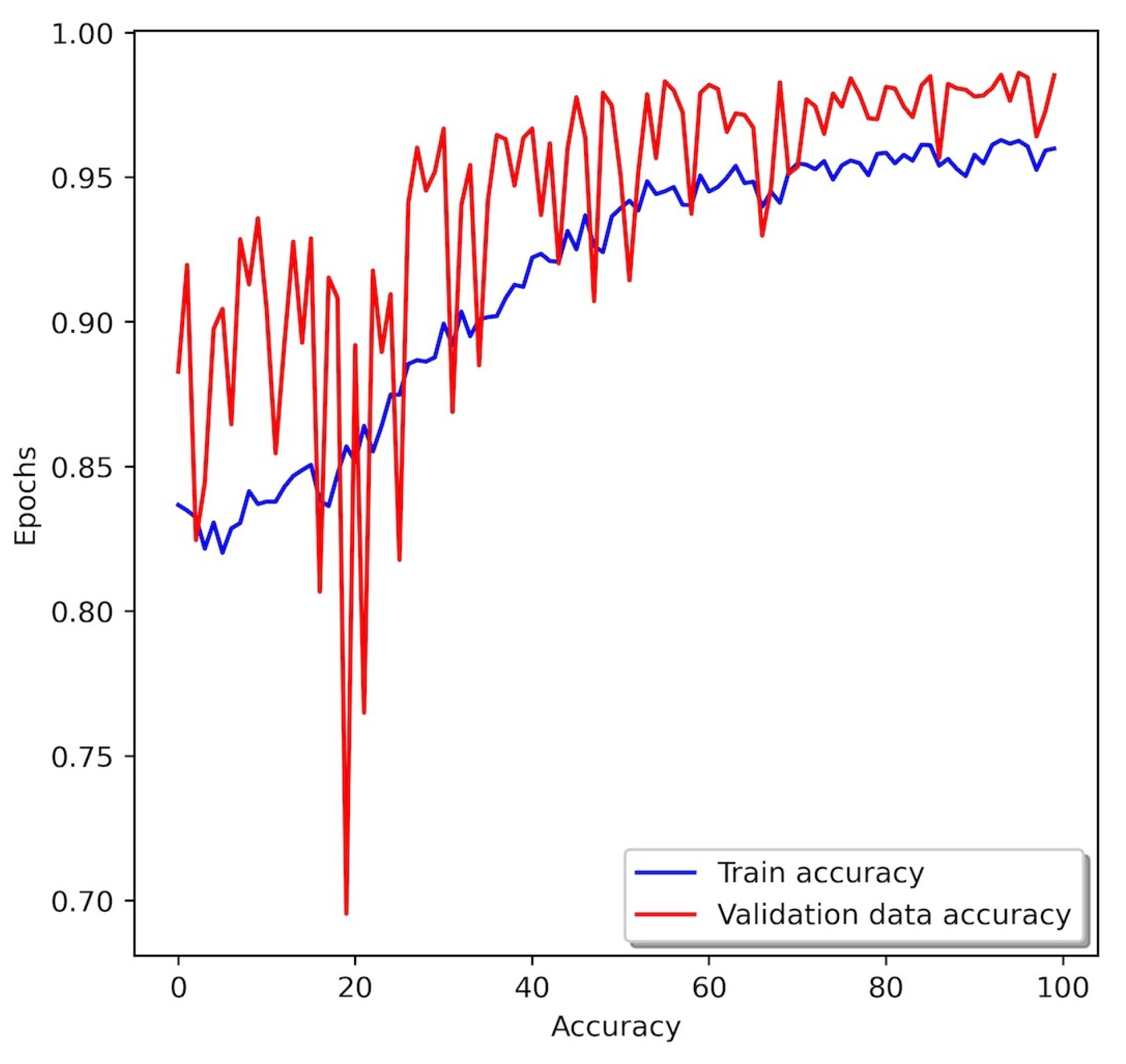

4.3. LTN and DNN on CIC-IDS2017

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| 0. TCP | 1. ICMP | 2. UDP | 3. SF | 4. S1 |
|---|---|---|---|---|
| 5. REJ | 6. S2 | 7. S0 | 8. S3 | 9. RSTO |
| 10. RSTR | 11. RSTOS0 | 12. OTH | 13. SH | |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Onchis, D.; Istin, C.; Hogea, E. A Neuro-Symbolic Classifier with Optimized Satisfiability for Monitoring Security Alerts in Network Traffic. Appl. Sci. 2022, 12, 11502. https://doi.org/10.3390/app122211502
