1. Introduction

With the rapid development of robotics and computer application technology, Simultaneous Localization and Mapping System (SLAM) [

1,

2] technology has been widely used in agriculture, unmanned driving, aerospace, and other fields. As one of the most advanced technologies in the field of mobile robotics, SLAM utilizes a variety of sensors on the robot to conduct localization, navigation, and map construction in the environment. A robot must build a map of its environment to realize autonomous positioning and navigation in an unfamiliar environment. Therefore, for the robot, the construction of an environmental map is the foundation of all follow-up work, and an accurate map can enable the robot to conduct accurate autonomous positioning. With in-depth research, SLAM technology has also been greatly developed, and many SLAM mapping technologies have emerged, such as VSLAM [

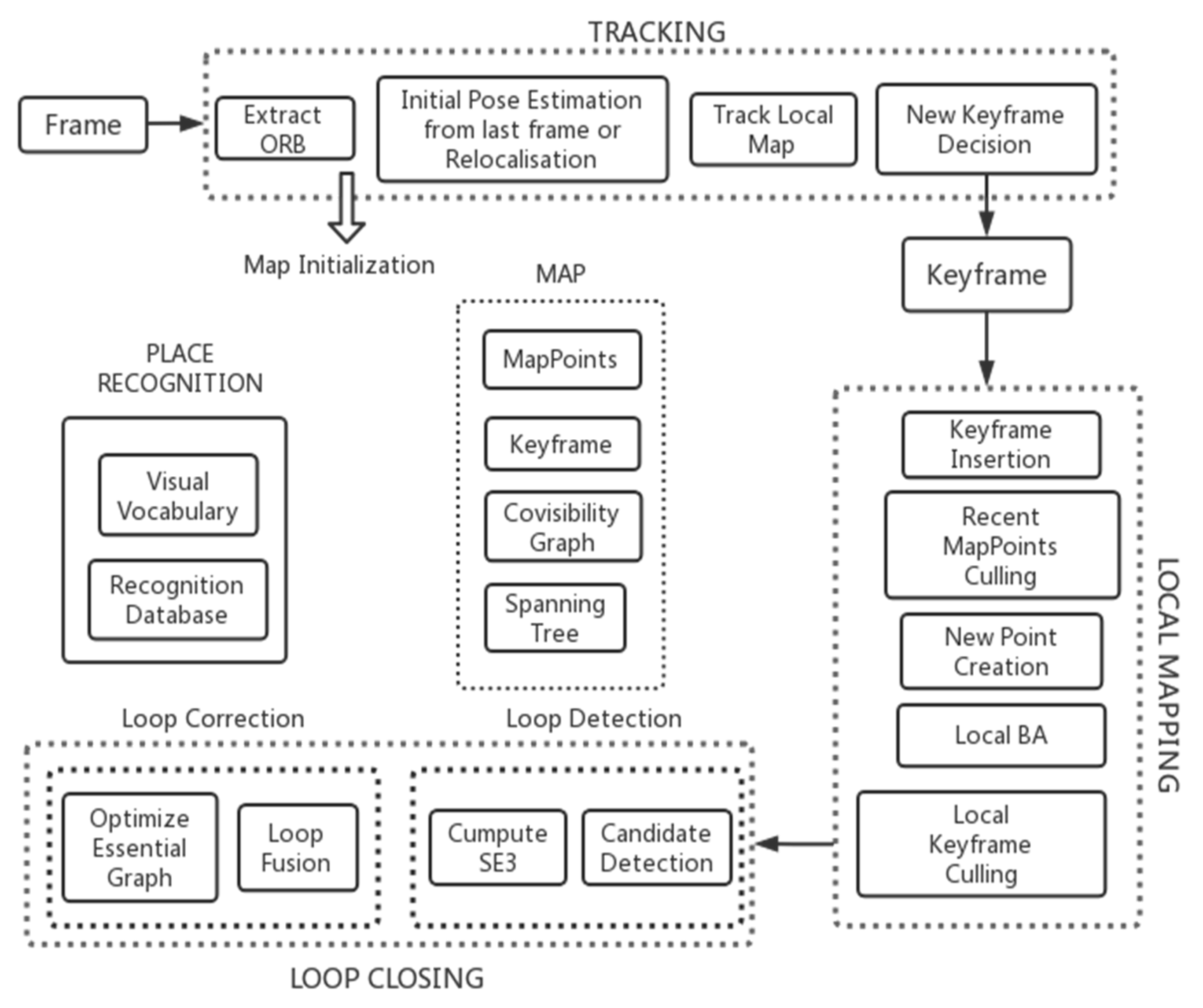

3], ORB-SLAM [

4], ORB-SLAM2 [

5], DynaSLAM [

6], ORB-SLAM3 [

7], RGBD SLAM [

8,

9,

10], DS-SLAM [

11], and so on. However, most of the mapping construction in the SLAM system is based on a static environment. When drawing in a static environment, the ORB-SLAM2 system is one of the classic drawing frames of the SLAM system. The ORB-SLAM2 system has the advantages of a simple structure and fast running speed. Therefore, this research is based on ORB-SLAM2. Most of the existing SLAM systems operate in a static environment, and most of them suffer from low accuracy of map building, large trajectory errors, and poor robustness of the system when building maps in a dynamic environment. As most of our environments are dynamic (i.e., they are static only in specific circumstances), map construction and positional trajectories in dynamic environments are even more important.

In research on dynamic SLAM mapping [12,13], many scholars have studied SLAM systems in dynamic environments in depth, proposed a range of improvements and new methods, and achieved certain results. In practical applications, however, problems such as poor real-time performance of the improved algorithms, low mapping accuracy, and large trajectory errors persist and have not been fundamentally solved.

Linhui Xiao et al. [14] built a Single Shot MultiBox Detector (SSD) object detector based on a convolutional neural network and prior knowledge, and used it to detect moving objects in dynamic environments. They also added a missed-detection compensation algorithm to improve object detection, and then performed map construction and pose estimation in the SLAM system. Linyan Cui et al. [15,16] used a semantic optical flow SLAM method to detect and reject dynamic objects: a segmentation network (SegNet) provides semantic masks, which are used to filter object features and to detect and segment dynamic objects. Feature points that are moving but carry no a priori dynamic label are then identified and eliminated, and the remaining static features are used to build the map. Yuxiang Sun et al. [17] proposed a method that removes dynamic data from RGB-D frames and applied it as a preprocessing step before SLAM, so that dynamic features are eliminated before map construction. Feng Li et al. [18] used an enhanced semantic segmentation network to detect and segment dynamic features in dynamic SLAM environments and thereby reject them. Miao Sheng et al. [19] adopted a static/dynamic image segmentation method to reduce the interference of dynamic objects and improve mapping accuracy: a semantic module segments the image, and a geometric module then detects and eliminates dynamic objects. Shiqiang Yang et al. [20] combined geometric and semantic information to detect dynamic object features, first segmenting dynamic objects with a semantic segmentation network and then rejecting the segmented objects with a geometric constraint method. Wenxin Wu et al. [21] used a low-latency backbone to generate the semantic information required by the SLAM system more quickly, and proposed a new geometric constraint method to filter dynamic points in image frames, in which dynamic features are distinguished by random sample consensus (RANSAC) using depth differences. Wanfang Xie et al. [22] proposed a motion detection and segmentation method to improve localization accuracy, using mask repair to preserve the integrity of segmented objects and the Lucas–Kanade (LK) optical flow method [23] to reject dynamic points. Xudong Long et al. [24] proposed a detection thread based on the Pyramid Scene Parsing Network (PSPNet) that combines the pyramid optical flow method with semantic information; PSPNet serves as the semantic detection thread and annotates dynamic objects, and an error-compensation matrix is designed to reduce processing time and improve real-time performance. Ozgur Hasturk et al. [25] proposed a dense mapping method for dynamic environments that uses 3D reconstruction errors to distinguish static from dynamic objects. To improve the robustness and efficiency of dynamic SLAM mapping, Li Yan et al. [26] combined geometric and semantic information: a residual model with a semantic module performs semantic segmentation on each frame, and a feature point classification method detects potential dynamic objects. Weichen Dai et al. [27] proposed a mapping method with a static map and a dynamic map, in which the dynamic map consists of motion trajectories and points, and the correlation between the maps is used to distinguish static points from dynamic points and thereby eliminate the influence of dynamic points on mapping. ImadE Bouazzaou et al. [28] reduced the effect of errors on mapping and localization in dynamic environments by improving how the sensor acquires images and by choosing sensor specifications suited to the particular environment. Chenyang Zhang et al. [29] proposed PLD-SLAM, which combines deep learning (for semantic segmentation) with a K-Means clustering algorithm that considers depth information (to detect potential dynamic features), addressing the incomplete segmentation or over-segmentation caused by deep learning alone, and uses point and line features to estimate the trajectory. Guihai Li et al. [30] combined a tracking algorithm with object detection, used the epipolar geometric constraint to detect static points, and introduced depth constraints to improve the robustness of the system.

Nevertheless, these methods have unavoidable drawbacks in dynamic environments. When geometric methods are used to reject dynamic points, dynamic points are easily detected incompletely, and slowly moving (low-dynamic) points may not be detected at all, which degrades both mapping accuracy and pose accuracy. When deep learning networks [31,32] are used for mapping, real-time performance suffers because the processing time per frame increases significantly.
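Several of the geometric methods above rely on the epipolar constraint: a static point matched between two frames must lie on the epipolar line induced by the fundamental matrix F. The minimal Python sketch below is a generic illustration of that check, not the exact procedure of any cited work. It assumes F is already known (in practice it would be estimated from matched features, for example with RANSAC), and the 1-pixel threshold and the helper names `epipolar_distance` and `is_dynamic` are hypothetical. The usage example also illustrates the drawbacks of geometric methods: a point that moves along its epipolar line, or moves only slightly, stays below the threshold and goes undetected.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def epipolar_distance(F: Sequence[Sequence[float]], p1: Point, p2: Point) -> float:
    """Pixel distance from p2 (frame 2) to the epipolar line of p1 (frame 1)."""
    x1 = (p1[0], p1[1], 1.0)  # homogeneous coordinates of p1
    # Epipolar line l = F @ x1, i.e. a*u + b*v + c = 0 in frame 2.
    a, b, c = (sum(F[r][k] * x1[k] for k in range(3)) for r in range(3))
    norm = math.hypot(a, b)
    if norm == 0.0:
        raise ValueError("degenerate epipolar line")
    return abs(a * p2[0] + b * p2[1] + c) / norm


def is_dynamic(F: Sequence[Sequence[float]], p1: Point, p2: Point,
               threshold: float = 1.0) -> bool:
    """Flag a match as dynamic if it violates the epipolar constraint by > threshold px."""
    return epipolar_distance(F, p1, p2) > threshold


if __name__ == "__main__":
    # Hypothetical F for a camera translating along x with identity intrinsics
    # and no rotation: epipolar lines are horizontal (v = const).
    F = [[0.0, 0.0, 0.0], [0.0, 0.0, -1.0], [0.0, 1.0, 0.0]]
    print(is_dynamic(F, (10, 20), (10, 23)))    # moved 3 px off the line: True
    print(is_dynamic(F, (10, 20), (15, 20)))    # moved along the line: False
    print(is_dynamic(F, (10, 20), (10, 20.5)))  # slow (low-dynamic) motion: False
```

The second and third matches are genuinely moving yet pass the test, which is why geometric checks alone can miss dynamic points.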

When building maps in dynamic environments, the methods above suffer from inaccurate detection of dynamic feature points and low pose estimation accuracy, and the heavy computational cost of semantic networks slows the algorithms down and causes frame loss. These problems degrade the mapping and pose estimation accuracy of the SLAM system and can cause mapping to fail and positioning and navigation to deviate severely. To suppress the interference of dynamic objects with the SLAM system and to improve the accuracy of SLAM mapping and pose estimation, this paper proposes a new SLAM mapping method for dynamic environments. A dynamic feature detection module is added to the ORB-SLAM2 algorithm. In this module, the YOLOv5 (You Only Look Once) object detection network first detects most objects with dynamic features, and the LK optical flow method then performs a second round of detection and rejection of dynamic feature points in the detected images. By detecting and rejecting dynamic features twice, the proposed algorithm removes most dynamic features and preserves the accuracy of map building and pose estimation. Moreover, the YOLOv5 network is small and computationally light, which keeps the algorithm fast and effectively solves the problem of lost frames. Among SLAM algorithms of the same type, the proposed algorithm runs much faster than DynaSLAM and DS-SLAM as reported in the literature.
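The LK optical flow step rests on brightness constancy: within a small window around a feature point, the spatial gradients $I_x, I_y$ and the temporal difference $I_t$ satisfy $I_x u + I_y v + I_t \approx 0$, and the displacement $(u, v)$ is the least-squares solution of the resulting 2×2 normal equations. The Python sketch below shows this core computation at a single point, assuming grayscale images stored as nested lists. It is a single-level illustration without an image pyramid or iterative refinement; practical systems typically use a pyramidal implementation such as OpenCV's `cv2.calcOpticalFlowPyrLK`, and the function name `lucas_kanade_window` and the window size are hypothetical choices, not taken from the paper.

```python
from typing import List, Tuple

Image = List[List[float]]  # grayscale image indexed as img[row][col] = img[y][x]


def lucas_kanade_window(img1: Image, img2: Image, x: int, y: int,
                        half: int = 2) -> Tuple[float, float]:
    """Estimate the flow (u, v) of pixel (x, y) from img1 to img2 over a
    (2*half+1) x (2*half+1) window: single pyramid level, one iteration."""
    sxx = sxy = syy = sxt = syt = 0.0
    for j in range(y - half, y + half + 1):
        for i in range(x - half, x + half + 1):
            ix = (img1[j][i + 1] - img1[j][i - 1]) / 2.0  # spatial gradient in x
            iy = (img1[j + 1][i] - img1[j - 1][i]) / 2.0  # spatial gradient in y
            it = img2[j][i] - img1[j][i]                  # temporal difference
            sxx += ix * ix
            sxy += ix * iy
            syy += iy * iy
            sxt += ix * it
            syt += iy * it
    det = sxx * syy - sxy * sxy
    if abs(det) < 1e-12:
        raise ValueError("ill-conditioned window (aperture problem)")
    # Solve [[sxx, sxy], [sxy, syy]] @ [u, v] = -[sxt, syt] with Cramer's rule.
    u = (-sxt * syy + sxy * syt) / det
    v = (-sxx * syt + sxy * sxt) / det
    return u, v


if __name__ == "__main__":
    # Synthetic paraboloid whose content shifts by (0.3, -0.2) pixels between frames.
    size, c, du, dv = 11, 5, 0.3, -0.2
    img1 = [[(x - c) ** 2 + (y - c) ** 2 for x in range(size)] for y in range(size)]
    img2 = [[(x - c - du) ** 2 + (y - c - dv) ** 2 for x in range(size)] for y in range(size)]
    print(lucas_kanade_window(img1, img2, 5, 5))  # approximately (0.3, -0.2)
```

The paraboloid is chosen because central differences are exact for it and the symmetric window cancels the second-order term, so the recovered flow matches the true shift; on real images the estimate is only approximate, which is why pyramids and iteration are used in practice.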

The main contributions of this paper are as follows: (1) the deep learning object detection network YOLOv5 is applied to the SLAM mapping system, enabling the SLAM system to perform fast target detection and segmentation; (2) a secondary detection of dynamic targets that combines YOLOv5 with the LK optical flow method is proposed, which effectively removes dynamic feature points within the detected dynamic regions; (3) a new SLAM mapping system is built on the ORB-SLAM2 system. The rest of this paper is organized as follows. Section 2 introduces the principle of the ORB-SLAM2 algorithm, the YOLOv5 network structure, and the function of each module in detail. Section 3 proposes an improvement to the ORB-SLAM2 algorithm and describes the principle of eliminating dynamic feature points in detail. Section 4 validates the proposed algorithm on the TUM dataset and presents the experimental results. Finally, Section 5 summarizes the work and discusses possible future developments.
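The second contribution, secondary detection with YOLOv5 and LK optical flow, can be illustrated with a minimal two-stage filter. The Python sketch below assumes that YOLOv5 has already produced bounding boxes for potentially dynamic objects and that each ORB feature has an LK-tracked displacement. Stage 1 marks features inside any box as candidates; stage 2 rejects a candidate only if its flow deviates from the median flow of the features outside all boxes (taken as the static background) by more than a threshold. The median-background reference, the 2-pixel threshold, and all names here are hypothetical; the paper's exact rejection criterion is not given in this part of the text.

```python
import math
from dataclasses import dataclass
from statistics import median
from typing import List, Sequence, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


@dataclass
class Feature:
    x: float
    y: float
    flow: Tuple[float, float]  # LK-tracked displacement (du, dv) to the next frame


def in_any_box(f: Feature, boxes: Sequence[Box]) -> bool:
    return any(b[0] <= f.x <= b[2] and b[1] <= f.y <= b[3] for b in boxes)


def split_static_dynamic(features: Sequence[Feature], boxes: Sequence[Box],
                         flow_threshold: float = 2.0) -> Tuple[List[Feature], List[Feature]]:
    """Return (static, dynamic) features using a detection stage and a flow stage."""
    if not features:
        return [], []
    # Reference motion: median flow of features outside all boxes (assumed static).
    background = [f for f in features if not in_any_box(f, boxes)] or list(features)
    ref_u = median(f.flow[0] for f in background)
    ref_v = median(f.flow[1] for f in background)
    static: List[Feature] = []
    dynamic: List[Feature] = []
    for f in features:
        # Stage 1: candidate only if inside a detected (potentially dynamic) box.
        # Stage 2: reject only if its flow disagrees with the background motion.
        deviation = math.hypot(f.flow[0] - ref_u, f.flow[1] - ref_v)
        if in_any_box(f, boxes) and deviation > flow_threshold:
            dynamic.append(f)
        else:
            static.append(f)
    return static, dynamic


if __name__ == "__main__":
    feats = [Feature(10, 10, (1.0, 0.0)), Feature(20, 20, (1.2, 0.1)),
             Feature(100, 100, (1.1, 0.0)), Feature(105, 102, (8.0, 3.0))]
    static, dynamic = split_static_dynamic(feats, [(90, 90, 120, 120)])
    print(len(static), len(dynamic))  # 3 1
```

Keeping candidates whose flow matches the background lets static features inside a detected box survive (here the point at (100, 100)), whereas rejecting every feature in the box would discard them.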

4. Method Validation

In this section, the accuracy of the proposed algorithm in dynamic environments is tested on three TUM sequences: fr3_walking_xyz, fr3_walking_halfsphere, and fr3_walking_static. The trajectories estimated by the proposed algorithm are compared with those of the ORB-SLAM2 and DynaSLAM algorithms, and its per-frame processing time is compared with that of DynaSLAM. The strengths and weaknesses of the algorithm in terms of accuracy and real-time performance are then summarized and analyzed.

We use the Relative Pose Error (RPE) and Absolute Trajectory Error (ATE) metrics to evaluate the mapping accuracy of SLAM systems. Visually comparing the constructed maps is intuitive, but such observations can only indicate in general terms whether an algorithm is relatively accurate. To quantify mapping accuracy more precisely, researchers generally use error statistics. In this paper, mapping accuracy is expressed by the Root Mean Squared Error (RMSE), calculated as follows:

$$\mathrm{RMSE}(E_{1:n},\Delta)=\left(\frac{1}{m}\sum_{i=1}^{m}\left\|\operatorname{trans}(E_i)\right\|^{2}\right)^{1/2},\qquad m=n-\Delta$$

where $E_i$ denotes the error at time step $i$, $\operatorname{trans}(E_i)$ is its translational component, $E_{1:n}$ denotes the errors of the camera poses from 1 to $n$, and $\Delta$ denotes the fixed time interval.
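As a simplified illustration of the RMSE of the relative pose error described above, the Python sketch below computes the translational RPE over a fixed interval Δ for trajectories given only as 3D positions. It assumes every pose has identity rotation, so the relative error at each step reduces to the difference between the estimated and ground-truth displacements over Δ frames; full RPE evaluation on SE(3) poses (for example with the TUM benchmark tools or the evo package) also accounts for rotation. The function name `rpe_translation_rmse` is hypothetical.

```python
import math
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def rpe_translation_rmse(gt: Sequence[Vec3], est: Sequence[Vec3], delta: int = 1) -> float:
    """Translational RPE RMSE over a fixed interval delta, positions only.

    Simplifying assumption: every pose has identity rotation, so each relative
    error is the estimated displacement minus the ground-truth displacement.
    """
    if len(gt) != len(est):
        raise ValueError("trajectories must have the same length")
    m = len(gt) - delta  # number of error terms, m = n - delta
    if delta < 1 or m <= 0:
        raise ValueError("delta must be in [1, n - 1]")
    total = 0.0
    for i in range(m):
        d_gt = [gt[i + delta][k] - gt[i][k] for k in range(3)]
        d_est = [est[i + delta][k] - est[i][k] for k in range(3)]
        total += sum((d_est[k] - d_gt[k]) ** 2 for k in range(3))
    return math.sqrt(total / m)


if __name__ == "__main__":
    gt = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 0.0, 0.0)]
    est = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0), (2.0, 1.0, 0.0)]
    print(rpe_translation_rmse(gt, est))  # sqrt(0.5), about 0.7071
```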

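The performance improvement in this paper is calculated with Equation (16):

$$\eta_{\mathrm{RMSE}}=\frac{\mathrm{RMSE}_{\mathrm{ORB}}-\mathrm{RMSE}_{\mathrm{Our}}}{\mathrm{RMSE}_{\mathrm{ORB}}}\times 100\% \tag{16}$$

where $\mathrm{RMSE}_{\mathrm{ORB}}$ represents the RMSE of the ORB-SLAM2 algorithm and $\mathrm{RMSE}_{\mathrm{Our}}$ represents the RMSE of the OurSLAM algorithm. The improvement in SD is calculated in the same way:

$$\eta_{\mathrm{SD}}=\frac{\mathrm{SD}_{\mathrm{ORB}}-\mathrm{SD}_{\mathrm{Our}}}{\mathrm{SD}_{\mathrm{ORB}}}\times 100\%$$

where $\mathrm{SD}_{\mathrm{ORB}}$ represents the SD of the ORB-SLAM2 algorithm and $\mathrm{SD}_{\mathrm{Our}}$ represents the SD of the OurSLAM algorithm.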
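The performance improvement is a relative reduction of an error metric with respect to ORB-SLAM2. The short Python sketch below computes it for either RMSE or SD, assuming the relative-reduction definition $(\text{ORB-SLAM2} - \text{OurSLAM}) / \text{ORB-SLAM2} \times 100\%$. A positive value means OurSLAM has the lower error, so an improvement of 97.8% means the error is reduced to 2.2% of the ORB-SLAM2 value. The function name `improvement` and the example values are hypothetical and are not results from the tables.

```python
def improvement(orb_value: float, our_value: float) -> float:
    """Relative reduction (%) of an error metric (RMSE or SD) versus ORB-SLAM2.

    Positive: OurSLAM has the lower error; negative: it has the higher error.
    """
    if orb_value <= 0:
        raise ValueError("baseline error must be positive")
    return (orb_value - our_value) / orb_value * 100.0


if __name__ == "__main__":
    # Hypothetical values, not taken from Table 1 or Table 2.
    print(improvement(2.0, 0.5))  # 75.0
```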

In the experiments, ATE and RPE values are used to assess the mapping accuracy of the proposed algorithm, and both are summarized by two statistics: RMSE and Standard Deviation (SD). Because RMSE is more sensitive to outliers in the data and makes deviations easier to observe, this paper uses RMSE to describe the robustness of the system. To capture the dispersion of the camera trajectory errors, this paper uses the SD, which describes the stability of the system more clearly. The results are shown in Table 1 and Table 2.
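The relationship between RMSE and SD can be made concrete: for a set of per-frame error magnitudes with mean $\mu$ and population standard deviation $\sigma$, $\mathrm{RMSE}^2=\mu^2+\sigma^2$, so RMSE grows with both the bias and the spread of the errors, and squaring makes it especially sensitive to a few large outliers. The Python sketch below computes the three statistics; the function name `error_stats` and the sample error values are hypothetical.

```python
import math
from typing import Dict, Sequence


def error_stats(errors: Sequence[float]) -> Dict[str, float]:
    """Mean, population standard deviation, and RMSE of per-frame error magnitudes."""
    n = len(errors)
    if n == 0:
        raise ValueError("no errors given")
    mean = sum(errors) / n
    sd = math.sqrt(sum((e - mean) ** 2 for e in errors) / n)
    rmse = math.sqrt(sum(e * e for e in errors) / n)
    return {"mean": mean, "sd": sd, "rmse": rmse}


if __name__ == "__main__":
    steady = error_stats([0.02] * 10)
    with_outlier = error_stats([0.02] * 9 + [0.5])  # one large outlier
    print(steady)
    print(with_outlier)
```

In the example, a single outlier of 0.5 among nine errors of 0.02 raises the RMSE to roughly 0.16 while the mean is only about 0.07, which is why RMSE is a sensitive indicator of robustness.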

Table 1 and Table 2 compare the RMSE and SD obtained by the proposed algorithm and the ORB-SLAM2 algorithm, together with the resulting performance improvement of the proposed algorithm over ORB-SLAM2. As Table 1 shows, for the absolute trajectory error, the proposed algorithm improves on ORB-SLAM2 by 97.8% in RMSE and by 97.8% in SD; that is, its errors are 97.8% lower than those of ORB-SLAM2. As Table 2 shows, the proposed algorithm achieves a maximum improvement of 69.8% in RMSE and a maximum improvement of 98.6% in SD over ORB-SLAM2.

Figure 7 compares the trajectories estimated by ORB-SLAM2, DynaSLAM, DS-SLAM, and OurSLAM on the fr3_walking_xyz sequence, with each algorithm drawn in a different color. In Figure 7a, the dotted line represents the ground-truth trajectory, and the blue, green, red, and purple lines represent the trajectories of ORB-SLAM2, DynaSLAM, DS-SLAM, and OurSLAM, respectively. As Figure 7a shows, the trajectory of ORB-SLAM2 in the dynamic environment does not coincide with the ground truth at all, and Figure 7b,c show that it deviates considerably from the ground truth in every direction. Although DynaSLAM and DS-SLAM perform much better than ORB-SLAM2 in dynamic environments, Figure 7b,c show that at breakpoints in the ground-truth trajectory, i.e., when the camera suddenly changes its viewing angle, both lose many frames and their trajectories deviate substantially. Compared with ORB-SLAM2, DynaSLAM, and DS-SLAM, the proposed OurSLAM algorithm has clear advantages in trajectory estimation: its trajectory fits the ground truth well in all directions, and frame loss is significantly reduced.

Two sequences (fr3_walking_xyz and fr3_walking_halfsphere) are used to verify the proposed algorithm and to compare it with state-of-the-art SLAM algorithms of recent years. Compared with ORB-SLAM2, DynaSLAM, and DS-SLAM, the proposed algorithm performs better when building maps in dynamic environments and meets the needs of dynamic mapping in SLAM systems well. For pose estimation, the Absolute Pose Error (APE) and RPE are commonly used to evaluate an algorithm's strengths and weaknesses.

For the error comparison, the results of testing the algorithms on the fr3_walking_xyz sequence are shown in Figure 8.

As Figure 8 shows, DynaSLAM, DS-SLAM, and OurSLAM all have lower absolute and relative trajectory errors than ORB-SLAM2. Among them, DS-SLAM still has large absolute and relative trajectory errors and cannot meet the accuracy requirements of the SLAM system, while OurSLAM is slightly better than DynaSLAM in both absolute and relative trajectory error.

Figure 9 shows that ORB-SLAM2 essentially fails to track the trajectory correctly. The trajectory error of DynaSLAM ranges from 0.003 to 0.039, which is relatively small. DS-SLAM drifts, so its trajectory error is relatively large, ranging from 0.002 to 0.269. The trajectory error of OurSLAM ranges from 0.002 to 0.043, so its accuracy is almost the same as that of DynaSLAM and higher than that of DS-SLAM. OurSLAM therefore achieves high accuracy in map building and pose estimation, with errors within the tolerance. The trajectory error plots show that the proposed algorithm outperforms ORB-SLAM2 and DS-SLAM in mapping accuracy and pose estimation and performs comparably to DynaSLAM.

The experimental results on the fr3_walking_halfsphere sequence are as follows.

Figure 10 compares the trajectories estimated by ORB-SLAM2, DynaSLAM, DS-SLAM, and OurSLAM on the fr3_walking_halfsphere sequence, with each algorithm drawn in a different color. In Figure 10a, the dotted line represents the ground-truth trajectory, and the blue, green, red, and purple lines represent the trajectories of ORB-SLAM2, DynaSLAM, DS-SLAM, and OurSLAM, respectively. As Figure 10a shows, the trajectory of ORB-SLAM2 in the dynamic environment barely matches the ground truth. The trajectories of DynaSLAM and DS-SLAM match the ground truth much better than ORB-SLAM2 does, but still fall short of the proposed algorithm. Figure 10b shows that the ORB-SLAM2 trajectory differs greatly from the ground truth along each of the x, y, and z axes, so its matching accuracy is very low. The trajectories of DynaSLAM and DS-SLAM roughly follow the ground truth along the x, y, and z axes with reasonable accuracy, but still show errors at the peaks and valleys of the curves. The OurSLAM trajectory agrees with the ground truth along the x, y, and z axes and achieves good matching accuracy. Figure 10c shows that for the yaw, pitch, and roll angles, OurSLAM matches the ground truth best and ORB-SLAM2 worst.

The APE and RPE results on the fr3_walking_halfsphere sequence are shown in Figure 11. The APE of OurSLAM fluctuates between 0.01 and 0.06, whereas that of ORB-SLAM2 exceeds 1.2 at its maximum and that of DynaSLAM exceeds 0.12. The APE fluctuation of DS-SLAM is similar to that of OurSLAM, but its range is 0.00–0.07, with a maximum above 0.07. In terms of RPE, the proposed algorithm is better than the other three: the RPE of OurSLAM fluctuates within 0.01–0.04, compared with 0.00–0.07 for DS-SLAM, 0.00–0.14 for DynaSLAM, and 0.00–0.12 for ORB-SLAM2. The APE and RPE comparison therefore shows a clear advantage for OurSLAM, which demonstrates the effectiveness of the proposed algorithm.

Figure 12 shows the trajectory errors on the fr3_walking_halfsphere sequence, with red segments marking larger errors and blue segments smaller errors. For both APE and RPE, the error trajectories of ORB-SLAM2 and DynaSLAM contain conspicuous red segments, indicating noticeable errors in trajectory estimation. The error trajectory of DS-SLAM contains only a few red segments, so DS-SLAM has smaller errors and better estimates than ORB-SLAM2 and DynaSLAM. The error trajectory of OurSLAM is almost entirely blue with hardly any red, showing that the proposed algorithm has very small trajectory errors and confirming the quality of its map building and trajectory estimation.

Real-time performance is often an important criterion when evaluating a SLAM system for map building. We therefore compared the per-frame processing time of the proposed algorithm with those of ORB-SLAM2 and DynaSLAM; the results for all three algorithms are shown in Table 3. The per-frame processing time of the proposed algorithm differs little from that of ORB-SLAM2 and is significantly lower than the per-frame tracking time of DynaSLAM, so the proposed algorithm has a clear real-time advantage over DynaSLAM for map building in dynamic environments.
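Per-frame processing time, as reported in Table 3, can be measured by timing each call of the frame-processing routine. The Python sketch below assumes a hypothetical `process_frame` callable standing in for one tracking step; the text does not specify how the timings in Table 3 were collected, so this shows only one straightforward way to obtain the mean time per frame and the corresponding frame rate.

```python
import time
from typing import Callable, Iterable, Tuple


def mean_frame_time(process_frame: Callable[[object], object],
                    frames: Iterable[object]) -> Tuple[float, float]:
    """Return (mean seconds per frame, frames per second) for process_frame over frames."""
    durations = []
    for frame in frames:
        start = time.perf_counter()  # monotonic, high-resolution clock
        process_frame(frame)
        durations.append(time.perf_counter() - start)
    if not durations:
        raise ValueError("no frames processed")
    mean = sum(durations) / len(durations)
    return mean, (1.0 / mean if mean > 0 else float("inf"))


if __name__ == "__main__":
    # Hypothetical stand-in for a tracking step that takes about 10 ms per frame.
    mean, fps = mean_frame_time(lambda frame: time.sleep(0.01), range(5))
    print(f"{mean * 1000:.1f} ms/frame, {fps:.1f} FPS")
```

Timing each call separately, rather than the whole run, also makes it possible to inspect the spread of per-frame times, since occasional slow frames are what cause frame loss.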

5. Conclusions

When a SLAM system builds a map of a dynamic environment, dynamic features degrade both the map accuracy and the trajectory estimation accuracy, and may even cause map building to fail, which greatly reduces the accuracy of subsequent positioning and navigation. To address the interference of dynamic targets with SLAM mapping, this paper improves the ORB-SLAM2 system. A target detection and recognition module based on the YOLOv5 network was added to ORB-SLAM2, allowing the improved system not only to detect and recognize targets but also to better detect dynamic feature points in images. In addition, the LK optical flow method was used in the dynamic feature detection module of ORB-SLAM2 for a secondary detection of dynamic objects in images, which increased the accuracy of dynamic feature detection. Mainstream semantic SLAM algorithms such as DynaSLAM and DS-SLAM are effective in dynamic environments, but their semantic segmentation networks require a large amount of computation, which leads to long running times, poor real-time performance, and frequent frame loss, and in turn to lower mapping accuracy. Compared with these algorithms, the proposed YOLOv5-based dynamic SLAM algorithm detects dynamic features quickly enough to run in real time and rarely loses frames, which effectively improves the accuracy of map building and trajectory estimation.

Although the proposed YOLOv5-based dynamic SLAM mapping system can eliminate most dynamic objects in the environment and improve the accuracy of mapping and trajectory estimation, it has some limitations. When a dynamic object remains stationary for a long time, it occludes the static background; once the algorithm detects and rejects the object, the occluded background region is missing from the map and has a large error. Our future work will therefore focus on recovering occluded regions. The occluded region will first be segmented together with the occluding object by a segmentation network and divided into three parts: the occluded background, the occluding object, and the unoccluded background. The occluding object will then be removed and the occluded background predicted from the unoccluded background, in order to recover it.