Building Natural Language Interfaces Using Natural Language Understanding and Generation: A Case Study on Human–Machine Interaction in Agriculture

Abstract

1. Introduction

- How to construct a high-quality human–machine interaction dataset for the agricultural domain: a suitable linguistic framework is needed to translate between the natural language commands entered by the user and the machine commands that the computer system can understand, and an intermediate language framework is needed to structure natural language for storage.

- How to build a natural user interface with generalization capability and extensibility that effectively maps natural language command sequences to computer system call interfaces.

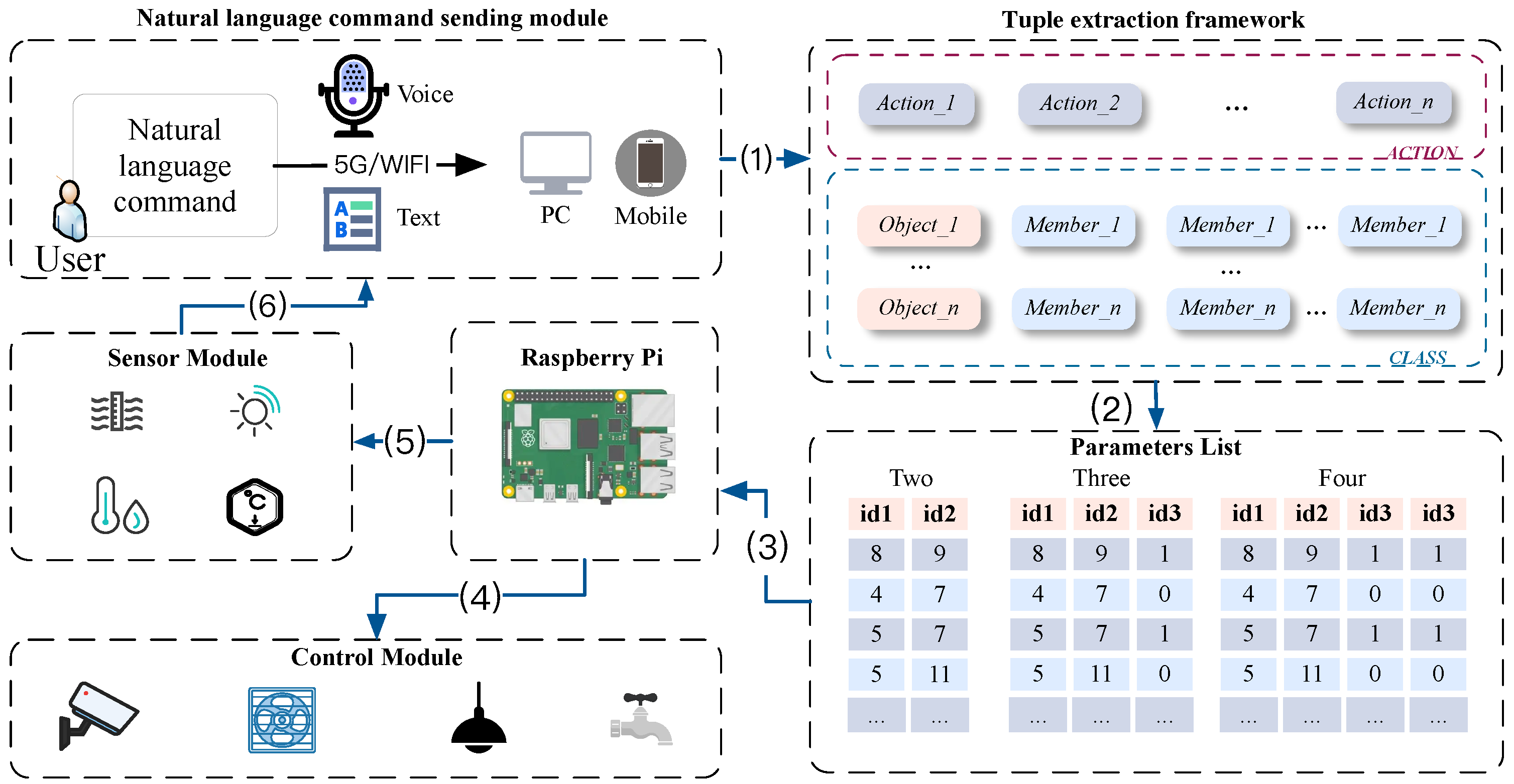

- We propose a new language storage framework based on dynamic tuple extraction and define a structured intermediate language, AOM (Action-Object-Member). Natural language commands are mapped to structured intermediate statements according to their complexity, which allows high-quality training data to be collected efficiently.

- We build an end-to-end framework from natural language to AOM (NL2AOM). It uses an improved BERT-based Seq2Seq model whose special mask mechanism implements sequence-to-sequence generation on top of self-attention, so that the model obtains global information about natural language commands.

- We collect natural language command data from real agricultural environments to train and test the model, and apply the model in real agricultural environments. Our experiments show that the proposed human–machine user interface handles natural human–machine interaction in agriculture well, achieves good results on the performance metrics, and has response times that meet the requirements of human–machine interaction. This provides a robust baseline for the field.

2. Proposed System

3. Training Data Collection

3.1. Sketch-Based Synthesis of AOM Statements

- Basic natural language commands (a): Natural language commands that contain no nested, parallel, or other complex relationships. For example, “请帮我打开大棚的吊灯” (“Please help me turn on the chandelier in the shed”) yields the AOM statement {Action_1: “打开” (“turn on”), Object_1: “大棚” (“shed”), Member_1: “吊灯” (“chandelier”)}, with a single keyword for each attribute.

- Complex natural language commands (b): Multiple basic natural language commands combined. If a basic natural language command is S_1, then a complex natural language command is (S_1, S_2, S_3, …, S_n), where n is the number of basic natural language commands.

- Object juxtaposition natural language commands (c): Two objects point to the same member. For example, in the command “请帮我同时打开稻田和麦田的喷洒机” (“Please help me turn on the sprinklers in both the rice field and the wheat field”), the keywords “稻田” (“rice field”) and “麦田” (“wheat field”) are Object_1 and Object_2, respectively, and both point to the same member, Member_1: “喷洒机” (“sprinkler”). The generated AOM statement is {Action_1: “打开” (“turn on”), Object: {Object_1: “稻田” (“rice field”), Object_2: “麦田” (“wheat field”)}, Member_1: “喷洒机” (“sprinkler”)}.

- Member parallel natural language commands (d): This type of command manipulates members that exist side by side. For example, in the command “分别打开稻田的喷头和传感器” (“Turn on the nozzle and sensor of the rice field respectively”), the members “喷头” (“nozzle”) and “传感器” (“sensor”) are in a parallel relationship, and the common object they belong to is “稻田” (“rice field”), i.e., Object_1 points to both Member_1 and Member_2. The generated AOM statement is {Action_1: “打开” (“turn on”), Object_1: “稻田” (“rice field”), Member: {Member_1: “喷头” (“nozzle”), Member_2: “传感器” (“sensor”)}}.

- Object nested natural language commands (e): This type of command is relatively complex; for example, “请帮我查看一下稻田里面土壤的温湿度情况” (“Please help me check the temperature and humidity of the soil in the rice field”). Here, “稻田” (“rice field”) and “土壤” (“soil”) are both objects, but “土壤” (“soil”) is nested inside “稻田” (“rice field”); that is, “温湿度” (“temperature and humidity”) is a member of “土壤” (“soil”), not of “稻田” (“rice field”). As shown in Figure 2, starting from Object_1 the parser looks for the next nested object Object_i (i = 2, 3, …, n), and the final object in the chain points to the member in the command. The generated AOM statement is {Action_1: “查看” (“check”), Object_1: “稻田” (“rice field”), Object_2: “土壤” (“soil”), Member: {Member_1: “温度” (“temperature”), Member_2: “湿度” (“humidity”)}}.
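The five command types can also be written down as plain data. The sketch below is a minimal illustration, assuming AOM statements are held as Python dictionaries (the text does not prescribe a serialization); the helper `leaf_slots` is a hypothetical name introduced here only to show how nesting grows with command complexity.

```python
# Illustrative AOM statements for command types (a)-(e), using examples
# from the text. The dict layout is an assumption, not the authors' format.

# (a) basic command: one keyword per attribute
basic = {"Action_1": "打开", "Object_1": "大棚", "Member_1": "吊灯"}

# (b) complex command: a sequence of basic statements (S_1, ..., S_n)
complex_command = [
    {"Action_1": "打开", "Object_1": "大棚", "Member_1": "空调"},
    {"Action_1": "查看", "Object_1": "大棚", "Member_1": "温度"},
]

# (c) object juxtaposition: two objects share one member
object_juxtaposition = {
    "Action_1": "打开",
    "Object": {"Object_1": "稻田", "Object_2": "麦田"},
    "Member_1": "喷洒机",
}

# (d) member parallel: one object owns two members
member_parallel = {
    "Action_1": "打开",
    "Object_1": "稻田",
    "Member": {"Member_1": "喷头", "Member_2": "传感器"},
}

# (e) object nested: Object_2 is nested inside Object_1 and owns the members
object_nested = {
    "Action_1": "查看",
    "Object_1": "稻田",
    "Object_2": "土壤",
    "Member": {"Member_1": "温度", "Member_2": "湿度"},
}


def leaf_slots(statement):
    """Flatten one AOM statement into (slot_name, keyword) pairs."""
    pairs = []
    for key, value in statement.items():
        if isinstance(value, dict):
            pairs.extend(leaf_slots(value))  # descend into Object/Member groups
        else:
            pairs.append((key, value))
    return pairs
```

Flattening a statement this way gives three slots for a basic command and five for the nested example, which makes the difference in complexity between the types explicit.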

3.2. Command Structured Parsing Algorithm and Details

3.2.1. Semantic Parsing Using TF-IDF Keyword Extraction Combined with GloVe Word Vectors

- Step (1) performs Chinese word segmentation on the input Chinese natural language command. Unlike languages such as English, where spaces separate the words, Chinese has no delimiter between words, so segmentation is required before subsequent processing.

- Step (2) performs part-of-speech tagging. In the tagging result, terms marked /c are the conjunctions in the command. Coordinating conjunctions connect words, phrases, sentences, etc., such as “then”, “and”, “or”, and “so”. Currently, the presence of sub-commands is determined by whether the input natural language command contains a conjunction: if a coordinating conjunction such as “and”, “or”, or “then” is found, the command is considered a complex natural language command.

- Step (3) filters stop words and removes redundant information.

- Step (4) extracts the key indicator information using TF-IDF keyword extraction.

- Step (5) optimizes the result by similarity calculation. For example, “开启” (“open”) may not be recognized, so the GloVe word vector model is used to vectorize it, compute cosine similarities, and replace it with its most similar synonym. After repeated tests, two words are treated as synonyms when their cosine similarity is greater than 0.68. The cosine similarity of “打开” (“turn on”) and “开启” (“open”) is 0.87, so they are identified as synonyms. For two word vectors, the cosine similarity is calculated as in Equation (2), where it takes values in the interval [0, 1]; the closer the cosine of the angle between the two word vectors is to 1, the closer the semantics of the two words.

- Step (6) synthesizes the AOM statement by automatically filling the corresponding slots with the extracted key information, finally converting the natural language command “请帮我开启大棚的传感器” (“Please help me turn on the sensor of the shed”) into the AOM statement {Action_1: “打开” (“turn on”), Object_1: “大棚” (“shed”), Member_1: “传感器” (“sensor”)}.
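The synonym replacement in Step (5) can be illustrated with a few lines of Python. This is a minimal sketch under stated assumptions: the three-dimensional vectors are invented toy values standing in for real GloVe embeddings, and `map_to_known_word` and `known_words` are hypothetical names, not the authors' code. Only the 0.68 threshold comes from the text.

```python
import math

SYNONYM_THRESHOLD = 0.68  # threshold reported in the text


def cosine_similarity(u, v):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0.0 or norm_v == 0.0:
        return 0.0  # similarity with a zero vector is treated as 0 here
    return dot / (norm_u * norm_v)


def map_to_known_word(word, vectors, known_words, threshold=SYNONYM_THRESHOLD):
    """Return the most similar known word above the threshold, else None."""
    if word in known_words:
        return word
    if word not in vectors:
        return None
    best_word, best_score = None, threshold
    for candidate in known_words:
        if candidate not in vectors:
            continue
        score = cosine_similarity(vectors[word], vectors[candidate])
        if score > best_score:
            best_word, best_score = candidate, score
    return best_word


# Toy vectors invented for illustration; real GloVe vectors have many more
# dimensions and would be loaded from a trained model.
toy_vectors = {
    "打开": [0.9, 0.1, 0.2],
    "开启": [0.8, 0.3, 0.1],
    "查看": [0.1, 0.9, 0.3],
}

canonical = map_to_known_word("开启", toy_vectors, ["打开", "查看"])
```

With these toy values “开启” maps to “打开”, because that is the only known word whose similarity clears the threshold; a word with no sufficiently similar candidate is left unmapped.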

| Algorithm 1: Command extraction strategy based on keyword extraction and similarity mapping. |
|---|
| Input: Natural language command sequence: CommandString |
| Output: Structured AOM statements |
| 1: Initialize AOM = Ø |
| 2: postag = HanLP(CommandString) /*Using HanLP for lexical annotation of commands*/ |
| 3: IF postag == ‘c’ /*A conjunction is found, so this is a complex natural language command*/ |
| 4: String = split(CommandString) /*Cut complex natural language commands into basic natural language commands*/ |
| 5: ELSE StringList = TF-IDF(String, ‘TF’) /*Keyword extraction for commands*/ |
| 6: END IF |
| 7: FOR word IN StringList |
| 8: IF word.postag == ‘v’ /*If the keyword is tagged v*/ |
| 9: Action←word |
| 10: ELSE IF GloVe_cossim(word, word_dict) > 0.68 /*Similarity-optimal mapping*/ |
| 11: Action←word |
| 12: IF word.postag == ‘ns’ /*If the keyword is tagged ns*/ |
| 13: Object←word |
| 14: ELSE IF GloVe_cossim(word, word_dict) > 0.68 /*Similarity-optimal mapping*/ |
| 15: Object←word |
| 16: IF word.postag == ‘nz’ OR ‘nc’ /*If the keyword is tagged nz or nc*/ |
| 17: Member←word |
| 18: ELSE IF GloVe_cossim(word, word_dict) > 0.68 /*Similarity-optimal mapping*/ |
| 19: Member←word |
| 20: END IF |
| 21: AOM←{Action_1: “word_1”, Object: {Object_1: “word_2”, Object_2: “word_3”}, Member_1: “word_4”} /*Update AOM*/ |
| 22: END FOR |
| 23: RETURN AOM |
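The tag-driven slot filling of Algorithm 1 can be sketched as follows. This is a simplified illustration, not the authors' implementation: it takes tokens that are already segmented and tagged (so no HanLP call is made), it omits the TF-IDF and GloVe similarity steps, and the example sentence and its tags are hand-written assumptions. The function names are hypothetical.

```python
# Part-of-speech tags named in Algorithm 1
CONJUNCTION_TAG = "c"
ACTION_TAGS = {"v"}
OBJECT_TAGS = {"ns"}
MEMBER_TAGS = {"nz", "nc"}


def split_on_conjunctions(tagged):
    """Cut a tagged command into basic commands at conjunction tokens."""
    segments, current = [], []
    for word, tag in tagged:
        if tag == CONJUNCTION_TAG:
            if current:
                segments.append(current)
            current = []
        else:
            current.append((word, tag))
    if current:
        segments.append(current)
    return segments


def fill_slots(tagged):
    """Assign tagged keywords to numbered Action/Object/Member slots."""
    aom = {}
    counters = {"Action": 0, "Object": 0, "Member": 0}
    for word, tag in tagged:
        if tag in ACTION_TAGS:
            slot = "Action"
        elif tag in OBJECT_TAGS:
            slot = "Object"
        elif tag in MEMBER_TAGS:
            slot = "Member"
        else:
            continue  # particles and other tags carry no slot information
        counters[slot] += 1
        aom[f"{slot}_{counters[slot]}"] = word
    return aom


def parse_command(tagged):
    """Return one AOM statement per basic command."""
    return [fill_slots(segment) for segment in split_on_conjunctions(tagged)]


# Hand-tagged hypothetical input: "turn on the shed's air conditioner,
# then check the shed's temperature". Tags are assumed, not HanLP output.
tagged_command = [
    ("打开", "v"), ("大棚", "ns"), ("的", "u"), ("空调", "nz"),
    ("然后", "c"),
    ("查看", "v"), ("大棚", "ns"), ("的", "u"), ("温度", "nz"),
]
statements = parse_command(tagged_command)
```

Splitting at every conjunction is only appropriate for command types a and b; a conjunction that joins two objects or two members (types c and d) needs the dependency-based treatment of Section 3.2.2.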

3.2.2. Structured Processing of Natural Language Commands Based on Dependency Parsing

| Algorithm 2: Semantic parsing process for object juxtaposition natural language commands. |
|---|
| Input: Dependency syntactic analysis tree generated by each keyword node; each node of the tree contains the original word: word, lexical property: postag, dependency: deprel, node position dependency: head |
| Output: Structured AOM statements |
| 1: Initialize AOM = Ø |
| 2: FOR relation IN deprel /*Iterate over dependencies*/ |
| 3: num = num + 1 /*Automatic loop + 1 for list subscript positioning*/ |
| 4: IF relation == ‘VOB’ /*Traversing the verb-object relationship*/ |
| 5: Action←word[head[num] − 1] /*Find the word prototype on which the VOB relationship depends as the action attribute*/ |
| 6: ELSE IF relation == ‘COO’ /*Traversing the COO relationship*/ |
| 7: Object←word[head[num] − 1] /*Use the COO-dependent word prototype as the first object*/ |
| 8: Object.append(word[num]) /*The COO itself corresponds to the word prototype for the second object*/ |
| 9: ELSE IF relation == ‘ATT’ /*Traversing the ATT relationship*/ |
| 10: Member←word[head[num] − 1] /*ATT-dependent word prototype as the manipulated member attribute*/ |
| 11: END IF |
| 12: AOM←{Action_1: “word_1”, Object: {Object_1: “word_2”, Object_2: “word_3”}, Member_1: “word_4”} /*Update AOM*/ |
| 13: END FOR |
| 14: RETURN AOM |
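Algorithm 2 can be sketched in Python over a dependency parse given as three parallel lists. This is an illustrative sketch under stated assumptions: the parse below is written by hand for the object juxtaposition example rather than produced by a real parser, the labels other than HED, ATT, VOB, and COO are assumed and simply ignored, and the loop uses 0-based positions with 1-based head indices (0 meaning the root) instead of the pseudocode's `num` counter. `parse_object_juxtaposition` is a hypothetical name.

```python
def parse_object_juxtaposition(words, deprels, heads):
    """Build an AOM statement from VOB, COO, and ATT dependency relations.

    words[i], deprels[i], heads[i] describe token i; heads are 1-based
    indices into words, with 0 marking the root.
    """
    action, objects, member = None, [], None
    for i, relation in enumerate(deprels):
        head_word = words[heads[i] - 1] if heads[i] > 0 else None
        if relation == "VOB":
            action = head_word              # the verb governing the object
        elif relation == "COO":
            objects = [head_word, words[i]]  # head is first object, token is second
        elif relation == "ATT":
            member = head_word              # the word the attribute modifies
    return {
        "Action_1": action,
        "Object": {f"Object_{k}": w for k, w in enumerate(objects, start=1)},
        "Member_1": member,
    }


# Hand-written parse (an assumption) of "打开稻田和麦田的喷洒机":
# turn on / rice field / and / wheat field / of / sprinkler
words = ["打开", "稻田", "和", "麦田", "的", "喷洒机"]
deprels = ["HED", "ATT", "LAD", "COO", "RAD", "VOB"]
heads = [0, 6, 4, 2, 2, 1]

aom = parse_object_juxtaposition(words, deprels, heads)
```

On this hand-written parse the sketch recovers the action “打开”, the two objects “稻田” and “麦田”, and the member “喷洒机”, matching the AOM statement given for command type c.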

| Algorithm 3: Semantic parsing process for member juxtaposition natural language commands. |
|---|
| Input: Dependency syntactic analysis tree generated by each keyword node; each node of the tree contains the original word: word, lexical property: postag, dependency: deprel, node position dependency: head |
| Output: Structured AOM statements |
| 1: Initialize AOM = Ø |
| 2: FOR relation IN deprel /*Iterate over dependencies*/ |
| 3: num = num + 1 /*Automatic loop + 1 for list subscript positioning*/ |
| 4: IF relation == ‘VOB’ /*Traversing the verb-object relationship*/ |
| 5: Action←word[head[num] − 1] /*Find the word prototype on which the VOB relationship depends as the action attribute*/ |
| 6: ELSE IF relation == ‘COO’ /*Traversing the COO relationship*/ |
| 7: Member←word[head[num] − 1] /*Use the COO-dependent word prototype as the first member*/ |
| 8: Member.append(word[num]) /*The COO itself corresponds to the word prototype for the second member*/ |
| 9: ELSE IF relation == ‘ATT’ /*Traversing the ATT relationship*/ |
| 10: Object←word[head[num] − 1] /*Use the ATT-dependent word prototype as the object*/ |
| 11: END IF |
| 12: AOM←{Action_1: “word_1”, Object_1: “word_2”, Member: {Member_1: “word_3”, Member_2: “word_4”}} /*Update AOM*/ |
| 13: END FOR |
| 14: RETURN AOM |

| Algorithm 4: Semantic parsing process for object nested natural language commands. |
|---|
| Input: Dependency syntactic analysis tree generated by each keyword node; each node of the tree contains the original word: word, lexical property: postag, dependency: deprel, node position dependency: head |
| Output: Structured AOM statements |
| 1: Initialize AOM = Ø |
| 2: FOR relation IN deprel /*Iterate over dependencies*/ |
| 3: num = num + 1 /*Automatic loop + 1 for list subscript positioning*/ |
| 4: IF relation == ‘VOB’ /*Traversing the verb-object relationship*/ |
| 5: Action←word[head[num] − 1] /*Find the word prototype on which the VOB relationship depends as the action attribute*/ |
| 6: ELSE IF relation == ‘ATT’ |
| 7: NestingObject←word[num] /*First traversal of ATT dependencies as nested object*/ |
| 8: ELSE IF (postag[num] == ‘f’) AND (relation == ‘ATT’) /*Find the word prototype whose lexical category is f*/ |
| 9: NestedObject←word[head[num] − 1] /*The word prototype that the f-tagged word depends on is the nested object*/ |
| 10: Member←word[head[head[num] − 1]] /*Find the manipulated member through the nested object*/ |
| 11: END IF |
| 12: AOM←{Action_1: “word_1”, Object_1: “word_2”, Member: {Member_1: “word_3”, Member_2: “word_4”}} /*Update AOM*/ |
| 13: END FOR |
| 14: RETURN AOM |

4. Natural Language Interfaces

4.1. Seq2seq Model

4.2. Seq2seq Paraphrase Model with BERT

4.2.1. Self-Attention Network

4.2.2. Model Details

5. Experiment

5.1. Model Performance Evaluation Metrics

5.2. Experimental Performance Evaluation of Language Frameworks Based on Dynamic Tuple Extraction

- As the number of input commands increases, the performance indicators of the generated AOM intermediate language decrease. For command types a and b, the parsing performance stays above 90% and the parsing time is between 0.9 and 1 s, so TF-IDF keyword extraction combined with GloVe-based cosine similarity works well for these two types. The average response time of type b is slightly longer than that of type a, mainly because of the time spent cutting complex natural language commands into basic natural language commands. The good parsing performance is mainly because these commands generate simple AOM statements in the form of a basic triplet. This method is therefore reliable for generating NL2AOM datasets for command types a and b.

- Command types c and d have index values above 85% when generating the AOM intermediate language, and their F-score curves nearly coincide when the number of input commands is 100 to 150. This indicates that Algorithms 2 and 3 perform similarly, mainly because the two command types have similar characteristics: the former is object juxtaposition and the latter is member juxtaposition. The response time for generating the AOM intermediate language is between 1.85 and 2.0 s, so the training data can be collected effectively.

- Command type e has index values close to 80% when generating the AOM intermediate language, mainly because of the complexity of object nesting. For example, in “查看大棚里传感器的温度” (“check the temperature of the sensor in the shed”), “大棚” (“shed”) and “传感器” (“sensor”) are both objects, whereas in “请帮我打开大棚的传感器” (“please help me open the shed’s sensor”), “传感器” (“sensor”) is a member; this is a common cause of ambiguity. Its performance is therefore the lowest among the five types of commands, and the parsing response time is more than 2 s. When collecting the NL2AOM dataset for type e commands, we therefore need to manually screen out the non-conforming pairs and normalize them to provide high-quality datasets for the subsequent deep learning training.

- Taken together, these experimental results show that the data collection method proposed in this paper can collect high-quality datasets efficiently and at low cost, which provides the basis for the subsequent training of deep learning models.

5.3. Evaluation of Sequence-to-Sequence Based Semantic Parsing Models

5.3.1. Segment Generation Performance Evaluation

- In the per-field results, the accuracy of the method proposed in this paper is considerably higher than that of the other four methods: the training set accuracy reaches 86.43%, 61.13%, and 86.43% for the Action, Object, and Member fields, respectively, and the test set accuracy reaches 85.24%, 60.24%, and 85.24%, respectively.

- The main reason for the leading field extraction accuracy is that the self-attention mechanism inside BERT, combined with the mask mechanism, realizes the recursive concept while perceiving global information. Because the training commands are sentence-length, the self-attention mechanism is well suited to capturing the connections inside the sequence. Field extraction is therefore ahead of the classical sequence models.

- The accuracy for the extracted Object field is low because its indicator is easily ambiguous: the same keyword can have different indicators in different commands. For example, in “查看大棚的空调温度是多少” (“check what is the temperature of the air conditioner in the shed”), “空调” (“air conditioner”) belongs to Object_2 and forms a nested relationship with “大棚” (“shed”). In “请帮我打开一下大棚的空调” (“Please help me turn on the air conditioner in the shed”), however, “空调” (“air conditioner”) belongs to Member_1 and forms a verb–object relationship (VOB) with “打开” (“turn on”). The accuracy rate is therefore relatively low.
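The combination of global perception and step-by-step generation described above can be made concrete with an attention mask. The text only speaks of a special mask mechanism, so the sketch below is an assumption: it builds a UniLM-style sequence-to-sequence mask, one common way to let a single BERT-like encoder act as a Seq2Seq model, and may differ from the mask the authors use. The function name is hypothetical.

```python
def seq2seq_attention_mask(source_len, target_len):
    """Build a mask where mask[i][j] == 1 means position i may attend to j.

    Positions 0..source_len-1 hold the natural language command and the
    remaining positions hold the AOM statement being generated.
    """
    total = source_len + target_len
    mask = [[0] * total for _ in range(total)]
    for i in range(total):
        for j in range(total):
            if j < source_len:
                # Every position sees the whole command (global information).
                mask[i][j] = 1
            elif i >= source_len and j <= i:
                # Target positions see themselves and earlier target tokens
                # only, which keeps generation left to right.
                mask[i][j] = 1
    return mask


mask = seq2seq_attention_mask(source_len=3, target_len=2)
for row in mask:
    print(row)
```

In this layout the command tokens attend to one another in both directions, while each generated token sees the full command plus the tokens generated so far, which is what allows one self-attention stack to serve as both encoder and decoder.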

5.3.2. Overall Synthetic AOM Performance Evaluation

- In the overall synthetic AOM performance evaluation, the Seq2Seq with BERT method of this paper achieves 78.71% accuracy, 79.21% precision, 78.98% recall, and a 79.09% F-score on the NL2AOM task. Its accuracy is 46.54, 45.33, 37.44, and 37.88 percentage points higher than that of Seq2Seq(LSTM), Seq2Seq(GRU), Seq2Seq(BiLSTM), and Seq2Seq(BiGRU), respectively. The main reason is that LSTM, BiLSTM, GRU, and BiGRU are all variants of RNN, which handle the recursive timing problem well but cannot perceive the information globally. The BERT-based model perceives global information while still implementing the recursive timing feature, which results in a higher accuracy rate on the sequence-to-sequence task from natural language commands to AOM statements.

- The experimental results also show that the dynamic tuple extraction-based language framework proposed in this paper can collect high-quality training datasets efficiently, quickly, and at a low cost. Further, the proposed BERT-based Seq2Seq model, based on the use of self-attention masks, can both globally acquire natural language instruction information and implement the sequence-to-sequence concept, thus enabling the better implementation of the NL2AOM task and providing a more robust baseline.
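The reported figures can be checked with a short calculation. The sketch below assumes the F-score is the usual harmonic mean of precision and recall (the definition is not restated here) and takes its inputs from the overall evaluation table; the variable names are illustrative.

```python
def f_score(precision, recall):
    """Harmonic mean of precision and recall (assumed F1 definition)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Accuracy (%) per model, copied from the overall evaluation table
accuracy = {
    "Seq2Seq(LSTM)": 32.17,
    "Seq2Seq(GRU)": 33.38,
    "Seq2Seq(BiLSTM)": 41.27,
    "Seq2Seq(BiGRU)": 40.83,
    "Seq2Seq(BERT)": 78.71,
}

# Precision and recall (%) of the BERT-based model
bert_f_score = round(f_score(79.21, 78.98), 2)

# Accuracy gain of the BERT-based model over each baseline, in percentage points
gains = {
    name: round(accuracy["Seq2Seq(BERT)"] - value, 2)
    for name, value in accuracy.items()
    if name != "Seq2Seq(BERT)"
}
```

Under this definition the BERT-based model's F-score comes out at 79.09, and the gains reproduce the four differences quoted in the text, which confirms that they are absolute differences in percentage points rather than relative improvements.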

5.4. Agricultural Human–Machine Interaction Interface

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

| Relations | Word Descriptions | Examples |

|---|---|---|

| HED | Sentence Core | Turn on the table lamp in the shed (ROOT, turn on, HED) |

| ATT | Definite and central words | Turning off the sensors in the shed (sensors, shed, ATT) |

| VOB | Object and Predicate | Please help me to turn on the nozzle of the rice field (turn on, nozzle, VOB) |

| COO | The same type of words | Turn on the pumps and sensors in the wheat field (pumps, sensors, COO) |

| No. | Type | Natural Language Commands | AOM Statements |

|---|---|---|---|

| 1 | a | 请帮我打开大棚的空调 (Please help me turn on the air conditioning in the shed) | {Action_1: “打开” (“turn on”), Object_1: “大棚” (“shed”), Member_1: “空调” (“air conditioning”)} |

| 2 | b | 先打开大棚的空调, 再查看大棚的温度是多少 (First turn on the air conditioning of the shed, and then check what the temperature of the shed is) | { Action_1: “打开” (“turn on”), Object_1: “大棚” (“shed”), Member_1: “空调” (“air conditioning”)}, {Action_1: “查看” (“check”), Object_1: “大棚” (“shed”), Member_1: “温度” (“temperature”)} |

| 3 | c | 请帮我同时打开稻田和麦田的水泵 (Please help me turn on the water pump in both the rice and wheat fields) | { Action_1: “打开” (“turn on”), Object: {Object_1: “稻田” (“rice fields”), Object_2: “麦田” (“wheat fields”)}, Member_1: “水泵” (“pump”)} |

| 4 | d | 分别打开稻田的喷头和传感器 (Turn on the nozzle and sensor of the rice field separately) | {Action_1: “打开” (“turn on”), Object_1: “稻田” (“rice field”), Member: {Member_1: “喷头” (“nozzle”), Member_2: “传感器” (“sensor”)}} |

| 5 | e | 请帮我查看稻田里土壤的温度和湿度 (Please help me to check the temperature and humidity of the soil in the rice field) | {Action_1: “查看” (“check”), Object_1: “稻田” (“rice field”), Object_2: “土壤” (“soil”), Member: {Member_1: “温度” (“temperature”), Member_2: “湿度” (“humidity”)}} |

| … | … | … | … |

| No. | Number | Accuracy | Precision | Recall | F-Score | Time Taken |

|---|---|---|---|---|---|---|

| 1 | 50 | 96.21% | 95.52% | 94.37% | 94.94% | 0.92 s |

| 2 | 100 | 95.57% | 93.39% | 92.57% | 92.98% | 0.93 s |

| 3 | 150 | 94.29% | 93.57% | 92.89% | 93.23% | 0.95 s |

| 4 | 200 | 93.87% | 92.24% | 91.19% | 91.71% | 0.94 s |

| No. | Number | Accuracy | Precision | Recall | F-Score | Time Taken |

|---|---|---|---|---|---|---|

| 1 | 50 | 96.22% | 95.37% | 94.59% | 94.98% | 0.97 s |

| 2 | 100 | 95.29% | 93.56% | 92.29% | 92.92% | 0.98 s |

| 3 | 150 | 93.21% | 92.29% | 92.27% | 92.28% | 0.97 s |

| 4 | 200 | 92.27% | 91.48% | 90.57% | 91.02% | 0.95 s |

| No. | Number | Accuracy | Precision | Recall | F-Score | Time Taken |

|---|---|---|---|---|---|---|

| 1 | 50 | 91.15% | 90.07% | 89.87% | 89.96% | 1.97 s |

| 2 | 100 | 90.27% | 89.97% | 88.53% | 89.24% | 1.98 s |

| 3 | 150 | 89.87% | 88.87% | 87.78% | 88.32% | 1.94 s |

| 4 | 200 | 87.79% | 86.64% | 85.57% | 86.10% | 1.98 s |

| No. | Number | Accuracy | Precision | Recall | F-Score | Time Taken |

|---|---|---|---|---|---|---|

| 1 | 50 | 92.16% | 95.10% | 93.27% | 94.18% | 1.86 s |

| 2 | 100 | 90.10% | 89.81% | 90.65% | 90.22% | 1.96 s |

| 3 | 150 | 88.15% | 87.58% | 89.91% | 88.73% | 1.96 s |

| 4 | 200 | 89.47% | 89.58% | 90.72% | 90.15% | 1.87 s |

| No. | Number | Accuracy | Precision | Recall | F-Score | Time Taken |

|---|---|---|---|---|---|---|

| 1 | 50 | 87.57% | 86.52% | 85.12% | 85.81% | 2.02 s |

| 2 | 100 | 85.47% | 84.12% | 83.39% | 83.75% | 2.06 s |

| 3 | 150 | 83.39% | 82.21% | 81.19% | 81.70% | 2.06 s |

| 4 | 200 | 81.27% | 80.23% | 79.81% | 80.02% | 2.07 s |

| Fields | Model Name | Accuracy (Train) | Accuracy (Test) |
|---|---|---|---|
| Action | Seq2Seq(LSTM) | 45.12% (758/1680) | 42.62% (179/420) |
| Action | Seq2Seq(GRU) | 41.43% (696/1680) | 39.52% (166/420) |
| Action | Seq2Seq(BiLSTM) | 48.87% (821/1680) | 45.48% (191/420) |
| Action | Seq2Seq(BiGRU) | 52.02% (874/1680) | 44.52% (187/420) |
| Action | Seq2Seq(BERT) (Our method) | 86.43% (1452/1680) | 85.24% (358/420) |
| Object | Seq2Seq(LSTM) | 27.20% (457/1680) | 26.90% (113/420) |
| Object | Seq2Seq(GRU) | 31.01% (521/1680) | 29.05% (122/420) |
| Object | Seq2Seq(BiLSTM) | 36.37% (611/1680) | 34.76% (146/420) |
| Object | Seq2Seq(BiGRU) | 35.77% (601/1680) | 33.57% (141/420) |
| Object | Seq2Seq(BERT) (Our method) | 61.13% (1027/1680) | 60.24% (253/420) |
| Member | Seq2Seq(LSTM) | 45.12% (758/1680) | 42.62% (179/420) |
| Member | Seq2Seq(GRU) | 41.43% (696/1680) | 39.52% (166/420) |
| Member | Seq2Seq(BiLSTM) | 48.87% (821/1680) | 45.48% (191/420) |
| Member | Seq2Seq(BiGRU) | 52.02% (874/1680) | 44.52% (187/420) |
| Member | Seq2Seq(BERT) (Our method) | 86.43% (1452/1680) | 85.24% (358/420) |

| No. | Model Name | Accuracy | Precision | Recall | F-Score |

|---|---|---|---|---|---|

| 1 | Seq2Seq(LSTM) | 32.17% | 38.19% | 37.21% | 37.69% |

| 2 | Seq2Seq(GRU) | 33.38% | 34.57% | 33.27% | 33.91% |

| 3 | Seq2Seq(BiLSTM) | 41.27% | 47.89% | 43.57% | 45.53% |

| 4 | Seq2Seq(BiGRU) | 40.83% | 47.24% | 46.87% | 47.05% |

| 5 | Seq2Seq(BERT)(Our method) | 78.71% | 79.21% | 78.98% | 79.09% |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Zhang, Y.; Yao, S.; Wang, P.; Wu, H.; Xu, Z.; Wang, Y.; Zhang, Y. Building Natural Language Interfaces Using Natural Language Understanding and Generation: A Case Study on Human–Machine Interaction in Agriculture. Appl. Sci. 2022, 12, 11830. https://doi.org/10.3390/app122211830
