FF-MR: A DoH-Encrypted DNS Covert Channel Detection Method Based on Feature Fusion

Abstract

1. Introduction

- We summarize and analyze the threat scenario of DoH-encrypted DNS covert channels in the command-and-control (C&C) stage, clarify their communication principle, and thereby lay a foundation for research on detection methods.

- We propose FF-MR, a DoH-encrypted DNS covert channel detection method based on feature fusion. FF-MR represents encrypted DNS covert channel traffic at the session level, fuses statistical features with byte sequence features extracted by a residual neural network, and uses a Multi-Head Attention mechanism to focus on the most important features, which allows it to detect and identify three encrypted DNS covert channels.

- We conduct comprehensive experiments that compare FF-MR with other encrypted DNS covert channel detection methods. We also establish four baselines to quantify the improvement contributed by the detection model in FF-MR and to verify its validity. Finally, we use a parameter sensitivity experiment to identify recommended hyperparameter values.

2. Related Work

- Few existing studies address the detection and identification of DoH-encrypted DNS covert channels, and detection performance in this area still needs improvement;

- Most existing studies use statistical features as the sole basis for detection, and relying on a single type of feature makes it easy for attackers to evade detection;

- The role of byte sequence features, and of combining multiple types of features, has been largely overlooked, so existing methods fail to meet the requirements of encrypted DNS covert channel detection.

3. Background

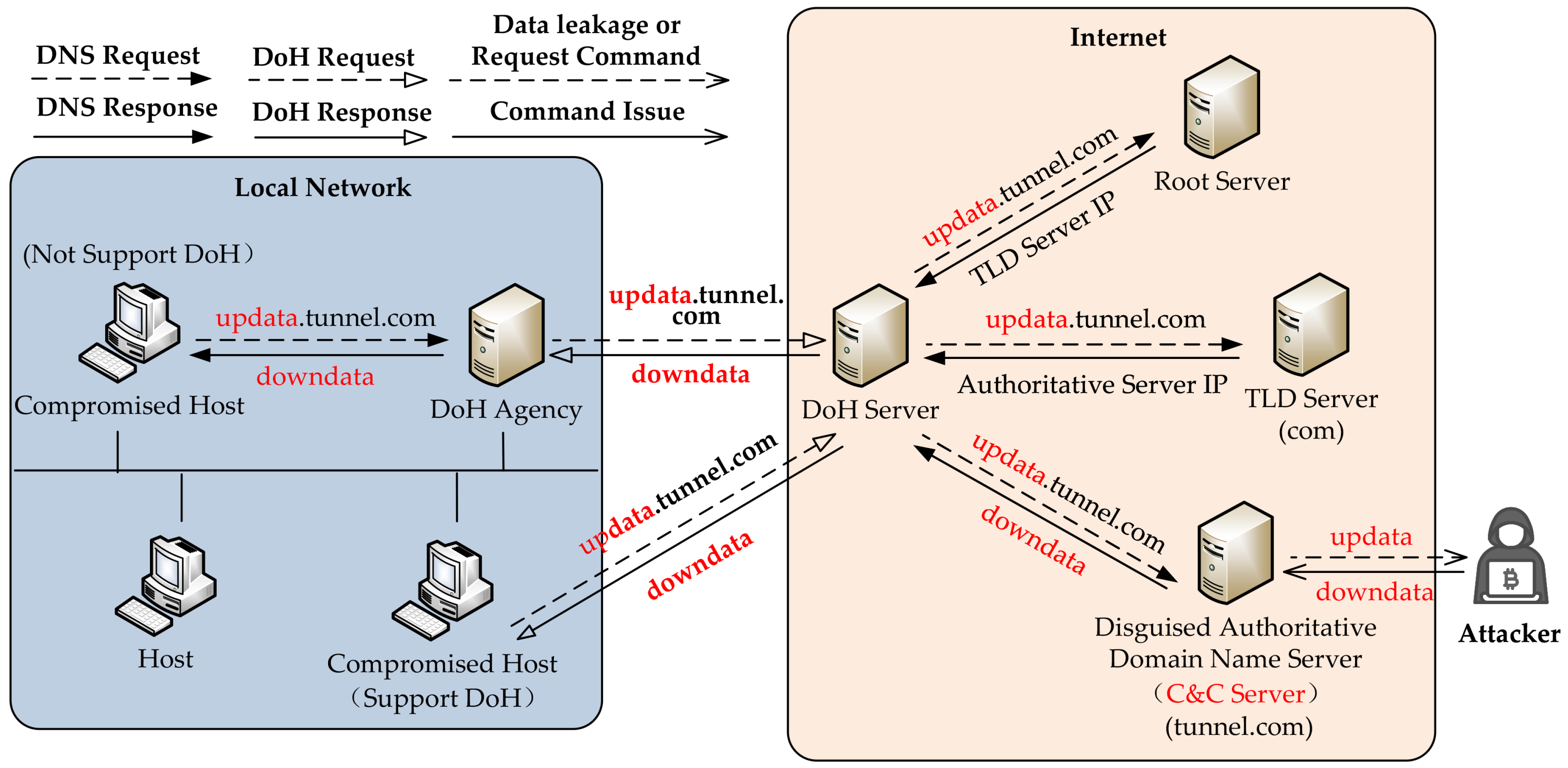

3.1. DoH-Encrypted DNS Covert Channel

3.2. Multi-Head Attention Mechanism

4. Method Design

4.1. Data Preprocessing

4.1.1. Traffic Splitting

4.1.2. Traffic Cleaning

4.2. Statistical Features and Session Representation Extraction

4.2.1. Session Representation Extraction

4.2.2. Session Statistical Features Extraction

4.3. Model Development and Architecture

4.3.1. Session Byte Sequence Features Extraction

4.3.2. Weighted Fusion of Session Statistical Features and Byte Sequence Features

4.3.3. Session Classification

5. Experimental Evaluation

5.1. Dataset and Performance Metrics

5.2. Hyperparameter Settings

5.3. Performance Evaluation

5.3.1. t-SNE Feature Dimensionality Reduction and Visual Analysis

5.3.2. Results and Evaluation

- LightGBM [13] is a framework that implements the Gradient Boosting Decision Tree (GBDT) algorithm. It supports efficient parallel training and offers faster training, lower memory consumption, and higher accuracy than conventional GBDT implementations. This method takes the statistical features of each flow as input and outputs one of the five labels as its prediction;

- HAST-II [42] takes the first 4096 bytes of the session as input and combines a CNN with an LSTM to learn the spatial and temporal features of the session bytes, respectively; it then applies a softmax classifier to the resulting spatio-temporal features to perform five-class classification;

- CENTIME [43] uses the same input as FF-MR: the statistical features and the first n bytes of the session. The difference is that CENTIME reconstructs the statistical features with an autoencoder and extracts the byte sequence features with a residual neural network of the same structure as ours; it then concatenates the two feature vectors and feeds them into a fully connected network for classification.
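Both FF-MR and CENTIME consume the first n bytes of each session. The sketch below illustrates that input under stated assumptions; it is not the authors' preprocessing code. It assumes packets have already been parsed into a hypothetical `Packet` record, groups them into bidirectional sessions keyed by a direction-independent 5-tuple, concatenates each packet's bytes in arrival order, and truncates or zero-pads the result to n = 1024 bytes (the Conv1D input length listed in the model architecture table). Which packet bytes are included (headers or payload only) and the scaling of each byte to [0, 1] by dividing by 255 are assumptions.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Packet:
    """Hypothetical parsed packet; a real pipeline would fill this from a pcap."""
    src_ip: str
    src_port: int
    dst_ip: str
    dst_port: int
    protocol: str
    data: bytes  # bytes this packet contributes to the session byte sequence


SessionKey = Tuple[Tuple[str, int], Tuple[str, int], str]


def session_key(pkt: Packet) -> SessionKey:
    """Direction-independent 5-tuple: A->B and B->A map to the same key."""
    a = (pkt.src_ip, pkt.src_port)
    b = (pkt.dst_ip, pkt.dst_port)
    first, second = (a, b) if a <= b else (b, a)  # canonical endpoint order
    return (first, second, pkt.protocol)


def split_sessions(packets: List[Packet]) -> Dict[SessionKey, List[Packet]]:
    """Group packets into bidirectional sessions, keeping arrival order."""
    sessions: Dict[SessionKey, List[Packet]] = {}
    for pkt in packets:
        sessions.setdefault(session_key(pkt), []).append(pkt)
    return sessions


def first_n_bytes(session: List[Packet], n: int = 1024) -> List[float]:
    """Concatenate packet bytes, truncate or zero-pad to n, scale to [0, 1]."""
    raw = b"".join(p.data for p in session)[:n]
    raw += bytes(n - len(raw))  # bytes(k) is k zero bytes; k may be 0
    return [byte / 255.0 for byte in raw]


# Usage: two packets of one session (both directions) plus a second session.
packets = [
    Packet("10.0.0.2", 51000, "1.1.1.1", 443, "TCP", b"\x16\x03\x01"),
    Packet("1.1.1.1", 443, "10.0.0.2", 51000, "TCP", b"\x16\x03\x03"),
    Packet("10.0.0.2", 51001, "8.8.8.8", 443, "TCP", b"\xff"),
]
sessions = split_sessions(packets)
first_session = next(iter(sessions.values()))
vector = first_n_bytes(first_session)
print(len(sessions), len(vector))  # 2 1024
```

Keying on a canonical endpoint order is what lets requests and responses land in the same session; the string comparison of IP addresses is used only to pick a stable order, not to compare addresses numerically.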

5.4. Validation of Effectiveness

5.5. Parameter Sensitivity Experiments

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DNS | Domain Name System |
| DoH | DNS over HTTPS |
| FF-MR | Feature Fusion based on Multi-Head Attention and Residual Neural Network |
| MLP | Multilayer Perceptron |
| DNSSEC | Domain Name System Security Extensions |
| DoT | DNS over TLS |
| IoT | Internet of Things |
| C&C | Command and Control |
| APT | Advanced Persistent Threat |
| RF | Random Forest |
| NB | Naive Bayes |
| SVM | Support Vector Machine |
| LSTM | Long Short-Term Memory |
| ELK | Elasticsearch, Logstash, Kibana |
| SOC | Security Operation Center |
| Bi-RNN | Bidirectional Recurrent Neural Network |
| ET-BERT | Encrypted Traffic Bidirectional Encoder Representations from Transformers |
| EV SSL | Extended Validation SSL Certificate |
| Conv1D | One-dimensional convolutional layer |
| NLP | Natural Language Processing |
| ResLayer | Residual layer |
| ResBlock | Residual block |
| BatchNorm | Batch normalization layer |
| AvgPooling1D | One-dimensional average pooling |
| TP | True Positives |
| TN | True Negatives |
| FP | False Positives |
| FN | False Negatives |
| GBDT | Gradient Boosting Decision Tree |

References

1. Meng, D.; Zou, F. DNS Privacy Protection Security Analysis. Commun. Technol. 2020, 53, 5.

2. Cloudflare. DNS over TLS vs. DNS over HTTPS | Secure DNS. Technical Report. 2021. Available online: https://www.cloudflare-cn.com/learning/dns/dns-over-tls/ (accessed on 10 June 2022).

3. Bures, M.; Klima, M.; Rechtberger, V.; Ahmed, B.S.; Hindy, H.; Bellekens, X. Review of specific features and challenges in the current Internet of Things systems impacting their security and reliability. In World Conference on Information Systems and Technologies; Springer: Berlin/Heidelberg, Germany, 2021; pp. 546–556.

4. Mahmoud, R.; Yousuf, T.; Aloul, F.; Zualkernan, I. Internet of Things (IoT) security: Current status, challenges and prospective measures. In Proceedings of the 2015 10th International Conference for Internet Technology and Secured Transactions (ICITST), London, UK, 14–16 December 2015; pp. 336–341.

5. Hesselman, C.; Kaeo, M.; Chapin, L.; Claffy, K.; Seiden, M.; McPherson, D.; Piscitello, D.; McConachie, A.; April, T.; Latour, J.; et al. The DNS in IoT: Opportunities, risks, and challenges. IEEE Internet Comput. 2020, 24, 23–32.

6. Network Security Research Lab at 360. An Analysis of Godlua Backdoor. Technical Report. 2019. Available online: https://blog.netlab.360.com/an-analysis-of-godlua-backdoor-en/ (accessed on 10 June 2022).

7. Cyber Security Review. Iranian Hacker Group Becomes First Known APT to Weaponize DNS-over-HTTPS (DoH). Technical Report. 2020. Available online: https://www.cybersecurity-review.com/news-august-2020/iranian-hacker-group-becomes-first-known-apt-to-weaponize-dns-over-https-doh/ (accessed on 10 June 2022).

8. Banadaki, Y.M.; Robert, S. Detecting malicious DNS over HTTPS traffic in domain name system using machine learning classifiers. J. Comput. Sci. Appl. 2020, 8, 46–55.

9. Montazerishatoori, M.; Davidson, L.; Kaur, G.; Lashkari, A.H. Detection of DoH tunnels using time-series classification of encrypted traffic. In Proceedings of the 2020 IEEE International Conference on Dependable, Autonomic and Secure Computing, International Conference on Pervasive Intelligence and Computing, International Conference on Cloud and Big Data Computing, International Conference on Cyber Science and Technology Congress (DASC/PiCom/CBDCom/CyberSciTech), Calgary, AB, Canada, 17–22 August 2020; pp. 63–70.

10. Al-Fawa’reh, M.; Ashi, Z.; Jafar, M.T. Detecting malicious DNS queries over encrypted tunnels using statistical analysis and bi-directional recurrent neural networks. Karbala Int. J. Mod. Sci. 2021, 7, 4.

11. Nguyen, T.A.; Park, M. DoH tunneling detection system for enterprise network using deep learning technique. Appl. Sci. 2022, 12, 2416.

12. Zhan, M.; Li, Y.; Yu, G.; Li, B.; Wang, W. Detecting DNS over HTTPS based data exfiltration. Comput. Netw. 2022, 209, 108919.

13. Mitsuhashi, R.; Satoh, A.; Jin, Y.; Iida, K.; Takahiro, S.; Takai, Y. Identifying malicious DNS tunnel tools from DoH traffic using hierarchical machine learning classification. In International Conference on Information Security; Springer: Berlin/Heidelberg, Germany, 2021; pp. 238–256.

14. Zebin, T.; Rezvy, S.; Luo, Y. An explainable AI-based intrusion detection system for DNS over HTTPS (DoH) attacks. IEEE Trans. Inf. Forensics Secur. 2022, 17, 2339–2349.

15. Ren, H.; Wang, X. Review of attention mechanism. J. Comput. Appl. 2021, 41, 6.

16. Mnih, V.; Heess, N.; Graves, A.; Kavukcuoglu, K. Recurrent models of visual attention. Adv. Neural Inf. Process. Syst. 2014, 3, 2204–2212.

17. Zhu, Z.; Rao, Y.; Wu, Y.; Qi, J.; Zhang, Y. Research Progress of Attention Mechanism in Deep Learning. J. Chin. Inf. Process. 2019, 33, 11.

18. Zhao, S.; Zhang, Z. Attention-via-attention neural machine translation. In Proceedings of the AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018.

19. Britz, D.; Goldie, A.; Luong, M.T.; Le, Q. Massive exploration of neural machine translation architectures. arXiv 2017, arXiv:1703.03906.

20. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. arXiv 2017, arXiv:1706.03762.

21. Brown, T.B.; Mann, B.; Ryder, N.; Subbiah, M.; Amodei, D. Language models are few-shot learners. arXiv 2020, arXiv:2005.14165.

22. Ming, Y.; Meng, X.; Fan, C.; Yu, H. Deep learning for monocular depth estimation: A review. Neurocomputing 2021, 438, 14–33.

23. Wang, Y.; Dong, X.; Li, G.; Dong, J.; Yu, H. Cascade regression-based face frontalization for dynamic facial expression analysis. Cogn. Comput. 2022, 14, 1571–1584.

24. Bahdanau, D.; Cho, K.; Bengio, Y. Neural machine translation by jointly learning to align and translate. arXiv 2014, arXiv:1409.0473.

25. Liu, S.; Zhang, X. Intrusion Detection System Based on Dual Attention. Netinfo Secur. 2022, in press.

26. Zhang, G.; Yan, F.; Zhang, D.; Liu, X. Insider Threat Detection Model Based on LSTM-Attention. Netinfo Secur. 2022, in press.

27. Jiang, T.; Yin, W.; Cai, B.; Zhang, K. Encrypted malicious traffic identification based on hierarchical spatiotemporal feature and Multi-Head attention. Comput. Eng. 2021, 47, 101–108.

28. Wang, H.; Wei, T.; Huangfu, Y.; Li, L.; Shen, F. Enabling Self-Attention based multi-feature anomaly detection and classification of network traffic. J. East China Norm. Univ. (Nat. Sci.) 2021, in press.

29. Wang, R.; Ren, H.; Dong, W.; Li, H.; Sun, X. Network traffic anomaly detection model based on stacked convolution attention. Comput. Eng. 2022, in press.

30. Lin, X.; Xiong, G.; Gou, G.; Li, Z.; Shi, J.; Yu, J. ET-BERT: A contextualized datagram representation with pre-training transformers for encrypted traffic classification. Proc. ACM Web Conf. 2022, 2022, 633–642.

31. Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

32. Ekman, E. iodine; Technical Report; lwIP Developers: New York, NY, USA, 2014.

33. Ron. dnscat2; Technical Report; SkullSecurity: New York, NY, USA, 2014.

34. Dembour, O. dns2tcp; Technical Report; SkullSecurity: New York, NY, USA, 2017.

35. Huo, Y.; Zhao, F. Analysis of Encrypted Malicious Traffic Detection Based on Stacking and Multi-feature Fusion. Comput. Eng. 2022, 142–148.

36. Torroledo, I.; Camacho, L.D.; Bahnsen, A.C. Hunting malicious TLS certificates with deep neural networks. In Proceedings of the 11th ACM Workshop on Artificial Intelligence and Security, Toronto, Canada, 15–19 October 2018; Association for Computing Machinery: New York, NY, USA.

37. Pai, K.C.; Mitra, S.; Madhusoodhana, C.S. Novel TLS signature extraction for malware detection. In Proceedings of the 2020 IEEE International Conference on Electronics, Computing and Communication Technologies (CONECCT), Bangalore, India, 2–4 July 2020.

38. Lashkari, A.H. DoHLyzer; Technical Report; York University: York, UK, 2020.

39. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

40. Niu, Z.; Zhong, G.; Yu, H. A review on the attention mechanism of deep learning. Neurocomputing 2021, 452, 48–62.

41. Van Der Maaten, L.; Hinton, G. Visualizing data using t-SNE. J. Mach. Learn. Res. 2008, 9, 2579–2605.

42. Wang, W.; Sheng, Y.; Wang, J.; Zeng, X.; Ye, X.; Huang, Y.; Zhu, M. HAST-IDS: Learning hierarchical spatial-temporal features using deep neural networks to improve intrusion detection. IEEE Access 2018, 6, 1792–1806.

43. Wang, M.; Zheng, K.; Ning, X.; Yang, Y.; Wang, X. CENTIME: A direct comprehensive traffic features extraction for encrypted traffic classification. In Proceedings of the 2021 IEEE 6th International Conference on Computer and Communication Systems (ICCCS), Chengdu, China, 23–26 April 2021; pp. 490–498.

| Research Category | Publication Year | Author | Features/Neural Network Input | Method |
|---|---|---|---|---|
| Detection | 2020 | Banadaki et al. [8] | Statistical Features | LGBM, Random Forest |
| | 2020 | MontazeriShatoori et al. [9] | Statistical Features | Random Forest, Naive Bayes, SVM, LSTM |
| | 2021 | Al-Fawa’reh et al. [10] | Statistical Features | Bi-RNN |
| | 2022 | Nguyen et al. [11] | Statistical Features | Transformer |
| | 2022 | Zhan et al. [12] | Statistical Features + TLS fingerprint | Decision Tree, Random Forest, Logistic Regression |
| Detection and Identification | 2021 | Mitsuhashi et al. [13] | Statistical Features | LGBM, XGBoost |
| | 2022 | Zebin et al. [14] | Statistical Features | Stacked Random Forest |

| Category | Number | Feature |
|---|---|---|
| Duration | 1 | Session duration |
| Number of bytes | 2 | Number of session bytes sent |
| | 3 | Rate of session bytes sent |
| | 4 | Number of session bytes received |
| | 5 | Rate of session bytes received |
| Packet length | 6 | Mean packet length |
| | 7 | Median packet length |
| | 8 | Mode packet length |
| | 9 | Variance of packet length |
| | 10 | Standard deviation of packet length |
| | 11 | Coefficient of variation of packet length |
| | 12 | Skew from median packet length |
| | 13 | Skew from mode packet length |
| Packet time | 14 | Mean packet time |
| | 15 | Median packet time |
| | 16 | Mode packet time |
| | 17 | Variance of packet time |
| | 18 | Standard deviation of packet time |
| | 19 | Coefficient of variation of packet time |
| | 20 | Skew from median packet time |
| | 21 | Skew from mode packet time |
| Request/response time difference | 22 | Mean request/response time difference |
| | 23 | Median request/response time difference |
| | 24 | Mode request/response time difference |
| | 25 | Variance of request/response time difference |
| | 26 | Standard deviation of request/response time difference |
| | 27 | Coefficient of variation of request/response time difference |
| | 28 | Skew from median request/response time difference |
| | 29 | Skew from mode request/response time difference |
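The packet-length, packet-time, and request/response time-difference categories in the statistical feature table apply the same eight descriptive statistics to three different per-session value sequences. The sketch below shows that computation under assumptions that this section does not confirm: population variance is used; "skew from median" is taken as 3(mean - median)/std and "skew from mode" as (mean - mode)/std (Pearson-style coefficients, which we believe match the DoHLyzer tool [38]); "packet time" is whatever per-packet time series the caller supplies; byte rates divide by the session duration; and empty sequences or zero denominators yield 0.0. The function names are hypothetical.

```python
import math
import statistics
from collections import Counter
from typing import List, Sequence


def describe(values: Sequence[float]) -> List[float]:
    """Mean, median, mode, variance, std, CV, skew-from-median, skew-from-mode.

    Assumptions: population variance; 0.0 for empty input, and 0.0 for the
    ratio-based statistics when their denominator is zero.
    """
    if not values:
        return [0.0] * 8
    mean = sum(values) / len(values)
    median = statistics.median(values)
    mode = Counter(values).most_common(1)[0][0]  # ties: first value seen
    variance = sum((v - mean) ** 2 for v in values) / len(values)
    std = math.sqrt(variance)
    cv = std / mean if mean else 0.0
    skew_median = 3 * (mean - median) / std if std else 0.0
    skew_mode = (mean - mode) / std if std else 0.0
    return [mean, median, mode, variance, std, cv, skew_median, skew_mode]


def session_features(duration: float, bytes_sent: int, bytes_received: int,
                     packet_lengths: Sequence[float],
                     packet_times: Sequence[float],
                     rr_time_diffs: Sequence[float]) -> List[float]:
    """The 29 features in table order (numbers 1 to 29)."""
    def rate(n_bytes: int) -> float:
        return n_bytes / duration if duration > 0 else 0.0

    features = [duration, bytes_sent, rate(bytes_sent),
                bytes_received, rate(bytes_received)]
    for seq in (packet_lengths, packet_times, rr_time_diffs):
        features.extend(describe(seq))  # 8 statistics per sequence
    return features


stats = describe([100, 100, 200, 400])
print([round(s, 2) for s in stats])
# [200.0, 150.0, 100, 15000.0, 122.47, 0.61, 1.22, 0.82]
```

Five session-level values plus three groups of eight statistics give the 29-dimensional vector that the architecture table feeds into the statistical branch.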

| Category | Browsers/Tools | Number of Flows | Number of Sessions | Number of Sessions after Preprocessing |
|---|---|---|---|---|
| malicious_DoH | iodine | 46,613 | 12,368 | 12,367 |
| | dnscat2 | 35,622 | 10,298 | 10,298 |
| | dns2tcp | 167,515 | 121,897 | 121,738 |
| benign_DoH | Google Chrome, Mozilla Firefox | 19,807 | 27,940 | 26,238 |
| non_DoH | Google Chrome, Mozilla Firefox | 897,493 | 492,171 | 485,654 |

| Substructure | Layer | Operation | Input | Output |
|---|---|---|---|---|
| Residual Neural Network | Conv1D | One-dimensional convolution | 1×1024 | 32×1024 |
| | ResLayer1 | One-dimensional convolution ×4 | 32×1024 | 32×1024 |
| | ResLayer2 | One-dimensional convolution ×4 | 32×1024 | 64×512 |
| | ResLayer3 | One-dimensional convolution ×4 | 64×512 | 128×256 |
| | ResLayer4 | One-dimensional convolution ×4 | 128×256 | 256×128 |
| | AvgPooling1D | Global average pooling | 256×128 | 256×1 |
| Multi-Head Attention mechanism | Linear | Linear transformation + Sigmoid | 29×1 | 14×1 |
| | Embedding | Word embedding | 14×1 | 14×128 |
| | Multi-Head Attention | Calculate the attention weight matrix | (256 + 14)×128 | (256 + 14)×128 |
| | Feed Forward | Linear transformation + ReLU + linear transformation | (256 + 14)×128 | (256 + 14)×128 |
| | Flatten | Flatten the weighted fusion feature matrix | (256 + 14)×128 | 34,560×1 |
| MLP + softmax | Linear | Linear transformation + ReLU | 34,560 + 256 | 200 |
| | Linear | Linear transformation | 200 | 30 |
| | Linear | Linear transformation + softmax | 30 | 5 |
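The shape arithmetic in the architecture table can be checked with a short bookkeeping sketch. It does not implement any layer; it only propagates (channels, length) and (tokens, width) shapes. It rests on readings inferred from the table's dimensions rather than stated by it: ResLayer1 keeps the sequence length while ResLayer2 to ResLayer4 halve it with stride 2; the 256 channels of the ResLayer4 output (each of length 128) serve as 256 byte-sequence tokens of width 128 next to the 14 embedded statistical tokens; and the MLP input concatenates the flattened attention output with the 256-dimensional pooled vector. The function names are hypothetical.

```python
from typing import Dict, Tuple

Shape = Tuple[int, ...]


def conv_out(shape: Shape, out_channels: int, stride: int) -> Shape:
    """(channels, length) after a 1-D conv stage; assumes 'same'-style padding."""
    _, length = shape
    return (out_channels, -(-length // stride))  # ceil(length / stride)


def trace_ffmr_shapes(n_bytes: int = 1024, n_stats: int = 29,
                      n_stat_tokens: int = 14, d_model: int = 128,
                      n_classes: int = 5) -> Dict[str, Shape]:
    shapes: Dict[str, Shape] = {}
    # Residual neural network branch over the session byte sequence.
    x: Shape = conv_out((1, n_bytes), 32, 1)
    shapes["Conv1D"] = x
    for name, channels, stride in [("ResLayer1", 32, 1), ("ResLayer2", 64, 2),
                                   ("ResLayer3", 128, 2), ("ResLayer4", 256, 2)]:
        x = conv_out(x, channels, stride)
        shapes[name] = x
    pooled = (x[0], 1)
    shapes["AvgPooling1D"] = pooled
    if x[1] != d_model:
        raise ValueError("byte tokens must have width d_model to be fused")
    # Statistical branch: 29 features -> 14 values -> 14 tokens of width d_model.
    shapes["StatisticalInput"] = (n_stats, 1)
    shapes["Linear+Sigmoid"] = (n_stat_tokens, 1)
    shapes["Embedding"] = (n_stat_tokens, d_model)
    # Token-wise concatenation; attention and feed-forward keep the shape.
    fused = (x[0] + n_stat_tokens, d_model)
    shapes["MultiHeadAttention"] = fused
    shapes["FeedForward"] = fused
    flat = fused[0] * fused[1]
    shapes["Flatten"] = (flat, 1)
    # MLP widths: concatenated input, hidden 200, hidden 30, output classes.
    shapes["MLP"] = (flat + pooled[0], 200, 30, n_classes)
    return shapes


for layer, shape in trace_ffmr_shapes().items():
    print(f"{layer:20s} {shape}")
```

Running it reproduces the table's Input and Output entries, including the flattened size (256 + 14) × 128 = 34,560 and the MLP input width 34,560 + 256 = 34,816.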

| Class | Metric | LightGBM [13] | RF [8] | HAST-II [42] | CENTIME [43] | FF-MR |
|---|---|---|---|---|---|---|
| Macro-averaging | Macro_P | 0.9558 | 0.9609 | 0.9892 | 0.9913 | 0.9972 |
| | Macro_R | 0.9489 | 0.9482 | 0.9391 | 0.992 | 0.9973 |
| | Macro_F1 | 0.9522 | 0.9543 | 0.9616 | 0.9916 | 0.9978 |
| iodine | Precision | 0.9234 | 0.9319 | 0.9743 | 0.9773 | 0.994 |
| | Recall | 0.9458 | 0.942 | 0.905 | 0.9766 | 0.9935 |
| | F1-Score | 0.9345 | 0.937 | 0.9384 | 0.977 | 0.9951 |
| dnscat2 | Precision | 0.9157 | 0.913 | 0.9957 | 0.9864 | 0.9939 |
| | Recall | 0.9107 | 0.9154 | 0.7919 | 0.9874 | 0.9942 |
| | F1-Score | 0.9132 | 0.9142 | 0.8822 | 0.9869 | 0.9954 |
| dns2tcp | Precision | 0.9911 | 0.9926 | 0.9761 | 0.9931 | 0.9993 |
| | Recall | 0.986 | 0.9881 | 0.9998 | 0.9982 | 0.9995 |
| | F1-Score | 0.9885 | 0.9903 | 0.9878 | 0.9956 | 0.9995 |
| benign_DoH | Precision | 0.9513 | 0.9697 | 0.9999 | 0.9994 | 0.999 |
| | Recall | 0.9033 | 0.896 | 0.999 | 0.9992 | 0.9995 |
| | F1-Score | 0.9267 | 0.9314 | 0.9994 | 0.9993 | 0.9992 |
| non_DoH | Precision | 0.9977 | 0.9975 | 0.9999 | 1.0000 | 0.9999 |
| | Recall | 0.9987 | 0.9994 | 1.0000 | 0.9987 | 0.9999 |
| | F1-Score | 0.9982 | 0.9985 | 0.9999 | 0.9993 | 0.9999 |
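The metrics in the results tables presumably follow the standard one-vs-rest definitions over the five classes: for each class, precision = TP/(TP + FP), recall = TP/(TP + FN), and F1 is their harmonic mean, while the macro-averaged values are unweighted means over the classes. The sketch below computes these from a confusion matrix; the function names and the toy matrix are illustrative, not taken from the paper. As a consistency check, averaging FF-MR's per-class Precision, Recall, and F1-Score entries in the table above reproduces its Macro_P, Macro_R, and Macro_F1 values to four decimal places.

```python
from typing import Dict, List, Sequence


def per_class_metrics(confusion: Sequence[Sequence[int]]) -> List[Dict[str, float]]:
    """One-vs-rest metrics; confusion[i][j] = sessions of true class i predicted as j."""
    k = len(confusion)
    results = []
    for c in range(k):
        tp = confusion[c][c]
        fp = sum(confusion[r][c] for r in range(k)) - tp  # predicted c, truly other
        fn = sum(confusion[c]) - tp                       # truly c, predicted other
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = (2 * precision * recall / (precision + recall)
              if precision + recall else 0.0)
        results.append({"precision": precision, "recall": recall, "f1": f1})
    return results


def macro_average(metrics: List[Dict[str, float]]) -> Dict[str, float]:
    """Unweighted mean over classes, so rare classes count as much as common ones."""
    return {key: sum(m[key] for m in metrics) / len(metrics)
            for key in ("precision", "recall", "f1")}


# Toy 3-class confusion matrix (illustrative numbers, not from the paper).
toy = [[8, 2, 0],
       [1, 9, 0],
       [0, 0, 10]]
print(macro_average(per_class_metrics(toy)))

# Consistency check against FF-MR's per-class precision values in the table.
ffmr_precision = [0.994, 0.9939, 0.9993, 0.999, 0.9999]
print(round(sum(ffmr_precision) / len(ffmr_precision), 4))  # 0.9972
```

Macro averaging matters here because the classes are strongly imbalanced (for example, far more non_DoH sessions than dnscat2 sessions), so a micro average would be dominated by the large classes.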

| Class | Metric | 1D-CNN | 2D-CNN | 1D-ResNet | 2D-ResNet | MHA-ResNet |
|---|---|---|---|---|---|---|
| Macro-averaging | Macro_P | 0.9061 | 0.9098 | 0.9838 | 0.9514 | 0.9972 |
| | Macro_R | 0.9089 | 0.9157 | 0.9835 | 0.9519 | 0.9973 |
| | Macro_F1 | 0.9075 | 0.9126 | 0.9836 | 0.9516 | 0.9978 |
| iodine | Precision | 0.847 | 0.8557 | 0.9696 | 0.9262 | 0.994 |
| | Recall | 0.8456 | 0.8513 | 0.9673 | 0.9188 | 0.9935 |
| | F1-Score | 0.8463 | 0.8535 | 0.9684 | 0.9225 | 0.9951 |
| dnscat2 | Precision | 0.7007 | 0.7081 | 0.954 | 0.8424 | 0.9939 |
| | Recall | 0.7238 | 0.7524 | 0.9558 | 0.8563 | 0.9942 |
| | F1-Score | 0.712 | 0.7296 | 0.9549 | 0.8493 | 0.9954 |
| dns2tcp | Precision | 0.9851 | 0.9874 | 0.9959 | 0.9899 | 0.9993 |
| | Recall | 0.9825 | 0.9825 | 0.9959 | 0.9892 | 0.9995 |
| | F1-Score | 0.9838 | 0.985 | 0.9959 | 0.9896 | 0.9995 |
| benign_DoH | Precision | 0.9983 | 0.9981 | 0.9994 | 0.9989 | 0.999 |
| | Recall | 0.9929 | 0.9926 | 0.9987 | 0.9952 | 0.9995 |
| | F1-Score | 0.9956 | 0.9953 | 0.999 | 0.997 | 0.9992 |
| non_DoH | Precision | 0.9996 | 0.9996 | 0.9999 | 0.9997 | 0.9999 |
| | Recall | 0.9999 | 0.9999 | 1.0000 | 0.9999 | 0.9999 |
| | F1-Score | 0.9997 | 0.9997 | 0.9999 | 0.9998 | 0.9999 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wang, Y.; Shen, C.; Hou, D.; Xiong, X.; Li, Y. FF-MR: A DoH-Encrypted DNS Covert Channel Detection Method Based on Feature Fusion. Appl. Sci. 2022, 12, 12644. https://doi.org/10.3390/app122412644
