Anthropomorphic Grasping of Complex-Shaped Objects Using Imitation Learning

Abstract

1. Introduction

2. Related Work

3. Hardware Configuration

3.1. Manipulator Arm

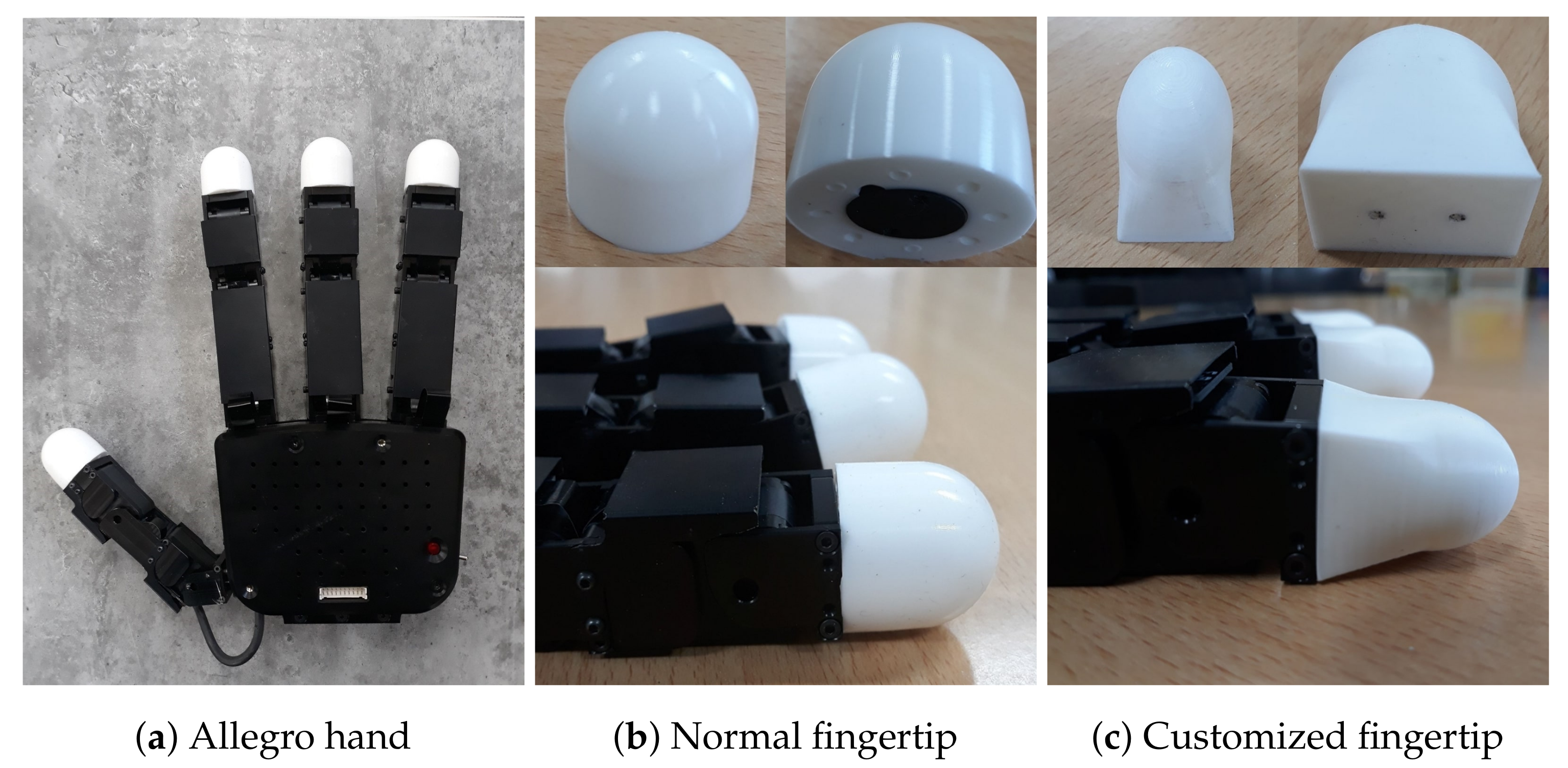

3.2. Robot Hand

3.3. RGBD Camera

4. System Overview

5. Collecting Data for Imitation Learning

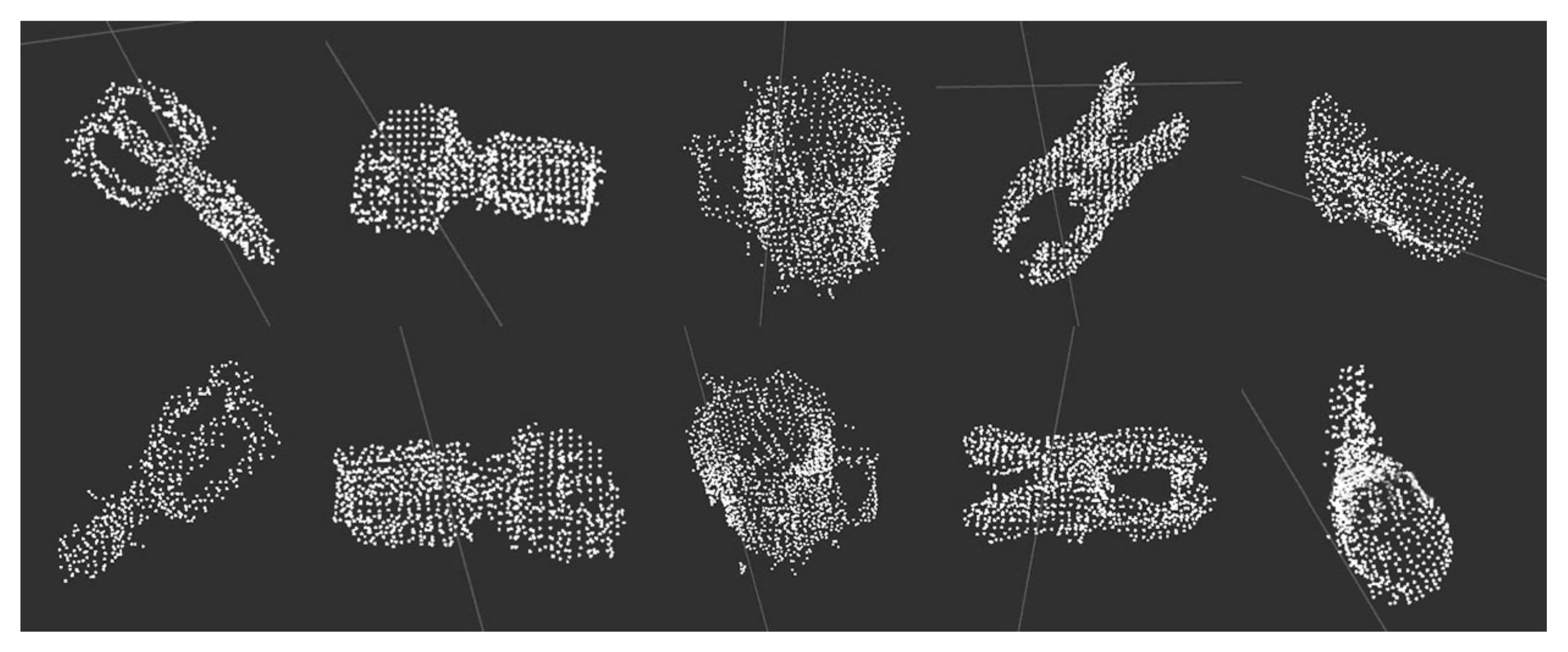

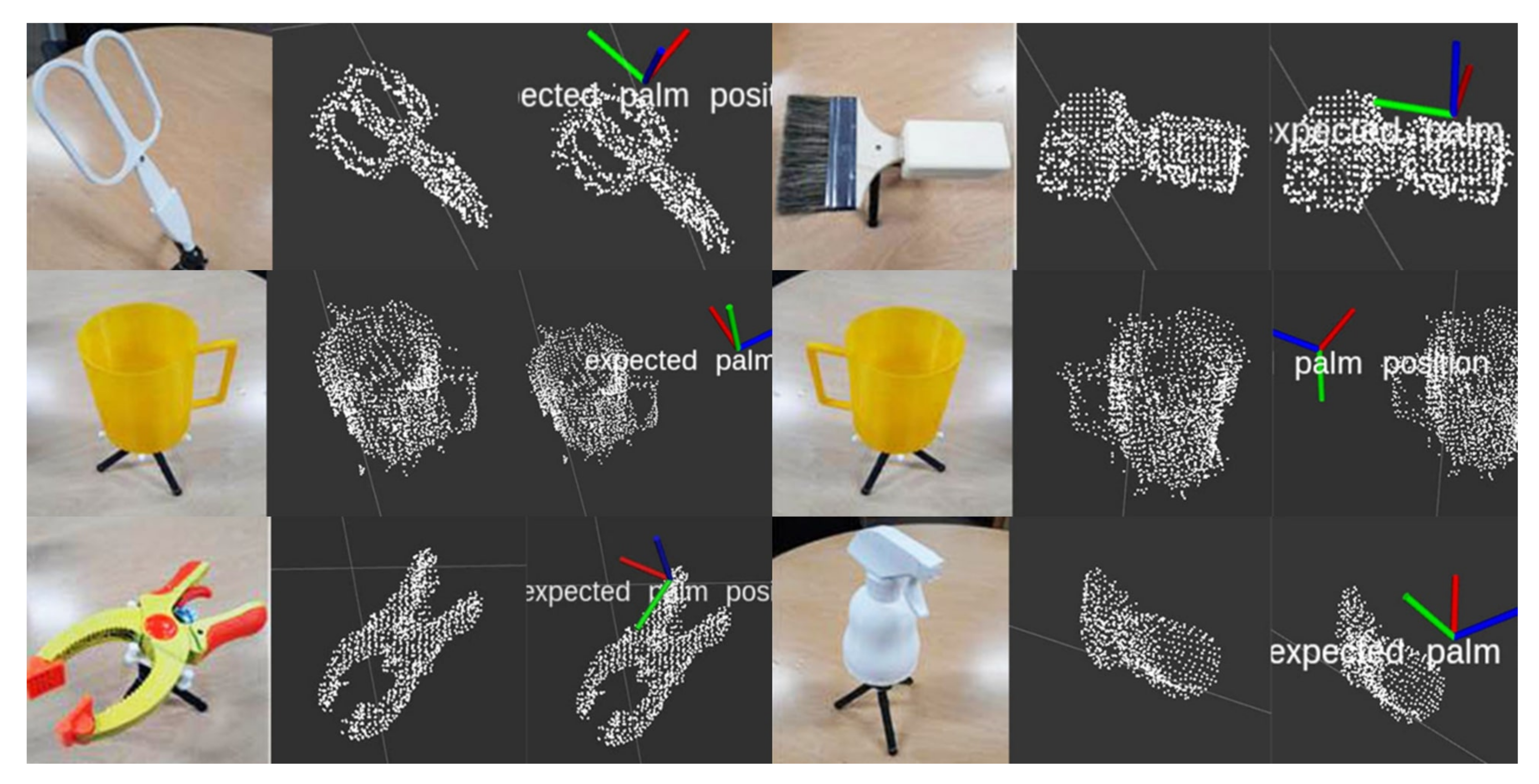

5.1. Three-Dimensional Data

Algorithm 1 Three-Dimensional Reconstruction Algorithm
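Algorithm 1 reconstructs three-dimensional data, and the hardware includes an RGBD camera (Section 3.3). A standard building block of RGBD reconstruction is back-projecting each valid depth pixel into a camera-frame 3D point with the pinhole model. The sketch below shows only that step and is not the authors' Algorithm 1. The intrinsics fx, fy, cx, cy, the millimeter depth scale and the function name depth_to_points are assumptions for illustration.

```python
"""Back-project a depth image into a camera-frame point cloud (pinhole model).

Illustrative sketch only: intrinsics and depth units are assumed, not taken
from the paper.
"""
from typing import List, Tuple

Point = Tuple[float, float, float]


def depth_to_points(depth: List[List[float]], fx: float, fy: float,
                    cx: float, cy: float, depth_scale: float = 0.001) -> List[Point]:
    """Convert a row-major depth image in raw sensor units to 3D points in meters.

    Pixels with depth <= 0 (no measurement) are skipped.
    depth_scale = 0.001 assumes the sensor reports millimeters (hypothetical).
    """
    points: List[Point] = []
    for v, row in enumerate(depth):        # v: pixel row index
        for u, raw in enumerate(row):      # u: pixel column index
            if raw <= 0:
                continue
            z = raw * depth_scale          # raw units -> meters
            x = (u - cx) * z / fx          # pinhole back-projection
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points


if __name__ == "__main__":
    # 2x2 toy depth image in millimeters; the top-right pixel has no reading.
    toy = [[1000, 0],
           [1000, 2000]]
    for p in depth_to_points(toy, fx=500.0, fy=500.0, cx=0.5, cy=0.5):
        print(p)
```

Points from several views would then be transformed into a common frame and merged; that registration step is outside this sketch.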

5.2. Trajectory Data

5.3. RGB Data

6. Grasping Pose Prediction

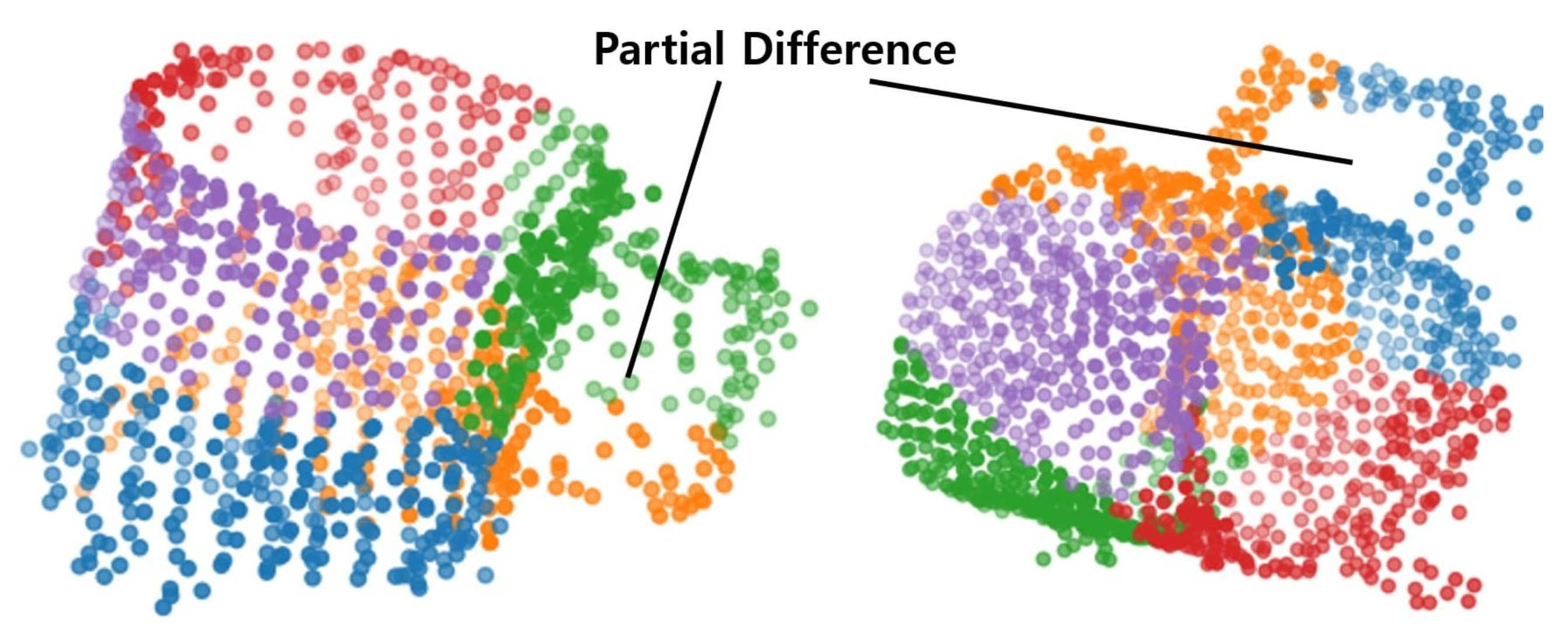

6.1. Candidate Data Selection

6.2. Transformation Matrix Creation
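A transformation matrix in this setting can be read as a 4 × 4 homogeneous transform relating a demonstrated object pose to a newly observed one. One common way to obtain such a matrix, sketched here as a stand-in and not as the authors' method, is to estimate the rigid transform between corresponding points with the SVD-based Kabsch method and then apply it to the demonstrated hand pose. Point correspondences are assumed to be known. The function name rigid_transform and the example poses are hypothetical.

```python
"""Estimate a 4x4 rigid transform between corresponding point sets (Kabsch/SVD).

Illustrative stand-in: correspondences are assumed known.
"""
import numpy as np


def rigid_transform(src: np.ndarray, dst: np.ndarray) -> np.ndarray:
    """Return T (4x4) minimizing sum_i ||R @ src_i + t - dst_i||^2 over rotations R.

    src, dst: (N, 3) arrays of corresponding points, N >= 3, not collinear.
    """
    src_c = src.mean(axis=0)
    dst_c = dst.mean(axis=0)
    H = (src - src_c).T @ (dst - dst_c)       # 3x3 cross-covariance matrix
    U, _, Vt = np.linalg.svd(H)
    d = np.sign(np.linalg.det(Vt.T @ U.T))    # -1 would indicate a reflection
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T   # proper rotation (det = +1)
    t = dst_c - R @ src_c
    T = np.eye(4)
    T[:3, :3] = R
    T[:3, 3] = t
    return T


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    demo_pts = rng.normal(size=(50, 3))       # object points in the demonstration
    theta = np.pi / 6                         # toy ground-truth yaw of the moved object
    R_true = np.array([[np.cos(theta), -np.sin(theta), 0.0],
                       [np.sin(theta),  np.cos(theta), 0.0],
                       [0.0, 0.0, 1.0]])
    observed_pts = demo_pts @ R_true.T + np.array([0.2, -0.1, 0.05])
    T = rigid_transform(demo_pts, observed_pts)
    hand_demo = np.eye(4)
    hand_demo[:3, 3] = [0.0, 0.0, 0.1]        # hypothetical demonstrated hand pose
    hand_new = T @ hand_demo                  # same grasp, expressed on the moved object
    print(np.round(hand_new, 3))
```

The determinant check keeps the result a proper rotation even when noisy or nearly planar points would otherwise yield a reflection.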

6.3. Grasping Pose Prediction

Algorithm 2 Predict_grasping_pose(rgb_image, 3d_data)

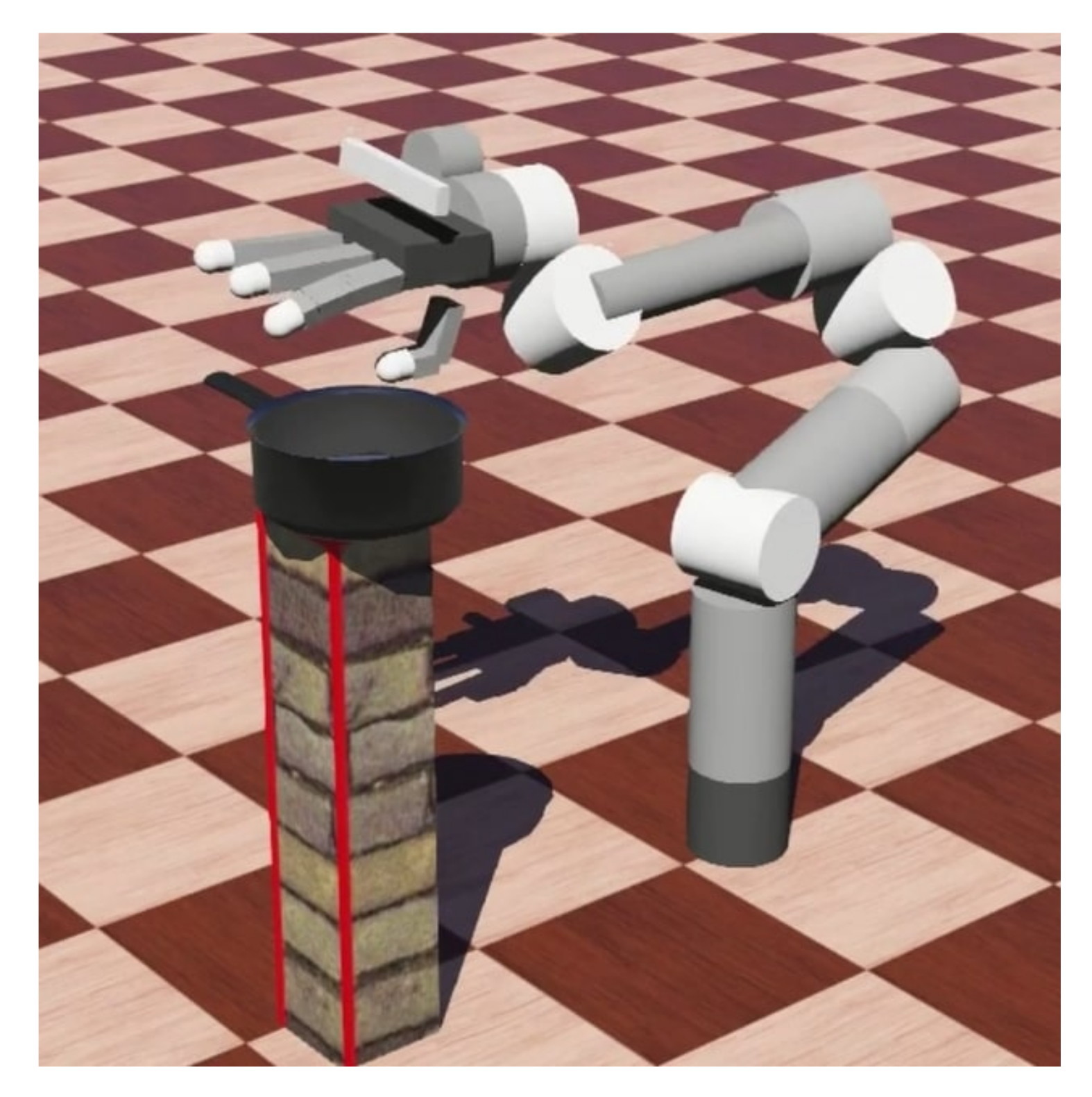

7. Manipulation

7.1. Extraction

7.2. Position Trajectory
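Dynamic movement primitives (DMP), which appear in the abbreviation list, are a standard way to encode a demonstrated position trajectory so that it can be replayed toward a different goal. The sketch below assumes such an encoding for a single coordinate. It is a generic discrete DMP in the formulation of Ijspeert et al., and the class name, gains (alpha_z = 25, beta_z = alpha_z / 4, alpha_x = 4) and basis count are illustrative choices, not values from the paper.

```python
import numpy as np


class DMP1D:
    """Discrete dynamic movement primitive for one coordinate (illustrative).

    Transformation system: tau*dz = alpha_z*(beta_z*(g - y) - z) + f(x), tau*dy = z
    Canonical system:      tau*dx = -alpha_x*x
    """

    def __init__(self, n_basis=20, alpha_z=25.0, alpha_x=4.0):
        self.alpha_z = alpha_z
        self.beta_z = alpha_z / 4.0                   # critical damping
        self.alpha_x = alpha_x
        # Gaussian centers spread evenly in time, i.e. exponentially in phase x.
        self.c = np.exp(-alpha_x * np.linspace(0.0, 1.0, n_basis))
        self.h = 1.0 / np.gradient(self.c) ** 2       # widths from neighbor spacing
        self.w = np.zeros(n_basis)

    def _forcing(self, x, g):
        psi = np.exp(-self.h * (x - self.c) ** 2)
        return psi @ self.w / (psi.sum() + 1e-10) * x * (g - self.y0)

    def fit(self, y_demo, dt):
        """Learn basis weights from one demonstration sampled every dt seconds."""
        y = np.asarray(y_demo, dtype=float)
        self.tau = (len(y) - 1) * dt
        self.y0, self.g = y[0], y[-1]
        yd = np.gradient(y, dt)
        ydd = np.gradient(yd, dt)
        x = np.exp(-self.alpha_x * np.arange(len(y)) * dt / self.tau)
        # Forcing term that makes the spring-damper reproduce the demonstration.
        f_target = self.tau**2 * ydd - self.alpha_z * (self.beta_z * (self.g - y) - self.tau * yd)
        s = x * (self.g - self.y0)
        psi = np.exp(-self.h[:, None] * (x[None, :] - self.c[:, None]) ** 2)  # (n_basis, T)
        # Locally weighted regression: one weight per basis function.
        self.w = (psi * s * f_target).sum(axis=1) / ((psi * s * s).sum(axis=1) + 1e-10)
        return self

    def rollout(self, dt, goal=None):
        """Integrate with explicit Euler steps, optionally toward a new goal."""
        g = self.g if goal is None else goal
        y, z, x = self.y0, 0.0, 1.0
        path = [y]
        for _ in range(int(round(self.tau / dt))):
            dz = (self.alpha_z * (self.beta_z * (g - y) - z) + self._forcing(x, g)) / self.tau
            dy = z / self.tau
            dx = -self.alpha_x * x / self.tau
            y, z, x = y + dy * dt, z + dz * dt, x + dx * dt
            path.append(y)
        return np.array(path)


if __name__ == "__main__":
    dt = 0.001
    u = np.linspace(0.0, 1.0, 1001)
    demo = 10 * u**3 - 15 * u**4 + 6 * u**5          # minimum-jerk reach from 0 to 1
    dmp = DMP1D().fit(demo, dt)
    same_goal = dmp.rollout(dt)
    new_goal = dmp.rollout(dt, goal=1.5)
    print(f"max tracking error: {np.max(np.abs(same_goal - demo)):.4f}")
    print(f"final positions: {same_goal[-1]:.3f}, {new_goal[-1]:.3f}")
```

Because the forcing term is scaled by (g − y0), the same learned weights produce a geometrically scaled version of the demonstrated motion when the goal changes.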

7.3. Orientation and Hand Shape Trajectory

8. Experiment

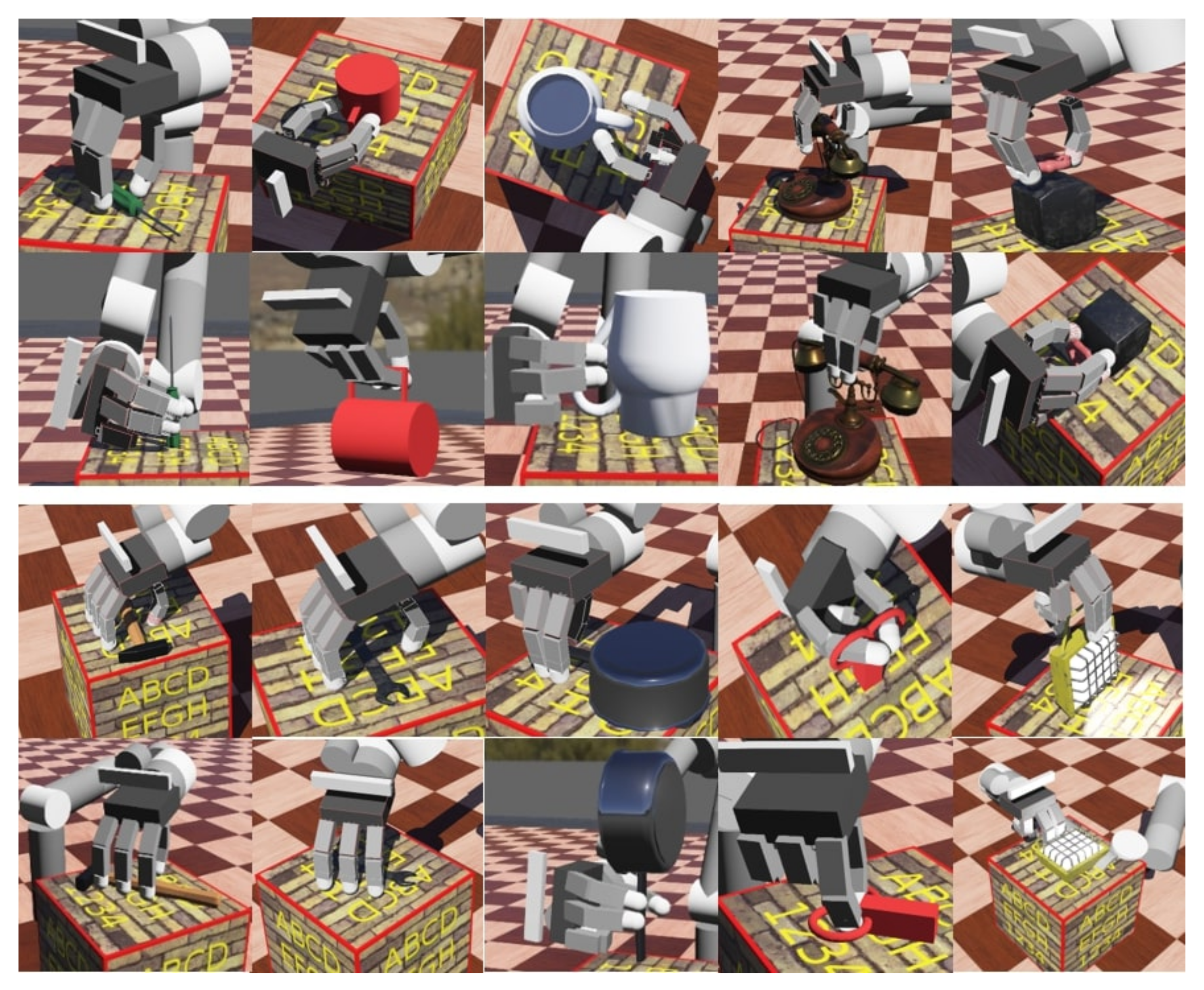

8.1. Simulation Results

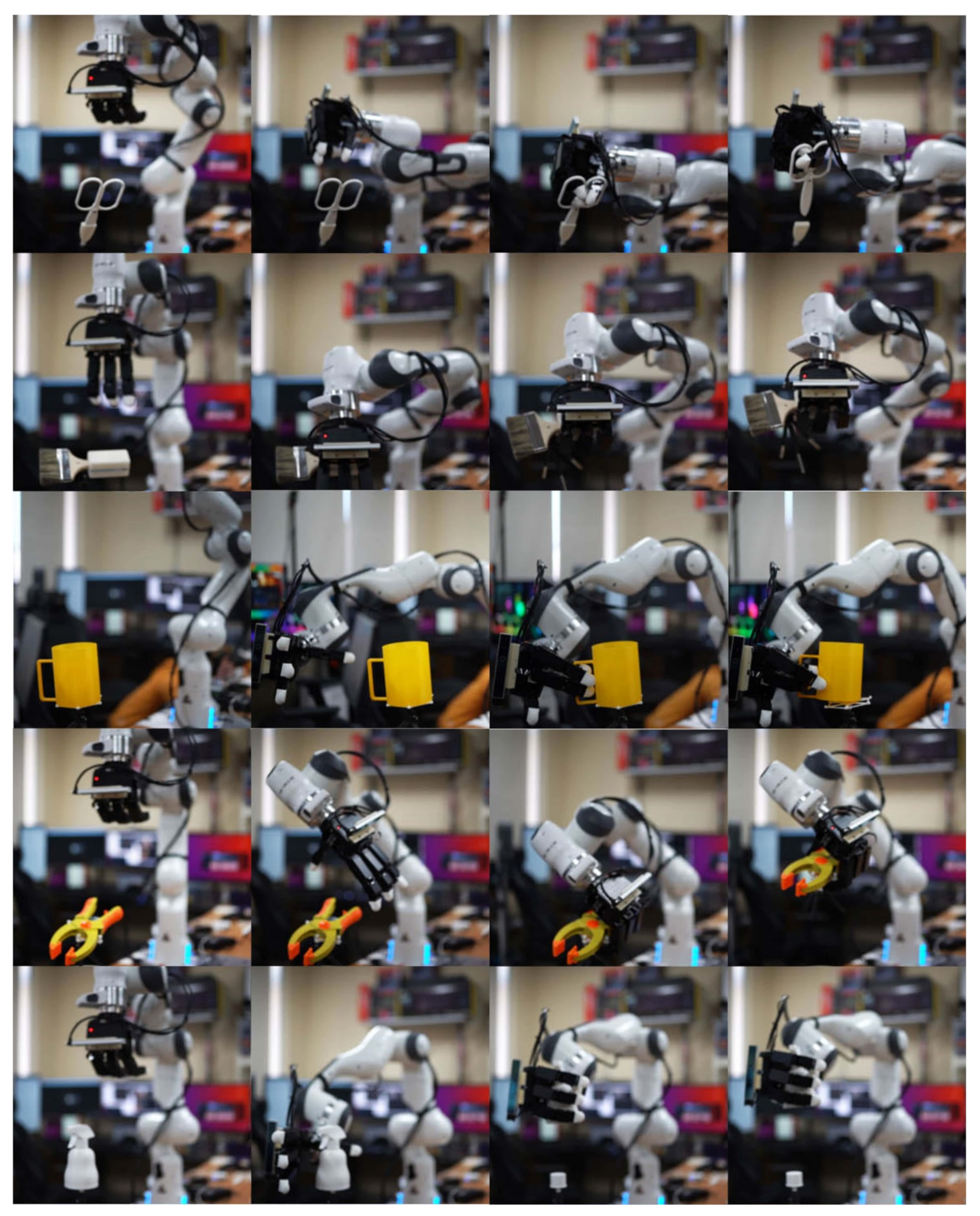

8.2. Experimental Results

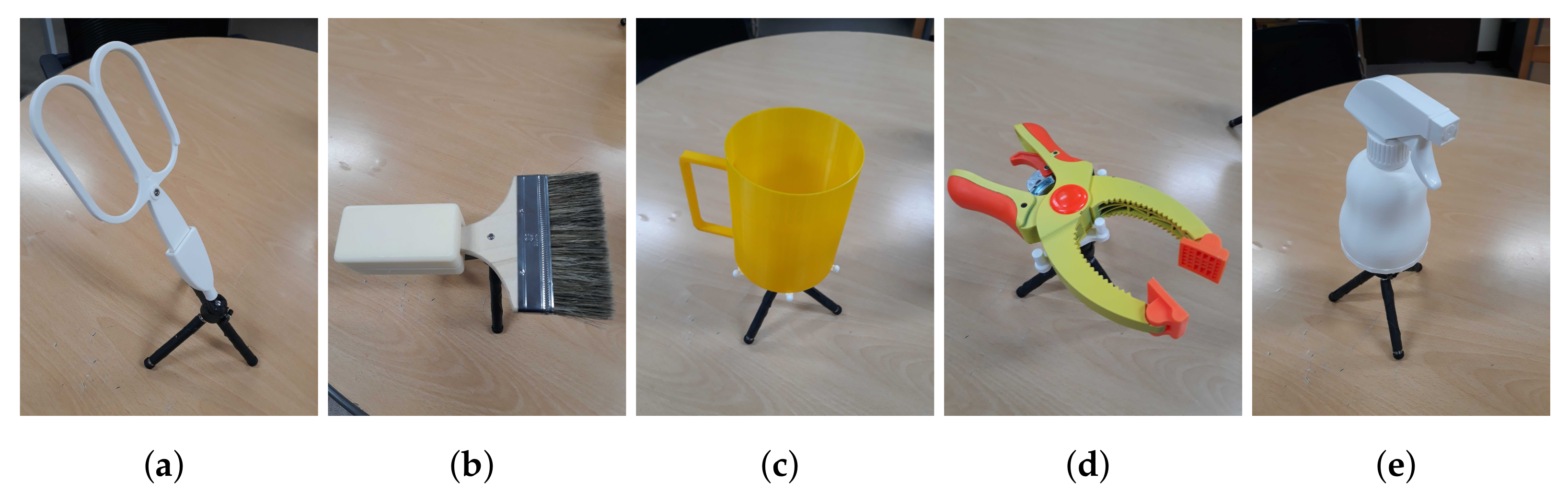

8.2.1. Official Test

8.2.2. Success Conditions

8.2.3. Results

8.3. Discussions

9. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| DOF | Degree of freedom |
| ResNet | Residual neural network |
| DMP | Dynamic movement primitive |
| FK | Forward kinematics |
| IK | Inverse kinematics |
| KNN | K-means clustering |
| HMM | Hidden Markov model |
| DNN | Deep neural network |
| GMR | Gaussian mixture regression |
| GMM | Gaussian mixture model |
| STARHMM | State-based transitions autoregressive hidden Markov model |
| MoMP | Mixture of motor primitives |


| Object | Trials | Successes | Failures | Success Rate | Failure Rate |
|---|---|---|---|---|---|
| Screwdriver | 10 | 10 | 0 | 100% | 0% |
| Cup | 10 | 9 | 1 | 90% | 10% |
| Carafe | 10 | 10 | 0 | 100% | 0% |
| Telephone | 10 | 10 | 0 | 100% | 0% |
| Valve | 10 | 10 | 0 | 100% | 0% |
| Hammer | 10 | 10 | 0 | 100% | 0% |
| Wrench | 10 | 10 | 0 | 100% | 0% |
| Skillet | 10 | 10 | 0 | 100% | 0% |
| Scissors | 10 | 8 | 2 | 80% | 20% |
| Lamp | 10 | 10 | 0 | 100% | 0% |
| Average | 10 | 9.7 | 0.3 | 97% | 3% |

| Object | Trials | Successes | Failures | Success Rate | Failure Rate |
|---|---|---|---|---|---|
| Scissors | 10 | 8 | 2 | 80% | 20% |
| Spray bottle | 10 | 9 | 1 | 90% | 10% |
| Clamp | 10 | 9 | 1 | 90% | 10% |
| Cup | 10 | 8 | 2 | 80% | 20% |
| Brush | 10 | 9 | 1 | 90% | 10% |
| Average | 10 | 8.6 | 1.4 | 86% | 14% |
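Every object in both result tables was tried the same number of times (10), so the Average row is the mean of the per-object counts and the average success rate equals the pooled success rate over all trials. The short check below recomputes the Average rows from the per-object success counts listed above. The dictionary and function names are illustrative.

```python
"""Recompute the Average rows of the two grasping result tables."""

TRIALS = 10  # trials per object, as listed in both tables

# Per-object success counts copied from the first and second result tables.
TABLE_1 = {"Screwdriver": 10, "Cup": 9, "Carafe": 10, "Telephone": 10, "Valve": 10,
           "Hammer": 10, "Wrench": 10, "Skillet": 10, "Scissors": 8, "Lamp": 10}
TABLE_2 = {"Scissors": 8, "Spray bottle": 9, "Clamp": 9, "Cup": 8, "Brush": 9}


def summarize(successes, trials=TRIALS):
    """Return (mean successes, mean failures, pooled success rate in %)."""
    n_objects = len(successes)
    total = sum(successes.values())
    avg_success = total / n_objects
    avg_failure = trials - avg_success
    rate = 100.0 * total / (n_objects * trials)   # equals the mean per-object rate here
    return avg_success, avg_failure, rate


if __name__ == "__main__":
    for name, table in (("First table", TABLE_1), ("Second table", TABLE_2)):
        avg_s, avg_f, rate = summarize(table)
        print(f"{name}: {avg_s:.1f} successes, {avg_f:.1f} failures, {rate:.0f}% success")
```

If objects had different trial counts, the pooled rate and the mean of per-object rates would diverge, and the table would need to state which one it reports.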


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Yi, J.-B.; Kim, J.; Kang, T.; Song, D.; Park, J.; Yi, S.-J. Anthropomorphic Grasping of Complex-Shaped Objects Using Imitation Learning. Appl. Sci. 2022, 12, 12861. https://doi.org/10.3390/app122412861
