TobSet: A New Tobacco Crop and Weeds Image Dataset and Its Utilization for Vision-Based Spraying by Agricultural Robots

Abstract

1. Introduction

1. Development and deployment of a vision-based robotic spraying system that replaces conventional broadcast spraying with a site-specific selective spraying technique, detecting and classifying tobacco plants and weeds in real time;

2. Building a tobacco image dataset (TobSet) that comprises labeled images of tobacco crops and weeds. The dataset was collected under challenging real in-field conditions to train and evaluate state-of-the-art deep learning algorithms. TobSet is open source and publicly available at https://github.com/mshahabalam/TobSet (accessed on 11 October 2021).

2. Data Description

1. It comprises labeled images of tobacco plants and weeds that computer scientists can use to evaluate the performance of their computer vision algorithms;

2. Scientists working on agricultural robotics can use it to train their robots for variable-rate spray applications, plant or weed detection, and detection of plant diseases;

3. Agriculturists and researchers can also use it to study various aspects of tobacco plant growth, weed management, yield enhancement, leaf diseases, and pest prevention.

3. Materials and Methods

4. CNN-Based Detection and Classification Frameworks

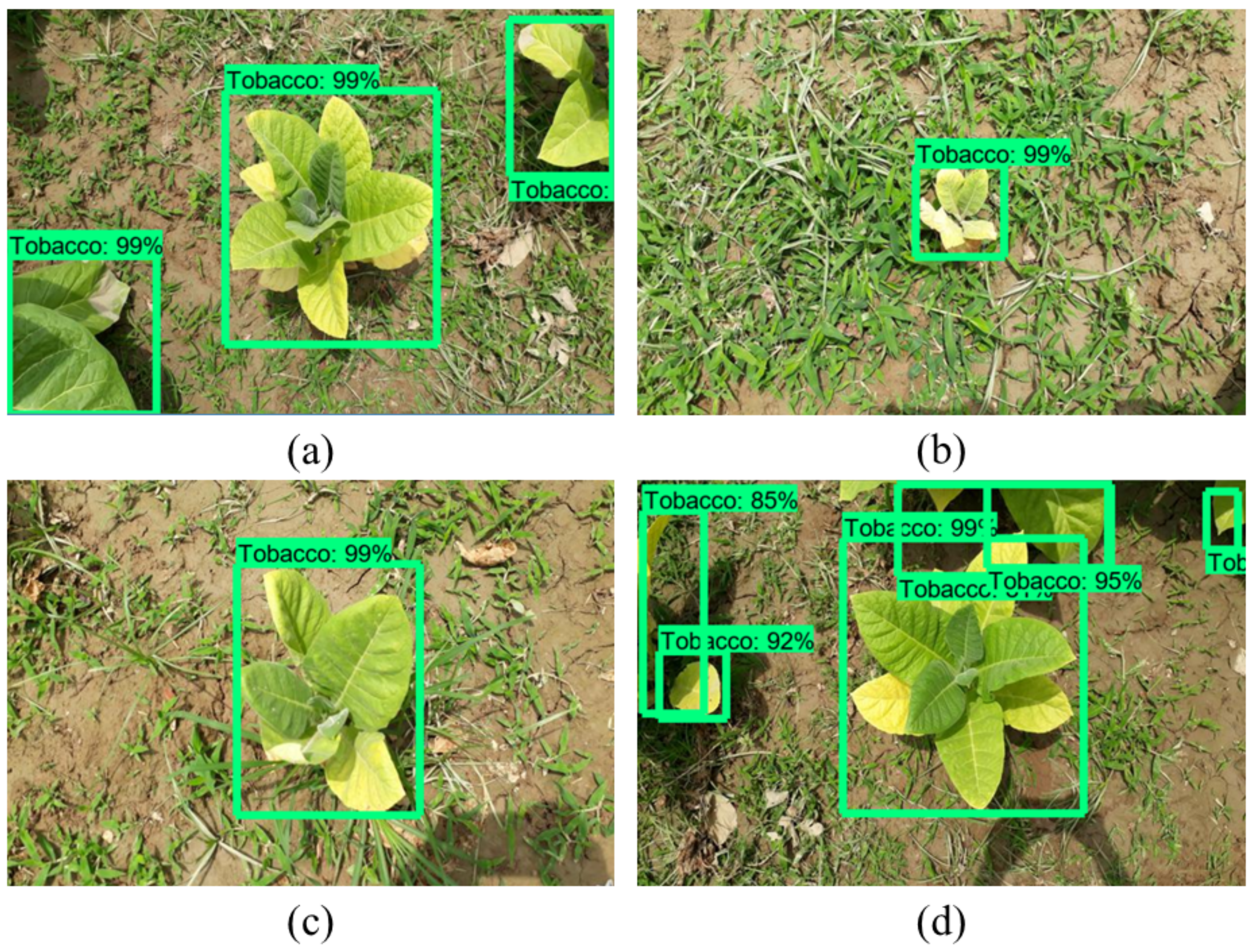

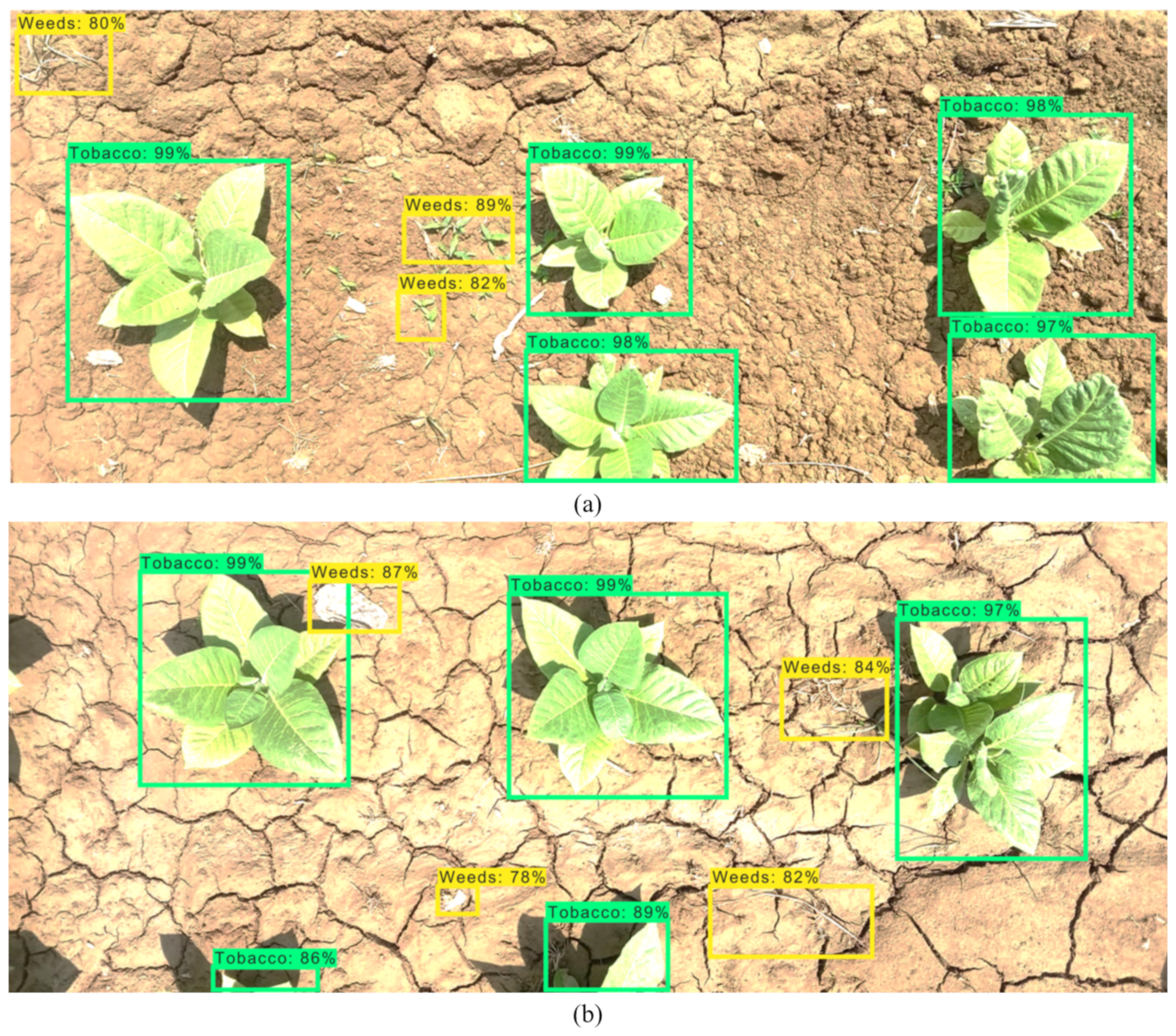

4.1. Faster R-CNN

4.1.1. Convolutional Layers

4.1.2. Region Proposal Networks

4.1.3. ROI Pooling

1. Original feature maps;

2. RPN output proposal boxes of different sizes.
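Both inputs are needed because proposals of different sizes have to be reduced to a fixed-size feature before classification. A minimal single-channel sketch in plain Python is given below. The function name `roi_max_pool`, the exclusive-corner box convention, and the floor/ceil binning are illustrative assumptions, not the authors' implementation; real detectors pool every channel and may bin differently.

```python
import math
from typing import List, Tuple

FeatureMap = List[List[float]]      # one channel, indexed as [row][col]
Box = Tuple[int, int, int, int]     # (x0, y0, x1, y1) in feature-map cells, x1/y1 exclusive


def roi_max_pool(feature_map: FeatureMap, roi: Box, out_h: int, out_w: int) -> List[List[float]]:
    """Max-pool the cells inside `roi` into a fixed out_h x out_w grid."""
    x0, y0, x1, y1 = roi
    roi_h, roi_w = y1 - y0, x1 - x0
    if roi_h < 1 or roi_w < 1:
        raise ValueError("ROI must cover at least one cell")

    pooled = []
    for i in range(out_h):
        # Row span of bin i; floor/ceil guarantees every bin is non-empty.
        ys = y0 + math.floor(i * roi_h / out_h)
        ye = y0 + math.ceil((i + 1) * roi_h / out_h)
        row = []
        for j in range(out_w):
            xs = x0 + math.floor(j * roi_w / out_w)
            xe = x0 + math.ceil((j + 1) * roi_w / out_w)
            row.append(max(feature_map[y][x]
                           for y in range(ys, ye)
                           for x in range(xs, xe)))
        pooled.append(row)
    return pooled


# Toy 4x6 feature map whose value encodes its position (row * 6 + col).
toy_map = [[r * 6 + c for c in range(6)] for r in range(4)]

# Two proposals of different sizes both come out as 2x2 grids.
pooled_large = roi_max_pool(toy_map, (1, 0, 5, 4), 2, 2)
pooled_small = roi_max_pool(toy_map, (0, 0, 3, 3), 2, 2)
```

Whatever the proposal size, the output grid has the same shape, which is what lets the later fully connected layers accept it.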

4.1.4. Classification

1. Classification of proposals by layer and ;

2. Bounding box regression on the proposals for acquiring more accurate rectangular boxes.
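The box regression step can be illustrated with the parameterization used in the original Faster R-CNN paper, where the network predicts offsets $(t_x, t_y, t_w, t_h)$ relative to each proposal. The sketch below decodes such offsets into a refined box; the function name `decode_box` and the corner-format tuples are assumptions for illustration, and the source does not state which variant the authors used.

```python
import math
from typing import Tuple

Box = Tuple[float, float, float, float]     # (x0, y0, x1, y1)
Deltas = Tuple[float, float, float, float]  # (tx, ty, tw, th)


def decode_box(proposal: Box, deltas: Deltas) -> Box:
    """Apply predicted regression offsets to a proposal box."""
    x0, y0, x1, y1 = proposal
    tx, ty, tw, th = deltas

    # Convert the proposal to centre/size form.
    w, h = x1 - x0, y1 - y0
    cx, cy = x0 + 0.5 * w, y0 + 0.5 * h

    # Centre shifts are scaled by the proposal size; sizes change log-linearly.
    new_cx = cx + tx * w
    new_cy = cy + ty * h
    new_w = w * math.exp(tw)
    new_h = h * math.exp(th)

    return (new_cx - 0.5 * new_w, new_cy - 0.5 * new_h,
            new_cx + 0.5 * new_w, new_cy + 0.5 * new_h)


# Zero offsets leave the proposal unchanged.
unchanged = decode_box((10.0, 20.0, 50.0, 60.0), (0.0, 0.0, 0.0, 0.0))

# Shift right by 10% of the width and double the width.
refined = decode_box((10.0, 20.0, 50.0, 60.0), (0.1, 0.0, math.log(2.0), 0.0))
```

Scaling the shifts by the proposal size keeps the regression targets comparable across small and large plants.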

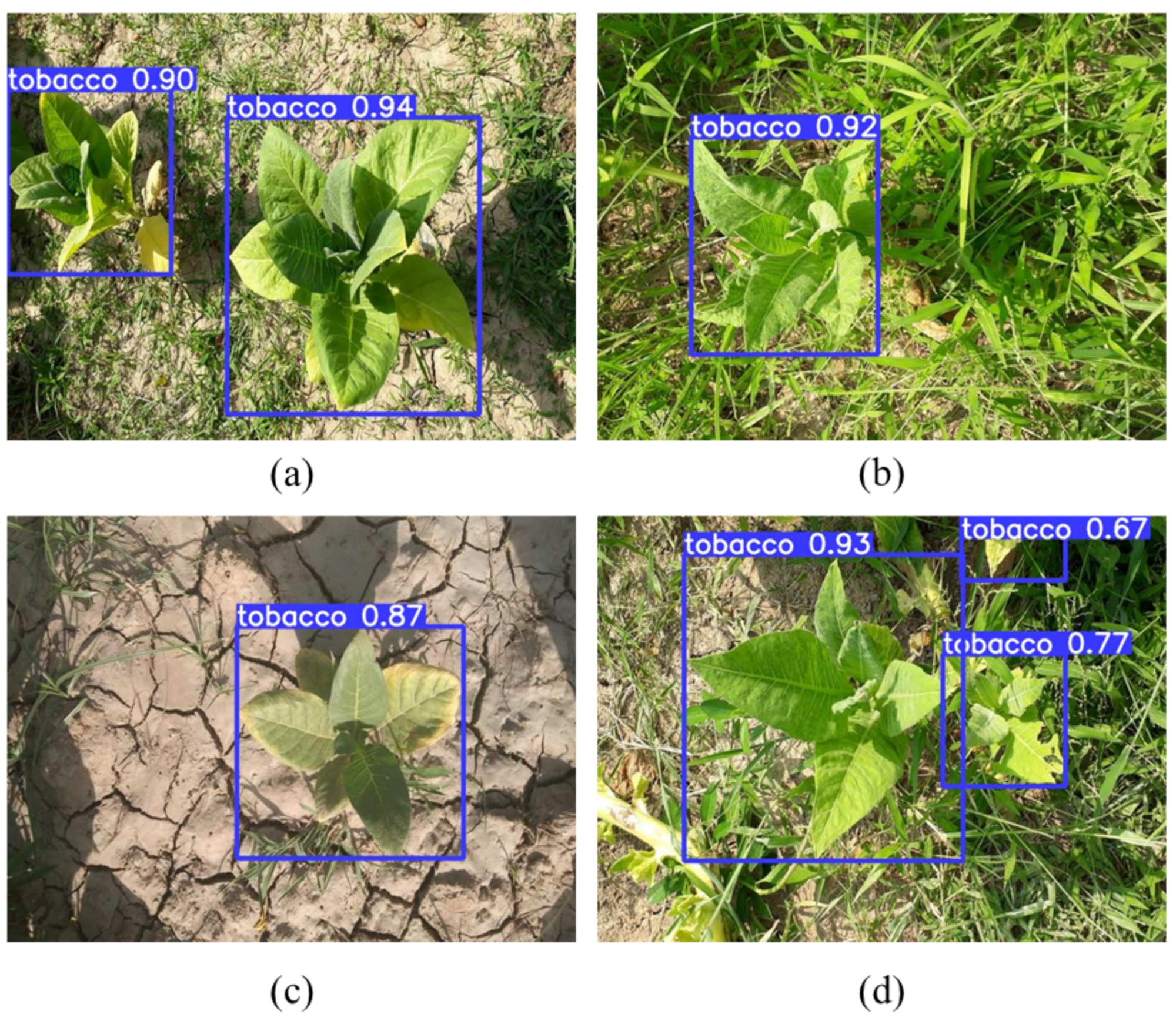

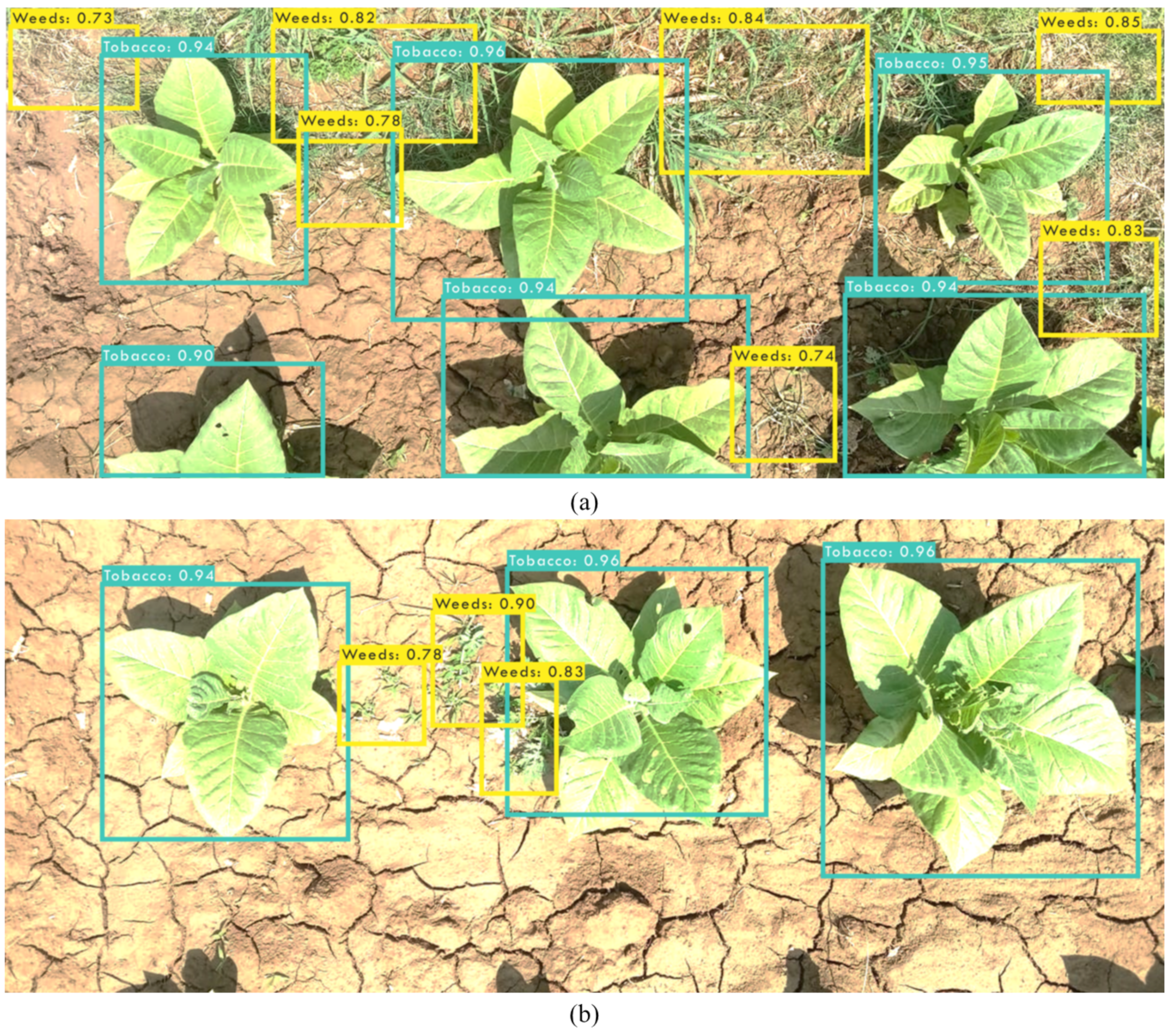

4.2. You Only Look Once (YOLO)

Network Architecture

5. Experimental Evaluation

Hardware Setup

6. Results and Discussions

Real-Time Inference

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| S. No. | Learning Rate | Epoch | Loss for Faster R-CNN | Loss for YOLOv5 |
|---|---|---|---|---|
| 1 | 0.0002 | 2 k | 0.046 | 0.124 |
| 2 | 0.0002 | 4 k | 0.029 | 0.081 |
| 3 | 0.0002 | 6 k | 0.028 | 0.066 |
| 4 | 0.0002 | 8 k | 0.025 | 0.058 |
| 5 | 0.0002 | 10 k | 0.017 | 0.049 |

| True Class | Predicted: Tobacco | Predicted: Weeds |
|---|---|---|
| Tobacco | 454 | 6 |
| Weeds | 7 | 168 |
| Precision (per predicted class) | 98.48% | 96.55% |

| True Class | Predicted: Tobacco | Predicted: Weeds |
|---|---|---|
| Tobacco | 437 | 13 |
| Weeds | 24 | 161 |
| Precision (per predicted class) | 94.79% | 92.52% |

| S. No. | Evaluation Measure | Faster R-CNN | YOLOv5 |
|---|---|---|---|
| 1 | Precision | 0.9829 | 0.9481 |
| 2 | Recall | 0.9863 | 0.9732 |
| 3 | F1-Score | 0.9859 | 0.9576 |
| 4 | Accuracy | 0.9834 | 0.9445 |
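The four measures in this table follow the standard definitions for a binary confusion matrix. The sketch below applies them to the counts of the first confusion matrix above (454, 6, 7, 168), treating tobacco as the positive class. That choice is an assumption: the source does not state which class is positive or how the reported values were averaged, so the computed numbers need not match the table exactly. The names `BinaryConfusion` and `binary_metrics` are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class BinaryConfusion:
    tp: int  # positive samples predicted positive
    fn: int  # positive samples predicted negative
    fp: int  # negative samples predicted positive
    tn: int  # negative samples predicted negative


def binary_metrics(c: BinaryConfusion) -> Dict[str, float]:
    """Standard precision, recall, F1-score and accuracy."""
    precision = c.tp / (c.tp + c.fp)
    recall = c.tp / (c.tp + c.fn)
    f1 = 2 * precision * recall / (precision + recall)
    accuracy = (c.tp + c.tn) / (c.tp + c.fn + c.fp + c.tn)
    return {"precision": precision, "recall": recall,
            "f1": f1, "accuracy": accuracy}


# Counts from the first confusion matrix, tobacco assumed positive:
# true tobacco -> (454 tobacco, 6 weeds); true weeds -> (7 tobacco, 168 weeds).
metrics = binary_metrics(BinaryConfusion(tp=454, fn=6, fp=7, tn=168))

for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Swapping the roles of the two classes gives the weed-centric figures, and averaging both sets is one way a paper may arrive at a single reported value per measure.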

| Model | Faster R-CNN | YOLOv5 |
|---|---|---|
| Inference time (ms) | | |
| Frames per second (fps) | 10.15 | 16.12 |
| mAP | 0.94 | 0.91 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Alam, M.S.; Alam, M.; Tufail, M.; Khan, M.U.; Güneş, A.; Salah, B.; Nasir, F.E.; Saleem, W.; Khan, M.T. TobSet: A New Tobacco Crop and Weeds Image Dataset and Its Utilization for Vision-Based Spraying by Agricultural Robots. Appl. Sci. 2022, 12, 1308. https://doi.org/10.3390/app12031308
