An Analysis of the Impact of Gating Techniques on the Optimization of the Energy Dissipated in Real-Time Systems

Abstract

1. Introduction

2. Related Work

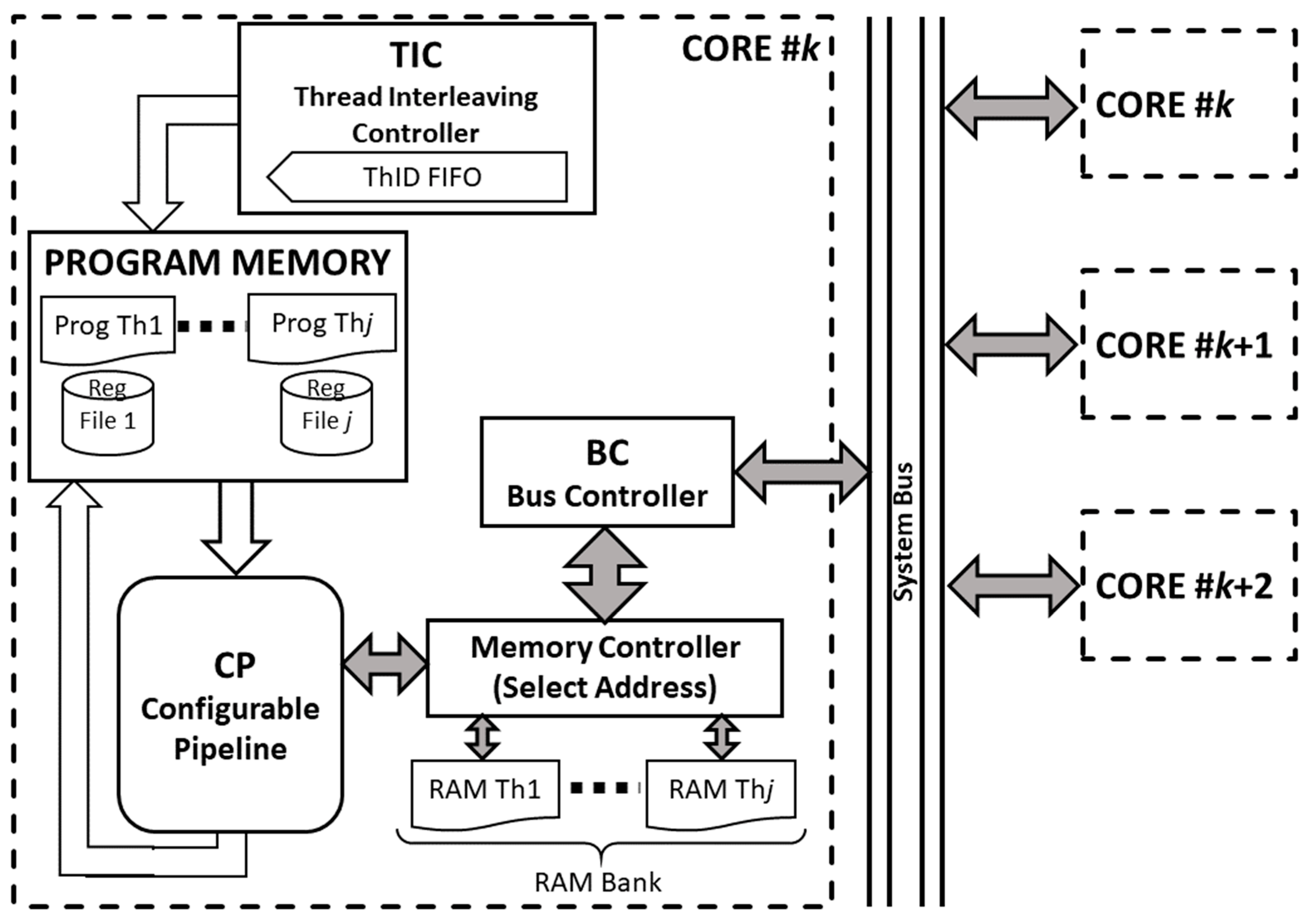

3. Different Configurations of Real-Time Systems

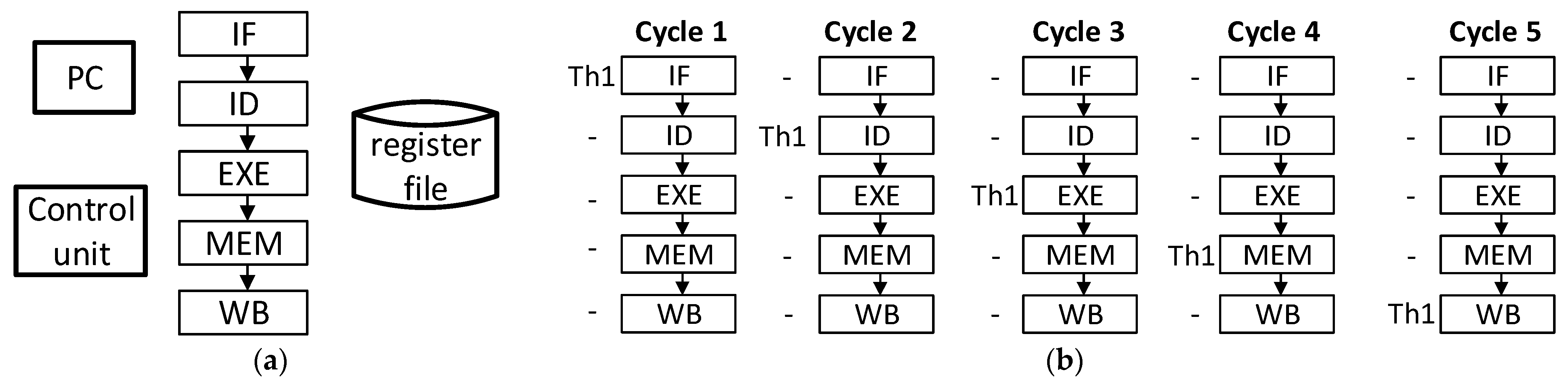

3.1. Classical Simple Core Architecture (STP)

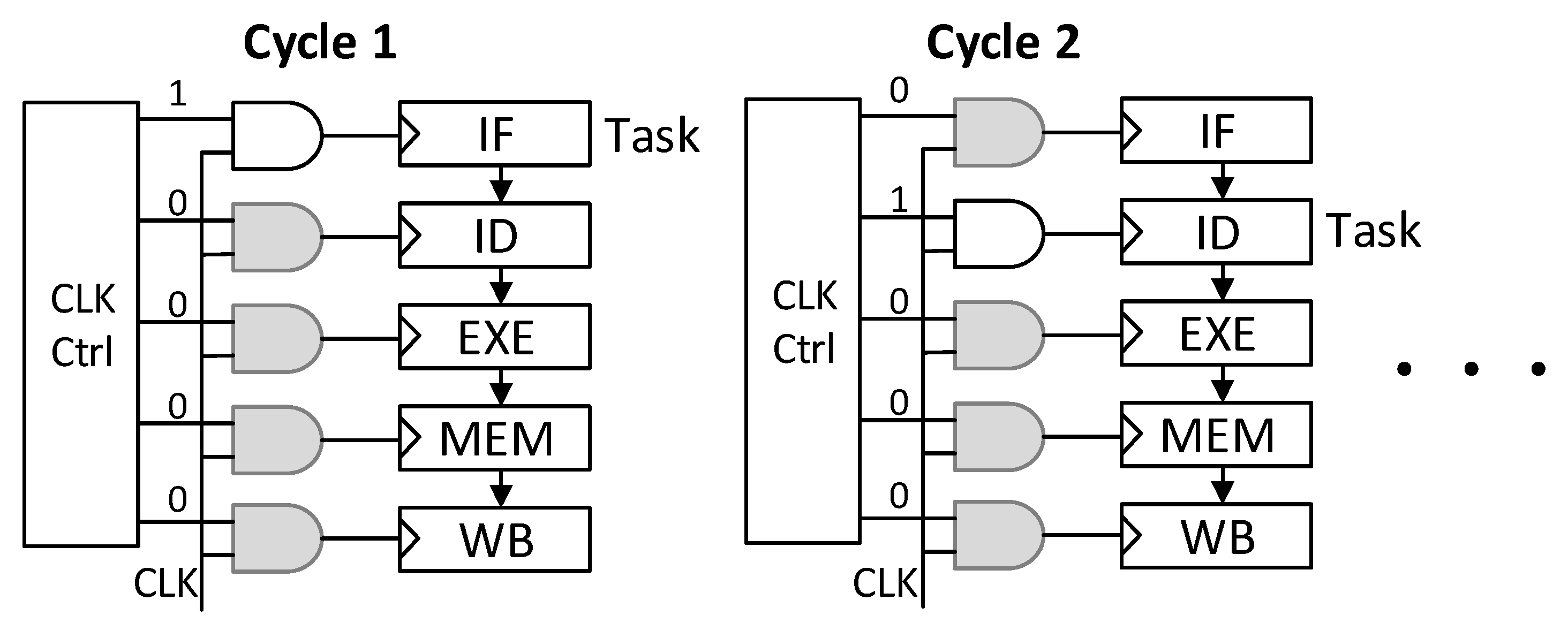

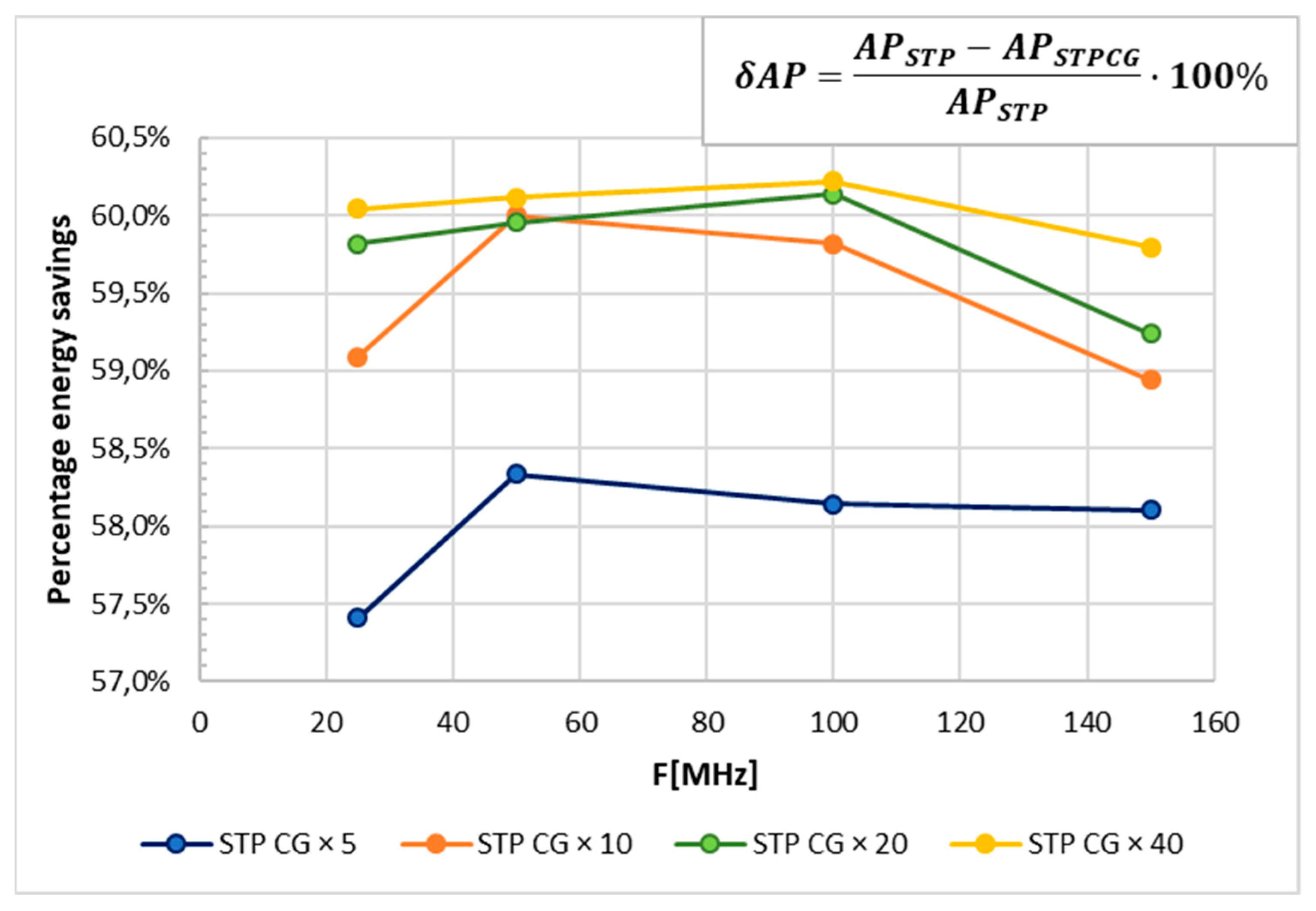

3.2. Simple Core with Clock-Gating Mechanism (STP CG)

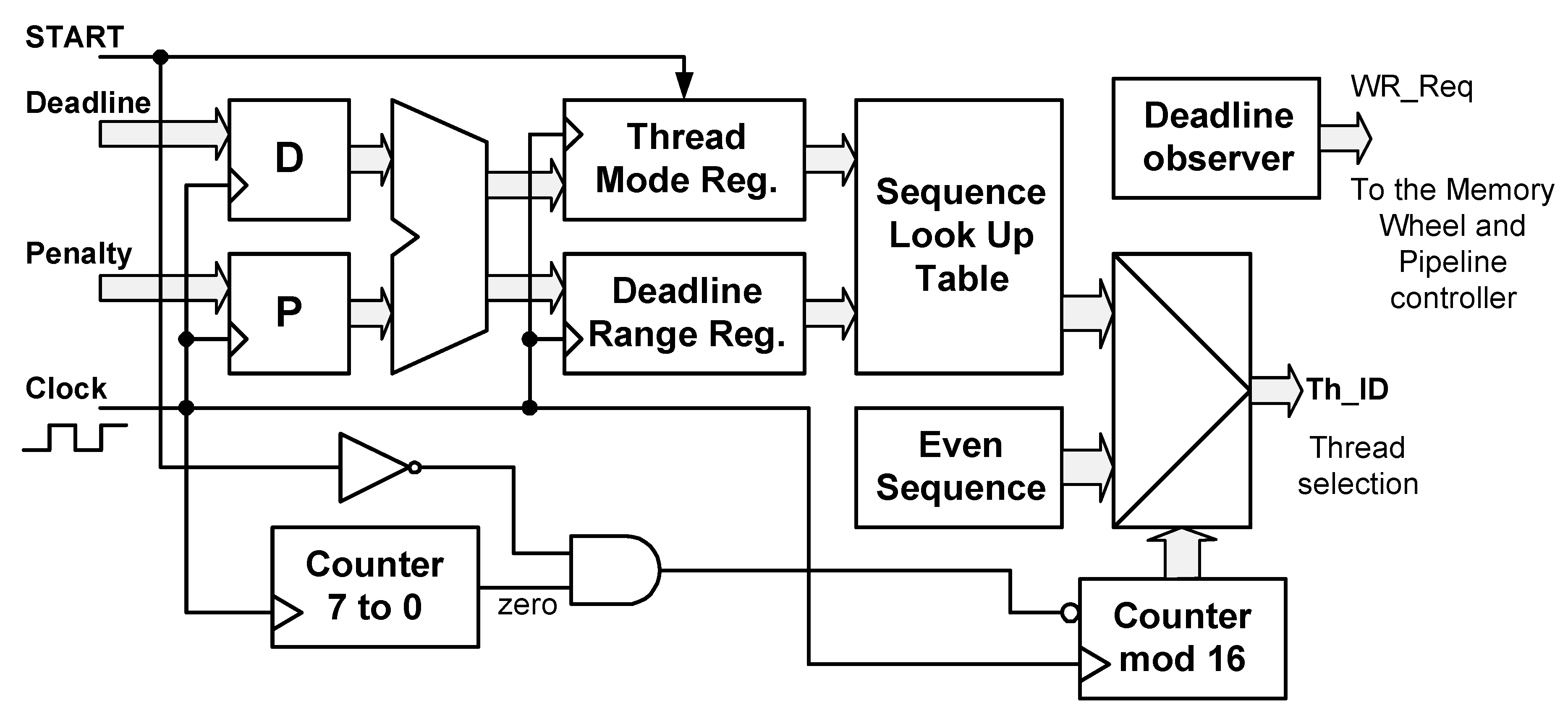

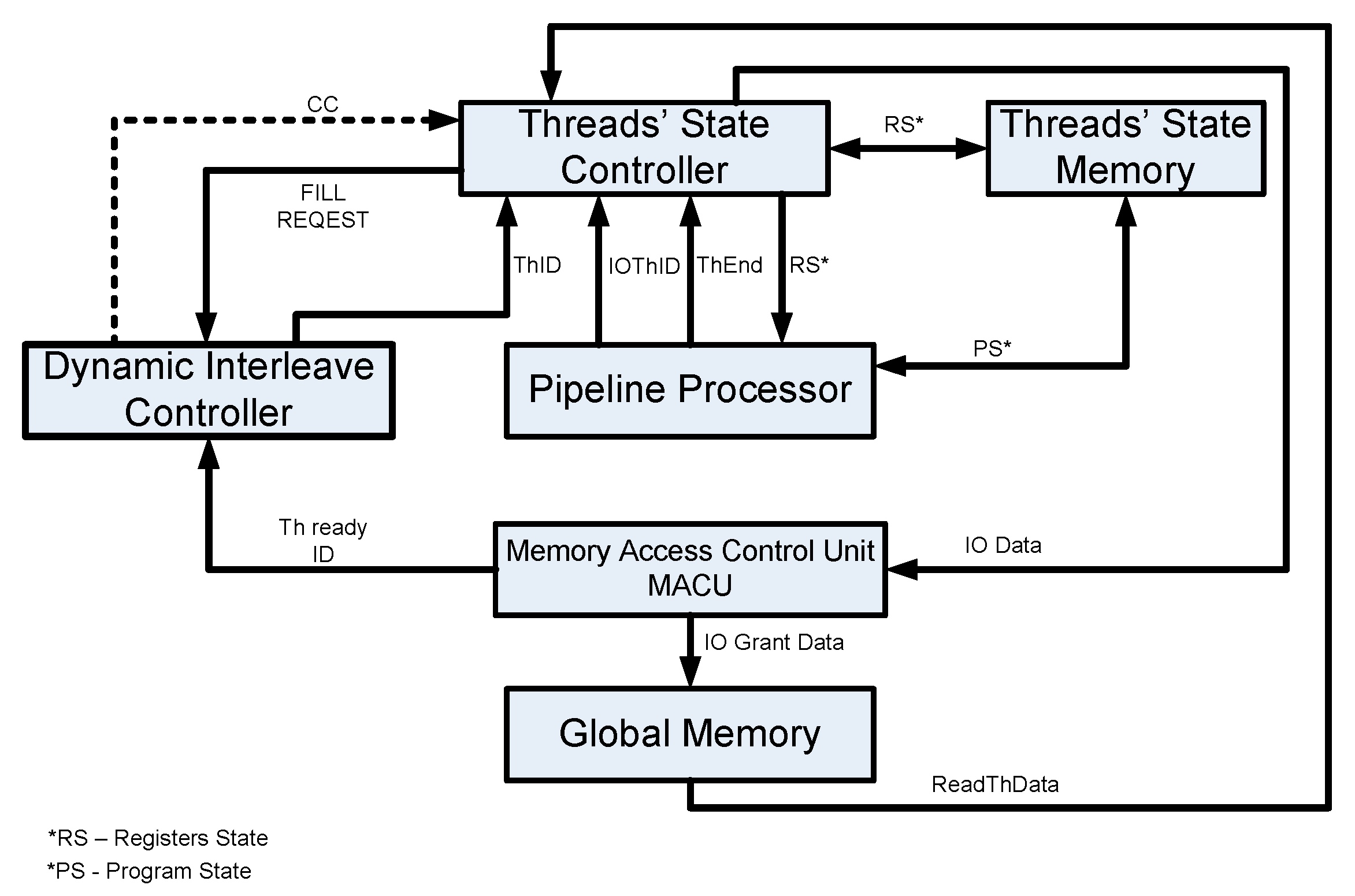

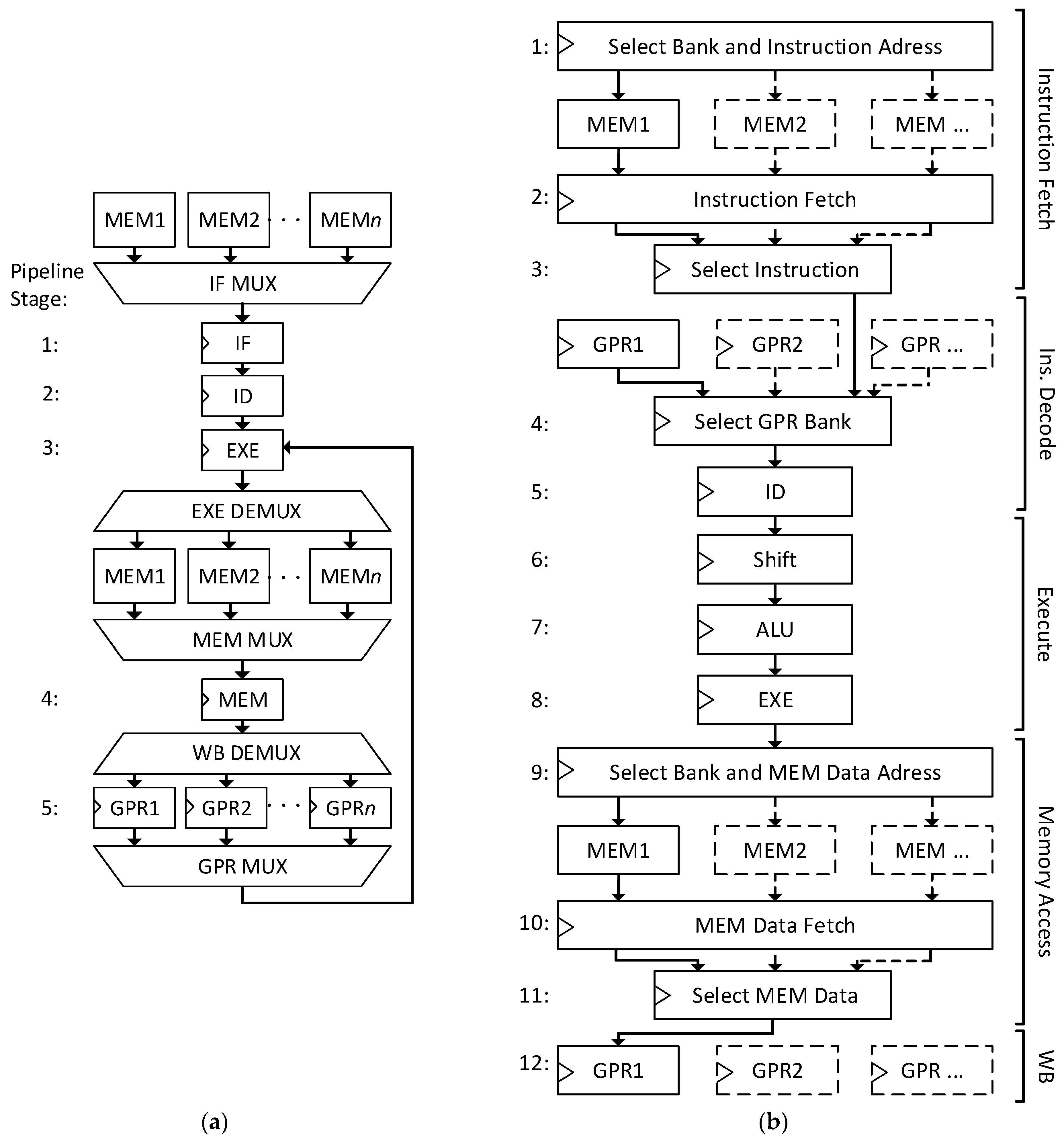

3.3. Multithread Core with Pipeline Processing (MTP)

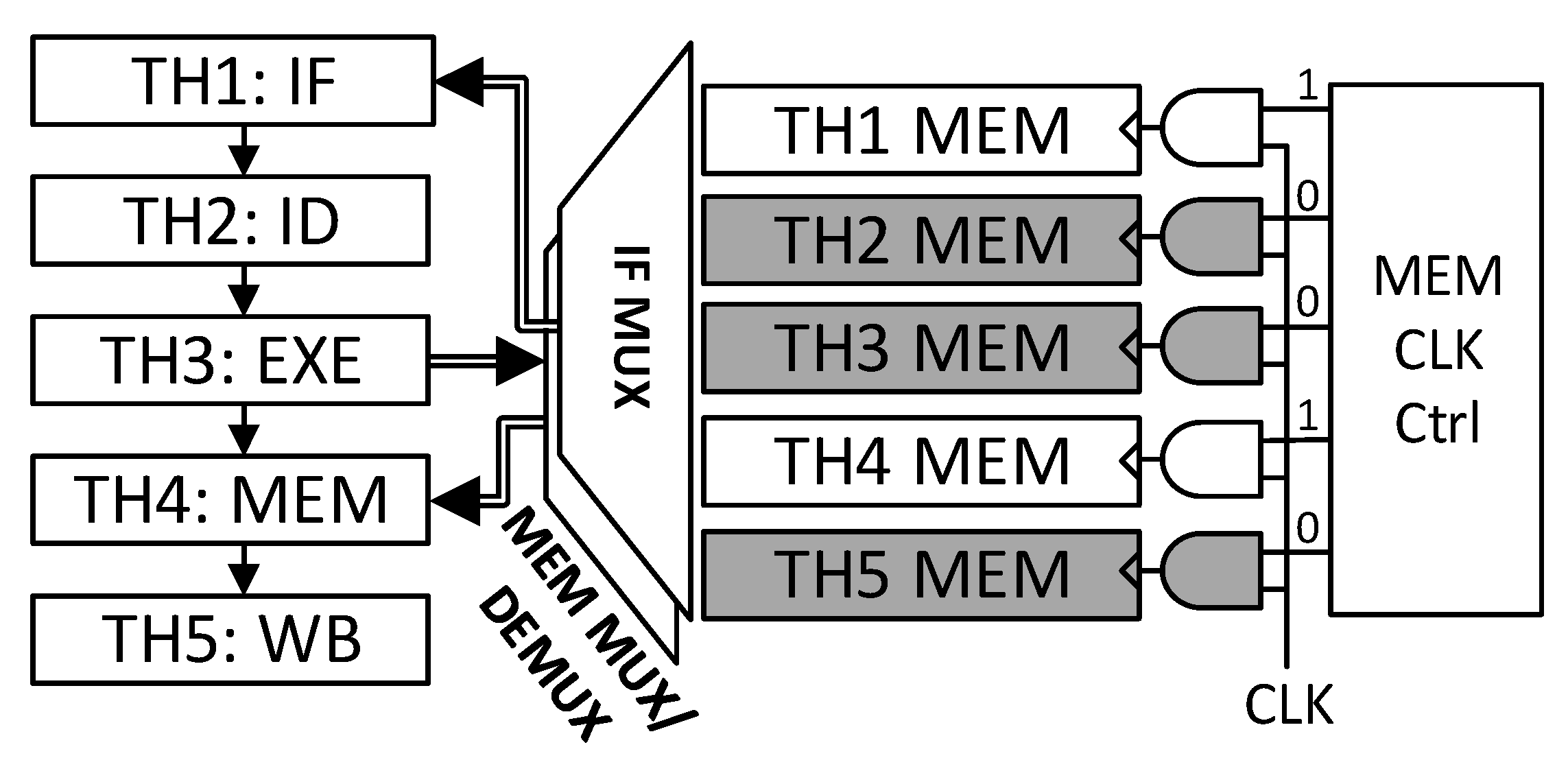

3.4. Multithread Pipeline Processors with Memory-Gating Mechanisms (MTP RG)

3.5. The Main Motivation of the Research and Proposed Methodology

4. Metrics Used

5. Experimental Results

5.1. Simple Architecture Testing

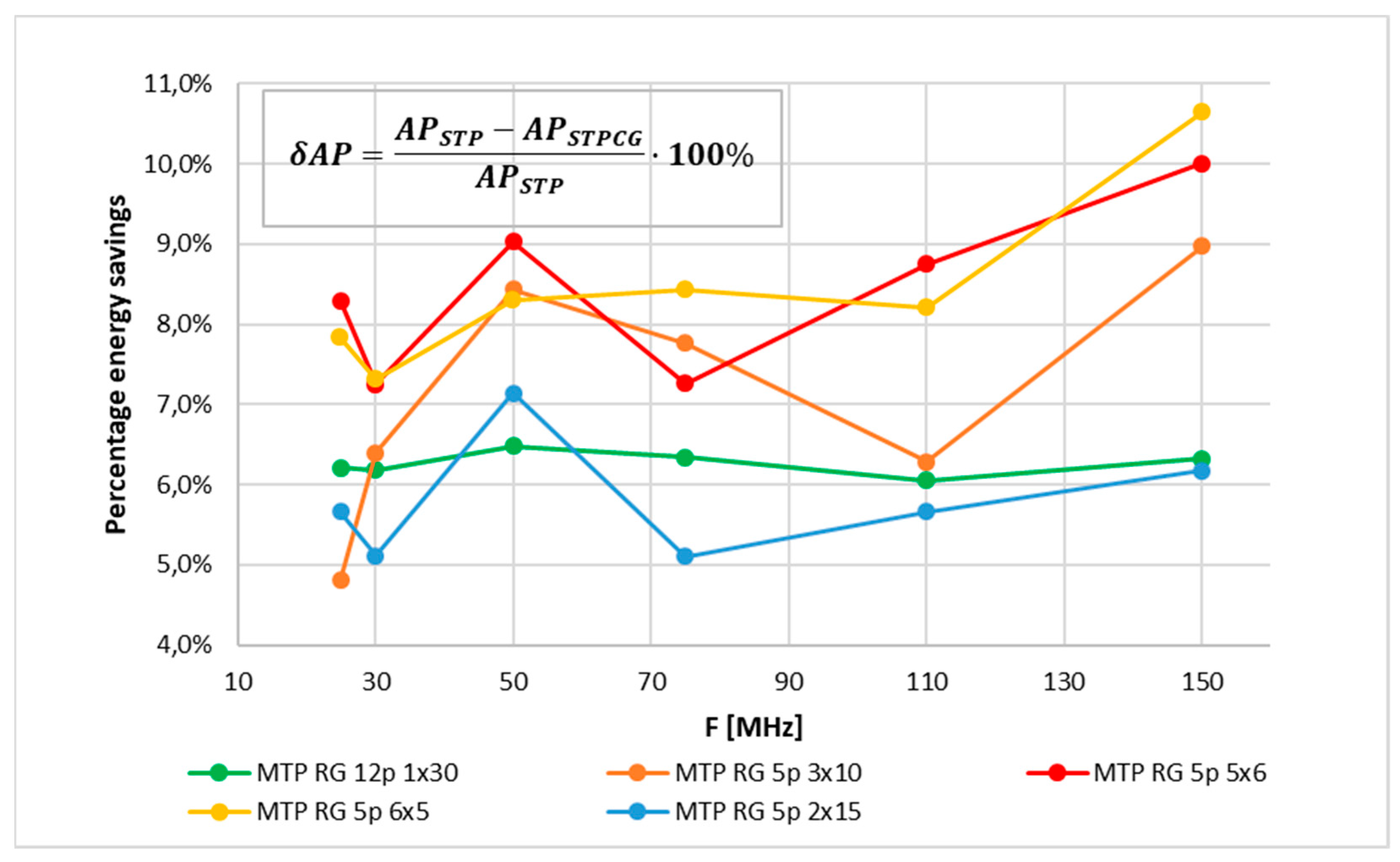

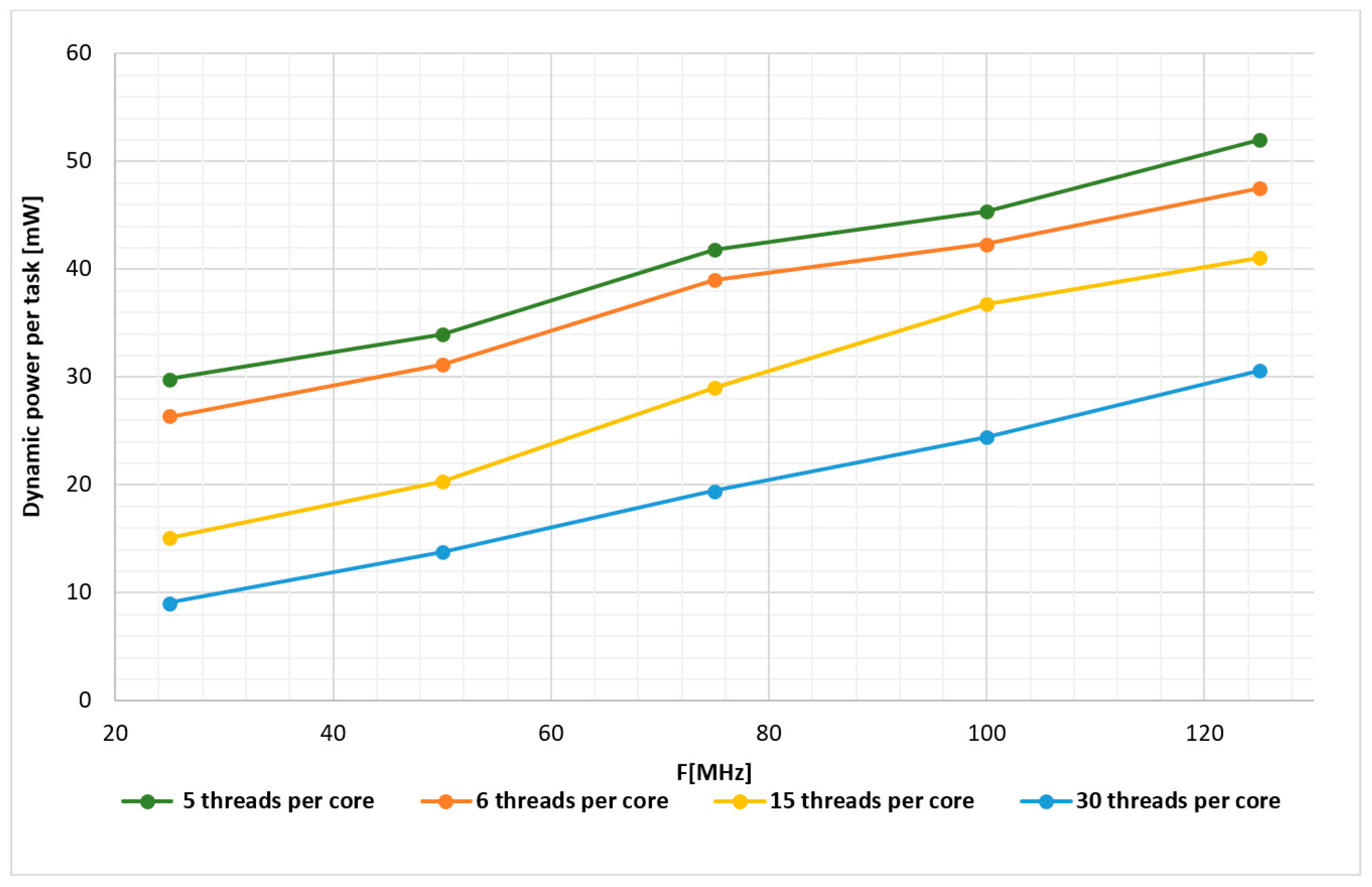

5.2. Experiments with Multitasking Cores (MTP)

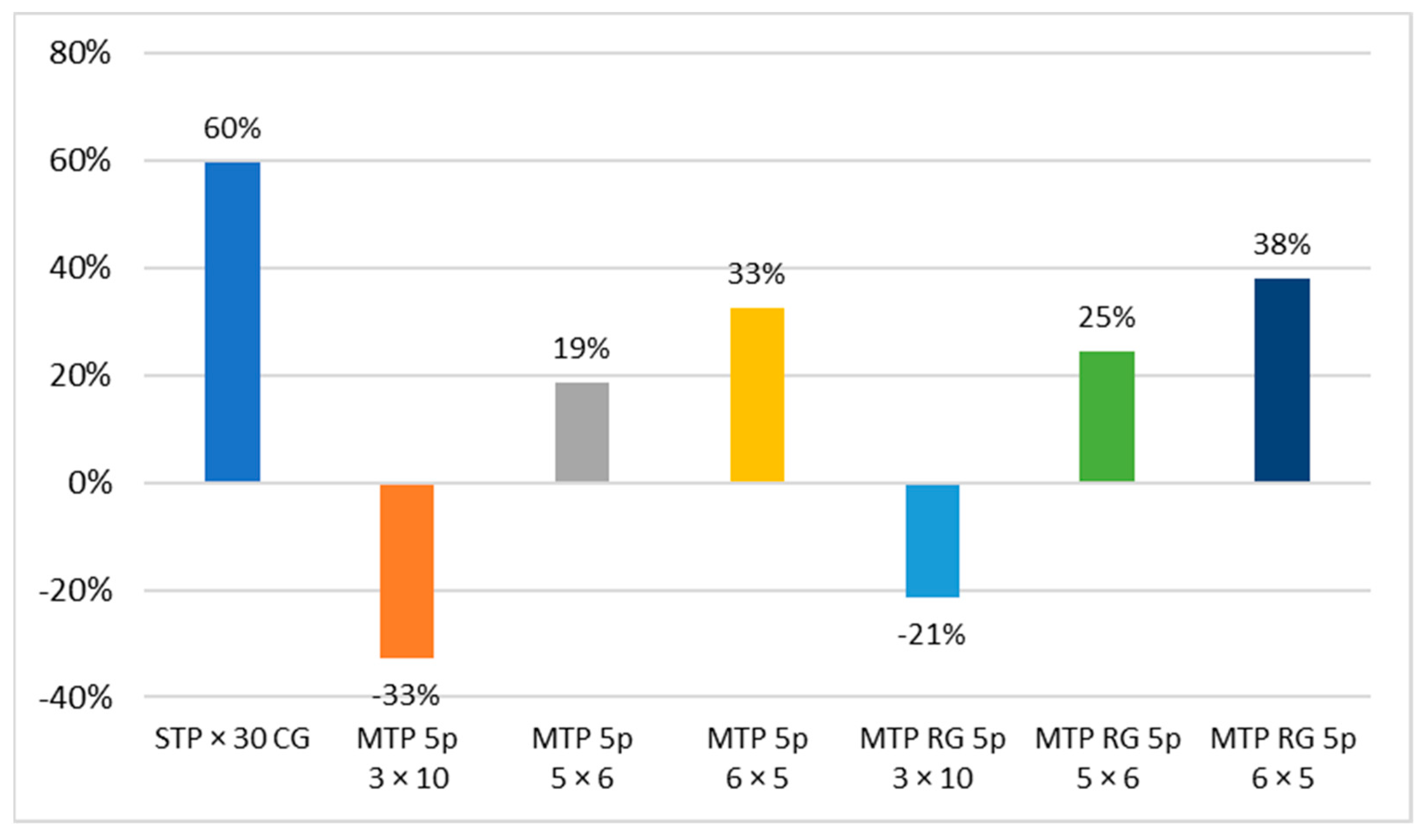

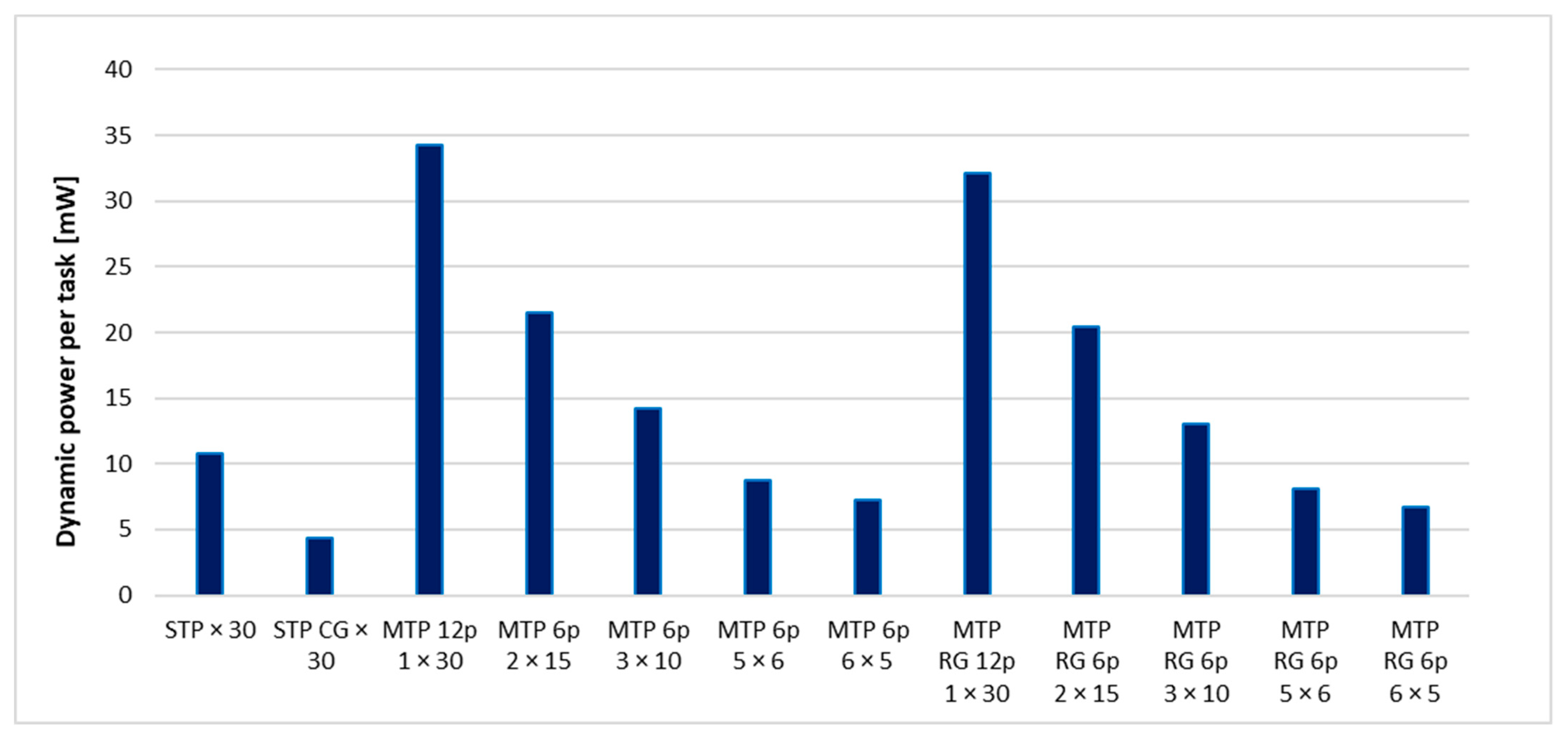

5.3. Global Comparisons of All Tested Structures

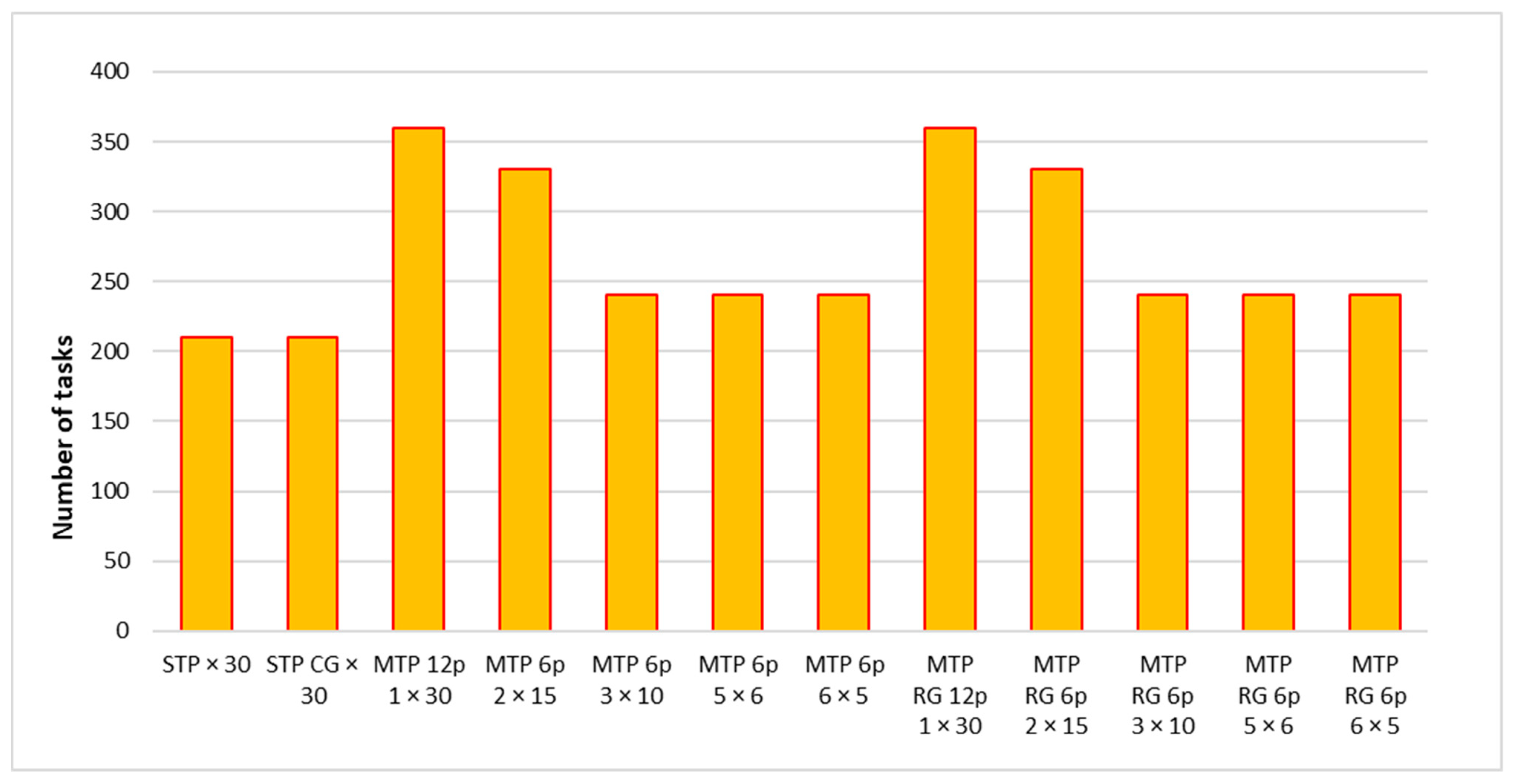

5.4. Quantitative Analysis—The Maximum Processed Tasks

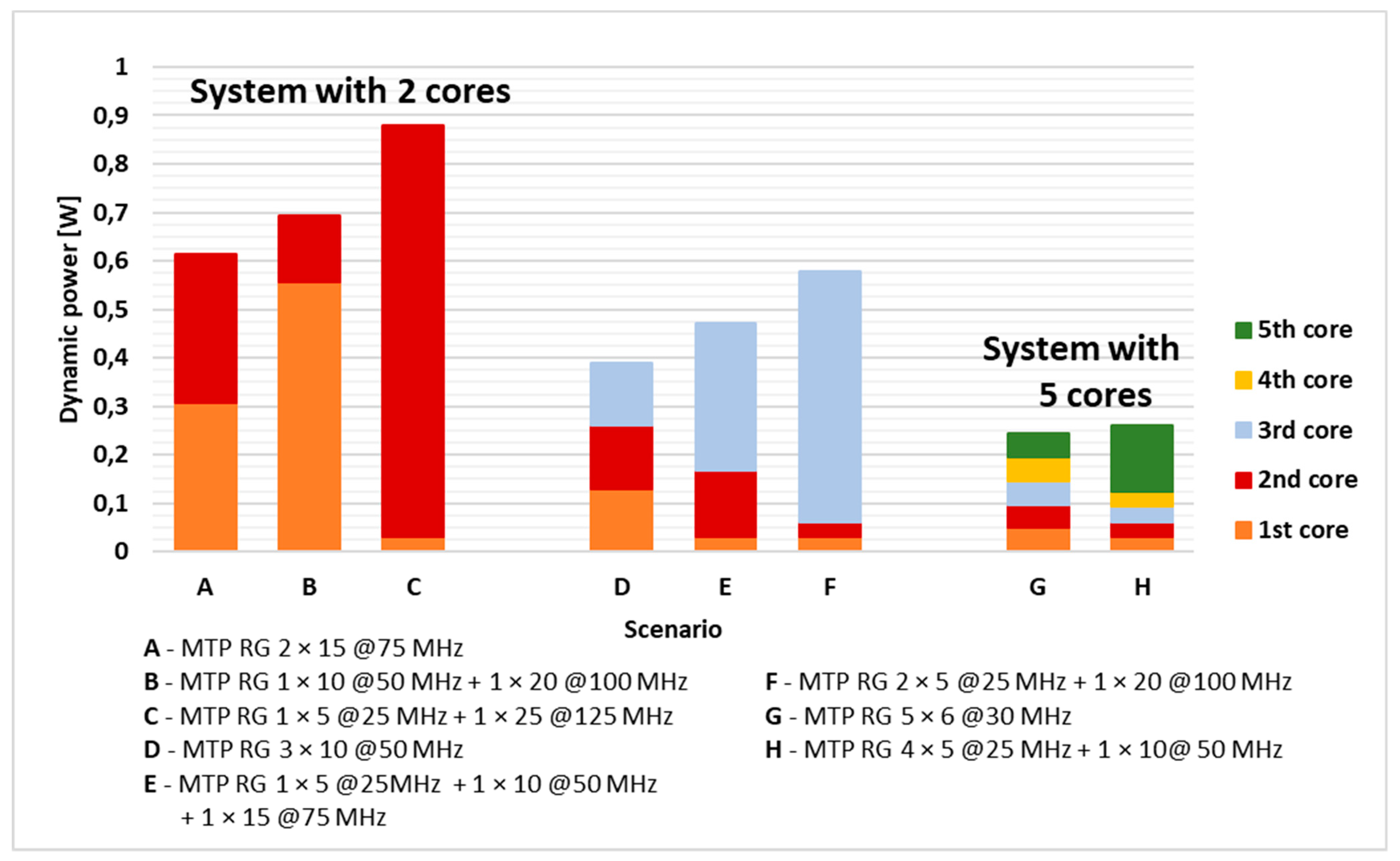

5.5. Analyses of Mixed System Configurations

5.6. Supplementary Discussion of Results

6. Conclusions and Further Work

- the development of an original, energy-efficient multicore architecture and its flexible models;

- the extension of the developed multitasking structure with mechanisms and modules that control the gating procedures;

- the proposal of a set of metrics for analyzing the basic properties of real-time systems; and

- a series of experiments, an analysis of the obtained results, and the formulation of design guidelines for selecting the optimal system configuration (scenario).

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Scenario | Frequency (Fsys [MHz]) | Total Power (Pall [mW]) | Cores’ Power (PCPU [mW]) | FPGA Utilization: Slice LUT | FPGA Utilization: Slice Reg | FPGA Utilization: F7 Muxes | FPGA Utilization: F8 Muxes |
|---|---|---|---|---|---|---|---|
| STP × 5 | 25 | 175 | 54 | 7053 | 5206 | 1170 | 505 |
| STP × 5 | 50 | 220 | 108 | 7053 | 5206 | 1170 | 505 |
| STP × 5 | 100 | 328 | 215 | 7053 | 5206 | 1170 | 505 |
| STP × 5 | 150 | 453 | 327 | 7053 | 5239 | 1170 | 505 |
| STP × 10 | 25 | 231 | 110 | 14,091 | 10,376 | 2340 | 1010 |
| STP × 10 | 50 | 332 | 220 | 14,091 | 10,376 | 2340 | 1010 |
| STP × 10 | 100 | 551 | 438 | 14,091 | 10,376 | 2340 | 1010 |
| STP × 10 | 150 | 786 | 660 | 14,091 | 10,439 | 2340 | 1010 |
| STP × 20 | 25 | 340 | 219 | 28,167 | 20,716 | 4680 | 2020 |
| STP × 20 | 50 | 549 | 437 | 28,166 | 20,716 | 4680 | 2020 |
| STP × 20 | 100 | 986 | 873 | 28,167 | 20,716 | 4680 | 2020 |
| STP × 20 | 150 | 1441 | 1315 | 28,167 | 20,790 | 4680 | 2020 |
| STP CG × 5 | 25 | 125 | 23 | 7038 | 5303 | 1170 | 505 |
| STP CG × 5 | 50 | 149 | 45 | 7038 | 5303 | 1170 | 505 |
| STP CG × 5 | 100 | 198 | 90 | 7038 | 5303 | 1170 | 505 |
| STP CG × 5 | 150 | 245 | 137 | 7038 | 5334 | 1170 | 505 |
| STP CG × 10 | 25 | 147 | 45 | 14,061 | 10,573 | 2340 | 1010 |
| STP CG × 10 | 50 | 192 | 88 | 14,061 | 10,573 | 2340 | 1010 |
| STP CG × 10 | 100 | 284 | 176 | 14,061 | 10,573 | 2340 | 1010 |
| STP CG × 10 | 150 | 379 | 271 | 14,061 | 10,634 | 2340 | 1010 |
| STP CG × 20 | 25 | 190 | 88 | 28,107 | 21,113 | 4680 | 2020 |
| STP CG × 20 | 50 | 279 | 175 | 28,107 | 21,113 | 4680 | 2020 |
| STP CG × 20 | 100 | 456 | 348 | 28,107 | 21,113 | 4680 | 2020 |
| STP CG × 20 | 150 | 644 | 536 | 28,107 | 21,190 | 4680 | 2020 |
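The effect of clock gating on the cores' power can be read off the table above by comparing STP and STP CG rows that share the same core count and frequency. The Python sketch below computes the relative reduction for a few such pairs. Only the PCPU values come from the table; the choice of rows, the helper name `gating_saving`, and the dictionary layout are illustrative assumptions.

```python
# Cores' power PCPU in mW, keyed by (number of cores, Fsys in MHz).
# Values are copied from the STP and STP CG rows of the table above;
# the selection of rows is arbitrary and only meant as an illustration.
stp_pcpu = {(5, 25): 54, (5, 50): 108, (5, 150): 327, (20, 100): 873, (20, 150): 1315}
stp_cg_pcpu = {(5, 25): 23, (5, 50): 45, (5, 150): 137, (20, 100): 348, (20, 150): 536}


def gating_saving(baseline_mw: float, gated_mw: float) -> float:
    """Relative power reduction in percent (hypothetical helper)."""
    return 100.0 * (baseline_mw - gated_mw) / baseline_mw


# Relative reduction of PCPU for each (cores, frequency) pair.
savings = {
    key: round(gating_saving(stp_pcpu[key], stp_cg_pcpu[key]), 1)
    for key in stp_pcpu
}

for (cores, f_mhz), pct in sorted(savings.items()):
    print(f"{cores:2d} cores @ {f_mhz:3d} MHz: PCPU reduced by {pct}%")
```

For the pairs chosen here, the reduction in PCPU lies between about 57% and 60%.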

| Scenario | Frequency (Fsys [MHz]) | Minindistance | Cores’ Power (PCPU [mW]) | FPGA Utilization: Slice LUT | FPGA Utilization: Slice Reg | FPGA Utilization: F7 Muxes | FPGA Utilization: F8 Muxes |
|---|---|---|---|---|---|---|---|
| MTP 12p 1 × 30 | 150 | 30 | 1028 | 25,208 | 18,494 | 6850 | 3030 |
| MTP 5p 2 × 15 | 75 | 15 | 646 | 26,039 | 16,888 | 6712 | 3030 |
| MTP 5p 3 × 10 | 50 | 10 | 427 | 34,596 | 17,450 | 6741 | 3030 |
| MTP 5p 5 × 6 | 30 | 6 | 262 | 35,795 | 18,489 | 6215 | 3030 |
| MTP 5p 6 × 5 | 25 | 5 | 217 | 36,687 | 19,024 | 6240 | 3030 |
| MTP RG 12p 1 × 30 | 150 | 30 | 963 | 25,008 | 18,521 | 6763 | 3030 |
| MTP RG 5p 2 × 15 | 75 | 15 | 613 | 26,274 | 16,922 | 6654 | 3030 |
| MTP RG 5p 3 × 10 | 50 | 10 | 391 | 34,908 | 17,493 | 6741 | 3030 |
| MTP RG 5p 5 × 6 | 30 | 6 | 243 | 36,202 | 18,541 | 6210 | 3030 |
| MTP RG 5p 6 × 5 | 25 | 5 | 200 | 36,982 | 19,026 | 6240 | 3030 |

| Scenario | Frequency (Fsys [MHz]) | Minindistance | Cores’ Power (PCPU [mW]) | FPGA Utilization: Slice LUT | FPGA Utilization: Slice Reg | FPGA Utilization: F7 Muxes | FPGA Utilization: F8 Muxes |
|---|---|---|---|---|---|---|---|
| STP × 30 | 25 | 5 | 322 | 42,243 | 31,057 | 7020 | 3030 |
| STP × 30 CG | 25 | 5 | 130 | 42,251 | 31,660 | 7020 | 3030 |
| MTP 5p 3 × 10 | 50 | 10 | 427 | 34,596 | 17,450 | 6741 | 3030 |
| MTP 5p 5 × 6 | 30 | 6 | 262 | 35,795 | 18,489 | 6215 | 3030 |
| MTP 5p 6 × 5 | 25 | 5 | 217 | 36,687 | 19,024 | 6240 | 3030 |
| MTP RG 5p 3 × 10 | 50 | 10 | 391 | 34,908 | 17,493 | 6741 | 3030 |
| MTP RG 5p 5 × 6 | 30 | 6 | 243 | 36,202 | 18,541 | 6210 | 3030 |
| MTP RG 5p 6 × 5 | 25 | 5 | 200 | 36,982 | 19,026 | 6240 | 3030 |

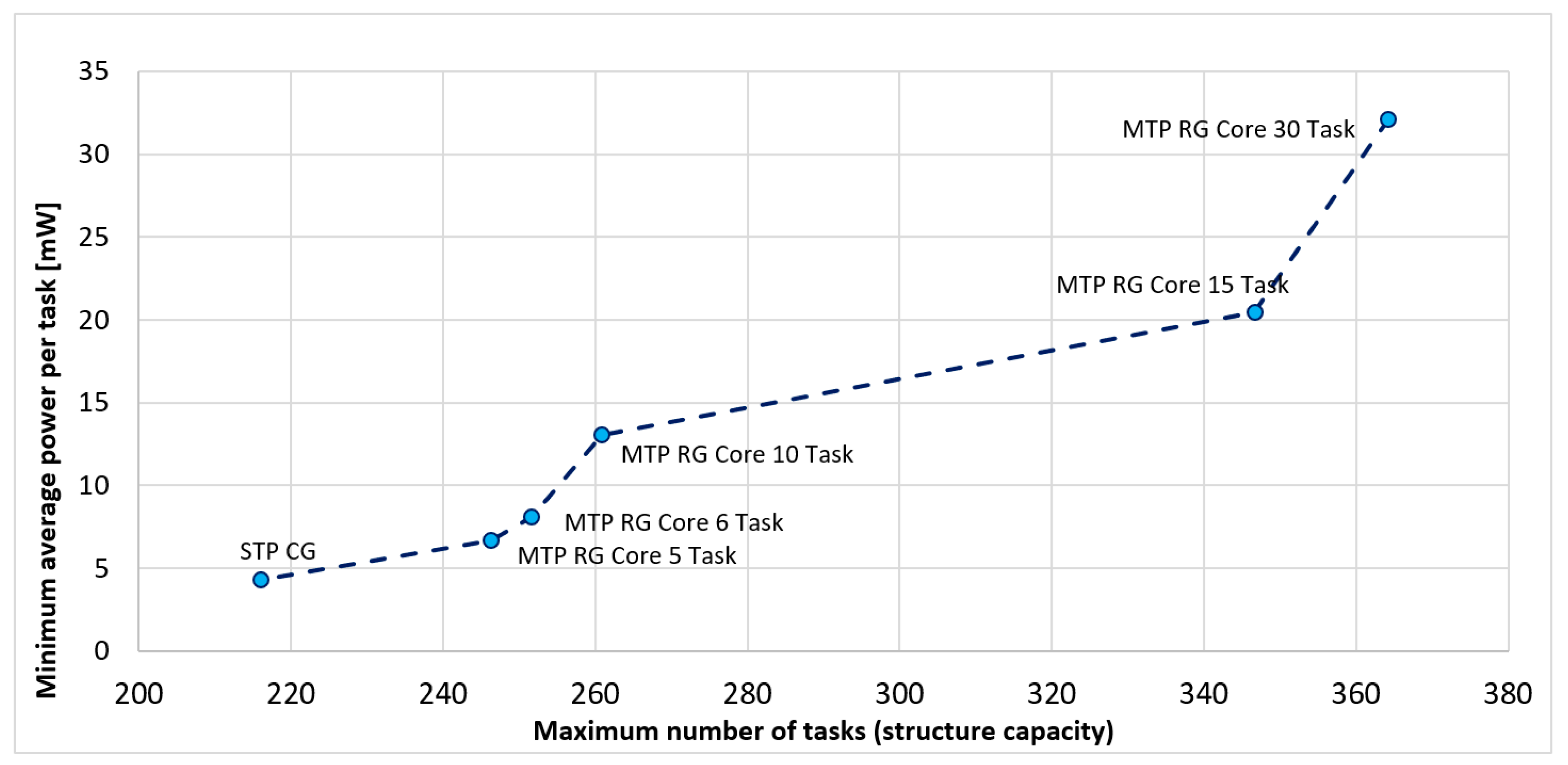

| Scenario | Frequency (Fsys [MHz]) | Maximum Number of Tasks | Maximum Number of Cores | Minimum Total Average Power per Core (AP [mW]) |
|---|---|---|---|---|
| MTP RG core 30 task | 150 | 360 | 12 | 32.10 |
| MTP RG core 15 task | 75 | 345 | 23 | 20.43 |
| MTP RG core 10 task | 50 | 260 | 26 | 13.03 |
| MTP RG core 6 task | 30 | 250 | 42 | 8.10 |
| MTP RG core 5 task | 25 | 245 | 49 | 6.67 |
| STP CG | 25 | 215 | 215 | 4.33 |
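The AP values in the table above appear to coincide with the cores' power PCPU of the corresponding 30-task configuration divided by 30, that is, with an average power per processed task. This reading is inferred from the tabulated numbers only and is not a definition stated here. The Python sketch below checks it; the constant `TASKS`, the dictionary names, and the pairing of rows between tables are assumptions made for illustration.

```python
# Number of tasks handled by every 30-task configuration (assumed from the
# scenario names "1 × 30", "2 × 15", "3 × 10", "5 × 6", "6 × 5", "STP × 30").
TASKS = 30

# Cores' power PCPU in mW, copied from the MTP RG and STP × 30 CG rows
# of the earlier tables; keys reuse the scenario labels of the AP table.
pcpu_mw = {
    "MTP RG core 30 task": 963,
    "MTP RG core 15 task": 613,
    "MTP RG core 10 task": 391,
    "MTP RG core 6 task": 243,
    "MTP RG core 5 task": 200,
    "STP CG": 130,
}

# AP values in mW as listed in the table above.
ap_reported = {
    "MTP RG core 30 task": 32.10,
    "MTP RG core 15 task": 20.43,
    "MTP RG core 10 task": 13.03,
    "MTP RG core 6 task": 8.10,
    "MTP RG core 5 task": 6.67,
    "STP CG": 4.33,
}

# Average power per task under the assumed relation AP = PCPU / TASKS.
ap_computed = {name: round(p / TASKS, 2) for name, p in pcpu_mw.items()}

for name, value in ap_computed.items():
    match = abs(value - ap_reported[name]) < 0.005
    print(f"{name:22s} computed {value:6.2f} mW, reported {ap_reported[name]:6.2f} mW, match={match}")
```

Under this assumption every computed value agrees with the reported AP to two decimal places.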


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Antolak, E.; Pułka, A. An Analysis of the Impact of Gating Techniques on the Optimization of the Energy Dissipated in Real-Time Systems. Appl. Sci. 2022, 12, 1630. https://doi.org/10.3390/app12031630