Incorporating Code Structure and Quality in Deep Code Search

Abstract

:1. Introduction

2. Related Work

2.1. Recurrent Neural Network

2.2. Attention Mechanism

2.3. Joint Embedding Mechanism

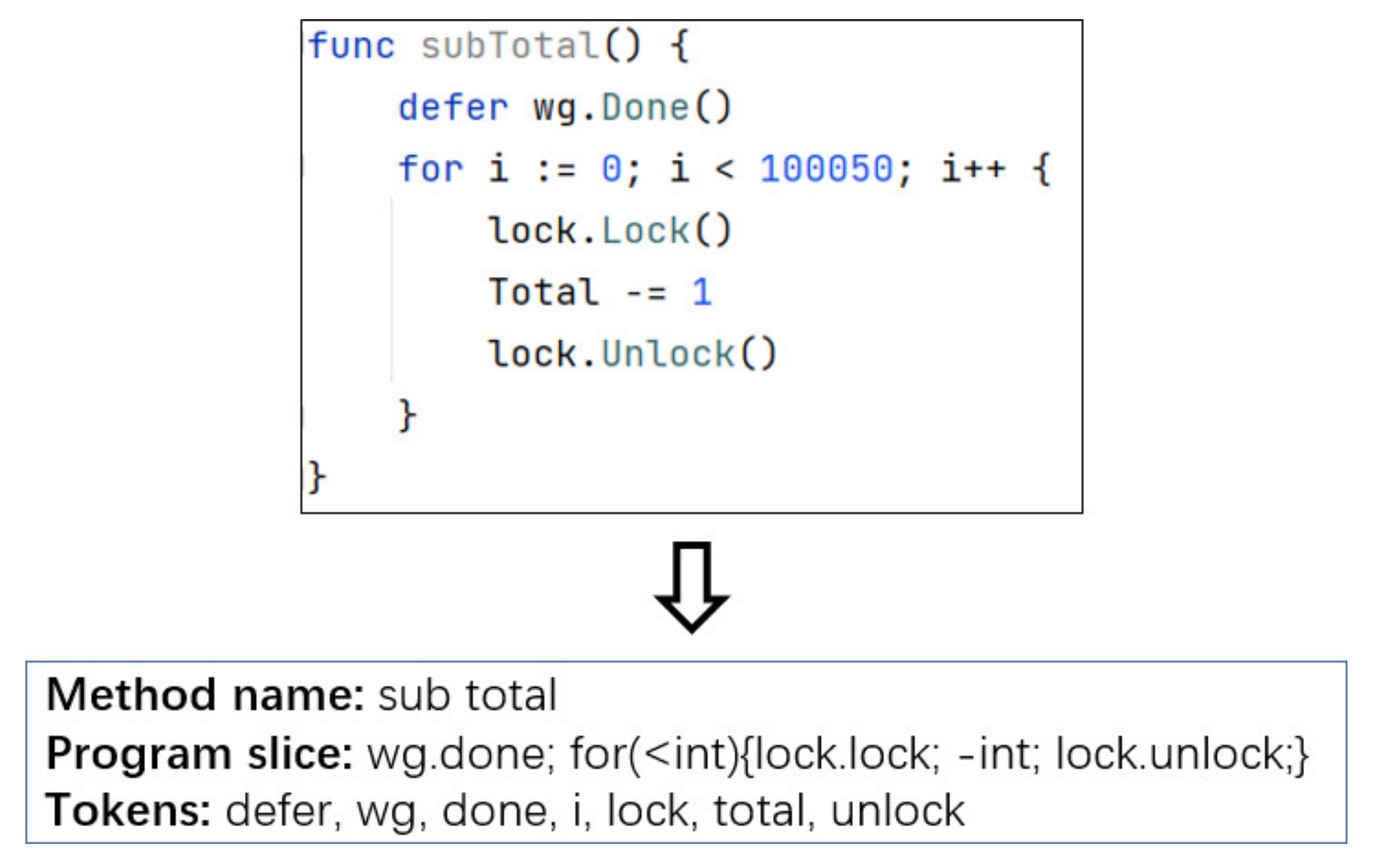

3. Code Representation

- For each variable declaration statement, program slice analyzes its corresponding variable type and adds new keyword before the variable type. Taking Golang as an example, var a string is transformed to new string.

- For each condition statement, program slice retains its structural information and if/else keywords. For example, is transformed to , where , , and are the program slices of statements , , and .

- For each loop statement, program slice retains its judgment conditions and cycle body and adds the for keyword. For example, is transformed to , where and are the program slices of condition and statement .

- For each range statement, program slice retains its judgment conditions and cycle body and adds the range keyword. For example, is transformed to , where and are the program slices of condition and statement .

- For each return statement, program slice analyzes its returned variable type and adds return keyword before the variable type. For example, is transformed to .

- For each switch statement, program slice retains its judgment conditions and body and adds the switch keyword. For example, is transformed to , where and are the program slices of condition and statement .

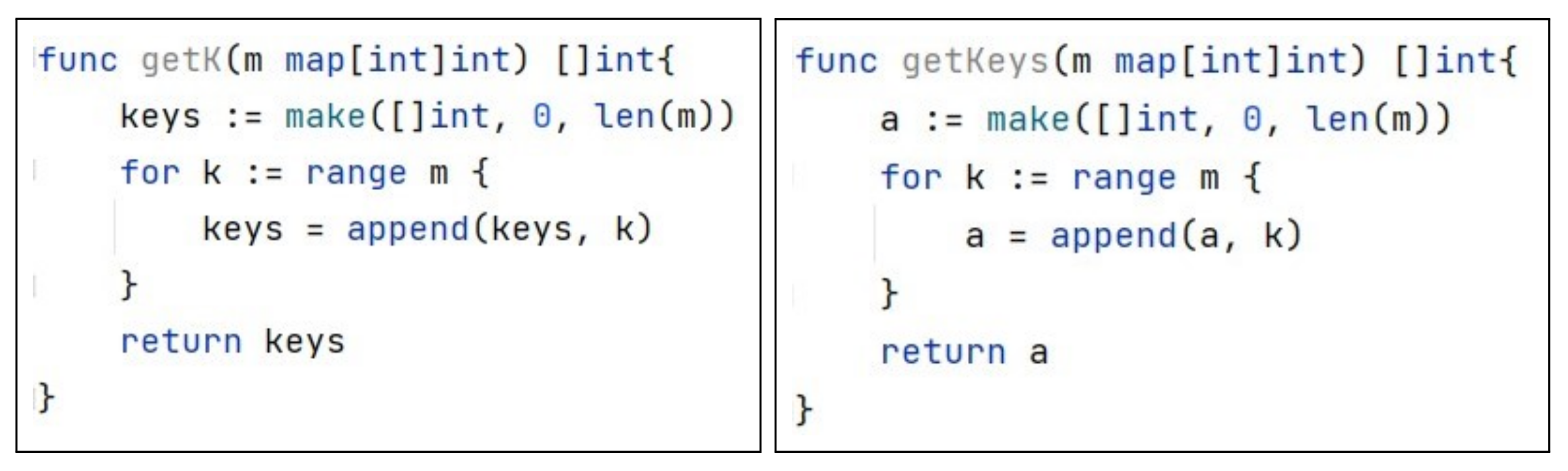

- For each call expression, program slice retains only the object type and the called method name. For example, for or , program slice generates or C, where A and C are the names of class or struct for object a and c.

- For each nested function calls, program slice uses nested definitions to represent each layer of function calls. For example, is transformed to , where are the name of class or struct for object .

- For each operation expression, program slice preserves operation symbols and result types. For example, is converted to .

4. Model

4.1. Architecture

- Description embedding module (DE-Module) embeds natural language descriptions into description vectors.

- Method feature embedding module (MF-Module) embeds code snippets into code vectors.

- Similarity module calculates the degree of similarity between description vectors and code vectors.

4.1.1. Description Embedding Module

4.1.2. Method Feature Embedding Module

4.1.3. Similarity Module

4.2. Model Training

5. Evaluation

5.1. Experimental Setup

- (1)

- Offline training: SQ-DeepCS is trained by the method described in Section 4 through code snippets with descriptions in the codebase.

- (2)

- Offline search codebase embedding: SQ-DeepCS embeds all the code snippets in the codebase to a set of code vectors. These vectors will be cached for fast similarity calculation.

- (3)

- Online code searching: In this part, when a user submits a natural language query, SQ-DeepCS embeds the query into a query vector using the DE-Module and calculates the cosine similarity between the query vector and the vectors in the codebase. The top K relevant results will be returned to the user.

5.2. Evaluation Method

5.2.1. Evaluation Questions

- (1)

- The question should be a concrete and achievable programming task. There are various questions in Stack Overflow, but some problems are not related to programming tasks. For example, ‘When is the init() function run’, ‘What should be the values of GOPATH and GOROOT’, and ‘Removing packages installed with go get’. We only retain questions related to concrete and achievable programming tasks and filter out other problems such as knowledge sharing and judgement.

- (2)

- The question should not be a duplication of another question. We only retain the unique questions and remove all similar questions.

- (3)

- The question should have an accepted answer and the answer should contain a code snippet to solve the current question.

5.2.2. Evaluation Metrics

5.3. Compared Method

- (1)

- P-DeepCS: Program slice based DeepCS (P-DeepCS) uses program slice instead of API sequence in DeepCS. This is to explore the impact of introducing program slice.

- (2)

- A-DeepCS: Attention based DeepCS (A-DeepCS) uses attention layers instead of maxpooling layers in DeepCS. This is to explore the impact of introducing attention mechanism.

- (3)

- PA-DeepCS: Program slice and attention based DeepCS (PA-DeepCS) use program slice to represent method body and use attention mechanism to weight embedded vector. However, when fusing the vectors of method name, program slice, and tokens, PA-DeepCS still uses a fully connected layer.

- (4)

- SQ-DeepCS: SQ-DeepCS is our proposed method. The key difference of PA-DeepCS and SQ-DeepCS is that SQ-DeepCS adopts an attention layer to concentrate the vectors of method name, program slice, and tokens.

- (1)

- NCS: NCS extracts some specific keywords as semantic features of code snippets, such as method name, method invocations, and enums. NCS combines these keywords with fastText [35] and conventional IR techniques, such as TF-IDF. The encoder of NCS adopts unsupervised training mode.

- (2)

- At-CodeSM: At-CodeSM used Tree LSTM [36] to process the abstract syntax tree of code snippets. At-CodeSM extracted three features of code snippets: method name, token, and AST. For tokens of code snippets, At-CodeSM use LSTM and attention mechanism to obtain the vector of tokens. When fusing the vectors of method name, AST, and tokens, At-CodeSM uses a fusion layer.

5.4. Results

6. Discussions

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

- Yan, S.; Yu, H.; Chen, Y.; Shen, B.; Jiang, L. Are the Code Snippets What We Are Searching for? A Benchmark and an Empirical Study on Code Search with Natural-Language Queries. In Proceedings of the 2020 IEEE 27th International Conference on Software Analysis, Evolution and Reengineering (SANER), London, ON, Canada, 18–21 February 2020; pp. 344–354. [Google Scholar] [CrossRef]

- Sachdev, S.; Li, H.; Luan, S.; Kim, S.; Sen, K.; Chandra, S. Retrieval on Source Code: A Neural Code Search. In Proceedings of the 2nd ACM SIGPLAN International Workshop on Machine Learning and Programming Languages, Philadelphia, PA, USA, 18–22 June 2018; Association for Computing Machinery: New York, NY, USA, 2018; pp. 31–41. [Google Scholar] [CrossRef]

- Stolee, K.T.; Elbaum, S.; Dobos, D. Solving the Search for Source Code. ACM Trans. Softw. Eng. Methodol. 2014, 23, 1–45. [Google Scholar] [CrossRef]

- Chen, Q.; Zhou, M. A neural framework for retrieval and summarization of source code. In Proceedings of the 2018 33rd IEEE/ACM International Conference on Automated Software Engineering (ASE), Montpellier, France, 3–7 September 2018; pp. 826–831. [Google Scholar]

- Campbell, B.A.; Treude, C. NLP2Code: Code Snippet Content Assist via Natural Language Tasks. In Proceedings of the 2017 IEEE International Conference on Software Maintenance and Evolution (ICSME), Shanghai, China, 17–22 September 2017; pp. 628–632. [Google Scholar] [CrossRef] [Green Version]

- Cambronero, J.; Li, H.; Kim, S.; Sen, K.; Chandra, S. When Deep Learning Met Code Search. In Proceedings of the 2019 27th ACM Joint Meeting on European Software Engineering Conference and Symposium on the Foundations of Software Engineering, Tallinn, Estonia, 26–30 August 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 964–974. [Google Scholar] [CrossRef] [Green Version]

- Lv, F.; Zhang, H.; Lou, J.G.; Wang, S.; Zhang, D.; Zhao, J. CodeHow: Effective Code Search Based on API Understanding and Extended Boolean Model (E). In Proceedings of the 2015 30th IEEE/ACM International Conference on Automated Software Engineering (ASE), Lincoln, NE, USA, 9–13 November 2015; pp. 260–270. [Google Scholar] [CrossRef]

- Linstead, E.; Bajracharya, S.; Ngo, T.; Rigor, P.; Lopes, C.; Baldi, P. Sourcerer: Mining and searching internet-scale software repositories. Data Min. Knowl. Discov. 2009, 18, 300–336. [Google Scholar] [CrossRef]

- Sivaraman, A.; Zhang, T.; Van den Broeck, G.; Kim, M. Active Inductive Logic Programming for Code Search. In Proceedings of the 2019 IEEE/ACM 41st International Conference on Software Engineering (ICSE), Montreal, QC, Canada, 25–31 May 2019; pp. 292–303. [Google Scholar] [CrossRef] [Green Version]

- Ke, Y.; Stolee, K.T.; Goues, C.L.; Brun, Y. Repairing Programs with Semantic Code Search (T). In Proceedings of the 2015 30th IEEE/ACM International Conference on Automated Software Engineering (ASE), Lincoln, NE, USA, 9–13 November 2015; pp. 295–306. [Google Scholar] [CrossRef]

- Gu, X.; Zhang, H.; Kim, S. Deep Code Search. In Proceedings of the 2018 IEEE/ACM 40th International Conference on Software Engineering (ICSE), Gothenburg, Sweden, 27 May–3 June 2018; pp. 933–944. [Google Scholar] [CrossRef]

- Meng, Y. An Intelligent Code Search Approach Using Hybrid Encoders. Wirel. Commun. Mob. Comput. 2021, 2021, 9990988. [Google Scholar] [CrossRef]

- Ai, L.; Huang, Z.; Li, W.; Zhou, Y.; Yu, Y. SENSORY: Leveraging Code Statement Sequence Information for Code Snippets Recommendation. In Proceedings of the 2019 IEEE 43rd Annual Computer Software and Applications Conference (COMPSAC), Milwaukee, WI, USA, 15–19 July 2019; Volume 1, pp. 27–36. [Google Scholar] [CrossRef]

- Alon, U.; Zilberstein, M.; Levy, O.; Yahav, E. Code2vec: Learning Distributed Representations of Code. Proc. ACM Program. Lang. 2019, 3, 1–29. [Google Scholar] [CrossRef] [Green Version]

- Balog, M.; Gaunt, A.L.; Brockschmidt, M.; Nowozin, S.; Tarlow, D. DeepCoder: Learning to Write Programs. arXiv 2016, arXiv:1611.01989. [Google Scholar]

- Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to sequence learning with neural networks. arXiv 2014, arXiv:1409.3215. [Google Scholar]

- Tufano, M.; Pantiuchina, J.; Watson, C.; Bavota, G.; Poshyvanyk, D. On learning meaningful code changes via neural machine translation. In Proceedings of the 2019 IEEE/ACM 41st International Conference on Software Engineering (ICSE), Montreal, QC, Canada, 25–31 May 2019; pp. 25–36. [Google Scholar]

- Shuai, J.; Xu, L.; Liu, C.; Yan, M.; Xia, X.; Lei, Y. Improving code search with co-attentive representation learning. In Proceedings of the 28th International Conference on Program Comprehension, Seoul, Korea, 13–15 July 2020; pp. 196–207. [Google Scholar]

- Siami-Namini, S.; Tavakoli, N.; Namin, A.S. The performance of LSTM and BiLSTM in forecasting time series. In Proceedings of the 2019 IEEE International Conference on Big Data (Big Data), Los Angeles, CA, USA, 9–12 December 2019; pp. 3285–3292. [Google Scholar]

- Zhou, Q.; Wu, H. NLP at IEST 2018: BiLSTM-attention and LSTM-attention via soft voting in emotion classification. In Proceedings of the 9th Workshop on Computational Approaches to Subjectivity, Sentiment and Social Media Analysis, Brussels, Belgium, 31 October 2018; pp. 189–194. [Google Scholar]

- Xu, G.; Meng, Y.; Qiu, X.; Yu, Z.; Wu, X. Sentiment analysis of comment texts based on BiLSTM. IEEE Access 2019, 7, 51522–51532. [Google Scholar] [CrossRef]

- Allamanis, M.; Peng, H.; Sutton, C. A Convolutional Attention Network for Extreme Summarization of Source Code. In Proceedings of the 33rd International Conference on Machine Learning, New York, NY, USA, 19–24 June 2016; Balcan, M.F., Weinberger, K.Q., Eds.; PMLR: New York, NY, USA, 2016; Volume 48, pp. 2091–2100. [Google Scholar]

- Iyer, S.; Konstas, I.; Cheung, A.; Zettlemoyer, L. Summarizing source code using a neural attention model. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics, Long Papers, Berlin, Germany, 7–12 August 2016; Volume 1, pp. 2073–2083. [Google Scholar]

- Wan, Y.; Shu, J.; Sui, Y.; Xu, G.; Zhao, Z.; Wu, J.; Yu, P.S. Multi-modal attention network learning for semantic source code retrieval. arXiv 2019, arXiv:1909.13516. [Google Scholar]

- Kang, H.J.; Bissyandé, T.F.; Lo, D. Assessing the generalizability of code2vec token embeddings. In Proceedings of the 2019 34th IEEE/ACM International Conference on Automated Software Engineering (ASE), San Diego, CA, USA, 11–15 November 2019; pp. 1–12. [Google Scholar]

- Wang, G.; Li, C.; Wang, W.; Zhang, Y.; Shen, D.; Zhang, X.; Henao, R.; Carin, L. Joint Embedding of Words and Labels for Text Classification. arXiv 2018, arXiv:1805.04174. [Google Scholar]

- Karpathy, A.; Fei-Fei, L. Deep visual-semantic alignments for generating image descriptions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 3128–3137. [Google Scholar]

- Gu, X.; Zhang, H.; Zhang, D.; Kim, S. Deep API Learning. In Proceedings of the 2016 24th ACM SIGSOFT International Symposium on Foundations of Software Engineering, Seattle, WA, USA, 13–18 November 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 631–642. [Google Scholar] [CrossRef]

- Zhang, J.; Wang, X.; Zhang, H.; Sun, H.; Wang, K.; Liu, X. A novel neural source code representation based on abstract syntax tree. In Proceedings of the 2019 IEEE/ACM 41st International Conference on Software Engineering (ICSE), Montreal, QC, Canada, 25–31 May 2019; pp. 783–794. [Google Scholar]

- Allamanis, M.; Brockschmidt, M.; Khademi, M. Learning to Represent Programs with Graphs. arXiv 2017, arXiv:1711.00740. [Google Scholar]

- Keivanloo, I.; Rilling, J.; Zou, Y. Spotting working code examples. In Proceedings of the 36th International Conference on Software Engineering, Hyderabad, India, 31 May–7 June 2014; pp. 664–675. [Google Scholar]

- Li, X.; Wang, Z.; Wang, Q.; Yan, S.; Xie, T.; Mei, H. Relationship-aware code search for JavaScript frameworks. In Proceedings of the 2016 24th ACM SIGSOFT International Symposium on Foundations of Software Engineering, Seattle, WA, USA, 13–18 November 2016; pp. 690–701. [Google Scholar]

- Ye, X.; Bunescu, R.; Liu, C. Learning to rank relevant files for bug reports using domain knowledge. In Proceedings of the 22nd ACM SIGSOFT International Symposium on Foundations of Software Engineering, Hong Kong, China, 16–21 November 2014; pp. 689–699. [Google Scholar]

- Raghothaman, M.; Wei, Y.; Hamadi, Y. Swim: Synthesizing what i mean-code search and idiomatic snippet synthesis. In Proceedings of the 2016 IEEE/ACM 38th International Conference on Software Engineering (ICSE), Austin, TX, USA, 14–22 May 2016; pp. 357–367. [Google Scholar]

- Bojanowski, P.; Grave, E.; Joulin, A.; Mikolov, T. Enriching Word Vectors with Subword Information. Trans. Assoc. Comput. Linguist. 2017, 5, 135–146. [Google Scholar] [CrossRef] [Green Version]

- Tai, K.S.; Socher, R.; Manning, C.D. Improved semantic representations from tree-structured long short-term memory networks. arXiv 2015, arXiv:1503.00075. [Google Scholar]

| No. | Query | FRank | ||||

|---|---|---|---|---|---|---|

| DCS | AD | PD | PAD | SQD | ||

| 1 | how to check if a map contains a key in go? | NF | NF | 1 | 7 | 1 |

| 2 | how to convert an int value to string in go? | NF | 2 | 7 | 2 | 3 |

| 3 | how to check if a file exists in go? | 1 | 2 | 1 | 1 | 2 |

| 4 | how to find the type of an object in go? | NF | 2 | 1 | 1 | 4 |

| 5 | read a file in lines | 1 | NF | NF | 6 | NF |

| 6 | how to multiply duration by integer? | 8 | 3 | 4 | 1 | 2 |

| 7 | how to generate a random string of a fixed length in go? | 5 | 1 | NF | 1 | NF |

| 8 | checking the equality of two slices | 3 | NF | NF | NF | 3 |

| 9 | how can i read from standard input in the console? | NF | 10 | NF | 10 | NF |

| 10 | how to write to a file using go | 2 | 1 | 1 | 1 | 1 |

| 11 | how do i send a json string in a post request in go | 3 | 5 | 2 | 4 | 10 |

| 12 | getting a slice of keys from a map | NF | NF | 1 | 9 | 8 |

| 13 | convert string to int in go? | 4 | 1 | 10 | 9 | 5 |

| 14 | how to get the directory of the currently running file? | NF | 1 | 6 | NF | 1 |

| 15 | convert byte slice to io.Reader | NF | NF | NF | NF | NF |

| 16 | how to set headers in http get request? | 4 | 1 | 1 | 1 | 3 |

| 17 | how to trim leading and trailing white spaces of a string? | NF | 4 | 8 | NF | 1 |

| 18 | how to connect to mysql from go? | 9 | NF | NF | NF | NF |

| 19 | how to parse unix timestamp to time.Time | NF | NF | 2 | 2 | 10 |

| 20 | subtracting time.duration from time in go | 3 | 2 | NF | NF | NF |

| 21 | convert string to time | 3 | NF | 9 | 6 | 1 |

| 22 | how to set timeout for http get requests in golang? | NF | NF | 2 | NF | 5 |

| 23 | how to set http status code on http responsewriter | NF | 2 | 3 | 10 | NF |

| 24 | how to stop a goroutine | NF | NF | NF | NF | 7 |

| 25 | how to find out element position in slice? | NF | NF | NF | 5 | NF |

| 26 | partly json unmarshal into a map in go | 6 | 3 | NF | 1 | 6 |

| 27 | how to index characters in a golang string? | 10 | NF | 1 | NF | NF |

| 28 | how to get the name of a function in go? | 3 | NF | NF | NF | 1 |

| 29 | convert go map to json | 7 | 2 | NF | 2 | 3 |

| 30 | convert interface to int | 1 | NF | 9 | 10 | NF |

| 31 | how to convert a bool to a string in go? | NF | 9 | 2 | 2 | 1 |

| 32 | obtain user’s home directory | NF | 4 | 9 | NF | NF |

| 33 | how do i convert a string to a lower case representation | NF | 1 | NF | 1 | 6 |

| 34 | how do i compare strings in golang? | NF | 4 | 7 | 6 | NF |

| 35 | mkdir if not exists using golang | NF | 3 | NF | 3 | NF |

| 36 | sort a map by values | NF | NF | 1 | 5 | 2 |

| 37 | how to check if a string is numeric in go | NF | NF | NF | NF | 4 |

| 38 | convert an integer to a float number | 1 | 5 | NF | NF | NF |

| 39 | delete max in BTree | 1 | NF | 3 | 5 | 1 |

| 40 | how to clone a map | 1 | NF | NF | NF | 1 |

| 41 | string to date conversion | 2 | NF | NF | NF | NF |

| 42 | get database config | 1 | NF | 4 | 2 | 1 |

| 43 | convert string to duration | 1 | 3 | NF | 1 | 2 |

| 44 | generate random integers within a specific range | 7 | NF | NF | NF | NF |

| 45 | generate an md5 hash | 5 | NF | NF | 3 | 3 |

| Approach | S@1 | S@5 | S@10 | P@1 | P@5 | P@10 | MRR |

|---|---|---|---|---|---|---|---|

| DeepCS | 0.156 | 0.422 | 0.556 | 0.156 | 0.164 | 0.162 | 0.315 |

| A-DeepCS | 0.133 | 0.467 | 0.511 | 0.133 | 0.169 | 0.153 | 0.304 |

| P-DeepCS | 0.156 | 0.356 | 0.533 | 0.156 | 0.16 | 0.149 | 0.313 |

| PA-DeepCS | 0.2 | 0.444 | 0.644 | 0.2 | 0.182 | 0.187 | 0.348 |

| NCS | 0.156 | 0.356 | 0.511 | 0.156 | 0.147 | 0.142 | 0.251 |

| At-CodeSM | 0.178 | 0.467 | 0.578 | 0.178 | 0.178 | 0.16 | 0.323 |

| SQ-DeepCS | 0.222 | 0.511 | 0.644 | 0.222 | 0.182 | 0.171 | 0.374 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations. |

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Yu, H.; Zhang, Y.; Zhao, Y.; Zhang, B. Incorporating Code Structure and Quality in Deep Code Search. Appl. Sci. 2022, 12, 2051. https://doi.org/10.3390/app12042051

Yu H, Zhang Y, Zhao Y, Zhang B. Incorporating Code Structure and Quality in Deep Code Search. Applied Sciences. 2022; 12(4):2051. https://doi.org/10.3390/app12042051

Chicago/Turabian StyleYu, Hao, Yin Zhang, Yuli Zhao, and Bin Zhang. 2022. "Incorporating Code Structure and Quality in Deep Code Search" Applied Sciences 12, no. 4: 2051. https://doi.org/10.3390/app12042051

APA StyleYu, H., Zhang, Y., Zhao, Y., & Zhang, B. (2022). Incorporating Code Structure and Quality in Deep Code Search. Applied Sciences, 12(4), 2051. https://doi.org/10.3390/app12042051