1. Introduction

Biometrics refers to the measurement of biological traits [1] and their use for individual recognition. We unconsciously apply biometric recognition in many daily situations. For example, when someone who is not in our contact list calls us by phone, we try to associate the voice we hear with the people we know. If the voice matches one of our memories, we quickly recognize the person. Biometric recognition systems are automated methods to recognize an individual.

There are two phases in a biometric recognition system [1]. In the first phase, enrollment, the individual registers his/her biometric data, which are stored in the system (normally, in a database). The registered data are known as the template. In the second phase, matching, the individual is recognized from new biometric data. The second phase is carried out every time the individual has to be recognized. A biometric recognition system is composed of five stages: acquisition, preprocessing, feature extraction, template storage, and matching. Samples of biometric traits are obtained during the acquisition stage, in which sensors capture raw data. These data are then preprocessed by, among other operations, applying filters and extracting regions of interest. The feature extraction stage generates a representation of the samples that facilitates the generation and storage of templates at enrollment and the comparison with them at matching. The matching result (also known as the score) indicates how similar the new sample is to the stored template.
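To make the two phases and the five stages concrete, the following Python sketch wires toy versions of them together. All function names (`preprocess`, `extract_features`, `match`, `enroll`, `verify`), the distance-based similarity score, and the threshold of 0.8 are illustrative assumptions, not the system implemented in this work; a real vein system would replace each stub with camera acquisition, filtering and ROI extraction, and a vein feature extractor.

```python
import math

# Hypothetical stand-ins for the stages described above.
def preprocess(raw):
    """Preprocessing stub: min-max normalize raw sensor values to [0, 1]."""
    lo, hi = min(raw), max(raw)
    span = (hi - lo) or 1.0
    return [(v - lo) / span for v in raw]

def extract_features(sample):
    """Feature extraction stub: use the normalized sample as the feature vector."""
    return list(sample)

def match(features, template):
    """Matching stub: similarity score in (0, 1], higher means more similar."""
    dist = math.sqrt(sum((a - b) ** 2 for a, b in zip(features, template)))
    return 1.0 / (1.0 + dist)

TEMPLATES = {}  # template storage (stands in for a database)

def enroll(user_id, raw):
    """Enrollment phase: acquisition -> preprocessing -> features -> stored template."""
    TEMPLATES[user_id] = extract_features(preprocess(raw))

def verify(user_id, raw, threshold=0.8):
    """Matching phase: compare new data with the stored template."""
    score = match(extract_features(preprocess(raw)), TEMPLATES[user_id])
    return score, score >= threshold

enroll("alice", [10, 40, 200, 90])          # raw "acquisition" values
print(verify("alice", [12, 41, 198, 88]))   # similar sample: accepted
print(verify("alice", [200, 10, 40, 90]))   # different sample: rejected
```

Enrollment runs once per individual, while `verify` runs at every recognition attempt, mirroring the two phases in the text.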

Comparisons can be split into genuine and impostor comparisons. Genuine comparisons are computed between samples from the same individual, while impostor comparisons are computed between samples from different individuals. The false non-match rate (FNMR) and the false match rate (FMR) are the usual indicators for evaluating authentication performance. They are also known as the false rejection rate (FRR) and the false acceptance rate (FAR), respectively. FNMR is the proportion of genuine comparisons whose similarity score is smaller than the authentication threshold, whereas FMR is the proportion of impostor comparisons whose score is greater than the threshold. Raising the threshold lowers FMR but raises FNMR, and vice versa; the equal error rate (EER) is the error rate at the threshold where FNMR and FMR coincide.
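A minimal sketch of how FNMR, FMR, and an approximate EER can be computed from lists of genuine and impostor scores, assuming that higher scores mean more similar and that a comparison is accepted when its score reaches the threshold. The score values are made up for illustration.

```python
def error_rates(genuine, impostor, threshold):
    """Return (FNMR, FMR) at a threshold, accepting when score >= threshold."""
    fnmr = sum(s < threshold for s in genuine) / len(genuine)    # genuine rejected
    fmr = sum(s >= threshold for s in impostor) / len(impostor)  # impostors accepted
    return fnmr, fmr

def equal_error_rate(genuine, impostor):
    """Approximate the EER by trying every observed score as a threshold
    and keeping the one where FNMR and FMR are closest."""
    best = None
    for t in sorted(set(genuine) | set(impostor)):
        fnmr, fmr = error_rates(genuine, impostor, t)
        gap = abs(fnmr - fmr)
        if best is None or gap < best[0]:
            best = (gap, (fnmr + fmr) / 2, t)
    return best[1], best[2]  # (EER estimate, threshold)

genuine = [0.91, 0.85, 0.78, 0.66, 0.95]   # made-up same-person scores
impostor = [0.30, 0.45, 0.52, 0.70, 0.25]  # made-up different-person scores
eer, thr = equal_error_rate(genuine, impostor)
print(f"EER = {eer:.0%} at threshold {thr}")  # EER = 20% at threshold 0.7
```

Because only observed scores are tried as thresholds, the EER is approximated by the point where the two rates are closest; a finer threshold sweep or interpolation between points gives a smoother estimate.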

Nowadays, biometric recognition systems are employed for access control in many application fields, such as electronic commerce, banking, tourism, migration, offices, hospitals, and cloud services. The advantages of biometrics over passwords or identity cards are that biometric data do not need to be remembered and that the risk of theft is reduced, because biological measurements are intrinsic to individuals. Biometric recognition systems became very popular after they were introduced in everyday devices (laptops, tablets, or smartphones) to protect personal data and carry out secure payments. Biometric recognition was first introduced in mobile phones in 2004 (almost twenty years ago) with the Pantech GI100. In 2007, fingerprint recognition was included in the Toshiba G500, consolidating the use of fingerprint sensors in mobile phones. Fingerprint recognition gained acceptance in the smartphone market in 2013, when the Touch-ID system was included in the iPhone 5S. In response, Samsung included fingerprint recognition in the Android-based Galaxy S5 in 2014. In recent years, the number of smartphones with fingerprint recognition has grown rapidly. Meanwhile, facial recognition technologies have gained importance over the years. In 2012, Google registered a patent for facial smartphone unlocking and integrated it into Android 4.0. Following Touch-ID, Apple included the Face-ID system in the iPhone X in 2017. Face-ID acquisition (like that of some other facial recognition systems) employs an infrared dot projector module in addition to the camera.

Biometric recognition based on vascular traits is a relatively recent technique that has not yet been sufficiently studied and exploited [2]. Vascular patterns are formed by the structure of blood vessels within the body. Typical acquisition optically senses the absorption of near-infrared (NIR) light by the hemoglobin in the bloodstream. Under illumination from NIR LEDs, vessels appear as dark structures and the surrounding tissues appear bright, because vessels absorb much more light in that spectrum. Although arteries or veins can be employed as biometric traits, veins are easier to detect and their images are clearer, since there are more veins than arteries in the human body and veins are closer to the skin surface. The body parts usually considered for vein-based biometric recognition are fingers, hands, and wrists [2].

There are several properties that should be considered for a biometric trait [1]: (1) universality (whether all individuals possess the trait); (2) distinctiveness or uniqueness (whether the trait is sufficiently different across individuals); (3) permanence (whether the trait remains invariant over time); (4) performance (whether recognition is accurate and fast); (5) circumvention (whether the trait can be imitated using artifacts); (6) collectability or measurability (whether acquisition is easy to carry out); and (7) acceptability (whether individuals accept the acquisition of the trait and the use of the recognition system).

These properties vary depending on the biometric trait. In the case of fingerprints and faces, universality, distinctiveness, performance, and acceptability are high. Permanence is medium because fingerprints (through use of the fingers) and faces (through aging) change over time. Regarding collectability, fingerprint acquisition usually requires contact, which means that skin oiliness, dust, or creams may reduce the quality of the acquired images. In addition, specific sensors are required. For these reasons, the collectability of fingerprints is medium. Collectability for faces is also medium: non-specific cameras can be employed, but significant changes in appearance, such as growing a full beard or wearing a mask, affect facial recognition. Circumvention is medium in both cases, since fingerprints can be spoofed with silicone fingers and faces with photos, videos, or 3D masks.

In the case of veins, universality, distinctiveness, permanence, performance, and protection against circumvention are high: everyone has veins, vein patterns differ among individuals, veins usually remain invariant throughout life, error rates can be low and recognition can run in real time, and, finally, veins lie inside the body and are difficult to spoof using artifacts. However, collectability and acceptability are medium. Although acquisition techniques are contactless, environmental factors (such as light, temperature, or humidity) and biological factors (e.g., the thickness of the layers of human tissue and diseases such as anemia, hyperthermia, or hypotension) affect vein recognition.

Furthermore, specific and large acquisition devices, which are not suitable for ordinary smartphones, are typically employed to acquire veins [3,4,5,6]. Only a few works have focused on vein acquisition with portable devices and smartphones [7,8,9,10,11,12]. Among them, a specific device is designed in [8,9], additional illumination is employed together with a smartphone modified to remove the NIR blocking filter in [10], smartphones with an infrared camera are employed in [7], and very preliminary results are presented in [11,12].

This work aims to evaluate acquisition methods and vein recognition algorithms using ordinary smartphone cameras, i.e., without modifications or additional modules. The growing sophistication of ordinary smartphone cameras improves the quality of the captures. Moreover, there are smartphone applications (apps) that acquire vein images in the visible domain and apply filtering techniques to improve the contrast of superficial veins, simulating acquisition in the infrared spectrum. These apps are widely used to locate veins for medical purposes, such as blood extraction [13].

Our contribution in this work is to develop and implement a vein recognition system using the Xiaomi Redmi Note 8 (a widely sold smartphone with an ordinary camera), and to collect and evaluate a database of vein images acquired with the IRVeinViewer app (a free Android app for acquiring veins). To evaluate the influence of environmental factors, acquisitions at different times of day and under different conditions (outdoors in daylight, indoors with natural light, indoors with natural light and a dark homogeneous background, indoors with artificial light, and in darkness) are considered. To evaluate biological factors, people of different ages and genders are considered.

As a first step in selecting the algorithms for our implementation, we analyze the state-of-the-art biometric recognition and quality assessment algorithms included in [7] and in the PLUS OpenVein toolkit [14]. Among the recognition algorithms, the most widespread are based on the extraction of binary vein patterns (e.g., maximum curvature [15]) and on the extraction of local texture descriptors (e.g., SIFT [16]). Regarding quality assessment, the techniques employed in the literature are based on the global contrast factor (GCF) [17], a measurement of brightness uniformity and clarity (Wang17) [18], and a signal-to-noise ratio based on the human visual system (HSNR) [19].
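The core idea of maximum curvature, as commonly described for Miura et al.'s method, is to examine intensity profiles across the image and look for valleys (dark vein cross-sections) where the profile curvature κ(z) = P''(z) / (1 + P'(z)²)^(3/2) is positive. The simplified sketch below is an illustration under stated assumptions, not the PLUS OpenVein implementation: it handles a single 1-D profile using finite differences and scores each positive-curvature region by its peak curvature times its width. The full method repeats this along several directions, connects the detected centers, and binarizes the result.

```python
def curvature(profile):
    """Curvature k(z) = P''(z) / (1 + P'(z)^2)^(3/2) via central differences."""
    k = [0.0] * len(profile)
    for z in range(1, len(profile) - 1):
        d1 = (profile[z + 1] - profile[z - 1]) / 2.0
        d2 = profile[z + 1] - 2.0 * profile[z] + profile[z - 1]
        k[z] = d2 / (1.0 + d1 * d1) ** 1.5
    return k

def vein_center_scores(profile):
    """Score each run of positive curvature (a dark valley) as
    peak curvature * run width, placed at the peak position."""
    k = curvature(profile)
    scores = [0.0] * len(profile)
    z = 0
    while z < len(k):
        if k[z] > 0:
            start = z
            while z < len(k) and k[z] > 0:
                z += 1
            peak = max(range(start, z), key=lambda i: k[i])
            scores[peak] = k[peak] * (z - start)
        else:
            z += 1
    return scores

# Bright skin with one dark vein cross-section in the middle (made-up values).
profile = [0.9, 0.9, 0.8, 0.5, 0.3, 0.3, 0.5, 0.8, 0.9, 0.9]
scores = vein_center_scores(profile)
print([round(s, 3) for s in scores])
```

Weighting by the region width favors wide, clearly concave valleys over isolated noisy pixels, which is why the score is not just the raw curvature.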

The paper is structured as follows. Section 2 reviews the related work. Section 3 details how the database was created with the IRVeinViewer app. Section 4 describes the implemented system and its evaluation with the created database in terms of recognition performance and quality control of the acquired images. Finally, Section 5 presents conclusions and future work.

2. Related Work

A relevant step in vein recognition is the acquisition of samples. High-quality images reduce the complexity of image preprocessing and increase recognition accuracy. The acquisition process is primarily based on two properties [1]: (1) harmless near-infrared (NIR) light, with a wavelength longer than that of visible light (around 750–1000 nm), can penetrate the skin; and (2) the hemoglobin in the bloodstream absorbs this radiation. Hemoglobin can be classified as oxygenated or deoxygenated, depending on whether it carries bound oxygen. Deoxygenated hemoglobin shows maximum absorption at a wavelength of 760 nm, while oxygenated hemoglobin shows higher absorption at 800 nm [20].

Vein acquisition devices are usually composed of a NIR illumination source and a camera. Depending on their relative positions, two acquisition methods are used: reflection and refraction (or transmission). Both are optical phenomena that occur when a ray of light propagating through a medium encounters the boundary of another medium. During reflection, part of the incident light changes direction and continues to propagate in the original medium. In reflection-based acquisition, images are therefore obtained from the light reflected by the tissues and veins: the camera and the NIR light source are on the same side, and the biometric sample is in front of them. During refraction, part of the light is transmitted into the other medium. In transmission-based acquisition, the refracted light is captured by a camera facing the NIR light source, and the biometric sample is placed between the light source and the camera.

In the refraction (transmission) method, the light has to pass through the human tissue before the camera captures it, so the infrared light must have a higher intensity. Besides, owing to the locations of the infrared light source and the camera, the acquisition device is bulkier. In contrast, the reflection method allows a more compact design with lower power consumption. In this work, we focus on reflection-based acquisition because it is more suitable for smartphones. In the literature, there are several proposals based on the reflection method to acquire veins in the infrared spectrum from palms, dorsal hands, fingers, and wrists. These proposals are summarized below.

For palm veins, the acquisition prototype described in [8] was used to acquire the VERA PalmVein database. The prototype contains a CCD camera and twenty 940-nm NIR LEDs placed on the edges of a printed circuit board (PCB). In addition, it includes a 920-nm high-pass filter to reduce ambient light and an ultrasound distance sensor that stops the acquisition when the hand is not at an adequate distance. A graphical user interface (GUI), which allows checking whether the palm is centered in the image and whether the infrared light is adequate, guides the acquisition. The samples were acquired inside a building. The database was evaluated in [9] considering the region of interest (ROI) of the images. The EER obtained was 9.86% for images without enhancement and 6.25% for images with enhancement.

The acquisition prototype described in [10] was used for dorsal hands. It employed the camera of a Nexus 5 smartphone modified to remove the infrared blocking filter. As the infrared light source, a structure was built with sixteen 850-nm NIR LEDs placed in a circle with the smartphone camera at its center. In addition, a 780-nm high-pass filter was used to reduce the influence of ambient light. Each LED was controlled individually from an app developed for the acquisition, which interacted with an Arduino-based control board. The acquisition was carried out inside a car and inside a building. The ROI was extracted manually and the images were enhanced. In the recognition performance evaluation, the best EER was 4.13% and the worst was 24.30%.

For finger veins, the acquisition prototype described in [8] was composed of twelve 850-nm NIR LEDs arranged on a PCB in three groups of four to provide adequate illumination. In addition, the power of each group of LEDs was adjusted by software. In this way, it is possible to provide very homogeneous lighting, avoiding saturation at the center or insufficient lighting at the edges. Since veins lie deeper in the fingers, optimal illumination is needed. A CMOS camera was employed to acquire images from a minimum distance of 10 cm. The prototype also included a 740-nm high-pass filter to reduce the effect of ambient light. The ROI was extracted and the images were enhanced. Recognition performance results have not been reported yet.

For wrist veins, the UC3M-CV2 database [7] was acquired using the infrared cameras and NIR illumination of two Xiaomi smartphones. The acquisition considered several environmental conditions (outdoor/indoor, and external artificial or natural light without direct sunlight at different times of day). A software program to guide the wrist position was developed as part of the acquisition protocol, and the images were enhanced. In the recognition performance evaluation, the resulting EER values ranged from 6.82% to 18.72%.

Vein acquisition in the visible spectrum from images captured with ordinary smartphone cameras was preliminarily explored in [11,12]. In [11], veins are extracted and processed in the RGB domain, and the acquisition performance is evaluated for medical applications (e.g., venipuncture guidance). In [12], the identification capability of three kinds of neural networks processing the information obtained from the ROI is evaluated. The highest identification rates range from 94.11% to 96.07% across the three architectures.

3. Creation of a Database with a Viewer App for Vein Images

In this work, a Xiaomi Redmi Note 8 smartphone and the IRVeinViewer app were used to acquire the images. The smartphone has an ordinary 48-megapixel quad camera. Like many other smartphone cameras, it has a NIR blocking filter to improve the quality of the acquired images. Hence, vein acquisition based on the absorption of IR light is not possible. The IRVeinViewer app is a free Android application that can be used as a vein scanner. There are currently other similar apps, such as VeinSeek, VeinCamera, or VeinScanner. These apps transform images captured in the visible spectrum into NIR-like images by enhancing the contrast and visualization of the green and blue channels. Their limitation is that only the most superficial veins can be detected. These apps are commonly used for medical applications, not for biometric recognition.
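The processing performed inside these apps is not documented here, so the following is only a hypothetical sketch of the idea described above: build a grayscale image from the green and blue channels and apply a min-max contrast stretch so that darker (vein) pixels stand out. The equal channel weighting and the linear stretch are assumptions; real apps may use different weights, histogram equalization, or other filters.

```python
def nir_like(rgb_pixels):
    """Map (R, G, B) pixels (0-255) to an 8-bit NIR-like grayscale list.

    Hypothetical pipeline: average the green and blue channels, then
    stretch the result linearly so the darkest pixel becomes 0 and the
    brightest becomes 255 (min-max contrast stretch)."""
    gray = [(g + b) / 2.0 for _r, g, b in rgb_pixels]
    lo, hi = min(gray), max(gray)
    if hi == lo:  # flat image: nothing to stretch
        return [0] * len(gray)
    return [round(255 * (v - lo) / (hi - lo)) for v in gray]

# Three made-up skin pixels; the middle one lies over a superficial vein.
pixels = [(200, 150, 140), (190, 120, 130), (210, 160, 150)]
print(nir_like(pixels))  # [170, 0, 255]
```

The red channel is ignored here because, in this sketch, it is assumed to carry little vein contrast; that choice is illustrative only.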

Once the IRVeinViewer app is started, the user points the back camera at the body area. The app adjusts the contrast automatically and shows the simulated IR images in real time on the smartphone screen.

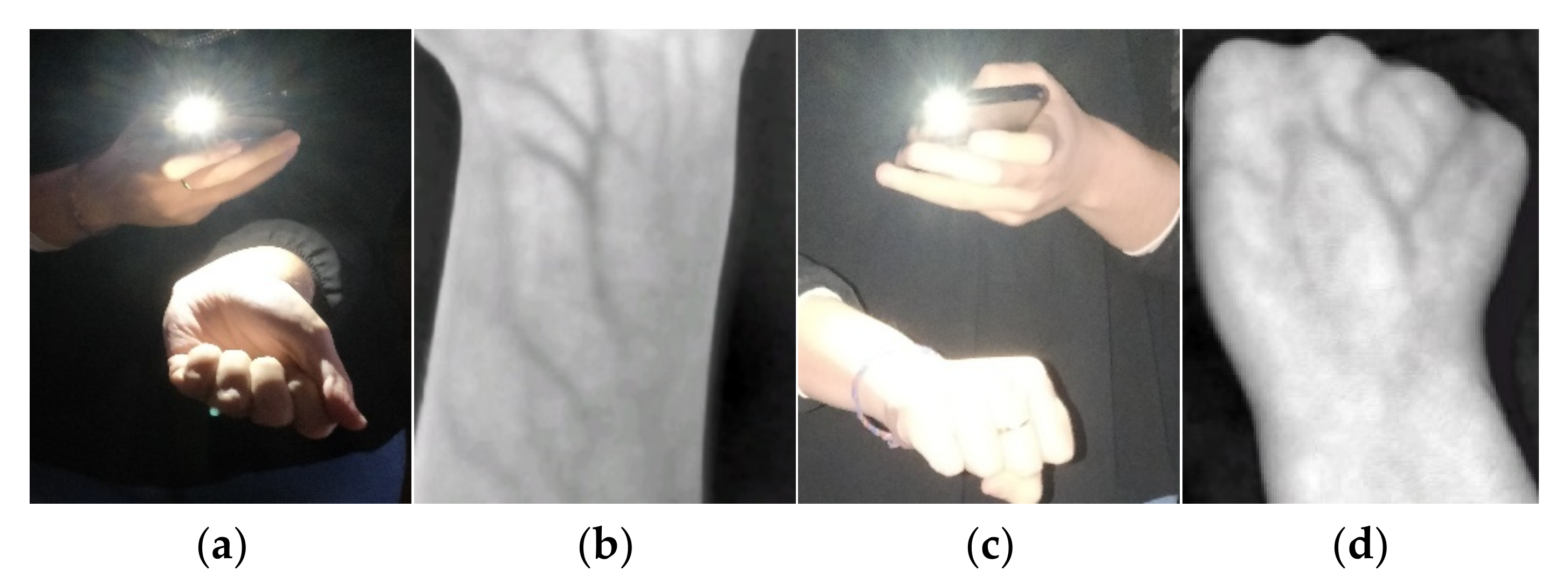

Figure 1 shows acquisition examples. Since we noticed that deep veins (such as palm and finger veins) were not captured well by the app, we focused on veins from the dorsal hand and wrist.

Besides the acquisition device, other factors must be considered: biological factors, environmental factors, and sample position. Regarding biological factors, the thickness of the subcutaneous layers can hinder correct vein acquisition, and diseases (such as anemia, hyperthermia, or hypotension) can influence how blood flows through the veins. Regarding environmental factors, sunlight contains infrared components that shift the color of the images from blue to violet, which can affect recognition performance. Changes in temperature and humidity can also affect how blood flows through the veins.

To evaluate environmental factors, acquisitions were made at different times of day under five conditions:

- Outdoor: open areas with non-direct sunlight.
- Indoor natural light 1: closed areas (inside a building) with natural light.
- Indoor natural light 2: closed areas with natural light and a dark homogeneous background behind the sample.
- Indoor artificial light: closed areas (inside a building) with artificial light.
- Indoor darkness: closed areas in the absence of light.

Note that the smartphone flashlight was always on, since it could not be turned off. This could affect the acquisition under certain lighting conditions.

Veins from the wrist and the dorsal hand (2 body areas) were acquired from 10 volunteers, for both the left and right hands (2 sides). For each condition, 5 samples were taken. The complete database is composed of 1000 vein images (10 individuals × 2 body areas × 2 sides × 5 conditions × 5 samples). To assess biological factors, the ages of the volunteers ranged from 18 to 75, with an average age of 36.4 years. Both genders were also considered, with 8 women and 2 men.
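The database size follows directly from the acquisition factors. As an illustration, the sketch below enumerates one identifier per image; the naming scheme (`s01_left_wrist_outdoor_1`, and so on) is hypothetical, and only the factor levels and counts come from the text.

```python
from itertools import product

SUBJECTS = range(1, 11)                  # 10 volunteers
AREAS = ("wrist", "dorsal_hand")         # 2 body areas
SIDES = ("left", "right")                # 2 sides
CONDITIONS = ("outdoor", "indoor_natural_1", "indoor_natural_2",
              "indoor_artificial", "indoor_darkness")  # 5 conditions
SAMPLES = range(1, 6)                    # 5 samples per condition

def sample_ids():
    """Yield one hypothetical identifier per image in the database."""
    for subj, area, side, cond, n in product(SUBJECTS, AREAS, SIDES, CONDITIONS, SAMPLES):
        yield f"s{subj:02d}_{side}_{area}_{cond}_{n}"

ids = list(sample_ids())
print(len(ids))  # 1000
print(ids[0])    # s01_left_wrist_outdoor_1
```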

The acquisition followed a protocol with the following steps:

- Inform the volunteer about the purpose and procedure of the acquisition.
- Deliver an informed consent document.
- Collect information about age and gender.
- Capture 5 biometric samples for each body side and area (left wrist, left dorsal hand, right wrist, and right dorsal hand) and each condition (outdoor, indoor natural light 1, indoor natural light 2, indoor artificial light, and darkness).

The samples were acquired by the volunteers themselves, who held the smartphone and interacted with the app. Since IRVeinViewer was not designed to take pictures, the samples were collected as screenshots. In general, the volunteers tried to acquire samples containing enough information about the veins.

Since the acquisition was not guided, an essential step is the extraction of the region of interest (ROI), i.e., the area containing the most discriminative information for recognition. In this work, the ROI was extracted manually. However, a guided acquisition system (similar to that proposed in [7] for wrist vein images) could be applied.

Figure 2 shows examples of vein images acquired from a wrist and a dorsal hand under each condition. ROI extraction was applied to the acquisitions under the outdoor, indoor natural light 1, indoor artificial light, and darkness conditions. For the indoor natural light 2 condition, since the vein images were acquired against a dark homogeneous background, ROI extraction was not applied; only the foreground was extracted.
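The text does not specify how the foreground was separated from the dark background. One simple possibility, sketched below under the assumption that skin pixels are clearly brighter than the background, is a fixed global intensity threshold followed by cropping to the bounding box of the bright pixels. The function names and the threshold value are hypothetical, and an automatic threshold (e.g., Otsu's method) could be substituted.

```python
def foreground_bbox(gray, threshold):
    """gray: 2-D list of intensities. Pixels brighter than `threshold` are
    treated as skin (foreground) against the dark homogeneous background.
    Returns (top, bottom, left, right) inclusive bounds, or None."""
    rows = [r for r, row in enumerate(gray) if any(v > threshold for v in row)]
    if not rows:
        return None
    cols = [c for c in range(len(gray[0])) if any(row[c] > threshold for row in gray)]
    return rows[0], rows[-1], cols[0], cols[-1]

def crop(gray, box):
    """Cut the image down to the inclusive bounding box."""
    top, bottom, left, right = box
    return [row[left:right + 1] for row in gray[top:bottom + 1]]

# Made-up 4x4 image: dark background with a bright 2x2 skin region.
image = [[10, 12, 11, 9],
         [10, 180, 170, 8],
         [9, 160, 150, 10],
         [11, 10, 12, 9]]
box = foreground_bbox(image, threshold=50)
print(box, crop(image, box))  # (1, 2, 1, 2) [[180, 170], [160, 150]]
```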

5. Discussion

In the context of biometric authentication for access control, an app for ordinary smartphones is very attractive for increasing the usability (collectability) and acceptability of the system. While facial and fingerprint recognition have been widely employed in smartphones, vein-based traits have not yet been sufficiently studied and exploited. In vascular biometric systems, vein acquisition is usually performed by custom-designed devices with near-infrared light sources. Some of these devices may be expensive and, because of their size and limited portability, cannot be used in some contexts. In this work, we have explored the feasibility of using a free app, IRVeinViewer, that detects superficial veins from images captured in the visible spectrum by an ordinary smartphone camera.

Since deep veins are not well acquired, we designed and evaluated our system with a database of 1000 images of dorsal hands and wrists, which have more superficial veins and are therefore easier to acquire with a smartphone. Among the feature extraction algorithms reported for vein-based recognition, we employed maximum curvature (MC) and SIFT (scale-invariant feature transform), and evaluated the resulting authentication performance in terms of the equal error rate (EER). The results show that SIFT provides better EER values than MC and that wrist images allow better authentication than dorsal hand images. Using SIFT on wrist images, the EER values range from 0.63% to 16.80%. The system implemented in Android runs in real time, with feature extraction being the slowest stage (127.62 ms).
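SIFT-based comparison is often scored by counting keypoint descriptor matches that pass Lowe's ratio test. The sketch below shows that scoring step on plain descriptor lists. It is not necessarily the matching rule used in this work: the ratio of 0.75 is an assumed value, and real descriptors (128-dimensional) would come from a SIFT implementation such as OpenCV's `cv2.SIFT_create()` rather than the 2-D toy vectors used here.

```python
import math

def _dist(a, b):
    """Euclidean distance between two descriptors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def ratio_test_score(probe, reference, ratio=0.75):
    """Count probe descriptors whose nearest reference descriptor is
    clearly closer than the second nearest (Lowe's ratio test)."""
    if len(reference) < 2:
        return 0
    good = 0
    for d in probe:
        dists = sorted(_dist(d, r) for r in reference)
        if dists[0] < ratio * dists[1]:
            good += 1
    return good

reference = [[0.0, 0.0], [10.0, 0.0], [0.0, 10.0]]  # toy template descriptors
probe = [[0.5, 0.0],   # unambiguous match to the first reference descriptor
         [5.0, 5.0]]   # equidistant to all three: rejected as ambiguous
print(ratio_test_score(probe, reference))  # 1
```

Rejecting ambiguous matches keeps repetitive texture from inflating the score, which matters for vein images where many local patches look alike.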

The best and worst EER values obtained with our database are similar to those reported for databases acquired with specific devices. Hence, it is worth further improving the cheaper solution explored herein.

The influence of environmental factors on authentication performance was evaluated considering acquisitions performed outdoors and indoors (with natural light, artificial light, or no light). The results show that indoor acquisitions are somewhat better than outdoor acquisitions. However, we conclude that the way the user acquires the images with the app from one session to another has a greater influence; i.e., authentication is better if matching is carried out in the same environment as enrollment and shortly after it. Therefore, one way of improving this work is to develop a smart app that guides the user during acquisition. We will consider this as future work.

The methods employed in the literature for image quality estimation failed to detect images in which the veins were not clearly acquired. Hence, it is worth exploring other methods, such as the genuine score-based evaluation considered in this work. This could be further explored within a smart app.
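The genuine score-based evaluation is not detailed in this section. A hypothetical version, sketched below, flags a sample when its mean similarity to the other samples of the same individual (its genuine scores) falls below a threshold. The function name, the use of the mean as the aggregate, the toy one-number "features", and the threshold are all assumptions for illustration.

```python
from statistics import mean

def flag_low_quality(samples, score, threshold):
    """samples: dict sample_id -> features, all from ONE individual.
    score(a, b): similarity function (higher means more similar).
    Returns the ids whose mean genuine score against the other samples
    of the same individual falls below `threshold`."""
    flagged = []
    for sid, feat in samples.items():
        others = [score(feat, f) for oid, f in samples.items() if oid != sid]
        if others and mean(others) < threshold:
            flagged.append(sid)
    return flagged

# Toy features: sample "d" was captured badly and disagrees with the rest.
samples = {"a": 1.0, "b": 1.1, "c": 0.9, "d": 5.0}
similarity = lambda x, y: 1.0 / (1.0 + abs(x - y))
print(flag_low_quality(samples, similarity, threshold=0.5))  # ['d']
```

Unlike image-based quality measures, this check uses the matcher itself, so it can catch captures that look sharp but do not agree with the individual's other samples.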

Since vein-based biometrics offers high protection against circumvention but its EER values on ordinary smartphones are not expected to be low enough for standalone use, it is a good choice for multimodal biometric systems. Finally, another future line of research is the application of convolutional neural networks (CNNs) to vein recognition on ordinary smartphones, since preliminary results in the literature are promising.
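As an illustration of how a vein matcher could be combined with another modality, the sketch below shows score-level fusion with min-max normalization and a weighted sum, a common fusion scheme in multimodal biometrics. The pairing with face scores, the normalization bounds, and the equal weights are hypothetical choices, not results from this work.

```python
def min_max(score, lo, hi):
    """Map a raw score to [0, 1] using bounds (hi > lo) estimated on
    training data; out-of-range scores are clipped."""
    return min(1.0, max(0.0, (score - lo) / (hi - lo)))

def fused_score(vein, face, vein_bounds, face_bounds, w_vein=0.5):
    """Weighted-sum fusion of two normalized similarity scores."""
    v = min_max(vein, *vein_bounds)
    f = min_max(face, *face_bounds)
    return w_vein * v + (1.0 - w_vein) * f

# Hypothetical raw scores: a vein match count in [0, 60], a face score in [0, 1].
print(fused_score(30, 0.8, vein_bounds=(0, 60), face_bounds=(0.0, 1.0)))
```

Normalizing first keeps a modality with a larger raw score range (here, match counts) from dominating the sum; the weight can then be tuned on validation data.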