An Improved Point Clouds Model for Displacement Assessment of Slope Surface by Combining TLS and UAV Photogrammetry

Abstract

1. Introduction

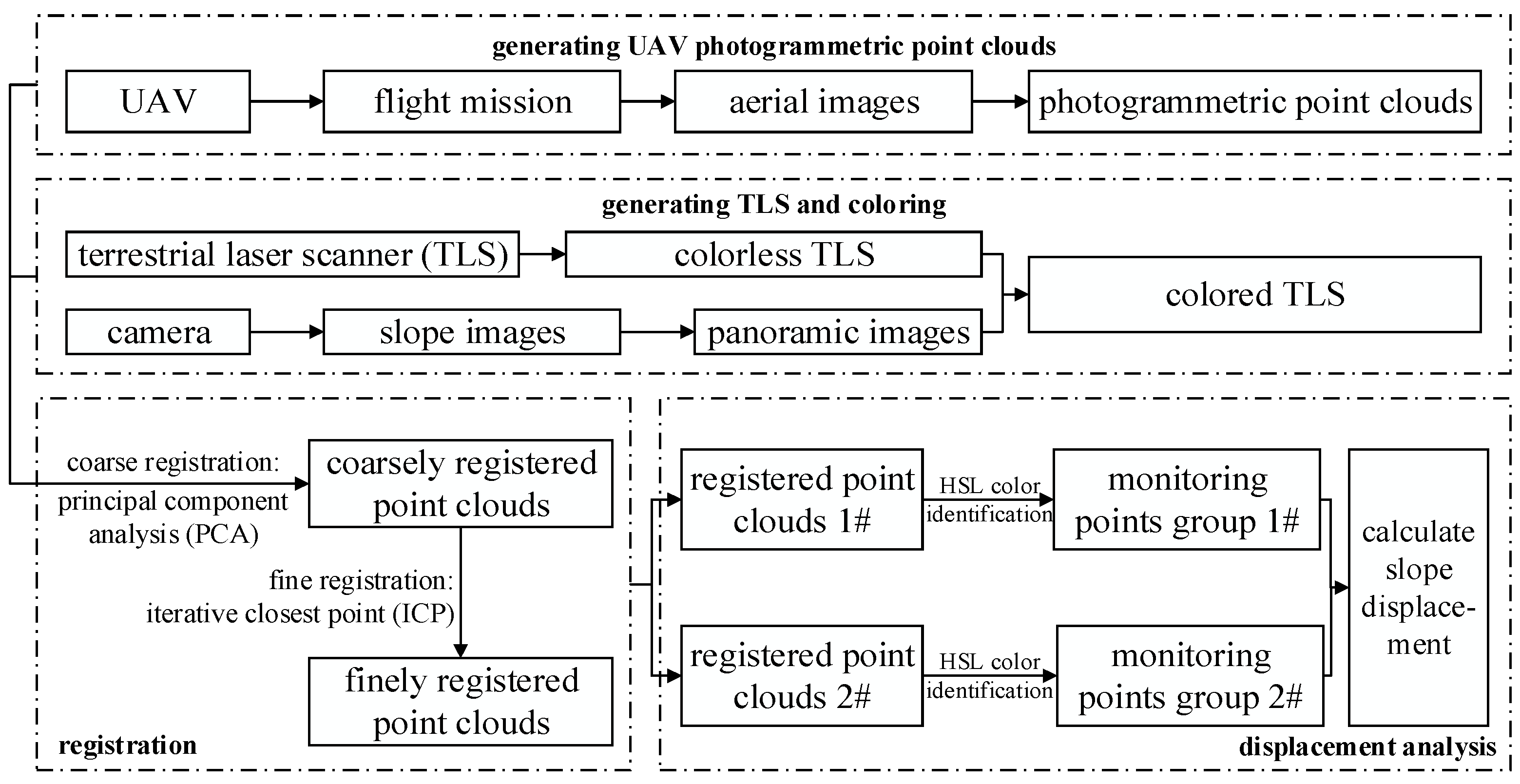

2. Methodology

2.1. Generating UAV Photogrammetric Point Clouds

2.1.1. Image Preprocessing

2.1.2. Spatial Conversion

2.1.3. Generating Dense Point Clouds

- (1) Feature matching: features detected by the Harris and difference-of-Gaussians operators are first matched across the input images, which yields a sparse set of patches associated with salient image regions;

- (2) Patch expansion: as the main part of PMVS, the initial patches are spread to neighboring pixels to produce a dense set of patches;

- (3) Filtering: incorrect matches are eliminated using visibility constraints.
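Step (1) relies on corner-like image features. As a rough illustration only, the following Python sketch computes a Harris corner response with NumPy. It is not the PMVS implementation: the function names `box_sum` and `harris_response`, the window radius `r`, and the constant `k = 0.05` are assumptions chosen for this example.

```python
import numpy as np


def box_sum(a, r):
    """Sum each (2r+1) x (2r+1) neighbourhood of a, using zero padding."""
    padded = np.pad(a, r)
    out = np.zeros_like(a, dtype=float)
    h, w = a.shape
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            out += padded[dy:dy + h, dx:dx + w]
    return out


def harris_response(img, k=0.05, r=1):
    """Harris response det(M) - k * trace(M)^2 of the local structure tensor M."""
    iy, ix = np.gradient(img.astype(float))  # derivatives along rows, columns
    sxx = box_sum(ix * ix, r)
    syy = box_sum(iy * iy, r)
    sxy = box_sum(ix * iy, r)
    det = sxx * syy - sxy ** 2
    trace = sxx + syy
    return det - k * trace ** 2
```

On an image containing a bright square, the response is positive near the four corners, negative along straight edges, and zero in flat regions, which is why corners make stable candidates for matching across images.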

2.2. Generating TLS Point Clouds and Coloring

2.2.1. Laser Scanning Point Cloud Acquisition

2.2.2. Point Clouds Coloring

2.3. Registration

2.3.1. Coarse Registration

2.3.2. Fine Registration

- (1) Construct the least-squares objective function and set a convergence threshold. The objective function is the mean of the squared distances between corresponding point pairs, where the two points of each pair come from the two point sets being registered, and the unknowns are the rotation matrix and the translation vector.

- (2) Compute the centroid of each of the two point sets, and express the position of every point relative to the centroid of its own set.

- (3) Construct a matrix from the two centered point sets obtained in step (2).

- (4) Apply singular value decomposition to this matrix.

- (5) When the rank of the matrix is at least 2, the rotation matrix and translation vector can be obtained from the decomposition. When the rank is 3, there is a unique optimal rotation matrix and translation vector that minimize the objective function.

- (6) Finally, evaluate the objective function under the current rotation matrix and translation vector and compare it with the threshold. If the value is below the threshold, the iteration ends; otherwise, the procedure is repeated from step (2).
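Steps (1) to (6) can be sketched in Python with NumPy as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: correspondences are re-estimated in each iteration by brute-force nearest-neighbor search, a determinant check is added to guard against reflections, and the names `best_rigid_transform`, `icp`, `threshold`, and `max_iter` are hypothetical.

```python
import numpy as np


def best_rigid_transform(p, q):
    """Least-squares rotation r and translation t mapping paired points p onto q."""
    mu_p, mu_q = p.mean(axis=0), q.mean(axis=0)      # step (2): centroids
    h = (p - mu_p).T @ (q - mu_q)                    # step (3): 3 x 3 matrix
    u, _, vt = np.linalg.svd(h)                      # step (4): SVD
    # Assumption: flip the last axis if the raw solution would be a reflection.
    d = 1.0 if np.linalg.det(vt.T @ u.T) >= 0 else -1.0
    r = vt.T @ np.diag([1.0, 1.0, d]) @ u.T          # step (5): rotation
    t = mu_q - r @ mu_p                              # step (5): translation
    return r, t


def icp(source, target, threshold=1e-6, max_iter=50):
    """Iteratively align source (n x 3) to target (m x 3)."""
    src = source.copy()
    r_total, t_total = np.eye(3), np.zeros(3)
    error = np.inf
    for _ in range(max_iter):
        # Brute-force closest target point for every source point.
        d2 = ((src[:, None, :] - target[None, :, :]) ** 2).sum(axis=2)
        matched = target[d2.argmin(axis=1)]
        r, t = best_rigid_transform(src, matched)
        src = src @ r.T + t
        r_total, t_total = r @ r_total, r @ t_total + t
        # Step (6): mean squared distance between the current pairs.
        error = np.mean(np.sum((matched - src) ** 2, axis=1))
        if error < threshold:
            break
    return r_total, t_total, error
```

The brute-force search costs O(nm) per iteration, so for clouds of the size produced by TLS a spatial index such as a k-d tree would normally replace it. The sketch also assumes that coarse registration has already brought the two clouds close together.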

2.4. Color Space Selection

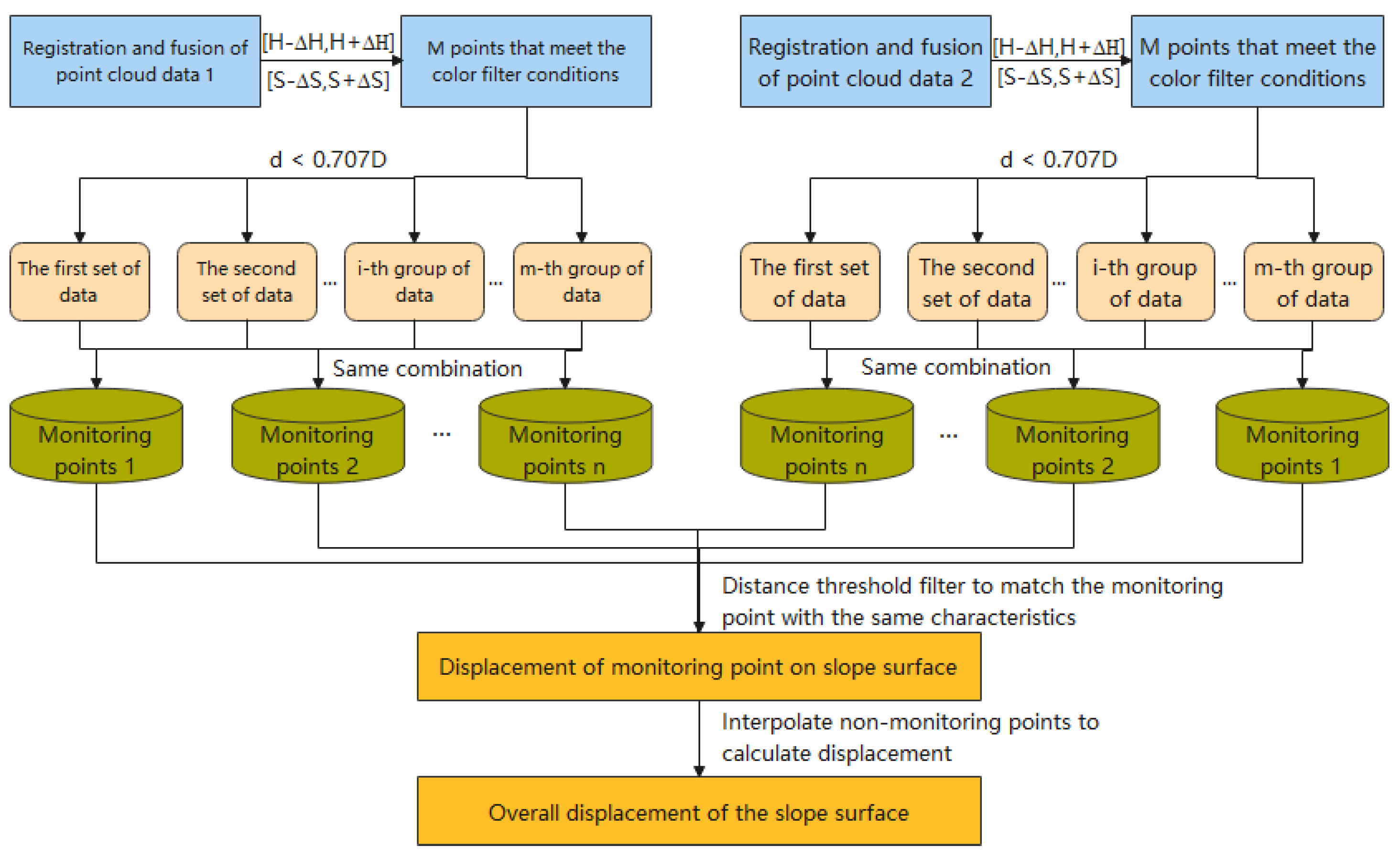

2.5. Displacement Calculation

- (1) First, the recognition ranges of the H and S parameters in HSL color space are determined from the collected images, and the point clouds are screened to extract all points whose H and S values fall within these ranges;

- (2) Let d denote the side length of the square monitoring point. The distance dij between the ith point (0 < i ≤ m) and the jth point (0 < j ≤ m, j ≠ i) among the m extracted points is calculated. If dij < 0.707d (that is, (√2/2)d, the distance from the center of the square monitoring point to each of its four corners), the jth point is assigned to the ith group of data;

- (3) If group i + 1 contains points that also appear in group i, the two groups are considered to represent the same monitoring point and are merged. Merging yields n groups of data, where n should equal the number of monitoring points deployed;

- (4) For each of the n groups determined in the previous step, the centroid of its points is calculated. The centroid is taken as the center of the painted area of the monitoring point and represents the position of the monitoring point;

- (5) Once the monitoring points have been identified, each monitoring point in the second-epoch registered point cloud is paired with the closest monitoring point in the first-epoch registered point cloud, and the pair is regarded as the same monitoring point. A distance threshold is set to avoid mismatched pairs. With the coordinates of the same monitoring point in both point clouds, the displacement can be calculated from the two sets of coordinates using the two-point displacement formula.
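The five steps above can be sketched with the Python standard library as follows. This is an illustrative sketch, not the authors' code: the function names, the point layout `(x, y, z, r, g, b)`, and the parameters `h_range`, `s_range`, and `max_dist` are assumptions, and a union-find structure is used here as one convenient way to perform the merging in steps (2) and (3).

```python
import colorsys
import math


def screen_by_color(points, h_range, s_range):
    """Step (1): keep xyz of points whose hue (degrees) and saturation are in range."""
    kept = []
    for x, y, z, r, g, b in points:
        h, _l, s = colorsys.rgb_to_hls(r / 255, g / 255, b / 255)
        if h_range[0] <= h * 360 <= h_range[1] and s_range[0] <= s <= s_range[1]:
            kept.append((x, y, z))
    return kept


def group_points(xyz, d):
    """Steps (2)-(3): points closer than 0.707 * d end up in the same group."""
    parent = list(range(len(xyz)))

    def find(i):
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path compression
            i = parent[i]
        return i

    for i in range(len(xyz)):
        for j in range(i + 1, len(xyz)):
            if math.dist(xyz[i], xyz[j]) < 0.707 * d:
                parent[find(j)] = find(i)
    groups = {}
    for i, p in enumerate(xyz):
        groups.setdefault(find(i), []).append(p)
    return list(groups.values())


def centroid(group):
    """Step (4): centroid of one group of points."""
    n = len(group)
    return tuple(sum(c) / n for c in zip(*group))


def displacements(first, second, max_dist):
    """Step (5): pair each first-epoch center with its nearest second-epoch center.

    Returns the distance for each pair, or None when the nearest candidate is
    farther than max_dist (treated as a mismatch).
    """
    result = []
    for c1 in first:
        c2 = min(second, key=lambda c: math.dist(c1, c))
        dist = math.dist(c1, c2)
        result.append(dist if dist <= max_dist else None)
    return result
```

Note that hue is circular, so a red marker whose hue straddles 0° would need two ranges (for example, one just below 360° and one just above 0°); the sketch handles a single range only.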

3. Results

3.1. Experimental Area

3.2. Accuracy and Flight Altitude Correlation

3.3. Comparison between Different Methods

3.4. On-Site Practical Result

4. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| TLS | Terrestrial laser scanning |
| UAV | Unmanned aerial vehicle |
| HSL | Hue, Saturation, Lightness |
| RTK | Real-time kinematic |
| ALS | Airborne laser scanning |
| MLS | Mobile laser scanning |
| GA | Genetic algorithm |
| PCA | Principal Component Analysis |
| ICP | Iterative Closest Point |
| PMVS | Patch-based Multi-view Stereo |
| VTK | Visualization Toolkit |


| Image No. | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Width × height (pixels) | 5472 × 3684 | 5472 × 3684 | 4608 × 3405 | 4608 × 3405 | 4608 × 3405 |
| SIFT processing time (s) | 4.313 | 4.582 | 2.750 | 2.752 | 2.576 |
| Number of feature points extracted by SIFT | 426,067 | 448,317 | 13,796 | 29,538 | 9216 |
| SURF processing time (s) | 3.915 | 4.833 | 1.734 | 1.910 | 1.517 |
| Number of feature points extracted by SURF | 139,640 | 127,072 | 4850 | 9156 | 3089 |

| Scanning Parameters / Precision Grade | Preview Scan | Accuracy Level 1 | Accuracy Level 2 | Accuracy Level 3 | Expanded Scan |
|---|---|---|---|---|---|
| Maximum range | 120 m | 120 m | 120 m | 120 m | 340 m |
| Scan time | 1 min | 2 min | 3 min | 10 min | 20 min |
| Point spacing at 10 m | 15.1 mm | - | - | - | - |
| Point spacing at 30 m | - | 22.6 mm | 11.3 mm | 5.7 mm | 7.5 mm |
| Point spacing at 300 m | - | - | - | - | 75.4 mm |
| Number of points generated | 8.7 × 10⁵ | 3.4 × 10⁶ | 1.38 × 10⁸ | 5.55 × 10⁸ | 3.12 × 10⁸ |

| Tool Classification | | Tool Name |
|---|---|---|
| Hardware device | UAV | DJI Matrice 600 Pro |
| Hardware device | TLS | Trimble TX8 |
| Hardware device | Digital camera | Canon 5D Mark III |
| Hardware device | Workstation | Dell PowerEdge R930 |
| Software | Flight control software | DJI Go 4 |
| Software | Flight control software | DJI GS Pro |
| Software | TLS data processing software | Trimble RealWorks 10.0.1 |
| Software | UAV point cloud generation software | Pix4D 4.4.12 |
| Development environment | System development platform | Visual Studio 2015 |
| Development environment | Programming language | Visual C++ |
| Development environment | Front-end interface framework | Qt 5.7.0 |
| Development environment | Image processing algorithm library | OpenCV, PCL |
| Development environment | Graphics processing and rendering visualization tools | Visualization Toolkit |

| Shooting Time | Monitoring Point Number | Color R | Color G | Color B | Color H | Color S | Color L |
|---|---|---|---|---|---|---|---|
| 10:30 a.m. | MP 1 | 232 | 131 | 145 | 352 | 0.44 | 0.91 |
| 10:30 a.m. | MP 2 | 237 | 138 | 152 | 352 | 0.42 | 0.93 |
| 10:30 a.m. | MP 3 | 237 | 130 | 152 | 348 | 0.45 | 0.93 |
| 10:30 a.m. | MP 4 | 235 | 134 | 151 | 350 | 0.43 | 0.92 |
| 10:30 a.m. | MP 5 | 240 | 132 | 144 | 353 | 0.45 | 0.94 |
| 3:53 p.m. | MP 1 | 199 | 101 | 118 | 350 | 0.49 | 0.78 |
| 3:53 p.m. | MP 2 | 209 | 109 | 125 | 350 | 0.48 | 0.82 |
| 3:53 p.m. | MP 3 | 192 | 111 | 119 | 354 | 0.42 | 0.75 |
| 3:53 p.m. | MP 4 | 191 | 113 | 126 | 350 | 0.41 | 0.75 |
| 3:53 p.m. | MP 5 | 201 | 113 | 126 | 351 | 0.44 | 0.79 |

| Index | UAV | TLS | UAV + TLS |
|---|---|---|---|
| Number | 10 | 7 | 10 |
| Horizontal error | 10 mm | - | - |
| Inclined error | 20–30 mm | 10 mm | - |
| RMSE | 1.2 mm | 0.48 mm | 0.73 mm |

| No. | | | | | | |
|---|---|---|---|---|---|---|
| 1 | 0.4 | 0.4 | 0.1 | 0.6 | 0.5 | 0.1 |
| 2 | 5.1 | 20.1 | 0.6 | 20.7 | 20.7 | 0.0 |
| 3 | 0.3 | 0.3 | 0.5 | 0.7 | 0.8 | 0.1 |
| 4 | 0.6 | 0.5 | 0.2 | 0.8 | 0.6 | 0.2 |
| 5 | 0.5 | 0.7 | 0.1 | 0.9 | 0.7 | 0.2 |
| 6 | 0.5 | 0.5 | 0.2 | 0.7 | 0.8 | 0.1 |
| 7 | 0.8 | 0.4 | 0 | 0.9 | 0.6 | 0.3 |
| 8 | 1.1 | 0.6 | 0.5 | 1.4 | 2.2 | 0.8 |
| 9 | 0.7 | 0.5 | 0.5 | 1 | 1.5 | 0.4 |
| 10 | 8 | 17.1 | 0.6 | 18.9 | 17.8 | 1.1 |
| 11 | 0.8 | 0.1 | 0.2 | 0.8 | 0.8 | 0.0 |
| 12 | 0.5 | 0.1 | 0.2 | 0.6 | 0.5 | 0.1 |
| 13 | 0.5 | 0.5 | 0.2 | 0.7 | 0.6 | 0.1 |
| 14 | 0.9 | 1.1 | 0.2 | 1.4 | 1.6 | 0.2 |
| 15 | 0.7 | 0.8 | 0.3 | 1.1 | 0.2 | 0.9 |
| 16 | 0.6 | 0.7 | 0.2 | 0.9 | 0.9 | 0.0 |
| 17 | 0.6 | 0.5 | 0.3 | 0.8 | 0.6 | 0.2 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Jia, H.; Zhu, G.; Guo, L.; He, J.; Liang, B.; He, S. An Improved Point Clouds Model for Displacement Assessment of Slope Surface by Combining TLS and UAV Photogrammetry. Appl. Sci. 2022, 12, 4320. https://doi.org/10.3390/app12094320
