Abstract

The rapid development and availability of drones has raised growing interest in their numerous applications, especially aerial remote-sensing tasks that use the Internet of Drones (IoD) for smart city applications. For remote sensing, drones capture large-scale, high-resolution aerial maps of the investigated area in the non-visible short-wavelength infrared (SWIR) band. However, in the high-altitude environment, a drone can easily jitter under dynamic weather conditions, which blurs the SWIR images. Furthermore, the images can easily be degraded by clouds and shadows, causing the construction of a remote-sensing map to fail. Most UAV remote-sensing studies use RGB cameras. In this study, we developed a platform for intelligent aerial remote sensing using SWIR cameras in an IoD environment. First, we developed a prototype aerial SWIR image remote-sensing system. Then, to address the low-quality aerial image issue and reroute the trajectory, we proposed an effective lightweight multitask deep learning-based flying model (LMFM). The experimental results demonstrate that the proposed intelligent drone-based remote-sensing system, running the LMFM on the onboard computer, efficiently stabilizes the drone and successfully builds a high-quality aerial remote-sensing map. Furthermore, the LMFM is computationally efficient, offering near state-of-the-art accuracy at up to 6.97 FPS, which makes it suitable for low-cost, low-power devices.

1. Introduction

Satellite remote sensing is an effective way of monitoring vast areas of interest. However, drones have recently evolved rapidly for smart city applications [1] and have become an alternative to satellite remote sensing [2,3]. Cameras mounted on a drone can take high-resolution images of the ground from a high-altitude perspective and capture them in sequence. The images are sent to the cloud through Internet of Drones (IoD) technology [4] and assembled into an aerial map. Drones are used for many popular tasks, especially surveillance applications such as air quality sensing [5], environmental monitoring [6], traffic surveillance [7], and agricultural monitoring [8]. Compared with traditional manned aerial systems in dull surveillance tasks, drones perform remote-sensing tasks efficiently and accurately.

Drones can continuously and reliably perform environmental monitoring tasks for remote sensing. Their mounted cameras provide high-resolution images of the ground in a target area. However, analyzing each frame individually is costly and requires considerable computing time. Merging multiple images into a large-scale map is therefore more efficient for drone remote-sensing applications. Stitching multiple overlapping aerial images yields a large-scale panorama called an aerial map. A large-scale aerial map makes post-processing easier and more comprehensive for describing objects or events, possibly in a shorter time [9].

The review in [10] suggests that performing remote-sensing tasks using multi- or hyperspectral data and multitask learning is a novel approach. Images acquired only from an RGB camera therefore do not satisfy the requirements of some drone remote-sensing applications, such as agricultural applications [11]. Recently, there has been increasing demand for capturing non-visible-band images to improve the efficiency of drone remote-sensing applications. One spectral domain of interest is the short-wavelength infrared (SWIR) region between 1.1 μm and 1.7 μm [12]. An SWIR camera produces clear images in the SWIR spectrum and can see some objects obscured by fog, rain, and mist. It is therefore more robust to fog, smoke, low light, and nighttime conditions [13]. Hence, it is useful for drone remote sensing, because the SWIR spectrum reveals many features that cannot be obtained in the visible spectrum.

1.1. Motivation

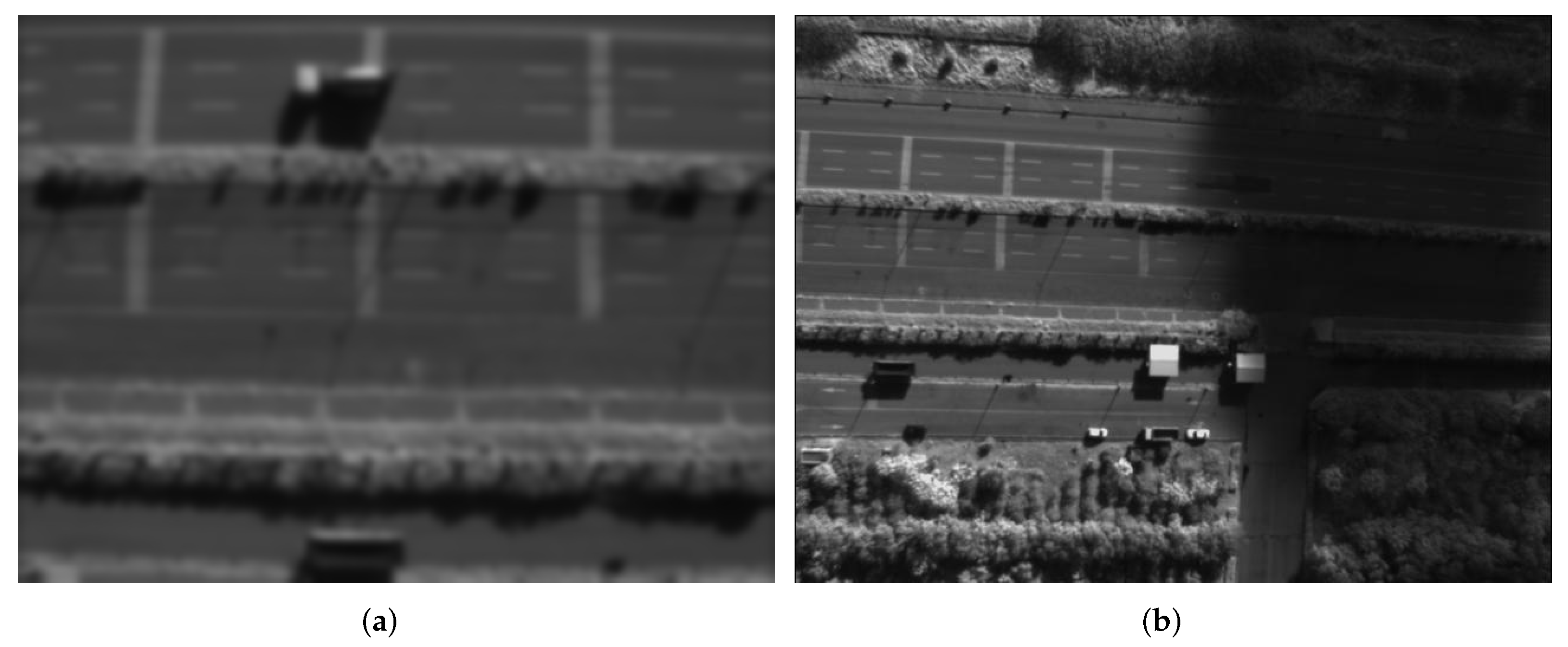

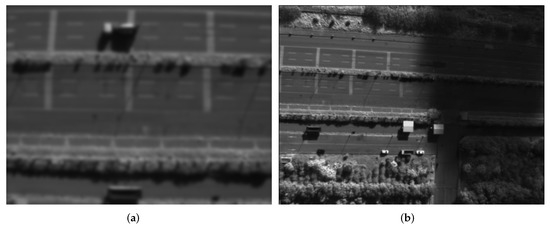

In this study, we use a drone-based aerial remote-sensing system to capture aerial SWIR images and then build an aerial SWIR map for a remote-sensing task. However, the drone is easily affected by the high-altitude flying environment and may therefore capture unclear SWIR images (Figure 1). Drone remote-sensing tasks are generally conducted in high-altitude environments under strong breeze conditions. The drone's jitter blurs the SWIR images, and clouds can cast shadows on them, resulting in an unclear aerial SWIR image map. In that case, the remote-sensing task has failed and must be performed again. To overcome this challenge, this study proposes a lightweight multitask deep learning-based flying model (LMFM) to achieve effective aerial SWIR map construction for remote sensing. The LMFM controls the drone's flying action according to the captured SWIR images.

Figure 1.

Flawed SWIR images captured during aerial remote sensing force the task to be re-executed. (a) Blurred SWIR image. (b) Shadow caused by clouds.

1.2. Contribution

In this study, we use a deep learning model to automate the flight of drones subject to external disturbances while capturing high-quality SWIR images. The main contributions of this study are as follows:

- (1) We develop a dedicated platform for intelligent aerial remote sensing in an IoD environment that uses SWIR cameras instead of the RGB cameras common in most UAV remote-sensing studies;

- (2) We propose a novel end-to-end LMFM for remote-sensing tasks, with multimodal data input and multitask output, to stabilize a drone under dynamic weather conditions;

- (3) The proposed LMFM is computationally efficient and provides near state-of-the-art accuracy at up to 6.97 FPS, making it suitable for low-cost, low-power devices.

The remainder of this article is organized as follows. Section 2 discusses related work on drone-based remote-sensing technologies. Section 3 explains the system framework and defines the problem. Section 4 describes intelligent aerial remote sensing. Section 5 reports the experimental results of the proposed framework. Finally, Section 6 presents the conclusions.

2. Related Work

Satellite remote sensing is an effective way to monitor vast areas of interest. Unfortunately, it is limited by the satellite's revisit cycle and the high cost of data acquisition [14]. Mohanty et al. [15] explored five approaches based on improvements of the U-Net and Mask R-CNN models to detect the positions of buildings in satellite images; the work focused on the object detection problem in image recognition. Drones, however, have become a novel alternative to satellite remote sensing, and researchers have recently paid more attention to aerial maps for drone remote-sensing applications [16]. For example, Li et al. [17] designed a unified framework that includes a scale-adaptive strategy as well as circular flow anchor selection and feature extraction to simultaneously detect and count vehicles in drone images. Because targets in drone-based scenes are small and dense, this method is designed to extract features of and detect small objects. Huang et al. [18] developed a scene detection and estimation method that applies unsupervised classification after the flight mission is completed. Drone navigation was based on GPS information, and target recognition used histogram of oriented gradients (HOG) features with a linear support vector machine (SVM). However, both image capture and processing were executed at the ground station. Rohan et al. [19] proposed real-time object detection and tracking using a CNN with a single-shot detector architecture implemented on a Parrot AR.Drone 2.0. A major challenge for real-time implementation is reducing the computational time; they divided the computational load between the CPU and GPU, achieving a computational time of 10 ms. Bah et al. [20] applied a CNN-based Hough transform to automatically detect crop rows in images taken from a drone. For the autonomous guidance of drones in agricultural monitoring tasks, accurate crop-row detection is required to improve environmental quality.

Currently, drones perform numerous tasks using images and aerial panoramas captured in various working environments. Their high mobility and low cost make them suitable for regular monitoring of large target regions [21]. By constructing aerial panoramas, drones make monitoring the environment in a target area more efficient. Aerial map construction for drone remote-sensing applications thus provides useful information and can improve the effectiveness and efficiency of deep learning models [9]. As a result, aerial remote-sensing research continues to draw attention. Fang et al. [22] proposed a panorama stitching method based on color blending of the images captured by the drone; this method exploits the similarity between neighboring pixels in a UAV image to reduce the computational time in post-processing. Bang et al. [23] presented an automatic panorama construction algorithm using blur filtering, key frame selection, and camera correction, and produced high-quality panoramas of a real subway construction site in Korea.

Capturing images with an RGB camera is the general approach for drones. Nowadays, however, multispectral imaging has become an attractive sensing modality, especially SWIR imaging. Belyaev et al. [24] developed a scheme that iteratively builds an aerial map simultaneously at the encoder and the decoder from infrared video captured during drone flight missions. The advantage of this scheme is that it reconstructs the video frames with the decoder, which avoids storing the aerial map and thereby lowers the bit rate. Honkavaara et al. [25] studied the accuracy of near-infrared and SWIR hyperspectral frame cameras for moisture estimation and indicated that drones could significantly improve efficiency and safety in various remote-sensing applications.

Drones can improve the safety, utility, and efficiency of environmental monitoring. Drone-based remote sensing generally collects many images and then analyzes them for the target application. However, using image sequences acquired by drones can be more difficult than using still images, especially SWIR images. This paper therefore contributes to aerial SWIR map construction using lightweight, end-to-end, deep learning-based drone flight control. With the resulting aerial SWIR image map, targets in a monitoring area can be detected and analyzed efficiently.

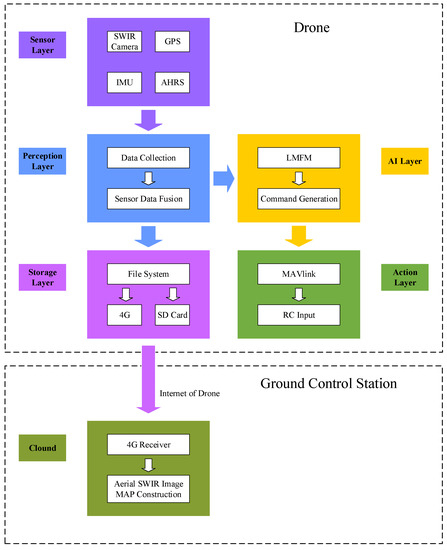

3. Remote-Sensing System Framework and Problem Definition

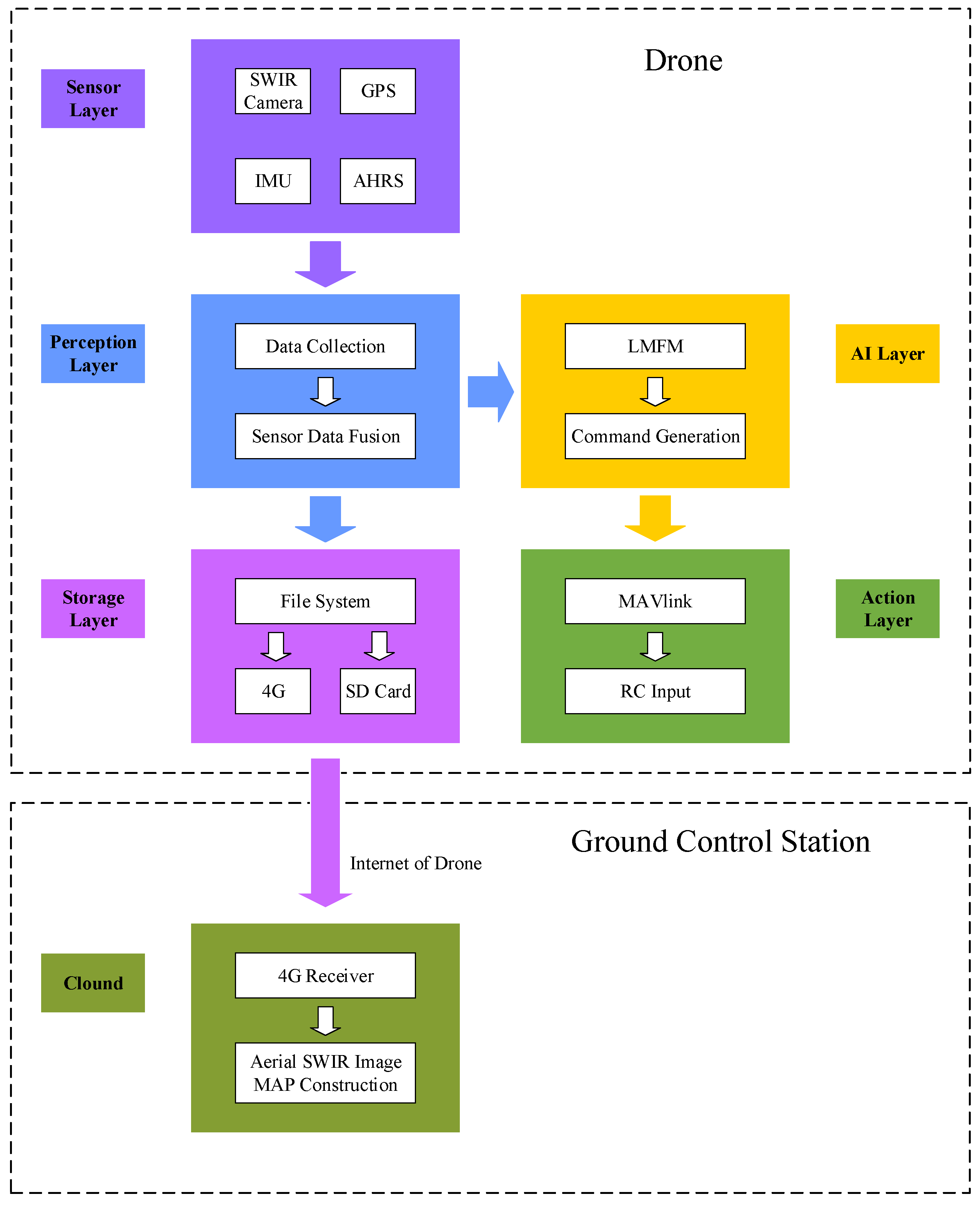

The proposed intelligent aerial remote-sensing system for constructing the aerial SWIR image map is composed of five layers for flight control and data acquisition on an onboard computer, as shown in Figure 2. The sensor layer includes all physical sensors on the drone: an SWIR camera (Raptor OWL 640), GPS, an inertial measurement unit (IMU), and an attitude and heading reference system (AHRS). All sensors are connected to the onboard computer, an Nvidia Jetson NANO, which reads their data and passes it to the perception layer. The perception layer captures the sensor data and synchronizes data arriving at different frequencies. The proposed LMFM runs in the AI layer and generates drone control commands that are sent to the action layer. Finally, the storage layer records all data and sends them to the ground control station (GCS). This section describes the flight system settings and data acquisition in the sensor and perception layers.
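The perception layer is described as synchronizing sensors that run at different rates, but the method is not specified. As a minimal sketch, assuming every SWIR frame and every sensor sample (for example, a 5-Hz GPS fix) carries a timestamp from a common clock, each frame can be paired with the sensor sample nearest in time. The function name `match_nearest` is hypothetical and not part of the described system.

```python
import bisect
from typing import List, Sequence


def match_nearest(frame_times: Sequence[float],
                  sensor_times: Sequence[float]) -> List[int]:
    """For each frame timestamp, return the index of the closest sensor sample.

    Both sequences must be sorted in ascending order (seconds, common clock).
    """
    if not sensor_times:
        raise ValueError("sensor_times must not be empty")
    matches: List[int] = []
    for t in frame_times:
        # Position where t would be inserted to keep sensor_times sorted.
        i = bisect.bisect_left(sensor_times, t)
        if i == 0:
            matches.append(0)
        elif i == len(sensor_times):
            matches.append(len(sensor_times) - 1)
        else:
            # Pick the closer neighbor; ties go to the earlier sample.
            before, after = sensor_times[i - 1], sensor_times[i]
            matches.append(i - 1 if t - before <= after - t else i)
    return matches
```

For example, frames at 0.05 s and 0.33 s would be paired with GPS fixes at 0.0 s and 0.4 s when fixes arrive every 0.2 s. Nearest-sample matching is the simplest choice; interpolating between the two neighboring fixes would be a natural refinement when the drone moves quickly.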

Figure 2.

The structure of the whole system has five layers in the onboard computer. The aerial map construction process was performed in the ground control station.

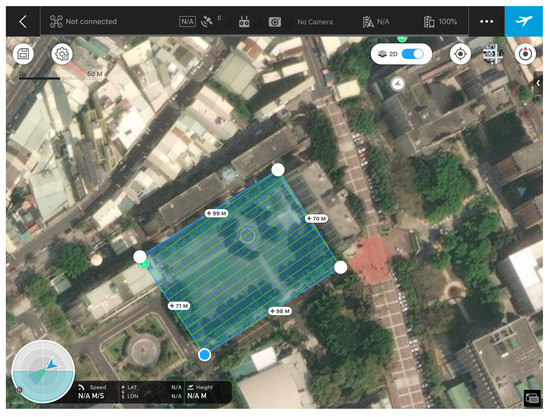

3.1. The Predefined Flight Path Planning

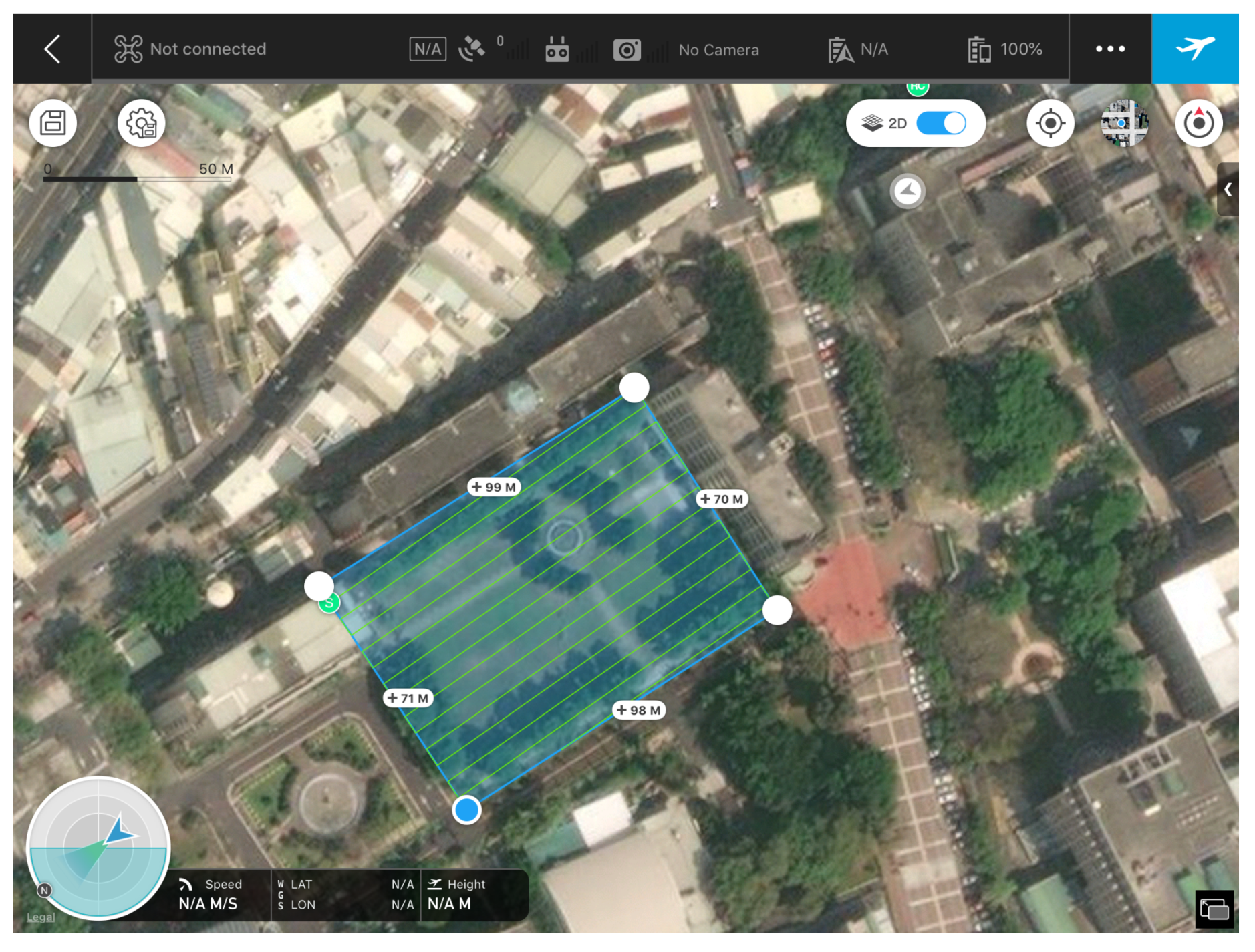

Drones are suitable for remote-sensing tasks such as air quality and pollution monitoring. Most drone remote-sensing applications require the drone to fly over a wide area and sense environmental information. The drone therefore needs a predefined path to follow, and it plans a local trajectory using the LMFM to fine-tune its motion. We used mission-planning software to plan the flight path on the GCS, as shown in Figure 3. The drone was powered by one battery, and its standard flight time was 27 min. The onboard computer and all sensors in the sensor layer were powered by a separate 4S 1000-mAh battery. Both the Zenmuse X4S (RGB camera) and the Raptor OWL 640 SWIR camera were mounted on the drone. The drone was then deployed to capture only SWIR images along the predefined flight path.
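The predefined path in Figure 3 was planned with mission-planning software, and its pattern is not described. Survey missions commonly use a back-and-forth ("lawnmower") pattern whose line spacing follows from the image footprint and the desired side overlap. The sketch below assumes a nadir-pointing camera, flat terrain, and a rectangular area; the field of view and overlap values are hypothetical, since the lens of the Raptor OWL 640 is not specified here.

```python
import math
from typing import List, Tuple


def footprint_width(altitude_m: float, fov_deg: float) -> float:
    """Ground width imaged by a nadir-pointing camera at the given altitude."""
    return 2.0 * altitude_m * math.tan(math.radians(fov_deg) / 2.0)


def lawnmower_path(width_m: float, length_m: float, altitude_m: float,
                   fov_deg: float, side_overlap: float) -> List[Tuple[float, float]]:
    """Back-and-forth waypoints (x, y), in meters, covering a width_m x length_m
    rectangle with one corner at the origin. Flight lines run along the y axis."""
    if not 0.0 <= side_overlap < 1.0:
        raise ValueError("side_overlap must be in [0, 1)")
    spacing = footprint_width(altitude_m, fov_deg) * (1.0 - side_overlap)
    # Small tolerance so floating-point noise does not add an extra line.
    n_lines = max(1, math.ceil(width_m / spacing - 1e-9) + 1)
    waypoints: List[Tuple[float, float]] = []
    for k in range(n_lines):
        x = min(k * spacing, width_m)
        # Alternate the direction of travel on every pass.
        ys = (0.0, length_m) if k % 2 == 0 else (length_m, 0.0)
        waypoints.extend((x, y) for y in ys)
    return waypoints
```

For example, at 100 m with a hypothetical 90° field of view, each image spans about 200 m on the ground, so 50% side overlap places flight lines about 100 m apart.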

Figure 3.

The predefined flying path for a drone remote-sensing task.

3.2. The SWIR Camera and Frame Grabber

Traditional RGB cameras, such as the Zenmuse X4S, cannot see features behind fog or smoke, which makes them more environmentally limited than SWIR cameras. SWIR cameras can see through fog or smoke [26] and capture clear images from longer distances. Additionally, the SWIR spectrum shows several features that cannot be obtained in the visible spectrum. We therefore used an SWIR camera with a 0.4∼0.7-μm sensor spectral range and a 1.1∼2.3-μm lens filter to capture SWIR images in the 1.1-μm to 1.7-μm band. These images provide useful complementary information in situations where visible imaging cameras are ineffective [27].

Because the SWIR camera (Raptor OWL 640) uses the Camera Link standard for data communication, we could not capture its images through conventional interfaces such as Ethernet or USB. Camera Link is a serial communication protocol designed for camera interface applications in industrial video products. Images are therefore captured with a frame grabber (iPORT CL-U3 external frame grabber), which connects to the SWIR camera with a Camera Link cable and to the onboard computer through a USB port. In this study, we used a USB 2.0 cable between the frame grabber and the onboard computer, because USB 3.0 devices generate radio interference at 2.4 GHz that can disrupt GPS devices [28]. Alternatively, shielding can be used to isolate the USB 3.0 interference, or USB 2.0 cables can be used throughout the system.

3.3. The GPS, IMU, and AHRS Model

The location information in the aerial SWIR map construction system is obtained from a 5-Hz GPS, the IMU, and the AHRS. The GPS provides location information such as the current time, latitude, longitude, and height, whereas the AHRS provides the current flight state, such as the roll, pitch, and heading of the drone, for navigation. The IMU measures the body's specific force, angular rate, and orientation. The onboard computer reads the GPS, IMU, and AHRS data through a universal asynchronous receiver–transmitter (UART) interface and writes them into the exchangeable image file format (EXIF) metadata of each aerial image.
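In EXIF, GPS latitude and longitude are stored as three unsigned rationals (degrees, minutes, seconds) plus a hemisphere reference such as `N`/`S` or `E`/`W`. A minimal sketch of converting a decimal-degree fix into that form follows; `to_exif_dms` is a hypothetical helper, and writing the resulting tags into the image file is left to whichever EXIF library is used.

```python
from typing import Tuple

Rational = Tuple[int, int]  # (numerator, denominator)


def to_exif_dms(value_deg: float, positive_ref: str, negative_ref: str,
                seconds_denominator: int = 10000
                ) -> Tuple[Tuple[Rational, Rational, Rational], str]:
    """Convert signed decimal degrees into EXIF-style (deg, min, sec) rationals
    and a hemisphere reference letter."""
    ref = positive_ref if value_deg >= 0 else negative_ref
    # Work in integer units of 1/seconds_denominator arc-seconds so that
    # rounding can never produce a seconds field equal to 60.
    units_per_degree = 3600 * seconds_denominator
    total_units = round(abs(value_deg) * units_per_degree)
    degrees, rem = divmod(total_units, units_per_degree)
    minutes, seconds = divmod(rem, 60 * seconds_denominator)
    return ((degrees, 1), (minutes, 1), (seconds, seconds_denominator)), ref
```

Latitude would use `to_exif_dms(lat, "N", "S")` and longitude `to_exif_dms(lon, "E", "W")`.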

3.4. The Onboard Computer: Nvidia Jetson NANO

An Nvidia Jetson NANO was used as the onboard computer for computation and image processing. Because SWIR images are high-resolution, processing them requires a small computing platform with powerful graphics computing capacity and low energy consumption. The NANO module was mounted on a carrier board; together they provide a quad-core ARM A57 @ 1.43 GHz CPU, a 128-core Maxwell GPU (472 GFLOPS), 4 GB of RAM, four USB 3.0 interfaces, and a USB 2.0 Micro-B interface. Its power consumption during operation was around 5 W. Its small size, low power consumption, and light weight make it suitable for drone applications. However, we connected USB 2.0 cables to the four USB 3.0 interfaces to avoid any adverse effect on the GPS. The operating system was Ubuntu 18.04, and flight motion commands were sent to the drone using the DroneKit library via a serial connection.
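The text does not show how the predicted velocity and yaw become a DroneKit command. One plausible route, assumed here, is to split the speed along the predicted heading into north and east components and send them as a velocity setpoint; DroneKit's documentation does this with a MAVLink `SET_POSITION_TARGET_LOCAL_NED` message built by `vehicle.message_factory.set_position_target_local_ned_encode(...)` and sent with `vehicle.send_mavlink(...)`. The sketch below covers only the geometry, so it runs without a vehicle; the heading convention (0° = north, 90° = east) is an assumption.

```python
import math
from typing import Tuple


def velocity_yaw_to_ned(speed_mps: float, yaw_deg: float) -> Tuple[float, float, float]:
    """Split a horizontal speed along a heading into NED velocity components.

    Heading convention (assumed): 0 deg = north, 90 deg = east.
    The down component is zero because the altitude is held fixed.
    """
    yaw = math.radians(yaw_deg)
    v_north = speed_mps * math.cos(yaw)
    v_east = speed_mps * math.sin(yaw)
    return v_north, v_east, 0.0
```

Keeping the down component at zero matches the fixed-altitude assumption stated in Section 4.1.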

3.5. Problem Definition

Drone-based remote sensing is an alternative to satellite remote sensing. Drones capture images in sequence, which are then merged into a large-scale map at the ground control station. However, the drone is easily affected by the high-altitude flying environment and may capture unclear SWIR images. Merging several unclear SWIR images would result in a blurred large-scale map, so the task would have to be performed again. This study addresses sequences of unclear SWIR images in a drone-based remote-sensing system through automatic drone flight control. When a blurred SWIR image or a shadowed area in an SWIR image is detected, the drone must replan a new trajectory or maintain its altitude. We propose an LMFM that uses a lightweight end-to-end deep learning architecture with multimodal data input and multitask output, which solves the multitask problem and can be implemented on low-cost, low-power devices. The LMFM is presented in detail in the following section.

4. Intelligent Aerial Remote Sensing

Drone remote sensing requires capturing many clear SWIR images for aerial map construction. The proposed lightweight multitask deep learning model therefore controls the drone's flying action during flight so that each aerial SWIR image is clear and unaffected by wind. After the flight mission is completed, the aerial SWIR image map is constructed at the GCS using image stitching.

4.1. The Lightweight Multitask Deep Learning Model in a Drone in Edge Computing

Our learning approach aims to reactively predict drone motion from the captured SWIR images. Because of drone jitter and weather conditions, a captured SWIR image may become blurred. Blur reduces sharpness at edges and makes details harder to see. Therefore, to improve image quality, it is imperative to control the drone's action during the acquisition process.
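The LMFM is described as end-to-end, so no explicit blur detector is part of the model. For an independent check of frame sharpness (for example, to flag frames or to label data), a common classical heuristic is the variance of the Laplacian: sharp images have strong edge responses and hence a high variance. This is a swapped-in technique, not the authors' method; the sketch uses plain nested lists, and the threshold is scene-dependent and would have to be tuned.

```python
from statistics import pvariance
from typing import List, Sequence

Image = Sequence[Sequence[float]]


def laplacian_variance(image: Image) -> float:
    """Variance of the 4-neighbor Laplacian response over the image interior.
    Low values mean weak edges, which often indicates a blurred frame."""
    height, width = len(image), len(image[0])
    if height < 3 or width < 3:
        raise ValueError("image must be at least 3 x 3 pixels")
    responses: List[float] = []
    for r in range(1, height - 1):
        for c in range(1, width - 1):
            lap = (image[r - 1][c] + image[r + 1][c]
                   + image[r][c - 1] + image[r][c + 1] - 4 * image[r][c])
            responses.append(lap)
    return pvariance(responses)


def is_blurred(image: Image, threshold: float) -> bool:
    """Flag a frame as blurred when its Laplacian variance is below threshold."""
    return laplacian_variance(image) < threshold
```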

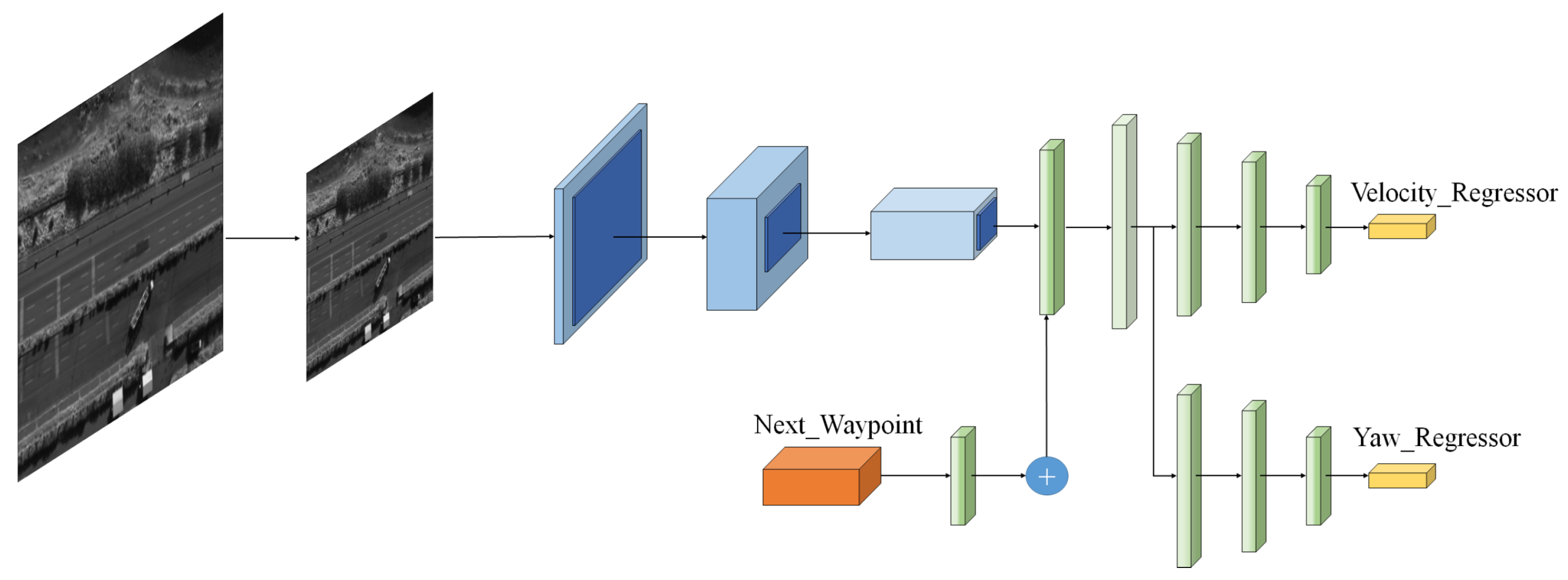

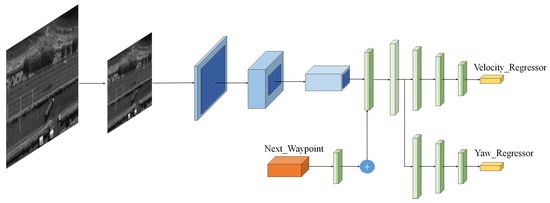

In this study, we propose a lightweight multitask deep learning-based flying model (LMFM) in the AI layer to deal with this issue, as shown in Algorithm 1. Because we aimed to reduce the AI model's processing time and implement it on a real drone, our approach reactively predicts drone motion from the captured SWIR image using a lightweight double CNN with a small model size and few parameters, as shown in Figure 4. The predicted motion, consisting of two regression values, is then converted into a flying control command (the rotation speeds of the four rotors), which serves as the remote-control input.

Figure 4.

The lightweight multitask deep learning model to achieve real-time computational capacity in a drone.

The image is preprocessed, resized, and then input into the deep learning model. First, an image captured by the SWIR camera is resized to 224 × 224. The normalized image is input to a convolutional layer (222 × 222 × 8) followed by a max-pooling layer (111 × 111 × 8), a second convolutional layer (109 × 109 × 16) followed by a second max-pooling layer (54 × 54 × 16), and a third convolutional layer (52 × 52 × 32) followed by a third max-pooling layer (26 × 26 × 32). The output is flattened and concatenated with the “Next_Waypoint” feature, which consists of all remaining waypoints of the predefined path. The concatenated feature is then shared and fed into two subnetworks that predict the velocity and yaw.
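The layer sizes listed above are consistent with 3 × 3 convolution kernels at stride 1 without padding, each followed by 2 × 2 max pooling with floor rounding (for example, 109 // 2 = 54). These hyperparameters are inferred from the shapes rather than stated, and the single input channel is an assumption; the sketch reproduces the shapes so the arithmetic can be checked.

```python
from typing import List, Tuple

Shape = Tuple[int, int, int]  # (height, width, channels)


def conv_valid(shape: Shape, filters: int, kernel: int = 3) -> Shape:
    """Output shape of a stride-1 convolution with no padding."""
    h, w, _ = shape
    return h - kernel + 1, w - kernel + 1, filters


def max_pool(shape: Shape, size: int = 2) -> Shape:
    """Output shape of non-overlapping max pooling (floor division)."""
    h, w, c = shape
    return h // size, w // size, c


def lmfm_backbone_shapes(input_shape: Shape = (224, 224, 1)) -> List[Shape]:
    """Shapes after each conv/pool stage of the assumed 8-16-32 backbone."""
    shapes = [input_shape]
    for filters in (8, 16, 32):
        shapes.append(conv_valid(shapes[-1], filters))
        shapes.append(max_pool(shapes[-1]))
    return shapes
```

The flattened image feature therefore has 26 × 26 × 32 = 21,632 elements before concatenation with “Next_Waypoint”.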

Each subnetwork includes a dense layer with 256 units, whose output is sent to another dense layer with 32 units. Finally, the architecture separates into two branches, the linear regressors “Velocity_Regressor” and “Yaw_Regressor”, which output the velocity v and the steering (yaw) angle, respectively.
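A rough parameter count helps to judge how lightweight the model is. The sketch assumes 3 × 3 kernels, one input channel, a fixed-length “Next_Waypoint” vector of size `waypoint_dim` (its encoding is not given), and one linear output per regressor. Because the description can be read either as two separate 256/32 heads or as one shared trunk followed by two regressors, both readings are supported through the hypothetical `separate_heads` flag.

```python
def conv_params(in_ch: int, out_ch: int, kernel: int = 3) -> int:
    """Weights plus biases of a 2-D convolution layer."""
    return kernel * kernel * in_ch * out_ch + out_ch


def dense_params(n_in: int, n_out: int) -> int:
    """Weights plus biases of a fully connected layer."""
    return n_in * n_out + n_out


def lmfm_param_count(waypoint_dim: int, in_ch: int = 1,
                     separate_heads: bool = True) -> int:
    backbone = conv_params(in_ch, 8) + conv_params(8, 16) + conv_params(16, 32)
    fused = 26 * 26 * 32 + waypoint_dim  # flattened image + waypoint feature
    trunk = dense_params(fused, 256) + dense_params(256, 32)
    regressor = dense_params(32, 1)      # one linear output
    if separate_heads:
        # Reading 1: each output has its own 256 -> 32 -> 1 subnetwork.
        return backbone + 2 * (trunk + regressor)
    # Reading 2: one shared 256 -> 32 trunk, then two 1-unit regressors.
    return backbone + trunk + 2 * regressor
```

With a hypothetical `waypoint_dim` of 2, the count is about 5.55 million parameters for the shared reading and about 11.1 million for separate heads. In both cases the first dense layer, fed by the 21,632-element flattened feature, accounts for almost all of them.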

Algorithm 1. Flying control via LMFM

Input: SWIR_image, next_waypoint, LMFM model

Output:
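Only the inputs of Algorithm 1 survive in the text. The loop it describes (capture an SWIR frame, predict velocity and yaw from the frame and the remaining waypoints, send the command) can be sketched as follows. Every callable here (`capture`, `predict`, `send_command`, `reached`) is a hypothetical stand-in for the camera, the LMFM, the flight-controller link, and the waypoint check, and the step limit is an added safety guard.

```python
from typing import Callable, Iterable, List, Tuple

Waypoint = Tuple[float, float]
Command = Tuple[float, float]  # (velocity, yaw)


def fly_with_lmfm(waypoints: Iterable[Waypoint],
                  capture: Callable[[], object],
                  predict: Callable[[object, List[Waypoint]], Command],
                  send_command: Callable[[float, float], None],
                  reached: Callable[[Waypoint], bool],
                  max_steps: int = 10_000) -> List[Command]:
    """Run the capture -> predict -> command loop until all waypoints are
    reached (or max_steps iterations have run); return the issued commands."""
    remaining = list(waypoints)
    issued: List[Command] = []
    for _ in range(max_steps):
        if not remaining:
            break
        frame = capture()                          # latest SWIR frame
        velocity, yaw = predict(frame, remaining)  # LMFM inference
        send_command(velocity, yaw)                # e.g. via MAVLink
        issued.append((velocity, yaw))
        # Drop every leading waypoint that the vehicle has now reached.
        while remaining and reached(remaining[0]):
            remaining.pop(0)
    return issued
```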

A drone's mathematical model is a multiple-input, multiple-output system with six degrees of freedom. Its state consists of the position (x, y, z) and the Euler angles (φ, θ, ψ), which are the roll, pitch, and yaw, respectively. We assumed that the drone flew at a fixed altitude while performing the remote-sensing tasks. Consequently, we approximated the control system as follows:

Thus, the dynamics of the drone are defined as follows:

The motion of a drone can be fully controlled by changing the rotation speeds of its four propellers. According to [29], the propeller rotation speeds can be related to the control vector as follows:

where b and d denote the thrust factor and drag factor coefficients, respectively. Consequently, the required propeller rotation can be converted into a command and sent to the Pixhawk flight controller via the MAVLink communication protocol, allowing the drone to fly with the predicted velocity and yaw angle.
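The equation relating propeller speeds to the control vector did not survive extraction. For reference, a widely used model for a plus-configuration quadrotor writes the control inputs in terms of the squared rotor speeds Ω_i² with thrust factor b and drag factor d: U1 = b(Ω1² + Ω2² + Ω3² + Ω4²), U2 = b(Ω4² - Ω2²), U3 = b(Ω3² - Ω1²), and U4 = d(Ω2² + Ω4² - Ω1² - Ω3²). Whether [29] uses exactly this rotor numbering and sign convention is an assumption. The sketch implements this mapping and its inverse, which is the step that turns a desired control vector into rotor-speed commands.

```python
from typing import Sequence, Tuple

Quad = Tuple[float, float, float, float]


def mix(omega_sq: Sequence[float], b: float, d: float) -> Quad:
    """Squared rotor speeds -> control vector (U1 thrust, U2 roll, U3 pitch, U4 yaw)."""
    s1, s2, s3, s4 = omega_sq
    u1 = b * (s1 + s2 + s3 + s4)
    u2 = b * (s4 - s2)
    u3 = b * (s3 - s1)
    u4 = d * (s2 + s4 - s1 - s3)
    return u1, u2, u3, u4


def unmix(u: Sequence[float], b: float, d: float) -> Quad:
    """Control vector -> squared rotor speeds (inverse of mix)."""
    u1, u2, u3, u4 = u
    pair_24 = (u1 / b + u4 / d) / 2.0  # s2 + s4
    pair_13 = (u1 / b - u4 / d) / 2.0  # s1 + s3
    s1 = (pair_13 - u3 / b) / 2.0
    s2 = (pair_24 - u2 / b) / 2.0
    s3 = (pair_13 + u3 / b) / 2.0
    s4 = (pair_24 + u2 / b) / 2.0
    if min(s1, s2, s3, s4) < 0:
        raise ValueError("control vector requires a negative squared rotor speed")
    return s1, s2, s3, s4
```

Flight stacks running on a Pixhawk typically perform this mixing internally; the sketch is only meant to make the relation between b, d, and the rotor speeds concrete.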

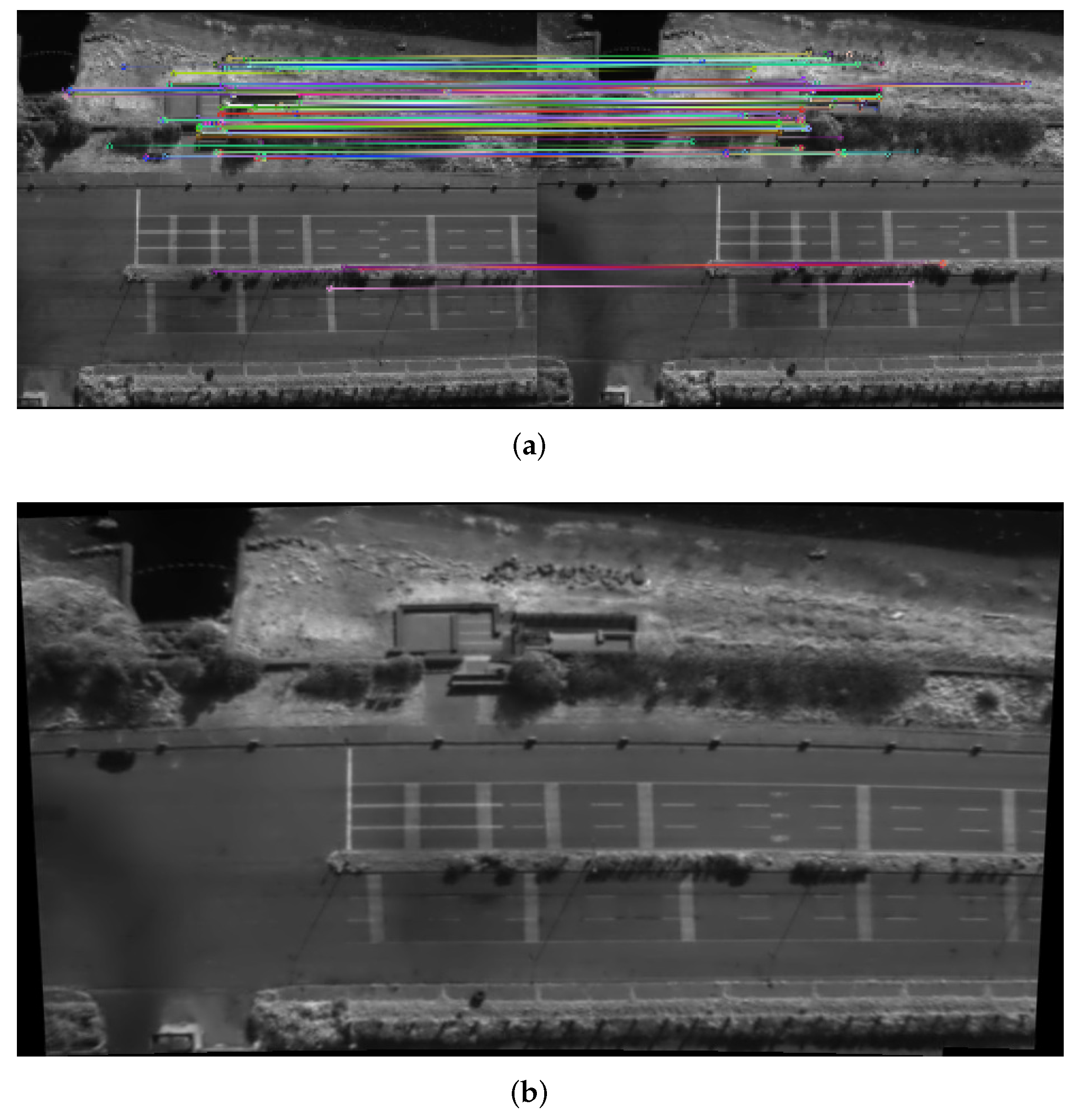

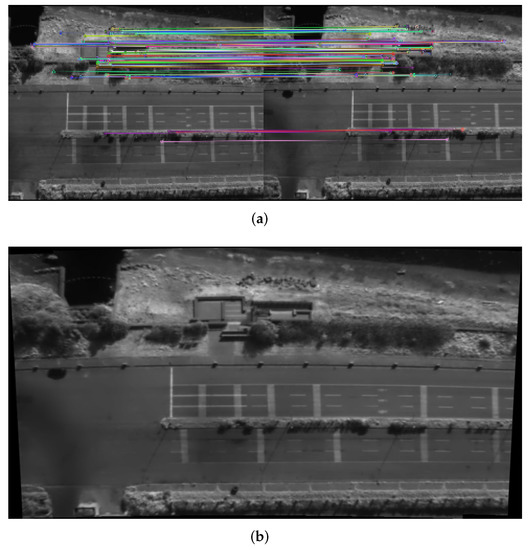

4.2. Aerial Remote Sensing Map Construction on GCS in Cloud Computing

An aerial SWIR image map makes drone remote-sensing applications faster and more comprehensive, provides useful processed information, and gives a good perception of the environment. An aerial remote-sensing map is formed by seamlessly joining overlapping aerial images into a large-scale image. When the drone flies along the mission path in the target region, a sequence of SWIR images is captured. The aerial SWIR image map construction model merges neighboring SWIR images to form a wide-view, high-resolution aerial panoramic map covering a large area. Figure 5 shows two aerial SWIR images with overlapping regions: the key features selected in these images are shown in Figure 5a, and the resulting stitched image is shown in Figure 5b.
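Figure 5 describes feature-based stitching, in which key features are selected, matched, and used to merge images. A full keypoint pipeline does not fit in a short example, so the sketch below swaps in a much simpler technique under a strong assumption: two equal-height grayscale images related by a pure horizontal shift, as for consecutive nadir frames along a straight flight line. It searches for the overlap width with the lowest mean squared difference and averages the pixels in the overlap. The function names are hypothetical.

```python
from typing import List, Sequence

Image = Sequence[Sequence[float]]


def best_overlap(left: Image, right: Image, min_overlap: int = 1) -> int:
    """Overlap width (in columns) whose strips agree best (lowest MSE)."""
    height = len(left)
    max_overlap = min(len(left[0]), len(right[0]))
    if not 1 <= min_overlap <= max_overlap:
        raise ValueError("min_overlap must be between 1 and the image width")
    best_w, best_err = min_overlap, float("inf")
    for w in range(min_overlap, max_overlap + 1):
        start = len(left[0]) - w  # first column of the candidate strip in left
        err = sum((left[r][start + c] - right[r][c]) ** 2
                  for r in range(height) for c in range(w)) / (height * w)
        if err < best_err:  # ties keep the narrower overlap
            best_w, best_err = w, err
    return best_w


def stitch_horizontal(left: Image, right: Image,
                      min_overlap: int = 1) -> List[List[float]]:
    """Join two equal-height images related by a pure horizontal shift."""
    if len(left) != len(right):
        raise ValueError("images must have the same height")
    w = best_overlap(left, right, min_overlap)
    mosaic: List[List[float]] = []
    for lrow, rrow in zip(left, right):
        split = len(lrow) - w
        blended = [(a + b) / 2.0 for a, b in zip(lrow[split:], rrow[:w])]
        mosaic.append(list(lrow[:split]) + blended + list(rrow[w:]))
    return mosaic
```

Very narrow overlaps can match by chance, so in practice `min_overlap` should be a sizable fraction of the image width. Feature matching, as in Figure 5, also handles rotation and scale changes, which this sketch does not.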

Figure 5.

Aerial SWIR remote-sensing map construction using two SWIR images. (a) The key feature selection process. (b) The result of aerial SWIR image map construction.

5. Experimental Results

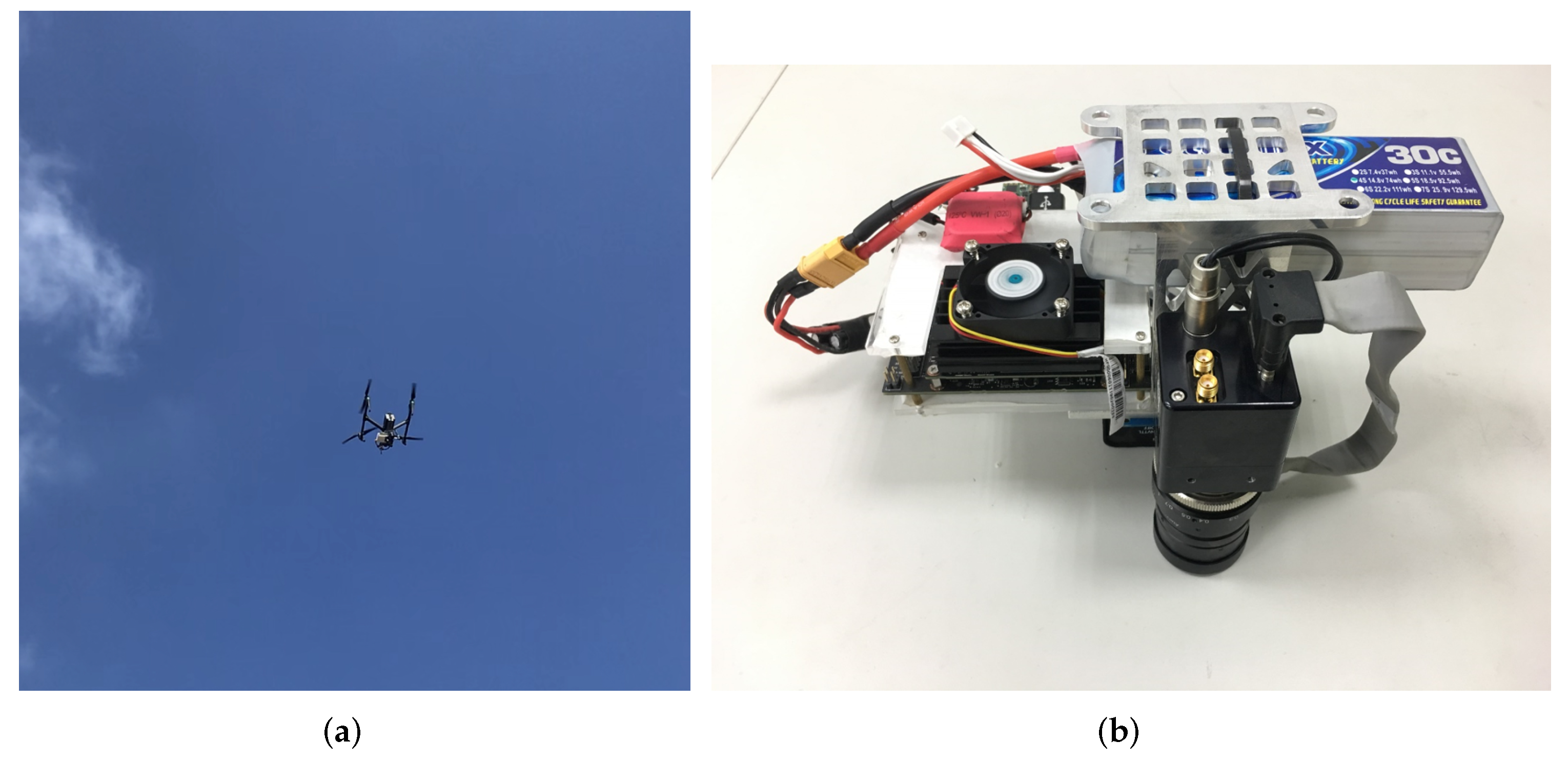

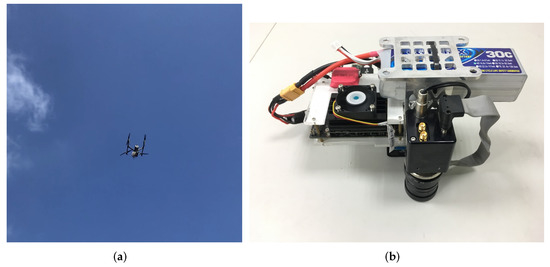

The outdoor experiments were conducted in an open field near National Formosa University in Yunlin County, Taiwan. Figure 6 shows the entire system mounted on the drone, and Table 1 lists the payload weight and power budget. The total payload mounted on the drone, including the external fixation, was 1053 g, with a power budget below 17.5 W. The total payload included the SWIR camera and its kit, the fixation, the onboard computer, GPS, battery, cables, and frame grabber. Payload weight and power budget management are crucial in an aerial mission because they affect the drone's flight time. In our experiment, the proposed framework sustained a mission time of more than 30 min.
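The text gives enough to sanity-check the payload side of the power budget: the onboard computer and sensors draw from a separate 4S 1000-mAh battery, and the payload power budget is below 17.5 W. The sketch assumes a nominal 3.7 V per lithium-polymer cell and that only 80% of the rated capacity is usable; both are assumptions, not measured values.

```python
def payload_runtime_min(cells: int, capacity_mah: float, load_w: float,
                        nominal_cell_v: float = 3.7,
                        usable_fraction: float = 0.8) -> float:
    """Rough runtime in minutes of a LiPo pack driving a constant load."""
    energy_wh = cells * nominal_cell_v * capacity_mah / 1000.0
    return energy_wh * usable_fraction / load_w * 60.0
```

At the 17.5 W upper bound, `payload_runtime_min(4, 1000, 17.5)` gives roughly 40 min (about 51 min if the full rated capacity were usable), which is consistent with the reported mission time of more than 30 min for the payload.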

Figure 6.

Prototype of the intelligent aerial remote-sensing system. (a) Drone flying using the proposed system. (b) The whole payload of the proposed system.

Table 1.

Payload weight and power budget.

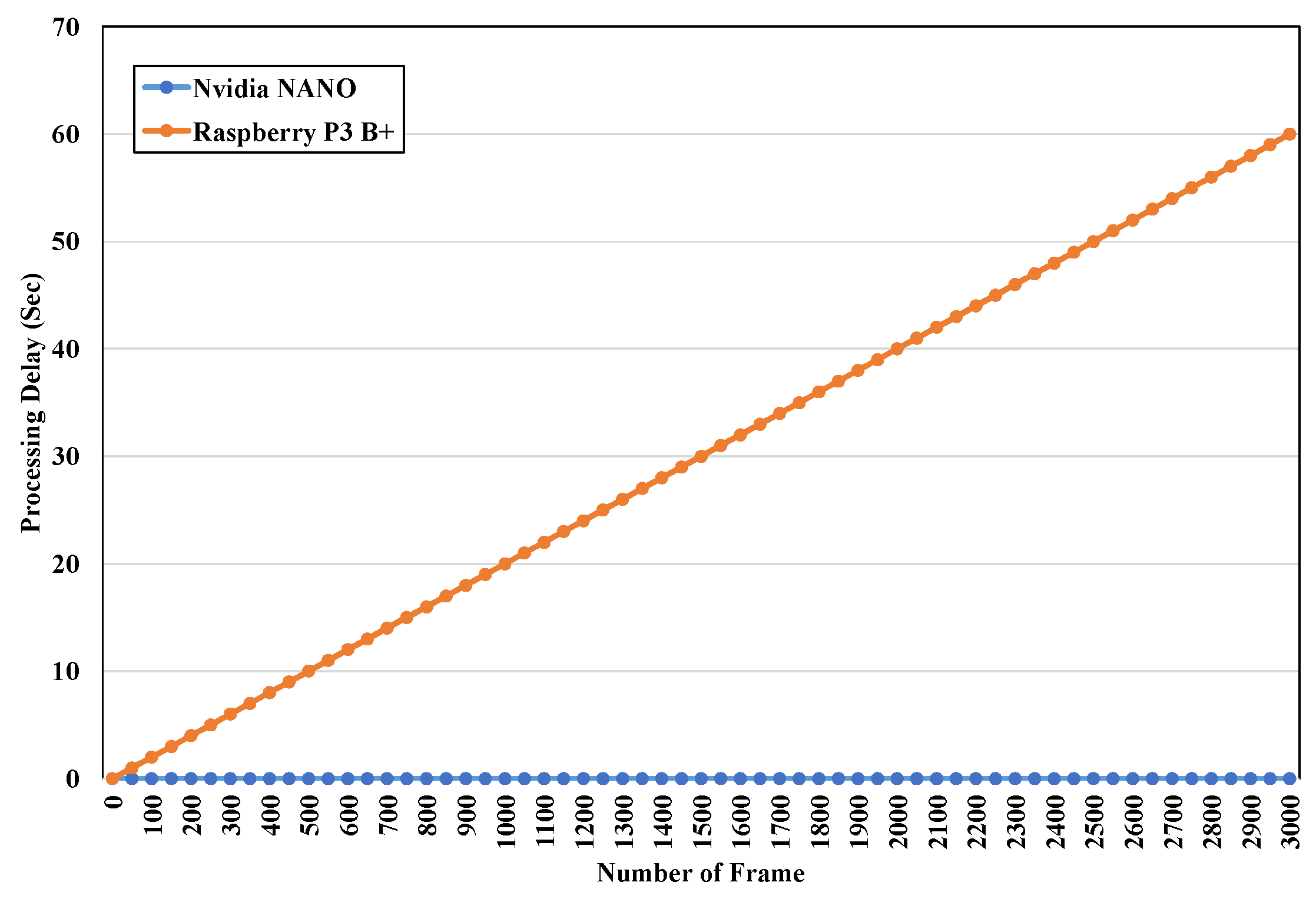

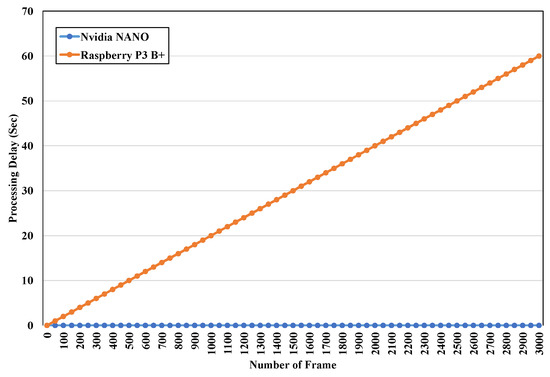

5.1. The Performance of the Onboard Computer

Most drone surveillance applications use a Raspberry Pi as the onboard computer because it is cheaper than the Nvidia Jetson NANO. In this study, we used the Nvidia Jetson NANO as the drone's onboard computer, although it requires more hardware and power than the Raspberry Pi. We compared the processing delay of the Nvidia NANO and a Raspberry Pi 3B+, as shown in Figure 7. The process executed on the NANO ran in real time without processing delay during the aerial SWIR image-capturing task, whereas executing the same process on the Raspberry Pi 3B+ caused a processing delay. At 3000 frames, the processing delay reached 60 s, making the Raspberry Pi 3B+ unsuitable for real-time SWIR image processing. Although the Raspberry Pi has the advantages of small size, light weight, and low power consumption, its graphics computing performance is lower than that of the Nvidia Jetson NANO. Thus, the Nvidia Jetson NANO is more suitable for mounting on a UAV to perform the SWIR image collection task.
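A steadily growing delay is what a single-worker, first-in-first-out pipeline shows when each frame takes longer to process than the interval between frames: the backlog grows by the difference every frame. The sketch simulates that model. The capture interval and processing times in the example are hypothetical, since they are not reported.

```python
from typing import List


def frame_delays(n_frames: int, capture_interval_s: float,
                 process_time_s: float) -> List[float]:
    """Latency of each frame in a single-worker FIFO pipeline.

    Frame k arrives at k * capture_interval_s; processing starts once the
    frame has arrived and the previous frame is finished.
    """
    delays: List[float] = []
    finish = 0.0
    for k in range(n_frames):
        arrival = k * capture_interval_s
        start = max(finish, arrival)
        finish = start + process_time_s
        delays.append(finish - arrival)
    return delays
```

For instance, a 0.1 s capture interval with 0.12 s of processing per frame gives a delay of about 60 s by frame 3000, the same order as the delay reported for the Raspberry Pi 3B+, whereas any processing time below the capture interval keeps the delay constant.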

Figure 7.

Processing delay of Raspberry Pi 3B+ and Nvidia NANO.

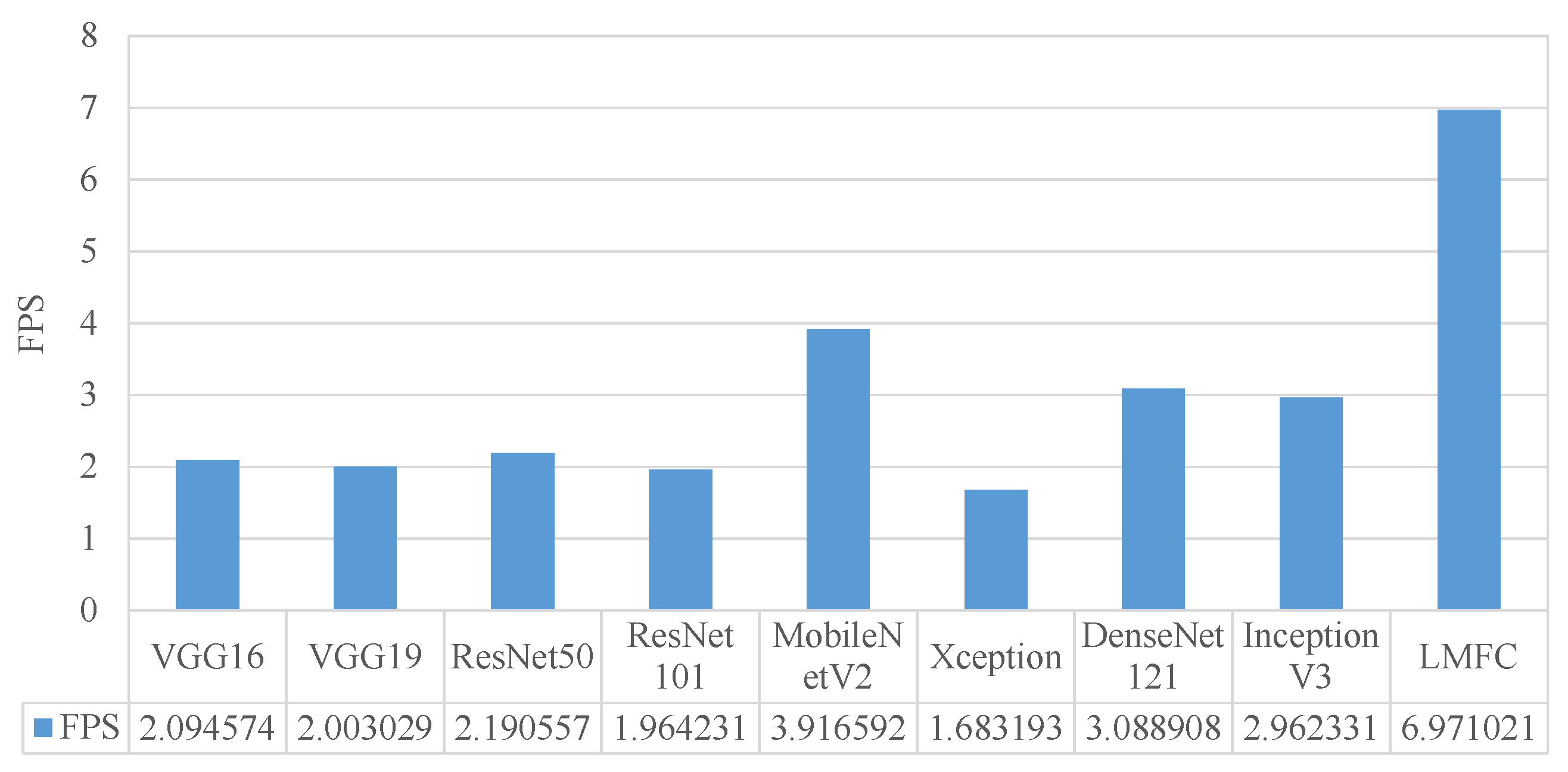

5.2. Lightweight Deep Learning Model in an Onboard Computer

Generally, the drone’s onboard computer is equipped to perform real-time aerial remote-sensing tasks. The deep learning model requires a lightweight architecture design because of the limited computing capacity of the onboard computer. Thus, we analyzed the performance of the average execution time for our proposed model. First, we controlled the drone to flying in the air and collected the human pilot’s behavior dataset as the ground truth. Then, we divided the dataset into training and test sets. All the data were allocated to the training and test sets in 0.6 and 0.4 ratios, respectively. The initial learning rate was set to 0.1 with 500 epochs. Thus, we applied the frames per second (), mean square error (), and mean absolute error () as the crucial metrics for this study:

where n is the total test samples and is the processing time of the deep learning model:

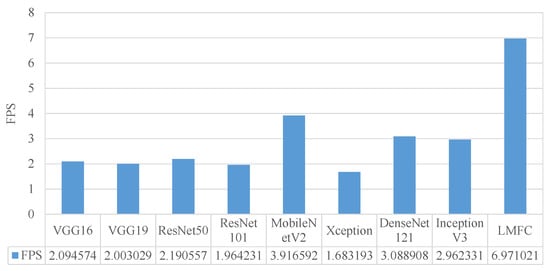

where is the predicted value and is the original value. From Figure 8, it can be seen that our proposed LMFC model had the highest FPS compared with the other models. This was attributed to the LMFC model being a lightweight model. Furthermore, it predicted the two regression values—velocity and yaw—for multitasking effectively. Consequently, the deep learning-based methods, by using our proposed LMFC model, controlled the drone as well as obtaining clear SWIR images for remote-sensing tasks. The model’s performance analysis is shown in Table 2. Our proposed LMFM had similar low and values to the other models. However, our proposed LMFM had better values and a lower computational time. Thus, it was the most suitable one to be mounted on the onboard computer.
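The three metrics can be computed as in the sketch below, assuming per-sample processing times in seconds and paired lists of predicted and ground-truth values (for example, velocity or yaw). The function names are illustrative, and FPS is taken as the number of processed samples divided by the total processing time.

```python
from typing import Sequence


def fps(processing_times_s: Sequence[float]) -> float:
    """Frames per second: number of samples over total processing time."""
    if not processing_times_s:
        raise ValueError("need at least one timing sample")
    return len(processing_times_s) / sum(processing_times_s)


def _check(pred: Sequence[float], true: Sequence[float]) -> None:
    if len(pred) != len(true) or not pred:
        raise ValueError("pred and true must be non-empty and of equal length")


def mse(pred: Sequence[float], true: Sequence[float]) -> float:
    """Mean square error."""
    _check(pred, true)
    return sum((p - t) ** 2 for p, t in zip(pred, true)) / len(pred)


def mae(pred: Sequence[float], true: Sequence[float]) -> float:
    """Mean absolute error."""
    _check(pred, true)
    return sum(abs(p - t) for p, t in zip(pred, true)) / len(pred)
```

At the reported 6.97 FPS, for example, the average inference time is about 1/6.97 ≈ 0.143 s per frame.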

Figure 8.

The results for the FPS when the deep learning model was running on the onboard computer.

Table 2.

Model performance comparison when performing on the onboard computer.

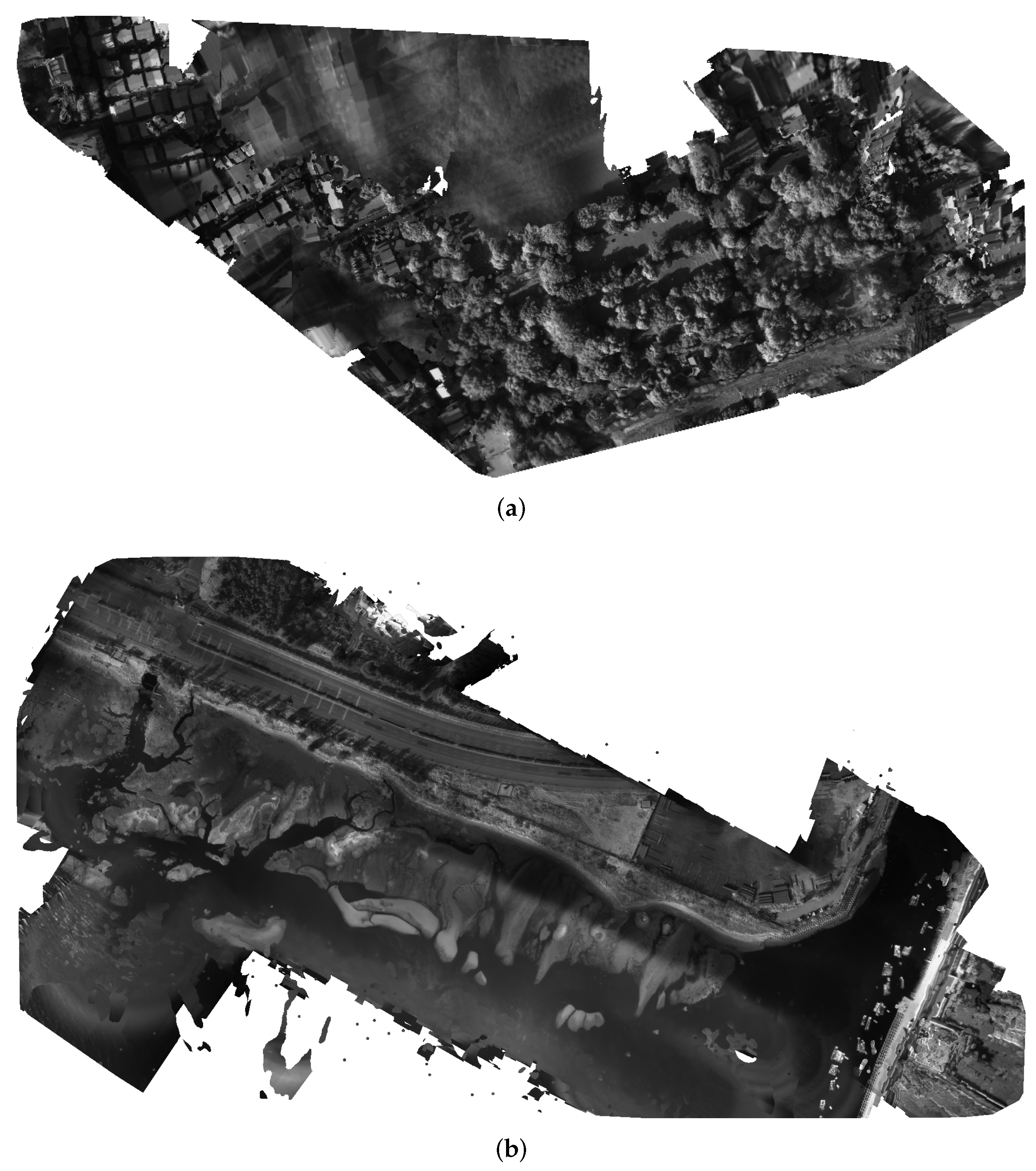

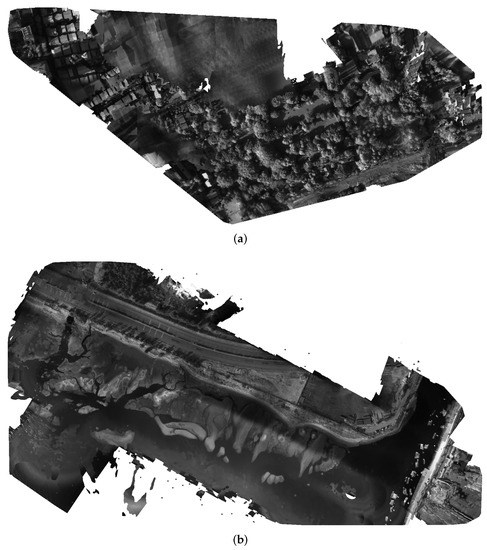

5.3. Aerial Remote Sensing Map Construction

A drone is a useful way to monitor vast areas of interest, offering low cost and efficient collection of high-resolution images for remote sensing. During surveillance tasks, a drone flies at high altitude, sometimes above 100 m, and may encounter a strong breeze. A strong breeze or moderate gale at high altitude causes the drone to jitter. Figure 9a shows the aerial SWIR image map constructed with the drone control strategy from [24], which contains several blurry areas. Because the drone jittered in the high-altitude environment, the SWIR images became blurred and produced blurred areas on the SWIR image map. A blurred aerial SWIR image map cannot be used for aerial remote-sensing tasks.

Figure 9.

The results of aerial remote-sensing map construction at an altitude of 300 m. (a) The blurred aerial SWIR image map. (b) The high-quality aerial SWIR image map obtained with the proposed LMFM method.

The proposed LMFM reactively controlled the drone's action based on the captured SWIR image quality. Using a lightweight CNN with a small model size and few parameters, the drone predicts its next action and calculates a new waypoint to capture a clear SWIR image, as shown in Figure 9b. The resulting aerial SWIR image map shows the full information in the SWIR spectrum and helps in analyzing the items or objects it contains. The surveyed industrial area was close to the sea, and the drone flew 300 m above it, where a strong breeze was constantly present. Nevertheless, the aerial map in Figure 9b is clear and has no blurred areas, because the proposed method captured high-quality SWIR images in real time by controlling the drone's action with a lightweight deep learning model.

6. Conclusions

A drone is a useful way to monitor vast areas of interest. It offers low cost and efficient collection of high-resolution images and can be used to gather information for building a large-scale image map for remote sensing and other smart city applications. Such a system combines edge and cloud computing to achieve useful aerial remote sensing using the IoD. However, drones perform these tasks at high altitudes, where strong breezes cause them to jitter. The captured images may therefore become blurred and combine into an unusable aerial remote-sensing map, especially with high-resolution SWIR images. In this study, we developed an intelligent aerial remote-sensing mapping system to overcome this issue. The proposed platform includes the hardware, software, frame grabber, IoD device, and SWIR camera needed to build an aerial remote-sensing map. Furthermore, we designed a lightweight multitask deep learning-based flying model (LMFM) that plans the drone's future flying motion from the currently captured SWIR images. The experimental results show that the designed intelligent aerial remote-sensing system effectively stabilized the drone using the LMFM with edge computing and built a high-quality aerial SWIR map under wind disturbance.

Author Contributions

Conceptualization, C.-Y.L. and H.-J.L.; methodology, C.-Y.L.; software, H.-J.L.; validation, H.-J.L., M.-Y.Y. and J.L.; formal analysis, C.-Y.L.; investigation, H.-J.L.; resources, M.-Y.Y. and J.L.; data curation, H.-J.L.; writing—original draft preparation, C.-Y.L.; writing—review and editing, H.-J.L.; visualization, M.-Y.Y.; supervision, J.L.; project administration, H.-J.L.; funding acquisition, M.-Y.Y. and J.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work is supported in part by the Ministry of Science and Technology of Taiwan under grant MOST 109-2221-E-150-004-MY3, and National Space Organization under grant NSPO-S-108135, Taiwan.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Shakhatreh, H.; Sawalmeh, A.H.; Al-Fuqaha, A.; Dou, Z.; Almaita, E.; Khalil, I.; Othman, N.S.; Khreishah, A.; Guizani, M. Unmanned Aerial Vehicles (UAVs): A Survey on Civil Applications and Key Research Challenges. IEEE Access 2019, 7, 48572–48634.

- Li, Y.; Peng, B.; He, L.; Fan, K.; Tong, L. Road Segmentation of Unmanned Aerial Vehicle Remote Sensing Images Using Adversarial Network with Multiscale Context Aggregation. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 2279–2287.

- Padró, J.C.; Muñoz, F.J.; Planas, J.; Pons, X. Comparison of Four UAV Georeferencing Methods for Environmental Monitoring Purposes Focusing on the Combined use with Airborne and Satellite Remote Sensing Platforms. Int. J. Appl. Earth Obs. Geoinf. 2019, 75, 130–140.

- Gharibi, M.; Boutaba, R.; Waslander, S.L. Internet of drones. IEEE Access 2016, 4, 1148–1162.

- Yang, Y.; Zheng, Z.; Bian, K.; Song, L.; Han, Z. Real-Time Profiling of Fine-Grained Air Quality Index Distribution Using UAV Sensing. IEEE Internet Things J. 2018, 5, 186–198.

- Ratajczak, R.; Crispim-Junior, C.F.; Faure, E.; Fervers, B.; Tougne, L. Automatic Land Cover Reconstruction from Historical Aerial Images: An Evaluation of Features Extraction and Classification Algorithms. IEEE Trans. Image Process. 2019, 28, 3357–3371.

- Chen, Z.; Wang, C.; Wen, C.; Teng, X.; Chen, Y.; Guan, H.; Luo, H.; Cao, L.; Li, J. Vehicle Detection in High-Resolution Aerial Images via Sparse Representation and Superpixels. IEEE Trans. Geosci. Remote Sens. 2016, 54, 103–116.

- Fan, Z.; Lu, J.; Gong, M.; Xie, H.; Goodman, E.D. Automatic Tobacco Plant Detection in UAV Images via Deep Neural Networks. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 876–887.

- Kim, J.; Kim, S.; Ju, C.; Son, H.I. Unmanned Aerial Vehicles in Agriculture: A Review of Perspective of Platform, Control, and Applications. IEEE Access 2019, 7, 105100–105115.

- Osco, L.P.; Junior, J.M.; Ramos, A.P.M.; de Castro Jorge, L.A.; Fatholahi, S.N.; de Andrade Silva, J.; Matsubara, E.T.; Pistori, H.; Gonçalves, W.N.; Li, J. A review on deep learning in UAV remote sensing. Int. J. Appl. Earth Obs. Geoinf. 2021, 102, 2–21.

- Li, Z.; Isler, V. Large Scale Image Mosaic Construction for Agricultural Applications. IEEE Robot. Autom. Lett. 2016, 1, 295–302.

- Nicolo, F.; Schmid, N.A. Long Range Cross-Spectral Face Recognition: Matching SWIR against Visible Light Images. IEEE Trans. Inf. Forensics Secur. 2012, 7, 1717–1726.

- Ghiass, R.S.; Arandjelović, O.; Bendada, A.; Maldague, X.P. Infrared face recognition: A comprehensive review of methodologies and databases. Pattern Recognit. 2014, 47, 2807–2824.

- Bhardwaj, A.; Sam, L.; Akanksha; Martín-Torres, F.J.; Kumar, R. UAVs as remote sensing platform in glaciology: Present applications and future prospects. Remote Sens. Environ. 2016, 175, 196–204.

- Mohanty, S.P.; Czakon, J.; Kaczmarek, K.A.; Pyskir, A.; Tarasiewicz, P.; Kunwar, S.; Rohrbach, J.; Luo, D.; Prasad, M.; Fleer, S.; et al. Deep Learning for Understanding Satellite Imagery: An Experimental Survey. Front. Artif. Intell. 2020, 3, 1–21.

- Savkin, A.V.; Huang, H. A Method for Optimized Deployment of a Network of Surveillance Aerial Drones. IEEE Syst. J. 2019, 13, 4474–4477.

- Li, W.; Li, H.; Wu, Q.; Chen, X.; Ngan, K.N. Simultaneously Detecting and Counting Dense Vehicles from Drone Images. IEEE Trans. Ind. Electron. 2019, 66, 9651–9662.

- Huang, Y.P.; Sithole, L.; Lee, T.T. Structure from Motion Technique for Scene Detection Using Autonomous Drone Navigation. IEEE Trans. Syst. Man Cybern. Syst. 2019, 49, 2559–2570.

- Rohan, A.; Rabah, M.; Kim, S.H. Convolutional Neural Network-Based Real-Time Object Detection and Tracking for Parrot AR Drone 2. IEEE Access 2019, 7, 69575–69584.

- Bah, M.D.; Hafiane, A.; Canals, R. CRowNet: Deep Network for Crop Row Detection in UAV Images. IEEE Access 2019, 8, 5189–5200.

- Mukherjee, A.; Misra, S.; Raghuwanshi, N.S. A survey of unmanned aerial sensing solutions in precision agriculture. J. Netw. Comput. Appl. 2019, 148, 5189–5200.

- Fang, F.; Wang, T.; Fang, Y.; Zhang, G. Fast Color Blending for Seamless Image Stitching. IEEE Geosci. Remote Sens. Lett. 2019, 16, 1115–1119.

- Bang, S.; Kim, H.; Kim, H. UAV-based Automatic Generation of High-Resolution Panorama at a Construction Site with a Focus on Preprocessing for Image Stitching. Autom. Constr. 2017, 84, 70–80.

- Belyaev, E.; Forchhammer, S. An Efficient Storage of Infrared Video of Drone Inspections via Iterative Aerial Map Construction. IEEE Signal Process. Lett. 2019, 26, 1157–1161.

- Honkavaara, E.; Eskelinen, M.A.; Pölönen, I.; Saari, H.; Ojanen, H.; Mannila, R.; Holmlund, C.; Hakala, T.; Litkey, P.; Rosnell, T.; et al. Remote Sensing of 3-D Geometry and Surface Moisture of a Peat Production Area Using Hyperspectral Frame Cameras in Visible to Short-Wave Infrared Spectral Ranges Onboard a Small Unmanned Airborne Vehicle (UAV). IEEE Trans. Geosci. Remote Sens. 2016, 54, 5440–5454.

- Cárdenas, J.; Figueroa, M. Multimodal Image Registration between SWIR and LWIR Images in an Embedded System. In Proceedings of the 2018 21st Euromicro Conference on Digital System Design (DSD), Prague, Czech Republic, 29–31 August 2018.

- Zucco, M.; Pisani, M.; Mari, D. A novel hyperspectral camera concept for SWIR-MWIR applications. In Proceedings of the 2017 IEEE International Workshop on Metrology for AeroSpace (MetroAeroSpace), Padua, Italy, 21–23 June 2017.

- Intel. USB 3.0* Radio Frequency Interference Impact on 2.4GHz Wireless Devices. Available online: https://www.intel.com/content/www/us/en/products/docs/io/universal-serial-bus/usb3-frequency-interference-paper.html/ (accessed on 1 April 2012).

- Merheb, A.R.; Noura, H.; Bateman, F. Emergency Control of AR Drone Quadrotor UAV Suffering a Total Loss of One Rotor. IEEE/ASME Trans. Mechatronics 2017, 22, 961–971.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).