GAIA: Great-Distribution Artificial Intelligence-Based Algorithm for Advanced Large-Scale Commercial Store Management

Abstract

1. Introduction

2. Related Works

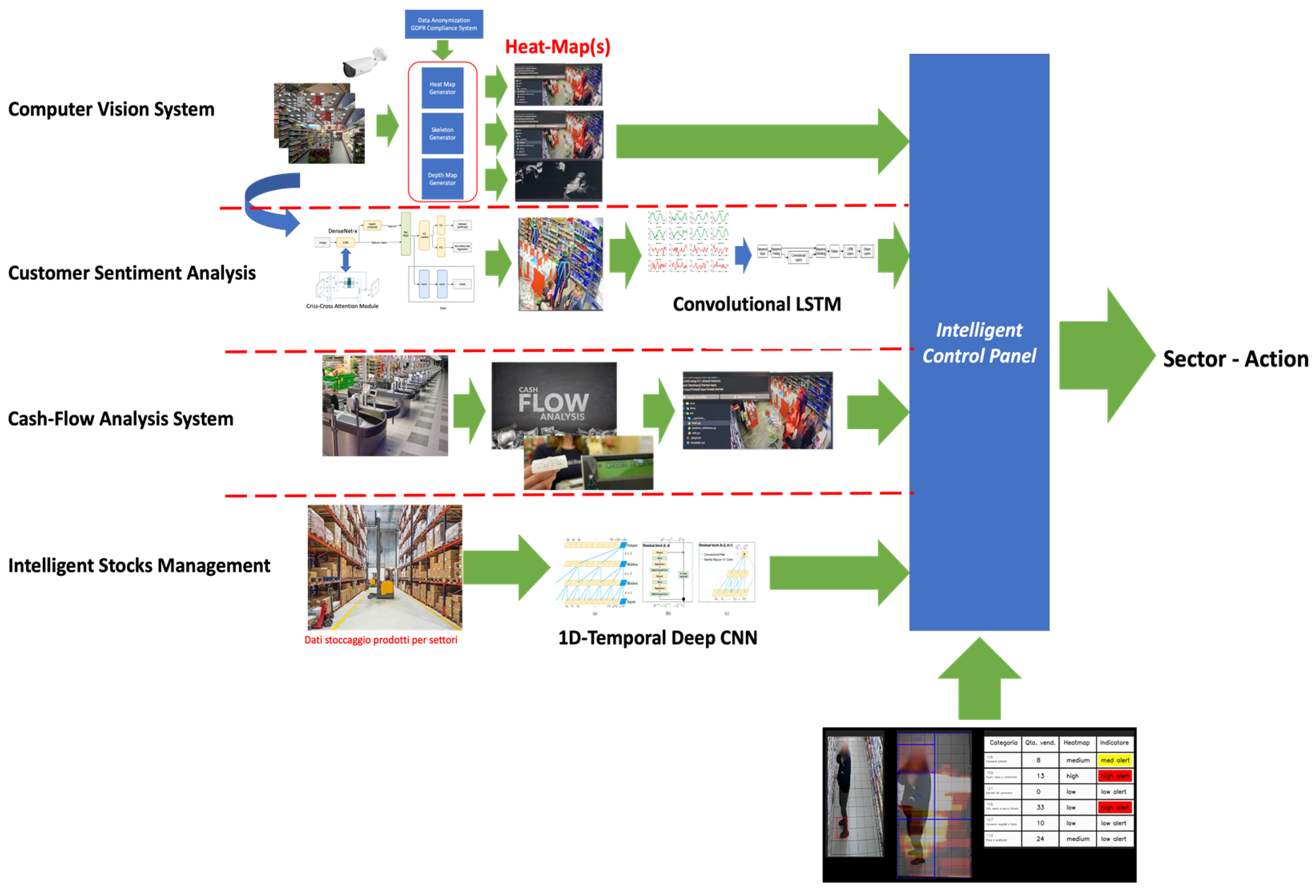

3. Methods and Materials: The Proposed Pipeline

3.1. GAIA: Computer Vision Subsystem

3.2. GAIA: The Customer Sentiment Analysis System

3.3. GAIA: The Cashflow Analysis System

3.4. GAIA: The Intelligent Stock Management System

3.5. GAIA: The Intelligent Control Panel

- IF OCVSS > ThCVSS AND “customer interested” AND OCashflow < ThCashflow AND “medium risk of out-of-stock” THEN “high-risk assessment”;

- IF OCVSS < ThCVSS AND “customer undecided” AND OCashflow > ThCashflow AND “low risk of out-of-stock” THEN “low-risk assessment”.
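
The two IF-THEN rules above can be sketched as a small rule evaluator. This is a minimal illustration, not the authors' implementation: it assumes that OCVSS and OCashflow are numeric outputs of the computer vision and cashflow subsystems, that ThCVSS and ThCashflow are their thresholds, and that the sentiment and stock-risk labels arrive as plain strings. The names `PanelInputs` and `assess_risk` are hypothetical, and input combinations not covered by the two listed rules return `None` because the source does not specify them.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class PanelInputs:
    """Hypothetical container for the control panel inputs."""
    o_cvss: float        # assumed: numeric output of the computer vision subsystem
    th_cvss: float       # threshold ThCVSS
    sentiment: str       # label from the customer sentiment analysis system
    o_cashflow: float    # assumed: numeric output of the cashflow analysis system
    th_cashflow: float   # threshold ThCashflow
    stock_risk: str      # label from the intelligent stock management system


def assess_risk(x: PanelInputs) -> Optional[str]:
    """Apply the two listed rules; return None when neither rule fires."""
    if (x.o_cvss > x.th_cvss
            and x.sentiment == "customer interested"
            and x.o_cashflow < x.th_cashflow
            and x.stock_risk == "medium risk of out-of-stock"):
        return "high-risk assessment"
    if (x.o_cvss < x.th_cvss
            and x.sentiment == "customer undecided"
            and x.o_cashflow > x.th_cashflow
            and x.stock_risk == "low risk of out-of-stock"):
        return "low-risk assessment"
    return None  # combination not covered by the rules shown in the text


# Illustrative values only; the thresholds are not taken from the paper.
example = PanelInputs(0.8, 0.5, "customer interested",
                      100.0, 200.0, "medium risk of out-of-stock")
print(assess_risk(example))
```

Keeping the rules as explicit conditionals mirrors the way they are written in the text; a table-driven form would be a reasonable alternative once more rules are added.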

4. Experimental Results

5. Discussion and Conclusions

6. Patents

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Receipt Fields |
|---|
| Date |
| Time |
| Amount of the receipt |
| Type of sold stock (with stock identification code) |
| Quantity of sold stock |

| Receipt Fields |
|---|
| Quantity of product in stock |
| Quantity of the last purchase order |
| Product promotion (active/not active) |
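
The two field lists above can be read as simple record types. The sketch below is one possible encoding under stated assumptions: the first two receipt fields are taken to be the date and time of the sale, the stock identification code is assumed to be the key linking a receipt line to a stock record, and all class, field, and function names are hypothetical rather than taken from the paper.

```python
from dataclasses import dataclass
from datetime import date, time
from typing import Iterable


@dataclass(frozen=True)
class ReceiptLine:
    """One sold item on a receipt (hypothetical field names)."""
    day: date              # assumed reading of the first receipt field
    hour: time             # assumed reading of the second receipt field
    receipt_amount: float  # amount of the receipt
    stock_code: str        # stock identification code of the sold item
    quantity_sold: int     # quantity of sold stock


@dataclass(frozen=True)
class StockRecord:
    """Stock-side fields; stock_code as join key is an assumption."""
    stock_code: str
    quantity_in_stock: int
    last_order_quantity: int
    promotion_active: bool


def units_sold(lines: Iterable[ReceiptLine], stock_code: str) -> int:
    """Total quantity sold for one stock code across the given receipt lines."""
    return sum(l.quantity_sold for l in lines if l.stock_code == stock_code)


# Illustrative data only.
lines = [
    ReceiptLine(date(2022, 4, 25), time(10, 30), 12.50, "A001", 2),
    ReceiptLine(date(2022, 4, 25), time(11, 5), 7.90, "A001", 1),
    ReceiptLine(date(2022, 4, 25), time(11, 5), 7.90, "B002", 4),
]
stock = StockRecord("A001", quantity_in_stock=10,
                    last_order_quantity=50, promotion_active=False)
print(units_sold(lines, stock.stock_code), "of", stock.quantity_in_stock)
```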

| Deep Network | AP (Average Precision) | mIoU |
|---|---|---|
| Faster-R-CNN (ResNet backbone) | 93.75% | 80.09% |
| Mask-R-CNN (DenseNet backbone) | 93.91% | 83.22% |
| YOLOv5 | 94.05% | 83.17% |
| Proposed | 95.33% | 85.07% |

| Deep Network | Accuracy |
|---|---|
| Graph-Convolutional Network | 91.31% |
| 3D-CNN | 92.07% |
| Faster-R-CNN | 89.65% |
| Mask-R-CNN | 90.83% |
| Proposed | 92.91% |

| Deep Network | Accuracy |
|---|---|
| Graph-Convolutional Network | 83.80% |
| 3D-CNN | 84.88% |
| Faster-R-CNN | 77.87% |
| Mask-R-CNN | 79.02% |
| Proposed | 82.01% |

| Deep Network | Accuracy |
|---|---|
| Deep LSTM | 91.25% |
| MLP | 90.96% |
| SVM | 87.91% |
| Proposed | 94.32% |

| GAIA System Structure | High Risk | Medium Risk | Low Risk |
|---|---|---|---|
| Full pipeline | 91.42% | 93.54% | 90.00% |
| Without computer vision block | 85.71% | 87.09% | 86.66% |
| Without customer sentiment block | 82.85% | 90.32% | 83.33% |
| Without cashflow analyzer block | 80.09% | 80.64% | 83.33% |
| Without intelligent stock management | 85.71% | 87.09% | 83.33% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Giaconia, C.; Chamas, A. GAIA: Great-Distribution Artificial Intelligence-Based Algorithm for Advanced Large-Scale Commercial Store Management. Appl. Sci. 2022, 12, 4798. https://doi.org/10.3390/app12094798
