Assessing the Applicability of Machine Learning Models for Robotic Emotion Monitoring: A Survey

Abstract

1. Introduction

2. Background

2.1. Human Signal Sources

2.1.1. Brain Signals

2.1.2. Heart Signals

2.1.3. Skin Signals

2.1.4. Lungs Signals

2.1.5. Imaging Signals

2.1.6. Gait Sequences

2.1.7. Speech Signals

2.2. ML Models

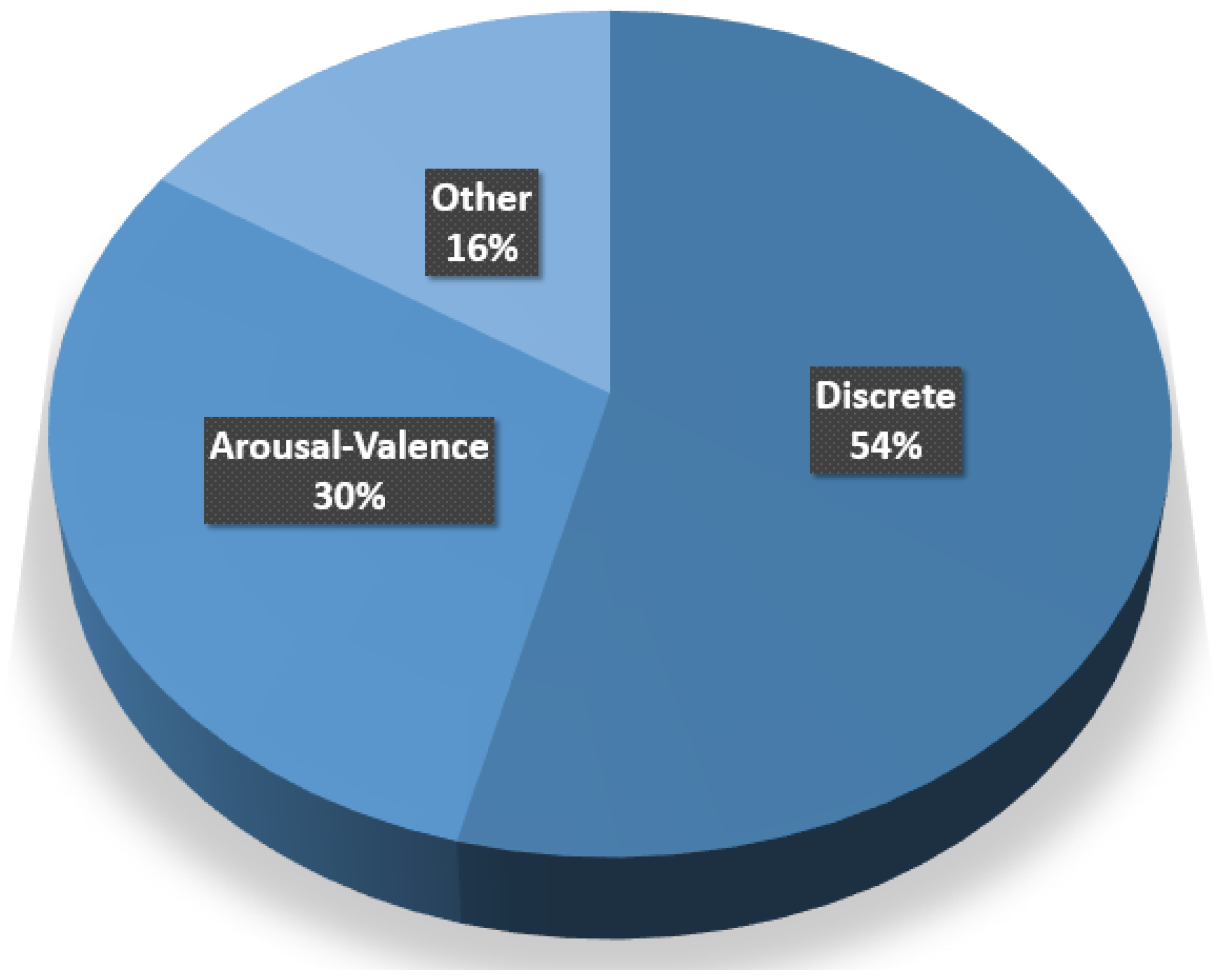

2.3. Emotional Levels

2.3.1. Discrete Emotions

2.3.2. Continuous Emotions

3. Methods

4. Results and Discussion

| Authors | Participant No. | Source | Datasets | Methods | Valence Accuracy (%) | Arousal Accuracy (%) |
|---|---|---|---|---|---|---|
| Altun et al. [125] | 32 | Tactile | Tactile | DT | 56 | 48 |
| Mohammadi et al. [126] | 32 | Brain | EEG | KNN | 86.75 | 84.05 |
| Wiem et al. [127] | 24 | Fusion | ECG, RV | SVM | 69.47 | 69.47 |
| Wiem et al. [128] | 25 | Fusion | ECG, RV | SVM | 68.75 | 68.50 |
| Wiem et al. [129] | 24 | Fusion | ECG, RV | SVM | 56.83 | 54.73 |
| Yonezawa et al. [130] | 18 | Tactile | Tactile | Fuzzy | 69.1 | 63.1 |
| Alazrai et al. [131] | 32 | Brain | EEG | SVM | 88.9 | 89.8 |
| Bazgir et al. [132] | 32 | Brain | EEG | SVM | 91.1 | 91.3 |
| Henia et al. [133] | 27 | Fusion | ECG, GSR, ST, RV | SVM | 57.44 | 59.57 |
| Marinoiu et al. [134] | 7 | Imaging | RGB, 3D | NN | 36.2 | 37.8 |
| Henia et al. [135] | 24 | Fusion | ECG, EDA, ST, Resp. | SVM | 59.57 | 60.41 |
| Salama et al. [136] | 32 | Imaging | RGB | NN | 87.44 | 88.49 |
| Pandey et al. [137] | 32 | Imaging | RGB | NN | 63.5 | 61.25 |
| Su et al. [138] | 12 | Fusion | EEG, RGB | Fusion | 72.8 | 77.2 |
| Ullah et al. [139] | 32 | Brain | EEG | DT | 77.4 | 70.1 |
| Yin et al. [140] | 457 | Skin | EDA | NN | 73.43 | 73.65 |
| Algarni et al. [141] | 94 | Imaging | RGB | NN | 99.4 | 96.88 |
| Panahi et al. [142] | 58 | Heart | ECG | SVM | 78.32 | 76.83 |
| Kumar et al. [106] | 94 | Imaging | RGB | NN | 83.79 | 83.79 |
| Martínez-Tejada et al. [116] | 40 | Brain | EEG | SVM | 59 | 68 |
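
The valence and arousal accuracies tabulated above can be grouped by signal source to give a rough sense of how the modalities compare. The following Python sketch is illustrative only: the `ROWS` list is transcribed from the table, while the helper name `summarise_by_source` and the choice of an unweighted mean are assumptions made here, not part of the surveyed studies. Because the studies use different datasets, participant counts, and evaluation protocols, the averages should be read as a descriptive summary rather than a like-for-like ranking.

```python
from collections import defaultdict
from statistics import mean

# (study, source, method, valence accuracy %, arousal accuracy %),
# transcribed from the table above; no new data is introduced.
ROWS = [
    ("Altun et al. [125]", "Tactile", "DT", 56.0, 48.0),
    ("Mohammadi et al. [126]", "Brain", "KNN", 86.75, 84.05),
    ("Wiem et al. [127]", "Fusion", "SVM", 69.47, 69.47),
    ("Wiem et al. [128]", "Fusion", "SVM", 68.75, 68.50),
    ("Wiem et al. [129]", "Fusion", "SVM", 56.83, 54.73),
    ("Yonezawa et al. [130]", "Tactile", "Fuzzy", 69.1, 63.1),
    ("Alazrai et al. [131]", "Brain", "SVM", 88.9, 89.8),
    ("Bazgir et al. [132]", "Brain", "SVM", 91.1, 91.3),
    ("Henia et al. [133]", "Fusion", "SVM", 57.44, 59.57),
    ("Marinoiu et al. [134]", "Imaging", "NN", 36.2, 37.8),
    ("Henia et al. [135]", "Fusion", "SVM", 59.57, 60.41),
    ("Salama et al. [136]", "Imaging", "NN", 87.44, 88.49),
    ("Pandey et al. [137]", "Imaging", "NN", 63.5, 61.25),
    ("Su et al. [138]", "Fusion", "Fusion", 72.8, 77.2),
    ("Ullah et al. [139]", "Brain", "DT", 77.4, 70.1),
    ("Yin et al. [140]", "Skin", "NN", 73.43, 73.65),
    ("Algarni et al. [141]", "Imaging", "NN", 99.4, 96.88),
    ("Panahi et al. [142]", "Heart", "SVM", 78.32, 76.83),
    ("Kumar et al. [106]", "Imaging", "NN", 83.79, 83.79),
    ("Martínez-Tejada et al. [116]", "Brain", "SVM", 59.0, 68.0),
]


def summarise_by_source(rows):
    """Return the unweighted mean valence/arousal accuracy per signal source."""
    grouped = defaultdict(list)
    for _study, source, _method, valence, arousal in rows:
        grouped[source].append((valence, arousal))
    return {
        source: {
            "n": len(pairs),
            "valence": round(mean(v for v, _ in pairs), 2),
            "arousal": round(mean(a for _, a in pairs), 2),
        }
        for source, pairs in grouped.items()
    }


if __name__ == "__main__":
    # Print one line per source, ordered by mean valence accuracy.
    summary = summarise_by_source(ROWS)
    for source, stats in sorted(summary.items(), key=lambda kv: -kv[1]["valence"]):
        print(f"{source:8s} n={stats['n']:2d} "
              f"valence={stats['valence']:6.2f} arousal={stats['arousal']:6.2f}")
```

An unweighted mean treats every study equally regardless of its participant count; weighting by the "Participant No." column would be an equally defensible choice and would shift the figures for sources dominated by a single large study.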

5. Summary

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Twenge, J.M.; Joiner, T.E.; Rogers, M.L.; Martin, G.N. Increases in depressive symptoms, suicide-related outcomes, and suicide rates among US adolescents after 2010 and links to increased new media screen time. Clin. Psychol. Sci. 2018, 6, 3–17.

- Novotney, A. The risks of social isolation. Am. Psychol. Assoc. 2019, 50, 32, Erratum in Am. Psychol. Assoc. 2019, 22, 2020.

- Mushtaq, R.; Shoib, S.; Shah, T.; Mushtaq, S. Relationship between loneliness, psychiatric disorders and physical health? A review on the psychological aspects of loneliness. J. Clin. Diagn. Res. JCDR 2014, 8, WE01.

- Loades, M.E.; Chatburn, E.; Higson-Sweeney, N.; Reynolds, S.; Shafran, R.; Brigden, A.; Linney, C.; McManus, M.N.; Borwick, C.; Crawley, E. Rapid systematic review: The impact of social isolation and loneliness on the mental health of children and adolescents in the context of COVID-19. J. Am. Acad. Child Adolesc. Psychiatry 2020, 59, 1218–1239.

- Snell, K. The rise of living alone and loneliness in history. Soc. Hist. 2017, 42, 2–28.

- Bemelmans, R.; Gelderblom, G.J.; Jonker, P.; De Witte, L. Socially assistive robots in elderly care: A systematic review into effects and effectiveness. J. Am. Med. Dir. Assoc. 2012, 13, 114–120.

- Cooper, S.; Di Fava, A.; Vivas, C.; Marchionni, L.; Ferro, F. ARI: The social assistive robot and companion. In Proceedings of the 2020 29th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Naples, Italy, 31 August–4 September 2020; pp. 745–751.

- Castelo, N.; Schmitt, B.; Sarvary, M. Robot Or Human? How Bodies and Minds Shape Consumer Reactions to Human-Like Robots. ACR N. Am. Adv. 2019, 47, 3.

- Fox, O.R. Surgeon Completes 100th Knee Replacement Using Pioneering Robot in Bath. Somersetlive, 11 December 2020.

- Case Western Reserve University. 5 Medical Robots Making a Difference in Healthcare at CWRU. Available online: https://online-engineering.case.edu/blog/medical-robots-making-a-difference (accessed on 19 December 2022).

- Mouchet-Mages, S.; Baylé, F.J. Sadness as an integral part of depression. Dialogues Clin. Neurosci. 2008, 10, 321.

- Dzedzickis, A.; Kaklauskas, A.; Bucinskas, V. Human emotion recognition: Review of sensors and methods. Sensors 2020, 20, 592.

- Mohammed, S.N.; Hassan, A.K.A. A survey on emotion recognition for human robot interaction. J. Comput. Inf. Technol. 2020, 28, 125–146.

- Saxena, A.; Khanna, A.; Gupta, D. Emotion recognition and detection methods: A comprehensive survey. J. Artif. Intell. Syst. 2020, 2, 53–79.

- Yadav, S.P.; Zaidi, S.; Mishra, A.; Yadav, V. Survey on machine learning in speech emotion recognition and vision systems using a recurrent neural network (RNN). Arch. Comput. Methods Eng. 2022, 29, 1753–1770.

- Brain Basics: The Life and Death of a Neuron. Available online: https://www.ninds.nih.gov/health-information/public-education/brain-basics/brain-basics-life-and-death-neuron (accessed on 19 December 2022).

- Louis, E.K.S.; Frey, L.; Britton, J.; Hopp, J.; Korb, P.; Koubeissi, M.; Lievens, W.; Pestana-Knight, E. Electroencephalography (EEG): An Introductory Text and Atlas of Normal and Abnormal Findings in Adults, Children, and Infants; 2016.

- Malmivuo, J.; Plonsey, R. 13.1 INTRODUCTION. In Bioelectromagnetism; Oxford University Press: Oxford, UK, 1995.

- Soundariya, R.; Renuga, R. Eye movement based emotion recognition using electrooculography. In Proceedings of the 2017 Innovations in Power and Advanced Computing Technologies (i-PACT), Vellore, India, 21–22 April 2017; pp. 1–5.

- Lim, J.Z.; Mountstephens, J.; Teo, J. Emotion recognition using eye-tracking: Taxonomy, review and current challenges. Sensors 2020, 20, 2384.

- Schurgin, M.; Nelson, J.; Iida, S.; Ohira, H.; Chiao, J.; Franconeri, S. Eye movements during emotion recognition in faces. J. Vis. 2014, 14, 14.

- Nickson, C. Non-Invasive Blood Pressure. 2020. Available online: https://litfl.com/non-invasive-blood-pressure/ (accessed on 19 December 2022).

- Porter, M. What Happens to Your Body When You’re Stressed—and How Breathing Can Help. 2021. Available online: https://theconversation.com/what-happens-to-your-body-when-youre-stressed-and-how-breathing-can-help-97046 (accessed on 19 December 2022).

- Joseph, G.; Joseph, A.; Titus, G.; Thomas, R.M.; Jose, D. Photoplethysmogram (PPG) signal analysis and wavelet de-noising. In Proceedings of the 2014 Annual International Conference on Emerging Research Areas: Magnetics, Machines and Drives (AICERA/iCMMD), Kottayam, India, 24–26 July 2014; pp. 1–5.

- Jones, D. The Blood Volume Pulse—Biofeedback Basics. 2018. Available online: https://www.biofeedback-tech.com/articles/2016/3/24/the-blood-volume-pulse-biofeedback-basics (accessed on 19 December 2022).

- Tyapochkin, K.; Smorodnikova, E.; Pravdin, P. Smartphone PPG: Signal processing, quality assessment, and impact on HRV parameters. In Proceedings of the 2019 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Berlin, Germany, 23–27 July 2019; pp. 4237–4240.

- Pollreisz, D.; TaheriNejad, N. A simple algorithm for emotion recognition, using physiological signals of a smart watch. In Proceedings of the 2017 39th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Jeju, Korea, 11–15 July 2017; pp. 2353–2356.

- Tayibnapis, I.R.; Yang, Y.M.; Lim, K.M. Blood Volume Pulse Extraction for Non-Contact Heart Rate Measurement by Digital Camera Using Singular Value Decomposition and Burg Algorithm. Energies 2018, 11, 1076.

- Johns Hopkins Medicine. Electrocardiogram. Available online: https://www.hopkinsmedicine.org/health/treatment-tests-and-therapies/electrocardiogram (accessed on 19 December 2022).

- Shi, Y.; Ruiz, N.; Taib, R.; Choi, E.; Chen, F. Galvanic skin response (GSR) as an index of cognitive load. In Proceedings of the CHI’07 Extended Abstracts on Human Factors in Computing Systems, San Jose, CA, USA, 28 April–3 May 2007; pp. 2651–2656.

- Farnsworth, B. What is GSR (Galvanic Skin Response) and How Does It Work. 2018. Available online: https://imotions.com/blog/learning/research-fundamentals/gsr/ (accessed on 19 December 2022).

- McFarland, R.A. Relationship of skin temperature changes to the emotions accompanying music. Biofeedback Self-Regul. 1985, 10, 255–267.

- Ghahramani, A.; Castro, G.; Becerik-Gerber, B.; Yu, X. Infrared thermography of human face for monitoring thermoregulation performance and estimating personal thermal comfort. Build. Environ. 2016, 109, 1–11.

- Homma, I.; Masaoka, Y. Breathing rhythms and emotions. Exp. Physiol. 2008, 93, 1011–1021.

- Chu, M.; Nguyen, T.; Pandey, V.; Zhou, Y.; Pham, H.N.; Bar-Yoseph, R.; Radom-Aizik, S.; Jain, R.; Cooper, D.M.; Khine, M. Respiration rate and volume measurements using wearable strain sensors. NPJ Digit. Med. 2019, 2, 1–9.

- Massaroni, C.; Lopes, D.S.; Lo Presti, D.; Schena, E.; Silvestri, S. Contactless monitoring of breathing patterns and respiratory rate at the pit of the neck: A single camera approach. J. Sens. 2018, 2018, 4567213.

- Neulog. Respiration Monitor Belt Logger Sensor NUL-236. Available online: https://neulog.com/respiration-monitor-belt/ (accessed on 19 December 2022).

- Kwon, K.; Park, S. An optical sensor for the non-invasive measurement of the blood oxygen saturation of an artificial heart according to the variation of hematocrit. Sens. Actuators A Phys. 1994, 43, 49–54.

- Tian, Y.; Kanade, T.; Cohn, J.F. Facial expression recognition. In Handbook of Face Recognition; Springer: Berlin/Heidelberg, Germany, 2011; pp. 487–519.

- Revina, I.M.; Emmanuel, W.S. A survey on human face expression recognition techniques. J. King Saud Univ.-Comput. Inf. Sci. 2021, 33, 619–628.

- Li, J.; Mi, Y.; Li, G.; Ju, Z. CNN-based facial expression recognition from annotated RGB-D images for human–robot interaction. Int. J. Humanoid Robot. 2019, 16, 1941002.

- Piana, S.; Stagliano, A.; Odone, F.; Verri, A.; Camurri, A. Real-time automatic emotion recognition from body gestures. arXiv 2014, arXiv:1402.5047.

- Ahmed, F.; Bari, A.H.; Gavrilova, M.L. Emotion recognition from body movement. IEEE Access 2019, 8, 11761–11781.

- Xu, S.; Fang, J.; Hu, X.; Ngai, E.; Guo, Y.; Leung, V.; Cheng, J.; Hu, B. Emotion recognition from gait analyses: Current research and future directions. arXiv 2020, arXiv:2003.11461.

- Bhatia, Y.; Bari, A.H.; Hsu, G.S.J.; Gavrilova, M. Motion capture sensor-based emotion recognition using a bi-modular sequential neural network. Sensors 2022, 22, 403.

- Janssen, D.; Schöllhorn, W.I.; Lubienetzki, J.; Fölling, K.; Kokenge, H.; Davids, K. Recognition of emotions in gait patterns by means of artificial neural nets. J. Nonverbal Behav. 2008, 32, 79–92.

- Higginson, B.K. Methods of running gait analysis. Curr. Sport. Med. Rep. 2009, 8, 136–141.

- Koolagudi, S.G.; Rao, K.S. Emotion recognition from speech: A review. Int. J. Speech Technol. 2012, 15, 99–117.

- Vogt, T.; André, E. Comparing feature sets for acted and spontaneous speech in view of automatic emotion recognition. In Proceedings of the 2005 IEEE International Conference on Multimedia and Expo, Amsterdam, The Netherlands, 6 July 2005; pp. 474–477.

- Kanjo, E.; Younis, E.M.; Ang, C.S. Deep learning analysis of mobile physiological, environmental and location sensor data for emotion detection. Inf. Fusion 2019, 49, 46–56.

- Brownlee, J. Master Machine Learning Algorithms: Discover How They Work and Implement Them from Scratch. Machine Learning Mastery. 2016. Available online: https://machinelearningmastery.com/machine-learning-mastery-weka/ (accessed on 9 December 2022).

- Bonaccorso, G. Machine Learning Algorithms; Packt Publishing Ltd.: Birmingham, UK, 2017.

- Cho, G.; Yim, J.; Choi, Y.; Ko, J.; Lee, S.H. Review of machine learning algorithms for diagnosing mental illness. Psychiatry Investig. 2019, 16, 262.

- Juba, B.; Le, H.S. Precision-recall versus accuracy and the role of large data sets. AAAI Conf. Artif. Intell. 2019, 33, 4039–4048.

- Taylor, R. Interpretation of the correlation coefficient: A basic review. J. Diagn. Med. Sonogr. 1990, 6, 35–39.

- Willmott, C.J.; Matsuura, K. Advantages of the mean absolute error (MAE) over the root mean square error (RMSE) in assessing average model performance. Clim. Res. 2005, 30, 79–82.

- Domínguez-Jiménez, J.A.; Campo-Landines, K.C.; Martínez-Santos, J.C.; Delahoz, E.J.; Contreras-Ortiz, S.H. A machine learning model for emotion recognition from physiological signals. Biomed. Signal Process. Control 2020, 55, 101646.

- Hossain, M.Z.; Gedeon, T.; Sankaranarayana, R. Using temporal features of observers’ physiological measures to distinguish between genuine and fake smiles. IEEE Trans. Affect. Comput. 2018, 11, 163–173.

- Metallinou, A.; Katsamanis, A.; Narayanan, S. A hierarchical framework for modeling multimodality and emotional evolution in affective dialogs. In Proceedings of the 2012 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Kyoto, Japan, 25–30 March 2012; pp. 2401–2404.

- Ekman, P.; Friesen, W.V.; Ellsworth, P. Emotion in the Human Face: Guidelines for Research and an Integration of Findings; Elsevier: Amsterdam, The Netherlands, 2013; Volume 11.

- Gu, S.; Wang, F.; Patel, N.P.; Bourgeois, J.A.; Huang, J.H. A model for basic emotions using observations of behavior in Drosophila. Front. Psychol. 2019, 10, 781.

- Krohne, H. Stress and coping theories. Int. Encycl. Soc. Behav. Sci. 2002, 22, 15163–15170.

- Kuppens, P.; Tuerlinckx, F.; Russell, J.A.; Barrett, L.F. The relation between valence and arousal in subjective experience. Psychol. Bull. 2013, 139, 917.

- Carmona, P.; Nunes, D.; Raposo, D.; Silva, D.; Silva, J.S.; Herrera, C. Happy hour-improving mood with an emotionally aware application. In Proceedings of the 2015 15th International Conference on Innovations for Community Services (I4CS), Nuremberg, Germany, 8–10 July 2015; pp. 1–7.

- Yu, Y.C. A cloud-based mobile anger prediction model. In Proceedings of the 2015 18th International Conference on Network-Based Information Systems, Taipei, Taiwan, 2–4 September 2015; pp. 199–205.

- Li, M.; Xie, L.; Wang, Z. A transductive model-based stress recognition method using peripheral physiological signals. Sensors 2019, 19, 429.

- Spaulding, S.; Breazeal, C. Frustratingly easy personalization for real-time affect interpretation of facial expression. In Proceedings of the 2019 8th International Conference on Affective Computing and Intelligent Interaction (ACII), Cambridge, UK, 3–6 September 2019; pp. 531–537.

- Wei, J.; Chen, T.; Liu, G.; Yang, J. Higher-order multivariable polynomial regression to estimate human affective states. Sci. Rep. 2016, 6, 1–13.

- Mencattini, A.; Martinelli, E.; Ringeval, F.; Schuller, B.; Di Natale, C. Continuous estimation of emotions in speech by dynamic cooperative speaker models. IEEE Trans. Affect. Comput. 2016, 8, 314–327.

- Hassani, S.; Bafadel, I.; Bekhatro, A.; Al Blooshi, E.; Ahmed, S.; Alahmad, M. Physiological signal-based emotion recognition system. In Proceedings of the 2017 4th IEEE International Conference on Engineering Technologies and Applied Sciences (ICETAS), Salmabad, Bahrain, 29 November–1 December 2017; pp. 1–5.

- de Oliveira, L.M.; Junior, L.C.C.F. Aplicabilidade da inteligência artificial na psiquiatria: Uma revisão de ensaios clínicos. Debates Psiquiatr. 2020, 10, 14–25.

- Al-Qaderi, M.K.; Rad, A.B. A brain-inspired multi-modal perceptual system for social robots: An experimental realization. IEEE Access 2018, 6, 35402–35424.

- Miguel, H.O.; Lisboa, I.C.; Gonçalves, Ó.F.; Sampaio, A. Brain mechanisms for processing discriminative and affective touch in 7-month-old infants. Dev. Cogn. Neurosci. 2019, 35, 20–27.

- Mehmood, R.M.; Du, R.; Lee, H.J. Optimal feature selection and deep learning ensembles method for emotion recognition from human brain EEG sensors. IEEE Access 2017, 5, 14797–14806.

- Soroush, M.Z.; Maghooli, K.; Setarehdan, S.K.; Nasrabadi, A.M. A novel approach to emotion recognition using local subset feature selection and modified Dempster-Shafer theory. Behav. Brain Funct. 2018, 14, 1–15.

- Pan, L.; Yin, Z.; She, S.; Song, A. Emotional State Recognition from Peripheral Physiological Signals Using Fused Nonlinear Features and Team-Collaboration Identification Strategy. Entropy 2020, 22, 511.

- Abd Latif, M.; Yusof, H.M.; Sidek, S.; Rusli, N. Thermal imaging based affective state recognition. In Proceedings of the 2015 IEEE International Symposium on Robotics and Intelligent Sensors (IRIS), Langkawi, Malaysia, 18–20 October 2015; pp. 214–219.

- Fan, J.; Wade, J.W.; Bian, D.; Key, A.P.; Warren, Z.E.; Mion, L.C.; Sarkar, N. A Step towards EEG-based brain computer interface for autism intervention. In Proceedings of the 2015 37th Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC), Milan, Italy, 25–29 August 2015; pp. 3767–3770.

- Khezri, M.; Firoozabadi, M.; Sharafat, A.R. Reliable emotion recognition system based on dynamic adaptive fusion of forehead biopotentials and physiological signals. Comput. Methods Programs Biomed. 2015, 122, 149–164.

- Tivatansakul, S.; Ohkura, M. Emotion recognition using ECG signals with local pattern description methods. Int. J. Affect. Eng. 2015, 15, 51–61.

- Boccanfuso, L.; Wang, Q.; Leite, I.; Li, B.; Torres, C.; Chen, L.; Salomons, N.; Foster, C.; Barney, E.; Ahn, Y.A.; et al. A thermal emotion classifier for improved human-robot interaction. In Proceedings of the 2016 25th IEEE International Symposium on Robot and Human Interactive Communication (RO-MAN), New York, NY, USA, 26–31 August 2016; pp. 718–723.

- Ruiz-Garcia, A.; Elshaw, M.; Altahhan, A.; Palade, V. Deep learning for emotion recognition in faces. In Proceedings of the International Conference on Artificial Neural Networks; Springer: Cham, Switzerland, 2016; pp. 38–46.

- Mohammadpour, M.; Hashemi, S.M.R.; Houshmand, N. Classification of EEG-based emotion for BCI applications. In Proceedings of the 2017 Artificial Intelligence and Robotics (IRANOPEN), Qazvin, Iran, 9 April 2017; pp. 127–131.

- Lowe, R.; Andreasson, R.; Alenljung, B.; Lund, A.; Billing, E. Designing for a wearable affective interface for the NAO Robot: A study of emotion conveyance by touch. Multimodal Technol. Interact. 2018, 2, 2.

- Noor, S.; Dhrubo, E.A.; Minhaz, A.T.; Shahnaz, C.; Fattah, S.A. Audio visual emotion recognition using cross correlation and wavelet packet domain features. In Proceedings of the 2017 IEEE International WIE Conference on Electrical and Computer Engineering (WIECON-ECE), Dehradun, India, 18–19 December 2017; pp. 233–236.

- Ruiz-Garcia, A.; Elshaw, M.; Altahhan, A.; Palade, V. A hybrid deep learning neural approach for emotion recognition from facial expressions for socially assistive robots. Neural Comput. Appl. 2018, 29, 359–373.

- Wei, W.; Jia, Q.; Feng, Y.; Chen, G. Emotion recognition based on weighted fusion strategy of multichannel physiological signals. Comput. Intell. Neurosci. 2018, 2018, 5296523.

- Wei, W.J. Development and evaluation of an emotional lexicon system for young children. Microsyst. Technol. 2019, 27, 1535–1544.

- Goulart, C.; Valadão, C.; Delisle-Rodriguez, D.; Funayama, D.; Favarato, A.; Baldo, G.; Binotte, V.; Caldeira, E.; Bastos-Filho, T. Visual and thermal image processing for facial specific landmark detection to infer emotions in a child-robot interaction. Sensors 2019, 19, 2844.

- Gu, Y.; Wang, Y.; Liu, T.; Ji, Y.; Liu, Z.; Li, P.; Wang, X.; An, X.; Ren, F. EmoSense: Computational intelligence driven emotion sensing via wireless channel data. IEEE Trans. Emerg. Top. Comput. Intell. 2019, 4, 216–226.

- Huang, Y.; Tian, K.; Wu, A.; Zhang, G. Feature fusion methods research based on deep belief networks for speech emotion recognition under noise condition. J. Ambient. Intell. Humaniz. Comput. 2019, 10, 1787–1798.

- Ilyas, C.M.A.; Schmuck, V.; Haque, M.A.; Nasrollahi, K.; Rehm, M.; Moeslund, T.B. Teaching pepper robot to recognize emotions of traumatic brain injured patients using deep neural networks. In Proceedings of the 2019 28th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), New Delhi, India, 14–18 October 2019; pp. 1–7.

- Lopez-Rincon, A. Emotion recognition using facial expressions in children using the NAO Robot. In Proceedings of the 2019 International Conference on Electronics, Communications and Computers (CONIELECOMP), Cholula, Mexico, 27 February–1 March 2019; pp. 146–153.

- Ma, F.; Zhang, W.; Li, Y.; Huang, S.L.; Zhang, L. An end-to-end learning approach for multimodal emotion recognition: Extracting common and private information. In Proceedings of the 2019 IEEE International Conference on Multimedia and Expo (ICME), Shanghai, China, 8–12 July 2019; pp. 1144–1149.

- Mithbavkar, S.A.; Shah, M.S. Recognition of emotion through facial expressions using EMG signal. In Proceedings of the 2019 International Conference on Nascent Technologies in Engineering (ICNTE), Navi Mumbai, India, 4–5 January 2019; pp. 1–6.

- Rahim, A.; Sagheer, A.; Nadeem, K.; Dar, M.N.; Rahim, A.; Akram, U. Emotion Charting Using Real-time Monitoring of Physiological Signals. In Proceedings of the 2019 International Conference on Robotics and Automation in Industry (ICRAI), Rawalpindi, Pakistan, 21–22 October 2019; pp. 1–5.

- Taran, S.; Bajaj, V. Emotion recognition from single-channel EEG signals using a two-stage correlation and instantaneous frequency-based filtering method. Comput. Methods Programs Biomed. 2019, 173, 157–165.

- Bălan, O.; Moise, G.; Moldoveanu, A.; Leordeanu, M.; Moldoveanu, F. An investigation of various machine and deep learning techniques applied in automatic fear level detection and acrophobia virtual therapy. Sensors 2020, 20, 496.

- Chen, L.; Su, W.; Feng, Y.; Wu, M.; She, J.; Hirota, K. Two-layer fuzzy multiple random forest for speech emotion recognition in human-robot interaction. Inf. Sci. 2020, 509, 150–163.

- Ding, I.J.; Hsieh, M.C. A hand gesture action-based emotion recognition system by 3D image sensor information derived from Leap Motion sensors for the specific group with restlessness emotion problems. Microsyst. Technol. 2020, 28, 403–415.

- Melinte, D.O.; Vladareanu, L. Facial expressions recognition for human–robot interaction using deep convolutional neural networks with rectified adam optimizer. Sensors 2020, 20, 2393.

- Shu, L.; Yu, Y.; Chen, W.; Hua, H.; Li, Q.; Jin, J.; Xu, X. Wearable emotion recognition using heart rate data from a smart bracelet. Sensors 2020, 20, 718.

- Uddin, M.Z.; Nilsson, E.G. Emotion recognition using speech and neural structured learning to facilitate edge intelligence. Eng. Appl. Artif. Intell. 2020, 94, 103775.

- Yang, J.; Wang, R.; Guan, X.; Hassan, M.M.; Almogren, A.; Alsanad, A. AI-enabled emotion-aware robot: The fusion of smart clothing, edge clouds and robotics. Future Gener. Comput. Syst. 2020, 102, 701–709.

- Zvarevashe, K.; Olugbara, O. Ensemble learning of hybrid acoustic features for speech emotion recognition. Algorithms 2020, 13, 70.

- Kumar, A.; Sharma, K.; Sharma, A. MEmoR: A multimodal emotion recognition using affective biomarkers for smart prediction of emotional health for people analytics in smart industries. Image Vis. Comput. 2022, 123, 104483.

- Hsu, S.M.; Chen, S.H.; Huang, T.R. Personal Resilience Can Be Well Estimated from Heart Rate Variability and Paralinguistic Features during Human–Robot Conversations. Sensors 2021, 21, 5844.

- D’Onofrio, G.; Fiorini, L.; Sorrentino, A.; Russo, S.; Ciccone, F.; Giuliani, F.; Sancarlo, D.; Cavallo, F. Emotion Recognizing by a Robotic Solution Initiative (EMOTIVE Project). Sensors 2022, 22, 2861.

- Modi, S.; Bohara, M.H. Facial emotion recognition using convolution neural network. In Proceedings of the 2021 5th International Conference on Intelligent Computing and Control Systems (ICICCS), Madurai, India, 6–8 May 2021; pp. 1339–1344.

- Chang, Y.; Sun, L. EEG-Based Emotion Recognition for Modulating Social-Aware Robot Navigation. In Proceedings of the 2021 43rd Annual International Conference of the IEEE Engineering in Medicine & Biology Society (EMBC), Virtual, 1–5 November 2021; pp. 5709–5712.

- Mittal, T.; Bhattacharya, U.; Chandra, R.; Bera, A.; Manocha, D. M3ER: Multiplicative multimodal emotion recognition using facial, textual, and speech cues. In Proceedings of the AAAI Conference on Artificial Intelligence, Virtual, 25–29 October 2021; Volume 34, pp. 1359–1367.

- Tuncer, T.; Dogan, S.; Subasi, A. A new fractal pattern feature generation function based emotion recognition method using EEG. Chaos Solitons Fractals 2021, 144, 110671.

- Nimmagadda, R.; Arora, K.; Martin, M.V. Emotion recognition models for companion robots. J. Supercomput. 2022, 78, 13710–13727.

- Zhao, Y.; Xu, K.; Wang, H.; Li, B.; Qiao, M.; Shi, H. MEC-enabled hierarchical emotion recognition and perturbation-aware defense in smart cities. IEEE Internet Things J. 2021, 8, 16933–16945.

- Ilyas, O. Pseudo-colored rate map representation for speech emotion recognition. Biomed. Signal Process. Control 2021, 66, 102502.

- Martínez-Tejada, L.A.; Maruyama, Y.; Yoshimura, N.; Koike, Y. Analysis of personality and EEG features in emotion recognition using machine learning techniques to classify arousal and valence labels. Mach. Learn. Knowl. Extr. 2020, 2, 7.

- Filippini, C.; Perpetuini, D.; Cardone, D.; Merla, A. Improving Human–Robot Interaction by Enhancing NAO Robot Awareness of Human Facial Expression. Sensors 2021, 21, 6438.

- Hefter, E.; Perry, C.; Coiro, N.; Parsons, H.; Zhu, S.; Li, C. Development of a Multi-sensor Emotional Response System for Social Robots. In Interactive Collaborative Robotics; Springer: Cham, Switzerland, 2021; pp. 88–99.

- Shan, Y.; Li, S.; Chen, T. Respiratory signal and human stress: Non-contact detection of stress with a low-cost depth sensing camera. Int. J. Mach. Learn. Cybern. 2020, 11, 1825–1837.

- Gümüslü, E.; Erol Barkana, D.; Köse, H. Emotion recognition using EEG and physiological data for robot-assisted rehabilitation systems. In Proceedings of the Companion Publication of the 2020 International Conference on Multimodal Interaction, Virtual, 25–29 October 2021; pp. 379–387.

- Mocanu, B.; Tapu, R. Speech Emotion Recognition using GhostVLAD and Sentiment Metric Learning. In Proceedings of the 2021 12th International Symposium on Image and Signal Processing and Analysis (ISPA), Zagreb, Croatia, 13–15 September 2021; pp. 126–130.

- Hossain, M.Z.; Daskalaki, E.; Brüstle, A.; Desborough, J.; Lueck, C.J.; Suominen, H. The role of machine learning in developing non-magnetic resonance imaging based biomarkers for multiple sclerosis: A systematic review. BMC Med. Inform. Decis. Mak. 2022, 22, 242.

- Lam, J.S.; Hasan, M.R.; Ahmed, K.A.; Hossain, M.Z. Machine Learning to Diagnose Neurodegenerative Multiple Sclerosis Disease. In Asian Conference on Intelligent Information and Database Systems; Springer: Singapore, 2022; pp. 251–262.

- Deng, J.; Hasan, M.R.; Mahmud, M.; Hasan, M.M.; Ahmed, K.A.; Hossain, M.Z. Diagnosing Autism Spectrum Disorder Using Ensemble 3D-CNN: A Preliminary Study. In Proceedings of the 2022 IEEE International Conference on Image Processing (ICIP), Bordeaux, France, 16–19 October 2022; pp. 3480–3484.

- Altun, K.; MacLean, K.E. Recognizing affect in human touch of a robot. Pattern Recognit. Lett. 2015, 66, 31–40. [Google Scholar] [CrossRef]

- Mohammadi, Z.; Frounchi, J.; Amiri, M. Wavelet-based emotion recognition system using EEG signal. Neural Comput. Appl. 2017, 28, 1985–1990. [Google Scholar] [CrossRef]

- Wiem, M.B.H.; Lachiri, Z. Emotion assessing using valence-arousal evaluation based on peripheral physiological signals and support vector machine. In Proceedings of the 2016 4th International Conference on Control Engineering & Information Technology (CEIT), Hammamet, Tunisia, 16–18 December 2016; pp. 1–5. [Google Scholar]

- Wiem, M.B.H.; Lachiri, Z. Emotion recognition system based on physiological signals with Raspberry Pi III implementation. In Proceedings of the 2017 3rd International Conference on Frontiers of Signal Processing (ICFSP), Paris, France, 6–8 September 2017; pp. 20–24. [Google Scholar]

- Wiem, M.B.H.; Lachiri, Z. Emotion sensing from physiological signals using three defined areas in arousal-valence model. In Proceedings of the 2017 International Conference on Control, Automation and Diagnosis (ICCAD), Hammamet, Tunisia, 19–21 January 2017; pp. 219–223. [Google Scholar]

- Yonezawa, T.; Mase, H.; Yamazoe, H.; Joe, K. Estimating emotion of user via communicative stuffed-toy device with pressure sensors using fuzzy reasoning. In Proceedings of the 2017 14th International Conference on Ubiquitous Robots and Ambient Intelligence (URAI), Jeju, Korea, 28 June–1 July 2017; pp. 916–921. [Google Scholar]

- Alazrai, R.; Homoud, R.; Alwanni, H.; Daoud, M.I. EEG-based emotion recognition using quadratic time-frequency distribution. Sensors 2018, 18, 2739.

- Bazgir, O.; Mohammadi, Z.; Habibi, S.A.H. Emotion recognition with machine learning using EEG signals. In Proceedings of the 2018 25th National and 3rd International Iranian Conference on Biomedical Engineering (ICBME), Qom, Iran, 29–30 November 2018; pp. 1–5.

- Henia, W.M.B.; Lachiri, Z. Emotion classification in arousal-valence dimension using discrete affective keywords tagging. In Proceedings of the 2017 International Conference on Engineering & MIS (ICEMIS), Monastir, Tunisia, 8–10 May 2017; pp. 1–6.

- Marinoiu, E.; Zanfir, M.; Olaru, V.; Sminchisescu, C. 3D human sensing, action and emotion recognition in robot assisted therapy of children with autism. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 2158–2167.

- Henia, W.M.B.; Lachiri, Z. Multiclass SVM for affect recognition with hardware implementation. In Proceedings of the 2018 15th International Multi-Conference on Systems, Signals & Devices (SSD), Yasmine Hammamet, Tunisia, 19–22 March 2018; pp. 480–485.

- Salama, E.S.; El-Khoribi, R.A.; Shoman, M.E.; Shalaby, M.A.W. EEG-based emotion recognition using 3D convolutional neural networks. Int. J. Adv. Comput. Sci. Appl. 2018, 9, 329–337.

- Pandey, P.; Seeja, K. Subject independent emotion recognition from EEG using VMD and deep learning. J. King Saud Univ.-Comput. Inf. Sci. 2019, 34, 1730–1738.

- Su, Y.; Li, W.; Bi, N.; Lv, Z. Adolescents environmental emotion perception by integrating EEG and eye movements. Front. Neurorobotics 2019, 13, 46.

- Ullah, H.; Uzair, M.; Mahmood, A.; Ullah, M.; Khan, S.D.; Cheikh, F.A. Internal emotion classification using EEG signal with sparse discriminative ensemble. IEEE Access 2019, 7, 40144–40153.

- Yin, G.; Sun, S.; Zhang, H.; Yu, D.; Li, C.; Zhang, K.; Zou, N. User Independent Emotion Recognition with Residual Signal-Image Network. In Proceedings of the 2019 IEEE International Conference on Image Processing (ICIP), Taipei, Taiwan, 22–25 September 2019; pp. 3277–3281.

- Algarni, M.; Saeed, F.; Al-Hadhrami, T.; Ghabban, F.; Al-Sarem, M. Deep Learning-Based Approach for Emotion Recognition Using Electroencephalography (EEG) Signals Using Bi-Directional Long Short-Term Memory (Bi-LSTM). Sensors 2022, 22, 2976.

- Panahi, F.; Rashidi, S.; Sheikhani, A. Application of fractional Fourier transform in feature extraction from ELECTROCARDIOGRAM and GALVANIC SKIN RESPONSE for emotion recognition. Biomed. Signal Process. Control 2021, 69, 102863.

- Raschka, S. When Does Deep Learning Work Better Than SVMs or Random Forests®? 2016. Available online: https://www.kdnuggets.com/2016/04/deep-learning-vs-svm-random-forest.html (accessed on 19 December 2022).

- Ugail, H.; Al-dahoud, A. A genuine smile is indeed in the eyes–The computer aided non-invasive analysis of the exact weight distribution of human smiles across the face. Adv. Eng. Inform. 2019, 42, 100967.

- Heaven, D. Why faces don’t always tell the truth about feelings. Nature 2020, 578, 502–505.

- Ball, T.; Kern, M.; Mutschler, I.; Aertsen, A.; Schulze-Bonhage, A. Signal quality of simultaneously recorded invasive and non-invasive EEG. Neuroimage 2009, 46, 708–716.

- Sokolov, S. Neural Network Based Multimodal Emotion Estimation. ICAS 2018 2018, 12, 4–7.

| Authors | Participant No. | Source | Dataset | Methods | Highest Accuracy (%) |
|---|---|---|---|---|---|
| Latif et al. [77] | 1 | Skin | ST | SVM | 63.5 |
| Fan et al. [78] | 16 | Brain | EEG | KNN | 86 |
| Khezri et al. [79] | 25 | Fusion | EEG, EMG | SVM | 82.7 |
| Tivatansakul et al. [80] | 8 | Heart | ECG | KNN | 95.25 |
| Boccanfuso et al. [81] | 10 | Skin | ST, EDA | SVM | 77.5 |
| Ruiz-Garcia et al. [82] | 188 | Imaging | RGB | NN | 96.93 |
| Mehmood et al. [74] | 21 | Brain | EEG | DT | 76.6 |
| Mohammadpour et al. [83] | 32 | Brain | EEG | NN | 59.19 |
| Lowe et al. [84] | 64 | Tactile | Tactile | SVM | 22.3 |
| Noor et al. [85] | 44 | Fusion | SA, RGB | KNN | 96.67 |
| Ruiz-Garcia et al. [86] | 70 | Imaging | RGB | Fusion | 96.26 |
| Wei et al. [87] | 30 | Fusion | EEG, ECG, Resp., EDA | SVM | 84.60 |
| Wei et al. [88] | 27 | Fusion | EEG, ECG, Resp., EDA | SVM | 84.62 |
| Goulart et al. [89] | 28 | Imaging | RGB, ST | LDA | 85.75 |
| Gu et al. [90] | 14 | Fusion | Motion, RGB, Radio | KNN | 84.8 |
| Huang et al. [91] | 487 | Speech | SA | Fusion | 94.50 |
| Ilyas et al. [92] | 221 | Imaging | RGB | Fusion | 91 |
| Lopez-Rincon et al. [93] | 1192 | Imaging | RGB | NN | 44.9 |
| Ma et al. [94] | 52 | Fusion | SA, RGB | NN | 86.89 |
| Mithbavkar et al. [95] | 1 | Muscle | EMG | NN | 99.69 |
| Rahim et al. [96] | 40 | Fusion | ECG, GSR | NN | 93 |
| Taran et al. [97] | 20 | Brain | EEG | SVM | 93.13 |
| Balan et al. [98] | 8 | Fusion | EEG, HR, EDA | KNN | 99.5 |
| Chen et al. [99] | 4 | Speech | SA | DT | 87.85 |
| Ding et al. [100] | 4 | Imaging | 3D | KNN | 92.8 |
| Melinte et al. [101] | 24,336 | Imaging | RGB | NN | 90.14 |
| Shu et al. [102] | 25 | Heart | HR | DT | 84 |
| Uddin et al. [103] | 339 | Speech | SA | NN | 93 |
| Yang et al. [104] | 3 | Imaging | RGB | NN | 99.9 |
| Zvarevashe et al. [105] | 28 | Speech | SA | DT | 99.55 |
| Ahmed et al. [43] | 30 | Imaging | RGB | LDA | 94.67 |
| Kumar et al. [106] | 94 | Imaging | RGB | NN | 91.02 |
| Hsu et al. [107] | 32 | Fusion | GSR, SA, ECG | KNN | 86 |
| D’Onofrio et al. [108] | 27 | Imaging | RGB | DT | 99 |
| Modi et al. [109] | Sim. | Imaging | RGB | NN | 82.5 |
| Chang et al. [110] | Sim. | Brain | EEG | LDA | 99.44 |
| Mittal et al. [111] | 10 | Fusion | RGB, SA | NN | 89 |
| Tuncer et al. [112] | Sim. | Brain | EEG | SVM | 99.82 |
| Nimmagadda et al. [113] | Sim. | Imaging | RGB | NN | 80.6 |
| Zhao et al. [114] | 12 | Imaging | RGB | FGSM | 93.31 |
| Ilyas et al. [115] | 10 | Speech | SA | NN | 91.32 |
| Martínez-Tejada et al. [116] | 40 | Fusion | EEG | NN | 89 |
| Filippini et al. [117] | 24 | Imaging | RGB | NN | 91 |
| Hefter et al. [118] | 70,000 | Imaging | RGB | NN | 93 |
| Shan et al. [119] | 84 | Lungs | KINECT | SVM | 99.67 |
| Gümüslü et al. [120] | 15 | Fusion | EEG, BVP, ST, SC | Fusion | 94.58 |
| Mocanu et al. [121] | 24 | Speech | SA | NN | 83.95 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Khan, M.A.R.; Rostov, M.; Rahman, J.S.; Ahmed, K.A.; Hossain, M.Z. Assessing the Applicability of Machine Learning Models for Robotic Emotion Monitoring: A Survey. Appl. Sci. 2023, 13, 387. https://doi.org/10.3390/app13010387
