LSTM-Based Transformer for Transfer Passenger Flow Forecasting between Transportation Integrated Hubs in Urban Agglomeration

Abstract

1. Introduction

- (1)

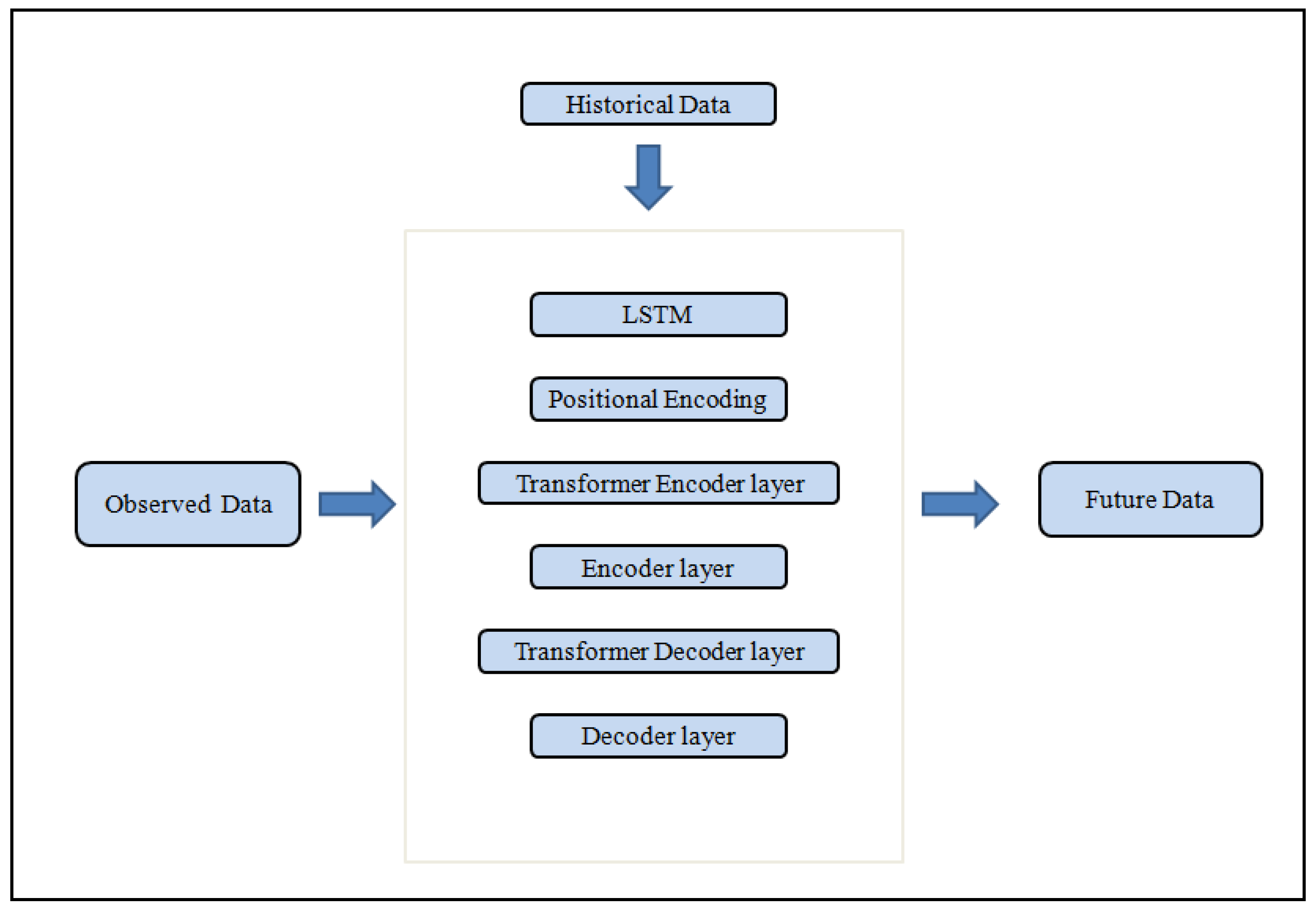

- For short-term prediction of inter-hub transfer passengers in the urban agglomeration, the transformer framework based on attention mechanisms is applied. A more valuable and adaptable prediction method is created for large-scale time series prediction of interchange passengers with notable temporal characteristics.

- (2)

- To resolve the long-time dependence in the time series prediction process and to turn the historical traffic passenger sequences into vectors that the transformer can recognize, the LSTM is utilized to pre-process the historical transfer passenger data.

- (3)

- The passenger transfer between two significant passenger hubs in the Beijing-Tianjin-Hebei urban agglomeration is forecasted using the LSTM-based transformer based on 10–23 May 2021, and the forecast results serve as a reference point for increasing the operational effectiveness of the multimodal transportation system.
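Contributions (1) and (2) rest on attention operating over a sequence of embedded time steps. As a minimal sketch of that idea, and not the authors' model, the following computes scaled dot-product self-attention in plain Python. The embedding values are made up, the query/key/value projections are taken as identity for brevity, and the names `softmax`, `self_attention`, and `embedded_steps` are illustrative assumptions. In the setting described above, the input vectors would be produced by the LSTM rather than written by hand.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(sequence):
    """Scaled dot-product self-attention with identity Q/K/V projections.

    `sequence` is a list of equal-length vectors, one per time step.
    Returns (outputs, weights): weights[i][j] is how strongly step i
    attends to step j, and outputs[i] is the weighted average of all steps.
    """
    dim = len(sequence[0])
    scale = math.sqrt(dim)
    outputs, weights = [], []
    for query in sequence:
        # Similarity of this step to every step, scaled by sqrt(dim)
        scores = [
            sum(q * k for q, k in zip(query, key)) / scale
            for key in sequence
        ]
        row = softmax(scores)
        weights.append(row)
        # Attention output: convex combination of all step vectors
        outputs.append([
            sum(w * value[d] for w, value in zip(row, sequence))
            for d in range(dim)
        ])
    return outputs, weights


# Hypothetical 2-dimensional embeddings for four consecutive time steps
embedded_steps = [[0.2, 0.1], [0.9, 0.4], [1.0, 0.5], [0.3, 0.1]]
outputs, weights = self_attention(embedded_steps)
```

Each row of `weights` sums to one, so every output vector is a weighted average of the whole input window. This is what lets a step draw directly on distant steps instead of passing information through a recurrent chain.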

2. Related Work

2.1. Recurrent Neural Networks, Long Short-Term Memory and Gated Recurrent Units

2.2. Attention Mechanism and Transformer

3. Methods

3.1. Methodological Architecture

3.2. Temporal Embedding with LSTM

3.3. Encoder

3.4. Decoder

3.5. Evaluation

4. Experiments and Results

4.1. Experimental Environment and Data Description

4.2. Data Pre-Processing and Training

4.3. Results

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Yue, M.; Ma, S. LSTM-Based Transformer for Transfer Passenger Flow Forecasting between Transportation Integrated Hubs in Urban Agglomeration. Appl. Sci. 2023, 13, 637. https://doi.org/10.3390/app13010637
