Abstract

Failure to wear a helmet is one of the main risk factors for severe outcomes in motorcycle traffic accidents. Wearing a certified motorcycle helmet can reduce the risk of head injuries by 69% and of fatalities by 42%. Existing systems detect helmet use very precisely, but they are not robust enough to guarantee a safe journey. We therefore propose an intelligent model for real-time helmet detection that uses training images from a camera mounted on the motorcycle and convolutional neural networks, which allow constant monitoring of the region of interest to identify helmet use. The resulting model identifies objectively and continuously whether the helmet is being worn during a journey, with an accuracy of 97.24%. We conclude that this new safety perspective provides a first approach to new preventive systems that help reduce accident rates for this means of transport. As future work, we propose improving the model with images of situations that could defeat helmet detection.

1. Introduction

Means of transport have been changing in recent years, and motorcycle use has been increasing as a result of the growth of a middle class looking for affordable vehicles and of urbanization, which creates a new demand for mobility that has mainly been met by the individual private transport industry [1]. Easy financing, better fuel economy, the ability to handle different terrains and congested traffic, and low maintenance costs are major factors driving their use in lower-middle-income countries [2,3]. This development inevitably also exposes users of this means of transport to negative consequences such as traffic accidents, in which the potential harm to motorcyclists and their passengers is usually high, since the motorcycle has no structure that protects them, as four-wheeled vehicles do [4].

Road traffic crashes cause more than 1.35 million deaths annually and are the leading cause of death among children and young adults; 28% of these deaths globally are users of two- or three-wheeled vehicles. Head and neck injuries are among the leading causes of death, serious injury and disability among motorcyclists, and are estimated to account for 50% of fatalities [5]. Correct helmet use is a proven, effective intervention that reduces the risk of fatal trauma by 42% and the risk of head trauma by 69% in road crashes [6,7]. Despite this, in low- to middle-income countries (LMIC) more than 50% of motorcyclists do not wear a helmet while riding. Several factors are related to this problem, such as helmet weight, thermal discomfort, auditory and visual effects, and cultural factors [8]. Globally, 94% of countries have a national law that requires motorcyclists to wear helmets, but in many countries loopholes in these laws potentially limit their effectiveness, so it can be inferred that merely enacting mandatory helmet laws does not lead to improved helmet-wearing behavior among motorcyclists [9,10].

The international community should continue promoting actions to improve road safety management and to implement, in other parts of the world, interventions that have had good results, taking into account cultural aspects that may affect their effectiveness [6]. In vehicle design, features and technology must be integrated that improve safety by avoiding or reducing the risk of injury to users [11]. Since 2006, organizations worldwide have collaborated on a series of manuals that provide guidance on implementing interventions to improve road safety; the first of these manuals, entitled “Helmets”, aimed to encourage helmet use by motorcyclists [12]. Similarly, in 2009 the Asian Injury Prevention Foundation (AIP Foundation) developed the “Global Helmet Vaccine Initiative” (GHVI), which is based on five strategies to increase helmet use [13]. Likewise, the International Transport Forum (ITF), which organizes a global dialogue for better transport, drafted a work for 2022 that formulates priority actions with the general objective of improving the safety of motorcyclists [14]. Currently, increasing the use of certified helmets is part of the action plan to improve road safety in the decade 2021–2030 [11].

Traditionally, helmet use by motorcyclists is checked by road safety personnel, who set up checkpoints and visually verify that riders are wearing helmets; otherwise, fines are imposed according to the legislation. This system can, however, be evaded by taking an alternate route [8]. For this reason, several studies have tried to solve the problem of non-use of helmets and thus reduce deaths and injuries in road accidents involving motorcycles.

Governments in different countries are trying to mitigate this problem by implementing flexible, effective and low-cost methods. Consequently, Intelligent Transportation Systems (ITS) have been developed; these are advanced applications that combine electronics, communication systems, computers and sensors. ITS integrate vehicles, their users and the roads to provide real-time information that increases safety in traffic systems. Various ITS for motorcycle and helmet detection have used different technologies, such as radar, sound sensors and optical sensors, among others [15]. The available technologies for motorcycle detection and recognition also face two major problems: computational capacity (since most of them are deployed on the road) and the reliability of detection [16]. One example is surveillance with video cameras installed at specific points on the roads to identify offenders who ride without helmets; these systems are passive and require human assistance, so their efficiency decreases over prolonged periods. Automating such systems can provide reliable and robust monitoring and significantly reduce the human resources needed to operate them [17]. However, since most streets have no cameras monitoring the traffic, installing cameras everywhere is not a viable or economically sustainable solution [18].

Related Work

In recent years, combining image processing techniques with intelligent models has proven to be a good approach to detecting helmets on motorcyclists, because machine learning algorithms, especially deep learning algorithms, can efficiently recognize a helmet in an image or video [17]. Forero et al. [16] propose analyzing traffic videos with an artificial intelligence model that uses a pre-trained convolutional neural network to classify motorcycles and detect helmet use from the morphology of the object; this model obtained an accuracy of 97.14% for motorcycle detection and 85.29% for helmet detection. Singh et al. [17] report the accuracy obtained in the training stage of models that differ in the machine learning algorithm used as classifier: K-nearest neighbors, decision trees, support vector machines and convolutional neural networks, the last of which delivered the best results, with 99.1% accuracy in classifying drivers with and without helmets. Rohith et al. [19] also analyze traffic videos, with a helmet detection model built on the pre-trained InceptionV3 convolutional neural network, and obtained 74% accuracy in the validation stage. Similarly, Shine et al. [20] propose identifying helmet use by analyzing traffic videos obtained from a fixed position, but with a custom convolutional neural network, reaching an accuracy of 96.98%. Lin et al. [21] propose a helmet-use detection model in which Inception V3 retraining is combined with the Multiple Transfer Learning (MTL) technique, achieving a final test accuracy of 80.6%.

Other proposals use object detection algorithms based on convolutional neural networks from the YOLO (You Only Look Once) series to detect helmets in images of urban traffic. Cheng et al. [22] use an algorithm called SAS-YOLOv3-tiny, which obtained 71.6% accuracy and 80.9% recall in its testing stage. Similarly, Jia et al. [23] detect the helmet with YOLOv5-HD, an improved version of YOLOv5, which is superior to its predecessors in speed and precision; in this work precision reaches 98.0% and recall 97.2%. Waris et al. [24] base their proposal on another deep learning algorithm, Faster R-CNN, which consists of two main modules, RPN and Fast R-CNN, that together locate the helmet in a given image; this work achieved an accuracy of 97.69%.

Other works that aim to improve motorcycle safety propose smart helmets. Mohd Rasli [25] proposes detecting the user’s head with a force sensor installed in the helmet; the signal is sent from a transmitter circuit to a receiver through a radio frequency module in order to control the ignition of the motorcycle and to sound a reminder to wear the helmet.

On the other hand, several ITS developed for four-wheeled vehicles can be adapted to motorcycles. One of them is the seat belt reminder system, which can be adapted to helmet use, since over the years reminder systems have played a vital role in increasing seat belt use [15]. Kashevnik et al. [26] propose detecting seat belt use by analyzing images from a camera inside the vehicle cabin. Their model is based on a neural network architecture named YOLO-Net and classifies the images into three categories (seat belt correctly fastened, seat belt fastened behind the back, and seat belt not fastened), reaching an accuracy of 93%.

The aim of this research work is to generate an artificial intelligence model for real-time detection of helmet use in motorcyclists, in order to improve the safety of these vehicles with prevention and not with the correction of the activity (helmet use). This intelligent model is based on the use of image processing and deep learning techniques.

The paper is organized as follows: Section 2 explains the materials and methods used for data acquisition through video capture. Section 3 presents the results obtained with the proposed methodology for real-time helmet detection. Section 4 discusses these results in order to highlight the contribution of the work in relation to the literature. Finally, Section 5 concludes and proposes future work to improve helmet detection.

2. Materials and Methods

This proposal bases the detection of helmet use on the analysis of images obtained with a monitoring system integrated into the motorcycle rather than with an external monitoring system, so that helmet use is monitored from the moment the driver intends to start the journey.

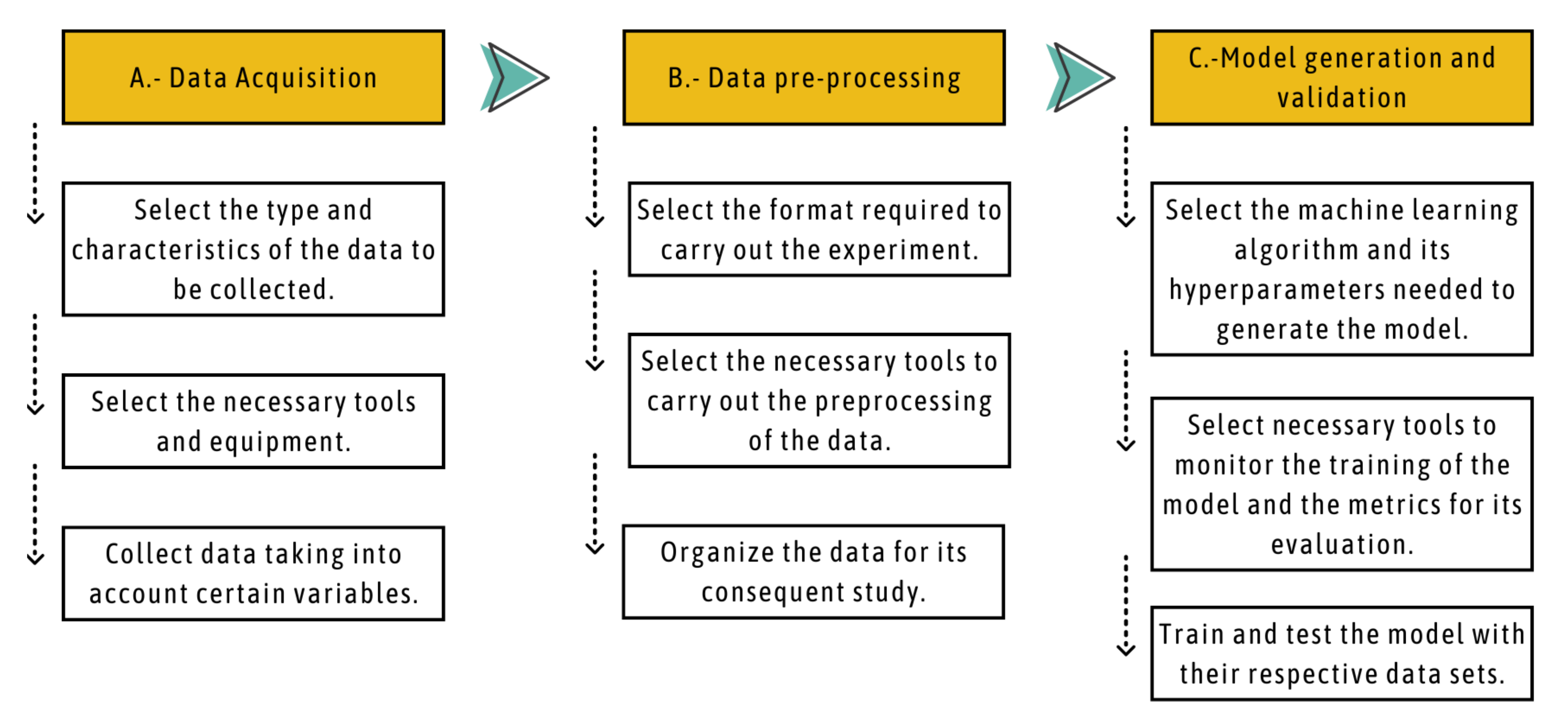

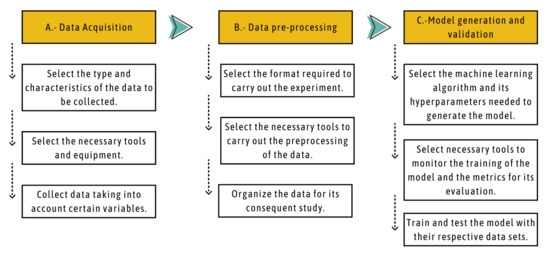

Figure 1 shows the methodology followed to develop the helmet detection project for motorcyclists. In the first stage, the videos that serve as input data are collected. In stage 2, data preprocessing consists of extracting a certain number of frames (images) from the videos. These are used in stage 3 for training and validation of the deep learning model, which classifies motorcyclists with and without helmets in real time; different deep learning techniques are integrated in this last stage. Each stage is explained in detail in the following subsections.

Figure 1.

Proposed methodology for the generation of an intelligent model for helmet detection in motorcyclists.

2.1. Data Acquisition

Data acquisition consists of measuring or capturing the information relevant to the research work [27]. In this proposal, images of people with and without helmets will be collected, since deep learning methods can work with audio data, images, etc., and automatically learn the representations needed to perform detection or classification tasks [28]. This phase will be divided into two parts, planning and execution.

In the planning part, a 2021 Vento “Rocketman” motorcycle was selected, Vento being the second best-selling motorcycle brand in Mexico, the geographical area where the study was conducted [29]. Next, a GoPro HERO5 Session 10 MP 4K Ultra HD Wi-Fi sports camera (4K Ultra HD, 3840 × 2160 pixels, 120 fps, 1280 × 720, 1920 × 1080, 2560 × 1440, 3840 × 2160 pixels, 720p, 960p, 1080p, 1440p, NTSC, PAL) was chosen to record the drivers, because it offers different capture modes and video settings, accepts a micro SD card for storing the generated files and is easy to mount on motorcycles. Table 1 presents the video configurations used in the experiments. Then, the study subjects were selected: 13 were students of the Autonomous University of Zacatecas, aged between 21 and 30 years, and the other 3 were residents of the state of Zacatecas, Mexico, aged between 30 and 41 years. In total, 16 participants took part, 7 women and 9 men, as a first approximation for a new approach to safety, bearing in mind that the proportion of male drivers in LMIC is usually greater than 90%, as can be seen in the data presented by Datos México [30]. Finally, it was determined that certified full-face and modular helmets would be used, because they are the safest helmets for motorcycle riders [31] and because they are in line with objective 7 planned by the World Health Organization [11].

Table 1.

Video settings of video camera GoPro Hero 5 Session.

In the execution part, since this proposal is intended to serve as the basis for an intelligent helmet-use monitoring system that is part of the motorcycle itself, the videos must be acquired from a position that meets two conditions: it must not obstruct the user’s field of vision, and it must not hide the data shown on the motorcycle’s dashboard. Accordingly, as shown in Figure 2, the camera will be installed on the handlebars of the motorcycle, beside the dashboard, at a height where it does not significantly affect the user’s field of vision.

Figure 2.

Position of the camera mounted on the motorcycle.

Once the position of the camera has been selected, the videos will be recorded. Before the capture starts, the participants will be instructed to try to start the motorcycle during the recording, as if the helmet-use monitoring system were already installed, so that the collected images are useful for the work.

To make the model robust, that is, able to classify helmet use correctly, some variety will be sought in the personal items worn by the participants, such as caps, face masks and sunglasses.

2.2. Data Pre-Processing

In this stage, the task is to extract a certain number of frames per second (FPS) in JPG format from the videos obtained in the data acquisition stage. For this purpose, it is necessary to develop a script that extracts these frames (images) from each video, written in Python with Jupyter Notebook, a web application for creating and sharing computational documents [32]. The pseudo code for this step is given below.

Pseudo code for extracting frames.

    # import the necessary libraries

    # define the constant SFPS
    SFPS = 4

    # define a function to format timedelta objects
    function formattimedelta(td):
        result = string(td)
        result, ms = result.split(".")
        ms = int(ms)
        ms = round(ms / 1e4)
        return f"{result}.{ms:02}"

    # define a function to get the list of durations where to save the frames
    function getsavingframesdurations(cap, savingfps):
        s = []
        # get clip duration with cv2 (clipduration)
        for i in range(0, clipduration, 1 / savingfps):
            s.append(i)
        return s

    # set the working directory
    # open the video file with functions of cv2
    # get the FPS of the video with functions of cv2 (fps)
    # set a variable to the minimum value of SFPS and the fps
    savingframespersecond = min(fps, SFPS)
    # get the list of duration spots to save (savingframesdurations)
    count = 0
    # start a loop that runs until there are no more frames to read
    while True:
        # read a frame
        if not is read:
            break
        frameduration = count / fps
        try:
            # get the earliest duration to save (closestduration)
        except:
            # the list is empty, all duration frames were saved
            break
        if frameduration >= closestduration:
            # save the frame (framedurationformatted)
            # name the frame
            # drop the duration spot from the list, since this duration spot is already saved
        count += 1
    # release the capture
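The sampling rule of this step can be illustrated without OpenCV. The following is a minimal sketch, assuming a clip with a constant frame rate; `saving_timestamps` and `frames_to_save` are hypothetical helper names, and the 60-frame, 30 fps clip in the usage lines is an illustrative value, not data from the experiments.

```python
def saving_timestamps(clip_duration, saving_fps):
    """Return the timestamps (in seconds) at which a frame should be saved."""
    step = 1.0 / saving_fps
    count = int(clip_duration * saving_fps)
    # Multiply instead of accumulating to avoid floating-point drift.
    return [k * step for k in range(count)]


def frames_to_save(total_frames, fps, sfps=4):
    """Return the indices of the frames kept when saving about `sfps` frames per second."""
    saving_fps = min(fps, sfps)            # never save more frames than the video has
    pending = saving_timestamps(total_frames / fps, saving_fps)
    kept = []
    for index in range(total_frames):      # stands in for reading frames one by one
        if not pending:
            break                          # every scheduled timestamp has been saved
        frame_time = index / fps
        if frame_time >= pending[0]:
            kept.append(index)
            pending.pop(0)                 # this timestamp is now covered
    return kept


# Hypothetical 2-second clip recorded at 30 fps, sampled at 4 frames per second.
kept = frames_to_save(total_frames=60, fps=30, sfps=4)
print(len(kept), kept)
```

Each saved frame is the first one whose timestamp reaches the next scheduled instant, so the spacing between saved frames alternates between 7 and 8 source frames when 30 is not a multiple of the sampling rate.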

Once the images have been extracted and labeled with their corresponding category, the database must be divided into training and test sets to guard against over-fitting, that is, a model that describes the training data almost perfectly without being acceptable on new data [33]. The pseudo code for this last data pre-processing step is shown below.

Pseudo code for separating data into training and test sets.

    # import the necessary libraries
    # set the path to the directory containing the files to be moved
    # get a list of all files in the directory
    # check the total of files in the directory
    # calculate the number of files to be moved (30% for test set)
    # start a for loop in the range of the number of files to move
    for each file to move:
        # choose a random file from the directory
        # set the source path to the chosen file
        # set the destination path to the target directory
        try:
            # move the file
        # if the source and destination are the same file
        except same-file error:
            print("Source and destination represent the same file.")
        # if there is a permission error
        except permission error:
            print("Permission denied.")
        # for any other error
        except:
            print("Error occurred while copying file.")
        print(aa)
    # print the number of files moved
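A runnable version of this split can be sketched with the Python standard library alone. This is a minimal sketch under stated assumptions: `move_test_split` is a hypothetical helper name, the files are drawn without replacement (so no file can be picked twice), and the usage lines operate on a temporary directory with empty placeholder files rather than on the real frames.

```python
import random
import shutil
import tempfile
from pathlib import Path


def move_test_split(source_dir, target_dir, test_fraction=0.30, seed=None):
    """Move a random `test_fraction` of the files in source_dir to target_dir."""
    source_dir, target_dir = Path(source_dir), Path(target_dir)
    target_dir.mkdir(parents=True, exist_ok=True)
    files = sorted(p for p in source_dir.iterdir() if p.is_file())
    n_move = int(len(files) * test_fraction)
    rng = random.Random(seed)
    moved = 0
    # Sampling without replacement guarantees that no file is chosen twice.
    for path in rng.sample(files, n_move):
        try:
            shutil.move(str(path), str(target_dir / path.name))
            moved += 1
        except PermissionError:
            print("Permission denied.")
        except OSError:
            print("Error occurred while moving file.")
    return moved


# Usage on a throwaway directory with 10 empty placeholder files.
with tempfile.TemporaryDirectory() as tmp:
    train_dir = Path(tmp) / "train"
    test_dir = Path(tmp) / "test"
    train_dir.mkdir()
    for k in range(10):
        (train_dir / f"frame_{k}.jpg").touch()
    moved = move_test_split(train_dir, test_dir, seed=0)
    remaining = len(list(train_dir.iterdir()))
    print(moved, remaining)
```

Running the function once per class directory keeps the class proportions of the training and test sets close to those of the full data set.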

As for hardware, all the data preprocessing and the model generation described in the following section will be carried out on a computer with the following characteristics: brand: Lenovo; model: IdeaPad 3 (15); processor: AMD Ryzen 7 5700U; RAM: 16 GB.

2.3. Model Generation and Validation

The use of deep learning tools such as Convolutional Neural Networks (CNN) has had excellent results in applications that deal with unstructured data such as images. This algorithm, which is based on the human visual system and takes its name from a linear mathematical operation between matrices called convolution [34], can recognize visual patterns directly from the pixels of an image [35].

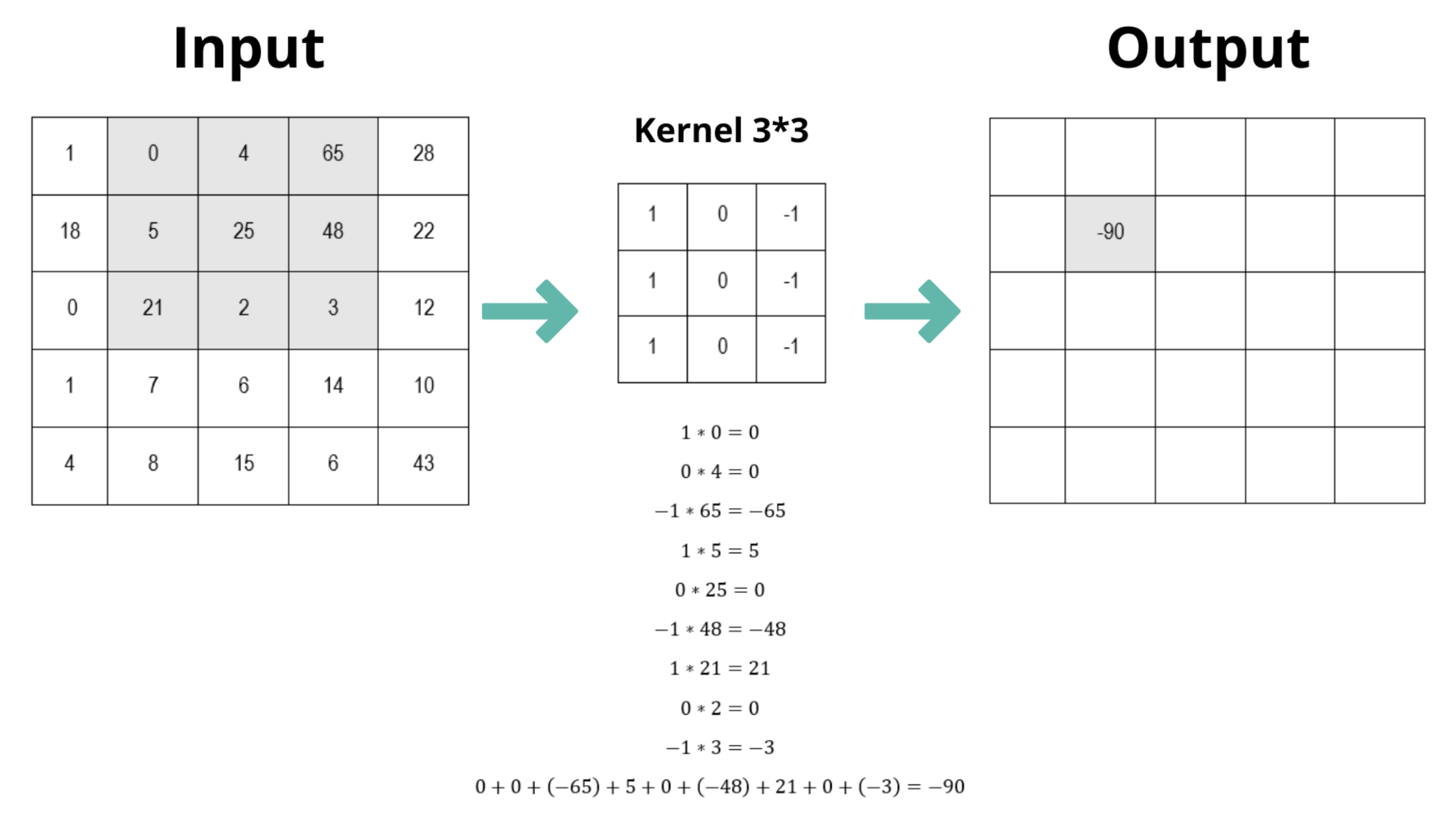

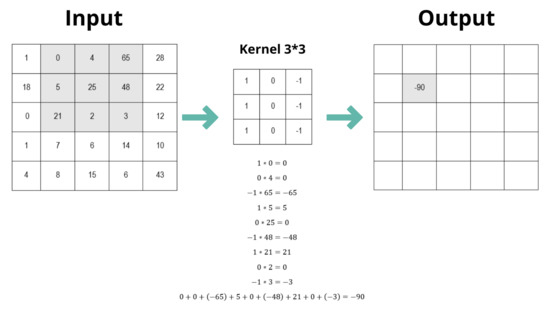

These networks (CNNs) typically contain three main components. The main one is the convolution operation, which takes a group of neighboring pixels from the input image and operates on them with a small matrix called a kernel. The kernel passes over the entire image and produces a new matrix that contains certain distinctive features of the image. An example of this operation is shown in Figure 3, and it is described mathematically in Equation (1),

where n refers to the number of elements, ∗ denotes the convolution operation, b is the bias of the outputs, W is a filter of size Kx × Ky and f is the activation function.
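A common way to write a convolutional layer that is consistent with these definitions is given below. It is a sketch of the usual form rather than a reproduction of the original equation: the symbols $x_i$ (the $i$-th input element or feature map), $W_i$ (the filter applied to it) and $y$ (the output) are assumed names.

$$
y = f\left(\sum_{i=1}^{n} x_i \ast W_i + b\right)
$$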

Figure 3.

Example of convolution operation.

Another important component is pooling operations, which reduce the size of images while preserving the most important features of the image; finally, we also have nonlinear activation operations called activation functions, which are necessary to avoid learning trivial linear representations of the image [36].
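The three components can be sketched in a few lines of plain Python. This is a minimal illustration, not the implementation used in this work: the function names are hypothetical, the toy image and kernel are chosen only to keep the arithmetic easy to follow, and the convolution is computed without flipping the kernel (cross-correlation), as deep learning libraries commonly do.

```python
def conv2d_valid(image, kernel, bias=0.0):
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    out = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            acc = bias
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            row.append(acc)
        out.append(row)
    return out


def relu(feature_map):
    """Apply max(0, x) element-wise."""
    return [[max(0.0, v) for v in row] for row in feature_map]


def max_pool_2x2(feature_map):
    """Keep the largest value of each non-overlapping 2 x 2 block."""
    return [
        [max(feature_map[r][c], feature_map[r][c + 1],
             feature_map[r + 1][c], feature_map[r + 1][c + 1])
         for c in range(0, len(feature_map[0]) - 1, 2)]
        for r in range(0, len(feature_map) - 1, 2)
    ]


# Toy 5 x 5 image with a vertical edge and a 2 x 2 kernel that responds to it.
image = [[0, 0, 1, 1, 1]] * 5
kernel = [[-1, 1],
          [-1, 1]]
features = max_pool_2x2(relu(conv2d_valid(image, kernel)))
print(features)
```

The convolution turns the 5 × 5 image into a 4 × 4 feature map that is non-zero only where the edge lies, and pooling halves each dimension while keeping that response.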

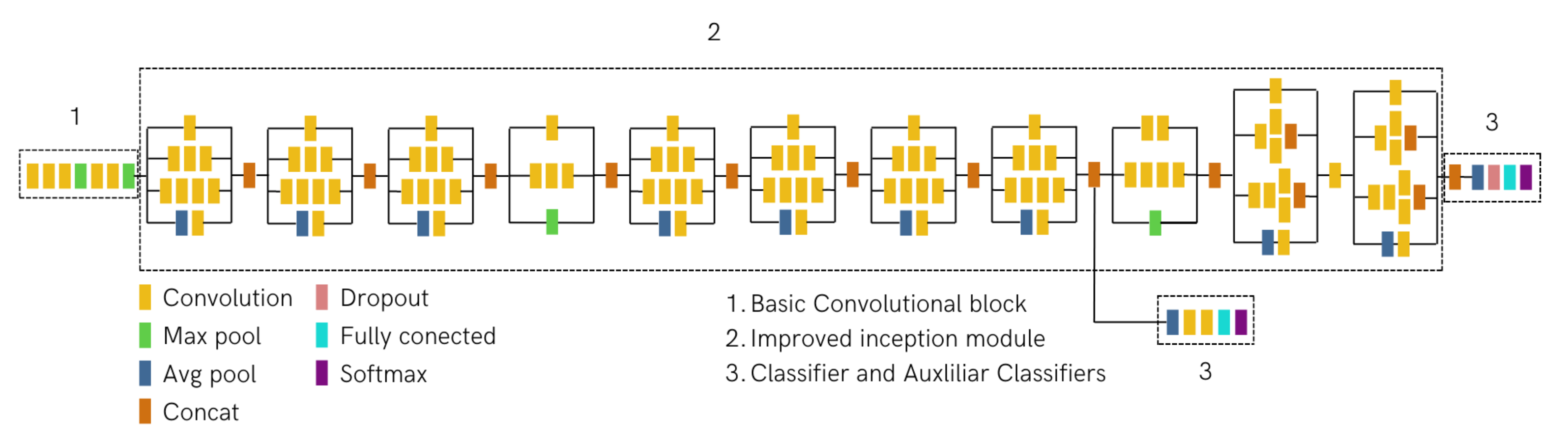

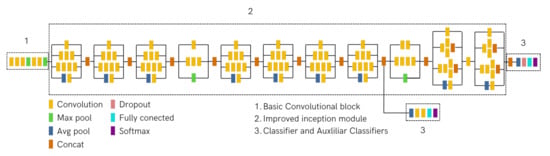

The proposed methodology will use TensorFlow [37], a second-generation technology developed by Google for implementing large-scale machine learning models, which supports deep learning models such as CNNs. Google has made different pre-trained models available to researchers, such as Inception v3, which has been shown to achieve greater than 78.1% accuracy on ImageNet, a data set of more than 1 million images [38]. One of the main characteristics of Inception networks, relevant where memory or computational capacity is limited, is that the computational cost of running them is much lower than that of other networks such as VGGNet or its successors. Table 2 shows the architecture of the Inception v3 CNN, and Figure 4 shows a high-level diagram of the model, in which three main sections can be identified: (1) the basic convolutional block used for feature extraction; (2) the improved Inception module, in which multi-scale convolutions are performed in parallel and the convolutional results of each branch are concatenated; and (3) the classifier [36,39].

Table 2.

Inception V3 architecture. In filter concat 1, each 5 × 5 convolution is replaced by two 3 × 3 convolutions; filter concat 2 follows the factorization of the n × n convolutions; and filter concat 3 has expanded filter bank outputs.

Figure 4.

High level diagram of Inception V3.

The Inception V3 network uses ReLU activation functions, which mitigate the vanishing gradient problem in back propagation during training and reduce the training time [40]. The equation of this function is shown below.
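In its standard form, ReLU returns its input when the input is positive and zero otherwise:

$$
f(x) = \max(0, x)
$$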

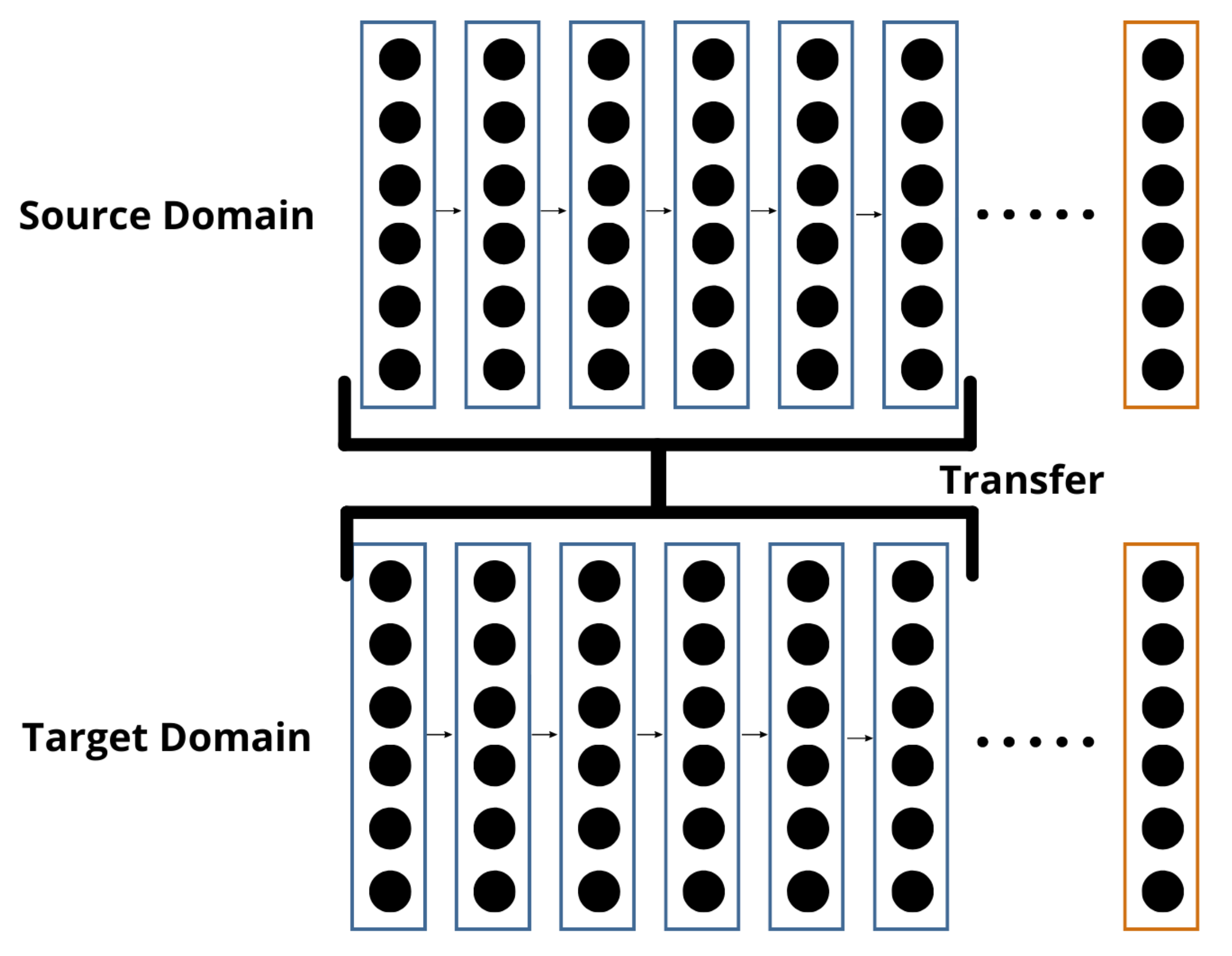

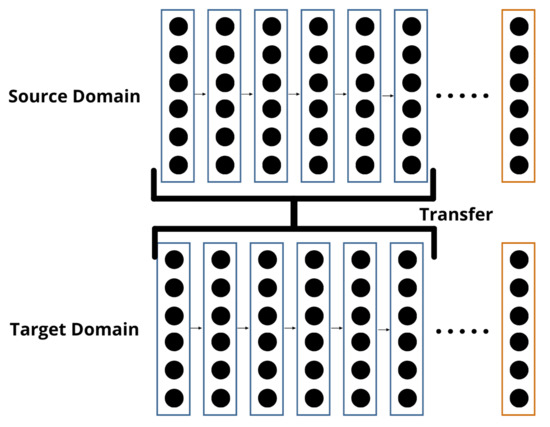

Once the CNN architecture has been established, the Transfer Learning technique will be applied. This technique, already validated by the scientific community and presented by Tan et al. [41], reduces the time and computational cost of training the helmet-use classification model, which are usually very high when a deep learning model is trained from scratch. Transfer learning tries to transfer what has been learned in one domain to another domain. It is categorized into four groups, one of which is network-based transfer learning: the partial reuse of a network pre-trained in a certain domain, including its structure and connection parameters, as shown in Figure 5.

Figure 5.

Sketch map of network-based deep transfer learning.

As mentioned above, partial reuse of the network means that, for the experiment, the last layer will be modified to produce two possible outputs (with helmet and without helmet) through the sigmoid function in Equation (3), whose input can be any value between negative and positive infinity and whose output takes values only between zero and one.
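The standard sigmoid (logistic) function has the following form, where $z$ is an assumed name for the input to the last layer:

$$
\sigma(z) = \frac{1}{1 + e^{-z}}
$$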

This last layer will be trained with the back propagation algorithm, which can be broken down into four steps: (1) feed-forward computation, (2) back propagation to the output layer, (3) back propagation to the hidden layer, and (4) weight updates [42]. The weights will be adjusted using the cross-entropy cost function, which measures the error between the output of the last layer and the real value of the given example. The formula of the cross-entropy loss is shown in Equation (4),

where “x” is the actual value and “y” is the predicted value. For this experimentation, TensorFlow’s Adam optimizer will be used with a learning rate of 0.01. Adam is an algorithm for first-order gradient-based optimization of stochastic objective functions, based on adaptive estimates of lower-order moments, and it generally performs well on problems with large amounts of data or parameters [43]. In each training epoch, a batch of 200 images was used, which was sufficient to improve model performance. The retraining script will output a “.pb” file with the new modified classification model and a “.txt” file containing the new classes (“with helmet” and “without helmet”). Both files are in a format that Python can read. This InceptionV3 retraining is based on the work published by TensorFlow [44].
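How a sigmoid output, the cross-entropy loss and Adam fit together can be sketched on a single sigmoid unit in plain Python. This is an illustration under stated assumptions, not the TensorFlow retraining script: the binary form of the cross-entropy is assumed, the values of `beta1`, `beta2` and `eps` are the commonly used defaults (only the learning rate of 0.01 comes from the text), and the four-sample data set is hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def cross_entropy(x, y, eps=1e-12):
    """Binary cross-entropy for actual value x (0 or 1) and predicted value y in (0, 1)."""
    y = min(max(y, eps), 1.0 - eps)    # avoid log(0)
    return -(x * math.log(y) + (1 - x) * math.log(1 - y))


def adam_step(params, grads, m, v, t, lr=0.01, beta1=0.9, beta2=0.999, eps=1e-8):
    """One Adam update; m and v hold the running first and second moment estimates."""
    for k in range(len(params)):
        m[k] = beta1 * m[k] + (1 - beta1) * grads[k]
        v[k] = beta2 * v[k] + (1 - beta2) * grads[k] ** 2
        m_hat = m[k] / (1 - beta1 ** t)    # bias correction
        v_hat = v[k] / (1 - beta2 ** t)
        params[k] -= lr * m_hat / (math.sqrt(v_hat) + eps)


# Hypothetical one-feature data set: (feature, label), label 1 = "with helmet".
data = [(-2.0, 0), (-1.0, 0), (1.0, 1), (2.0, 1)]
params = [0.0, 0.0]                    # weight and bias of a single sigmoid unit
m, v = [0.0, 0.0], [0.0, 0.0]


def mean_loss():
    return sum(cross_entropy(x, sigmoid(params[0] * f + params[1]))
               for f, x in data) / len(data)


loss_before = mean_loss()
for t in range(1, 201):                # 200 full-batch updates
    grad_w = grad_b = 0.0
    for f, x in data:
        err = sigmoid(params[0] * f + params[1]) - x   # d(loss)/d(logit)
        grad_w += err * f / len(data)
        grad_b += err / len(data)
    adam_step(params, [grad_w, grad_b], m, v, t)
loss_after = mean_loss()
print(loss_before, loss_after)
```

Because Adam divides the averaged gradient by the square root of its averaged square, each early update moves the weight by roughly the learning rate regardless of the gradient scale.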

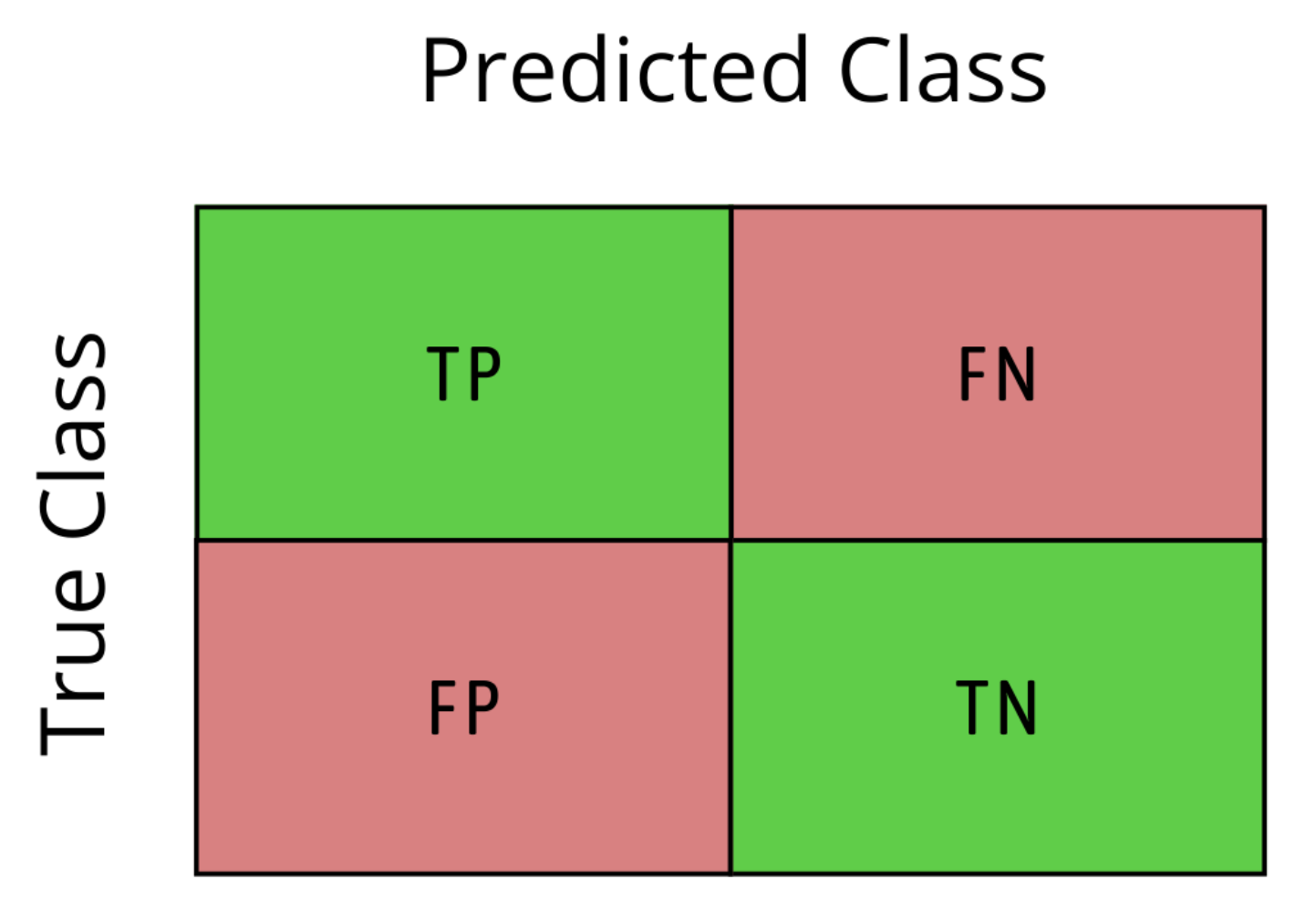

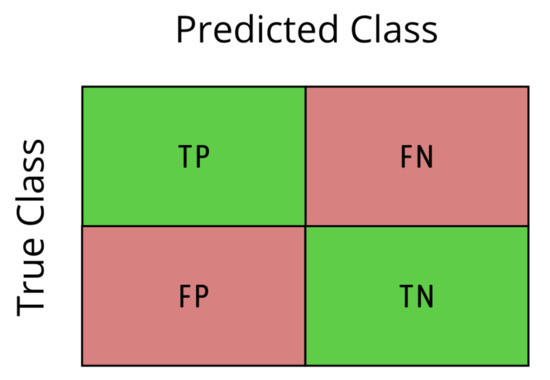

In the test phase, a new script will use the retrained model to generate helmet-use predictions on the set of test images, and five metrics (accuracy, sensitivity, specificity, Area Under the Curve (AUC) and precision) will measure the performance of the model in detecting helmeted and unhelmeted drivers. All these evaluation metrics are based on a table called the confusion matrix, in which the rows represent the actual values and the columns the predicted values [45]. An example of a two-class confusion matrix is shown in Figure 6.

Figure 6.

A two-class Confusion matrix.

TN refers to true negative results, TP to true positive results, FN to false negative results and FP to false positive results. The accuracy metric is the ratio of the number of correct results (true positives and true negatives) to the total number of samples, as shown in Equation (5):
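In terms of the confusion-matrix counts, accuracy takes the standard form below.

$$
\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN}
$$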

The sensitivity metric is the proportion of positive samples that are correctly classified as positive, and the specificity is the proportion of negative samples that are correctly classified as negative [46].
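Written with the same confusion-matrix counts, the standard definitions of these two metrics are:

$$
\text{Sensitivity} = \frac{TP}{TP + FN}, \qquad \text{Specificity} = \frac{TN}{TN + FP}
$$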

The Area Under the Curve (AUC) metric is the area under the Receiver Operating Characteristic (ROC) curve, which is a graphical representation of the performance of a classifier. The AUC takes values between 0 and 1, where values close to one indicate that the classifier performs well [45]. The AUC is calculated by trapezoidal integration [47], as shown in Equation (7).

where = and .
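Trapezoidal integration of a ROC curve can be sketched as follows. This is a minimal illustration with hypothetical values: `auc_trapezoid` is an assumed helper name, the ROC curve is given as lists of false positive rate (1 − specificity) and true positive rate (sensitivity), and the single operating point used in the example is not taken from the experiments.

```python
def auc_trapezoid(fpr, tpr):
    """Area under a ROC curve given matching FPR and TPR lists sorted by FPR."""
    area = 0.0
    for k in range(1, len(fpr)):
        width = fpr[k] - fpr[k - 1]
        mean_height = (tpr[k] + tpr[k - 1]) / 2.0
        area += width * mean_height
    return area


# Hypothetical classifier evaluated at a single decision threshold.
sensitivity, specificity = 0.90, 0.80
fpr = [0.0, 1.0 - specificity, 1.0]
tpr = [0.0, sensitivity, 1.0]
auc = auc_trapezoid(fpr, tpr)
print(auc)
```

When the curve has only one operating point between (0, 0) and (1, 1), the trapezoidal rule reduces to the mean of sensitivity and specificity, and the diagonal of a random classifier gives an area of 0.5.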

The last evaluation criterion is precision, presented in Equation (9).
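Precision is conventionally defined as TP / (TP + FP), the proportion of positive predictions that are correct. The sketch below computes it together with accuracy, sensitivity and specificity from the four confusion-matrix counts; `classification_metrics` is a hypothetical helper name and the counts are illustrative, not results of this work.

```python
def classification_metrics(tp, tn, fp, fn):
    """Return accuracy, sensitivity, specificity and precision from confusion-matrix counts."""
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "sensitivity": tp / (tp + fn),
        "specificity": tn / (tn + fp),
        "precision": tp / (tp + fp),
    }


# Hypothetical counts, not taken from the experiments.
metrics = classification_metrics(tp=90, tn=80, fp=20, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```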

The pseudo code corresponding to this model testing stage is presented below.

Pseudo code for testing the model.

    # import the necessary libraries
    # get the path of the model and the labels
    # load the label file
    # load the graph file
    # load the paths and classes of the images contained in the test set
    # save the paths in the variable "paths"
    # save the classes in the variable "realout"
    # get the size of the test set
    rows = paths.size
    # create an empty vector to save the paths of the incorrect predictions
    pathsinc = []
    # create a vector the size of the test set for the predictions
    predictout = np.zeros(rows, dtype=int)
    # initialize "i" to control the position of the predictions
    i = 0
    # start a session for running TensorFlow operations
    with tf.Session() as sess:
        # get the last tensor of the model
        # start a loop that runs until there are no more images to load
        while i < rows:
            # get the path
            # prepare the image for model input
            # make the prediction
            # classify the prediction as class 0 or 1
            if predictions[0][0] > predictions[0][1]:
                # with helmet
                predictout[i] = 1
            if predictions[0][1] > predictions[0][0]:
                # without helmet
                predictout[i] = 0
            # print the predicted class and the real class to see how the model is working
            print("prediction # ", i, " ", predictout[i], " // ", realout[i])
            # if the prediction is incorrect, save the path of the image in the vector pathsinc
            if predictout[i] != realout[i]:
                pathsinc.append(paths[i])
            # update the variable i
            i = i + 1
    # export the predictions
    # export the paths of the errors
    # calculate the metrics
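The decision and bookkeeping part of this loop can be sketched independently of TensorFlow. The following is a minimal illustration with hypothetical file names, scores and labels; `to_class` is an assumed helper name, and a tie between the two scores is mapped to class 0, which matches a prediction vector initialized with zeros.

```python
def to_class(scores):
    """Map a pair of scores (with helmet, without helmet) to class 1 or 0."""
    with_helmet, without_helmet = scores
    return 1 if with_helmet > without_helmet else 0


# Hypothetical model outputs and ground-truth labels for four test images.
paths = ["a.jpg", "b.jpg", "c.jpg", "d.jpg"]
scores = [(0.9, 0.1), (0.2, 0.8), (0.6, 0.4), (0.3, 0.7)]
realout = [1, 0, 0, 0]

predictout = [to_class(s) for s in scores]
# Keep the paths of the misclassified images for later inspection.
pathsinc = [p for p, pred, real in zip(paths, predictout, realout) if pred != real]
print(predictout, pathsinc)
```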

3. Results

This section presents the experimental results obtained in the three phases of the proposed methodology.

3.1. Results of Data Acquisition

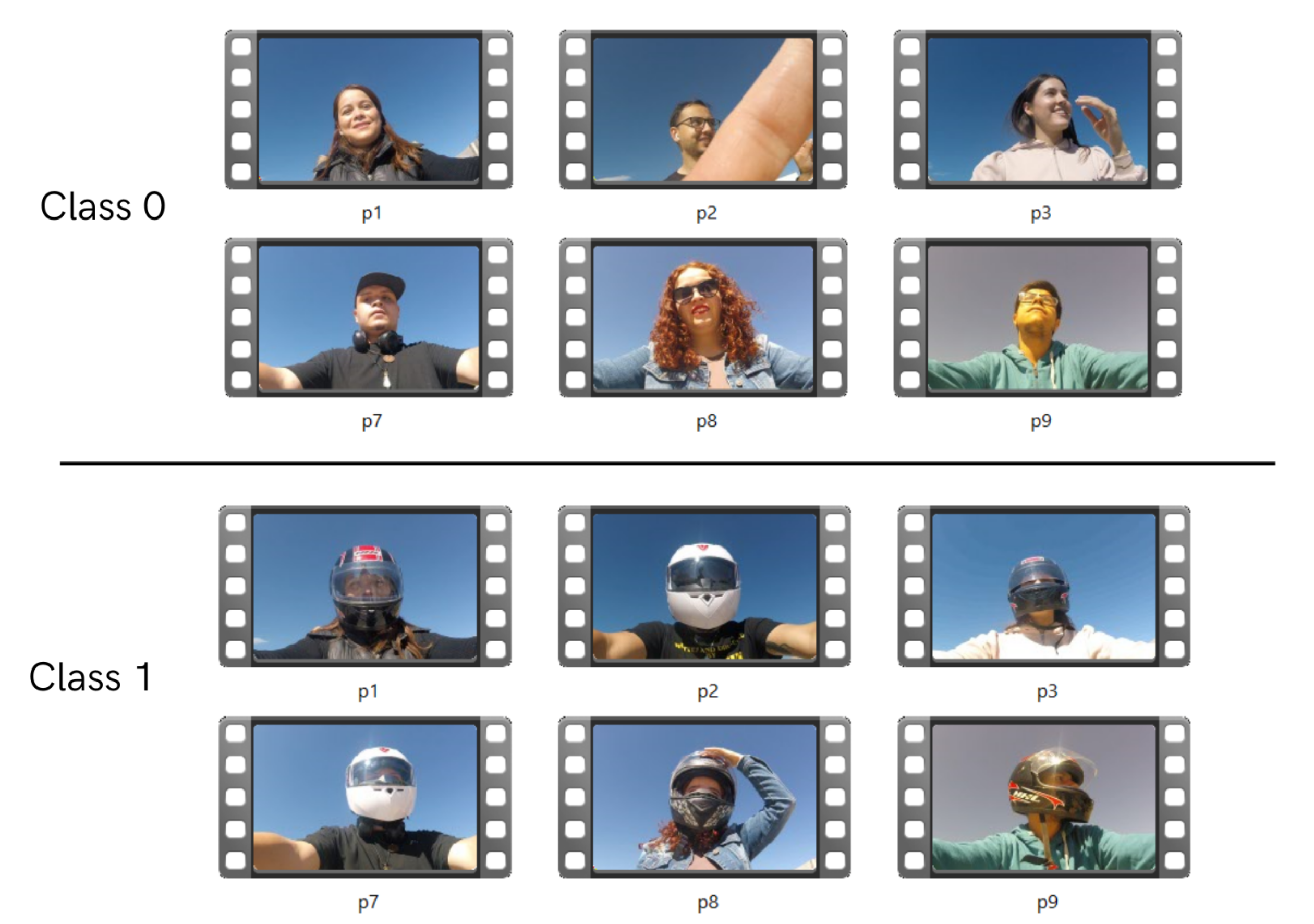

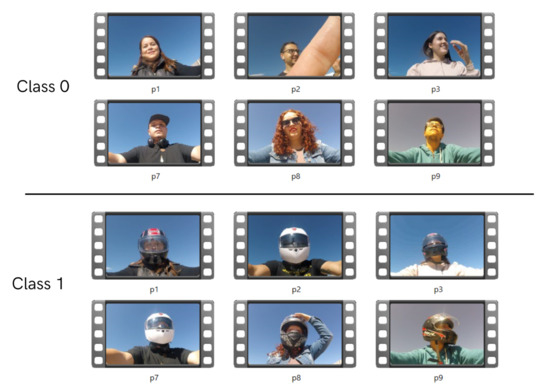

In this phase, 32 videos were collected: each of the 16 drivers took part in two recordings, one with a helmet and one without, which resulted in a database balanced between the two classes. The videos lasted approximately two minutes each and were saved in mp4 format with the aforementioned configuration. They were recorded in vehicle parking areas, both at the facilities of the Autonomous University of Zacatecas and at residences in Zacatecas, Mexico. Nine participants used full-face helmets and the remaining seven used modular (flip-up) helmets. The tests were carried out with the motorcycle stationary in order to keep the study subjects safe. Participants were also asked to simulate normal riding and different head movements, so that the data set covers different rider positions. Figure 7 shows an example of the collected videos by category.

Figure 7.

Example of collected videos.

3.2. Results of Data Pre-Processing

Four of the thirty frames available in each second of the recordings were extracted. A script then moved each of these images to a directory according to its class. Each image was named with the participant number, the code “cc” for a person with a helmet or “sc” for a person without a helmet, and the exact time in the recording of the extracted frame; the dimensions of the images remain 1920 by 1080 pixels. In total, 7465 images of people with helmets and 7680 images of people without helmets were obtained, 15,145 images altogether. Of this total, 70% was used for the model training phase and 30% for the test phase. Table 3 shows the distribution of these new sets.

Table 3.

Training and testing sets.

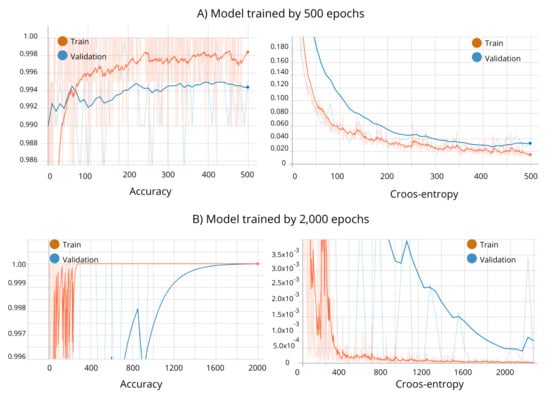

3.3. Results of Model Generation and Validation

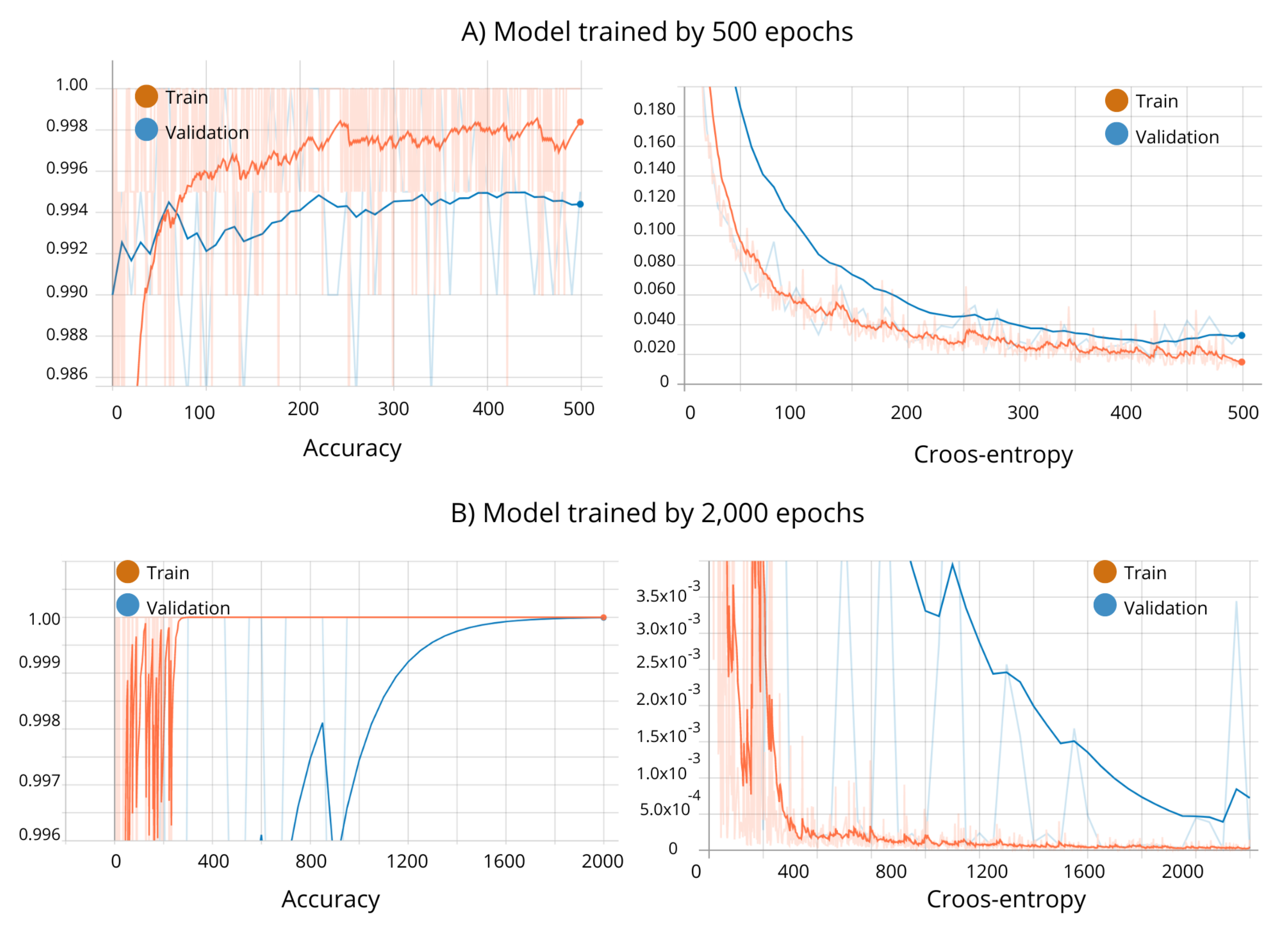

Two models were trained with the set of 10,602 images, differing in the number of epochs: 500 for the first and 2000 for the second. In this phase, the accuracy of the model and the cross-entropy were monitored every 10 epochs. As can be seen in Figure 8, the performance of both models is satisfactory, since the validation loss is higher than the training loss [48].

Figure 8.

Accuracy and cross-entropy monitoring in the model training stage.

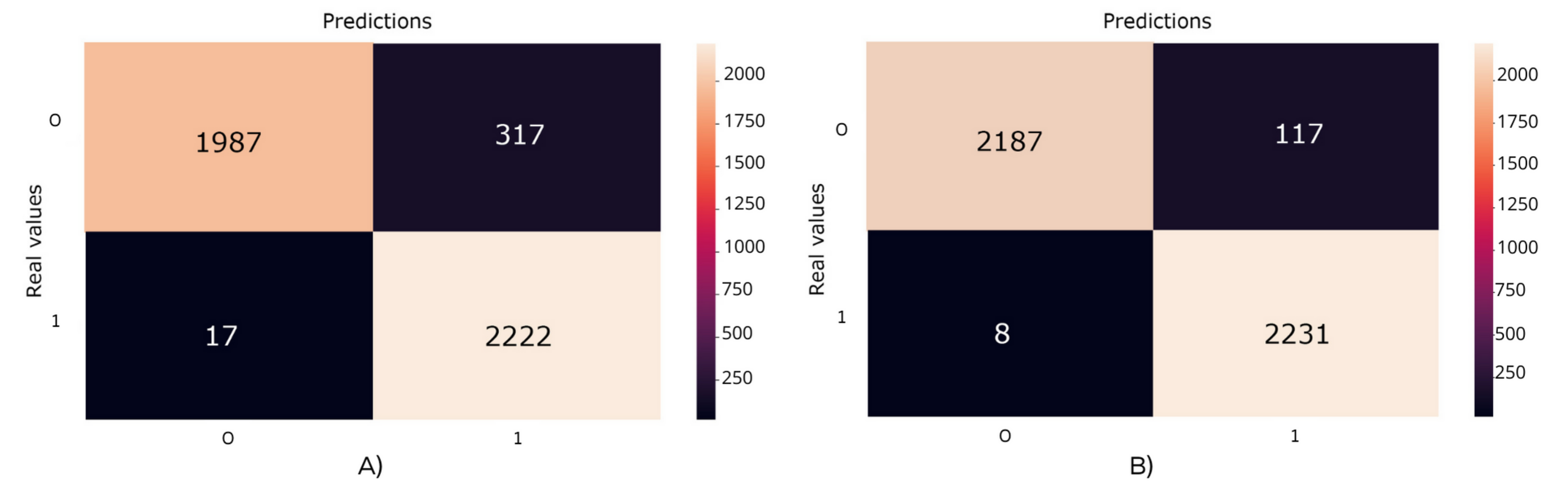

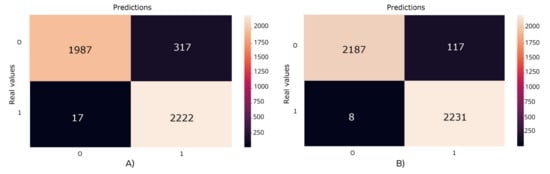

Figure 9A presents the confusion matrix obtained on the test set when helmeted and unhelmeted motorcyclists are classified with model 1, trained for 500 epochs, and Figure 9B shows the confusion matrix for model 2, trained for 2000 epochs. In the first confusion matrix, a total of 4209 images, equivalent to 92.64% of the test images, were correctly categorized: 1987 of study subjects without helmets and 2222 with helmets. In the second confusion matrix, a total of 4418 images were correctly classified, equivalent to 97.24% of the test set: 2187 of study subjects without helmets and the remaining 2231 with helmets.
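These percentages can be checked from the counts given in the text. The sketch below assumes that the test set is what remains of the 15,145 images after removing the 10,602 training images; the variable names are illustrative.

```python
total_images = 7465 + 7680          # with helmet + without helmet
train_images = 10_602               # images used for training
test_images = total_images - train_images

correct = {"model 1 (500 epochs)": 1987 + 2222,
           "model 2 (2000 epochs)": 2187 + 2231}
accuracy = {name: hits / test_images for name, hits in correct.items()}

print(total_images, test_images)
for name, value in accuracy.items():
    print(f"{name}: {100 * value:.3f}%")
```

Under this assumption the test set has 4543 images, and the resulting ratios agree with the reported 92.64% and 97.24% up to rounding in the last digit.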

Figure 9.

(A) Confusion matrix corresponding to Model 1 test (trained for 500 epochs). (B) Confusion matrix corresponding to Model 2 test (trained for 2000 epochs).

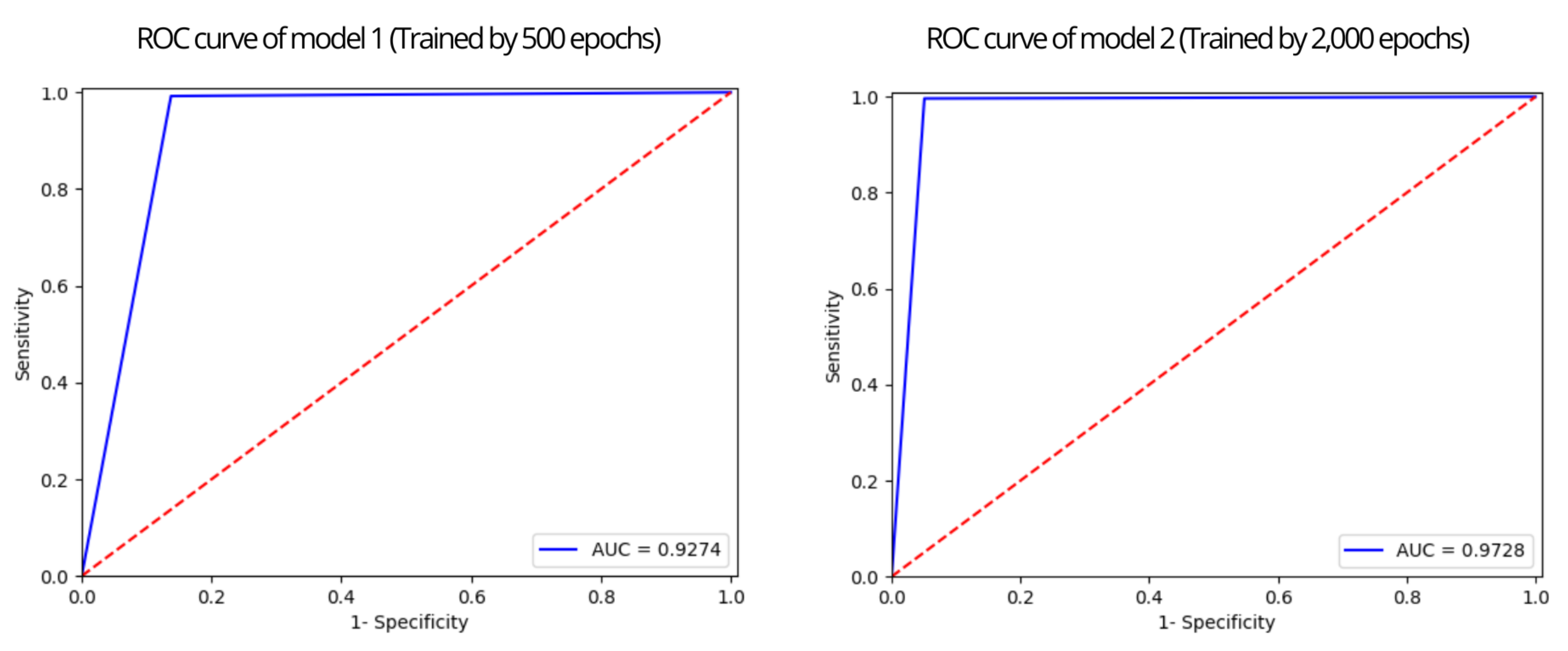

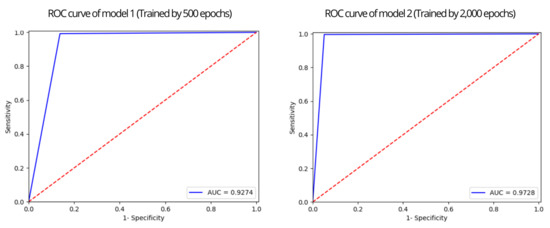

Figure 10 shows the two ROC curves obtained by evaluating the aforementioned models on the test set. The blue line shows the relation between the sensitivity and the specificity of each model, and the red line shows what the result would be if the model were very poor at classification. The respective AUC values are 0.9274 for the first model and 0.9728 for the second.

Figure 10.

ROC curves.
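
As an illustration of how a ROC curve and its AUC are obtained, the following minimal sketch sweeps the decision threshold over a set of classifier scores and integrates the resulting curve with the trapezoidal rule. The scores and labels are hypothetical toy values, the function names are illustrative, and treating "with helmet" as the positive class is an assumption.

```python
def roc_points(scores, labels):
    """Return (false positive rate, true positive rate) points for a threshold sweep."""
    pos = sum(labels)
    neg = len(labels) - pos
    ranked = sorted(zip(scores, labels), key=lambda t: -t[0])
    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(ranked):
        score = ranked[i][0]
        # Samples with the same score cross the threshold together.
        while i < len(ranked) and ranked[i][0] == score:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / neg, tp / pos))
    return points

def auc(points):
    """Area under a piecewise-linear curve given as (x, y) points (trapezoidal rule)."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2.0
    return area

# Hypothetical scores for the positive class and true labels (1 = positive).
scores = [0.95, 0.80, 0.70, 0.60, 0.40, 0.20]
labels = [1, 1, 0, 1, 0, 0]

toy_auc = auc(roc_points(scores, labels))
chance_auc = auc([(0.0, 0.0), (1.0, 1.0)])  # diagonal reference line
print(f"toy AUC={toy_auc:.4f}, chance AUC={chance_auc:.1f}")
```

The diagonal from (0, 0) to (1, 1) has an area of 0.5 and corresponds to a classifier with no discriminative ability, which is the role of the reference line in a ROC plot.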

Table 4 presents the Accuracy, Sensitivity, Specificity and Precision metrics obtained by evaluating the models on the test set.

Table 4.

Accuracy, Sensitivity, Specificity and Precision in the test set obtained by model.
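
The four metrics named in the caption follow directly from the entries of a binary confusion matrix. The sketch below uses the standard definitions; the counts are hypothetical and are not the values of Table 4 or Figure 9, and the choice of "with helmet" as the positive class is an assumption.

```python
def binary_metrics(tp, tn, fp, fn):
    """Standard metrics from true/false positives and negatives."""
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "sensitivity": tp / (tp + fn),   # true positive rate (recall)
        "specificity": tn / (tn + fp),   # true negative rate
        "precision": tp / (tp + fp),     # positive predictive value
    }

# Hypothetical counts for illustration only.
example = binary_metrics(tp=90, tn=80, fp=20, fn=10)
for name, value in example.items():
    print(f"{name}: {value:.4f}")
```

Accuracy summarizes both classes at once, while sensitivity and specificity report the hit rate for each class separately, which is why they are usually given together for a two-class problem such as this one.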

These results show that the proposed methodology makes it possible to detect the proper use of the helmet in real time in an objective and satisfactory manner, as supported by the values obtained for the different validation metrics. The best performance was obtained by Model 2, trained for 2000 epochs.

4. Discussion

Table 5 shows the results reported in the literature by different research proposals that use convolutional neural networks as a method of helmet detection, and compares them with the best model obtained in this work. As can be seen, the accuracy rates are similar in most of the works presented. However, Singh et al. [17] show a larger difference in classification with respect to the others. This is because their accuracy rate refers only to the value obtained in the training process of the model, whereas in our proposal it refers to the performance of the model in the testing stage, where images that the model has never seen are classified. This means that the neural network used in our proposal is better able to generalize between the established categories (with helmet and without helmet). In the case of Rohith et al. [19], despite the use of a training methodology based on an Inception V3 convolutional neural network, the number of images used to train and test the model is much smaller, which causes a drop in performance.

Table 5.

Comparison of results between related works and our proposed work by classification of helmeted and unhelmeted motorcyclists.

On the other hand, Shine et al. [20] report excellent performance using a segmentation process that first identifies the motorcycle and then detects the helmet. The disadvantage of their work is that the system is fixed in strategic areas, so it runs the risk of being circumvented in different ways, such as avoiding the monitored area or wearing the helmet only in those surveillance areas; in addition, monitoring is not constant throughout the driver’s journey. Finally, the test set of their model is 33% smaller than that of our proposal, which can affect the results of the validation metrics. An important advantage of using pre-trained models is that they can obtain good results if the experimentation scenario changes, whereas using a custom network in another scenario can produce results different from those previously obtained. In the work presented by Lin et al. [21], which uses the Inceptionv3 architecture together with the multi-task learning technique, the accuracy is lower because the proposal classifies helmet use for all the occupants of the motorcycle and not only for the driver, which makes it a multi-class problem. In the case of Cheng et al. [22], the accuracy of the model is clearly affected by the small amount of data available to train and validate the model. According to the results reported by Jia et al. [23], the YOLO series algorithms are a viable option for identifying helmet use in images where the number of elements present varies, as is the case in urban traffic images. Finally, Waris et al. [24] report metrics very similar to those achieved by our proposal, with the difference that 200,000 training epochs were needed for their model to reach its best result.

The comparison presented shows that this work, as a first approach, has strong points, as reflected in the values of the evaluation metrics. The main difference between our work and the existing proposals is that it is designed to become, in the future, part of a preventive system integrated into the motorcycle that detects the use of the helmet in real time. This would provide a preventive safety method with a high confidence index that helps prevent serious and even fatal head injuries by ensuring that the motorcyclist is wearing a helmet.

To end this discussion, several research efforts to date seek to improve the road safety of motorcyclists. However, the literature shows that these helmet detection systems focus on fixed video surveillance methods that can be easily circumvented. Such systems ultimately act as corrective measures for helmet use and do not ensure that the motorcyclist wears this protective element throughout the journey.

5. Conclusions

In conclusion, the research and experimentation work carried out in this article provides a first approach to the generation of new preventive safety systems that guarantee the use of the helmet from the moment the motorcyclist begins the journey until its end, on the basis that such preventive systems become part of the motorcycle and thus take full advantage of real-time helmet detection. In addition, according to the literature review conducted in this research, this new motorcycle safety approach of monitoring helmet use from a position on the motorcycle has not been studied before. It has been shown that intelligent models generated with deep learning algorithms such as CNNs are capable of identifying in real time the absence of elements essential for the rider’s safety, such as a helmet, with an accuracy of 97.24%, which is a highly satisfactory result in relation to some of the related works. However, the previously proposed models are intended for implementation in fixed areas, which allows the surveillance systems for responsible helmet use to be circumvented in different ways. Such proposals also have the disadvantage that, in order to meet the aim of increasing helmet use, they must work in conjunction with other systems, for example databases of registered motorcycles, and not directly with the motorcycle or the driver. Finally, it can be concluded that the amount of data and the tools used to process it must be matched to each other to obtain good results; in this first approximation of our work, where data collection was limited, using transfer learning to improve the performance of the model was a good choice.

Future Work

Although this proposal seeks to improve the safety of motorcyclists and reduce the rate of road accidents caused by these means of transport, there is still much work to be done, which is why the following points are proposed as future work.

The first proposal is to reinforce the set of training images with different elements that may confuse the model and to increase the number of test subjects in the experimental phase, thus strengthening the helmet detection model in different situations, such as changes in the environment or elements within the area of interest that could hinder the classification process. It should also be noted that this work is established as a first approximation to real-time helmet detection in motorcyclists, since specialists in the fields of safety and of the development of intelligent models based on deep learning suggest that a larger number of study subjects can help to improve the classification models.

Another proposal is to compare different CNN architectures for the classification of helmet use, for example the Wearnet proposed by Li et al. [49], which has shown outstanding advantages in terms of classification speed and a model size well suited to applications working in real time, or to test the architectures used in the related works mentioned above that obtained satisfactory results in the classification task.

Author Contributions

Conceptualization, J.M.R., J.M.C.-P. and C.H.E.-S.; methodology, J.M.R., H.L.-G. and C.E.G.-T.; software, J.M.R. and R.S.R.; validation, J.I.G.-T., H.G.-R. and J.M.C.-P.; formal analysis, J.M.R. and J.I.G.-T.; investigation, J.M.R., H.L.-G. and J.M.C.-P.; resources, D.R. and K.O.V.-C.; writing—original draft preparation, J.M.R. and C.H.E.-S.; writing—review and editing, J.M.R., J.M.C.-P. and R.S.R.; supervision, D.R. and K.O.V.-C.; project administration, H.L.-G. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors thank CONACYT and H.A. Ojocaliente Zacatecas for supporting the author Jaime Mercado Reyna.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Cadavid, L.; Salazar-Serna, K. Mapping the Research Landscape for the Motorcycle Market Policies: Sustainability as a Trend—A Systematic Literature Review. Sustainability 2021, 13, 10813.

- Jittrapirom, P.; Knoflacher, H.; Mailer, M. The conundrum of the motorcycle in the mix of sustainable urban transport. Transp. Res. Procedia 2017, 25, 4869–4890.

- Abdi, N.; Robertson, T.; Petrucka, P.; Crizzle, A.M. Do motorcycle helmets reduce road traffic injuries, hospitalizations and mortalities in low and lower-middle income countries in Africa? A systematic review and meta-analysis. BMC Public Health 2021, 22, 824.

- Cheng, A.S.; Liu, K.P.; Tulliani, N. Relationship Between Driving-violation Behaviours and Risk Perception in Motorcycle Accidents. Hong Kong J. Occup. Ther. 2015, 25, 32–38.

- MacLeod, J.B.; Digiacomo, J.C.; Tinkoff, G. An evidence-based review: Helmet efficacy to reduce head injury and mortality in motorcycle crashes: EAST practice management guidelines. J. Trauma-Inj. Infect. Crit. Care 2010, 69, 1101–1111.

- World Health Organization. Powered Two- and Three-Wheeler Safety: A Road Safety Manual For Decision-Makers and Practitioners; World Health Organization: Geneva, Switzerland, 2017.

- WHO. Global Status Report on Road Safety; WHO: Geneva, Switzerland, 2018.

- Tabary, M.; Ahmadi, S.; Amirzade-Iranaq, M.H.; Shojaei, M.; Asl, M.S.; Ghodsi, Z.; Azarhomayoun, A.; Ansari-Moghaddam, A.; Atlasi, R.; Araghi, F.; et al. The effectiveness of different types of motorcycle helmets—A scoping review. Accid. Anal. Prev. 2021, 154, 106065.

- Sharif, P.M.; Pazooki, S.N.; Ghodsi, Z.; Nouri, A.; Ghoroghchi, H.A.; Tabrizi, R.; Shafieian, M.; Heydari, S.T.; Atlasi, R.; Sharif-Alhoseini, M.; et al. Effective factors of improved helmet use in motorcyclists: A systematic review. BMC Public Health 2023, 23, 26.

- Araujo, M.; Illanes, E.; Chapman, E.; Rodrigues, E. Effectiveness of interventions to prevent motorcycle injuries: Systematic review of the literature. Int. J. Inj. Control. Saf. Promot. 2017, 24, 406–422.

- WHO. WHO Kicks off a Decade of Action for Road Safety; WHO: Geneva, Switzerland, 2021.

- WHO. Helmets: A Road Safety Manual for Decision-makers and Practitioners; WHO Library Cataloguing in Publication Data; WHO: Geneva, Switzerland, 2006; pp. 1–147.

- Craft, G.; Bui, T.V.; Sidik, M.; Moore, D.; Ederer, D.J.; Parker, E.M.; Ballesteros, M.F.; Sleet, D.A. A Comprehensive Approach to Motorcycle-Related Head Injury Prevention: Experiences from the Field in Vietnam, Cambodia, and Uganda. Int. J. Environ. Res. Public Health 2017, 14, 1486.

- International Transport Forum. Improving Motorcyclist Safety: Priority actions for Safe System Integration. Available online: https://www.itf-oecd.org/improving-motorcyclist-safety (accessed on 3 March 2023).

- Ambak, K.; Rahmat, R.; Ismail, R. Intelligent Transport System for Motorcycle Safety and Issues. Eur. J. Sci. Res. 2009, 28, 600–611.

- Forero, M.A.V. Detection of motorcycles and use of safety helmets with an algorithm using image processing techniques and artificial intelligence models. In Proceedings of the MOVICI-MOYCOT 2018: Joint Conference for Urban Mobility in the Smart City, Medellin, Colombia, 18–20 April 2018; pp. 1–9.

- Singh, A.; Singh, D.; Singh, J.; Singh, P.; Kaur, D.A. Helmet & Number Plate Detection Using Deep Learning and Its Comparative Analysis. In Proceedings of the International Conference on Innovative Computing & Communication (ICICC) 2022, Delhi, India, 19–20 February 2022.

- Goyal, A.; Agarwal, D.; Subramanian, A.; Jawahar, C.V.; Sarvadevabhatla, R.K. Detecting, Tracking and Counting Motorcycle Rider Traffic Violations on Unconstrained Roads. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022.

- Rohith, C.A.; Nair, S.A.; Nair, P.S.; Alphonsa, S.; John, N.P. An efficient helmet detection for MVD using deep learning. In Proceedings of the International Conference on Trends in Electronics and Informatics, ICOEI 2019, Tirunelveli, India, 23–25 April 2019; pp. 282–286.

- Shine, L.; Jiji, C.V. Automated detection of helmet on motorcyclists from traffic surveillance videos: A comparative analysis using hand-crafted features and CNN. Multimed. Tools Appl. 2020, 79, 14179–14199.

- Lin, H.; Deng, J.D.; Albers, D.; Siebert, F.W. Helmet Use Detection of Tracked Motorcycles Using CNN-Based Multi-Task Learning. IEEE Access 2020, 8, 162073–162084.

- Cheng, R.; He, X.; Zheng, Z.; Wang, Z. Multi-Scale Safety Helmet Detection Based on SAS-YOLOv3-Tiny. Appl. Sci. 2021, 11, 3652.

- Jia, W.; Xu, S.; Liang, Z.; Zhao, Y.; Min, H.; Li, S.; Yu, Y. Real-time automatic helmet detection of motorcyclists in urban traffic using improved YOLOv5 detector. IET Image Process. 2021, 15, 3623–3637.

- Waris, T.; Asif, M.; Ahmad, M.B.; Mahmood, T.; Zafar, S.; Shah, M.; Ayaz, A. CNN-Based Automatic Helmet Violation Detection of Motorcyclists for an Intelligent Transportation System. Math. Probl. Eng. 2022, 2022, 8246776.

- Rasli, M.K.A.M.; Madzhi, N.K.; Johari, J. Smart Helmet with Sensors for Accident Prevention. In Proceedings of the 2013 International Conference on Electrical, Electronics and System Engineering (ICEESE), Selangor, Malaysia, 4–5 December 2013; pp. 21–26.

- Kashevnik, A.; Ali, A.; Lashkov, I.; Shilov, N. Seat Belt Fastness Detection Based on Image Analysis from Vehicle In-cabin Camera. In Proceedings of the 2020 26th Conference of Open Innovations Association (FRUCT), Yaroslavl, Russia, 20–24 April 2020; pp. 143–150.

- Sampieri, R.H. Fundamentos de Investigacion; McGraw-Hill/Interamericana: New York, NY, USA, 2017.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Team MCD. Mexico 2022. Motorcycles Market Hits The 9th Record in A String. Available online: https://www.motorcyclesdata.com/2023/02/03/mexico-motorcycles/ (accessed on 15 March 2023).

- Data Mexico. Conductores de Motocicleta: Salarios, Diversidad, Industrias e Informalidad Laboral. Available online: https://datamexico.org/es/profile/occupation/conductores-de-motocicleta (accessed on 28 April 2023).

- Padway, M. Motorcycle Accident Statistics Updated to 2023-MLF Blog. 2023. Available online: https://www.motorcyclelegalfoundation.com/motorcycle-accident-statistics-safety/ (accessed on 2 March 2023).

- Kluyver, T.; Ragan-Kelley, B.; Pérez, F.; Granger, B.; Bussonnier, M.; Frederic, J.; Kelley, K.; Hamrick, J.; Grout, J.; Corlay, S.; et al. Jupyter Notebooks—A publishing format for reproducible computational workflows. In Positioning and Power in Academic Publishing: Players, Agents and Agendas; Loizides, F., Schmidt, B., Eds.; IOS Press: Amsterdam, The Netherlands, 2016; pp. 87–90.

- Gholamy, A.; Kreinovich, V.; Kosheleva, O. A Pedagogical Explanation A Pedagogical Explanation Part of the Computer Sciences Commons. Available online: https://scholarworks.utep.edu/cgi/viewcontent.cgi?article=2202&context=cs_techrep (accessed on 23 March 2023).

- Albawi, S.; Mohammed, T.A.; Al-Zawi, S. Understanding of a convolutional neural network. In Proceedings of the 2017 International Conference on Engineering and Technology, ICET 2017, Antalya, Turkey, 21–23 August 2017; pp. 1–6.

- Kuo, C.C.J. Understanding Convolutional Neural Networks with A Mathematical Model. arXiv 2016, arXiv:1609.04112.

- Lin, C.; Li, L.; Luo, W.; Wang, K.; Guo, J. Transfer Learning Based Traffic Sign Recognition Using Inception-v3 Model. Period. Polytech. Transp. Eng. 2018, 47, 242–249.

- Abadi, M.; Agarwal, A.; Barham, P.; Brevdo, E.; Chen, Z.; Citro, C.; Corrado, G.S.; Davis, A.; Dean, J.; Devin, M.; et al. TensorFlow: Large-Scale Machine Learning on Heterogeneous Distributed Systems. arXiv 2016.

- Guía avanzada de Inception v3|Cloud TPU|Google Cloud. Available online: https://cloud.google.com/tpu/docs/inception-v3-advanced?hl=es-419 (accessed on 7 March 2023).

- Szegedy, C.; Vanhoucke, V.; Ioffe, S.; Shlens, J.; Wojna, Z. Rethinking the Inception Architecture for Computer Vision. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 2818–2826.

- Nair, V.; Hinton, G.E. Rectified linear units improve Restricted Boltzmann machines. In Proceedings of the ICML 2010-Proceedings, 27th International Conference on Machine Learning, Haifa, Israel, 21–24 June 2010.

- Chuanqi, T.; Sun, F.; Tao, K.; Wenchang, Z.; Chao, Y.; Chunfang, L. A Survey on Deep Transfer Learning; Springer International Publishing: Cham, Switzerland, 2018; pp. 270–279.

- Cilimkovic, M. Neural Networks and Back Propagation Algorithm. Available online: https://drive.uqu.edu.sa/_/takawady/files/NeuralNetworks.pdf (accessed on 21 March 2023).

- Kingma, D.P.; Ba, J.L. Adam: A Method for Stochastic Optimization. arXiv 2015, arXiv:1412.6980.

- How to Retrain an Image Classifier for New Categories|TensorFlow. Available online: https://web.archive.org/web/20180703133602/https://www.tensorflow.org/tutorials/image_retraining (accessed on 20 October 2022).

- Özbilgin, F.; Kurnaz, Ç.; Aydın, E. Prediction of Coronary Artery Disease Using Machine Learning Techniques with Iris Analysis. Diagnostics 2023, 13, 1081.

- Banaei, N.; Moshfegh, J.; Mohseni-Kabir, A.; Houghton, J.M.; Sun, Y.; Kim, B. Machine learning algorithms enhance the specificity of cancer biomarker detection using SERS-based immunoassays in microfluidic chips. RSC Adv. 2019, 9, 1859–1868.

- Bradley, A.P. The use of the area under the ROC curve in the evaluation of machine learning algorithms. Pattern Recognit. 1997, 30, 1145–1159.

- Pathar, R.; Adivarekar, A.; Mishra, A.; Deshmukh, A. Human Emotion Recognition using Convolutional Neural Network in Real Time. In Proceedings of the 2019 1st International Conference on Innovations in Information and Communication Technology (ICIICT), Chennai, India, 25–26 April 2019; pp. 1–7.

- Li, W.; Zhang, L.; Wu, C.; Cui, Z.; Niu, C. A new lightweight deep neural network for surface scratch detection. Int. J. Adv. Manuf. Technol. 2022, 123, 1999–2015.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).