Environment Perception with Chameleon-Inspired Active Vision Based on Shifty Behavior for WMRs


Featured Application

Abstract

1. Introduction

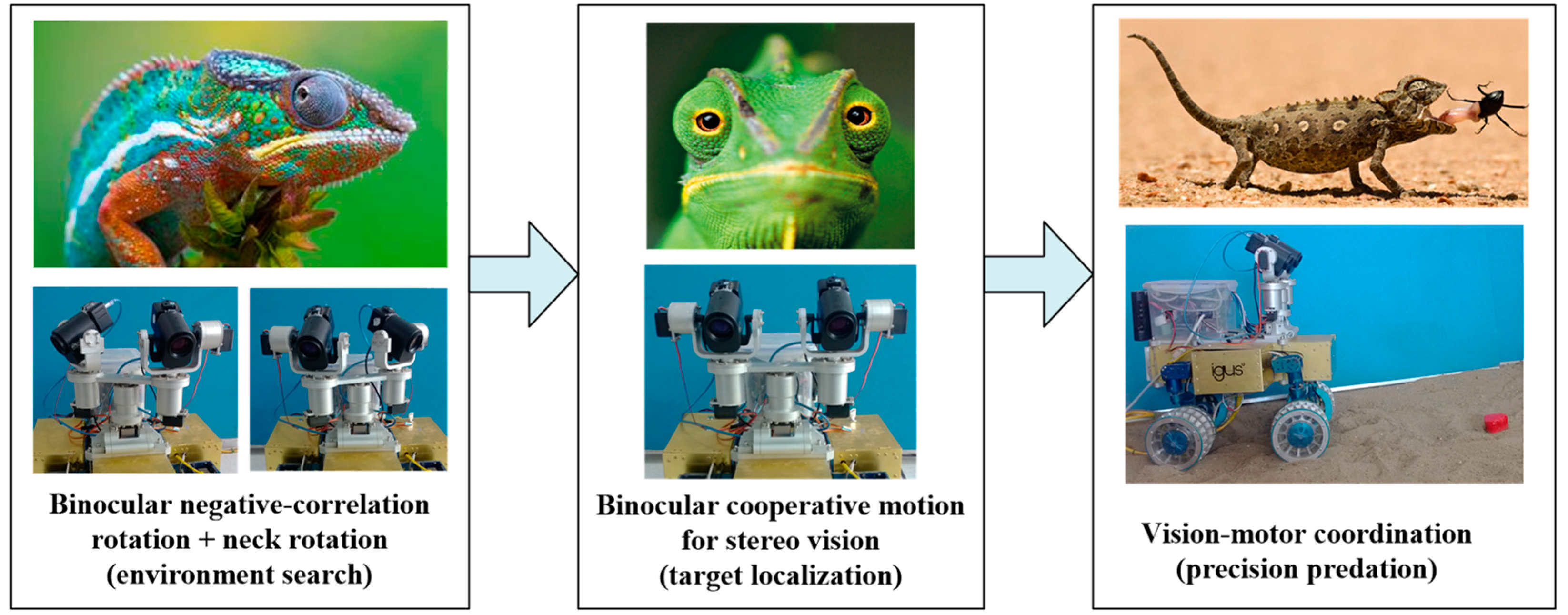

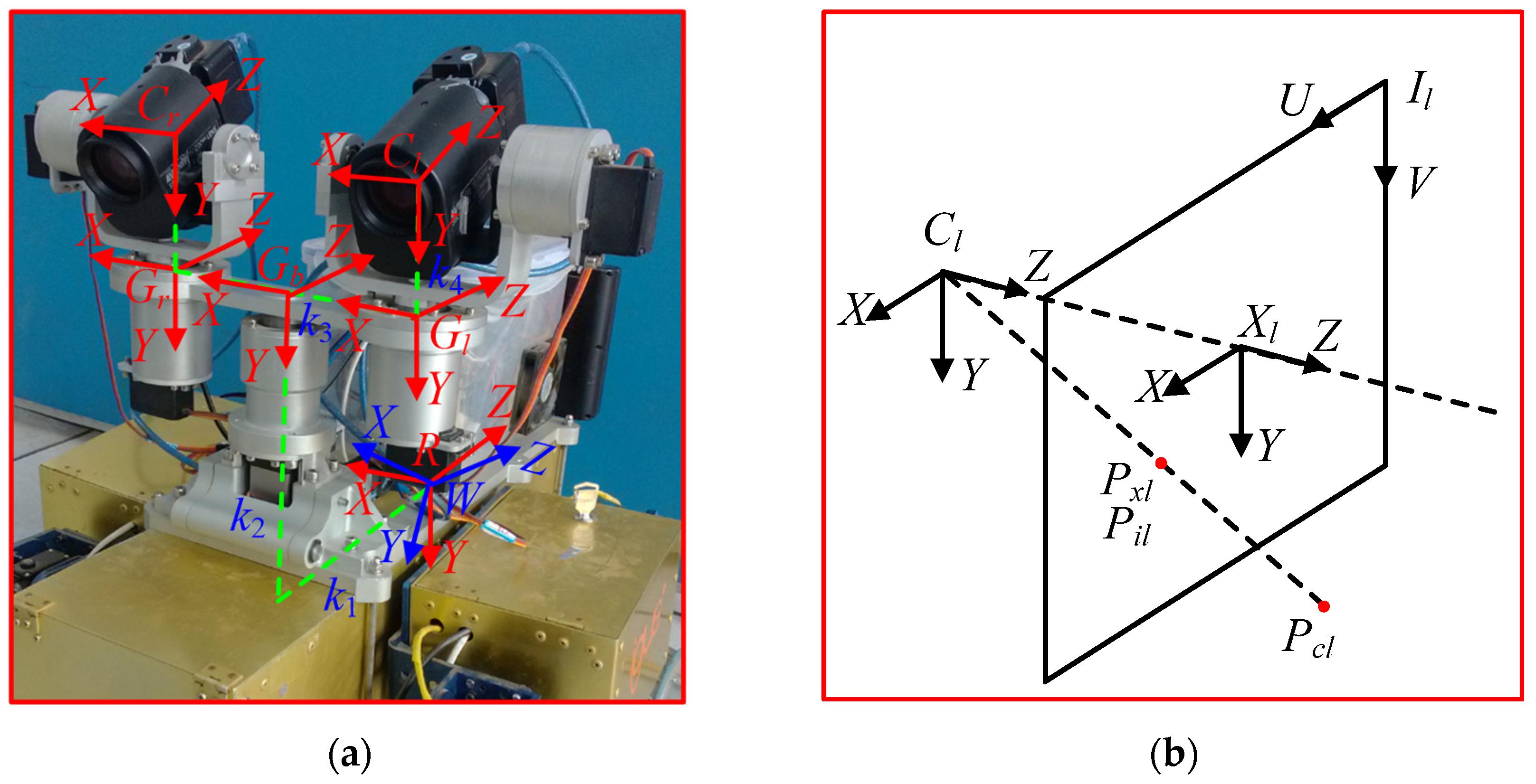

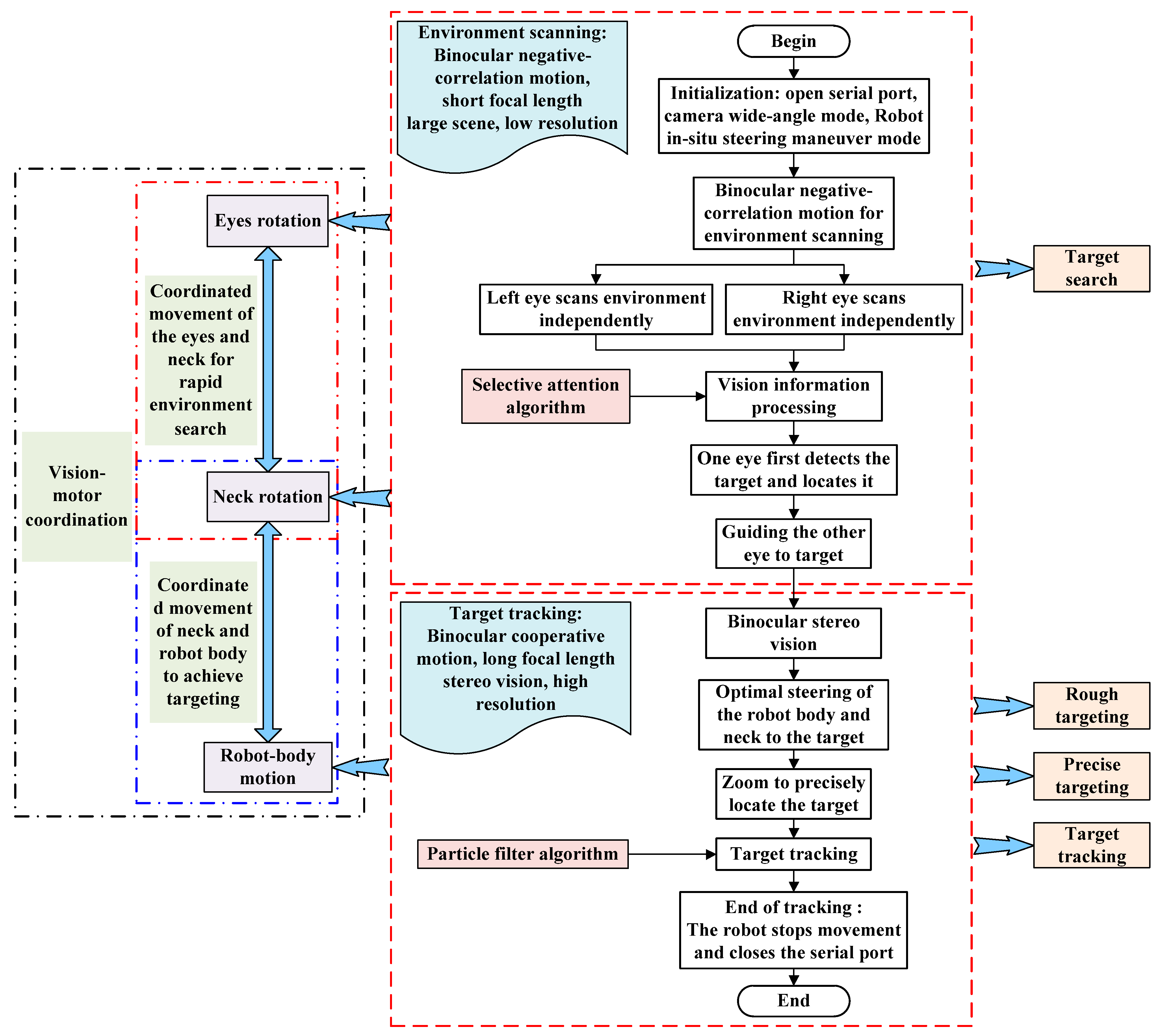

2. Visual Perception Modelling in Chameleon-Inspired Shifty-Behavior Mode for WMRs

3. Target Search Modelling with Chameleon-Inspired Binocular Negative-Correlation Motion for WMRs

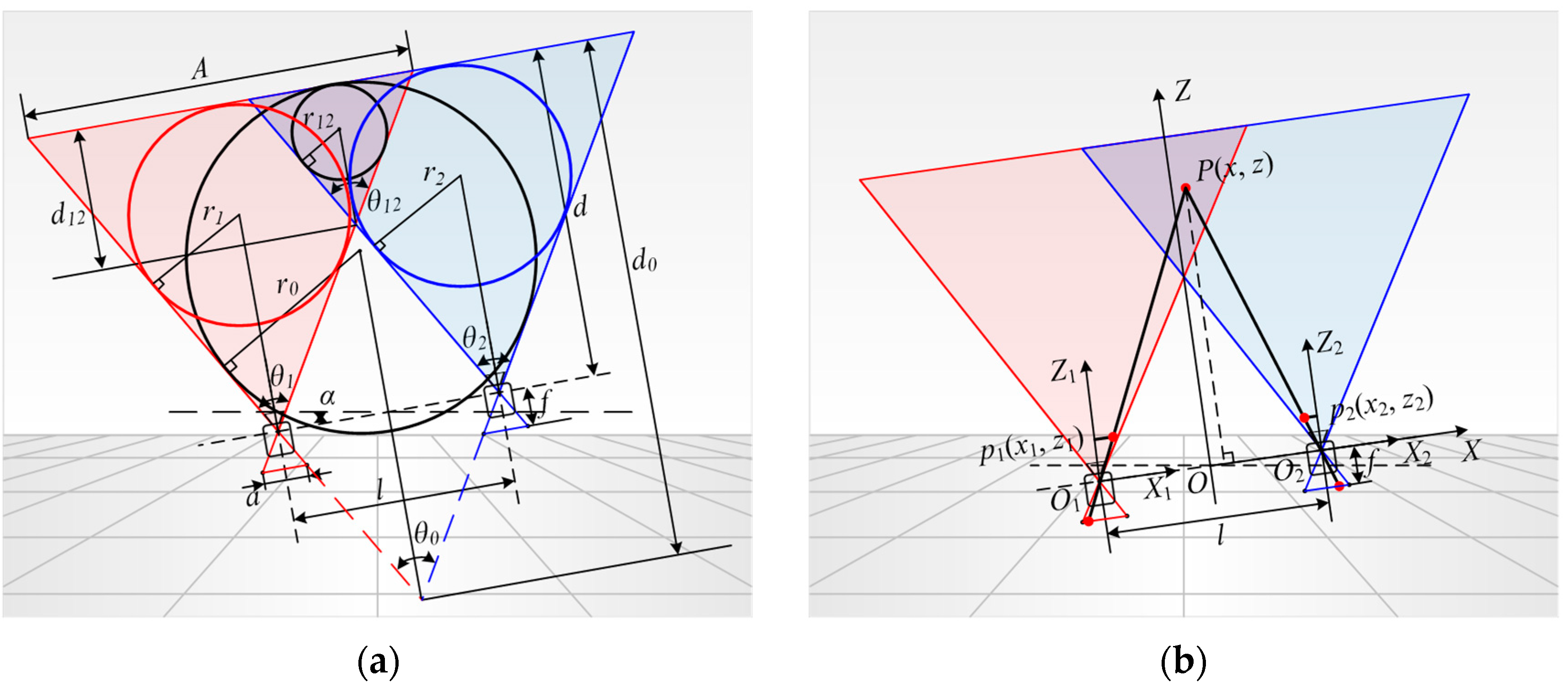

4. Modelling of FOV in Chameleon-Inspired Active Vision Based on Shifty-Behavior Mode for WMRs

4.1. FOV Model with Binocular Synchronous Motion

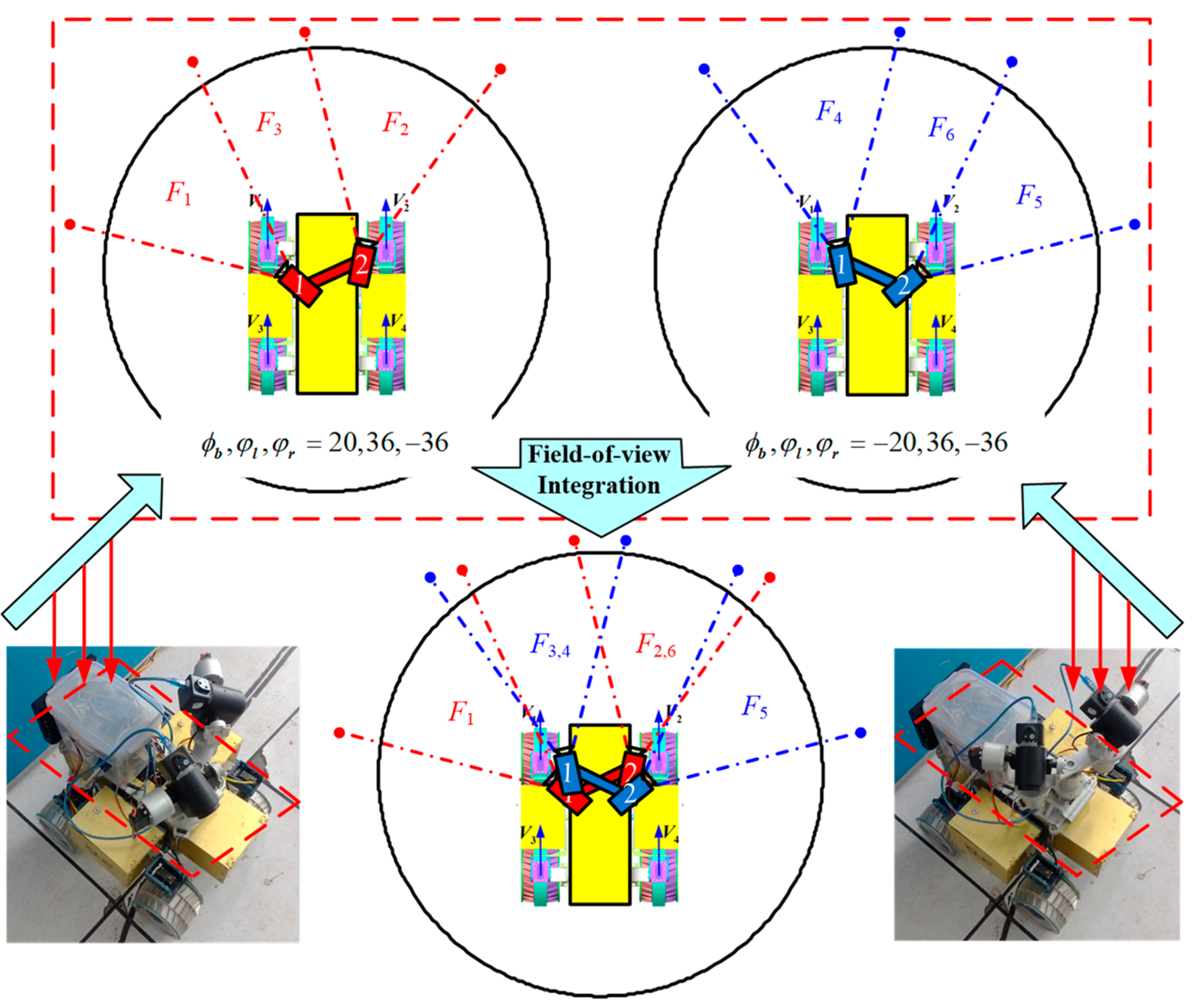

4.2. FOV Model with Binocular Negative-Correlation Motion

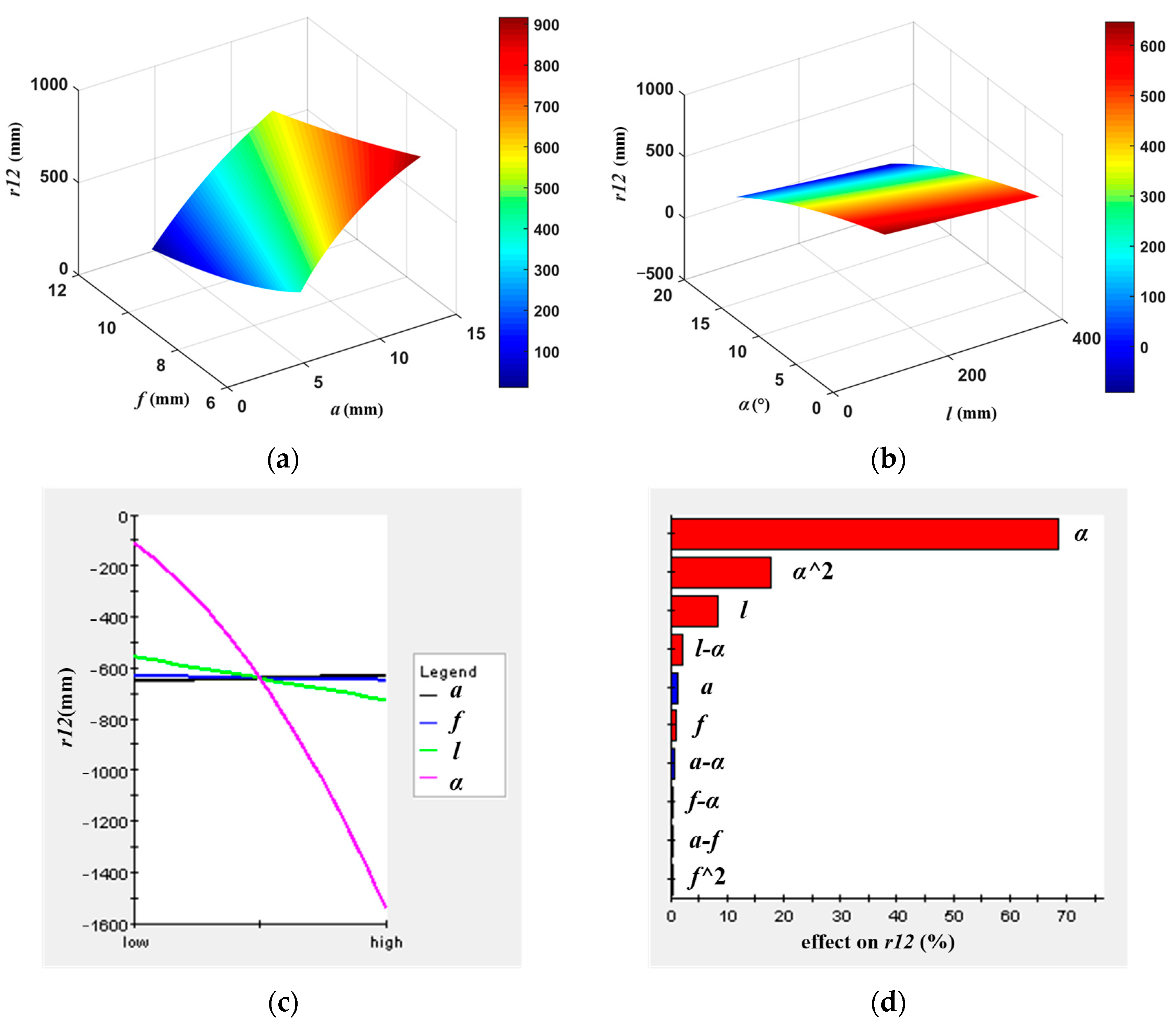

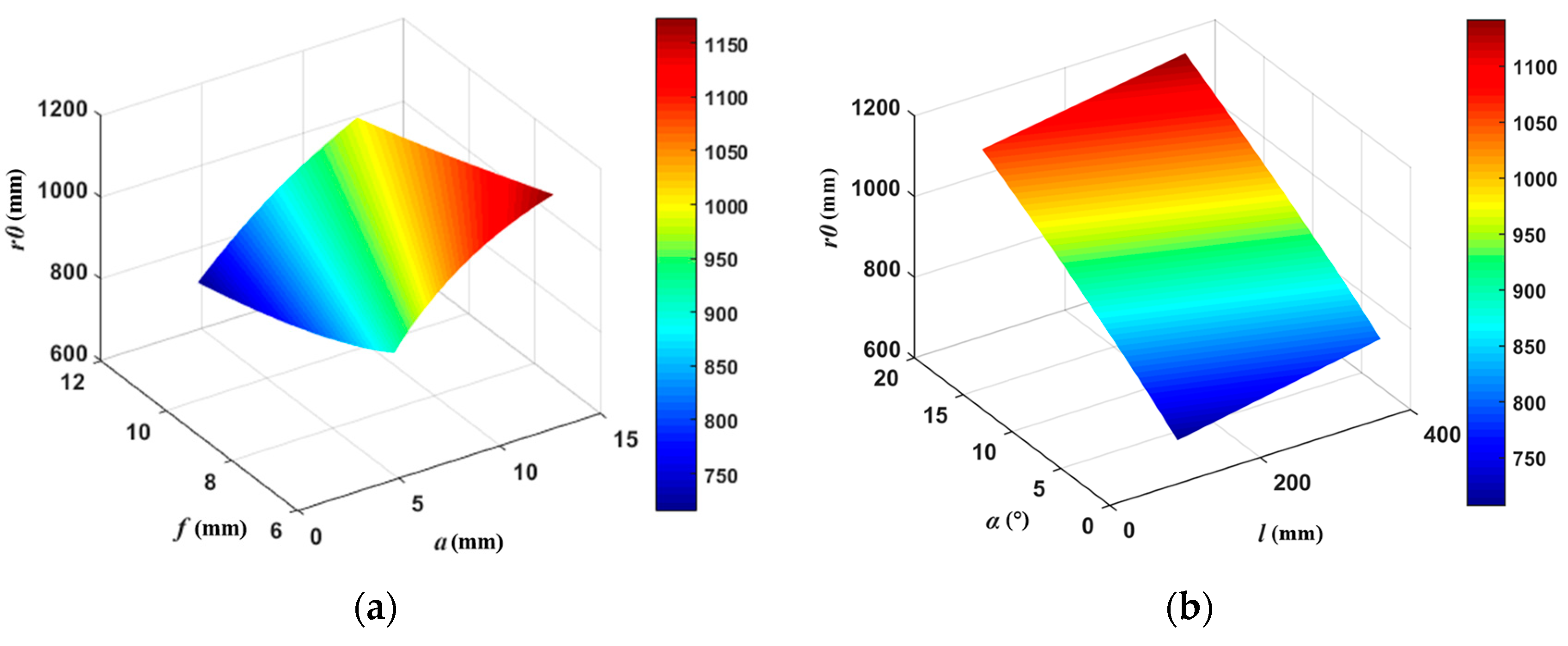

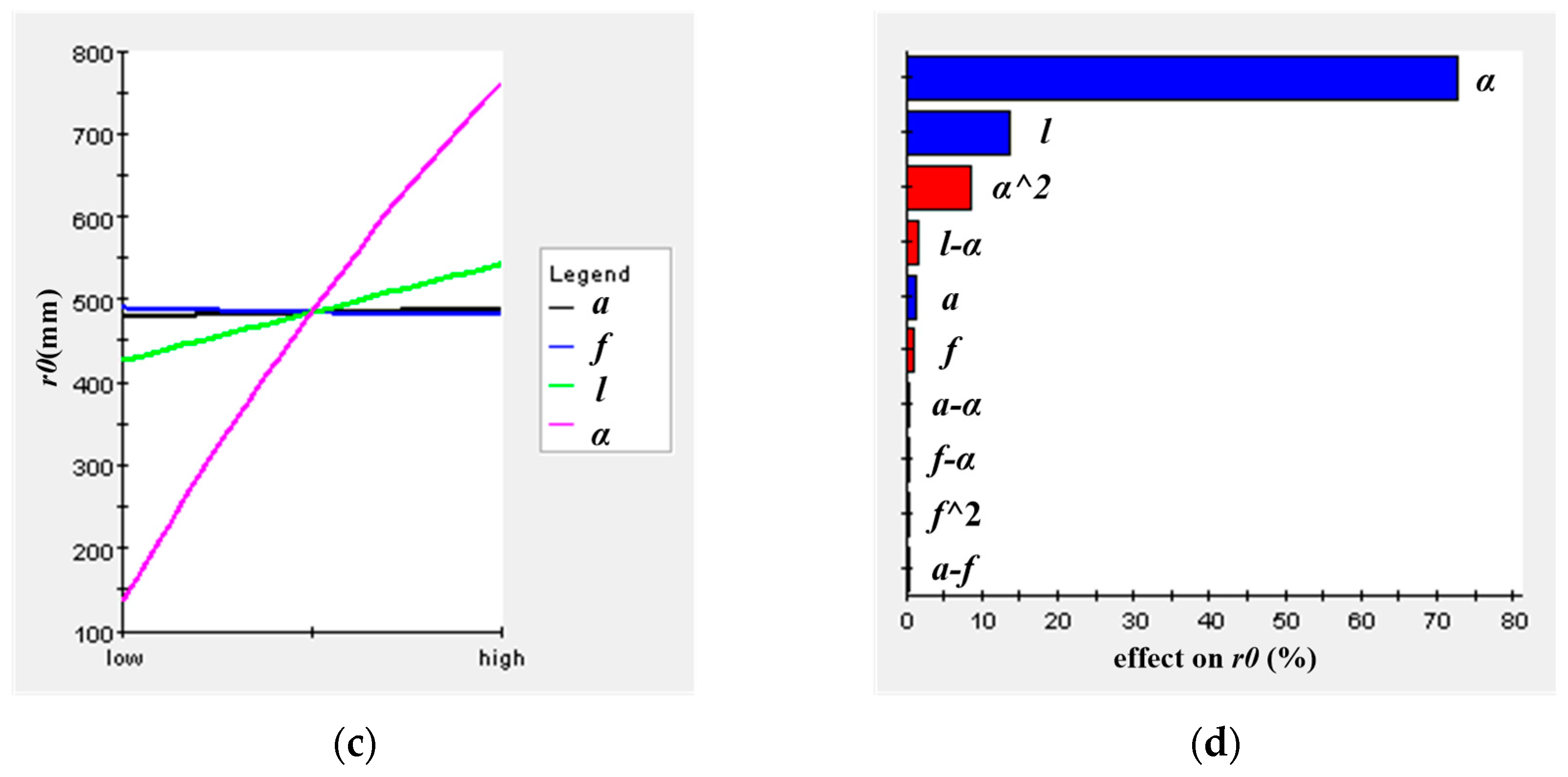

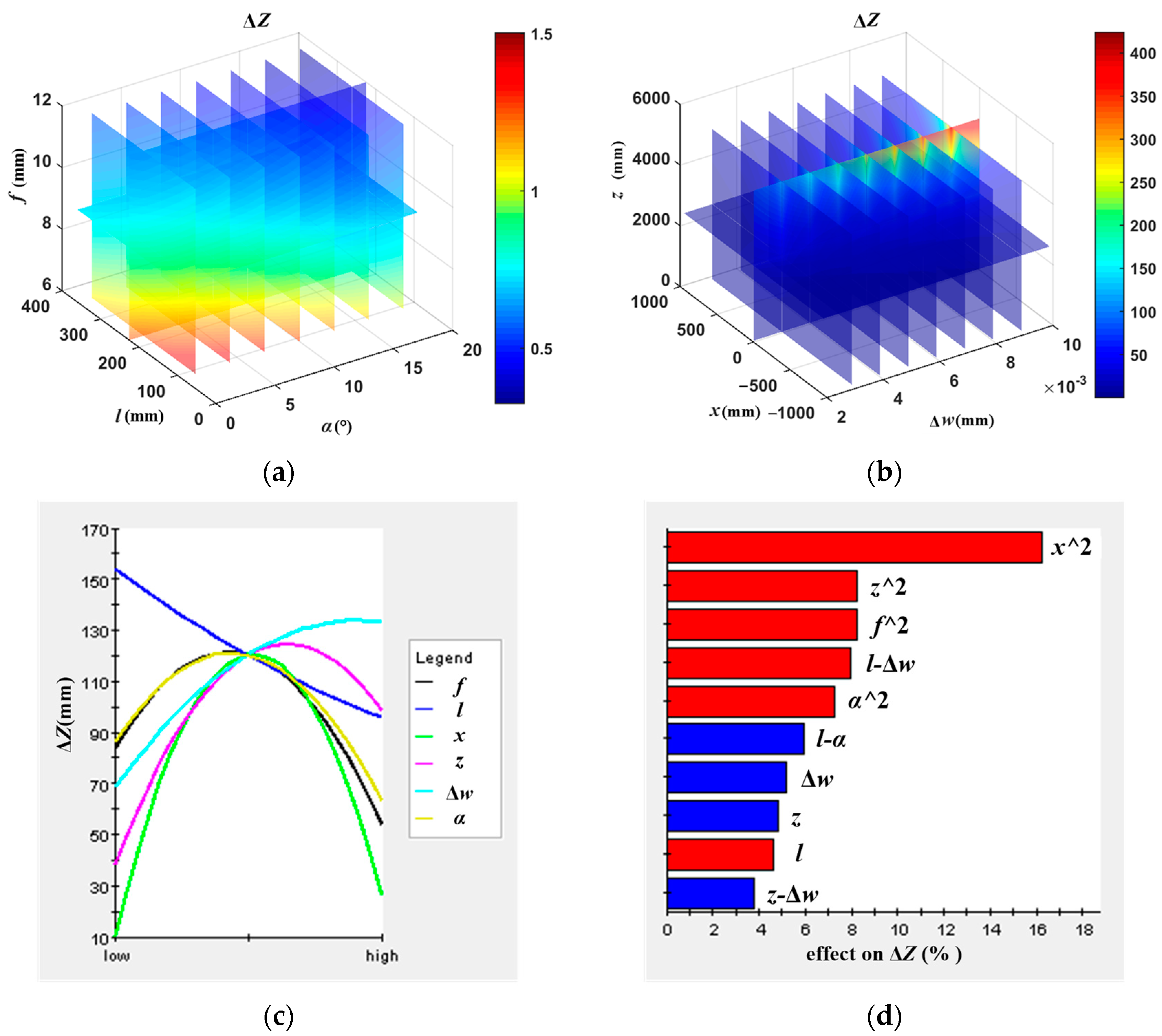

4.3. Simulation Analysis of FOV Model

5. Environment Perception Strategy in Chameleon-Inspired Active Vision Based on Shifty-Behavior Mode for WMRs

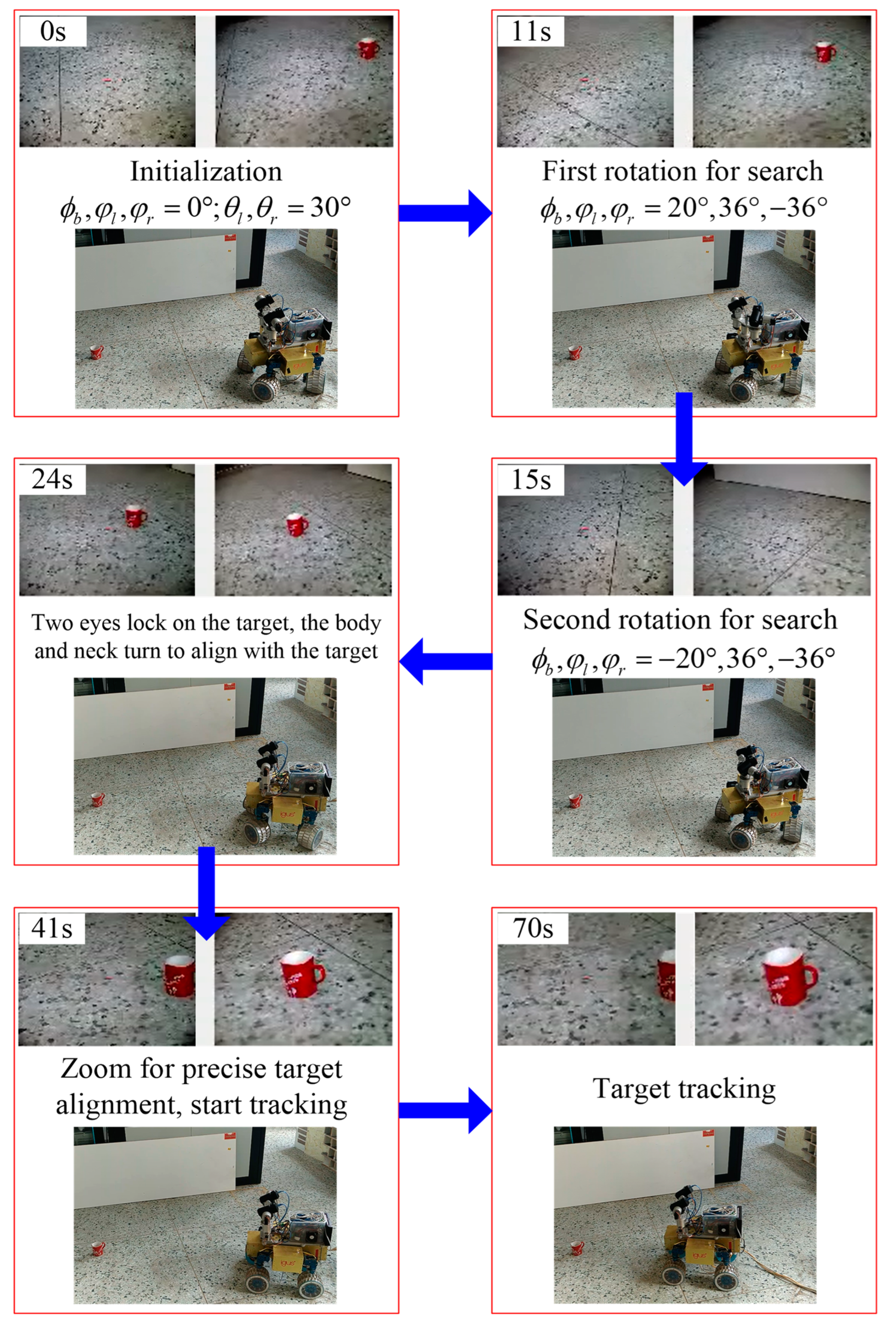

6. Experiments

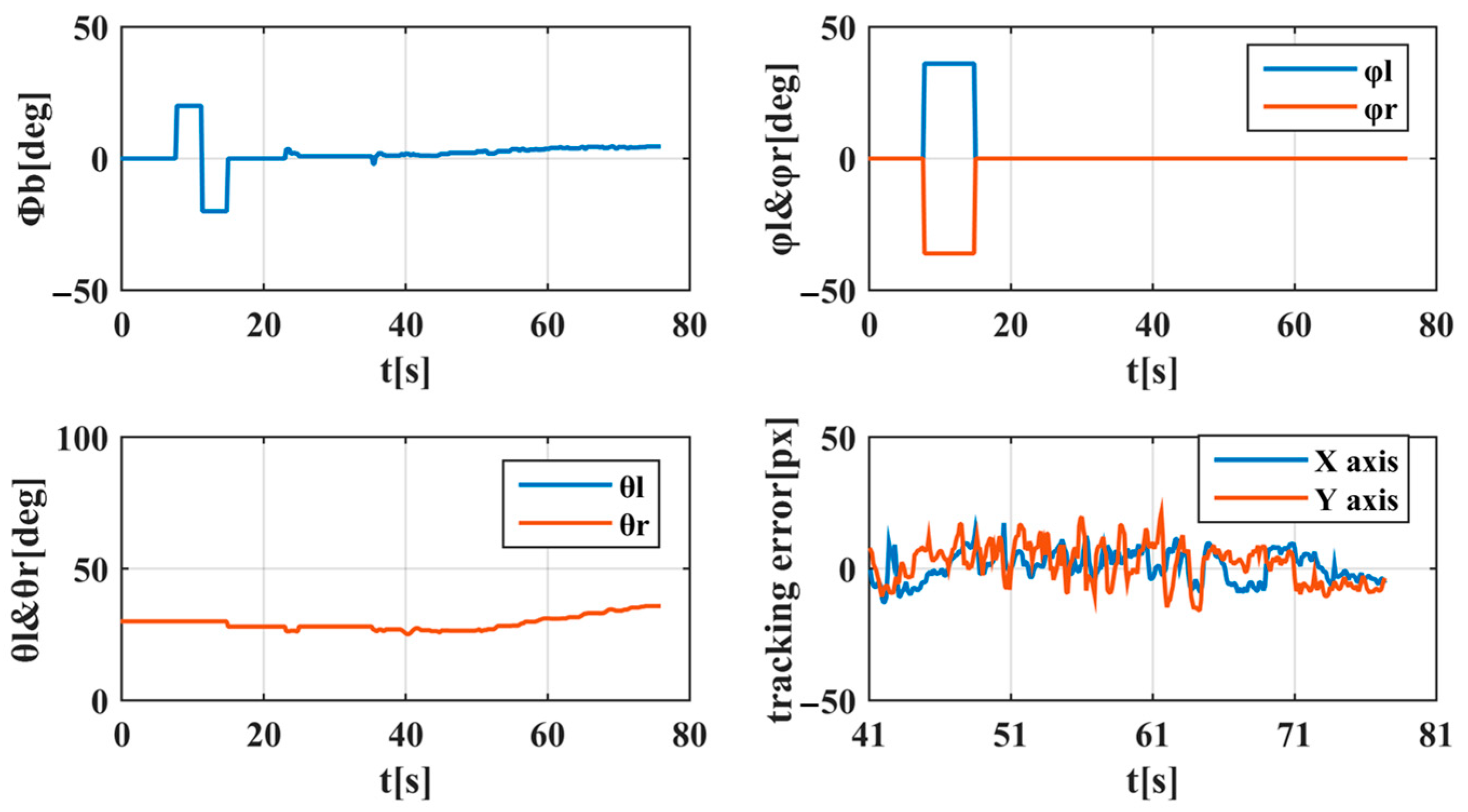

6.1. Target Tracking Experiment of WMRs on Rigid, Flat Ground

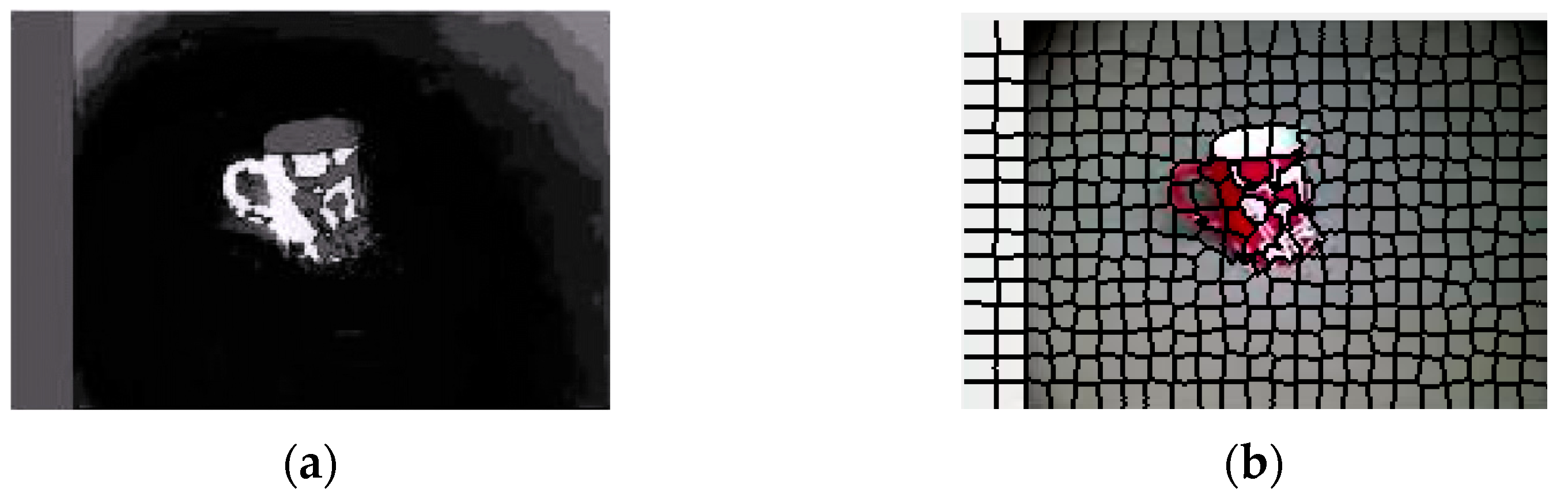

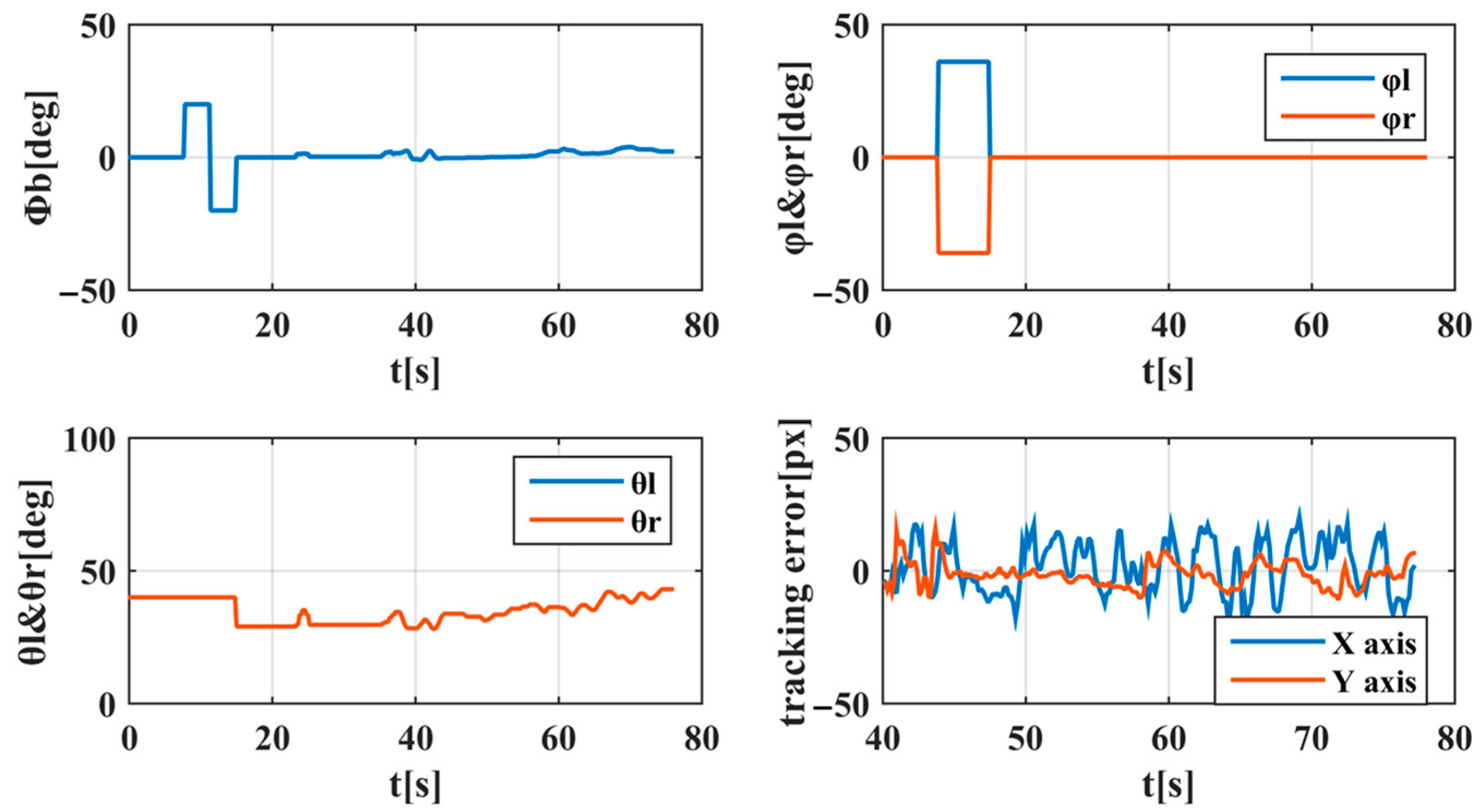

6.2. Target Tracking Experiment of WMRs on Soft–Rough Sand

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Sensors | Parameters | Values |
|---|---|---|
| Lens | Model | SSL06036M |
| | Size of target surface | 1/3″ |
| | Focal length | 6.0–36.0 mm |
| | Minimum focus distance | 1.3 m |
| | Back focus distance | 12.50 mm |
| CMOS | Model | Suntime-200 |
| | Pixel size | 3.2 μm × 3.2 μm |
| | Scanning type | Line-by-line scanning |
| | Resolution | 320 × 240, 640 × 480, 1280 × 1024 |
| | Transmission rate | 30 fps: 320 × 240, 640 × 480 |
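The lens and sensor values in the table above set the horizontal angle of view across the zoom range. The sketch below applies the standard pinhole relation HFOV = 2·atan(w / 2f) and the similar-triangles footprint width D·w / f. It assumes the active sensor width is one 1280-pixel row at 3.2 μm pitch (4.096 mm), which is narrower than the nominal 4.8 mm of a 1/3″ format; it is an illustration, not the FOV equations used in the paper.

```python
import math

# Values taken from the camera table; the sensor width is an ASSUMPTION:
# 1280 active pixels x 3.2 um pitch (a nominal 1/3" format is closer to 4.8 mm).
PIXEL_PITCH_MM = 3.2e-3
H_PIXELS = 1280
SENSOR_WIDTH_MM = PIXEL_PITCH_MM * H_PIXELS  # about 4.096 mm


def horizontal_fov_deg(focal_mm: float, sensor_width_mm: float = SENSOR_WIDTH_MM) -> float:
    """Pinhole-model horizontal angle of view: 2 * atan(w / (2 f)), in degrees."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_mm)))


def footprint_mm(focal_mm: float, distance_mm: float,
                 sensor_width_mm: float = SENSOR_WIDTH_MM) -> float:
    """Horizontal scene width imaged at object distance D (valid for D >> f)."""
    return distance_mm * sensor_width_mm / focal_mm


if __name__ == "__main__":
    # Sweep the 6.0-36.0 mm zoom range at the 1.3 m minimum focus distance.
    for f in (6.0, 12.0, 24.0, 36.0):
        print(f"f = {f:4.1f} mm -> HFOV = {horizontal_fov_deg(f):5.2f} deg, "
              f"width at 1.3 m = {footprint_mm(f, 1300.0):6.1f} mm")
```

Under this assumption the angle of view shrinks from roughly 37.7° at 6 mm to about 6.5° at 36 mm; substituting the nominal 4.8 mm width widens every value slightly.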

| Parameters | Descriptions | Range (mm) | Parameters | Descriptions | Range (mm) |
|---|---|---|---|---|---|
| | Length of CMOS target surface | | | Monocular horizontal FOV | |
| | Focal length | | | Binocular overlapping horizontal FOV | N/A |
| | Distance between two eyes | | | Binocular merging horizontal FOV | N/A |
| | Object distance | N/A | | Radius of the inner tangent circle of the monocular horizontal FOV | N/A |
| | Distance from the object to the vertex of the binocular horizontal overlapping FOV | N/A | | Radius of the inner tangent circle of the binocular horizontal overlapping FOV | N/A |
| | Distance from the object to the vertex of the binocular horizontal merging FOV | N/A | | Radius of the inner tangent circle of the binocular horizontal merging FOV | N/A |
| | Length of target object | N/A | | Size of CMOS image element | |
| | Position of the target object in the effective horizontal FOV | N/A | | | |
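The parameter table above lists the quantities of the horizontal FOV model: the monocular FOV, the binocular overlapping and merging FOVs, the distance between the two eyes, and the distances from the object to the vertices of the overlapping and merging FOVs. A minimal sketch of how these relate follows. It assumes two identical pinhole cameras with parallel optical axes (both eyes pointing straight ahead) and a hypothetical 200 mm baseline; it is generic stereo geometry rather than the authors' model, and it leaves out the inner-tangent-circle radii.

```python
from dataclasses import dataclass


@dataclass
class BinocularFov:
    monocular_mm: float          # width covered by one camera at distance D
    overlapping_mm: float        # width covered by both cameras
    merging_mm: float            # width covered by at least one camera
    to_overlap_vertex_mm: float  # object distance minus depth of the overlap vertex
    to_merging_vertex_mm: float  # object distance plus depth of the (virtual) merging vertex


def parallel_binocular_fov(sensor_width_mm: float, focal_mm: float,
                           baseline_mm: float, distance_mm: float) -> BinocularFov:
    """Horizontal FOV widths at object distance D for two identical pinhole
    cameras with parallel optical axes separated by baseline b (ASSUMED geometry)."""
    # Each camera sees an interval of width W = D * w / f centred on its own axis.
    monocular = distance_mm * sensor_width_mm / focal_mm
    # The two intervals are offset by b, so they share max(0, W - b).
    overlapping = max(0.0, monocular - baseline_mm)
    # Union of the two intervals: |A| + |B| - |A n B|, i.e. W + b once they overlap.
    merging = 2.0 * monocular - overlapping
    # The inner FOV edges cross b*f/w in front of the baseline; the outer edges,
    # extended backwards, meet b*f/w behind it.
    vertex_depth = baseline_mm * focal_mm / sensor_width_mm
    return BinocularFov(monocular, overlapping, merging,
                        distance_mm - vertex_depth, distance_mm + vertex_depth)


if __name__ == "__main__":
    # Hypothetical setup: 4.096 mm sensor width, 6 mm focal length, 200 mm baseline.
    for d in (200.0, 1300.0, 3000.0):
        print(d, parallel_binocular_fov(4.096, 6.0, 200.0, d))
```

A negative `to_overlap_vertex_mm` means the object lies closer than the overlap vertex, so no part of it is seen by both cameras; beyond that vertex the merging width grows as W + b, which matches the width of the wedge whose apex sits b·f/w behind the baseline.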


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xu, Y.; Liu, C.; Cui, H.; Song, Y.; Yue, X.; Feng, L.; Wu, L. Environment Perception with Chameleon-Inspired Active Vision Based on Shifty Behavior for WMRs. Appl. Sci. 2023, 13, 6069. https://doi.org/10.3390/app13106069
