Enhancing Skills Demand Understanding through Job Ad Segmentation Using NLP and Clustering Techniques

Abstract

1. Introduction

2. Job Requirements and Natural Language Processing Application

3. Materials and Methods

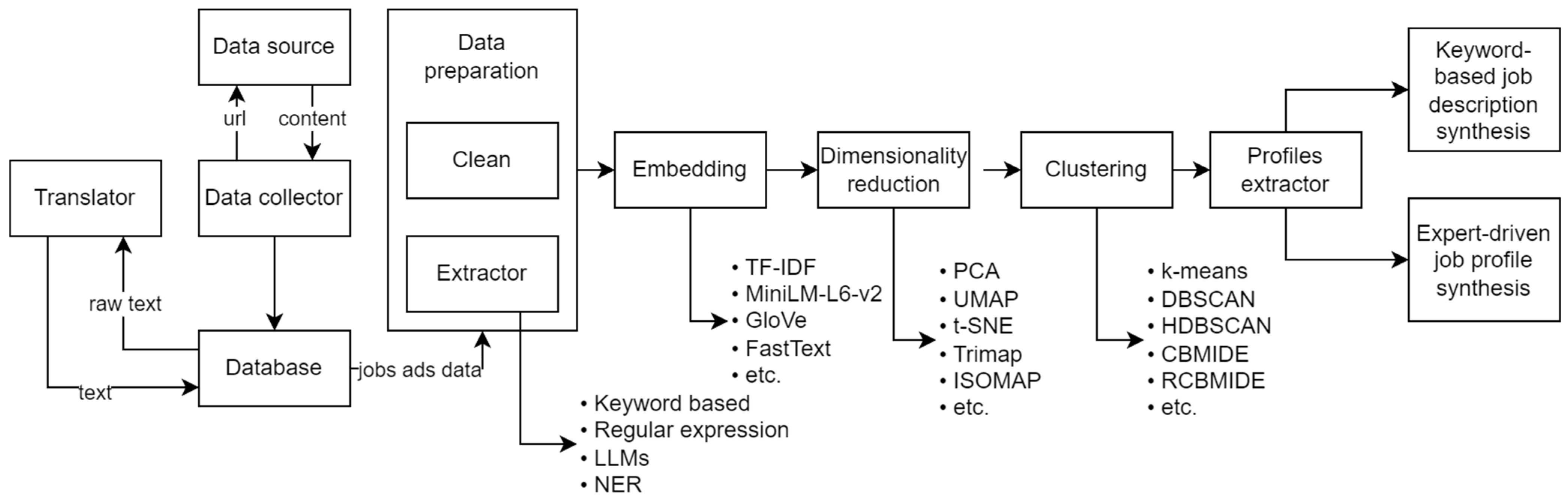

3.1. Data Gathering, Processing, Extraction, and Analysis

“This is the job description. Requirements: experience, English etc. Company offers: health insurance, flexible work”.

“Extract job requirements from the text provided:

This is the job description requirements: experience, English etc. The company offers health insurance and flexible work”.

“Based on the text provided, the job requirements are experience (specifics not mentioned) English proficiency (specifics not mentioned). It is important to note that without further context, it is difficult to determine the level of experience or proficiency required for the job”.
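As a minimal sketch of how such a prompt could be assembled programmatically, the snippet below prepends the instruction quoted above to the text of a job advertisement. The function name `build_extraction_prompt` is hypothetical, and the call to a language model is deliberately left out because the model interface is not specified here.

```python
INSTRUCTION = "Extract job requirements from the text provided:"


def build_extraction_prompt(job_ad_text: str) -> str:
    """Return the instruction followed by the advertisement text."""
    # Collapse runs of whitespace so line breaks in scraped ads
    # do not split the prompt in unexpected places.
    cleaned = " ".join(job_ad_text.split())
    return f"{INSTRUCTION}\n\n{cleaned}"


example_ad = (
    "This is the job description. Requirements: experience, English etc. "
    "Company offers: health insurance, flexible work"
)
prompt = build_extraction_prompt(example_ad)
print(prompt)
```

The resulting string would then be sent to whichever language model is used for extraction, and the reply parsed for the listed requirements.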

3.2. Text Vectorization

- Word2Vec, proposed by Mikolov et al. (2013) [38], is a popular word embedding technique that generates dense vector representations of words by training a shallow neural network to predict a word from its context or the context from a given word. These embeddings capture the semantic and syntactic relationships between words. For a sentence- or document-level representation, the word vectors can be averaged, summed, or combined using more sophisticated techniques such as weighted averages or the smooth inverse frequency method [39]. Word2Vec consists of two primary architectures: continuous bag of words (CBOW) and Skip-Gram. CBOW predicts a target word based on its surrounding context, while Skip-Gram predicts the context given a target word. Word2Vec has two main limitations: it requires substantial computational resources for training on large corpora, and it focuses on word-level embeddings, which may not capture sentence- or document-level semantics. Despite these limitations, it offers several benefits: it captures semantic and syntactic relationships between words; it generates dense continuous vectors, reducing dimensionality compared to sparse methods such as TF-IDF; and pre-trained models are available for various languages and domains.

- GloVe (Global Vectors for Word Representation) is another word embedding method, introduced by Pennington et al. (2014) [40]. It generates word embeddings based on the global co-occurrence statistics of words in a corpus. As with Word2Vec, GloVe embeddings can be aggregated to create sentence- or document-level representations.

- The bag-of-words (BoW) model is a simple text vectorization method that represents documents as fixed-size vectors based on the frequency of the words they contain [41]. While BoW does not capture word order or semantic relationships, it is computationally efficient and can be effective for certain text analysis tasks.

- FastText, developed by Bojanowski et al. (2017) [42], is an extension of the Word2Vec model that generates embeddings for sub-word units (such as character n-grams) rather than only for entire words. This approach captures morphological information and handles rare and out-of-vocabulary words better. Sentence- or document-level embeddings can be obtained by aggregating the sub-word embeddings.

- Doc2Vec, also known as paragraph vectors, is an extension of Word2Vec introduced by Le and Mikolov (2014) [43]. It generates dense vector representations for entire documents by using both the words and the document itself as input during training. This method can capture the overall semantic meaning of a document and can be used directly for document-level tasks.
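Three of the ideas in the list above can be illustrated with a small, dependency-free sketch: bag-of-words count vectors, a document vector obtained by averaging word vectors, and FastText-style character n-grams. The two-dimensional vectors in `TOY_VECTORS` are invented placeholders, not trained embeddings, and all function names are hypothetical. FastText itself uses n-grams of several lengths (3 to 6 by default) together with the whole word; only n = 3 is shown here.

```python
from collections import Counter


def tokenize(text):
    """Lowercase and split on whitespace (a deliberately naive tokenizer)."""
    return text.lower().split()


def bag_of_words(docs):
    """Return (vocabulary, count vectors) for a list of documents."""
    vocab = sorted({tok for doc in docs for tok in tokenize(doc)})
    index = {tok: i for i, tok in enumerate(vocab)}
    vectors = []
    for doc in docs:
        vec = [0] * len(vocab)
        for tok, count in Counter(tokenize(doc)).items():
            vec[index[tok]] = count
        vectors.append(vec)
    return vocab, vectors


def average_embedding(tokens, word_vectors):
    """Mean of the vectors of known tokens; unknown tokens are skipped."""
    known = [word_vectors[t] for t in tokens if t in word_vectors]
    if not known:
        return None
    dim = len(known[0])
    return [sum(v[d] for v in known) / len(known) for d in range(dim)]


def char_ngrams(word, n=3):
    """Character n-grams of a word wrapped in boundary markers < and >."""
    padded = f"<{word}>"
    return [padded[i:i + n] for i in range(len(padded) - n + 1)]


# Placeholder "embeddings" for illustration only (assumption, not real data).
TOY_VECTORS = {"english": [1.0, 0.0], "experience": [0.0, 1.0]}

vocab, counts = bag_of_words(["english experience", "english english"])
doc_vector = average_embedding(tokenize("English experience required"), TOY_VECTORS)
ngrams = char_ngrams("where")
print(vocab, counts, doc_vector, ngrams)
```

Because an out-of-vocabulary word still shares character n-grams with known words, a sub-word model can assign it a vector, whereas the plain averaging function above simply skips it.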

3.3. Dimensionality Reduction Methods

3.4. Clustering Methods Used in the Research

4. Results

4.1. Dynamic of the Specific Requirements/Keywords in Lithuania

4.2. Feature Extraction of the Job Adverts

4.3. Comparative Analysis of Dimensionality Reduction Results

4.4. Clustering Results

4.5. Jobs Advertisement Requirements Cluster Analysis for Demand Understanding

5. Discussion

5.1. Extrapolating this Study to Other Countries

5.2. Future Directions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix B

| Profile | Synthesized Keyword-Based Job Profile |
|---|---|
| 1 | This profile is about a construction and engineering professional with expertise in electrical systems, automation, and energy management. They have experience in project management and are skilled in using AutoCAD for technical design and documentation. This individual holds the relevant education and qualifications, including a certificate in a specialized area. They are knowledgeable in equipment maintenance, handling works related to electrical engineering, and can read and understand technical documentation. Their background also includes managing and designing various construction and engineering projects. |
| 4 | This profile is about a professional with strong English language and communication skills, proficiency in computer literacy, and experienced in clerical and office-related tasks. They have a good knowledge of MS Office tools and can effectively communicate in various situations. The individual is also responsible, takes initiative, and can quickly adapt to new environments. They have a higher level of education and might have an interest in or experience with cars. Their language skills may extend to other languages as well, highlighting their overall linguistic abilities. |
| 5 | This profile is about a digital marketing and advertising professional with expertise in various aspects of online marketing, such as Google Ads, SEO, and social media management. They have experience in content creation, campaign management, and analytics, utilizing tools such as Adobe Photoshop and Illustrator for graphic design and creative purposes. Their skills include managing and optimizing advertising campaigns on platforms such as Facebook and other digital channels. They possess a strong creativity and are proficient in using marketing analytics tools to measure the success of their campaigns. Overall, this individual is well-versed in the digital media landscape, and has a deep understanding of how to leverage various platforms and tools to achieve marketing objectives. |
| 6 | This profile is about a bilingual professional with fluency in both the Lithuanian and English languages, possessing excellent clerical and administrative skills. They have experience in office administration and are proficient in using MS Office tools and other computer applications. Their strong written and oral communication abilities allow them to excel in various situations, providing excellent service in both languages. They can write and speak fluently and correctly in Lithuanian and English, demonstrating their adaptability in diverse environments. This individual’s background includes a good knowledge of administrative tasks and effective communication in multiple languages, making them a valuable asset in any organization requiring multilingual support. |
| 7 | This profile is about a logistics and transportation professional with experience in cargo and freight expeditions. They have a strong background in the field of transport and supply chain management, with the ability to handle various situations, including stressful ones. They possess excellent negotiation skills and can make quick, result-oriented decisions. This individual is also proficient in multiple languages, including English, Polish, and German, which allows them to effectively communicate and coordinate in diverse environments. Their knowledge of the logistics sector and expertise in transport make them a valuable asset to any organization involved in the movement of goods and freight. |
| 8 | This profile is about a responsible and diligent driver with a valid license for a specific category of vehicles. They have experience driving cars and maintaining a clean criminal record, as well as a good driving record without any bad habits. This individual demonstrates responsibility, honesty, punctuality, and accountability in their work. They may also have knowledge of the German language, which could be beneficial in certain driving situations or locations. Their strong sense of diligence and commitment to safe driving practices make them a reliable and trustworthy candidate for any driving-related job. |
| 12 | This profile is about a professional in the kitchen, restaurant, and catering industry who is enthusiastic about food and its preparation. They have experience as a cook, possibly specializing in pizza and other culinary delights. They are committed to maintaining high standards of hygiene, cleanliness, and orderliness in their work environment, adhering to established norms and regulations. This individual values quality in food production and preparation while also demonstrating helpfulness, honesty, and teamwork. Their love for the culinary arts, combined with their dedication to maintaining high standards in the kitchen, makes them an excellent candidate for roles in the food and restaurant industry. |
| 23 | This profile is about a professional in the fields of chemistry and biology, with a strong background in laboratory work, biotechnology, and natural sciences research. They have a master’s degree from a university, showcasing their expertise in scientific principles and methods. Their experience includes working with chemical analysis, quality control, and adhering to ISO standards and other requirements. This individual is knowledgeable in various scientific techniques and possesses strong literacy in the sciences. Their dedication to maintaining high-quality research and understanding of both chemistry and biology make them an excellent candidate for roles in the scientific and biotechnology industries. |
| 32 | This profile is about a professional in the medical and pharmaceutical fields, with experience in pharmacy, clinical practice, and medicine. They have a valid license and a higher degree in the sciences from a university, demonstrating their expertise in the field. This individual possesses excellent literacy in medical and pharmaceutical topics, and has experience working with patients, doctors, and other healthcare professionals. They may also have experience in product marketing and administrative tasks within a clinic or healthcare setting. Their strong background in medicine and pharmaceuticals, along with their dedication to patient care and professionalism, make them an ideal candidate for roles in the healthcare and pharmaceutical industries. |

References

- Nielsen, P.; Holm, J.R.; Lorenz, E. Work policy and automation in the fourth industrial revolution. In Globalisation, New and Emerging Technologies, and Sustainable Development; Routledge: Abingdon, UK, 2021; pp. 189–207.

- Lloyd, C.; Payne, J. Rethinking country effects: Robotics, AI and work futures in Norway and the UK. New Technol. Work. Employ. 2019, 34, 208–225.

- Frey, C.B.; Osborne, M.A. The future of employment: How susceptible are jobs to computerisation? Technol. Forecast. Soc. Chang. 2017, 114, 254–280.

- Quintini, G. Automation, Skills Use and Training; Technical Report; OECD Publishing: Paris, France, 2018.

- Bacher, J.; Tamesberger, D. The Corona Generation: (Not) Finding Employment during the Pandemic. CESifo Forum 2021, 22, 3–7.

- Arntz, M.; Gregory, T.; Zierahn, U. The Risk of Automation for Jobs in OECD Countries: A Comparative Analysis; OECD Publishing: Paris, France, 2016.

- OECD. OECD Skills Studies OECD Skills Strategy Lithuania Assessment and Recommendations; OECD Publishing: Paris, France, 2021.

- Hershbein, B.; Kahn, L.B. Do recessions accelerate routine-biased technological change? Evidence from vacancy postings. Am. Econ. Rev. 2018, 108, 1737–1772.

- Verma, A.; Lamsal, K.; Verma, P. An investigation of skill requirements in artificial intelligence and machine learning job advertisements. Ind. High. Educ. 2022, 36, 63–73.

- Deming, D.; Kahn, L.B. Skill requirements across firms and labor markets: Evidence from job postings for professionals. J. Labor Econ. 2018, 36, S337–S369.

- Boselli, R.; Cesarini, M.; Mercorio, F.; Mezzanzanica, M. Using machine learning for labour market intelligence. In Proceedings of the Machine Learning and Knowledge Discovery in Databases: European Conference, ECML PKDD 2017, Skopje, Macedonia, 18–22 September 2017; pp. 330–342.

- Brynjolfsson, E.; Horton, J.J.; Ozimek, A.; Rock, D.; Sharma, G.; Tuye, H.-Y. COVID-19 and Remote Work: An Early Look at US Data; National Bureau of Economic Research: Cambridge, MA, USA, 2020.

- Autor, D.; Reynolds, E. The Nature of Work after the COVID Crisis: Too Few Low-Wage Jobs; Brookings Institution: Washington, DC, USA, 2020.

- Kramer, A.; Kramer, K.Z. The potential impact of the COVID-19 pandemic on occupational status, work from home, and occupational mobility. J. Vocat. Behav. 2020, 119, 103442.

- Fabo, B. The Corona-Inducted Shift Towards Intermediate Digital Skills Across Occupations in Slovakia. In Digital Labour Markets in Central and Eastern European Countries; Routledge: Abingdon, UK, 2023; pp. 37–48.

- Rebele, J.E.; Pierre, E.K.S. A commentary on learning objectives for accounting education programs: The importance of soft skills and technical knowledge. J. Account. Educ. 2019, 48, 71–79.

- Brunello, G.; Wruuck, P. Skill shortages and skill mismatch: A review of the literature. J. Econ. Surv. 2021, 35, 1145–1167.

- Wagner, J.A.; Hollenbeck, J.R. Organizational Behavior: Securing Competitive Advantage; Routledge: Abingdon, UK, 2020.

- Ibrahim, R.; Boerhannoeddin, A.; Bakare, K.K. The effect of soft skills and training methodology on employee performance. Eur. J. Train. Dev. 2017, 41, 388–406.

- Heckman, J.J.; Kautz, T. Hard evidence on soft skills. Labour Econ. 2012, 19, 451–464.

- Asbari, M.; Purwanto, A.; Ong, F.; Mustikasiwi, A.; Maesaroh, S.; Mustofa, M.; Hutagalung, D.; Andriyani, Y. Impact of hard skills, soft skills and organizational culture: Lecturer innovation competencies as mediating. EduPsyCouns J. Educ. Psychol. Couns. 2020, 2, 101–121.

- De Mauro, A.; Greco, M.; Grimaldi, M.; Ritala, P. Human resources for Big Data professions: A systematic classification of job roles and required skill sets. Inf. Process. Manag. 2018, 54, 807–817.

- Autor, D.H. Work of the Past, Work of the Future. AEA Pap. Proc. 2019, 109, 1–32.

- Groysberg, B.; Lee, J.; Price, J.; Cheng, J. The leader’s guide to corporate culture. Harv. Bus. Rev. 2018, 96, 44–52.

- Isphording, I.E. Language and labor market success. In International Encyclopedia of the Social & Behavioral Sciences; Institute of Labor Economics: Bonn, Germany, 2014.

- Berg, P.; Kossek, E.E.; Misra, K.; Belman, D. Work-life flexibility policies: Do unions affect employee access and use? ILR Rev. 2014, 67, 111–137.

- Bilal, M.; Malik, N.; Khalid, M.; Lali, M.I.U. Exploring industrial demand trend’s in Pakistan software industry using online job portal data. Univ. Sindh J. Inf. Commun. Technol. 2017, 1, 17–24.

- Clarke, M. Rethinking graduate employability: The role of capital, individual attributes and context. Stud. High. Educ. 2018, 43, 1923–1937.

- Mahany, A.; Khaled, H.; Elmitwally, N.S.; Aljohani, N.; Ghoniemy, S. Negation and Speculation in NLP: A Survey, Corpora, Methods, and Applications. Appl. Sci. 2022, 12, 5209.

- Kalyan, K.S.; Rajasekharan, A.; Sangeetha, S. Ammus: A survey of transformer-based pretrained models in natural language processing. arXiv 2021, arXiv:2108.05542.

- Devlin, J.; Chang, M.-W.; Lee, K.; Toutanova, K. Bert: Pre-training of deep bidirectional transformers for language understanding. arXiv 2018, arXiv:1810.04805.

- Fellbaum, C. WordNet. In Theory and Applications of Ontology: Computer Applications; Springer: Berlin/Heidelberg, Germany, 2010; pp. 231–243.

- Black, S.; Biderman, S.; Hallahan, E.; Anthony, Q.; Gao, L.; Golding, L.; He, H.; Leahy, C.; McDonell, K.; Phang, J. Gpt-neox-20b: An open-source autoregressive language model. arXiv 2022, arXiv:2204.06745.

- Li, J.; Sun, A.; Han, J.; Li, C. A survey on deep learning for named entity recognition. IEEE Trans. Knowl. Data Eng. 2020, 34, 50–70.

- Joulin, A.; Grave, E.; Bojanowski, P.; Douze, M.; Jégou, H.; Mikolov, T. FastText.zip: Compressing text classification models. arXiv 2016, arXiv:1612.03651.

- Salton, G. Introduction to Modern Information Retrieval; McGraw-Hill: New York, NY, USA, 1983.

- Reimers, N.; Gurevych, I. Sentence-bert: Sentence embeddings using siamese bert-networks. arXiv 2019, arXiv:1908.10084.

- Mikolov, T.; Sutskever, I.; Chen, K.; Corrado, G.S.; Dean, J. Distributed representations of words and phrases and their compositionality. In Proceedings of the 26th International Conference on Neural Information Processing Systems, Lake Tahoe, NV, USA, 5–10 December 2013.

- Arora, S.; Liang, Y.; Ma, T. A simple but tough-to-beat baseline for sentence embeddings. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

- Pennington, J.; Socher, R.; Manning, C.D. Glove: Global vectors for word representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1532–1543.

- Harris, Z.S. Distributional structure. Word 1954, 10, 146–162.

- Bojanowski, P.; Grave, E.; Joulin, A.; Mikolov, T. Enriching word vectors with subword information. Trans. Assoc. Comput. Linguist. 2017, 5, 135–146.

- Le, Q.; Mikolov, T. Distributed representations of sentences and documents. In Proceedings of the International Conference on Machine Learning, Beijing, China, 21–26 June 2014; pp. 1188–1196.

- Bellman, R.; Kalaba, R. On adaptive control processes. IRE Trans. Autom. Control 1959, 4, 1–9.

- Wang, Y.; Yao, H.; Zhao, S. Auto-encoder based dimensionality reduction. Neurocomputing 2016, 184, 232–242.

- Dong, Y.; Du, B.; Zhang, L.; Zhang, L. Dimensionality reduction and classification of hyperspectral images using ensemble discriminative local metric learning. IEEE Trans. Geosci. Remote Sens. 2017, 55, 2509–2524.

- Thomas, R.; Judith, J. Hybrid dimensionality reduction for outlier detection in high dimensional data. Int. J. 2020, 8, 5883–5888.

- Li, M.; Wang, H.; Yang, L.; Liang, Y.; Shang, Z.; Wan, H. Fast hybrid dimensionality reduction method for classification based on feature selection and grouped feature extraction. Expert Syst. Appl. 2020, 150, 113277.

- McInnes, L.; Healy, J.; Melville, J. Umap: Uniform manifold approximation and projection for dimension reduction. arXiv 2018, arXiv:1802.03426.

- Sumithra, V.; Surendran, S. A review of various linear and non linear dimensionality reduction techniques. Int. J. Comput. Sci. Inf. Technol. 2015, 6, 2354–2360.

- Abdi, H.; Williams, L.J. Principal component analysis. Wiley Interdiscip. Rev. Comput. Stat. 2010, 2, 433–459.

- Du, X.; Zhu, F. A novel principal components analysis (PCA) method for energy absorbing structural design enhanced by data mining. Adv. Eng. Softw. 2019, 127, 17–27.

- Iannucci, L. Chemometrics for data interpretation: Application of principal components analysis (PCA) to multivariate spectroscopic measurements. IEEE Instrum. Meas. Mag. 2021, 24, 42–48.

- Fan, C.; Sun, Y.; Zhao, Y.; Song, M.; Wang, J. Deep learning-based feature engineering methods for improved building energy prediction. Appl. Energy 2019, 240, 35–45.

- Van Der Maaten, L. t-SNE. 2019. Available online: https://lvdmaaten.github.io/tsne (accessed on 25 March 2023).

- Linderman, G.C.; Steinerberger, S. Clustering with t-SNE, provably. SIAM J. Math. Data Sci. 2019, 1, 313–332.

- Kobak, D.; Linderman, G.C. Initialization is critical for preserving global data structure in both t-SNE and UMAP. Nat. Biotechnol. 2021, 39, 156–157.

- Becht, E.; McInnes, L.; Healy, J.; Dutertre, C.-A.; Kwok, I.W.; Ng, L.G.; Ginhoux, F.; Newell, E.W. Dimensionality reduction for visualizing single-cell data using UMAP. Nat. Biotechnol. 2019, 37, 38–44.

- Böhm, J.N.; Berens, P.; Kobak, D. A unifying perspective on neighbor embeddings along the attraction-repulsion spectrum. arXiv 2020, arXiv:2007.08902.

- Arunkumar, N.; Mohammed, M.A.; Abd Ghani, M.K.; Ibrahim, D.A.; Abdulhay, E.; Ramirez-Gonzalez, G.; de Albuquerque, V.H.C. K-means clustering and neural network for object detecting and identifying abnormality of brain tumor. Soft Comput. 2019, 23, 9083–9096.

- Jain, A.K. Data clustering: 50 years beyond K-means. Pattern Recognit. Lett. 2010, 31, 651–666.

- Singh, A.; Yadav, A.; Rana, A. K-means with Three different Distance Metrics. Int. J. Comput. Appl. 2013, 67, 13–17.

- Schubert, E.; Sander, J.; Ester, M.; Kriegel, H.P.; Xu, X. DBSCAN revisited, revisited: Why and how you should (still) use DBSCAN. ACM Trans. Database Syst. 2017, 42, 1–21.

- Campello, R.J.; Moulavi, D.; Zimek, A.; Sander, J. Hierarchical density estimates for data clustering, visualization, and outlier detection. ACM Trans. Knowl. Discov. Data 2015, 10, 1–51.

- Zhang, T.; Ramakrishnan, R.; Livny, M. BIRCH: An efficient data clustering method for very large databases. ACM SIGMOD Rec. 1996, 25, 103–114.

- Dueck, D.; Frey, B.J. Non-metric affinity propagation for unsupervised image categorization. In Proceedings of the 2007 IEEE 11th International Conference on Computer Vision, Rio de Janeiro, Brazil, 14–21 October 2007; pp. 1–8.

- Guan, R.; Shi, X.; Marchese, M.; Yang, C.; Liang, Y. Text clustering with seeds affinity propagation. IEEE Trans. Knowl. Data Eng. 2010, 23, 627–637.

- Fang, Q.; Sang, J.; Xu, C.; Rui, Y. Topic-sensitive influencer mining in interest-based social media networks via hypergraph learning. IEEE Trans. Multimed. 2014, 16, 796–812.

- Ng, A.; Jordan, M.; Weiss, Y. On spectral clustering: Analysis and an algorithm. Adv. Neural Inf. Process. Syst. 2001, 14, 849–856.

- Janani, R.; Vijayarani, S. Text document clustering using spectral clustering algorithm with particle swarm optimization. Expert Syst. Appl. 2019, 134, 192–200.

- Lukauskas, M.; Ruzgas, T. A New Clustering Method Based on the Inversion Formula. Mathematics 2022, 10, 2559.

- Lukauskas, M.; Ruzgas, T. Reduced Clustering Method Based on the Inversion Formula Density Estimation. Mathematics 2023, 11, 661.

- Venna, J.; Kaski, S. Neighborhood preservation in nonlinear projection methods: An experimental study. In Proceedings of the Artificial Neural Networks—ICANN 2001: International Conference, Vienna, Austria, 21–25 August 2001; pp. 485–491.

- Rousseeuw, P.J. Silhouettes: A graphical aid to the interpretation and validation of cluster analysis. J. Comput. Appl. Math. 1987, 20, 53–65.

- Davies, D.L.; Bouldin, D.W. A cluster separation measure. IEEE Trans. Pattern Anal. Mach. Intell. 1979, PAMI-1, 224–227.

- Caliński, T.; Harabasz, J. A dendrite method for cluster analysis. Commun. Stat.-Theory Methods 1974, 3, 1–27.

| Components | PCA | UMAP | Trimap | t-SNE | ISOMAP |
|---|---|---|---|---|---|
| 2 | 0.756 | 0.933 | 0.831 | 0.871 | 0.748 |
| 3 | 0.805 | 0.950 | 0.882 | 0.881 | 0.821 |
| 4 | 0.844 | 0.955 | 0.908 | 0.883 | 0.859 |
| 5 | 0.876 | 0.961 | 0.931 | 0.886 | 0.896 |
| 6 | 0.898 | 0.964 | 0.942 | 0.902 | 0.908 |
| 7 | 0.915 | 0.966 | 0.950 | 0.912 | 0.920 |
| 8 | 0.928 | 0.969 | 0.955 | 0.928 | 0.938 |
| 9 | 0.940 | 0.971 | 0.961 | 0.937 | 0.949 |
| 10 | 0.948 | 0.973 | 0.964 | 0.954 | 0.965 |
| 15 | 0.968 | 0.974 | 0.971 | 0.966 | 0.977 |
| 20 | 0.980 | 0.975 | 0.974 | 0.975 | 0.989 |
| 25 | 0.986 | 0.978 | 0.976 | 0.978 | 0.989 |
| 30 | 0.990 | 0.980 | 0.977 | 0.979 | 0.990 |
| 35 | 0.992 | 0.983 | 0.978 | 0.981 | 0.991 |
| 40 | 0.993 | 0.984 | 0.978 | 0.982 | 0.991 |
| 50 | 0.996 | 0.986 | 0.978 | 0.984 | 0.992 |

| Method | Parameters | Davies–Bouldin | Calinski–Harabasz |
|---|---|---|---|
| K-means | {'n_clusters': 5} | 0.9143 | 3386 |
| K-means | {'n_clusters': 10} | 1.0041 | 2995 |
| K-means | {'n_clusters': 20} | 1.0487 | 2551 |
| DBSCAN | {'eps': 0.2, 'min_samples': 30} | 1.1245 | 1352 |
| DBSCAN | {'eps': 0.3, 'min_samples': 20} | 1.1568 | 1458 |
| DBSCAN | {'eps': 0.3, 'min_samples': 50} | 1.2658 | 1589 |
| HDBSCAN | {'cluster_selection_epsilon': 0.3, 'min_cluster_size': 50, 'min_samples': 20} | 0.4475 | 2698 |
| HDBSCAN | {'cluster_selection_epsilon': 0.2, 'min_cluster_size': 20, 'min_samples': 5} | 0.7968 | 1398 |
| HDBSCAN | {'cluster_selection_epsilon': 0.3, 'min_cluster_size': 30, 'min_samples': 5} | 0.9033 | 1548 |
| BIRCH | {'branching_factor': 100, 'n_clusters': 5, 'threshold': 0.4} | 1.1823 | 3216 |
| BIRCH | {'branching_factor': 10, 'n_clusters': 4, 'threshold': 0.3} | 1.2641 | 2515 |
| BIRCH | {'branching_factor': 20, 'n_clusters': 30, 'threshold': 0.4} | 1.2951 | 1927 |
| Affinity propagation | {'damping': 0.5} | 1.1374 | 1011 |
| Affinity propagation | {'damping': 0.8} | 1.2493 | 1265 |
| Affinity propagation | {'damping': 0.7} | 1.2623 | 1255 |
| CBMIDE | {'n_components': 2} | 1.1731 | 1689 |
| CBMIDE | {'n_components': 30} | 1.1875 | 1456 |
| CBMIDE | {'n_components': 20} | 1.2041 | 1265 |
| RCBMIDE | {'n_components': 4} | 1.0931 | 2035 |
| RCBMIDE | {'n_components': 20} | 1.1175 | 1689 |
| RCBMIDE | {'n_components': 10} | 1.1540 | 1356 |
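The two score columns in the table above are standard internal validity indices: a lower Davies–Bouldin value and a higher Calinski–Harabasz value both indicate more compact, better-separated clusters. The following is a minimal pure-Python sketch of their textbook definitions, not the code used to produce the table; the four toy points and their labels are invented for illustration, and the function names are hypothetical.

```python
import math


def sq_dist(p, q):
    """Squared Euclidean distance between two points."""
    return sum((a - b) ** 2 for a, b in zip(p, q))


def centroid(points):
    """Coordinate-wise mean of a non-empty list of points."""
    dim = len(points[0])
    return [sum(p[d] for p in points) / len(points) for d in range(dim)]


def group_by_label(points, labels):
    """Split points into one list per cluster label."""
    clusters = {}
    for point, label in zip(points, labels):
        clusters.setdefault(label, []).append(point)
    return list(clusters.values())


def davies_bouldin(points, labels):
    """Mean over clusters of the worst (s_i + s_j) / d(c_i, c_j) ratio."""
    clusters = group_by_label(points, labels)
    centers = [centroid(c) for c in clusters]
    # s_i: average distance of the members of cluster i to its centroid.
    scatter = [
        sum(math.sqrt(sq_dist(p, ctr)) for p in c) / len(c)
        for c, ctr in zip(clusters, centers)
    ]
    k = len(clusters)
    worst_ratios = [
        max(
            (scatter[i] + scatter[j]) / math.sqrt(sq_dist(centers[i], centers[j]))
            for j in range(k) if j != i
        )
        for i in range(k)
    ]
    return sum(worst_ratios) / k


def calinski_harabasz(points, labels):
    """Between-cluster dispersion over within-cluster dispersion,
    each divided by its degrees of freedom (k - 1 and n - k)."""
    clusters = group_by_label(points, labels)
    n, k = len(points), len(clusters)
    overall = centroid(points)
    between = sum(len(c) * sq_dist(centroid(c), overall) for c in clusters)
    within = sum(sq_dist(p, centroid(c)) for c in clusters for p in c)
    return (between / (k - 1)) / (within / (n - k))


# Toy data (assumption): two tight, well-separated clusters.
toy_points = [(0.0, 0.0), (0.0, 2.0), (10.0, 0.0), (10.0, 2.0)]
toy_labels = [0, 0, 1, 1]
db_score = davies_bouldin(toy_points, toy_labels)
ch_score = calinski_harabasz(toy_points, toy_labels)
print(db_score, ch_score)
```

Both indices require at least two clusters, and density-based methods such as DBSCAN and HDBSCAN can label points as noise, so how noise points are treated affects the scores and should be stated when comparing methods.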

| Profile | Synthesized Keyword-Based Job Profile |
|---|---|
| 2 | This profile is about a finance and accounting professional with a strong background in economics and financial management. They have experience working as an accountant and are skilled in using Excel and other MS Office programs, as well as accounting software such as Navision and Rivilė. Their expertise includes tax preparation, regulation compliance, and legal aspects related to financial operations. They possess analytical and critical thinking abilities, which contribute to their effectiveness in the financial field. The individual has a higher level of education, potentially a degree in business or economics and has several years of experience in industry. They may also have knowledge of the financial regulations specific to a certain republic or region. |
| 3 | This profile is about a software development and testing professional with expertise in various programming languages, tools, and technologies. They have experience working with databases, APIs, security, and data management in both web and Windows environments. They are also knowledgeable in Agile project management methodologies and have a strong understanding of software systems and development processes. Their skills include SQL, Python, and JavaScript programming, as well as using various testing and development tools. |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Lukauskas, M.; Šarkauskaitė, V.; Pilinkienė, V.; Stundžienė, A.; Grybauskas, A.; Bruneckienė, J. Enhancing Skills Demand Understanding through Job Ad Segmentation Using NLP and Clustering Techniques. Appl. Sci. 2023, 13, 6119. https://doi.org/10.3390/app13106119
