Machine Learning and Image-Processing-Based Method for the Detection of Archaeological Structures in Areas with Large Amounts of Vegetation Using Satellite Images

Abstract

1. Introduction

2. Related Work

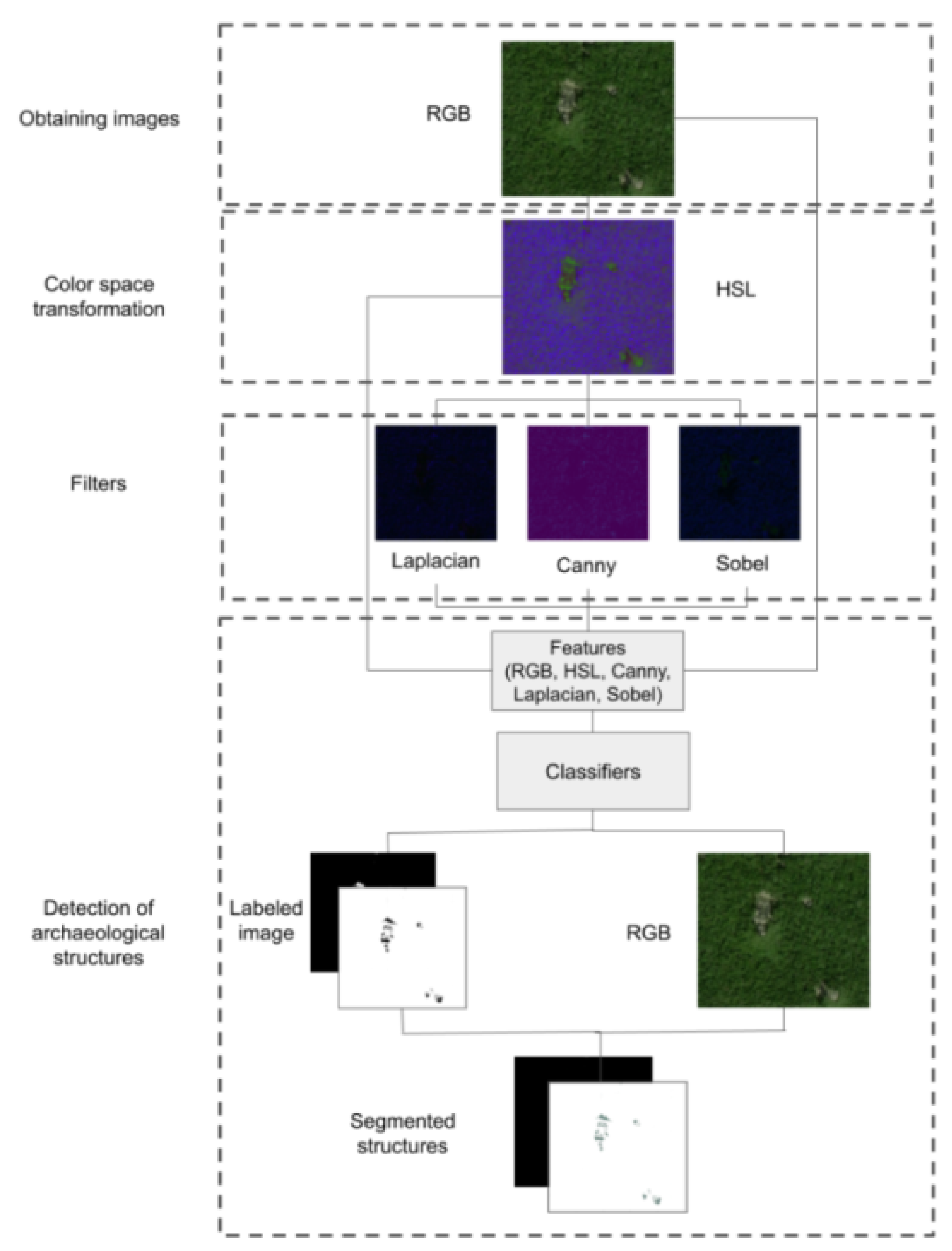

3. Proposed Methods

Algorithm 1. Workflow of our proposed method.

3.1. Obtaining Images

3.2. Color Space Transformation

- RGB color space: The typical format is a 24-bit encoding in which each pixel is built from three primary colors, red (R), green (G), and blue (B), each stored in a separate channel that cameras and computers process directly. With 8 bits per channel, each channel takes 256 distinct values, giving 256 × 256 × 256 = 16,777,216 possible colors. The final color is produced by additive mixing; the image is split into its three channels (R, G, and B), and each channel is treated as a distinct feature.

- HSL color space: This consists of three channels: H (hue), which identifies the base color (for example, red, green, or blue); L (lightness), which measures the amount of light in the image, with low values tending toward black; and S (saturation), which determines whether a color fades toward gray or keeps its original intensity. Choosing this color space allows us to process the image in individual planes: the color information is carried by hue and lightness, while saturation is used as a masking image that isolates the area of interest [7].
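The two color spaces above can be illustrated with a minimal sketch that uses only the Python standard library. It checks the 24-bit color count, converts a single 8-bit RGB pixel to HSL, and builds a simple saturation mask. The function names `rgb8_to_hsl` and `saturation_mask` and the threshold value are illustrative assumptions, not the procedure used in this work.

```python
import colorsys

# Colors representable with 8 bits per channel and three channels (R, G, B).
BITS_PER_CHANNEL = 8
levels_per_channel = 2 ** BITS_PER_CHANNEL   # 256 values per channel
total_colors = levels_per_channel ** 3       # 16,777,216 combinations


def rgb8_to_hsl(r, g, b):
    """Convert one 8-bit RGB pixel to (hue in degrees, saturation, lightness)."""
    # colorsys expects floats in [0, 1] and returns the order (h, l, s).
    h, l, s = colorsys.rgb_to_hls(r / 255.0, g / 255.0, b / 255.0)
    return h * 360.0, s, l


def saturation_mask(pixels, threshold=0.25):
    """Return a boolean mask that is True where saturation exceeds the threshold.

    `threshold` is a hypothetical value chosen only for this illustration.
    """
    return [[rgb8_to_hsl(*px)[1] > threshold for px in row] for row in pixels]


# A tiny 2x2 "image": a green pixel, a mid gray, a pure red, and a dark gray.
patch = [
    [(34, 139, 34), (128, 128, 128)],
    [(255, 0, 0), (20, 20, 20)],
]
mask = saturation_mask(patch)
```

Gray pixels have zero saturation regardless of their lightness, so the mask separates them from colored pixels. A real pipeline would apply the same idea to whole image arrays rather than nested lists.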

3.3. Filters

- The Canny filter is based on the first derivative of a Gaussian function. Because raw image data is often affected by noise, the original image is first smoothed with a Gaussian filter to reduce the noise, which yields a slightly blurred version of the original image [37].

- The Laplacian filter, on the other hand, is an edge detector that computes the second derivatives of an image, that is, the rate of change of the first derivative. It is used to determine whether a change in adjacent pixel values represents an edge or a continuous progression [37].

- Finally, the Sobel filter convolves the image with a small, separable, integer-valued kernel in both the horizontal and vertical directions. This makes it relatively inexpensive in terms of runtime [37].
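As a rough illustration of the derivative-based filters described above, the following sketch applies the standard 3×3 Sobel kernels and a 4-neighbour Laplacian kernel to a small synthetic image in pure Python. The helper names (`filter3x3`, `sobel_magnitude`) and the test image are assumptions made for this example. In practice a library such as OpenCV [37] would be used instead. The Canny detector is not shown, since it adds Gaussian smoothing, non-maximum suppression, and hysteresis thresholding on top of the gradient step.

```python
# Standard 3x3 kernels: Sobel (first derivative) and Laplacian (second derivative).
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]
LAPLACIAN = [[0, 1, 0], [1, -4, 1], [0, 1, 0]]


def filter3x3(image, kernel):
    """Apply a 3x3 kernel (cross-correlation form) to the interior pixels.

    Border pixels are left at 0 to keep the sketch short.
    """
    rows, cols = len(image), len(image[0])
    out = [[0] * cols for _ in range(rows)]
    for y in range(1, rows - 1):
        for x in range(1, cols - 1):
            out[y][x] = sum(
                kernel[j][i] * image[y + j - 1][x + i - 1]
                for j in range(3)
                for i in range(3)
            )
    return out


def sobel_magnitude(image):
    """Combine horizontal and vertical Sobel responses into a gradient magnitude."""
    gx = filter3x3(image, SOBEL_X)
    gy = filter3x3(image, SOBEL_Y)
    return [
        [(a * a + b * b) ** 0.5 for a, b in zip(row_x, row_y)]
        for row_x, row_y in zip(gx, gy)
    ]


# Synthetic grayscale image with a vertical step edge (dark left, bright right).
step = [[0, 0, 0, 255, 255, 255] for _ in range(5)]

gradient = sobel_magnitude(step)
second_derivative = filter3x3(step, LAPLACIAN)
```

On this step image the Sobel magnitude peaks on the two columns adjacent to the edge and is zero in the flat regions, while the Laplacian response changes sign across the edge. That sign change (zero crossing) is what distinguishes an edge from a gradual intensity ramp.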

3.4. Detection of Archaeological Structures

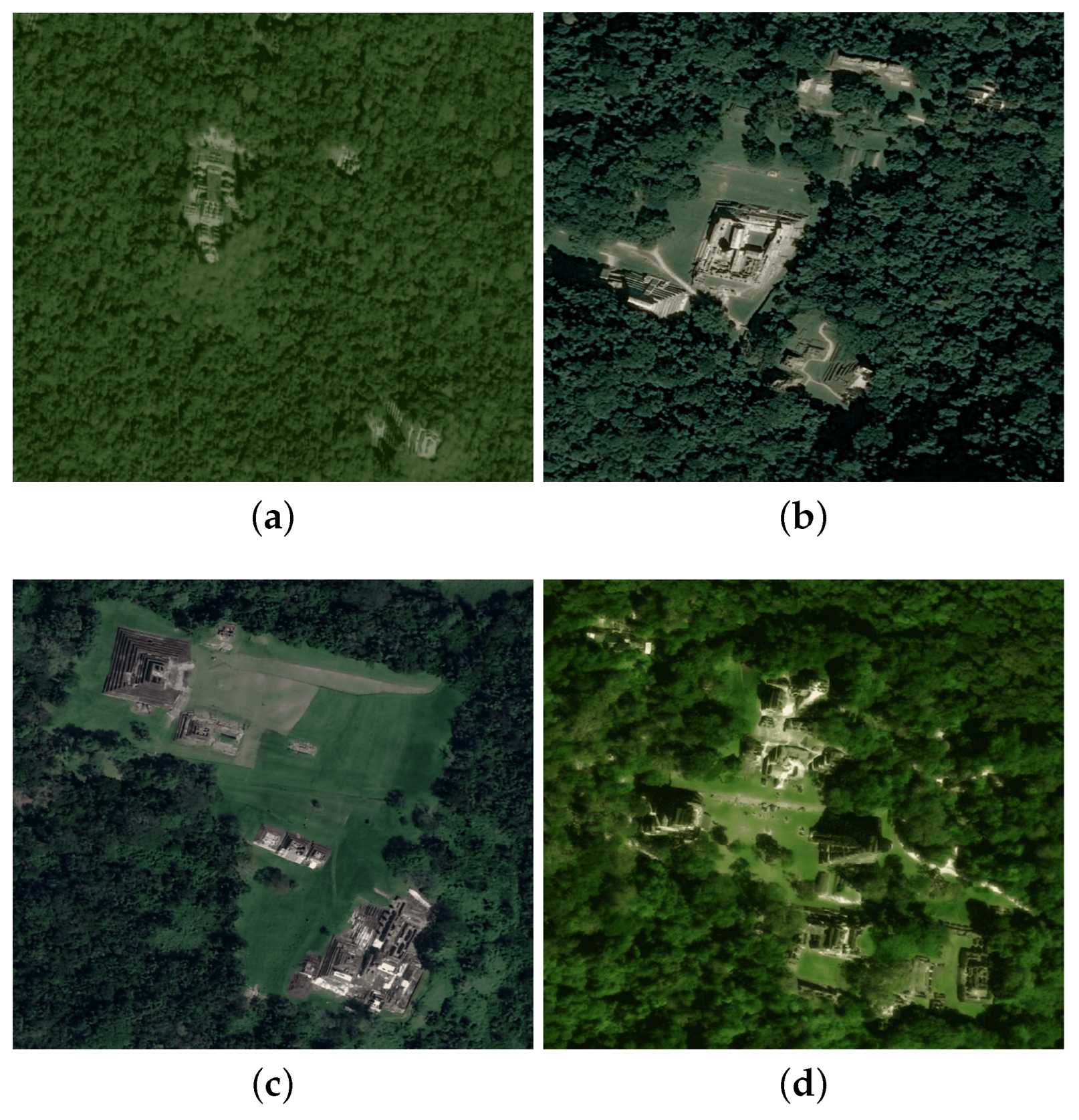

4. Experiments

5. Discussion

6. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Chaudhuri, D.; Kushwaha, N.K.; Samal, A.; Agarwal, R.C. Automatic Building Detection From High-Resolution Satellite Images Based on Morphology and Internal Gray Variance. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 1767–1779.

- Sirmacek, B.; Unsalan, C. A Probabilistic Framework to Detect Buildings in Aerial and Satellite Images. IEEE Trans. Geosci. Remote Sens. 2011, 49, 211–221.

- Chen, X.; Xiang, S.; Liu, C.L.; Pan, C.H. Vehicle Detection in Satellite Images by Hybrid Deep Convolutional Neural Networks. IEEE Geosci. Remote Sens. Lett. 2014, 11, 1797–1801.

- Sun, X.; Wang, P.; Wang, C.; Liu, Y.; Fu, K. PBNet: Part-based convolutional neural network for complex composite object detection in remote sensing imagery. ISPRS J. Photogramm. Remote Sens. 2021, 173, 50–65.

- Can, G.; Mantegazza, D.; Abbate, G.; Chappuis, S.; Giusti, A. Semantic segmentation on Swiss3DCities: A benchmark study on aerial photogrammetric 3D pointcloud dataset. Pattern Recognit. Lett. 2021, 150, 108–114.

- Bourgeois, J.; Meganck, M. Aerial Photography and Archaeology 2003: A Century of Information; Papers Presented During the Conference Held at the Ghent University, December 10th–12th, 2003; Academia Press: Cambridge, MA, USA, 2005; Volume 4.

- Gonzalez, R.C.; Woods, R.E. Digital Image Processing; Pearson: London, UK, 2018.

- Caspari, G. Mapping and damage assessment of “royal” burial mounds in the Siberian Valley of the Kings. Remote Sens. 2020, 12, 773.

- Luo, L.; Wang, X.; Guo, H.; Lasaponara, R.; Zong, X.; Masini, N.; Wang, G.; Shi, P.; Khatteli, H.; Chen, F.; et al. Airborne and spaceborne remote sensing for archaeological and cultural heritage applications: A review of the century (1907–2017). Remote Sens. Environ. 2019, 232, 111280.

- Caspari, G.; Crespo, P. Convolutional neural networks for archaeological site detection–Finding “princely” tombs. J. Archaeol. Sci. 2019, 110, 104998.

- Traviglia, A.; Torsello, A. Landscape pattern detection in archaeological remote sensing. Geosciences 2017, 7, 128.

- Lambers, K.; Verschoof-van der Vaart, W.B.; Bourgeois, Q.P. Integrating remote sensing, machine learning, and citizen science in Dutch archaeological prospection. Remote Sens. 2019, 11, 794.

- Soroush, M.; Mehrtash, A.; Khazraee, E.; Ur, J.A. Deep learning in archaeological remote sensing: Automated qanat detection in the kurdistan region of Iraq. Remote Sens. 2020, 12, 500.

- Gansell, A.R.; van de Meent, J.W.; Zairis, S.; Wiggins, C.H. Stylistic clusters and the Syrian/South Syrian tradition of first-millennium BCE Levantine ivory carving: A machine learning approach. J. Archaeol. Sci. 2014, 44, 194–205.

- Hörr, C.; Lindinger, E.; Brunnett, G. Machine learning based typology development in archaeology. J. Comput. Cult. Herit. (JOCCH) 2014, 7, 1–23.

- Wilczek, J.; Monna, F.; Gabillot, M.; Navarro, N.; Rusch, L.; Chateau, C. Unsupervised model-based clustering for typological classification of Middle Bronze Age flanged axes. J. Archaeol. Sci. Rep. 2015, 3, 381–391.

- Barone, G.; Mazzoleni, P.; Spagnolo, G.V.; Raneri, S. Artificial neural network for the provenance study of archaeological ceramics using clay sediment database. J. Cult. Herit. 2019, 38, 147–157.

- Adams, R.E.; Brown, W.E.; Culbert, T.P. Radar mapping, archaeology, and ancient Maya land use. Science 1981, 213, 1457–1468.

- Inomata, T.; Triadan, D.; López, V.A.V.; Fernandez-Diaz, J.C.; Omori, T.; Bauer, M.B.M.; Hernández, M.G.; Beach, T.; Cagnato, C.; Aoyama, K.; et al. Monumental architecture at Aguada Fénix and the rise of Maya civilization. Nature 2020, 582, 530–533.

- Hansen, R.D.; Morales-Aguilar, C.; Thompson, J.; Ensley, R.; Hernández, E.; Schreiner, T.; Suyuc-Ley, E.; Martínez, G. LiDAR analyses in the contiguous Mirador-Calakmul Karst Basin, Guatemala: An introduction to new perspectives on regional early Maya socioeconomic and political organization. Anc. Mesoam. 2022, 1–40.

- Monna, F.; Magail, J.; Rolland, T.; Navarro, N.; Wilczek, J.; Gantulga, J.O.; Esin, Y.; Granjon, L.; Allard, A.C.; Chateau-Smith, C. Machine learning for rapid mapping of archaeological structures made of dry stones–Example of burial monuments from the Khirgisuur culture, Mongolia–. J. Cult. Herit. 2020, 43, 118–128.

- Lindsay, I.; Mkrtchyan, A. Free and Low-Cost Aerial Remote Sensing in Archaeology: An Overview of Data Sources and Recent Applications in the South Caucasus. Adv. Archaeol. Pract. 2023, 1–20.

- Jiang, H.; Peng, M.; Zhong, Y.; Xie, H.; Hao, Z.; Lin, J.; Ma, X.; Hu, X. A Survey on Deep Learning-Based Change Detection from High-Resolution Remote Sensing Images. Remote Sens. 2022, 14, 1552.

- UIS. World Heritage in Danger. 2021. Available online: https://whc.unesco.org/en/158/ (accessed on 14 May 2023).

- Levin, N.; Ali, S.; Crandall, D.; Kark, S. World Heritage in danger: Big data and remote sensing can help protect sites in conflict zones. Glob. Environ. Chang. 2019, 55, 97–104.

- Davis, D.S. Geographic disparity in machine intelligence approaches for archaeological remote sensing research. Remote Sens. 2020, 12, 921.

- Van der Maaten, L.; Boon, P.; Lange, G.; Paijmans, H.; Postma, E. Computer Vision and Machine Learning for Archaeology. In Proceedings of the 34th Computer Applications and Quantitative Methods in Archaeology, Fargo, ND, USA, 18–21 April 2006; pp. 112–130.

- Character, L.; Ortiz, A., Jr.; Beach, T.; Luzzadder-Beach, S. Archaeologic Machine Learning for Shipwreck Detection Using Lidar and Sonar. Remote Sens. 2021, 13, 1759.

- Pinilla-Buitrago, L.A.; Carrasco-Ochoa, J.A.; Martinez-Trinidad, J.F. Including Foreground and Background Information in Maya Hieroglyph Representation. In Proceedings of the Mexican Conference on Pattern Recognition, Puebla, Mexico, 27–30 June 2018; pp. 238–247.

- Pinilla-Buitrago, L.A.; Carrasco-Ochoa, J.A.; Martínez-Trinidad, J.F.; Román-Rangel, E. Improved hieroglyph representation for image retrieval. J. Comput. Cult. Herit. (JOCCH) 2019, 12, 1–15.

- Hollesen, J.; Jepsen, M.S.; Harmsen, H. The Application of RGB, Multispectral, and Thermal Imagery to Document and Monitor Archaeological Sites in the Arctic: A Case Study from South Greenland. Drones 2023, 7, 115.

- Liu, Y.; Hu, Q.; Wang, S.; Zou, F.; Ai, M.; Zhao, P. Discovering the Ancient Tomb under the Forest Using Machine Learning with Timing-Series Features of Sentinel Images: Taking Baling Mountain in Jingzhou as an Example. Remote Sens. 2023, 15, 554.

- Bachagha, N.; Elnashar, A.; Tababi, M.; Souei, F.; Xu, W. The Use of Machine Learning and Satellite Imagery to Detect Roman Fortified Sites: The Case Study of Blad Talh (Tunisia Section). Appl. Sci. 2023, 13, 2613.

- Demydov, V. SAS Planet. Available online: http://www.sasgis.org/ (accessed on 14 May 2023).

- Seong, H.; Son, H.; Kim, C. A comparative study of machine learning classification for color-based safety vest detection on construction-site images. KSCE J. Civ. Eng. 2018, 22, 4254–4262.

- Castillo, R.; Hernández, J.M.; Inzunza, E.; Torres, J.P. Procesamiento Digital de Imágenes Empleando Filtros Espaciales. In Proceedings of the Décima Segunda Conferencia Iberoamericana en Sistemas, Cibernética e Informática: CISCI 2013, Orlando, FL, USA, 9–12 July 2013.

- Intel Corporation; Bradski, G.; Kaehler, A.; et al. Open Source Computer Vision Library. Available online: https://docs.opencv.org (accessed on 14 May 2023).

| Archaeological Site | Structure | Non-Structure |
|---|---|---|
| Calakmul | 178 | 222 |
| Palenque | 133 | 204 |
| Comalcalco | 193 | 564 |
| Tikal | 113 | 192 |

| Algorithm | Precision | Recall | F1 Score | Accuracy |
|---|---|---|---|---|
| Calakmul | | | | |
| KNN | 1.00 | 1.00 | 1.00 | 1.00 |
| NN | 1.00 | 1.00 | 1.00 | 1.00 |
| RF | 1.00 | 1.00 | 1.00 | 1.00 |
| SVM | 1.00 | 1.00 | 1.00 | 1.00 |
| LR | 1.00 | 1.00 | 1.00 | 1.00 |
| Palenque | | | | |
| KNN | 1.00 | 0.99 | 0.99 | 0.99 |
| NN | 1.00 | 1.00 | 1.00 | 1.00 |
| RF | 1.00 | 1.00 | 1.00 | 1.00 |
| SVM | 1.00 | 0.96 | 0.98 | 0.98 |
| LR | 1.00 | 1.00 | 1.00 | 1.00 |
| Comalcalco | | | | |
| KNN | 1.00 | 0.97 | 0.98 | 0.99 |
| NN | 0.99 | 0.98 | 0.99 | 0.99 |
| RF | 1.00 | 0.99 | 0.99 | 0.99 |
| SVM | 0.98 | 1.00 | 0.99 | 0.99 |
| LR | 1.00 | 0.99 | 0.99 | 0.99 |
| Tikal | | | | |
| KNN | 1.00 | 1.00 | 1.00 | 1.00 |
| NN | 1.00 | 1.00 | 1.00 | 1.00 |
| RF | 1.00 | 1.00 | 1.00 | 1.00 |
| SVM | 1.00 | 1.00 | 1.00 | 1.00 |
| LR | 1.00 | 1.00 | 1.00 | 1.00 |

| Algorithm | Precision | Recall | F1 Score | Accuracy |
|---|---|---|---|---|
| KNN | 0.97 | 0.92 | 0.94 | 0.94 |
| NN | 0.95 | 0.94 | 0.94 | 0.96 |
| RF | 0.96 | 0.92 | 0.94 | 0.96 |
| SVM | 0.96 | 0.95 | 0.95 | 0.97 |
| LR | 0.96 | 0.94 | 0.95 | 0.97 |
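The precision, recall, F1 score, and accuracy columns in the tables above are assumed here to follow the standard binary-classification definitions, with "structure" as the positive class. The sketch below states those definitions and recomputes F1 from the rounded precision and recall of two rows of the last table. The function names and the confusion-matrix counts are hypothetical and serve only as illustration.

```python
def f1_from(precision, recall):
    """Harmonic mean of precision and recall (0.0 when both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def classification_metrics(tp, fp, fn, tn):
    """Return (precision, recall, f1, accuracy) from confusion-matrix counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return precision, recall, f1_from(precision, recall), accuracy


# Recompute F1 from the rounded precision/recall reported for KNN and SVM.
f1_knn = round(f1_from(0.97, 0.92), 2)
f1_svm = round(f1_from(0.96, 0.95), 2)

# Hypothetical counts, invented for this example (not taken from the experiments).
precision, recall, f1, accuracy = classification_metrics(tp=48, fp=0, fn=2, tn=50)
```

Because the reported values are rounded to two decimals, a recomputed F1 can differ from the table in the last digit. For the two rows checked here, the recomputed values agree with the reported 0.94 and 0.95.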

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fuentes-Carbajal, J.A.; Carrasco-Ochoa, J.A.; Martínez-Trinidad, J.F.; Flores-López, J.A. Machine Learning and Image-Processing-Based Method for the Detection of Archaeological Structures in Areas with Large Amounts of Vegetation Using Satellite Images. Appl. Sci. 2023, 13, 6663. https://doi.org/10.3390/app13116663
