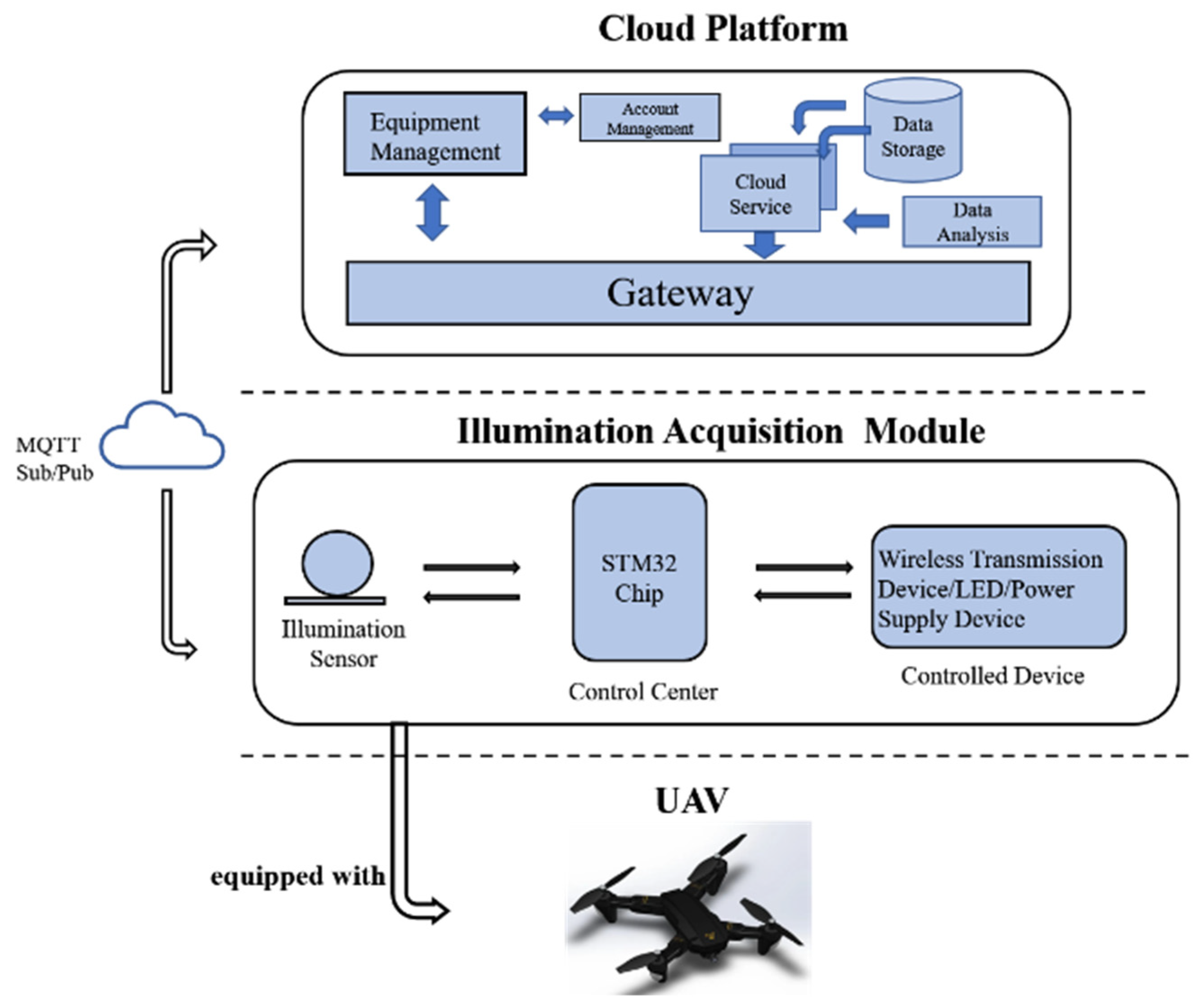

2.1. Illumination Acquisition System Architecture

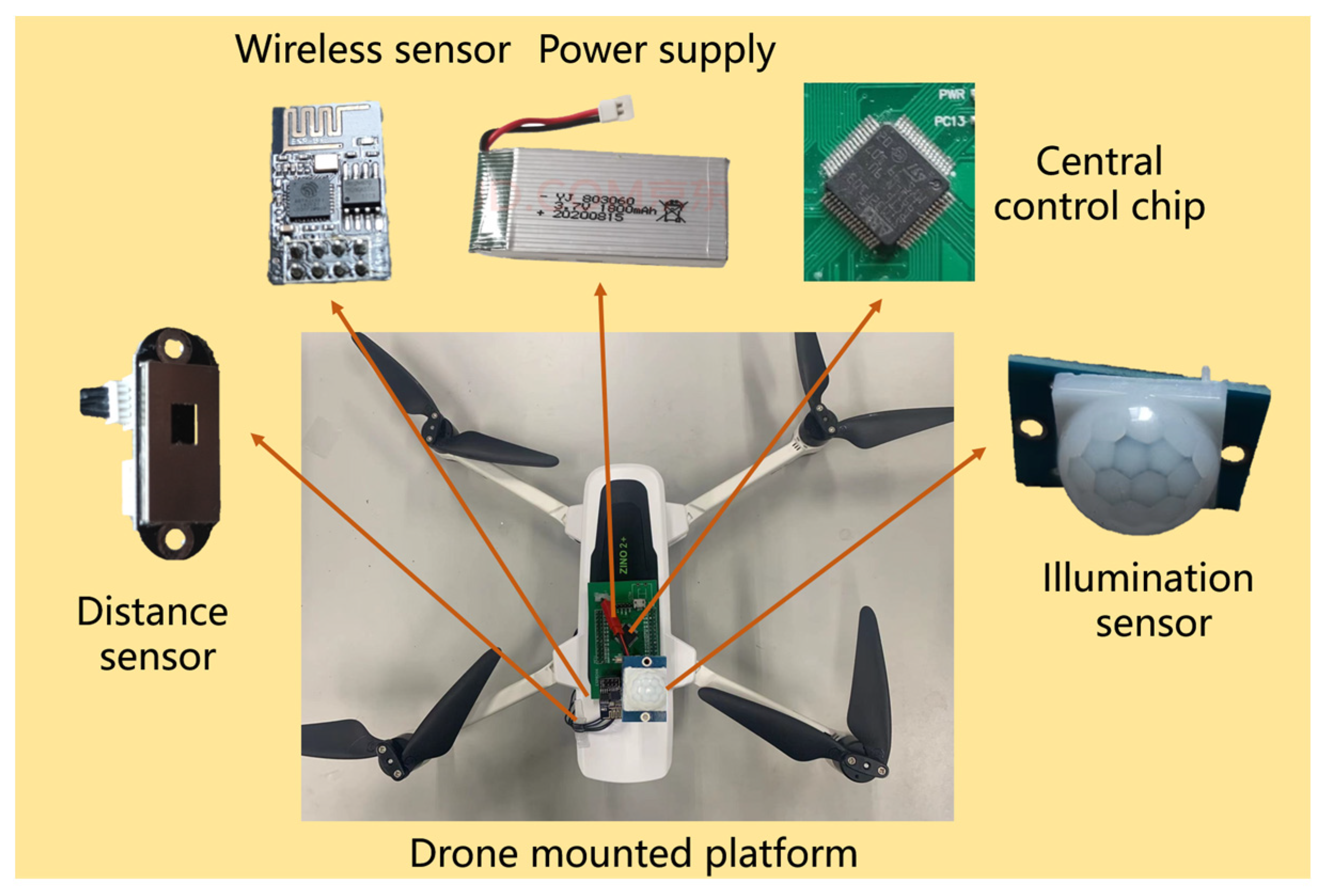

The visibility function, defined as the spectral luminous efficiency, represents the visual sensitivity of the human eye at different wavelengths. Since stadium lighting mainly serves athletes and spectators, the response of the detection equipment used to measure stadium illuminance needs to be consistent with that of the human eye. The illuminance sensor, which is the main information input for the dynamic measurement system, also needs to measure quickly and accurately. A photodiode illumination sensor built around ROHM Semiconductor’s BH1750FVI chip is therefore chosen as the system’s illumination acquisition component. The BH1750FVI is commonly used in electronic devices and projects that require light detection and measurement. Its key features include compact size, low power consumption, and digital output, and it uses a built-in 16-bit analog-to-digital converter (ADC) to provide accurate light intensity readings over a wide range of light levels, from very low to high intensities. As a real-time acquisition system mounted on a UAV, the system should be small, consume little power, and offer high real-time performance. An STM32 chip made by STMicroelectronics is used as the central processing unit of the system proposed in this paper. The model chosen is the STM32F103RCT6, which is small, low in power consumption, and fast, and is therefore easy to mount. Furthermore, it can run the FreeRTOS operating system, which achieves higher real-time performance through multitasking with time-slice rotation.
The system can also transmit illumination data wirelessly to the cloud platform through a WiFi communication module, the ESP8266 made by Espressif Systems [18]. The specific model is the ESP8266-01S, whose total data-transfer rate is designed for 2 Mbps.

WiFi wireless transmission technology is easy to network, and no wiring is required for data transmission [14]. Meanwhile, the data-transmission distance can reach about 100 m. To cope with illuminance measurements at different heights, a laser distance sensor is included. The distance sensor used in this paper is the ToF Sense-UART, which can easily communicate with different modules. The OneNET IoT platform is selected as the data-receiving platform for wireless transmission; it supports a variety of network environments and protocol types. The platform has rich functions, including fast access to various sensors and smart hardware, rich APIs, and application templates that support the development of various industry applications.

As for the carrying platform, the HUBSAN ZINO2+ UAV was chosen for illumination acquisition. It is a professional-grade UAV that can hover accurately and stably indoors or outdoors, and it can carry a payload of 500 g. The UAV supports a variety of flight modes, such as variable-speed and constant-speed flight. In addition, the mobile app provides real-time feedback, recording the duration of each flight and the hovering position. The HUBSAN ZINO2+ UAV used in this experiment has an optical-flow positioning function, which is mainly used to determine position in an indoor environment. Optical-flow navigation is a positioning method that has been adopted in UAV positioning and control systems in recent years. When the UAV is indoors, it determines the current position from the information acquired by a dedicated camera on the tail of the UAV. The structure of the illuminance acquisition module is shown in Figure 1.

As shown in Figure 1, the system is structured in three main layers: the UAV, the illumination acquisition module, and the cloud platform. The UAV serves as the working platform for the entire illuminance acquisition system and plays an important role both in illuminance acquisition and in positioning the measurement points. The illumination acquisition module is the data-input and command-execution part of the system, consisting of an illumination sensor (BH1750FVI), a WiFi transmission section (ESP8266), a laser distance-measurement module (ToF Sense), and a Li-ion battery power supply. The module can acquire illuminance data every 180 ms. Its individual parts exchange data via different communication protocols: data transfer between the illuminance sensor and the STM32F103RCT6 uses the I2C protocol, command delivery and data transfer between the ESP8266 and the STM32F103RCT6 use the UART serial protocol, and the STM32F103RCT6 also controls the laser distance-measurement module to perform the height check. When the UAV illumination acquisition system acquires illumination in a sports venue, the distribution of illumination varies with height, as follows from the inverse-square law. Therefore, the system needs to carry a distance-measurement module. The ToF Sense measures distance at a refresh rate of 30 Hz over a range of 0.03–8 m with an accuracy of ±0.03 m. In addition, its small size and low power consumption make it well suited for use as a sensor on the UAV. The communication between the various parts of the system is shown in Figure 2.
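As an illustration of the sensor-side data handling, the following minimal Python sketch converts the two bytes returned by the BH1750FVI over I2C into an illuminance value. It assumes the sensor's high-resolution mode, for which the ROHM datasheet gives the conversion as the 16-bit count divided by 1.2; the paper does not state which mode is used, and the function name `bh1750_raw_to_lux` is hypothetical.

```python
def bh1750_raw_to_lux(high_byte, low_byte, accuracy_factor=1.2):
    """Convert a BH1750FVI two-byte reading to lux.

    Assumption: high-resolution mode, where lux = raw count / 1.2
    (the datasheet's default measurement-accuracy factor).
    """
    for value in (high_byte, low_byte):
        if not 0 <= value <= 0xFF:
            raise ValueError("each byte must be in the range 0..255")
    raw = (high_byte << 8) | low_byte  # the sensor sends the high byte first
    return raw / accuracy_factor


# Bytes 0x83 and 0x90 form the count 33680, i.e. roughly 28067 lx.
print(round(bh1750_raw_to_lux(0x83, 0x90)))
```

On the actual system this conversion would run in the STM32 firmware; Python is used here only to keep the arithmetic easy to check.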

2.2. System Software Design

The software for the system proposed in this paper is developed in the Keil uVision5 integrated development environment. It includes the program that performs illumination acquisition and the configuration that sets the ESP8266 module to the appropriate mode for connecting to the cloud platform and transferring data. The FreeRTOS real-time operating system is chosen as the basis for the programming. FreeRTOS is free and open source, with a compact kernel and strong real-time performance. Programs in FreeRTOS are built from tasks, each of which is a program entity that carries out one specific purpose. The overall system design is realized by switching between tasks according to their priorities and by controlling the four task states. The transitions between the task states in FreeRTOS are shown in Figure 3.

As shown in Figure 3, tasks in the FreeRTOS operating system have four states: ready, blocked, running, and suspended. Whenever a task is created successfully, it is automatically put in the ready state. If the new task has a higher priority than the running task, it enters the running state; otherwise, it remains in the ready state. When a task in the running state calls a blocking function such as vTaskDelay(), it switches to the blocked state. A blocked task cannot execute; it leaves the blocked state and returns to the ready state when the event it is waiting for occurs or when its delay time expires, as indicated in the diagram. Among all tasks in the ready state, the one with the highest priority enters the running state. In addition, when the vTaskSuspend() function is called, the task is placed in the suspended state, and a suspended task is not scheduled, however long it waits. It can only be made schedulable again by calling the vTaskResume() function.
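The state transitions described above can be modeled as a small lookup table. The Python sketch below is only a model of the four states for illustration, not FreeRTOS code: the event labels `scheduled`, `preempted`, and `event_or_timeout` are hypothetical names, while `vTaskDelay`, `vTaskSuspend`, and `vTaskResume` refer to the FreeRTOS functions named in the text.

```python
from enum import Enum


class TaskState(Enum):
    READY = "ready"
    RUNNING = "running"
    BLOCKED = "blocked"
    SUSPENDED = "suspended"


# (current state, event) -> next state
TRANSITIONS = {
    (TaskState.READY, "scheduled"): TaskState.RUNNING,       # highest-priority ready task runs
    (TaskState.RUNNING, "preempted"): TaskState.READY,       # a higher-priority task takes over
    (TaskState.RUNNING, "vTaskDelay"): TaskState.BLOCKED,    # task waits for a delay or event
    (TaskState.BLOCKED, "event_or_timeout"): TaskState.READY,
    (TaskState.READY, "vTaskSuspend"): TaskState.SUSPENDED,
    (TaskState.RUNNING, "vTaskSuspend"): TaskState.SUSPENDED,
    (TaskState.BLOCKED, "vTaskSuspend"): TaskState.SUSPENDED,
    (TaskState.SUSPENDED, "vTaskResume"): TaskState.READY,   # the only way out of suspension
}


def next_state(state, event):
    """Return the state that follows `event`, or raise if the move is not allowed."""
    try:
        return TRANSITIONS[(state, event)]
    except KeyError:
        raise ValueError(f"no transition from {state.value} on {event}") from None


state = TaskState.READY
for event in ("scheduled", "vTaskDelay", "event_or_timeout"):
    state = next_state(state, event)
print(state.value)
```

Keeping the transitions in a table makes it easy to see that a suspended task has exactly one exit, whereas a blocked task returns to the ready state on its own once its wait ends.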

In the system presented in this paper, after power-up the system first checks whether it has received a signal to start illumination measurement, and it begins measuring once that signal is detected. Before the illuminance measurement starts, the measurement height needs to be specified via the cloud platform. When the UAV takes off, the illuminance acquisition system compares the specified measurement height with the current height and starts collecting illuminance once it is within ±0.1 m of the specified height. The collected illuminance data are then transmitted to the OneNET cloud platform, which plots the illuminance variation curve in real time from the data received. Once the illuminance has been collected, the local-outlier factor algorithm is used to reject the outliers, and the remaining illuminance data collected at the same point are averaged to determine the illuminance value at that point. The program-flow diagram of the illumination acquisition system proposed in this paper is shown in Figure 4.
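The height check in this flow can be sketched as a simple filter over paired height and illuminance readings. The Python sketch below assumes the readings arrive as `(height_m, lux)` tuples; the function name `gate_samples` and the sample values are hypothetical, and only the ±0.1 m tolerance comes from the text.

```python
def gate_samples(samples, target_height_m, tolerance_m=0.1):
    """Keep the illuminance readings taken within the height tolerance.

    `samples` is an iterable of (height_m, lux) pairs, for example one pair
    per acquisition cycle of the distance sensor and the illuminance sensor.
    """
    return [
        lux
        for height_m, lux in samples
        if abs(height_m - target_height_m) <= tolerance_m
    ]


readings = [(1.80, 510.0), (1.95, 498.0), (2.04, 502.0), (2.30, 470.0)]
print(gate_samples(readings, target_height_m=2.0))
```

Readings that pass the gate would then go on to outlier rejection and averaging for the measurement point.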

2.3. System Outlier Handling Algorithm

The acquired data contain some outliers because the drone is not perfectly stable while hovering and flying during illuminance acquisition, and changes in the external environment can also greatly interfere with the UAV's measurements. Data acquired by a UAV-based illumination acquisition system are randomly distributed and follow neither a linear nor a Gaussian distribution. Detecting outliers in such data is important for the stability and accuracy of the system, because outliers can lead to large inaccuracies in the measurement results, so they need to be found and eliminated. Outliers are defined as data that are so distinctive in the dataset that one suspects they are not random deviations but are generated by a different mechanism. Based on Euclidean distance, the specific definition is as follows. Let the acquired data be D = {x1, x2, …, xn}, and let two mechanisms, r(x) and f(x), generate the sets R = {r(x1), r(x2), …, r(xn)} and S = {f(x1), f(x2), …, f(xn)}, respectively. A point xi in D is then defined as an outlier if M(xi) ≫ M(xj) (i ≠ j) under the condition that f(xi) is not equal to r(xi), where M is defined as the outlier feature.

As shown in Figure 5, most data objects follow a certain distribution law, but the data point x clearly deviates from it, so it can be considered to be produced by a different mechanism and judged an outlier. In general, outliers can be divided into global outliers, local outliers, situational (or conditional) outliers, and collective outliers. In this paper, the local-outlier factor (LOF) algorithm is selected as the outlier-detection method for the illumination measurement system. LOF is a density-based local-outlier detection algorithm: it determines whether a sample is anomalous by calculating its outlier factor, which indicates how far the sample lies from dense data. The specific implementation is as follows. Here d(o, p) is the distance from point p to point o; d_k(o) is the k-distance of o, the radius of the circle that expands outward from o until it covers the k-th neighboring point; and N_k(o) is the k-distance neighborhood of o, the set of all points within the k-distance of o. The reachable distance reach_dist_k(o, p) is defined as shown in Equation (1).
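For reference, in the standard LOF formulation the reachable distance takes the following form, written here in this section's notation, with d_k(o) the k-distance of o and d(o, p) the distance between o and p. This assumes the paper follows the standard definition.

```latex
\mathrm{reach\_dist}_k(o, p) = \max\bigl\{\, d_k(o),\; d(o, p) \,\bigr\}
```

In words, the reachable distance is the actual distance between the two points, but never less than the k-distance of o.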

The distance definition diagram is shown in Figure 6.

The local reachable density is defined in Equation (2) based on the above-defined representation.
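In the standard LOF formulation, the local reachable density of a point p is the inverse of the mean reachable distance over its k-distance neighborhood N_k(p). The form below uses this section's notation and assumes the paper follows the standard definition.

```latex
\mathrm{lrd}_k(p) = \left( \frac{1}{\lvert N_k(p) \rvert} \sum_{o \in N_k(p)} \mathrm{reach\_dist}_k(o, p) \right)^{-1}
```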

The lrd_k(p) characterizes the density of point p. The higher the density of point p and its surrounding points, the more likely the reachable distance of each point is the smaller k-distance, corresponding to a larger lrd value; the lower the density of point p and its surrounding points, the more likely the reachable distance of each point is the larger actual distance between the two points, corresponding to a smaller lrd value. In addition, the local-outlier factor can be defined from the lrd value, as in Equation (3).
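In the standard LOF formulation, the local-outlier factor of p is the mean ratio of each neighbor's local reachable density to that of p. The form below uses this section's notation and assumes the paper follows the standard definition.

```latex
\mathrm{LOF}_k(p) = \frac{1}{\lvert N_k(p) \rvert} \sum_{o \in N_k(p)} \frac{\mathrm{lrd}_k(o)}{\mathrm{lrd}_k(p)}
```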

From Equation (3), we can see that the local-outlier factor of point p is the ratio of the average local reachable density of all points in the neighborhood N_k(p) of point p to the local reachable density of point p itself. When this ratio is greater than 1, the density of point p is lower than that of its surrounding points, and point p may be an outlier; when this ratio is less than 1, the density of point p is higher than that of its surrounding points, and point p is likely a normal point. The density value can become infinite if the number of duplicates is greater than the number of neighbors k; therefore, the weighted local-outlier factor (WLOF) is defined for data that contain duplicates. The details are as follows.

As shown in Equation (4), w(o) is the number of duplicates in the data. After computing the weight values, the algorithm treats each set of duplicates as one data value. The calculation of the weighted local-outlier factor is shown in Equation (5).
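To make the density-ratio idea concrete, here is a minimal brute-force sketch of the unweighted local-outlier factor in pure Python, following the standard definitions of k-distance, reachable distance, and local reachable density. It is not the weighted variant: it assumes no reading is duplicated k or more times, and the function name `lof_scores` and the sample values are hypothetical.

```python
import math


def lof_scores(points, k):
    """Return the local-outlier factor of every point (brute force, O(n^2)).

    `points` is a list of coordinate tuples; 1-D illuminance readings can be
    passed as one-element tuples. Assumes fewer than k duplicates per point.
    """
    n = len(points)
    if not 1 <= k < n:
        raise ValueError("k must satisfy 1 <= k < len(points)")

    # Pairwise Euclidean distances d(o, p).
    dist = [[math.dist(a, b) for b in points] for a in points]

    k_dist = []      # d_k(p): distance to the k-th nearest neighbor
    neighbors = []   # N_k(p): indices within the k-distance (ties included)
    for p in range(n):
        others = sorted((dist[p][o], o) for o in range(n) if o != p)
        dk = others[k - 1][0]
        k_dist.append(dk)
        neighbors.append([o for d, o in others if d <= dk])

    # Local reachable density: inverse of the mean reachable distance.
    lrd = []
    for p in range(n):
        reach = [max(k_dist[o], dist[o][p]) for o in neighbors[p]]
        lrd.append(len(reach) / sum(reach))

    # LOF: mean ratio of each neighbor's density to the point's own density.
    return [
        sum(lrd[o] / lrd[p] for o in neighbors[p]) / len(neighbors[p])
        for p in range(n)
    ]


readings = [(0.0,), (1.0,), (2.0,), (3.0,), (10.0,)]
print([round(s, 2) for s in lof_scores(readings, k=2)])
```

With these sample values the four clustered readings score 1 and the isolated reading scores about 5, which matches the interpretation that a ratio well above 1 marks a point in a sparser region than its neighbors.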

To use the LOF algorithm, its threshold must first be determined. In this paper, we used DIALux evo version 11.1 to model the venue so that it complies with the venue illumination standards specified by the Standard for lighting design and test of sports venues (JGJ 153-2016). In the venue model, a uniform light distribution is set with an illuminance uniformity of 0.62, which also meets the standard's requirements for the illuminance of a venue with televised coverage [19]. During data acquisition, the UAV sways within a small area around the measurement point, as observed in actual measurements. This paper therefore chose a 20 cm × 20 cm rectangular calculation surface to simulate the dynamic range of the UAV; in the DIALux simulation, the illuminance uniformity of the 25 points in each rectangle (the ratio of minimum illuminance to average illuminance) is about 0.99. To make the small calculation surfaces representative, the planes were placed at different illumination intervals with reference to the isolux curves of the illumination distribution. In terms of height, we selected a calculation surface every 5 cm, for a total of 5 calculation surfaces, and determined the illuminance calculation surfaces at heights of 1 m, 2 m, 3 m, and 4 m separately, to meet the requirements of measuring illumination at the different heights used in different competition venues.

The selection of calculation surfaces within the model and the results of the local-outlier factor detection are shown in Figure 7 and Figure 8, respectively.

As shown in Figure 7, the horizontal axis represents the local-outlier factor value calculated from the illuminance data points in the different data-acquisition planes, and the vertical axis represents the number of points that obtained each local-outlier-factor value. Most of the local-outlier factor values are concentrated within 0.5, indicating that the data density is relatively high and that the illuminance varies very little within a calculation plane. In illuminance measurement, the points within a small plane are taken as the illuminance value of one measurement point, and the measurement points are then used to calculate the uniformity of illumination. We set the threshold so that 10 percent of the data are removed, which removes the effect of excessive differences in illuminance values between different calculation planes. In Figure 7, the blue vertical line marks the threshold calculated for the data of each height plane; the results are 0.63, 0.69, 0.51, and 0.65, respectively. The points to the right of the blue vertical line are the data discarded from the dataset. Because the illuminance values differ greatly between calculation planes at different heights, the illuminance values themselves cannot be used as criteria for determining outliers, so the LOF algorithm determines the threshold value so that it detects 5 percent of the training observations as anomalies.
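Choosing the threshold so that a fixed share of the training scores is flagged can be sketched as follows. This is a minimal Python illustration under stated assumptions: the flagged share is a parameter `fraction` rather than a value fixed by the paper, and the names `lof_threshold` and `reject_and_average`, as well as the sample scores and readings, are hypothetical.

```python
import math


def lof_threshold(scores, fraction=0.05):
    """Return the largest score that is kept when roughly `fraction` of the
    scores (rounded down) are flagged as outliers."""
    if not scores:
        raise ValueError("scores must not be empty")
    if not 0 <= fraction < 1:
        raise ValueError("fraction must be in [0, 1)")
    ordered = sorted(scores)
    n_flagged = math.floor(fraction * len(ordered))
    return ordered[len(ordered) - n_flagged - 1]


def reject_and_average(values, scores, threshold):
    """Average the readings whose outlier score does not exceed the threshold."""
    kept = [v for v, s in zip(values, scores) if s <= threshold]
    return sum(kept) / len(kept)


scores = [1, 2, 3, 4, 5, 6, 7, 8]
values = [500.0, 501.0, 499.0, 500.0, 502.0, 498.0, 900.0, 100.0]
threshold = lof_threshold(scores, fraction=0.25)
print(threshold, reject_and_average(values, scores, threshold))
```

Because the threshold is taken from the ranked scores rather than from the illuminance values, the same rule can be applied to planes whose illuminance levels differ widely. Tied scores at the threshold are all kept, so slightly fewer points than the requested share may be flagged.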

In the algorithm, the nearest neighbor of the selected point is found according to the maximum-variance method. The smaller value is selected in the first divided region, and the algorithm then looks for a smaller value in the second divided region, continuing until the smallest value is found. The flow chart of the LOF algorithm is shown in Figure 8.