DPGWO Based Feature Selection Machine Learning Model for Prediction of Crack Dimensions in Steam Generator Tubes

Abstract

1. Introduction

2. Problem Statement

3. Methodology

- Step 1: Read the GLCM features and their corresponding crack dimension data sets.

- Step 2: Prioritize the order of the features based on (a) the Mutual Information Score (MIS) and (b) the F Score (FS).

- Step 3: Arrange the features based on MIS.

- Step 4: Select the first 15 features and fix one of the crack dimensions as the target value.

- Step 5: Choose a machine learning (ML) model and select R2 and RMSE as its performance metrics.

- Step 6: Set the size of the training data set and, based on that, split the data into training and testing sets along with their target values.

- Step 7: Fit the ML model to the training data set and predict both the training and testing target values.

- Step 8: Compute the response values (the performance metrics R2 and RMSE) of the ML model for both the training and testing data sets.

- Step 9: Repeat steps 5 to 8 for each of the other ML models.

- Step 10: Repeat steps 4 to 9 with the number of features changed to 17, 19, and 21.

- Step 11: Repeat steps 3 to 10 with the features arranged by F Score.

- Step 12: Conduct an ANOVA to test the statistical significance of the parameters on the response values.

- Step 13: Implement Deng’s method to select the best machine learning model based on R2 and RMSE values.

- Step 14: Tune the hyper-parameters of the best machine learning model.

- Step 15: Implement the MFO, GWO, and DPGWO algorithms by randomly selecting between 15 and 21 of the given features along with their target values.

- Step 16: Compare the performance of the algorithms using performance indicators (Inverted Generational Distance and Spacing) and Friedman’s test. Select the best number of features and their combinations.

- Step 17: Repeat steps 3 to 16 for the other crack dimensions, namely crack depth and crack width.
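The ranking and selection steps (prioritize features, then keep the first 15, 17, 19, or 21) can be sketched as follows. This is a minimal illustration, assuming the F Score is the usual univariate regression F-statistic derived from the Pearson correlation between a feature and the target; the paper does not give the formula. The function names and the toy data are hypothetical. An MIS ranking would follow the same pattern with a mutual-information estimator in place of `f_score`.

```python
import math

def f_score(x, y):
    """Univariate regression F-statistic: F = r^2 / (1 - r^2) * (n - 2)."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0  # a constant column carries no information
    r2 = (sxy * sxy) / (sxx * syy)
    if r2 >= 1.0:
        return math.inf  # perfectly collinear with the target
    return r2 / (1.0 - r2) * (n - 2)

def top_k_features(columns, target, k):
    """Rank feature columns by F score (highest first) and keep the first k names."""
    scores = {name: f_score(col, target) for name, col in columns.items()}
    ranked = sorted(scores, key=scores.get, reverse=True)
    return ranked[:k]

# Toy data only: three GLCM feature columns and one crack dimension as target.
columns = {"CST": [1, 2, 3, 4, 5], "ETR": [2, 1, 4, 3, 5], "UNF": [5, 1, 4, 2, 3]}
target = [1.1, 2.0, 2.9, 4.2, 5.0]
selected = top_k_features(columns, target, k=2)
```

Calling `top_k_features` with k set to 15, 17, 19, and 21 on the full 22-feature table would give the nested feature sets that the later steps feed to each ML model.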

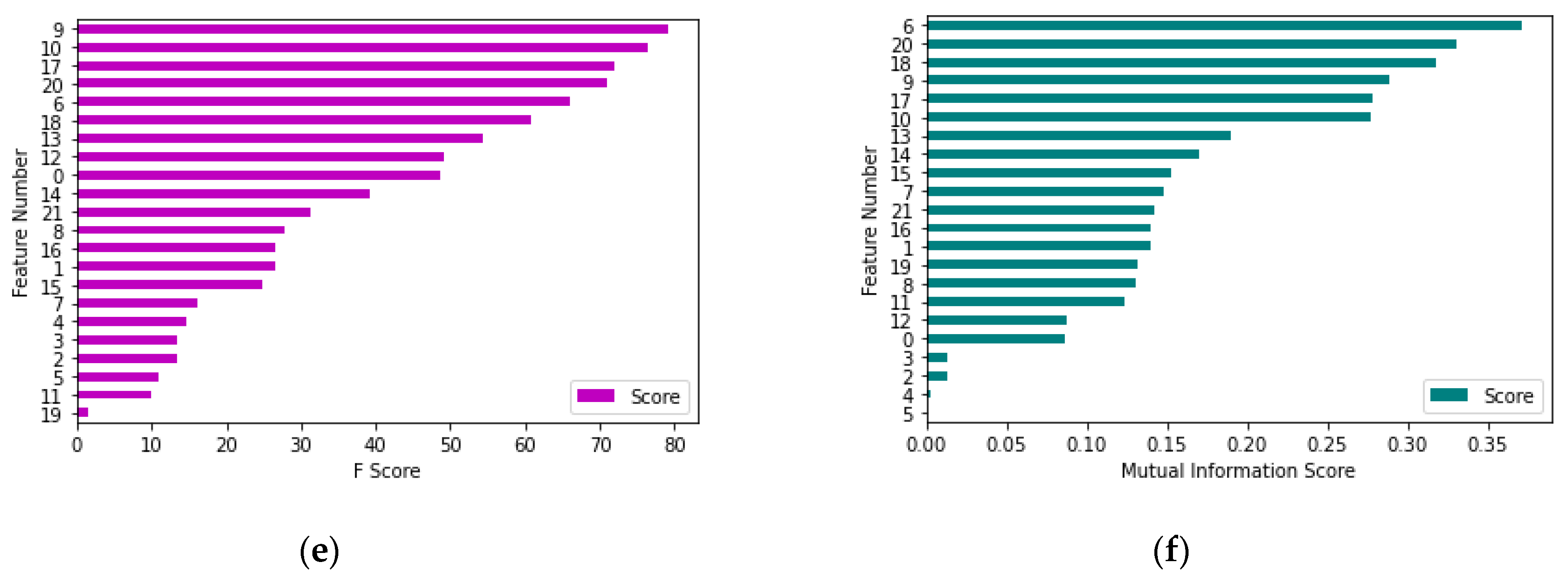

3.1. Stage 1: Prioritizing the Features

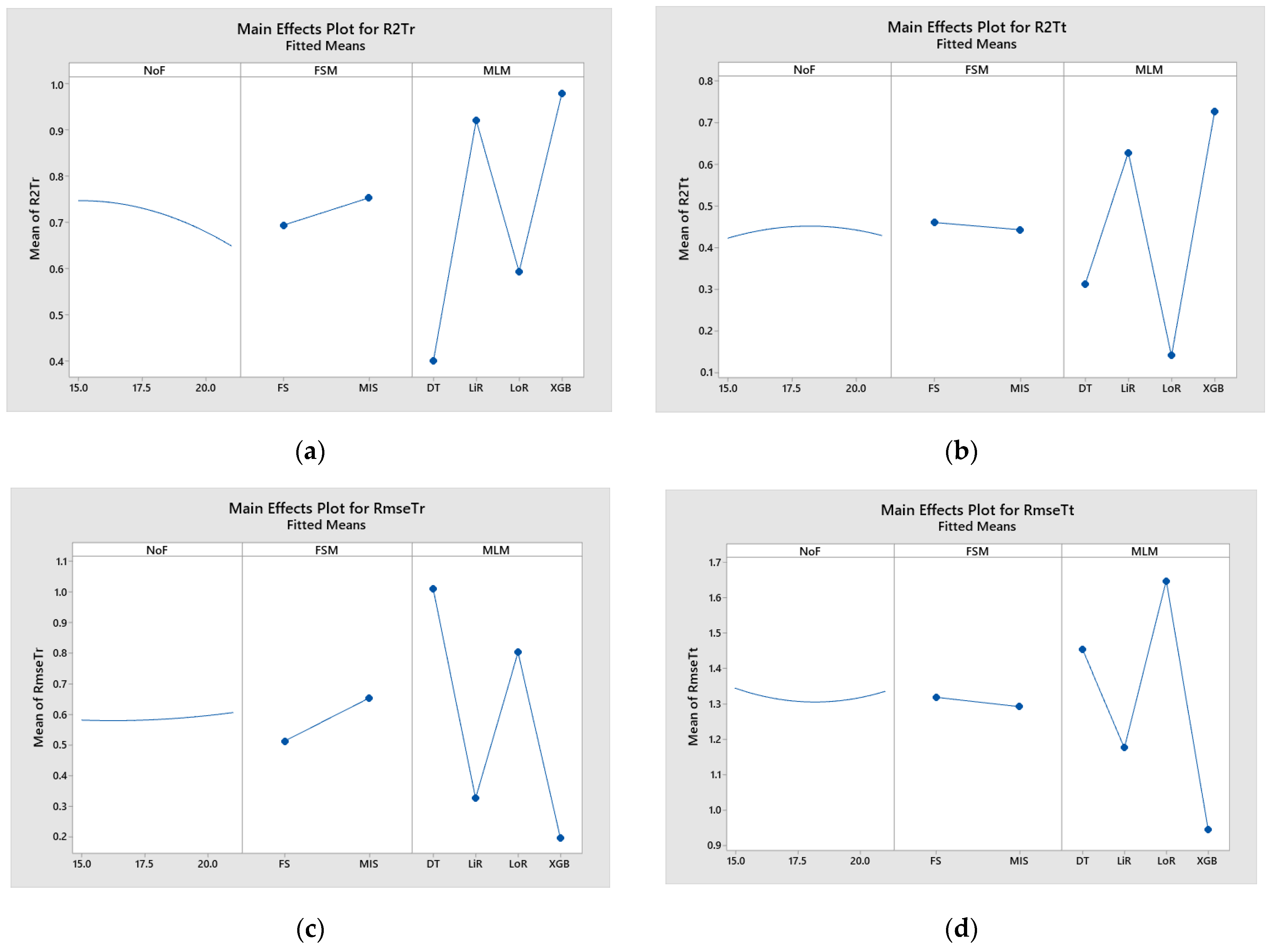

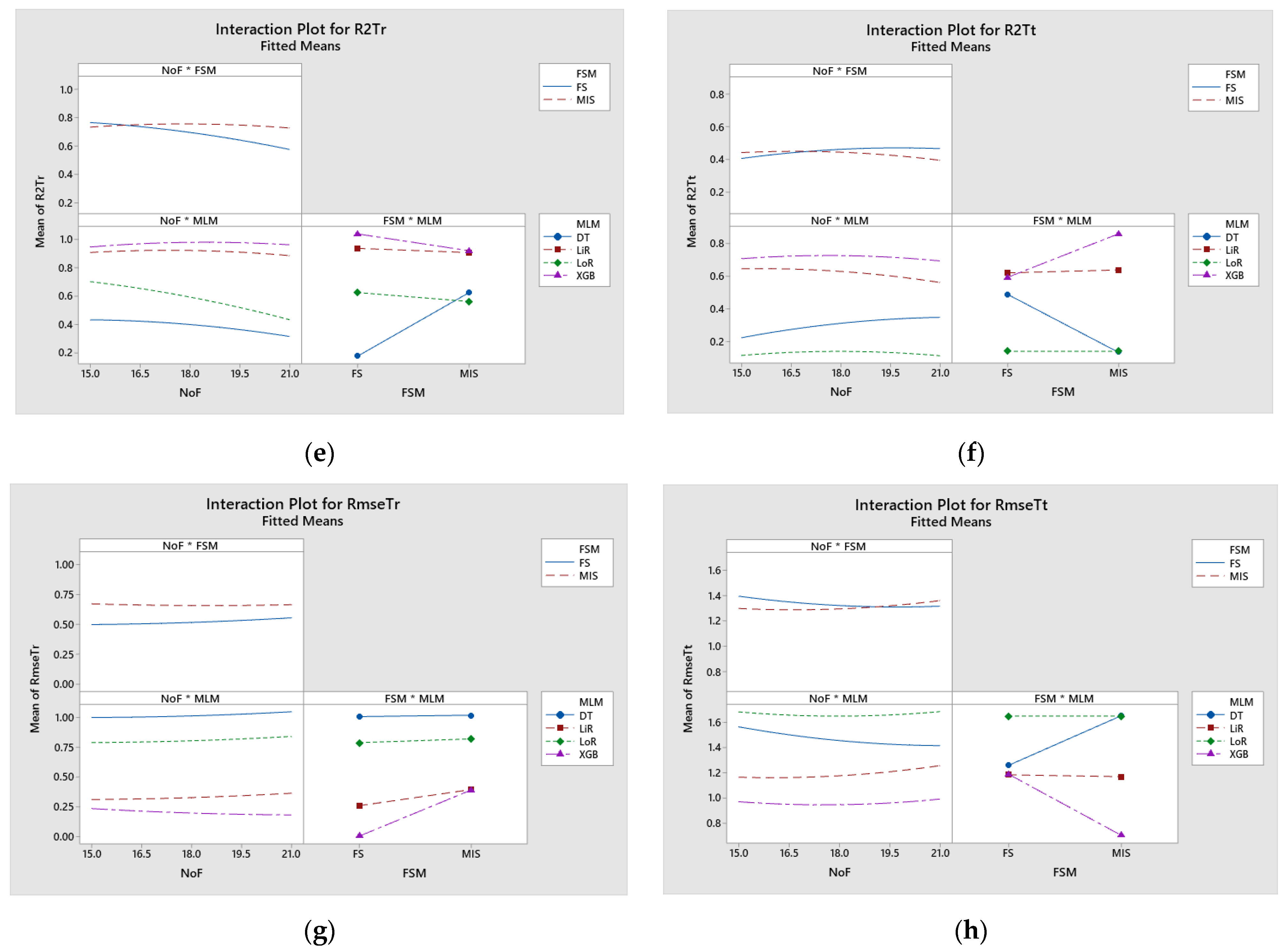

3.2. Stage 2: Taguchi Orthogonal Array Experimental Design for ML Model Selection

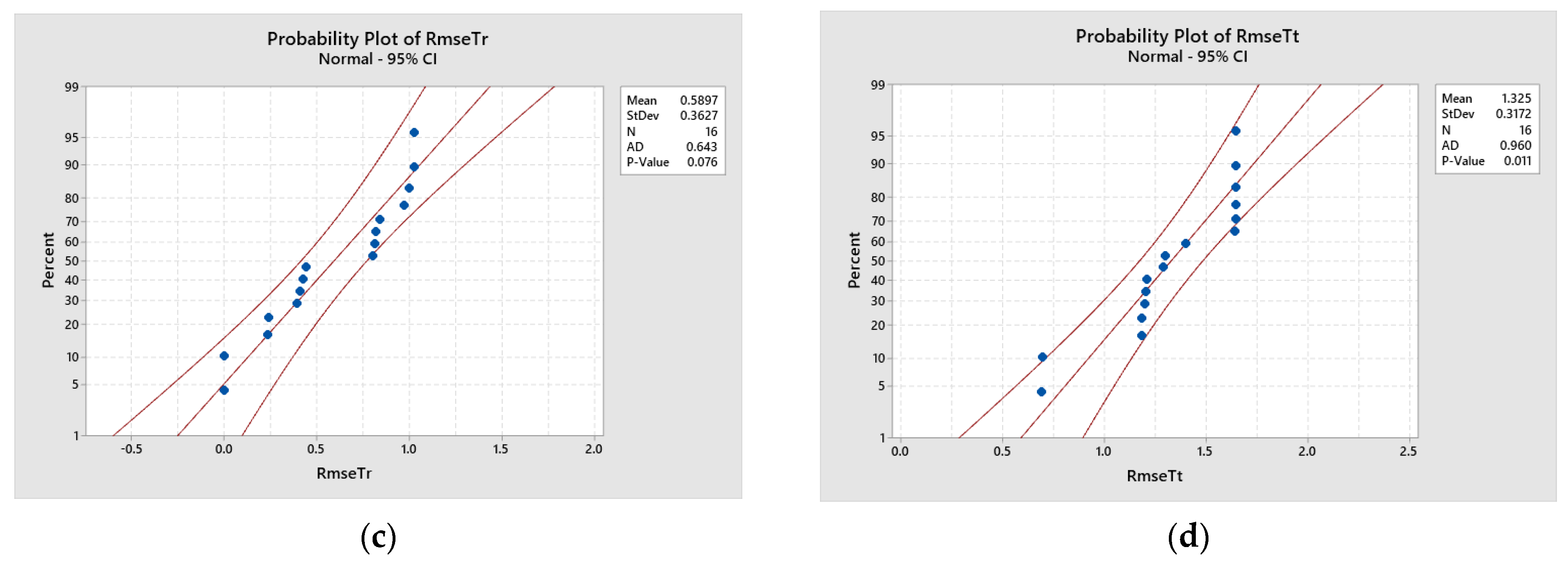

3.3. Stage 3: ANOVA Test

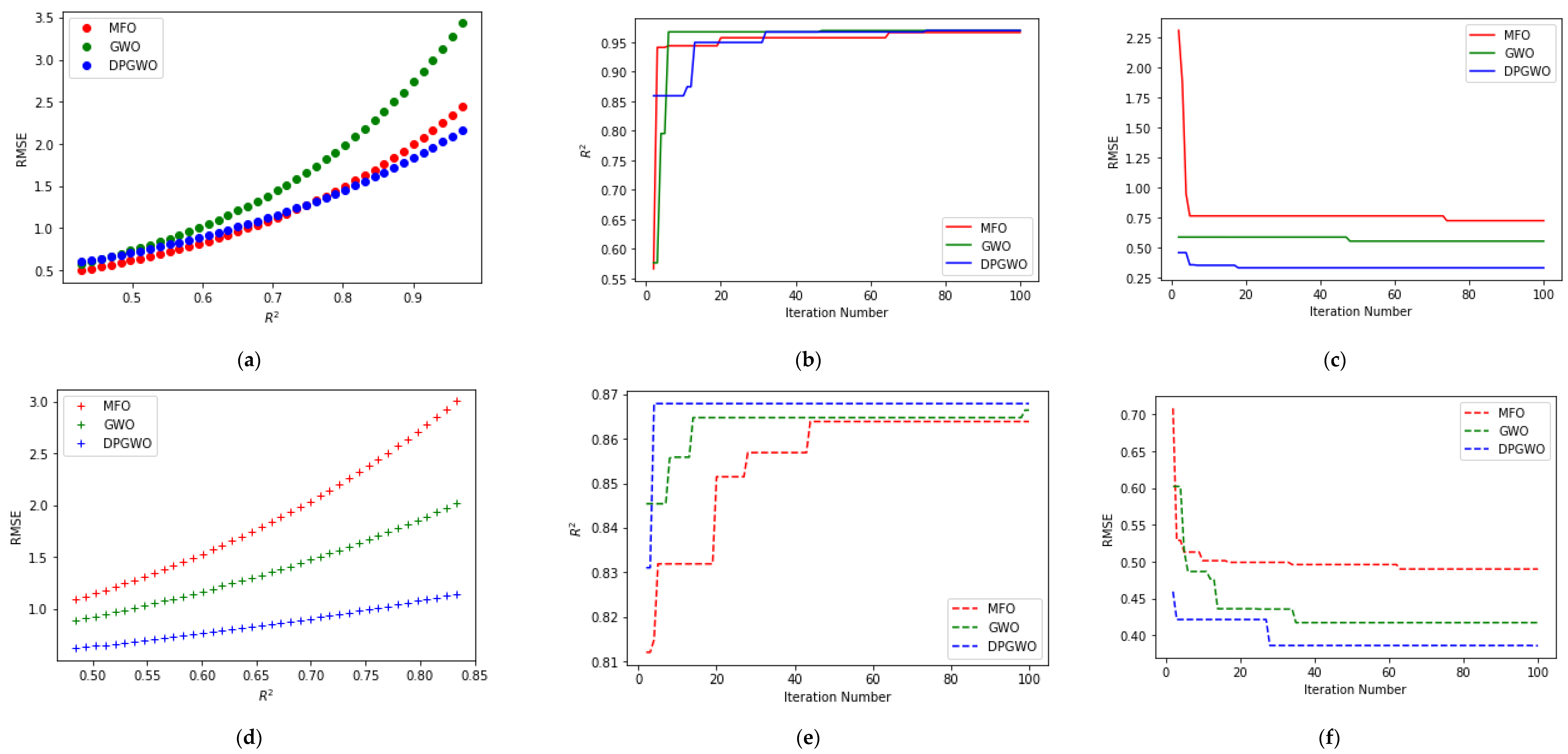

3.4. Stage 4: Meta-Heuristic Algorithms for Feature Selection

3.4.1. Grey Wolf Optimization

- Cross-over and mutation operators to solve economic dispatch problems.

- Different penalty functions are used to solve constrained engineering problems.

- Tournament selection and modified augmented Lagrangian multiplier methods to handle constraint problems.

- Genetic operators to improve exploration and exploitation in multi-objective problems.

- Non-dominated sorting operator to solve multi-objective problems.

- Hybridization of other meta-heuristic algorithms with GWO.

3.4.2. Dynamic Population Grey Wolf Optimization (DPGWO)

- Introducing life for each wolf during population initialization.

- Fixing the age and probability of reproducing capability of the wolf.

- Choosing the disease probability to control the dynamic population size.

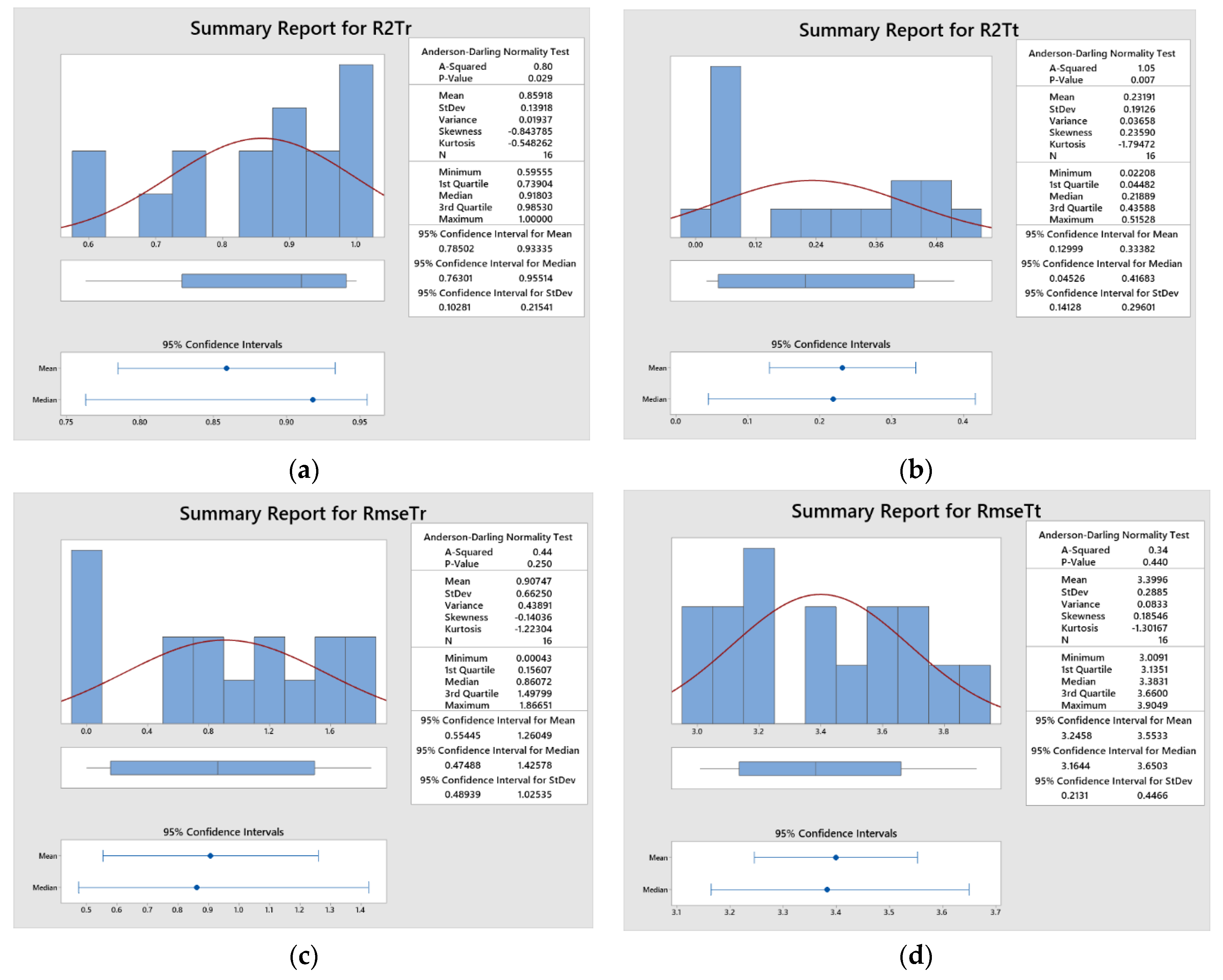

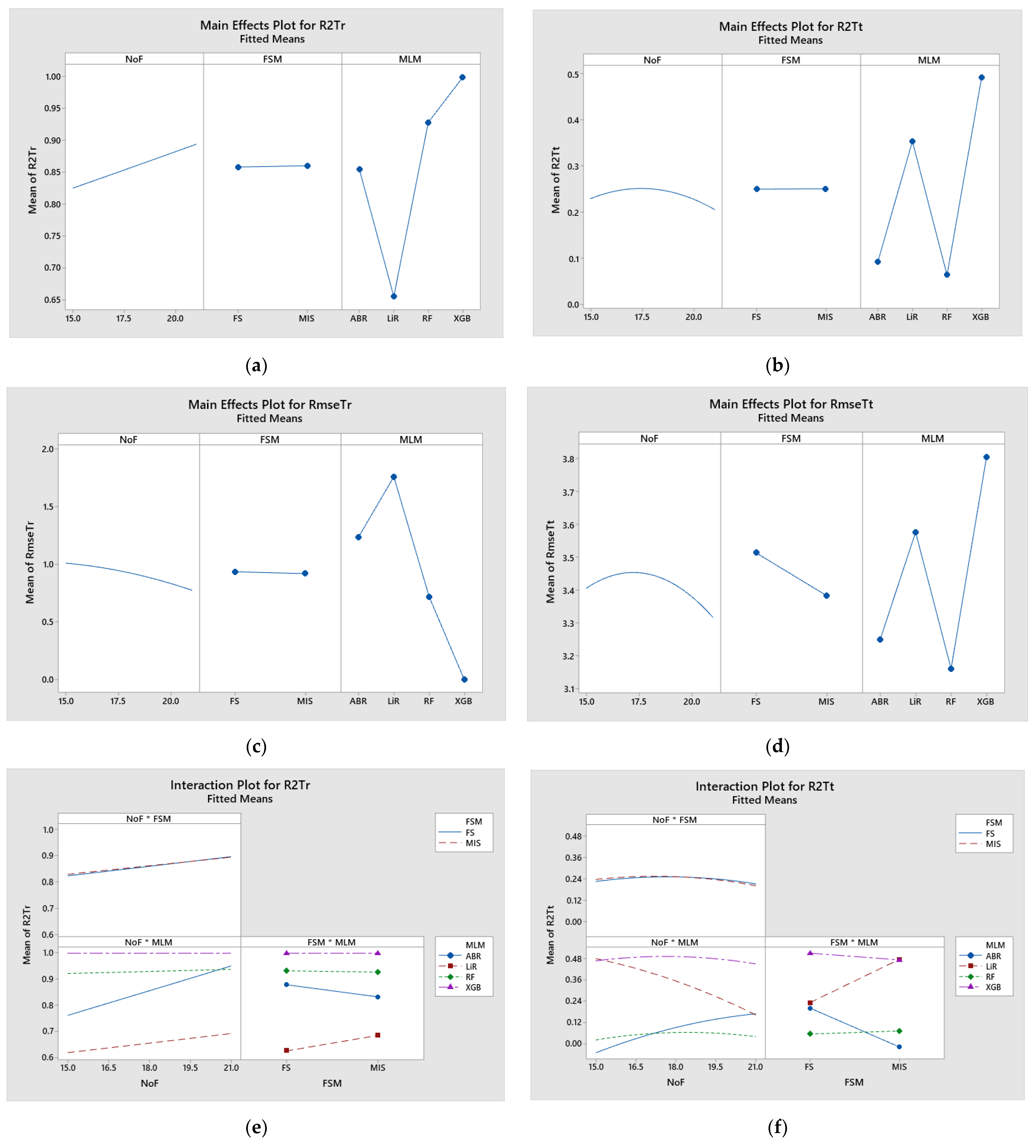

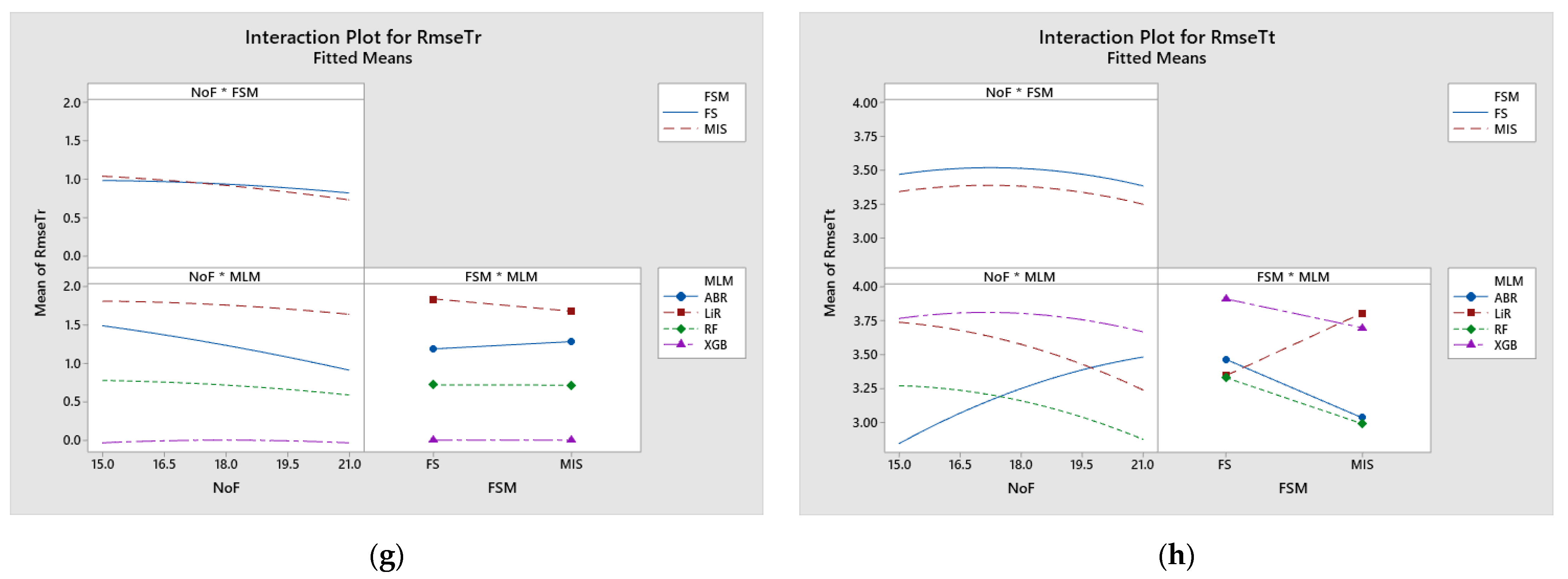

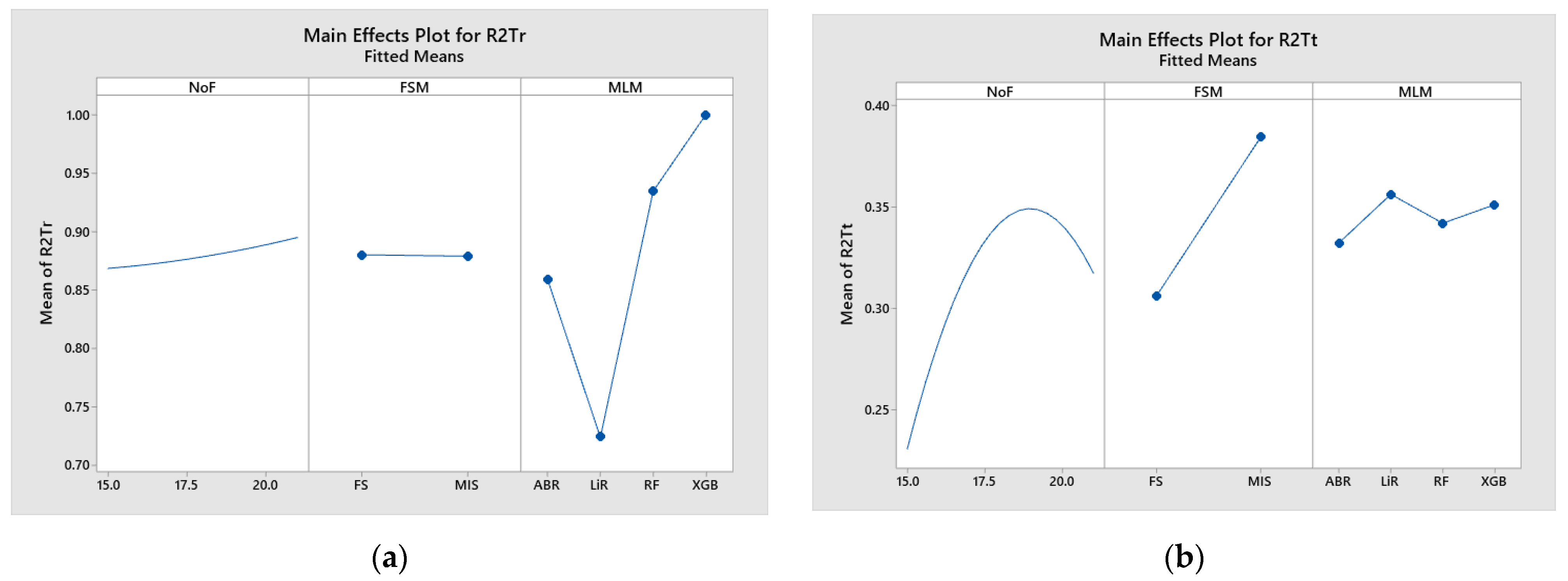

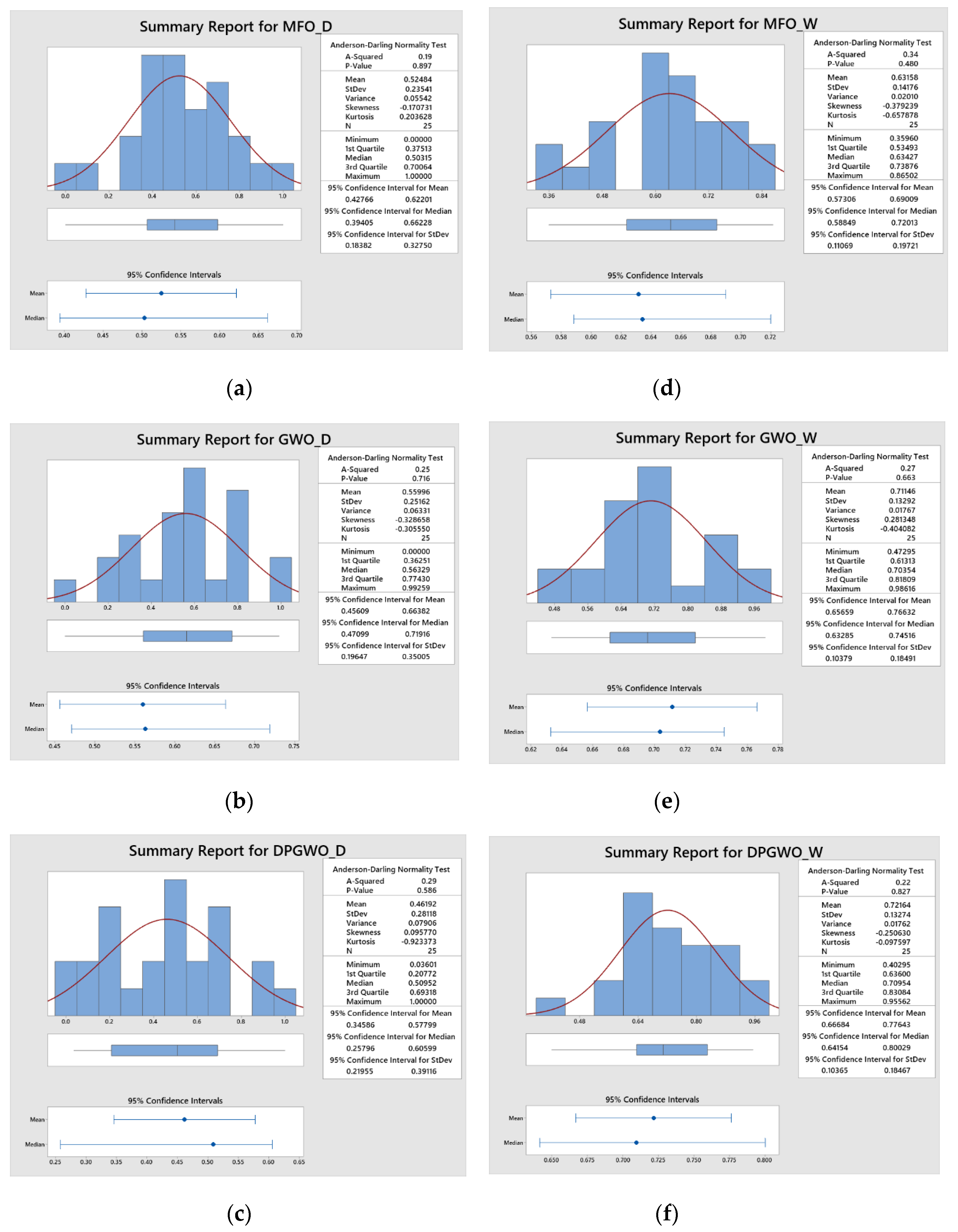

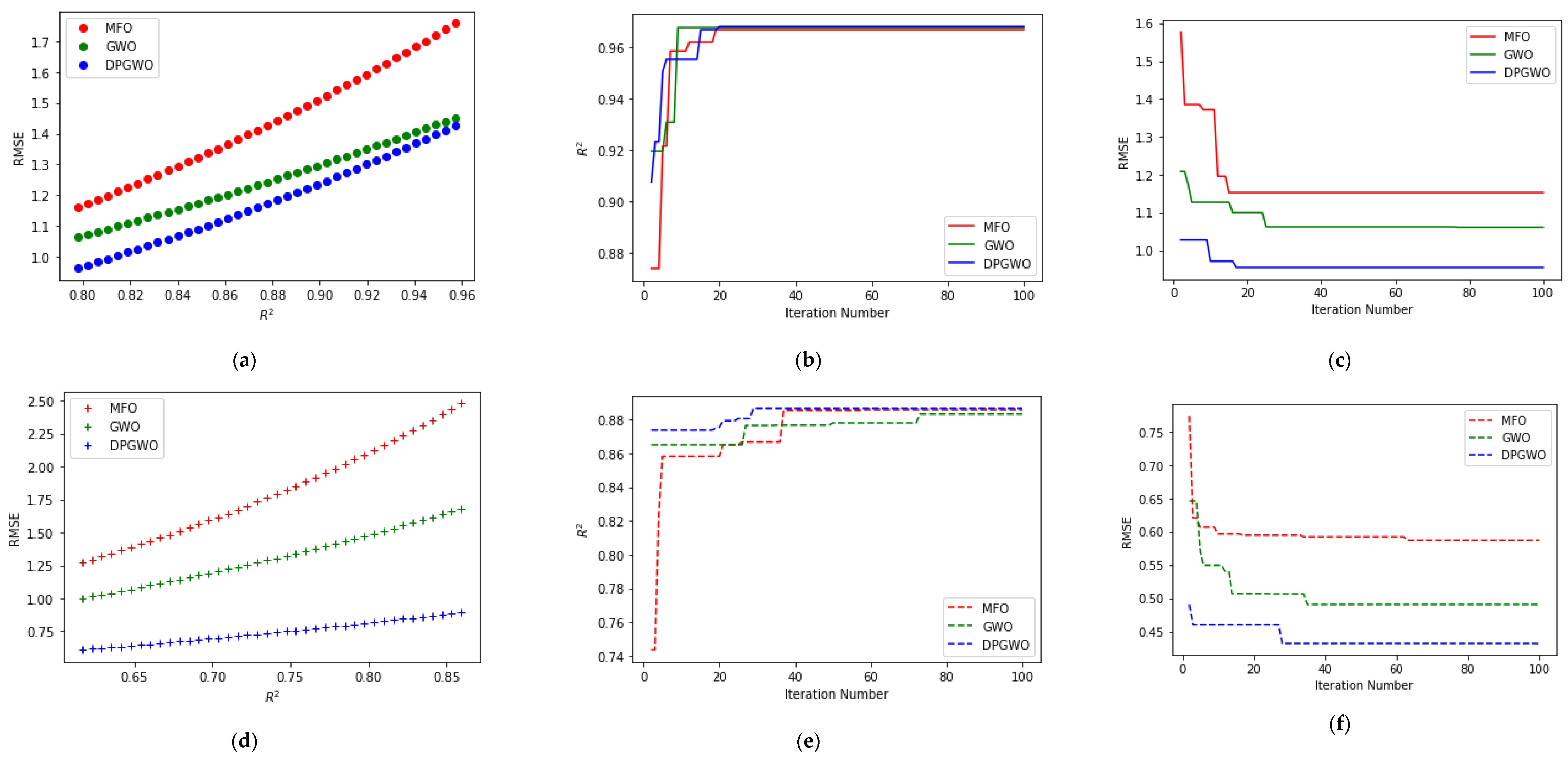

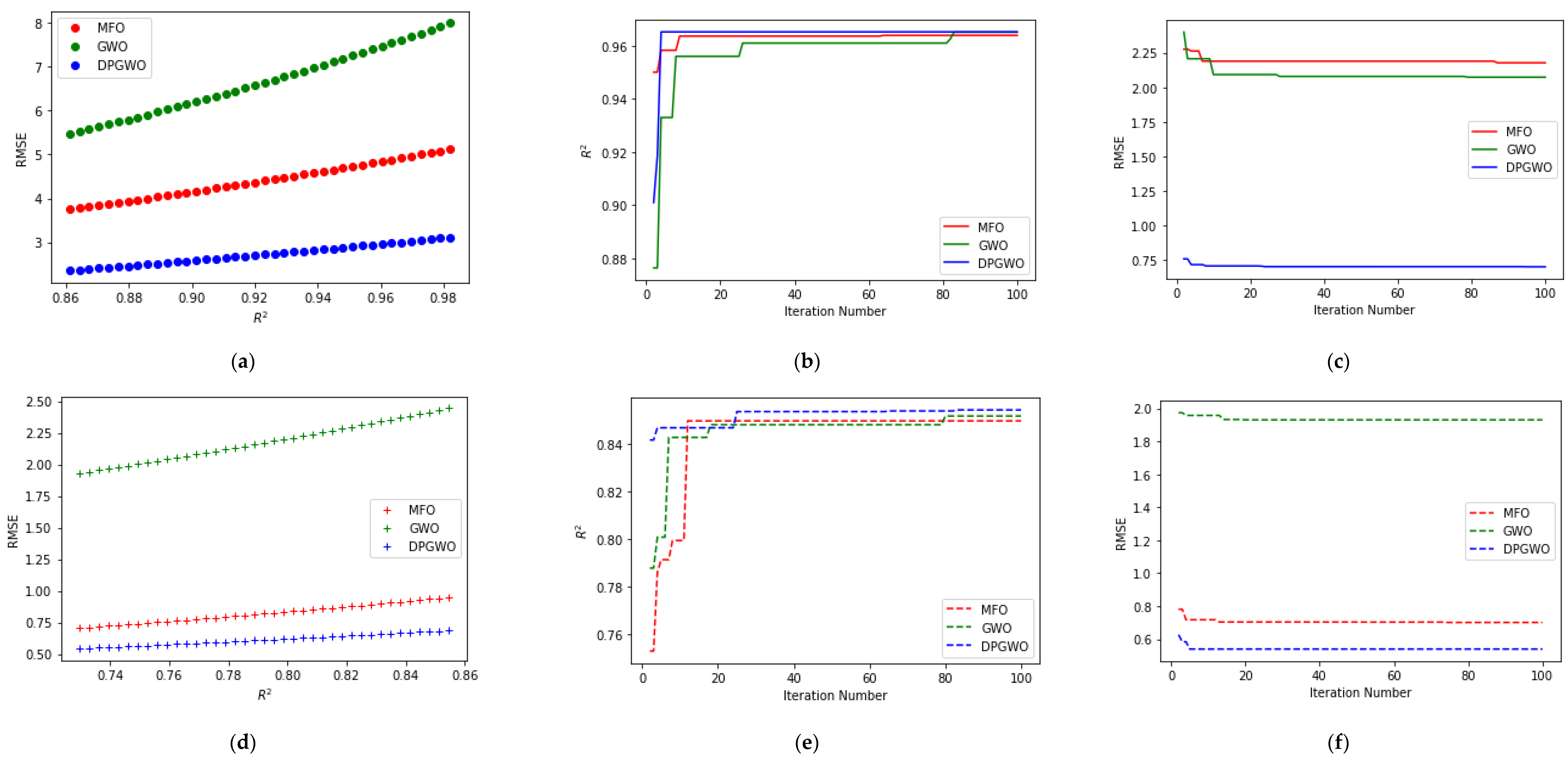

4. Results and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

| Parameter | No. of Levels | Level 1 | Level 2 | Level 3 | Level 4 |

|---|---|---|---|---|---|

| FSM | 2 | FS | MIS | | |

| MLM | 4 | ABR | LiR | RF | XGB |

| NoF | 4 | 15 | 17 | 19 | 21 |

| E.No. | FSM | MLM | NoF | R2Tr | R2Tt | RMSETr | RMSETt | Deng’s Value |

|---|---|---|---|---|---|---|---|---|

| 1 | FS | ABR | 15 | 0.7741 | 0.0441 | 1.3924 | 3.0091 | 0.3319 |

| 2 | FS | ABR | 17 | 0.8537 | 0.1680 | 1.2802 | 3.3972 | 0.4154 |

| 3 | MIS | ABR | 19 | 0.8699 | 0.0221 | 1.1833 | 3.1780 | 0.3480 |

| 4 | MIS | ABR | 21 | 0.9154 | 0.0446 | 0.9110 | 3.2148 | 0.3941 |

| 5 | FS | LiR | 15 | 0.5956 | 0.3609 | 1.8665 | 3.5540 | 0.4292 |

| 6 | FS | LiR | 17 | 0.6049 | 0.2698 | 1.8390 | 3.3689 | 0.3965 |

| 7 | MIS | LiR | 19 | 0.6883 | 0.4080 | 1.6204 | 3.6648 | 0.4693 |

| 8 | MIS | LiR | 21 | 0.7274 | 0.2882 | 1.5332 | 3.5175 | 0.4361 |

| 9 | MIS | RF | 15 | 0.9207 | 0.0444 | 0.8105 | 3.1208 | 0.4061 |

| 10 | MIS | RF | 17 | 0.9228 | 0.0584 | 0.7603 | 3.0285 | 0.4208 |

| 11 | FS | RF | 19 | 0.9330 | 0.0469 | 0.6982 | 3.2378 | 0.4224 |

| 12 | FS | RF | 21 | 0.9412 | 0.0455 | 0.6224 | 3.0668 | 0.4325 |

| 13 | MIS | XGB | 15 | 1.0000 | 0.4452 | 0.0006 | 3.6458 | 0.6737 |

| 14 | MIS | XGB | 17 | 1.0000 | 0.4829 | 0.0005 | 3.7246 | 0.6806 |

| 15 | FS | XGB | 19 | 1.0000 | 0.5153 | 0.0006 | 3.9049 | 0.6836 |

| 16 | FS | XGB | 21 | 1.0000 | 0.4663 | 0.0004 | 3.7602 | 0.6761 |

| Source | DF | R2Tr Adj SS | R2Tr Adj MS | R2Tr F-Value | R2Tr p-Value | R2Tt Adj SS | R2Tt Adj MS | R2Tt F-Value | R2Tt p-Value | RMSETr Adj SS | RMSETr Adj MS | RMSETr F-Value | RMSETr p-Value | RMSETt Adj SS | RMSETt Adj MS | RMSETt F-Value | RMSETt p-Value |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Regression | 13 | 0.29005 | 0.02231 | 87.17000 | 0.01100 | 0.54741 | 0.04211 | 65.07000 | 0.01500 | 6.58227 | 0.50633 | 753.53000 | 0.00100 | 1.22728 | 0.09441 | 8.76000 | 0.10700 |

| NoF | 1 | 0.00018 | 0.00018 | 0.70000 | 0.49100 | 0.00530 | 0.00530 | 8.20000 | 0.10300 | 0.00054 | 0.00054 | 0.81000 | 0.46300 | 0.03826 | 0.03826 | 3.55000 | 0.20000 |

| FSM | 1 | 0.00001 | 0.00001 | 0.02000 | 0.89300 | 0.00028 | 0.00028 | 0.44000 | 0.57700 | 0.00329 | 0.00329 | 4.90000 | 0.15700 | 0.00192 | 0.00192 | 0.18000 | 0.71400 |

| MLM | 3 | 0.00416 | 0.00139 | 5.42000 | 0.16000 | 0.02247 | 0.00749 | 11.57000 | 0.08100 | 0.05840 | 0.01947 | 28.97000 | 0.03400 | 0.10052 | 0.03351 | 3.11000 | 0.25300 |

| NoF*NoF | 1 | 0.00000 | 0.00000 | 0.00000 | 0.98300 | 0.00336 | 0.00336 | 5.20000 | 0.15000 | 0.00377 | 0.00377 | 5.60000 | 0.14200 | 0.02372 | 0.02372 | 2.20000 | 0.27600 |

| NoF*FSM | 1 | 0.00001 | 0.00001 | 0.03000 | 0.88400 | 0.00005 | 0.00005 | 0.08000 | 0.80900 | 0.00236 | 0.00236 | 3.51000 | 0.20200 | 0.00001 | 0.00001 | 0.00000 | 0.98100 |

| NoF*MLM | 3 | 0.00241 | 0.00080 | 3.14000 | 0.25100 | 0.01630 | 0.00543 | 8.39000 | 0.10800 | 0.01977 | 0.00659 | 9.81000 | 0.09400 | 0.08768 | 0.02923 | 2.71000 | 0.28100 |

| FSM*MLM | 3 | 0.00113 | 0.00038 | 1.47000 | 0.42900 | 0.02182 | 0.00727 | 11.24000 | 0.08300 | 0.00680 | 0.00227 | 3.37000 | 0.23700 | 0.09859 | 0.03287 | 3.05000 | 0.25700 |

| Error | 2 | 0.00051 | 0.00026 | | | 0.00129 | 0.00065 | | | 0.00134 | 0.00067 | | | 0.02154 | 0.01077 | | |

| Total | 15 | 0.29057 | | | | 0.54870 | | | | 6.58361 | | | | 1.24882 | | | |

| Parameter | No. of Levels | Level 1 | Level 2 | Level 3 | Level 4 |

|---|---|---|---|---|---|

| FSM | 2 | FS | MIS | | |

| MLM | 4 | ABR | LiR | RF | XGB |

| NoF | 4 | 15 | 17 | 19 | 21 |

| E.No. | FSM | MLM | NoF | R2Tr | R2Tt | RMSETr | RMSETt | Deng’s Value |

|---|---|---|---|---|---|---|---|---|

| 1 | FS | ABR | 15 | 0.8447 | 0.2031 | 0.1349 | 0.3008 | 0.4569 |

| 2 | FS | ABR | 17 | 0.8633 | 0.2435 | 0.1240 | 0.2896 | 0.4771 |

| 3 | MIS | ABR | 19 | 0.8573 | 0.3026 | 0.1271 | 0.2798 | 0.4879 |

| 4 | MIS | ABR | 21 | 0.8789 | 0.3411 | 0.1191 | 0.2724 | 0.5040 |

| 5 | FS | LiR | 15 | 0.6983 | 0.3227 | 0.1795 | 0.2604 | 0.4434 |

| 6 | FS | LiR | 17 | 0.7047 | 0.3961 | 0.1781 | 0.2391 | 0.4616 |

| 7 | MIS | LiR | 19 | 0.7382 | 0.3611 | 0.1663 | 0.2561 | 0.4643 |

| 8 | MIS | LiR | 21 | 0.7632 | 0.1582 | 0.1518 | 0.2909 | 0.4248 |

| 9 | MIS | RF | 15 | 0.9351 | 0.3889 | 0.0820 | 0.2648 | 0.5484 |

| 10 | MIS | RF | 17 | 0.9361 | 0.3163 | 0.0800 | 0.2822 | 0.5360 |

| 11 | FS | RF | 19 | 0.9328 | 0.2949 | 0.0800 | 0.2665 | 0.5324 |

| 12 | FS | RF | 21 | 0.9337 | 0.3332 | 0.0793 | 0.2635 | 0.5408 |

| 13 | MIS | XGB | 15 | 1.0000 | 0.1967 | 0.0006 | 0.2825 | 0.6073 |

| 14 | MIS | XGB | 17 | 1.0000 | 0.4196 | 0.0006 | 0.2440 | 0.6444 |

| 15 | FS | XGB | 19 | 1.0000 | 0.3050 | 0.0005 | 0.2688 | 0.6263 |

| 16 | FS | XGB | 21 | 1.0000 | 0.3386 | 0.0006 | 0.2570 | 0.6326 |

| Variable | Mean | StDev | Variance | Minimum | Q1 | Median | Q3 | Maximum | Range | p-Value |

|---|---|---|---|---|---|---|---|---|---|---|

| R2Tr | 0.8804 | 0.1061 | 0.0113 | 0.6983 | 0.7836 | 0.9058 | 0.9840 | 1.0000 | 0.3017 | 0.0950 |

| R2Tt | 0.3076 | 0.0743 | 0.0055 | 0.1582 | 0.2564 | 0.3195 | 0.3561 | 0.4196 | 0.2614 | 0.3030 |

| RmseTr | 0.0940 | 0.0648 | 0.0042 | 0.0005 | 0.0203 | 0.1006 | 0.1476 | 0.1795 | 0.1790 | 0.0750 |

| RmseTt | 0.2699 | 0.0170 | 0.0003 | 0.2391 | 0.2579 | 0.2676 | 0.2825 | 0.3008 | 0.0617 | 0.9400 |

| Source | DF | R2Tr Adj SS | R2Tr Adj MS | R2Tr F-Value | R2Tr p-Value | R2Tt Adj SS | R2Tt Adj MS | R2Tt F-Value | R2Tt p-Value | RMSETr Adj SS | RMSETr Adj MS | RMSETr F-Value | RMSETr p-Value | RMSETt Adj SS | RMSETt Adj MS | RMSETt F-Value | RMSETt p-Value |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Regression | 13 | 0.16896 | 0.01300 | 835.7 | 0.001 | 0.06584 | 0.00507 | 0.6 | 0.773 | 0.06290 | 0.00484 | 298.85 | 0.003 | 0.00383 | 0.00030 | 1.15 | 0.556 |

| NoF | 1 | 0.00000 | 0.00000 | 0.19 | 0.703 | 0.01402 | 0.01402 | 1.66 | 0.326 | 0.00000 | 0.00000 | 0.02 | 0.896 | 0.00049 | 0.00049 | 1.93 | 0.299 |

| FSM | 1 | 0.00005 | 0.00005 | 3.43 | 0.205 | 0.00126 | 0.00126 | 0.15 | 0.736 | 0.00002 | 0.00002 | 0.91 | 0.442 | 0.00011 | 0.00011 | 0.42 | 0.584 |

| MLM | 3 | 0.00149 | 0.00050 | 31.84 | 0.031 | 0.02175 | 0.00725 | 0.86 | 0.577 | 0.00045 | 0.00015 | 9.18 | 0.1 | 0.00073 | 0.00024 | 0.95 | 0.55 |

| NoF*NoF | 1 | 0.00003 | 0.00003 | 1.79 | 0.313 | 0.01175 | 0.01175 | 1.39 | 0.359 | 0.00001 | 0.00001 | 0.3 | 0.637 | 0.00038 | 0.00038 | 1.5 | 0.346 |

| NoF*FSM | 1 | 0.00002 | 0.00002 | 1.38 | 0.36 | 0.00118 | 0.00118 | 0.14 | 0.744 | 0.00001 | 0.00001 | 0.41 | 0.589 | 0.00011 | 0.00011 | 0.44 | 0.576 |

| NoF*MLM | 3 | 0.00033 | 0.00011 | 7.13 | 0.126 | 0.01897 | 0.00633 | 0.75 | 0.615 | 0.00007 | 0.00002 | 1.45 | 0.434 | 0.00059 | 0.00020 | 0.78 | 0.606 |

| FSM*MLM | 3 | 0.00020 | 0.00007 | 4.28 | 0.195 | 0.00723 | 0.00241 | 0.29 | 0.836 | 0.00003 | 0.00001 | 0.62 | 0.666 | 0.00056 | 0.00019 | 0.73 | 0.624 |

| Error | 2 | 0.00003 | 0.00002 | | | 0.01686 | 0.00843 | | | 0.00003 | 0.00002 | | | 0.00051 | 0.00026 | | |

| Total | 15 | 0.16899 | | | | 0.08270 | | | | 0.06294 | | | | 0.00434 | | | |

Appendix B

Algorithm A1: Pseudocode of Moth Flame Optimization Algorithm [27]

- Initialize the parameters for Moth-flame.
- Initialize no. of moths (Mi, i = 1, 2, …, nm) and their positions as ‘nof’ randomly selected features from the given list of 22 GLCM features.
- For each i = 1:n do
  - Determine the fitness function fi (i = 1, 2, …, nm) of each moth: the performance of the ML model in terms of R2 and RMSE values for both training and testing data sets, using Deng’s method.
- End For
- While (iteration ≤ max_iteration) do
  - Update the position of Mi
  - Calculate the no. of flames
  - Evaluate the fitness function fi
  - If (iteration == 1) then
    - F = sort (M)
    - OF = sort (OM)
  - Else
    - F = sort (Mt−1, Mt)
    - OF = sort (Mt−1, Mt)
  - End if
  - For each i = 1:n do
    - For each j = 1:d do
      - Update the values of r and t
      - Calculate the value of D w.r.t. the corresponding moth
      - Update M(i,j) w.r.t. the corresponding moth
    - End For
  - End For
- End While
- Display the best objective values with their no. of features and their combinations
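The inner loop of Algorithm A1 (update r and t, compute D, update M(i,j)) can be illustrated with the logarithmic spiral used in the standard MFO formulation. This is a sketch under assumptions: it uses continuous positions, a spiral constant `b = 1`, and the usual linear schedules for r and for the flame count, none of which are spelled out in the pseudocode above. The function names are hypothetical, and the mapping from a continuous position to a subset of GLCM features is not shown.

```python
import math
import random

def flame_count(n_moths, iteration, max_iteration):
    """Number of flames shrinks linearly from n_moths to 1 over the run."""
    return round(n_moths - iteration * (n_moths - 1) / max_iteration)

def spiral_update(moth, flame, iteration, max_iteration, b=1.0, rng=random):
    """Move one moth around one flame along a logarithmic spiral."""
    # r decreases linearly from -1 to -2 as the iterations proceed
    r = -1.0 - iteration / max_iteration
    new_position = []
    for m, f in zip(moth, flame):
        t = (r - 1.0) * rng.random() + 1.0       # t lies in (r, 1]
        d = abs(f - m)                           # distance D between moth and flame
        new_position.append(d * math.exp(b * t) * math.cos(2.0 * math.pi * t) + f)
    return new_position
```

A moth that already sits on its flame stays there because D is zero, which is why the shrinking flame count drives convergence toward the best solutions found so far.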

Algorithm A2: Pseudocode for Grey Wolf Optimization Algorithm [28]

- Initialize no. of grey wolves (Xi, i = 1, 2, …, ng) and their positions as ‘nof’ randomly selected features from the given list of 22 GLCM features.
- While (itr < nitr)
  - Determine the fitness function fvi (i = 1, 2, …, ng) of each wolf: the performance of the ML model in terms of R2 and RMSE values for both training and testing data sets, using Deng’s method.
  - Sort fvi in descending order and set as sfi, and store the first wolf’s data as Xitr. and Fvitr.
  - Using the sorted data sfi, assign Xa. = X1., Xb. = X2. and Xd. = X3.
  - For each wolf, compute the updated position using
    - b1 = 2 × b × rand() − b, c1 = 2 × rand(), Da. = abs(c1 × Xa. − Xi.) and X1. = Xa. − b1 × Da.
    - b2 = 2 × b × rand() − b, c2 = 2 × rand(), Db. = abs(c2 × Xb. − Xi.) and X2. = Xb. − b2 × Db.
    - b3 = 2 × b × rand() − b, c3 = 2 × rand(), Dd. = abs(c3 × Xd. − Xi.) and X3. = Xd. − b3 × Dd.
    - Xi. = (X1. + X2. + X3.)/3
    - Check Xi. within bounds
  - End
- End
- Display the best objective values with their no. of features and their combinations
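One iteration of the update in Algorithm A2 can be written out as below. This is an illustrative sketch, assuming continuous positions, a maximisation objective (higher Deng’s value is better), and scalar bounds; the names `gwo_step`, `lower`, and `upper` are hypothetical, and the caller is assumed to decrease the scale factor `b` from 2 to 0 over the run.

```python
import random

def gwo_step(wolves, fitness, b, lower, upper, rng=random):
    """One GWO iteration for a maximisation problem.

    wolves  : list of position vectors (lists of floats)
    fitness : callable mapping a position to a score (higher is better)
    b       : scale factor, decreased from 2 to 0 by the caller
    """
    ranked = sorted(wolves, key=fitness, reverse=True)
    leaders = ranked[:3]                          # Xa, Xb, Xd in the pseudocode
    new_wolves = []
    for x in wolves:
        new_x = []
        for j, xj in enumerate(x):
            candidates = []
            for leader in leaders:
                b1 = 2.0 * b * rng.random() - b   # step coefficient
                c1 = 2.0 * rng.random()           # leader weighting
                dist = abs(c1 * leader[j] - xj)
                candidates.append(leader[j] - b1 * dist)
            value = sum(candidates) / len(candidates)
            new_x.append(min(max(value, lower), upper))  # keep within bounds
        new_wolves.append(new_x)
    return new_wolves, ranked[0]
```

With `b = 0` every wolf moves to the mean of the three leaders, which is the pure-exploitation limit reached at the end of the run; larger `b` lets the step overshoot the leaders and keeps exploration alive.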

Algorithm A3: Pseudocode for Dynamic Population Grey Wolf Optimization Algorithm

- Initialize no. of grey wolves (Xi, i = 1, 2, …, ng) and their positions as ‘nof’ randomly selected features from the given list of 22 GLCM features.
- Set maximum population size (Nm), reproduction probability (pr), and disease probability (pd).
- Generate the life (Li) of each grey wolf within the maximum iteration number.
- While (itr < nitr)
  - For each wolf
    - If Li = itr
      - Remove the wolf from the population and update the size of the population
    - End
  - End
  - For each wolf
    - If Li ≥ Aa
      - If rand() ≤ pr
        - Generate a child wolf and update the size of the population
      - End
    - End
  - End
  - If Ng > Nm
    - For each wolf
      - If rand() < pd
        - Remove the wolf from the population and update the size of the population
      - End
    - End
  - End
  - Determine the fitness function fvi (i = 1, 2, …, Ng) of each wolf: the performance of the ML model in terms of R2 and RMSE values for both training and testing data sets, using Deng’s method.
  - Sort fvi in descending order and set as sfi, and store the first wolf’s data as Xitr. and Fitr.
  - Using the sorted data sfi, assign Xa. = X1., Xb. = X2. and Xd. = X3.
  - For each wolf, compute the updated position using
    - b1 = 2 × b × rand() − b, c1 = 2 × rand(), Da. = abs(c1 × Xa. − Xi.) and X1. = Xa. − b1 × Da.
    - b2 = 2 × b × rand() − b, c2 = 2 × rand(), Db. = abs(c2 × Xb. − Xi.) and X2. = Xb. − b2 × Db.
    - b3 = 2 × b × rand() − b, c3 = 2 × rand(), Dd. = abs(c3 × Xd. − Xi.) and X3. = Xd. − b3 × Dd.
    - Xi. = (X1. + X2. + X3.)/3
    - Check Xi. within bounds
  - End
- End
- Display the best objective values with their no. of features and their combinations
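The three population-size rules that distinguish Algorithm A3 from plain GWO (death at end of life, reproduction, and disease when overcrowded) can be sketched separately from the position update. Several details here are assumptions because the pseudocode leaves them open: `adult_age` stands in for the threshold Aa, a child receives a freshly drawn position from the hypothetical `new_position` callable, and its life is drawn uniformly from the remaining iterations.

```python
import random

def update_population(wolves, itr, n_max, adult_age, p_r, p_d,
                      max_itr, new_position, rng=random):
    """Apply death, reproduction, and disease to a list of wolves.

    Each wolf is a dict with keys 'pos' (position) and 'life' (iteration of death).
    """
    # 1. Wolves whose life equals the current iteration are removed.
    alive = [w for w in wolves if w["life"] != itr]

    # 2. Wolves past the adult-age threshold reproduce with probability p_r.
    children = []
    for w in alive:
        if w["life"] >= adult_age and rng.random() <= p_r:
            children.append({
                "pos": new_position(),                  # assumption: random child
                "life": rng.randint(itr + 1, max_itr),  # assumption: uniform life
            })
    alive.extend(children)

    # 3. If the pack exceeds n_max, each wolf is removed with probability p_d.
    if len(alive) > n_max:
        alive = [w for w in alive if rng.random() >= p_d]
    return alive
```

The returned list would then go through the same fitness evaluation and leader-guided update as in GWO. A practical implementation would also need a guard against the population dropping below three wolves, which the pseudocode does not address.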

References

1. Gupta, M.; Khan, M.A.; Butola, R.; Singari, R.M. Advances in applications of Non-Destructive Testing (NDT): A Review. Adv. Mater. Process. Technol. 2021, 8, 2286–2307.

2. Liu, C.; Peng, Z.; Cui, J.; Huang, X.; Li, Y.; Chen, W. Development of crack and damage in shield tunnel lining under seismic loading: Refined 3D finite element modeling and analyses. Thin-Walled Struct. 2023, 185, 110647.

3. Gao, Z.; Hong, S.; Dang, C. An experimental investigation of subcooled pool boiling on downward-facing surfaces with microchannels. Appl. Therm. Eng. 2023, 226, 120283.

4. Liu, H.; Yue, Y.; Liu, C.; Spencer, B.; Cui, J. Automatic recognition and localization of underground pipelines in GPR B-scans using a deep learning model. Tunn. Undergr. Space Technol. 2023, 134, 104861.

5. Xia, Y.; Shi, M.; Zhang, C.; Wang, C.; Sang, X.; Liu, R.; Zhao, P.; An, G.; Fang, H. Analysis of flexural failure mechanism of ultraviolet cured-in-place-pipe materials for buried pipelines rehabilitation based on curing temperature monitoring. Eng. Fail. Anal. 2022, 14, 106763.

6. Wang, Y.-Y.; Lou, M.; Wang, Y.; Wu, W.-G.; Yang, F. Stochastic Failure Analysis of Reinforced Thermoplastic Pipes Under Axial Loading and Internal Pressure. China Ocean Eng. 2022, 36, 614–628.

7. Zeng, L.; Lv, T.; Chen, H.; Ma, T.; Fang, Z.; Shi, J. Flow accelerated corrosion of X65 steel gradual contraction pipe in high CO2 partial pressure environments. Arab. J. Chem. 2023, 16, 104935.

8. Singh, W.S.; Rao, B.P.; Thirunavukkarasu, S.; Mahadevan, S.; Mukhopadhyay, C.; Jayakumar, T. Development of magnetic flux leakage technique for examination of steam generator tubes of prototype fast breeder reactor. Ann. Nucl. Energy 2015, 83, 57–64.

9. Zhang, J.; Liu, X.; Xiao, J.; Yang, Z.; Wu, B.; He, C. A comparative study between magnetic field distortion and magnetic flux leakage techniques for surface defect shape reconstruction in steel plates. Sens. Actuators A Phys. 2019, 288, 10–20.

10. Suresh, V.; Abudhahir, A.; Daniel, J. Development of magnetic flux leakage measuring system for detection of defect in small diameter steam generator tube. Measurement 2017, 95, 273–279.

11. Suresh, V.; Abudhahir, A.; Daniel, J. Characterization of defects on ferromagnetic tubes using magnetic flux leakage. IEEE Trans. Magn. 2019, 55, 6200510.

12. Daniel, J.; Abudhahir, A.; Paulin, J.J. Magnetic Flux Leakage (MFL) based defect characterization of steam generator tubes using artificial neural networks. J. Magn. 2017, 22, 34–42.

13. Wang, W.; Kiik, M.; Peek, N.; Curcin, V.; Marshall, I.J.; Rudd, A.G.; Wang, Y.; Douiri, A.; Wolfe, C.D.; Bray, B. A systematic review of machine learning models for predicting outcomes of stroke with structured data. PLoS ONE 2020, 15, e0234722.

14. Liu, M.; Gu, Q.; Yang, B.; Yin, Z.; Liu, S.; Yin, L.; Zheng, W. Kinematics Model Optimization Algorithm for Six Degrees of Freedom Parallel Platform. Appl. Sci. 2023, 13, 3082.

15. Xie, L.; Zhu, Y.; Yin, M.; Wang, Z.; Ou, D.; Zheng, H.; Liu, H.; Yin, G. Self-feature-based point cloud registration method with a novel convolutional Siamese point net for optical measurement of blade profile. Mech. Syst. Signal Process. 2022, 178, 109243.

16. Lu, H.; Zhu, Y.; Yin, M.; Yin, G.; Xie, L. Multimodal Fusion Convolutional Neural Network With Cross-Attention Mechanism for Internal Defect Detection of Magnetic Tile. IEEE Access 2022, 10, 60876–60886.

17. Devi, R.M.; Premkumar, M.; Kiruthiga, G.; Sowmya, R. IGJO: An improved golden jackel optimization algorithm using local escaping operator for feature selection problems. Neural Process. Lett. 2023, 1–89.

18. Qaraad, M.; Amjad, S.; Hussein, N.K.; Elhosseini, M.A. An innovative quadratic interpolation salp swarm-based local escape operator for large-scale global optimization problems and feature selection. Neural Comput. Appl. 2022, 34, 17663–17721.

19. Houssein, E.H.; Saber, E.; Ali, A.A.; Wazery, Y.M. Centroid mutation-based Search and Rescue optimization algorithm for feature selection and classification. Expert Syst. Appl. 2022, 191, 116235.

20. Ganesh, N.; Shankar, R.; Čep, R.; Chakraborty, S.; Kalita, K. Efficient feature selection using weighted superposition attraction optimization algorithm. Appl. Sci. 2023, 13, 3223.

21. Hu, F.; Qiu, L.; Xiang, Y.; Wei, S.; Sun, H.; Hu, H.; Weng, X.; Mao, L.; Zeng, M. Spatial network and driving factors of low-carbon patent applications in China from a public health perspective. Front. Public Health 2023, 11, 1121860.

22. Dai, X.; Xiao, Z.; Jiang, H.; Alazab, M.; Lui, J.C.S.; Min, G.; Dustdar, S.; Liu, J. Task Offloading for Cloud-Assisted Fog Computing With Dynamic Service Caching in Enterprise Management Systems. IEEE Trans. Ind. Inform. 2023, 19, 662–672.

23. Priyadarshini, J.; Premalatha, M.; Čep, R.; Jayasudha, M.; Kalita, K. Analyzing Physics-Inspired Metaheuristic Algorithms in Feature Selection with K-Nearest-Neighbor. Appl. Sci. 2023, 13, 906.

24. Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey Wolf Optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

25. Mahalingam, S.K.; Nagarajan, L.; Velu, C.; Dharmaraj, V.K.; Salunkhe, S.; Hussein, H.M.A. An Evolutionary Algorithmic Approach for Improving the Success Rate of Selective Assembly through a Novel EAUB Method. Appl. Sci. 2022, 12, 8797.

26. Deng, H. A similarity-based approach to ranking multicriteria alternatives. In Advanced Intelligent Computing Theories and Applications. With Aspects of Artificial Intelligence: Third International Conference on Intelligent Computing, ICIC 2007, Qingdao, China, 21–24 August 2007; Huang, D.S., Heutte, L., Loog, M., Eds.; Lecture Notes in Computer Science; Springer: Berlin/Heidelberg, Germany, 2007; Volume 4682, pp. 253–262.

27. Mirjalili, S.; Aljarah, I.; Mafarja, M.; Heidari, A.A.; Faris, H. Grey Wolf Optimizer: Theory, Literature Review, and Application in Computational Fluid Dynamics Problems. In Nature-Inspired Optimizers; Springer: Cham, Switzerland, 2019; pp. 87–105.

28. Arivalagan, S.; Sappani, R.; Čep, R.; Kumar, M.S. Optimization and Experimental Investigation of 3D Printed Micro Wind Turbine Blade Made of PLA Material. Materials 2023, 16, 2508.

29. Saremi, S.; Mirjalili, S.Z.; Mirjalili, S.M. Evolutionary population dynamics and grey wolf optimizer. Neural Comput. Appl. 2015, 26, 1257–1263.

30. Khalilpourazari, S.; Naderi, B.; Khalilpourazary, S. Multi-Objective Stochastic Fractal Search: A Powerful Algorithm for Solving Complex Multi-Objective Optimization Problems. Soft Comput. 2019, 24, 3037–3066.

| FNo. | FName | Feature Name |

|---|---|---|

| 0 | UNF | Energy/Uniformity |

| 1 | ETR | Entropy |

| 2 | DSL | Dissimilarity |

| 3 | CST | Contrast |

| 4 | ID | Inverse Difference |

| 5 | CN | Correlation |

| 6 | H | Homogeneity |

| 7 | AC | Auto correlation |

| 8 | CS | Cluster shade |

| 9 | CP | Cluster prominence |

| 10 | MP | Maximum Probability |

| 11 | SS | Sum of squares |

| 12 | SA | Sum average |

| 13 | SV | Sum Variance |

| 14 | SE | Sum entropy |

| 15 | DV | Difference Variance |

| 16 | DE | Difference entropy |

| 17 | IMC (1) | Information measure of correlation 1 |

| 18 | IMC (2) | Information measure of correlation 2 |

| 19 | MCC | Maximal Correlation Coefficient |

| 20 | INN | Inverse Difference Normalized |

| 21 | IDN | Inverse difference moment normalized |

| Model | Name |

|---|---|

| DT | Decision Tree Regressor |

| LoR | Logistic Regressor |

| LiR | Linear Regressor |

| XGB | Extreme Gradient Booster |

| ABR | Adaptive Booster Regressor |

| RF | Random Forest Regressor |

| Parameter | No. of Levels | Level 1 | Level 2 | Level 3 | Level 4 |

|---|---|---|---|---|---|

| FSM | 2 | FS | MIS | | |

| MLM | 4 | DT | LiR | LoR | XGB |

| NoF | 4 | 15 | 17 | 19 | 21 |

| E.No. | FSM | MLM | NoF | R2Tr | R2Tt | RMSETr | RMSETt | Deng’s Value |

|---|---|---|---|---|---|---|---|---|

| 1 | FS | DT | 15 | 0.2619 | 0.3737 | 0.9684 | 1.4000 | 0.4569 |

| 2 | FS | DT | 17 | 0.1998 | 0.4572 | 1.0000 | 1.3000 | 0.4771 |

| 3 | MIS | DT | 19 | 0.6100 | 0.1434 | 1.0278 | 1.6440 | 0.4879 |

| 4 | MIS | DT | 21 | 0.5940 | 0.1429 | 1.0282 | 1.6446 | 0.5040 |

| 5 | FS | LiR | 15 | 0.9596 | 0.6091 | 0.2346 | 1.2090 | 0.4434 |

| 6 | FS | LiR | 17 | 0.9592 | 0.6209 | 0.2389 | 1.1815 | 0.4616 |

| 7 | MIS | LiR | 19 | 0.9221 | 0.6109 | 0.3917 | 1.1977 | 0.4643 |

| 8 | MIS | LiR | 21 | 0.9078 | 0.5437 | 0.4225 | 1.2860 | 0.4248 |

| 9 | MIS | LoR | 15 | 0.6002 | 0.1449 | 0.8200 | 1.6425 | 0.5484 |

| 10 | MIS | LoR | 17 | 0.6120 | 0.1441 | 0.8150 | 1.6433 | 0.5360 |

| 11 | FS | LoR | 19 | 0.5860 | 0.1434 | 0.8008 | 1.6441 | 0.5324 |

| 12 | FS | LoR | 21 | 0.3960 | 0.1430 | 0.8400 | 1.6445 | 0.5408 |

| 13 | MIS | XGB | 15 | 0.8652 | 0.8646 | 0.4410 | 0.6942 | 0.6073 |

| 14 | MIS | XGB | 17 | 0.8757 | 0.8705 | 0.4055 | 0.6869 | 0.6444 |

| 15 | FS | XGB | 19 | 1.0000 | 0.5988 | 0.0005 | 1.1801 | 0.6263 |

| 16 | FS | XGB | 21 | 1.0000 | 0.5841 | 0.0006 | 1.2013 | 0.6326 |

| Source | DF | R2Tr Adj SS | R2Tr Adj MS | R2Tr F-Value | R2Tr p-Value | R2Tt Adj SS | R2Tt Adj MS | R2Tt F-Value | R2Tt p-Value |

|---|---|---|---|---|---|---|---|---|---|

| Regression | 13 | 1.0433 | 0.0803 | 31.9400 | 0.0310 | 1.0445 | 0.0803 | 2780.6000 | 0.0000 |

| NoF | 1 | 0.0008 | 0.0008 | 0.3300 | 0.6250 | 0.0033 | 0.0033 | 113.4200 | 0.0090 |

| FSM | 1 | 0.0001 | 0.0001 | 0.0500 | 0.8480 | 0.0000 | 0.0000 | 0.4700 | 0.5640 |

| MLM | 3 | 0.0051 | 0.0017 | 0.6700 | 0.6450 | 0.0057 | 0.0019 | 65.8000 | 0.0150 |

| NoF*NoF | 1 | 0.0020 | 0.0020 | 0.8100 | 0.4640 | 0.0021 | 0.0021 | 72.5800 | 0.0130 |

| NoF*FSM | 1 | 0.0037 | 0.0037 | 1.4900 | 0.3470 | 0.0013 | 0.0013 | 44.0600 | 0.0220 |

| NoF*MLM | 3 | 0.0053 | 0.0018 | 0.7000 | 0.6320 | 0.0025 | 0.0008 | 28.7400 | 0.0340 |

| FSM*MLM | 3 | 0.0414 | 0.0138 | 5.4900 | 0.1580 | 0.0394 | 0.0131 | 454.0900 | 0.0020 |

| Error | 2 | 0.0050 | 0.0025 | | | 0.0001 | 0.0000 | | |

| Total | 15 | 1.0484 | | | | 1.0446 | | | |

| Source | DF | RMSETr Adj SS | RMSETr Adj MS | RMSETr F-Value | RMSETr p-Value | RMSETt Adj SS | RMSETt Adj MS | RMSETt F-Value | RMSETt p-Value |

|---|---|---|---|---|---|---|---|---|---|

| Regression | 13 | 1.9731 | 0.1518 | 711.9000 | 0.0010 | 1.5092 | 0.1161 | 1722.1200 | 0.0010 |

| NoF | 1 | 0.0002 | 0.0002 | 0.7300 | 0.4820 | 0.0057 | 0.0056 | 83.7600 | 0.0120 |

| FSM | 1 | 0.0005 | 0.0005 | 2.2300 | 0.2740 | 0.0000 | 0.0000 | 0.1500 | 0.7340 |

| MLM | 3 | 0.0057 | 0.0019 | 8.8700 | 0.1030 | 0.0063 | 0.0021 | 31.2100 | 0.0310 |

| NoF*NoF | 1 | 0.0004 | 0.0004 | 1.6500 | 0.3270 | 0.0037 | 0.0037 | 55.4600 | 0.0180 |

| NoF*FSM | 1 | 0.0005 | 0.0004 | 2.0900 | 0.2850 | 0.0022 | 0.0022 | 32.8400 | 0.0290 |

| NoF*MLM | 3 | 0.0009 | 0.0003 | 1.4000 | 0.4410 | 0.0034 | 0.0011 | 16.8800 | 0.0560 |

| FSM*MLM | 3 | 0.0179 | 0.0060 | 27.9500 | 0.0350 | 0.0778 | 0.0259 | 384.5400 | 0.0030 |

| Error | 2 | 0.0004 | 0.0002 | | | 0.0001 | 0.0001 | | |

| Total | 15 | 1.9735 | | | | 1.5093 | | | |

| Characteristic | FSM | MLM | NoF | R2Tr | R2Tt | RMSETr | RMSETt | Deng’s Value |

|---|---|---|---|---|---|---|---|---|

| Length | MIS | XGB | 17 | 0.8757 | 0.8705 | 0.4055 | 0.6869 | 0.6444 |

| Depth | FS | XGB | 19 | 1.0000 | 0.5153 | 0.0006 | 3.9049 | 0.6836 |

| Width | MIS | XGB | 17 | 1.0000 | 0.4196 | 0.0006 | 0.2440 | 0.6444 |

| Solution for | FSM | ML | NoF | R2Tr—Fit | R2Tt—Fit | RMSETr—Fit | RMSETt—Fit | Composite Desirability |

|---|---|---|---|---|---|---|---|---|

| Crack Length | MIS | XGB | 17 | 0.890569 | 0.875854 | 0.387879 | 0.655271 | 0.868386 |

| Crack Depth | FS | XGB | 21 | 0.999998 | 0.621851 | 0.0199202 | 3.39233 | 0.840767 |

| Crack Width | MIS | XGB | 19 | 0.999706 | 0.679007 | 0.0006879 | 0.231555 | 0.887385 |

| Parameters | Level 1 | Level 2 | Level 3 | Level 4 | Level 5 |

|---|---|---|---|---|---|

| n_estimators | 100 | 500 | 900 | 1100 | 1500 |

| base_score | 0.25 | 0.5 | 0.75 | 1 | |

| learning_rate | 0.05 | 0.1 | 0.15 | | |

| booster | gbtree | gblinear | | | |
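The levels in the table above define a search space for the XGB hyper-parameters. The sketch below enumerates it as a full factorial grid, which is an assumption: the text does not say whether every combination was evaluated or a reduced design was used. The helper name `candidate_settings` is hypothetical; the parameter names and level values are taken from the table.

```python
from itertools import product

# Levels copied from the hyper-parameter table.
grid = {
    "n_estimators": [100, 500, 900, 1100, 1500],
    "base_score": [0.25, 0.5, 0.75, 1],
    "learning_rate": [0.05, 0.1, 0.15],
    "booster": ["gbtree", "gblinear"],
}

def candidate_settings(grid):
    """Return every combination of levels as a list of keyword dictionaries."""
    names = list(grid)
    return [dict(zip(names, values)) for values in product(*grid.values())]

settings = candidate_settings(grid)
# 5 * 4 * 3 * 2 = 120 candidate configurations
```

Each dictionary could be passed as keyword arguments to the regressor, scored with Deng’s value on the R2 and RMSE metrics, and the best-scoring setting kept for each crack dimension.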

| Parameters | Crack Length | Crack Depth | Crack Width |

|---|---|---|---|

| n_estimators | 500 | 1500 | 100 |

| base_score | 1 | 0.75 | 1 |

| learning_rate | 0.05 | 0.15 | 0.05 |

| booster | gblinear | gblinear | gbtree |

| Name of the Parameters | Value |

|---|---|

| Position of the moth close to the flame (t) | −1 to −2 |

| Update mechanism | Logarithmic spiral |

| No. of moths (N) | 30 |

| No. of iterations (nitr) | 100 |

| Name of the Parameters | GWO | DPGWO |

|---|---|---|

| No. of grey wolf (Ng) | 20 | 20 |

| No. of Iterations (nitr) | 50 | 50 |

| Scale Factor (SF) | 2 to 0 | 2 to 0 |

| Reproduction Probability (pr) | Not applicable | 0.45 (0.35 to 0.55) |

| Disease Probability (pd) | Not applicable | 0.05 (0.03 to 0.07) |

| Crack Dimension | Algorithm | IGD | SP |

|---|---|---|---|

| Crack Length | MFO | 0.10066 | 0.05977 |

| Crack Length | GWO | 0.10413 | 0.04707 |

| Crack Length | DPGWO | 0.09652 | 0.04558 |

| Crack Depth | MFO | 0.23810 | 0.49592 |

| Crack Depth | GWO | 0.21844 | 0.24535 |

| Crack Depth | DPGWO | 0.19339 | 0.08908 |

| Crack Width | MFO | 0.20932 | 0.15989 |

| Crack Width | GWO | 0.34371 | 0.13514 |

| Crack Width | DPGWO | 0.08245 | 0.06335 |
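For readers unfamiliar with the two indicators in the table above, the sketch below shows one common way to compute them. It assumes the mean-distance form of Inverted Generational Distance (IGD) and Schott’s Spacing (SP) with L1 nearest-neighbour distances; the paper does not state which variants or which reference front were used, so treat the formulas and function names as assumptions. Lower values are better for both.

```python
import math

def igd(reference_front, obtained_front):
    """Mean Euclidean distance from each reference point to its nearest obtained point."""
    total = 0.0
    for ref in reference_front:
        total += min(math.dist(ref, sol) for sol in obtained_front)
    return total / len(reference_front)

def spacing(front):
    """Schott's spacing: spread of nearest-neighbour L1 distances within one front."""
    n = len(front)
    nearest = []
    for i, p in enumerate(front):
        nearest.append(min(sum(abs(a - b) for a, b in zip(p, q))
                           for j, q in enumerate(front) if j != i))
    mean_d = sum(nearest) / n
    return math.sqrt(sum((mean_d - d) ** 2 for d in nearest) / (n - 1))
```

A front that coincides with the reference front has an IGD of zero, and an evenly spaced front has a Spacing of zero, so the smaller DPGWO values in the table indicate solutions that lie closer to the reference set and are more uniformly distributed.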

| Crack Dimensions | Mean Rank (MFO) | Mean Rank (GWO) | Mean Rank (DPGWO) | Probability |

|---|---|---|---|---|

| Crack Length | 2.2 | 1.56 | 2.24 | 0.0263 |

| Crack Depth | 1.98 | 1.78 | 2.23 | 0.0049 |

| Crack Width | 1.84 | 2.04 | 2.12 | 0.0093 |
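The mean ranks in the table above come from Friedman’s test, which ranks the algorithms within each run and compares the rank sums. The sketch below computes the mean ranks and the chi-square statistic from raw scores. It is a simplified illustration: tied scores share an average rank but no tie correction is applied to the statistic, the ranking direction (lowest score gets rank 1) is an assumption, and the p-value lookup is omitted. The function name is hypothetical.

```python
def friedman_statistic(blocks):
    """blocks: list of rows, one per run; each row holds one score per algorithm.

    Returns (mean ranks, chi-square statistic), ranking lower scores first.
    """
    n, k = len(blocks), len(blocks[0])
    rank_sums = [0.0] * k
    for row in blocks:
        order = sorted(range(k), key=lambda j: row[j])
        i = 0
        while i < k:
            # find the run of tied values starting at position i
            j = i
            while j + 1 < k and row[order[j + 1]] == row[order[i]]:
                j += 1
            average_rank = (i + j) / 2.0 + 1.0
            for t in range(i, j + 1):
                rank_sums[order[t]] += average_rank
            i = j + 1
    stat = 12.0 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3.0 * n * (k + 1)
    return [r / n for r in rank_sums], stat
```

With three algorithms the mean ranks always sum to 6, which is a quick consistency check on the rows of the table; the statistic is compared against a chi-square distribution with k − 1 degrees of freedom to obtain the reported probability.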

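| FNo. | FName | Crack Length: TM | Crack Length: DM | Crack Length: OT (MFO) | Crack Length: OT (GWO) | Crack Length: OT (DPGWO) | Crack Depth: TM | Crack Depth: DM | Crack Depth: OT (MFO) | Crack Depth: OT (GWO) | Crack Depth: OT (DPGWO) | Crack Width: TM | Crack Width: DM | Crack Width: OT (MFO) | Crack Width: OT (GWO) | Crack Width: OT (DPGWO) |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|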

| 0 | UNF | N | N | N | ||||||||||||

| 1 | ETR | N | N | N | N | N | N | N | ||||||||

| 2 | DSL | N | N | N | N | N | ||||||||||

| 3 | CST | N | N | N | N | N | N | N | ||||||||

| 4 | ID | N | N | N | N | N | N | N | ||||||||

| 5 | CN | N | N | N | N | N | N | |||||||||

| 6 | H | N | N | |||||||||||||

| 7 | AC | N | N | N | N | N | N | N | N | |||||||

| 8 | CS | |||||||||||||||

| 9 | CP | |||||||||||||||

| 10 | MP | |||||||||||||||

| 11 | SS | |||||||||||||||

| 12 | SA | |||||||||||||||

| 13 | SV | |||||||||||||||

| 14 | SE | |||||||||||||||

| 15 | DV | |||||||||||||||

| 16 | DE | N | N | N | N | |||||||||||

| 17 | IMC(1) | N | N | N | N | |||||||||||

| 18 | IMC(2) | N | ||||||||||||||

| 19 | MCC | |||||||||||||||

| 20 | INN | N | ||||||||||||||

| 21 | IDN | N | N | N | N | N | N | N | N | N | N | |||||

| No. of Features | | 19 | 17 | 17 | 17 | 17 | 19 | 21 | 17 | 17 | 17 | 17 | 19 | 17 | 17 | 17 |

| FNo. | FName |

|---|---|

| 1 | ETR |

| 3 | CST |

| 4 | ID |

| 7 | AC |

| 21 | IDN |

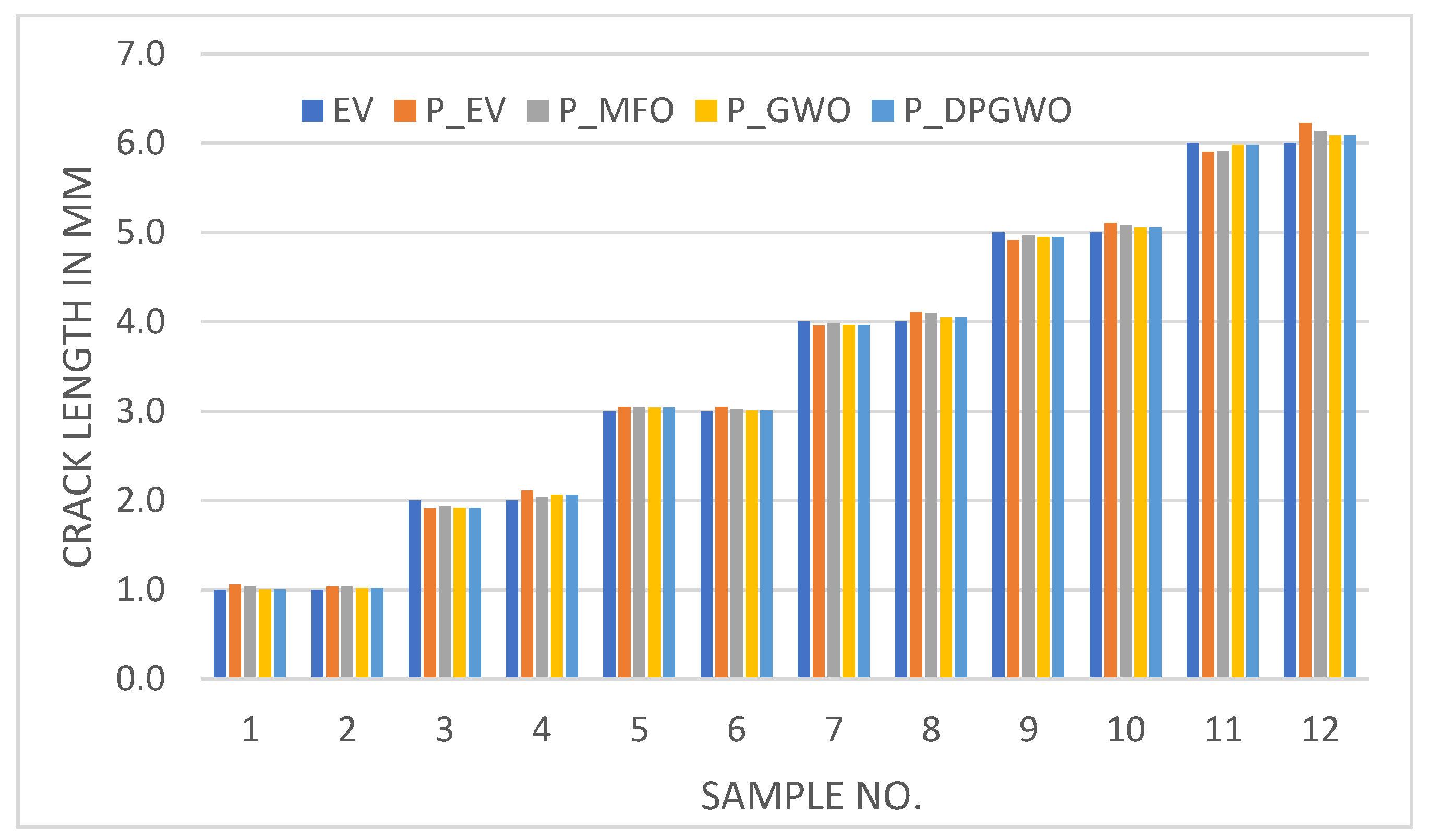

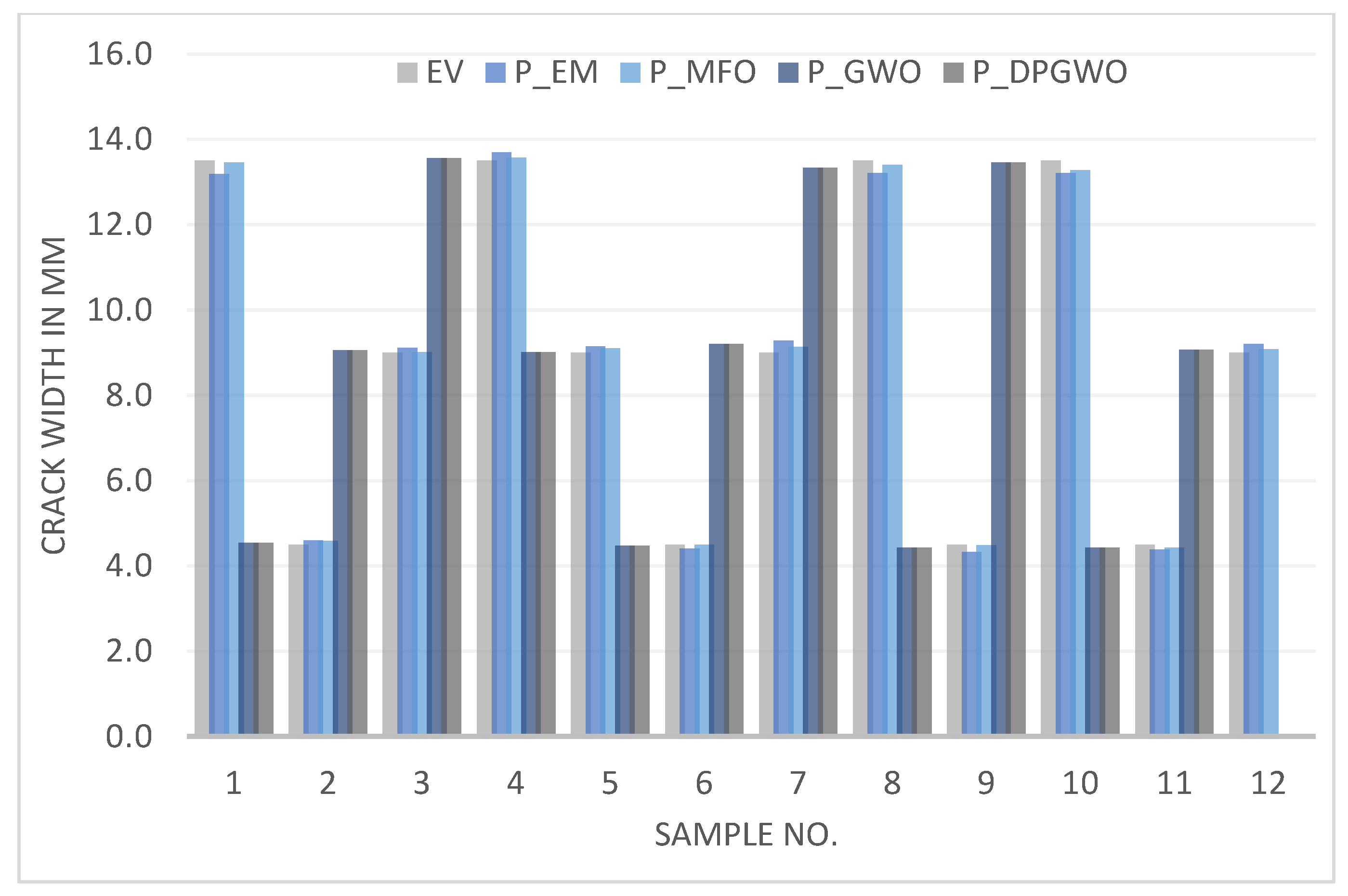

| Performance Metrics | EM | MFO | GWO | DPGWO |

|---|---|---|---|---|

| R2 | 0.9970 | 0.9987 | 0.9981 | 0.9992 |

| | 0.9938 | 0.9975 | 0.9966 | 0.9989 |

| | 0.9969 | 0.9993 | 0.9990 | 0.9994 |

| RMSE | 0.101 | 0.066 | 0.078 | 0.050 |

| | 0.032 | 0.020 | 0.023 | 0.014 |

| | 0.208 | 0.099 | 0.126 | 0.089 |

| MAE | 0.0872 | 0.0563 | 0.0568 | 0.0417 |

| | 0.0247 | 0.0147 | 0.0135 | 0.0099 |

| | 0.1922 | 0.0792 | 0.1028 | 0.0727 |

| MAPE | 2.93 | 1.88 | 1.60 | 1.38 |

| | 3.09 | 1.72 | 1.60 | 1.16 |

| | 2.25 | 0.91 | 1.09 | 0.91 |
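The four metrics in the table above have standard definitions, sketched here for reference. This is a minimal pure-Python version, assuming MAPE is reported in percent (consistent with the magnitudes in the table) and that no true value is zero; the function name is hypothetical.

```python
import math

def regression_metrics(y_true, y_pred):
    """Return R2, RMSE, MAE, and MAPE (in percent) for paired true/predicted values."""
    n = len(y_true)
    mean_y = sum(y_true) / n
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))   # residual sum of squares
    ss_tot = sum((t - mean_y) ** 2 for t in y_true)              # total sum of squares
    return {
        "R2": 1.0 - ss_res / ss_tot,
        "RMSE": math.sqrt(ss_res / n),
        "MAE": sum(abs(t - p) for t, p in zip(y_true, y_pred)) / n,
        "MAPE": 100.0 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / n,
    }
```

Computing the same dictionary on the training and testing splits gives the R2Tr, R2Tt, RMSETr, and RMSETt responses used in the experimental tables.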

| Variable | Samples | Mean | StDev | Variance | Minimum | Q1 | Median | Q3 | Maximum | Skewness | Kurtosis |

|---|---|---|---|---|---|---|---|---|---|---|---|

| DPGWO | 12 | 45 | 8.23 | 67.68 | 32.1 | 39.95 | 43.7 | 52.77 | 59.6 | 0.22 | −0.65 |

| GWO | 12 | 38.4 | 6.98 | 48.75 | 29.6 | 31.62 | 39.25 | 41.38 | 51.8 | 0.57 | −0.09 |

| MFO | 12 | 108.42 | 15.64 | 244.77 | 89.6 | 95.93 | 103.75 | 126 | 133.7 | 0.42 | −1.36 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

William, M.V.A.; Ramesh, S.; Cep, R.; Mahalingam, S.K.; Elangovan, M. DPGWO Based Feature Selection Machine Learning Model for Prediction of Crack Dimensions in Steam Generator Tubes. Appl. Sci. 2023, 13, 8206. https://doi.org/10.3390/app13148206
