1. Introduction

In Korea, 64.5% of the territory is mountainous. In spring, westerly winds driven by high pressure cross the Taebaek Mountains and lose moisture, producing dry weather on the eastern side. As a result, the Yeongdong region of Gangwon-do in eastern Korea faces a risk of forest fire every year. Large-scale forest fires cause serious disasters worldwide, not only in Korea. In June 2019, a large-scale forest fire in Australia (11 million hectares) took 6 months to extinguish, and a forest fire in the western United States in 2021 (1.91 million hectares) took 3 months. In Vancouver and elsewhere in British Columbia, hot and dry weather in the summer of 2021 caused more than 1500 forest fires [1]. To enable early suppression, research on detecting forest fires at an early stage with various sensing technologies is being actively conducted worldwide [2].

Fire detection systems can be classified into two types: those that detect a fire physically with sensors and those that detect a fire in images. For example, smoke can be detected with an ion sensor, and flame with an infrared sensor [3]. Ultraviolet flame detection sensors have also been studied [4]; when such a sensor detects a small flame, a push message can be sent to a smartphone through a Raspberry Pi and a Google Cloud Messaging (GCM) server. These sensors perform well indoors, but in a large outdoor space smoke or heat may not reach the sensor, so detection may be delayed or erroneous [5]. To address this problem, studies have shared sensor detection results over a wireless sensor network (WSN) [6,7,8,9,10]. This approach shares detections and covers a wider area as the number of devices increases, but its cost increases as well.

Recently, image processing methods have been widely used to detect fires over a wide area at relatively low cost. Many studies have analyzed the color and movement of flames in images by combining RGB and YCbCr color analysis [11,12,13,14,15,16,17,18], and techniques such as heuristic algorithms or fuzzy logic can be added to improve detection efficiency. Machine learning has also recently been used to detect fires in images [19,20,21,22,23,24]. Although fire detection with RGB images continues to develop, its accuracy can be limited, and thermal imaging cameras have been studied to compensate for this [25,26,27]. Fire detection ability has been analyzed as a function of the distance between the fire source and the thermal imaging camera, and thermal imaging cameras were found to detect fires earlier than optical cameras. Image-based fire detection is expected to keep improving. However, existing image- or video-based methods cannot detect a fire outside the camera's field of view, and they have difficulty determining where the fire is. Some studies [28] have combined image-based fire detection with a WSN to share analysis results, but they focused on detection itself rather than localization. Very few studies estimate the location of a fire that can occur anywhere in a wide area, such as a forest fire. Drones and satellite images have been used to locate fire points over a wide area [29,30]; however, because it is unknown when a fire will occur, these methods make real-time fire monitoring difficult.

Each of these existing fire detection technologies has its own merits, and the appropriate choice depends on the situation. However, they are limited when applied to fires that can occur over a wide area, such as forest fires. Sensors detect only local fires, and building a WSN from many sensors raises costs. Image-based analysis detects fires only within the camera's field of view and has difficulty locating the fire point. Thermal imaging cameras are expensive to install. Drones and satellite images can cover a wide area but cannot detect a fire immediately. Therefore, this study aimed to develop a relatively low-cost, image-based technology that monitors a wide area at once, detects fires in real time, and estimates the fire location. To cover a wide area, it is more useful to install many inexpensive devices than a few expensive ones, and analyzing the optimal installation point helps detect forest fires effectively. However, few studies have addressed wide-area detection with low-cost devices and the sharing of their results.

In this study, we developed a forest fire detection system that identifies forest fire sites early and estimates their location using a fisheye lens and IoT technology. The technique was applied to Mt. Bukjeong near the Samcheok Campus of Kangwon National University in Samcheok City, Korea. The optimal installation point was selected with Geographical Information System (GIS) spatial analysis of the terrain, and the system was installed there to estimate forest fire locations in real time from fisheye lens images transmitted by a Raspberry Pi.

2. Study Area

Mt. Bukjeong in Samcheok-si, Gangwon-do, was selected as the study area for applying the forest fire detection system developed in this study. The university campus and the city hall are located near Mt. Bukjeong, so a forest fire there could be very dangerous. Mt. Bukjeong has a trail with a total length of 1520 m, and its altitude is 102 m.

Figure 1a is an orthoimage created with Pix4Dmapper software (https://www.pix4d.com/product/pix4dmapper-photogrammetry-software/, accessed on 20 May 2023) from 69 images taken with a drone. Because an experiment involving an actual forest fire was impossible for safety reasons, the location estimation experiment instead detected a researcher moving along the trail.

Figure 1b shows a digital surface model (DSM) representing the altitude of the terrain, and Figure 1c is a 3-D model of the study area; both were created with Pix4Dmapper from the drone images. The fisheye-lens-based forest fire detection system developed in this study was installed at a basin-shaped point (Figure 1c) to secure a wide field of view.

3. Methods

In this study, a Raspberry Pi, a small, low-cost computer, was used to build an effective fire detection system. A fisheye camera with a 220-degree angle of view was installed for omnidirectional capture, and the system was controlled from a laptop computer using the Raspberry Pi's remote control function. With the laptop and the Raspberry Pi connected to the same Wi-Fi network, the Raspberry Pi could be operated remotely without a dedicated monitor or keyboard, which reduced the size and cost of the device and minimized power consumption. Python code running on the Raspberry Pi captured images continuously and transmitted the image data to a Firebase database over Wi-Fi. To analyze the incoming images in real time and detect forest fires quickly, a desktop computer accessed the images in the database and applied deep-learning-based object detection. The location of a detected forest fire was then specified on a map by combining its relative coordinates in the polar image with the DSM. Forest fires typically spread uphill toward the top of a mountain and in the direction of the wind. Therefore, a detection system installed at a low altitude in basin terrain can monitor a large area of the surrounding mountain slopes. In this study, the system was installed at a point with a wide visible area, selected by GIS-based viewshed analysis.

3.1. Development of Device Using Raspberry Pi and Fisheye Lens Camera

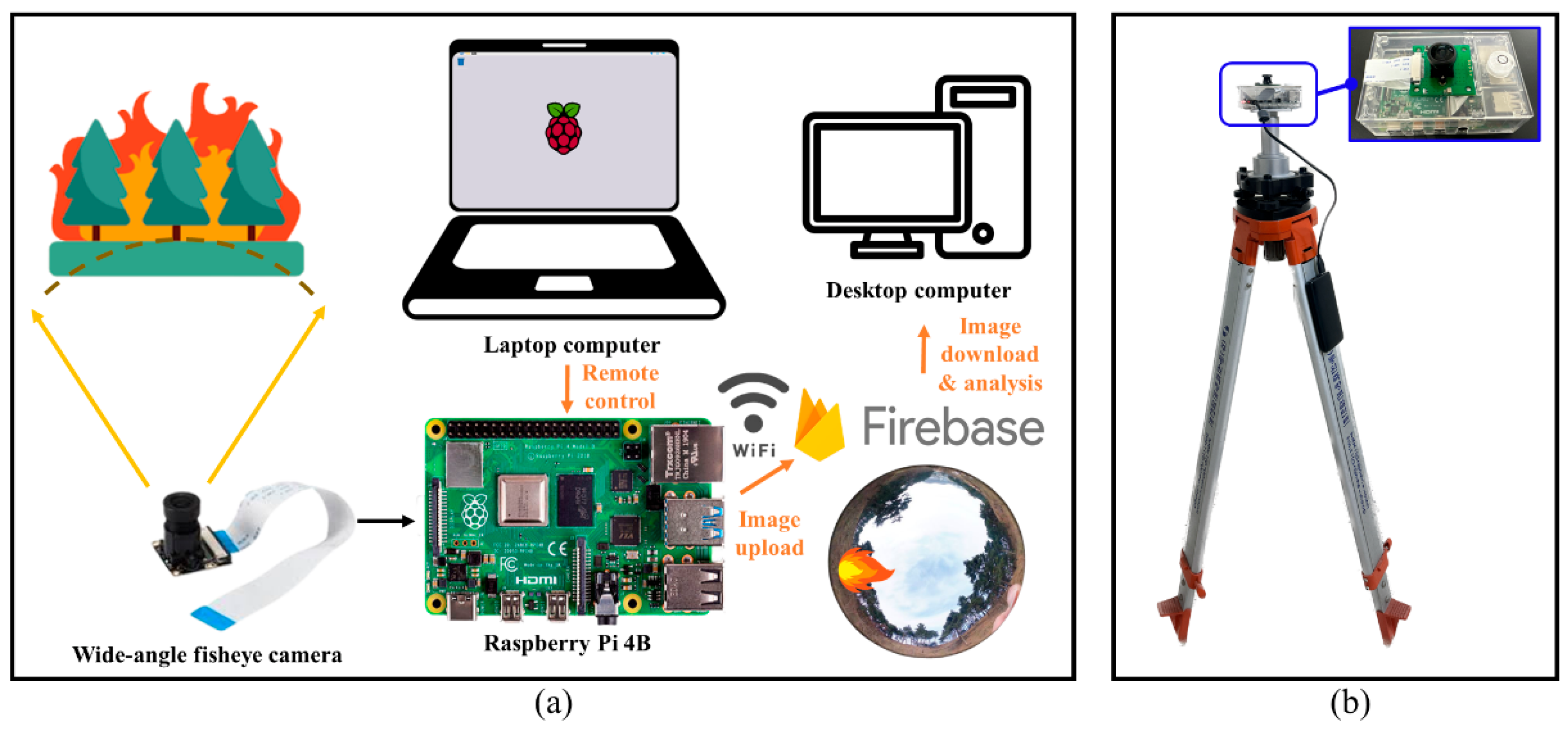

In this study, a Raspberry Pi 4B was used to capture images through a fisheye camera and transmit them to the Firebase database.

Figure 2a shows a conceptual diagram of how the developed system operates. Images taken through the fisheye lens are transmitted to the Firebase database in real-time and analyzed on a desktop computer. The system can be configured so that images can be taken and transmitted periodically at equal time intervals.
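The periodic capture-and-transmit behavior described above can be sketched as a fixed-interval loop. This is a minimal sketch, not the authors' script: `run_capture_loop`, the key naming scheme, and the injected `capture` and `upload` callables are hypothetical. On the device, `capture` might wrap a camera library such as Picamera2 and `upload` a Firebase client, but those calls are not reproduced here. Injecting the clock and sleep functions keeps the timing logic testable without hardware.

```python
import time
from typing import Callable, List


def run_capture_loop(
    capture: Callable[[], bytes],
    upload: Callable[[str, bytes], None],
    interval_s: float,
    n_frames: int,
    clock: Callable[[], float] = time.monotonic,
    sleep: Callable[[float], None] = time.sleep,
) -> List[str]:
    """Capture and upload n_frames images, one every interval_s seconds.

    capture and upload are hypothetical hooks supplied by the caller; on the
    device they might wrap a camera library and a Firebase client.
    Returns the database keys used for the uploaded frames.
    """
    keys: List[str] = []
    next_tick = clock()
    for i in range(n_frames):
        image = capture()
        key = f"frame_{i:06d}"  # hypothetical key naming scheme
        upload(key, image)
        keys.append(key)
        if i == n_frames - 1:
            break  # no need to wait after the last frame
        # Schedule from a fixed start time rather than from the end of each
        # upload, so slow uploads do not make the capture interval drift.
        next_tick += interval_s
        delay = next_tick - clock()
        if delay > 0:
            sleep(delay)
    return keys
```

Scheduling each frame from a fixed start time keeps the interval constant even when an upload takes a few seconds, which keeps consecutive frames evenly spaced in time.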

Figure 2b shows a photograph of the developed system installed on a tripod. The system was powered by an auxiliary battery (power bank) with a capacity of 10,000 mAh and an output of 5 V and 2 A. To obtain an omnidirectional image at a single point, a 220-degree wide-angle fisheye camera for the Raspberry Pi was used; its resolution is 2592 × 1944 pixels and its focal length is 4 mm.
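A rough power budget helps interpret the 10,000 mAh, 5 V/2 A battery specification. The sketch below is illustrative only: the 3.7 V nominal cell voltage, 85% conversion efficiency, and 4 W average system draw are assumptions, not values measured in this study, and `battery_runtime_hours` is a hypothetical helper. Under those assumptions the battery would last roughly 7.9 h.

```python
def battery_runtime_hours(
    capacity_mah: float,
    cell_voltage_v: float,
    efficiency: float,
    load_w: float,
    max_output_w: float = 5.0 * 2.0,  # 5 V x 2 A rated output
) -> float:
    """Rough runtime estimate for a USB power bank driving a constant load."""
    if load_w > max_output_w:
        raise ValueError("load exceeds the power bank's rated output")
    energy_wh = capacity_mah / 1000.0 * cell_voltage_v  # stored energy
    usable_wh = energy_wh * efficiency  # losses in the 5 V boost converter
    return usable_wh / load_w


# Assumed values, not measurements from this study:
# 3.7 V nominal Li-ion cell voltage, 85% efficiency, 4 W average draw.
hours = battery_runtime_hours(10_000, 3.7, 0.85, 4.0)
```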

Figure 3a shows an image taken with the fisheye camera inside a transparent hemisphere (Figure 3b) on which latitude lines were drawn at 10-degree intervals. The captured image is laid out in polar coordinates: the azimuth corresponds to the angle around the image center, and the elevation angle above the horizon corresponds to the radial distance, with equal angular steps appearing at equal intervals, that is, without angular distortion. The system continuously transmits images to the Firebase database using Python code on the Raspberry Pi. Firebase is a mobile and web application development platform; its Realtime Database stores data as JSON in a NoSQL cloud database and synchronizes it with connected clients in real time.
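Because the image is laid out in polar coordinates, a pixel can be converted to azimuth and elevation angles directly. The sketch assumes an equidistant fisheye model (radial distance proportional to the angle from the zenith), a calibrated image center `(cx, cy)`, and a hypothetical parameter `r_horizon` for the pixel radius of the 0-degree elevation circle; for a 220-degree lens, pixels beyond that circle map to negative elevations down to about -20 degrees. Whether east appears left or right of north depends on the sensor orientation, so the hypothetical `mirrored` flag should be checked against a known landmark.

```python
import math
from typing import Tuple


def pixel_to_angles(
    x: float,
    y: float,
    cx: float,
    cy: float,
    r_horizon: float,
    north_offset_deg: float = 0.0,
    mirrored: bool = False,
) -> Tuple[float, float]:
    """Convert a pixel of an upward-looking equidistant fisheye image to
    (azimuth, elevation) in degrees, azimuth clockwise from north.

    Assumptions (not from the original text): equidistant projection;
    image x grows rightward and y downward; north lies straight up from the
    center, rotated clockwise by north_offset_deg if the device is not
    aligned with north.
    """
    dx = x - cx
    dy = cy - y  # flip so that "up" in the image is positive
    if mirrored:
        dx = -dx  # east appears to the left of north
    r = math.hypot(dx, dy)
    zenith_deg = 90.0 * r / r_horizon
    elevation_deg = 90.0 - zenith_deg  # negative outside the horizon circle
    azimuth_deg = (math.degrees(math.atan2(dx, dy)) + north_offset_deg) % 360.0
    return azimuth_deg, elevation_deg
```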

3.2. Deep-Learning-Based Fire Image Detection and Location Tracking

The main purpose of this study is to estimate the location of a detected fire on a map, but detecting the fire reliably is an equally important prerequisite. The desktop computer accesses the images that the Raspberry Pi sends to the Firebase database and performs real-time forest fire detection. To recognize fire accurately in the images, You Only Look Once version 4 (YOLO v4), a deep-learning-based object detection technique, was applied. YOLO v4 consists of three parts: backbone, neck, and head [31]. The backbone is a pretrained convolutional neural network (CNN) that serves as a feature extraction network, computing feature maps from the input images. The neck, which consists of a spatial pyramid pooling (SPP) module and a path aggregation network (PAN), connects the backbone and the head: it concatenates feature maps from different layers of the backbone and passes them to the head, which processes the aggregated features to predict bounding boxes and classify the objects. In this study, a pretrained CNN was used as the base network, and transfer learning was performed to configure the YOLO v4 network for a new fire data set. For a data set of about 2500 fire images, rectangular regions of interest (ROIs) were labeled for object detection, and a custom YOLO v4 detection network was created.
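The paper does not state which toolchain was used for labeling and training. If the labeled ROIs were stored in the Darknet text format commonly used with YOLO, with one line per box giving the class index and the box center, width, and height normalized by the image size, a pixel rectangle would be converted as in this sketch. The function name `roi_to_yolo_label` and its argument order are illustrative.

```python
def roi_to_yolo_label(
    class_id: int,
    x_min: float,
    y_min: float,
    x_max: float,
    y_max: float,
    img_w: int,
    img_h: int,
) -> str:
    """Convert a pixel-space rectangle into a Darknet-style YOLO label line:
    '<class> <x_center> <y_center> <width> <height>', all normalized to [0, 1].
    """
    if not (0 <= x_min < x_max <= img_w and 0 <= y_min < y_max <= img_h):
        raise ValueError("ROI must lie inside the image and have positive size")
    xc = (x_min + x_max) / 2.0 / img_w  # box center, as a fraction of width
    yc = (y_min + y_max) / 2.0 / img_h  # box center, as a fraction of height
    w = (x_max - x_min) / img_w
    h = (y_max - y_min) / img_h
    return f"{class_id} {xc:.6f} {yc:.6f} {w:.6f} {h:.6f}"
```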

Figure 4a shows an image of a real fire and red objects taken with a fisheye lens; the detector distinguished the fire from the red objects.

Figure 4b shows an image taken in a relatively dark environment, where the fire was distinguished from bright lights. Detection can be difficult when an object occupies only a few pixels, and objects are compressed in polar images. Therefore, for effective detection and location estimation, the polar images were converted into panoramic images before fire detection. The center coordinates of each detected bounding box were then extracted and used to calculate the geographic location of the fire.
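The polar-to-panorama conversion can be sketched as nearest-neighbor resampling with NumPy, assuming an equidistant layout and a calibrated image center. The panorama size and the -20-degree lower elevation limit (for the 220-degree lens) are assumptions, and both functions are hypothetical. `panorama_to_angles` maps a panorama pixel, such as the center of a detected bounding box, back to azimuth and elevation.

```python
import numpy as np


def unwrap_polar(
    img: np.ndarray,
    cx: float,
    cy: float,
    r_horizon: float,
    out_w: int = 1440,
    out_h: int = 360,
    min_elev_deg: float = -20.0,
) -> np.ndarray:
    """Resample an upward-looking equidistant fisheye image into a panorama.

    Columns span azimuth 0..360 deg (clockwise from image "up"); rows span
    elevation from 90 deg (top row) down to min_elev_deg (bottom row).
    """
    az = np.linspace(0.0, 360.0, out_w, endpoint=False)
    el = np.linspace(90.0, min_elev_deg, out_h)
    az_grid, el_grid = np.meshgrid(np.radians(az), el)
    r = (90.0 - el_grid) / 90.0 * r_horizon  # equidistant model
    xs = np.rint(cx + r * np.sin(az_grid)).astype(int)
    ys = np.rint(cy - r * np.cos(az_grid)).astype(int)
    h, w = img.shape[:2]
    valid = (xs >= 0) & (xs < w) & (ys >= 0) & (ys < h)
    pano = np.zeros((out_h, out_w) + img.shape[2:], dtype=img.dtype)
    pano[valid] = img[ys[valid], xs[valid]]  # nearest-neighbor lookup
    return pano


def panorama_to_angles(u, v, out_w=1440, out_h=360, min_elev_deg=-20.0):
    """Map panorama pixel (column u, row v) back to (azimuth, elevation) in degrees."""
    azimuth = u * 360.0 / out_w
    elevation = 90.0 - v * (90.0 - min_elev_deg) / (out_h - 1)
    return azimuth, elevation
```

Because each panorama column corresponds to a fixed azimuth and each row to a fixed elevation, a bounding-box center reads off both angles through a linear mapping.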

The purpose of this study is to specify the location of the fire point on a map, which first requires the location of the system itself; the location of the installed system was measured with GPS. Python code was written to calculate the azimuth angle from the center of the polar image to the center of the detected fire, together with the elevation angle of the fire. The azimuth angle gives the direction of the fire, but without the elevation angle and the topography of the target area, the distance from the system to the fire cannot be determined. In this study, a DSM representing the height of the surrounding terrain was used to calculate the distance to the fire point.

Figure 5a shows an example contour map extracted from the DSM, in which forest fires are assumed to have broken out at two points. If the azimuth calculated from the polar image (Figure 5b) and the location of the system are known, the fire lies somewhere along the yellow line shown in Figure 5a.

Figure 5c shows cross-sections from the observation point where the camera is installed to forest fires A and B. Given the terrain height along each cross-section and the elevation angle obtained from the polar image, the distance to each fire can be calculated. The blue semicircle shows the 180-degree angle of view of the fisheye camera, and the orange sector shows the 90-degree angle of view of a normal camera. A normal camera pointed vertically upward captures only the sky, whereas a fisheye camera, with its much wider angle of view, captures all directions. The distance estimate is more accurate where the terrain elevation changes strongly and more error-prone where it changes little. Here, error does not mean an error in the direction from the device to the fire, but an error in the distance between the two, that is, a position error caused by a distance error. For example, on flat land, near and far points in the same direction appear at almost the same elevation angle and therefore at almost the same point in the polar image. Where the terrain relief is large, by contrast, the elevation angles differ markedly, so the location of the fire can be calculated accurately. This error is fundamentally difficult to eliminate with a single device, but it is not a major problem in extensive mountainous terrain, the main target of this study. Another type of error arises from improper installation. To minimize it, the fisheye camera should be installed with its optical axis pointing vertically upward (i.e., the device should be leveled), and north should be identified accurately before installation.
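The cross-section calculation described above can be sketched as a ray march over the DSM: starting at the camera, step outward along the azimuth and return the first horizontal distance at which the terrain rises to or above the line of sight. This is a minimal sketch, not the authors' code. It assumes a north-up DSM array in meters with square cells, nearest-cell sampling, no Earth-curvature correction, and a hypothetical `cam_height` parameter for the lens height above the DSM surface (for example, the measured tripod height).

```python
import math
from typing import Optional

import numpy as np


def locate_on_dsm(
    dsm: np.ndarray,
    cell_size: float,
    cam_row: int,
    cam_col: int,
    cam_height: float,
    azimuth_deg: float,
    elevation_deg: float,
    max_dist: float = 2000.0,
    step: Optional[float] = None,
) -> Optional[float]:
    """Return the horizontal distance (m) at which the viewing ray first meets
    the terrain, or None if the ray leaves the DSM without hitting it.

    dsm is a north-up grid (row 0 = north) of surface heights in meters.
    """
    step = step or cell_size / 2.0
    z0 = dsm[cam_row, cam_col] + cam_height  # lens height above the datum
    az = math.radians(azimuth_deg)
    tan_el = math.tan(math.radians(elevation_deg))
    d = step
    while d <= max_dist:
        east, north = d * math.sin(az), d * math.cos(az)
        col = int(round(cam_col + east / cell_size))
        row = int(round(cam_row - north / cell_size))
        if not (0 <= row < dsm.shape[0] and 0 <= col < dsm.shape[1]):
            return None
        ray_z = z0 + d * tan_el  # height of the line of sight at distance d
        if dsm[row, col] >= ray_z:
            return d  # first sample where the terrain reaches the ray
        d += step
    return None
```

On gently sloping terrain the ray and the ground meet at a shallow angle, so a small angle error shifts the hit point a long way; this is the distance error the paragraph above describes.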

4. Results

The forest fire detection system developed in this study was applied to Mt. Bukjeong near the Samcheok Campus of Kangwon National University. The target area is about 194,000 m², and the device was installed at a location (129.1660° E, 37.4518° N) that GIS analysis identified as having a wide view.

Figure 6 shows the result of analyzing the visible area using viewshed analysis in QGIS to select a suitable area to install the system. Viewshed analysis is an algorithm that can determine the visible area from a given location by considering the elevation of each point from DSM data.

Figure 6a is the result of analyzing the visibility index using DSM in the study area. A point with a higher visibility index value means a point where a wider area is visible or is visible from many points. The place marked with a red circle is the point where the system is installed, and it is a flat point with a high visibility index and good accessibility.
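A viewshed can be computed by testing the line of sight from the observer to every DSM cell, and the visibility index can then be approximated as the fraction of cells that are visible. The sketch below is a brute-force illustration, not the algorithm used by the QGIS tools in this study: it assumes square cells, nearest-cell sampling along each sight line, and a hypothetical 1.5 m observer height. It runs in roughly cubic time in the grid width, so it suits only small grids.

```python
import math

import numpy as np


def viewshed(dsm: np.ndarray, row: int, col: int, observer_height: float = 1.5) -> np.ndarray:
    """Boolean grid of cells visible from (row, col) by line-of-sight tests.

    A target is visible if no intermediate sample on the sight line has a
    larger elevation-angle tangent (height gain per unit distance) than the
    target itself.
    """
    n_rows, n_cols = dsm.shape
    z0 = dsm[row, col] + observer_height
    visible = np.zeros(dsm.shape, dtype=bool)
    visible[row, col] = True
    for r in range(n_rows):
        for c in range(n_cols):
            dr, dc = r - row, c - col
            dist = math.hypot(dr, dc)
            if dist == 0:
                continue
            target_slope = (dsm[r, c] - z0) / dist
            n_steps = int(math.ceil(dist))
            blocked = False
            for k in range(1, n_steps):  # sample roughly once per cell
                t = k / n_steps
                rr, cc = int(round(row + t * dr)), int(round(col + t * dc))
                if (rr, cc) in ((r, c), (row, col)):
                    continue
                if (dsm[rr, cc] - z0) / (t * dist) > target_slope:
                    blocked = True
                    break
            visible[r, c] = not blocked
    return visible


def visibility_index(dsm: np.ndarray, row: int, col: int, observer_height: float = 1.5) -> float:
    """Fraction of the grid visible from (row, col): a simple proxy for the index."""
    return float(viewshed(dsm, row, col, observer_height).mean())
```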

Figure 6b shows the result of the viewshed analysis at the installation point; the shaded areas are predicted to be visible from that point. By contrast, only narrow areas are visible from the red triangle point, which has a low visibility index, as shown in Figure 6c.

Figure 7a shows a picture of the developed system installed in the study area. The system was installed after leveling it using a tripod and checking the north direction. Since it is important to know the height of the observation system to estimate the location of the forest fire, the height of the tripod was also measured.

Figure 7b shows an example of an image taken with the system.

Figure 7c,d present images taken through the camera of a Samsung Galaxy Note 10 with a horizontal angle of view of 61.5° and a vertical angle of view of 44.4°.

Figure 7c shows the case in which the camera faces forward, and Figure 7d the case in which it points vertically upward. Because the angle of view of a normal camera is narrow, it captures a much smaller area than the fisheye camera.

Figure 8a shows a subset of the images captured continuously during the day. The location of the moving person was marked with a red point, which was then detected on the assumption that it was a fire. Besides avoiding the safety risk of a real fire, this setup made it possible to verify the accuracy of the position estimation. Although the experiment detected a moving person, the same method can be applied to an actual fire. The azimuth and elevation angles from the center of each image to the red point (where the person was located) are given in Table 1. For verification, the person's location was recorded with GPS; the GPS location at the time each image was taken is shown in Figure 9a as a yellow point, and the location estimated from the polar image and the DSM is shown as a white point. The analysis points were about 25.0 m from the observation point on average, and the mean positional error between the GPS positions and the positions predicted from the polar images was about 0.97 m. Although the estimated locations deviate slightly from the GPS locations, the results confirm that a reasonable estimate is possible.
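The positional error reported here is the mean distance between paired GPS fixes and image-based estimates. A minimal sketch of that metric follows, assuming both are given as latitude/longitude pairs and using the haversine formula on a spherical Earth with a mean radius of 6,371 km; the function names are hypothetical, and no data from Table 1 are reproduced.

```python
import math
from typing import Sequence, Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; adequate at these short ranges


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in meters between two points (spherical model)."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


def mean_position_error(
    gps: Sequence[Tuple[float, float]],
    estimated: Sequence[Tuple[float, float]],
) -> float:
    """Mean distance (m) between paired (lat, lon) GPS fixes and estimates."""
    if len(gps) != len(estimated) or not gps:
        raise ValueError("need equally long, non-empty point lists")
    errors = [haversine_m(a[0], a[1], b[0], b[1]) for a, b in zip(gps, estimated)]
    return sum(errors) / len(errors)
```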

Figure 8b shows a subset of the images captured continuously at night, in which the subject is a person holding a flashlight. At night, lights from downtown can be confused with the flashlight, but in this experiment only the location of the flashlight was used for location estimation. The object detection method applied in this study can distinguish between fire and other lights even in a dark environment. The analysis assumed that the bright point was where the fire occurred, and Table 1 gives the azimuth and elevation angles from the center of each image to the bright point. In the night experiment, too, the location of the person holding the flashlight was recorded with GPS. The GPS locations are shown in Figure 9b as green points, and the locations estimated from the images and the DSM are shown as white points. The analysis points were about 26.8 m from the observation point on average, and the mean positional error between the GPS positions and the positions predicted from the polar images was about 1.32 m. As in the daytime experiment, reasonable location estimation was possible at night.

Figure 10a,b shows cross-sections up to the detected fire point for six images taken during the day and at night, respectively. Abrupt height changes in the cross-sections occur where trees raise the DSM values. The red straight line is drawn along the elevation angle, and its intersection with the green line (terrain) is the point where the fire broke out. Since the location of the system is known and the azimuth and distance to the fire can be calculated, the location of the forest fire can be specified on a map. The result, shown in Figure 9, records that the subject moved from east to west along the trail. Where the terrain height changes little, the distance estimation error can increase if the camera is not level; in this study, the device was therefore leveled, fixed, and oriented to north with a compass.
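Once the terrain intersection gives the horizontal distance, the map position follows from the GPS position of the system and the azimuth. A minimal sketch, assuming a local flat-Earth (equirectangular) approximation that is adequate over tens to hundreds of meters; `offset_position` is a hypothetical helper.

```python
import math
from typing import Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def offset_position(
    lat_deg: float, lon_deg: float, azimuth_deg: float, dist_m: float
) -> Tuple[float, float]:
    """Move dist_m meters from (lat, lon) along an azimuth measured clockwise
    from north, using a local equirectangular approximation."""
    az = math.radians(azimuth_deg)
    d_north = dist_m * math.cos(az)
    d_east = dist_m * math.sin(az)
    lat = lat_deg + math.degrees(d_north / EARTH_RADIUS_M)
    # A degree of longitude shrinks with cos(latitude).
    lon = lon_deg + math.degrees(d_east / (EARTH_RADIUS_M * math.cos(math.radians(lat_deg))))
    return lat, lon
```

For example, `offset_position(37.4518, 129.1660, azimuth_deg, dist_m)` would place a detection relative to the installation point reported in this study, given an azimuth from the polar image and a distance from the DSM intersection.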

5. Discussion

In this study, a system that can continuously capture polar images and detect fires was developed by combining a fisheye camera and a Raspberry Pi. A technique was developed to calculate the azimuth and elevation angles by detecting the point at which the fire occurred in the polar image, and to estimate the location on the map by using it together with the DSM, which represents the topography. The technology developed in this study will be useful for the early suppression of fires by detecting and locating fires at an early stage. However, there are still issues that need to be improved, which are summarized as follows.

The fisheye lens used in this study directly produces polar images, and fire detection and the azimuth and elevation angle calculations were applied to these images. However, objects closer to the horizon, that is, nearer the edge of the polar image, appear compressed. Accurate fire detection in such images therefore calls for the highest practical image resolution, which means a more expensive camera and larger image files to transmit. In particular, a distant fire appears very small, so it may be difficult both to detect and to locate precisely.

In this study, the Raspberry Pi's built-in Wi-Fi was connected to a smartphone hotspot to transmit images. However, it may be difficult to stay online in the mountainous areas where forest fires are most likely to occur, so network access needs more careful consideration for commercialization. The same applies to the power supply: batteries reduce installation cost, whereas wired power is somewhat more costly but supplies power continuously, and the trade-off between the two needs to be evaluated.

In this study, a DSM made from drone images was used for location estimation. Because the study area has relatively few trees, a fairly detailed DSM could be created. However, creating a detailed DSM will be difficult in the densely forested mountainous areas where field application is actually needed, so for general use it would be more realistic to use an open DEM or DSM with somewhat lower resolution. Because a lower-resolution DSM increases location errors, an optimal choice must be made between estimating a relatively accurate location with a high-quality DSM and making a rough estimate at lower cost.

In this study, a forest fire was detected and localized with one fisheye lens, but localization could be more accurate if multiple fisheye cameras captured the same fire. This is similar in spirit to GPS, which combines measurements from multiple satellites to estimate a position. However, one advantage of this study is that a single device covers all directions, and installing multiple devices in a small area runs counter to that advantage. The optimal installation method should therefore balance cost and accuracy, as mentioned above. In addition, because the system observes a hemisphere with one fisheye lens facing vertically upward, it cannot observe areas lower than itself. In practice, it is sometimes necessary to observe lower places by installing the system at the top or middle of a mountain; in that case, two fisheye lenses should be combined in one system to monitor all directions as a full sphere.
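With two or more devices, the azimuths alone can locate a fire by intersecting the bearing lines, without relying on the DSM. This is plain bearing intersection (triangulation), shown as an illustration rather than a method evaluated in this study. It assumes device positions already converted to a local east/north frame in meters, and `intersect_bearings` is hypothetical.

```python
import math
from typing import Optional, Tuple


def intersect_bearings(
    p1: Tuple[float, float], az1_deg: float,
    p2: Tuple[float, float], az2_deg: float,
) -> Optional[Tuple[float, float]]:
    """Intersect two azimuth rays in a local (east, north) frame in meters.

    Returns the intersection point, or None if the rays are (nearly)
    parallel or the intersection lies behind either device.
    """
    d1 = (math.sin(math.radians(az1_deg)), math.cos(math.radians(az1_deg)))
    d2 = (math.sin(math.radians(az2_deg)), math.cos(math.radians(az2_deg)))
    # Solve p1 + t1*d1 = p2 + t2*d2 for (t1, t2) with Cramer's rule.
    denom = d1[0] * (-d2[1]) - (-d2[0]) * d1[1]
    if abs(denom) < 1e-9:
        return None
    bx, by = p2[0] - p1[0], p2[1] - p1[1]
    t1 = (bx * (-d2[1]) - (-d2[0]) * by) / denom
    t2 = (d1[0] * by - d1[1] * bx) / denom
    if t1 < 0 or t2 < 0:
        return None  # the rays only meet behind a device
    return p1[0] + t1 * d1[0], p1[1] + t1 * d1[1]
```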

In this study, the central point was extracted from the fire area and the location of the central point was calculated, but an actual fire can spread over a wide area over time. In the future, we plan to conduct additional research so that the area where the fire occurred can be displayed as an area rather than a point on the map.

6. Conclusions

Forest fires are catastrophic disasters that cause enormous damage worldwide. Depending on how quickly they are detected and dealt with, these vast losses can be reduced. In order to detect forest fires, various studies have been conducted with methods using satellite images and cameras or sensors, but there are few studies on quickly detecting forest fires while monitoring a wide area. In this study, a fisheye camera capable of capturing a wide area in all directions was used, and images captured through Raspberry Pi were continuously transmitted to a database and a function capable of detecting fire in the image was implemented. In addition, the location of the fire on the map was estimated by applying DSM-based GIS analysis. As a result of conducting an experiment on Mt. Bukjeong near Kangwon National University Samcheok Campus, it was confirmed that the position of a moving object can be reasonably estimated with the developed technology. Since it was not possible to experiment with real fire, the point where the target was located was marked with a virtual red color point during the day. At night, a flashlight was assumed to be a fire and detected. If the technology developed in this study is commercialized, it will be possible to quickly detect fire and notify when a fire occurs by monitoring a wide area at a relatively low cost. However, because a large area is displayed in one image, it is difficult to detect a fire that is too far away. It is also necessary to improve the resolution of the image and the accuracy of tracking the location. Since the cost may increase for accurate predictions, an appropriate selection must consider cost and accuracy and, for this purpose, it is necessary to conduct experiments in more diverse environments. In this study, the image was transmitted to the server and the location of the fire was estimated through GIS analysis. The developed device connects to the server, but cannot share data by building a WSN among the devices. 
In an environment where it is difficult to access the Internet, there is a need to share data between devices, so we plan to conduct additional research by applying WSN in the future. The amount of data transmission can be reduced if fire detection is performed by the device and only the result is transmitted, without transmitting the images. In addition, if combined with various sensors, such as a smoke sensor, heat sensor, and thermal infrared sensor, it is expected that more intuitive fire detection around the device will be possible. It is thought that if the accuracy and practicality are improved by additional research in the future, this will greatly contribute to the field of forest fire detection and prevention.
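Transmitting only detection results instead of images could look like the sketch below, where each detection becomes a JSON message of a few dozen bytes. The field names and the `detection_message` helper are hypothetical; the design assumes the server already knows each device's position and runs the DSM-based localization itself, so the device only needs to send the angles.

```python
import json
import time
from typing import Optional


def detection_message(
    device_id: str,
    azimuth_deg: float,
    elevation_deg: float,
    score: float,
    timestamp: Optional[float] = None,
) -> str:
    """Serialize one on-device detection as a compact JSON string."""
    payload = {
        "device": device_id,
        "t": round(time.time() if timestamp is None else timestamp, 3),
        "az": round(azimuth_deg, 2),
        "el": round(elevation_deg, 2),
        "score": round(score, 3),  # detector confidence
    }
    # Compact separators drop the spaces json.dumps adds by default.
    return json.dumps(payload, separators=(",", ":"))
```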