DialogCIN: Contextual Inference Networks for Emotional Dialogue Generation

Abstract

1. Introduction

- We constructed LifeDialog, a Chinese dialogue dataset annotated with emotion, sentence type, and topic features. The dataset was annotated manually to reduce noise and to provide a high-quality resource for Chinese dialogue generation research.

- We presented DialogCIN, a contextual inference network that generates emotionally rich responses by modeling the conversation from a cognitive perspective at both the global level and the speaker level.

- We proposed an “Inference Module” that emulates human cognitive reasoning by iteratively reasoning over the dialogue context acquired at each level. This iteration lets the model build a fuller understanding of the logical information underlying the dialogue.

- We compared DialogCIN with the baseline models on the LifeDialog dataset. Among the compared models, DialogCIN obtained the lowest perplexity, the highest BLEU and Distinct scores, and the highest human ratings.

2. Related Work

2.1. Open Domain Dialogue Generation

2.2. Emotional Dialog Generation

2.3. Datasets for Dialogue Generation

3. Proposed Method

3.1. Task Formulation

3.2. Inference-Based Encoder

3.2.1. Utterance Features

3.2.2. Representation Unit

3.2.3. Understand Unit

3.2.4. Emotion Predictor

3.3. Emotion-Aware Decoder

3.4. Training Objective

4. Proposed Dataset

4.1. Data Collection

4.2. Data Processing

4.3. Data Features

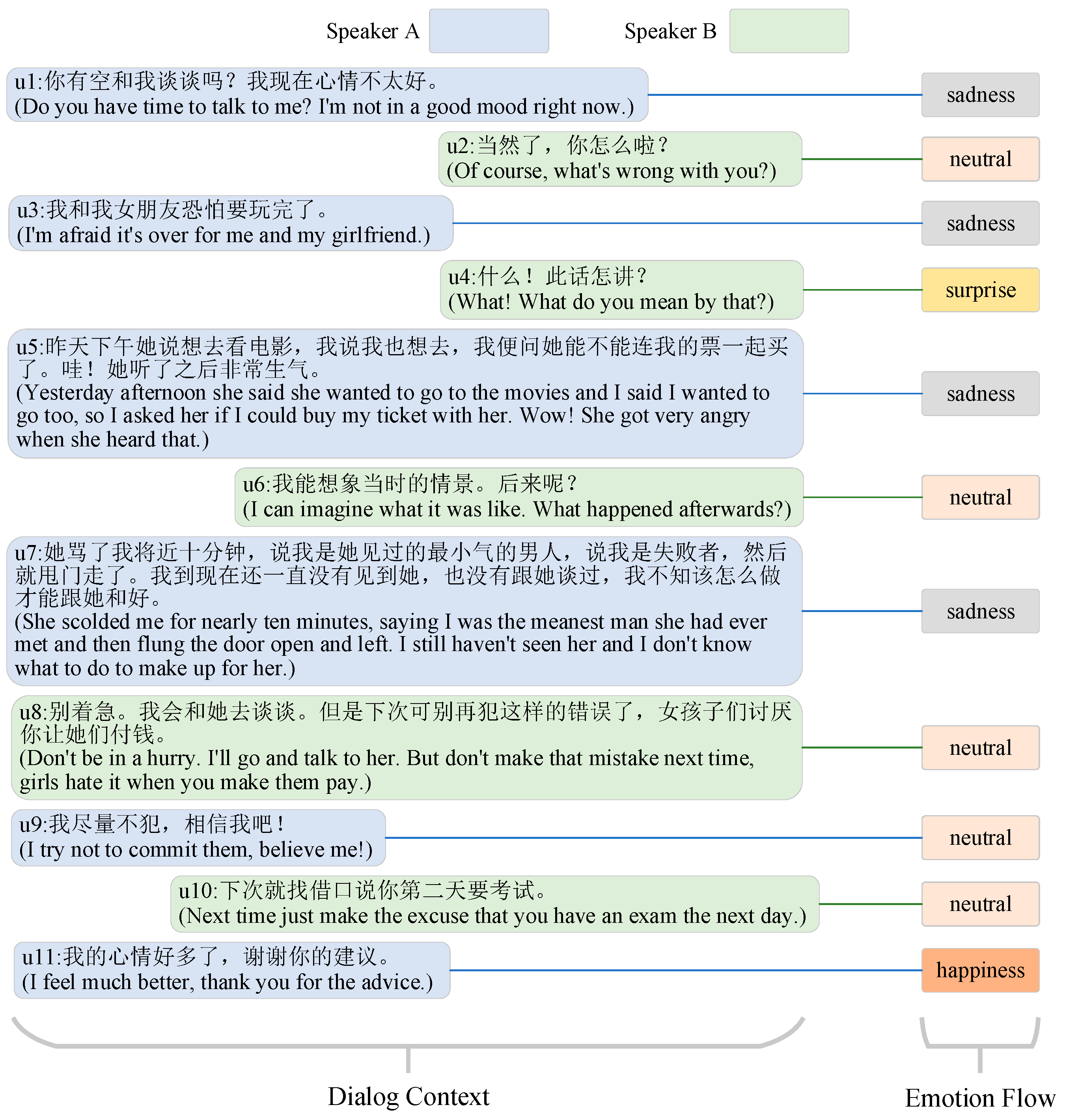

- We enriched the corpus with three kinds of annotation: the emotion and the sentence type of each utterance, and the topic of each dialogue. These features give a richer semantic representation of the dialogues.

- We manually annotated seven common emotions, such as happiness, sadness, and anger, in the LifeDialog dataset. Manual annotation ensures label quality and also makes the dataset usable for related tasks such as emotion recognition in dialogues.

- The LifeDialog dataset covers dialogues on a diverse range of topics, including daily life, the workplace, and travel. This breadth distinguishes it from domain-specific dialogue datasets, such as those built from medical or legal conversations, and makes it applicable to a wide range of natural language processing tasks.

- In contrast to the LCCC dataset and other Chinese dialogue datasets that are derived primarily from posts and replies on social media platforms, the LifeDialog dataset consists exclusively of manually written and carefully selected dialogues. This curation is intended to give the dataset higher grammatical quality and to capture the characteristics of real-life conversations more accurately.

5. Experiments

5.1. Experimental Setting

5.2. Comparison Experiment and Result Analysis

5.3. Evaluation Metrics

5.3.1. Automatic Metrics

5.3.2. Human Evaluation

5.4. Results and Analysis

5.4.1. Comparison Experiment and Result Analysis

5.4.2. Human Evaluation Results

5.4.3. Ablation Experiment and Result Analysis

- Effect of Understand Unit

- Effect of Representation Unit

- Effect of Different Level

5.4.4. Parameter Experiment and Result Analysis

5.4.5. Case Study

- Case 1

- Case 2

- Case 3

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Split | Quantity |
|---|---|
| Train | 8039 |
| Dev | 1026 |
| Test | 980 |
| Total | 10,045 |

| Statistic | Value |
|---|---|
| Total Dialogues | 10,045 |
| Average Utterances Per Dialogue | 7.1 |
| Average Tokens Per Dialogue | 112.7 |
| Average Tokens Per Utterance | 16.0 |
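
As a quick sanity check on the dataset tables above, the following minimal sketch recomputes the total and the split proportions, and compares the reported average tokens per dialogue with the product of the other two averages. The variable names are illustrative and are not taken from the paper; the numbers are copied from the tables.

```python
# Split sizes copied from the split table above.
splits = {"Train": 8039, "Dev": 1026, "Test": 980}

# Total number of dialogues and the share of each split.
total = sum(splits.values())
proportions = {name: round(count / total, 3) for name, count in splits.items()}

# Averages copied from the statistics table above.
avg_utterances_per_dialogue = 7.1
avg_tokens_per_utterance = 16.0
reported_tokens_per_dialogue = 112.7

# Product of two rounded averages; it only approximates the reported value.
approx_tokens_per_dialogue = avg_utterances_per_dialogue * avg_tokens_per_utterance

print(total, proportions)
print(approx_tokens_per_dialogue, reported_tokens_per_dialogue)
```

The three splits sum to the reported total, and the split is roughly 80/10/10. The small gap between the product of the averages and the reported tokens per dialogue is consistent with rounding of the published averages.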

| Models | PPL | BLEU | Distinct-1 | Distinct-2 |
|---|---|---|---|---|
| WSeq | 95.78 | 0.0096 | 0.0366 | 0.179 |
| HRED | 101.53 | 0.0084 | 0.0379 | 0.1871 |
| DSHRED | 97.70 | 0.01 | 0.0379 | 0.1929 |
| ReCoSa | 98.41 | 0.0099 | 0.0346 | 0.1868 |
| DialogCIN | 91.36 | 0.0104 | 0.0395 | 0.2151 |
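
For readers unfamiliar with the columns above, the sketch below shows the standard definitions of Distinct-n (unique n-grams divided by total n-grams) and perplexity (the exponential of the mean negative log-likelihood per token). It assumes corpus-level Distinct-n over pre-tokenized responses and natural-log token probabilities. The tokenization and exact evaluation scripts used by the authors are not given in this section, so the function names and the toy inputs are illustrative only.

```python
import math


def distinct_n(responses, n):
    """Corpus-level Distinct-n: unique n-grams / total n-grams."""
    ngrams = []
    for tokens in responses:
        # Collect every contiguous n-gram of this response.
        ngrams.extend(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))
    return len(set(ngrams)) / len(ngrams) if ngrams else 0.0


def perplexity(token_log_probs):
    """exp(mean negative log-likelihood), with natural-log probabilities."""
    return math.exp(-sum(token_log_probs) / len(token_log_probs))


# Toy, hand-made inputs (not data from the paper).
responses = [["好", "的"], ["好", "的", "呀"]]
print(distinct_n(responses, 1))  # 3 unique unigrams out of 5
print(distinct_n(responses, 2))  # 2 unique bigrams out of 3
print(perplexity([math.log(0.25)] * 4))  # uniform p = 0.25 per token
```

Lower perplexity and higher Distinct-n are better, which is the direction in which DialogCIN differs from the baselines in the table.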

| Model | Semantic Coherence | Emotional Appropriateness |
|---|---|---|
| WSeq | 2.78 | 2.81 |
| HRED | 2.97 | 3.05 |
| DSHRED | 3.13 | 3.17 |
| ReCoSa | 3.32 | 3.41 |
| DialogCIN | 3.78 | 3.65 |

| Part | Representation (Context) | Representation (Speaker) | Understand (Context) | Understand (Speaker) | PPL | BLEU | D-1 | D-2 |
|---|---|---|---|---|---|---|---|---|
| 1 | √ | √ | √ | √ | 91.36 | 0.0104 | 0.0395 | 0.2151 |
| 2 | √ | √ | √ | × | 90.43 | 0.0094 | 0.0384 | 0.2094 |
| 2 | √ | √ | × | √ | 93.21 | 0.0093 | 0.0379 | 0.2063 |
| 3 | √ | √ | × | × | 94.93 | 0.0091 | 0.0363 | 0.1972 |
| 3 | √ | × | × | × | 99.91 | 0.0087 | 0.0349 | 0.1926 |
| 3 | × | √ | × | × | 98.79 | 0.0089 | 0.0344 | 0.1898 |
| 3 | × | × | × | × | 103.15 | 0.0079 | 0.0310 | 0.1644 |
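
To make the ablation rows easier to compare, the following minimal sketch expresses PPL and Distinct-2 of a few variants as percentage changes relative to the full model. The numbers are copied from the ablation table above; the variant labels and the helper `relative_change` are hypothetical shorthand introduced here, not names used by the authors.

```python
def relative_change(baseline: float, value: float) -> float:
    """Percentage change of `value` relative to `baseline`."""
    return 100.0 * (value - baseline) / baseline


# (PPL, Distinct-2) of the full model, copied from the table.
full_model = (91.36, 0.2151)

# Hypothetical labels for selected ablation rows: (PPL, Distinct-2).
variants = {
    "no speaker-level Understand": (90.43, 0.2094),
    "no context-level Understand": (93.21, 0.2063),
    "no Understand Unit": (94.93, 0.1972),
    "no units at all": (103.15, 0.1644),
}

# Percentage change in PPL and Distinct-2 for each variant.
report = {
    name: (
        round(relative_change(full_model[0], ppl), 1),
        round(relative_change(full_model[1], d2), 1),
    )
    for name, (ppl, d2) in variants.items()
}

for name, (ppl_change, d2_change) in report.items():
    print(f"{name}: PPL {ppl_change:+.1f}%, D-2 {d2_change:+.1f}%")
```

Note that, according to the table, removing only the speaker-level Understand component slightly lowers PPL while BLEU and both Distinct scores decrease, so PPL alone does not rank the variants.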

| N_Modules | PPL | BLEU | Distinct-1 | Distinct-2 |
|---|---|---|---|---|
| 1 | 93.42 | 0.0098 | 0.0367 | 0.2068 |
| 2 | 91.36 | 0.0104 | 0.0395 | 0.2151 |
| 3 | 98.85 | 0.0095 | 0.0332 | 0.1942 |
| 4 | 106.25 | 0.0097 | 0.0313 | 0.1931 |

| Case | Source | Utterance |
|---|---|---|
| Case 1 | Context | A:我听说你得到了一个去欧洲培训半年的机会,是真的吗? (A: I heard you were offered a six-month training trip to Europe. Is that true?) |
| Case 1 | Context | B:是真的,我下个月14号就要动身。 (B: It’s true. I’m leaving on the 14th of next month.) |
| Case 1 | WSeq | 好的。(OK.) |
| Case 1 | HRED | 这个好。(This is good.) |
| Case 1 | DSHRED | 不错不错。(Nice, nice.) |
| Case 1 | ReCoSa | 我也想有这种机会。(I would like to have that opportunity too.) |
| Case 1 | DialogCIN | 祝贺你!得到这么好的机会。 (Congratulations on getting such a great opportunity!) |
| Case 2 | Context | A:生日快乐,林小姐。这是我送给您的礼物。 (A: Happy birthday, Miss Lin. This is my gift to you.) |
| Case 2 | Context | B:哦,谢谢!您太好了。啊!是一个瓷瓶。 (B: Oh, thank you! You are too kind. Ah! It’s a porcelain vase.) |
| Case 2 | WSeq | 是吗?(Is it?) |
| Case 2 | HRED | 我也是这么想的。(That’s what I thought too.) |
| Case 2 | DSHRED | 这和玉瓶哪个好看?(Which looks better, this or the jade vase?) |
| Case 2 | ReCoSa | 我也喜欢,它很漂亮。(I like it too, it’s beautiful.) |
| Case 2 | DialogCIN | 这是我的荣幸,很高兴你喜欢。 (It’s my pleasure. I’m glad you like it.) |
| Case 3 | Context | A:怎么一副垂头丧气的样子? (A: Why do you look so downcast?) |
| Case 3 | Context | B:我正郁闷着呢,考试考砸了。 (B: I’m feeling depressed. I failed my exam.) |
| Case 3 | WSeq | 我想是的。(I think so.) |
| Case 3 | HRED | 你怎么知道的?(How do you know?) |
| Case 3 | DSHRED | 没事的。(It’s all right.) |
| Case 3 | ReCoSa | 不要伤心。(Don’t be sad.) |
| Case 3 | DialogCIN | 别这么沮丧,你可以重考嘛。 (Don’t be so discouraged. You can retake the exam.) |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lou, W.; Yang, W.; Wei, F. DialogCIN: Contextual Inference Networks for Emotional Dialogue Generation. Appl. Sci. 2023, 13, 8629. https://doi.org/10.3390/app13158629