Abstract

To summarize the status quo of human–computer interaction (HCI) design research on minimally invasive surgery robots, and to inspire in-depth design research in related fields, this study uses literature research, inductive summarization, and comparative analysis to organize the usage scenarios, users, interaction content and form, and relevant design methods of minimally invasive surgery robots into a review. It derives design requirements, a classification of interaction information, and the advantages and disadvantages of different interaction forms, and then predicts future trends in this field. The findings show that HCI design in this field displays a highly intelligent, human-centered, and multimodal development trend through the application of cutting-edge technology, taking full account of work efficiency and user needs. However, problems remain, such as the absence of guidance from a systematic user knowledge framework and incomplete design evaluation factors, which researchers in related fields need to address in the future.

1. Introduction

Surgical robots, embodying cross-disciplinary knowledge and integrating multiple high-tech elements [], are comprehensive medical devices that can reduce patient incision trauma and infection risk through precise control and the replacement of manual operations []. They provide a work mode for surgeons that reduces physical exertion and focuses on disease analysis, thereby promoting efficient surgical treatment. The application and promotion of surgical robots are a manifestation of the transformation of technological achievements such as artificial intelligence and 5G, which are in line with the concept of the Global Digital Health Strategy (2020–2024) proposed by the World Health Organization. The potential for remote operations also provides more possibilities for future global diagnostic and treatment methods [].

HCI is the interactive process between people and the digital environment, i.e., the process of information control and exchange from the user to the system []. The goal of HCI design is to provide users with experiences that align with their specific knowledge background []. With the improvement and development of design disciplines, the concept of user-centered design (UCD) [] has gradually been recognized by the industry []. HCI, as an essential component of the surgical robot [], is a channel for the surgeon to perceive, understand, and analyze feedback information, and to make situational decisions by relying on their professional abilities []. Good HCI design can improve surgical efficiency and user experience [], and is a widespread concern among researchers in relevant fields.

Minimally invasive surgery (MIS) refers to a surgical method that delivers special instruments, physical energy, or chemical agents into the human body through minor trauma or natural channels to deactivate, remove, repair, or reconstruct internal body abnormalities, deformities, or trauma for therapeutic purposes. Its outstanding feature is that it causes significantly less trauma to patients compared to traditional surgery []. Surgical robots, with their broad imaging field of view, high positioning accuracy, and remote control capabilities [], have become an effective means of assisting in performing minimally invasive surgery. Depending on the type of surgery [], MIS robots can be classified into six types—laparoscopic surgery robots, vascular interventional surgery robots, percutaneous puncture surgery robots, neurosurgical robots, natural orifice surgery robots, and orthopedic surgery robots []—as shown in Table 1.

Table 1.

Classification of minimally invasive surgery robots.

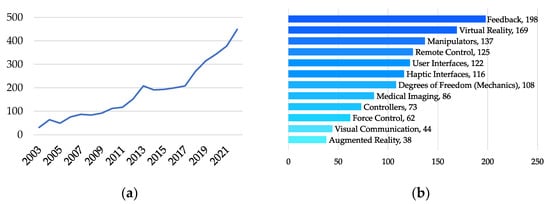

2. Methods and Materials

This study employs literature review, inductive summarization, and comparative analysis. The primary literature source is the Engineering Village database, supplemented by professional databases such as Web of Science and CNKI. Related literature published worldwide over the last 20 years was collected and analyzed from the perspective of HCI design. The subject search terms were set as (surgical robot OR robotic surgery) AND interaction, with the publication time limited to the last 20 years in all three databases. The search yielded a total of 3619 papers: 1496 from the Engineering Village database, 1980 from the Web of Science database, and 143 from the CNKI database. The results were statistically analyzed as illustrated in Figure 1. Figure 1a shows the annual count of publications over the past 20 years, revealing a year-by-year increasing trend in related research. Papers were also classified by research theme, as shown in Figure 1b; HCI design research on surgical robots mainly focuses on force feedback, virtual reality, manipulators, and haptic interfaces. The study summarizes the workflows and usage scenarios of six types of MIS robots, four directional requirements for the HCI design of minimally invasive surgery robots, and three types of interaction information in related fields. It also comparatively analyzes 23 representative surgical robot products on the market, summarizing four commonly used HCI methods for surgical robots and their advantages and disadvantages. Starting from the perspective of HCI design, this paper organizes the related achievements using the above three methods to form a review, aiming to address the gaps and shortcomings of existing reviews in this field and to provide inspiration and guidance for subsequent design research.

Figure 1.

(a) Annual number of publications statistics; and (b) research direction: classification and statistics.
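The search string and the per-database counts reported above can be checked with a few lines of arithmetic. The following is a minimal sketch: the helper `build_query` is a hypothetical name introduced here for illustration and does not call any database API; only the counts and search terms come from the text.

```python
# Hit counts per database, as reported in the Methods text.
hits = {"Engineering Village": 1496, "Web of Science": 1980, "CNKI": 143}


def build_query(subject_terms, required_term):
    """Join subject terms with OR, then require one extra term with AND.

    Hypothetical helper for illustration; real databases each have
    their own query syntax.
    """
    return "(" + " OR ".join(subject_terms) + ") AND " + required_term


query = build_query(["surgical robot", "robotic surgery"], "interaction")
total = sum(hits.values())

# Share of the total contributed by each database, in percent.
shares = {name: round(100 * count / total, 1) for name, count in hits.items()}

print(query)
print(total)
for name, share in shares.items():
    print(f"{name}: {share}%")
```

The total reproduces the 3619 papers stated in the text, and the shares make it easy to see that Web of Science contributes slightly more than half of the corpus.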

3. HCI Design Requirements for Minimally Invasive Surgery Robots

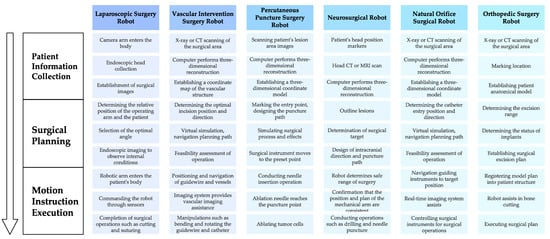

Through contextual inquiry and user group analysis, design directions in relevant fields can be analyzed. Figure 2 organizes the minimally invasive surgical procedures and usage scenarios for different types of robots, summarizing three common stages: patient information collection, surgical planning, and motion instruction execution [,,,,,,,,].

Figure 2.

Usage scenarios.

Patient information collection: Before surgery, patient information is collected through relevant equipment, and images are reconstructed and visualized in three dimensions. Moreover, surgical instrument localization can be achieved by calculating the positional relationship between the robot, the optical system, and the patient.

Surgical planning: The surgeon determines the location of the lesion and the characteristics of the surrounding tissue based on the subcutaneous image information of the patient, and plans the optimal surgical path through image-guided surgery (IGS) [] and reference point marking, providing guidance for the navigation system [].

Motion instruction execution: The surgeon controls the patient-side robot to execute surgical instructions through master devices such as joysticks []. The motion information of the master device is transmitted to the slave device after being processed by the control system, thereby driving the surgical instruments to complete various movements like punctures [].
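The processing step between master and slave devices can be illustrated with a short sketch. It assumes two common operations, motion scaling and low-pass smoothing of hand tremor, which the text does not specify; the class name `MasterSlaveMapper` and the default values of `scale` and `alpha` are hypothetical and are not taken from any product discussed here.

```python
from dataclasses import dataclass, field


@dataclass
class MasterSlaveMapper:
    """Maps master-device displacements to slave-instrument displacements.

    scale: assumed motion-scaling ratio (slave motion / master motion).
    alpha: assumed smoothing factor in (0, 1]; 1.0 disables smoothing.
    """
    scale: float = 0.2
    alpha: float = 0.5
    filtered: tuple = field(default=(0.0, 0.0, 0.0), init=False)

    def step(self, master_delta):
        # Exponential moving average damps fast, small oscillations (tremor).
        self.filtered = tuple(
            self.alpha * d + (1.0 - self.alpha) * f
            for d, f in zip(master_delta, self.filtered)
        )
        # Scale the smoothed motion down for fine instrument movement.
        return tuple(self.scale * f for f in self.filtered)


mapper = MasterSlaveMapper(scale=0.5, alpha=1.0)
print(mapper.step((2.0, 0.0, -4.0)))
```

With smoothing disabled (`alpha=1.0`) the mapper reduces to pure scaling, which makes the effect of each parameter easy to inspect in isolation.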

Robotic minimally invasive surgery is completed by multiple surgeons. The entire product service system involves three types of users: patients, primary surgeons, and assistant surgeons []. The operation of the master control system is the responsibility of the primary surgeon, who is under high work pressure. The primary surgeon needs to rely on their personal medical knowledge to analyze complex conditions, and make judgments and decisions, thereby ensuring the safety of the patient’s surgery. Therefore, in the design process of the HCI system for minimally invasive surgery robots, the goal should be to reduce the workload of doctors, assist users in building good cognitive models, and, thus, improve work efficiency and operational experience [].

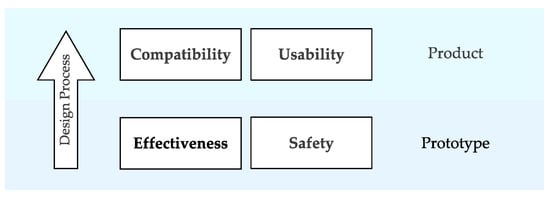

The unique application scenarios and user groups propose directional requirements for the HCI design of minimally invasive surgery robots. Based on the above analysis and taking into account existing product research in HCI, and in conjunction with the three-level standard system for related products proposed by O’Toole et al. [], as shown in Figure 3, the design requirements in four directions are summarized as follows: effectiveness, safety, compatibility, and usability.

Figure 3.

HCI design requirements.

- (1)

- Effectiveness: Effectiveness is a key indicator for measuring the clinical effect of minimally invasive surgery robots []. Good effectiveness can be reflected in the increase of the surgery success rate and operation precision or the decrease of blood loss and complications []. Traditional minimally invasive surgery may cause fatigue due to the long periods of high-intensity work, leading to a decline in operational precision and hand–eye co-ordination []. Robotic surgery itself can reduce manual operation and overcome these shortcomings. Some products further enhance the effectiveness of minimally invasive surgery robots through tactile feedback and optimized operation processes [].

- (2)

- Safety: Safety involves multiple performance indicators such as system monitoring, abnormal data handling, and device self-protection. The safety performance of the interactive system is vital to patient life and health and is a factor that must be considered in the design process []. System safety can be enhanced through methods such as fault-tolerant design, early warning design, and the control of interaction synchronization. For instance, the product can be given an auto-correction capability to avoid mistakes caused by arm tremors when operating the device []. Hardware and software redundancy can ensure that a single point of failure will not harm the patient []. In addition, the delay between the control end and the operation end should be kept within 330 ms so that remote or local control commands are transmitted to the operation end accurately and faithfully in real time [].

- (3)

- Compatibility: Compatibility refers to the degree of adaptation between the interaction system and the device operating environment and user group. To ensure the efficient progress of the surgery, the design of surgical robots must take into account the complexity of the operating environment []. On the hardware side, a small and lightweight control platform occupies less operating room space, making pre-surgery setup work easier to complete []. At the same time, during the software design process, the related needs of doctors of different age groups should be considered to provide a compatible interaction platform for different users [].

- (4)

- Usability: Usability refers to the applicability and ease of use of minimally invasive surgery robots in actual clinical applications, involving multiple evaluation indicators such as operation time, system complexity, and learning cost []. To improve the usability of the interactive system, it is necessary to reduce user cognitive load and divide interaction levels as much as possible, achieve efficiency and accuracy in information acquisition, and carry out targeted design for all common carriers used for interactive processes []. The non-overlap of visual space and operation space is a key factor affecting the usability of minimally invasive surgery robots. To ensure the smoothness and immersion of surgeons’ operations, precise spatial-mapping models should be established between the main operation hand, monitor, slave operation hand, and endoscope of the product [], and the optimal mapping ratio should be established based on minimal fatigue [].
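The delay bound mentioned under the safety requirement can be expressed as a simple gate on timestamped commands. This is a minimal sketch under stated assumptions: the function names are hypothetical, timestamps are assumed to come from synchronized clocks in milliseconds, and only the 330 ms bound is taken from the text.

```python
MAX_DELAY_MS = 330.0  # bound quoted in the safety requirement


def within_delay_budget(sent_ms, received_ms, max_delay_ms=MAX_DELAY_MS):
    """Return True if the control-to-operation delay is within the bound."""
    delay = received_ms - sent_ms
    if delay < 0:
        # A negative delay indicates unsynchronized clocks, not a fast link.
        raise ValueError("received timestamp precedes sent timestamp")
    return delay <= max_delay_ms


def split_by_delay(commands):
    """Split (name, sent_ms, received_ms) tuples into accepted and rejected."""
    accepted, rejected = [], []
    for name, sent_ms, received_ms in commands:
        target = accepted if within_delay_budget(sent_ms, received_ms) else rejected
        target.append(name)
    return accepted, rejected


log = [("advance", 1000.0, 1120.0), ("rotate", 2000.0, 2400.0)]
print(split_by_delay(log))
```

In a real system a rejected command would more likely trigger a safe-hold state than be silently dropped; the split is kept here only to show where the check sits.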

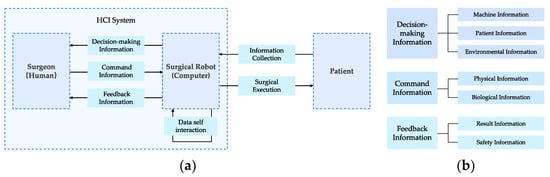

4. HCI Information Content

Sun [] has divided the HCI behavior of minimally invasive surgery robots into explicit information interaction behavior, implicit information interaction behavior, and potential information interaction behavior. Explicit information refers to the type of information that users can intuitively obtain to meet the basic needs of doctors during the surgery process; implicit interaction information refers to the information generated by data from interaction; and potential interaction information refers to the information interaction behavior that is currently unrealized but may be achieved in the future with technology development. The UCD concept in the field of design studies focuses more on the research of explicit information []. This article further divides explicit information into decision-making information, command information, and feedback information based on the interaction process and the three-level theory of product information processing proposed by Norman in emotional design [], and summarizes and sorts out the related content, as shown in Table 2.

Table 2.

HCI information content.

- (1)

- Decision-making information: Decision-making information refers to the information provided to doctors at each stage of surgery to decide the next step []. Doctors can understand patient lesions, tissue, and the equipment’s relative positions through decision-making information, forming references and a decision-making basis, and assisting in surgical protocol planning and execution. Based on the source of information, it can be divided into machine information, patient information, and environmental information. Machine information is the information of the machine equipment itself, such as the position of the mechanical arm, the operating status of the machine, and the information on the machine exterior. Patient information is mainly various postures and subcutaneous information, such as the location of lesions or vascular structures. This part of the information presents a real and accurate tissue model in the system through 3D reconstruction technology and IGS, and displays features that are not easily distinguished by the naked eye []. Environmental information mainly refers to the information content provided by assistant doctors in the surgical team. The main surgeon makes decisions by taking a holistic view and based on any additional information outside of the field of vision.

- (2)

- Command information: Command information is the information that doctors input into the minimally invasive surgery robots in the master–slave interaction framework. According to different input methods, command information can be divided into physical information and biological information []. Physical information refers to the command information input through devices such as display screens, mice, joysticks, and touch screens, which can realize automatic or semi-automatic execution []. Biological information refers to the type of information formed through input methods such as voice, vision, gestures, and touch. After the command information is input into the minimally invasive surgery robot system through sensors, it drives the mechanical arm to simulate the doctor’s actions and complete the surgery, achieving the purpose of separation, cutting, suturing, etc. [].

- (3)

- Feedback information: Feedback information is the response of the minimally invasive surgery robot system to operation instructions. It is divided into two types according to content: result feedback information and safety feedback information. The feedback of motion results requires the main operation hand and the slave mechanical arm to follow the principle of consistent motion control []. The system generates feedback based on user behavior information to help doctors judge the effect of their actions. Safety feedback indicates potential system hazards and risks, thereby helping to enhance surgical process control and safety assurance. When the data are abnormal, the system raises an alarm to prevent a larger impact [].

The flow and category of these three types of information in the HCI system are shown in Figure 4.

Figure 4.

(a) The flow in the HCI system; and (b) information category.
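The three-way classification of explicit information described above maps naturally onto a small data structure. The sketch below encodes only the categories and subcategories named in the text; the identifiers `InfoType`, `TAXONOMY`, and `parent_type` are hypothetical names chosen for illustration.

```python
from enum import Enum


class InfoType(Enum):
    DECISION = "decision-making"
    COMMAND = "command"
    FEEDBACK = "feedback"


# Subcategories as listed in the text for each type of explicit information.
TAXONOMY = {
    InfoType.DECISION: ("machine", "patient", "environmental"),
    InfoType.COMMAND: ("physical", "biological"),
    InfoType.FEEDBACK: ("result", "safety"),
}


def parent_type(subcategory):
    """Return the InfoType a subcategory belongs to, or None if unknown."""
    for info_type, subcategories in TAXONOMY.items():
        if subcategory in subcategories:
            return info_type
    return None


print(parent_type("patient"))
print(parent_type("safety"))
```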

5. Evolution of HCI Mode

To meet the need for information exchange during surgical procedures, current interactive systems of surgical robots can provide users with voice, vision, and touch interaction channels, forming a multimodal interactive system []. Table 3 organizes representative products in relevant fields and summarizes four types of interaction modes: graphical interfaces, haptic feedback, eye tracking, and voice control.

Table 3.

Applications of interaction forms in surgical robots.

5.1. GUI

The graphical user interface (GUI) is a visible control system based on graphical symbols and other visual elements. Interacting with a surgical robot through a GUI is the most common interaction method. The GUI can assist surgeons in forming an intuitive and realistic understanding of patient conditions preoperatively, thus improving preoperative planning efficiency. Current research in related fields aims to improve cognitive efficiency and user experience, concentrating on the following three aspects:

- (1)

- The integration and optimization of information content and the redesign of the existing interface: When designing the Sils robot, Vaida et al. integrated surgical information received by the surgeon, parameters of patient interest, machine status, and optional features into the GUI to assist surgeons in better interacting with the product [].

- (2)

- The incorporation of display technologies such as AR and VR to change the way information is presented: Liao et al. designed a 3D virtual interactive interface for ophthalmic microsurgery robots [], enhancing visualization effects and aiding users in gaining better control over the product. The GUI designed by Dagnino et al. based on virtual reality technology can assist surgeons in orthopedic preoperative planning, achieving virtual fracture reduction [,].

- (3)

- The enhancement of image quality based on 3D reconstruction: Currently, relevant field researchers improve image quality through 3D modeling and algorithm optimization to aid surgeons in surgical planning. The medical 3D visualization software Mint Liver can help surgeons obtain more realistic, detailed, and 3D lesion information through 3D models []. Zeng et al. used 3D reconstruction for studies on type III hilar cholangiocarcinoma surgery, confirming the safety and effectiveness of related technologies []. Roberti et al. designed an endoscope specifically to provide stereoscopic views for laparoscopic surgery, building a visual anatomical environment through high-precision 3D reconstruction [].

However, such technology has certain limitations []. Its image effects can be affected by the original data and software used. When the object of reconstruction is original CT or MRI images, some data loss can decrease image clarity, causing difficulties in decision making for surgeons.

5.2. Voice Control

Voice control is a method of human–machine interaction in which the human exchanges information with the machine through voice in order to ultimately achieve user goals. Voice interaction can effectively control surgical robots while freeing the users’ hands [], reducing hand tremors due to fatigue, and helping surgeons overcome the dilemma of having to interrupt operations to move related equipment []. Representative products include the AESOP series of laparoscopic surgery robots [,] and the KaLAR surgical assistant [].

Current research in this field mainly focuses on the following two aspects:

- (1)

- The optimization of operation procedures and experience through the development of voice modules: Tang [] established a voice-based HCI mode that enables robots to perform corresponding actions through voice control, solving the problem of poor co-ordination between assisting surgeons and primary surgeons. Maysara et al. [] developed a voice interface to assist in controlling autonomous camera systems, providing surgeons with high-quality surgical camera views.

- (2)

- The enhancement of voice control effects: Zinchenko et al. [] proposed a new intentional voice control method to control the long-distance motion of the robotic arm and proposed a method for voice-to-motion calibration to reduce motion ambiguity.

Voice interaction may result in misrecognition, and it responds more slowly than a human assistant [], which presents certain safety risks.
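One common way to limit the misrecognition risk noted above is to act only on high-confidence matches against a fixed command vocabulary and to ask for confirmation otherwise. The sketch below illustrates that idea only; the vocabulary, the threshold, and the function `interpret` are all hypothetical, and the transcript and confidence are assumed to come from some external speech recognizer that is not modeled here.

```python
# Hypothetical command vocabulary; not taken from any product named above.
COMMANDS = {
    "camera left": ("camera", "pan_left"),
    "camera right": ("camera", "pan_right"),
    "zoom in": ("camera", "zoom_in"),
    "stop": ("robot", "halt"),
}
MIN_CONFIDENCE = 0.85  # assumed acceptance threshold


def interpret(transcript, confidence, min_confidence=MIN_CONFIDENCE):
    """Map a recognized phrase to (decision, action).

    decision is "execute", "confirm" (ask the surgeon to repeat), or "reject".
    """
    # Normalize case and whitespace before the vocabulary lookup.
    phrase = " ".join(transcript.lower().split())
    action = COMMANDS.get(phrase)
    if action is None:
        return ("reject", None)
    if confidence < min_confidence:
        return ("confirm", action)
    return ("execute", action)


print(interpret("  Zoom   IN ", 0.95))
print(interpret("zoom in", 0.50))
print(interpret("rotate", 0.99))
```

Restricting input to a closed vocabulary trades flexibility for predictability, which is usually the safer choice when a misheard phrase could move an instrument.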

5.3. Eye Tracking

Eye tracking is an interactive method that realizes HCI by analyzing eye motion information. Eye-tracking interaction can enhance the user experience by minimizing external intervention [], simplifying operations [], increasing freedom [], and reducing communication costs and mental load, thereby helping the surgeon concentrate on critical operations. A representative product is the Senhance Surgical System, which provides surgeons with a well-centered surgical view through eye movement control []. Current research in related fields is focused on maintaining the level of user attention and optimizing the operational experience, primarily concentrating on the following two aspects:

- (1)

- Improving the usability of the interaction system through eye-tracking technology: Li et al. [] proposed an HCI model based on eye motion control that can control the movement of a robotic arm by gaze, reducing the frequency of surgery interruptions and ensuring the surgeon’s focus while simplifying the surgical procedure. Cao et al. [] used SVM and PNN to develop an interaction system to control an endoscope robotic arm based on pupil changes, and verified through experiments that this method has more advantages over traditional methods. Guo et al. [] developed a system based on eye tracking for needle positioning during surgery, which reduced the frequency of switching between control devices and surgical tools, and verified through experiments that this method effectively improves operation smoothness.

- (2)

- Optimizing calibration methods to improve recognition accuracy: Fujii et al. [] proposed a new online calibration method for gaze trackers, which improves the gaze-tracking accuracy. Tostado et al. [] proposed a calibration method based on robot-generated 3D space continuous trajectories, which improves the reliability of gaze point estimation.

Although eye-tracking interaction has been applied in some products, there is still much room for improvement in control accuracy []. The high postural requirements of eye trackers for wearers [] have become a key factor limiting the large-scale promotion of eye-tracking interaction in relevant fields.
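To make the notion of gaze calibration concrete, the sketch below fits a deliberately simple per-axis gain and offset by ordinary least squares, using raw tracker readings recorded while the user looks at known targets. This is an illustrative model only and is not the method of Fujii et al. or Tostado et al.; the function names and sample values are hypothetical.

```python
def fit_axis(raw, target):
    """Least-squares fit of target = gain * raw + offset for one axis."""
    n = len(raw)
    if n != len(target) or n < 2:
        raise ValueError("need at least two matched samples")
    mean_r = sum(raw) / n
    mean_t = sum(target) / n
    var_r = sum((r - mean_r) ** 2 for r in raw)
    if var_r == 0:
        raise ValueError("raw samples must not all be identical")
    cov = sum((r - mean_r) * (t - mean_t) for r, t in zip(raw, target))
    gain = cov / var_r
    offset = mean_t - gain * mean_r
    return gain, offset


def apply_calibration(raw_value, gain, offset):
    """Convert one raw tracker reading into calibrated screen coordinates."""
    return gain * raw_value + offset


# Hypothetical calibration samples for the horizontal axis.
gain, offset = fit_axis([0.0, 1.0, 2.0], [10.0, 12.0, 14.0])
print(gain, offset)
print(apply_calibration(1.5, gain, offset))
```

Practical calibrations typically use richer models (for example, full 2D or 3D mappings) and update them online, which is where the accuracy gains reported in the cited work come from.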

5.4. Haptic Feedback

Haptic feedback refers to reproducing touch sensations for users through forces, vibrations, and other actions []. Representative products include the Versius surgical robot [], the Senhance surgical system [,], and the MiroSurge surgical system []. Through haptic feedback, surgeons perceive and control the magnitude of the forces applied and sense the physiological characteristics of tissues during the surgical procedure []. This can effectively compensate for cognitive biases caused by environmental isolation, avoid secondary injuries to patients due to excessive force [], and alleviate surgeons' muscle tension and fatigue during surgery, thereby improving the stability and safety of the operation [].

Current research in this field mainly focuses on the following two aspects:

- (1)

- Verifying the effectiveness of haptic feedback: Wottawa et al. [] conducted experiments based on animal models and concluded that the use of haptic feedback could significantly reduce tissue damage during surgery. Lim et al. [] developed a combined haptic and kinesthetic feedback system and verified the effectiveness of haptic feedback during remote operations.

- (2)

- Exploring more usable and lower-cost haptic feedback systems: Jin et al. [] developed a new robot tactile sensing auxiliary system, which realized force feedback based on magneto-rheological fluid and helped surgeons to better detect the force situation between surgical instruments and the vascular environment. Park et al. [] created a grip-type haptic device and integrated it into the surgical robot console, enabling surgeons to have the same tactile experience as traditional surgery. Kim et al. [] proposed a haptic device designed based on magneto-rheological sponge cells to provide feedback on organ viscoelasticity.

At present, due to technical limitations, surgical robots cannot truly reproduce the tactile sensation experienced by surgeons during traditional surgery, and there is still potential for improvement in the operation experience.
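The role of force feedback in avoiding excessive force can be sketched as a mapping from the force measured at the instrument to the force rendered on the master handle. The gain, the handle force limit, and the warning threshold below are assumed values for illustration, and `render_force` is a hypothetical function name; none of them describe a specific device from the text.

```python
def render_force(tool_force_n, gain=1.5, max_handle_force_n=4.0,
                 warn_tool_force_n=2.0):
    """Return (handle_force_n, warn) for one measured tool force in newtons.

    The handle force is the scaled tool force, clamped to a safe range.
    warn is True when the tool force itself reaches the warning threshold.
    """
    handle_force = gain * tool_force_n
    # Clamp so the haptic device never pushes harder than its safe limit.
    handle_force = max(-max_handle_force_n, min(max_handle_force_n, handle_force))
    warn = abs(tool_force_n) >= warn_tool_force_n
    return handle_force, warn


for force in (1.0, 5.0, -5.0):
    print(force, render_force(force))
```

Separating the clamp from the warning keeps the two concerns independent: the clamp protects the surgeon's hand, while the warning flags possible tissue damage at the instrument tip.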

6. HCI Design Methods

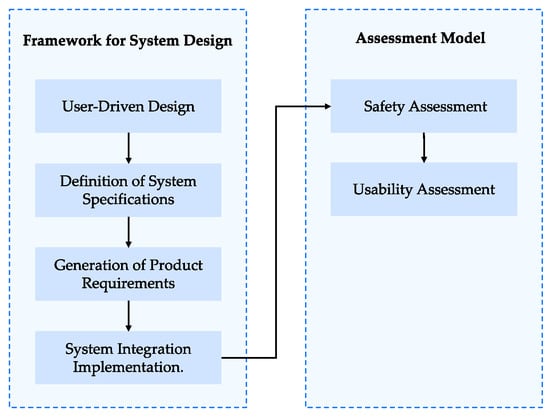

The UCD methodology has been widely used in the design practice and evaluation in related fields [], forming a systematic design framework and an assessment model, as shown in Figure 5.

Figure 5.

Design process.

6.1. Framework for System Design

During the process of translation into practice, minimally invasive surgery robots need the guidance and support of design methodology. The medical robot design framework proposed by Sánchez et al. [] and Dombre et al. [] is of guiding significance in carrying out the HCI design of surgical robots, specifically including:

- (1)

- User-driven design: The ultimate goal of surgical robots is not to replace surgeons, but to extend surgeons’ surgical capabilities as a type of assistive tool []. If surgeons’ needs, capabilities, and characteristics are not fully considered in the early stages of design, the interaction system cannot be correctly developed and evaluated []. User-driven design requires the comprehensive use of contextual inquiry, usability testing, and focus groups to achieve design innovation [].

- (2)

- The definition of system specifications: The definition of system specifications involves the delineation of specific functions and interaction content, mainly including defining surgical processes, safety constraints, and user expectations [].

- (3)

- The generation of product requirements: The standard system and system specifications determine that the product needs to meet different types of requirements. The requirements for surgical robot products are divided into functional and non-functional requirements []. Functional requirements require the system to “achieve” goals, i.e., the indispensable system functions and the interactive operation points; non-functional requirements require the system to “meet” standards, i.e., the system constraints and impacts that the functional operation points should meet, used to guide the generation of specific functional interaction forms. Incorrect definitions of non-functional requirements can lead to errors and safety issues []. Therefore, the correct methods should be used for definition.

- (4)

- The system integration implementation: The development process of the surgical robot system should fully consider user requirements and the effectiveness and usability of the functions. The V-model is a systematic process suitable for the development of medical equipment products, which emphasizes the importance of user requirements and testing work, and can help the development team improve the safety, reliability, and stability of product functions, and meet the requirements related to human–machine interaction in medical devices []. McHugh et al. [] provided a hybrid agile development model based on the V-model, introducing case studies and user stories, aimed at better meeting user requirements and improving the usability of the interaction system as well as the consistency and universality of product functions.

6.2. Assessment Model

After the HCI design is completed, it is necessary to establish evaluation indicators and evaluate the design through actual testing. By summarizing the evaluation indicators for minimally invasive surgery robots, safety evaluation and usability evaluation are identified as the two evaluation dimensions []:

- (1)

- Safety assessment: The purpose of safety evaluation is to identify risks and reduce their likelihood of occurrence. The seven main risk areas of minimally invasive surgery robots are image processing and planning, registration, robot motion, control reliability, vigilance, sanitation and disinfection, and clinical workflow []. Li et al. [] proposed a safety evaluation method for the design of surgical forceps interfaces, and Fei [] proposed a systematic method (hazard identification and safety insurance control) to analyze, control, and evaluate the safety of medical robots.

- (2)

- Usability assessment: Usability evaluation includes the applicability evaluation and ease-of-use evaluation of related products in clinical applications, and can be divided into subjective and objective evaluations based on different evaluation indicators []. Subjective evaluation indicators mainly reflect the user’s subjective feelings; objective evaluation indicators include task completion time, task completion rate, and the size of the exerted force, among others []. Ballester et al. [] proposed that analyzing the entire system (robot + operator) is more important than merely analyzing the performance of subsystems (robot and operator separately), and evaluated different systems from the user operation perspective. Zhou et al. [] established an evaluation model that takes into account both objective and subjective factors, which can evaluate the proficiency of surgical robot users. Hou et al. [] analyzed people’s cognitive patterns and subjective feelings brought about by the design of medical device products through research on the distribution map of imagery scales.
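Combining objective and subjective indicators into one usability figure can be illustrated with a weighted average of normalized scores. This is a minimal sketch under stated assumptions and is not the evaluation model of Zhou et al. or any other cited author; the function names, the normalization bounds, and the equal default weighting are hypothetical.

```python
def normalize(value, worst, best):
    """Map an indicator onto [0, 1], where 1 is best.

    Works for indicators where lower is better (worst > best), such as
    task completion time, and for those where higher is better.
    """
    span = best - worst
    if span == 0:
        raise ValueError("worst and best must differ")
    score = (value - worst) / span
    return min(1.0, max(0.0, score))


def usability_score(objective, subjective, objective_weight=0.5):
    """Weighted mean of the average objective and subjective scores."""
    if not objective or not subjective:
        raise ValueError("both indicator lists must be non-empty")
    if not 0.0 <= objective_weight <= 1.0:
        raise ValueError("objective_weight must be in [0, 1]")
    obj = sum(objective) / len(objective)
    subj = sum(subjective) / len(subjective)
    return objective_weight * obj + (1.0 - objective_weight) * subj


# Hypothetical trial: 120 s completion time on a 60 s (best) to 300 s (worst)
# scale, a 100% completion rate, and a subjective rating of 0.5.
time_score = normalize(120.0, worst=300.0, best=60.0)
print(time_score)
print(usability_score([time_score, 1.0], [0.5]))
```

How the weights are chosen is itself a design-evaluation question; a fixed 50/50 split is used here only to keep the arithmetic transparent.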

7. Discussion and Future Perspectives

7.1. Development Trends

- (1)

- High degree of intelligence: The interaction system of minimally invasive surgery robots has realized intelligent functions such as surgical space analysis, precise positioning, path planning, and real-time navigation, and has formed intelligent interaction modes such as voice control, eye movement interaction, and tactile feedback. With the innovation and breakthrough of future network technology and control technology, related fields will further deepen the degree of intelligence in operation modes, information analysis and presentation, and evaluation alarms, and improve the safety and usability of the surgical interaction system.

- (2)

- Humanization: The current research on the interaction design direction of minimally invasive surgery robots has not fully reflected the user-centered design concept, and the application innovation led by engineering technology is still the mainstream model. This has also led to high learning costs and operation difficulties in the marketization process of related fields. Future research should focus on user needs and form design innovations driven by users.

- (3)

- Deepening multimodal interaction: Minimally invasive surgery robots already offer visual, tactile, and auditory interaction methods. Multimodal interaction gives users more options and more ways to participate while promoting the completion of surgical tasks. However, the related systems are not yet mature, and there is considerable potential for deepening and improving their design.

7.2. Design Challenges

The interaction design of minimally invasive surgery robots should focus on conducting user-centered design research to make up for the shortcomings of related results. Through the above research, it is found that the following two aspects are the problems that need to be faced and solved in this field, and they are also the challenges faced by designers:

- (1) Building a user knowledge framework: Integrating user resources into a user knowledge framework can guide the design of interaction systems for minimally invasive surgery robots. Methods such as contextual inquiry and cognitive task analysis can be used to obtain user data. However, these methods require considerable time and manpower, and some medical equipment manufacturers are not in a position to hire expert doctors to collect information. How to integrate user information into the design process is therefore a challenge for the field []. In addition, effectively mapping user input and knowledge onto the design, and completing the transformation from requirements to design results, places corresponding demands on designers' capabilities.

- (2) Perfecting the design evaluation mechanism: Performance evaluation is receiving increasing attention in the design process of minimally invasive surgery robots, and evaluation studies have addressed aspects such as safety performance, learning cost, proficiency, and product cognition. However, existing design evaluation systems do not fully consider physiological and psychological factors such as user operation habits, operation comfort, and emotional experience. How to quantify evaluation indicators and obtain accurate design evaluation results through user testing, and thereby establish a more scientific and comprehensive design evaluation system, is a challenge for current research in this and related fields.

8. Conclusions

This paper takes minimally invasive surgery robots as its research object. From the perspective of interaction design, it reviews existing work on interaction design requirements, interaction information content, interaction methods, and interaction design methods, which helps to fill gaps in the review literature of related fields. On this basis, the paper outlines future development trends in interaction design for minimally invasive surgery robots and summarizes the problems and challenges facing research in the field, in the hope of providing inspiration and guidance for subsequent work.

Author Contributions

Conceptualization, B.S. (Bowen Sun); methodology, D.L. and S.L.; software, B.S. (Bowen Song); validation, C.Q.; formal analysis, B.S. (Bowen Song); investigation, D.L.; resources, C.L.; data curation, C.Q.; writing—original draft preparation, D.L., B.S. (Bowen Song) and S.L.; writing—review and editing, C.Q.; visualization, S.L.; supervision, B.S. (Bowen Sun) and C.L.; project administration, B.S. (Bowen Sun); funding acquisition, B.S. (Bowen Sun), Q.L. and X.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by “Research topic of Philosophy and Social Sciences in Xiongan New Area, Strategic Research on the transformation and upgrading of Traditional Industries Empowered by Industrial Design in Xiongan New Area”, grant number: XASK20220802.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Wang, C.Y.; Xie, G.N.; Zhao, D.N. Overview of the development of medical surgical robots. Tool Technol. 2016, 7, 3–12.

- Buettner, R.; Renner, A.; Boos, A. A systematic literature review of research in the surgical field of medical robotics. In Proceedings of the 2020 IEEE 44th Annual Computers, Software, and Applications Conference (COMPSAC), Madrid, Spain, 13–17 July 2020; pp. 517–522.

- Navarro, E.M.; Álvarez, A.N.R.; Anguiano, F.I.S. A new telesurgery generation supported by 5G technology: Benefits and future trends. Procedia Comput. Sci. 2022, 200, 31–38.

- Lu, X.B. Research on Interaction Design Methods in Information Design. Sci. Technol. Rev. 2007, 235, 18–21.

- Fischer, G. User modeling in human–computer interaction. User Model. User-Adapt. Interact. 2001, 11, 65–86.

- Qin, J.Y. Information Dimensions and Interaction Design Principles. Packag. Eng. 2018, 39, 57–68.

- Wiebelitz, L.; Schmid, P.; Maier, T. Designing User-friendly Medical AI Applications - Methodical Development of User-centered Design Guidelines. In Proceedings of the 2022 IEEE International Conference on Digital Health (ICDH), Barcelona, Spain, 10–16 July 2022; pp. 23–28.

- Hein, A.; Lueth, T.C. Control algorithms for interactive shaping [surgical robots]. In Proceedings of the 2001 IEEE International Conference on Robotics and Automation (ICRA), Seoul, Republic of Korea, 21–26 May 2001; pp. 2025–2030.

- Griffin, J.A.; Zhu, W.; Nam, C.S. The role of haptic feedback in robotic-assisted retinal microsurgery systems: A systematic review. IEEE Trans. Haptics 2016, 10, 94–105.

- Wang, G.B.; Peng, F.Y.; Wang, S.X. A Perspective on Medical Robotics for Minimally Invasive Surgery: Review on the Shuangqing Seminar Titled “Fundamental Theory and Key Technology of Robotics for Minimally Invasive Surgery”. Bull. Natl. Nat. Sci. Found. China 2009, 23, 209–214.

- Dogangil, G.; Davies, B.L.; Rodriguez y Baena, F. A review of medical robotics for minimally invasive soft tissue surgery. Proc. Inst. Mech. Eng. H 2010, 224, 653–679.

- Wang, J. Research on Development Status of Surgical Robot Based on Patent Analysis. In Proceedings of the 2021 6th International Conference on Automation, Control and Robotics Engineering (CACRE), Guangzhou, China, 19–22 November 2021; pp. 33–37.

- Department of Medicine and Health, Chinese Academy of Engineering; Tsinghua University Institute of Intelligent Medicine. China Smart Health Bluebook; Tsinghua University: Beijing, China, 2022; p. 65.

- Yan, Z.Y.; Liang, Y.L.; Du, Z.J. Review on the development of robot technology in Laparoscopy. Robot. Tech. Appl. 2020, 194, 24–29.

- Broeders, I.A.M.J.; Ruurda, J. Robotics revolutionizing surgery: The intuitive surgical “da Vinci” system. Ind. Robot. Int. J. 2001, 28, 387–392.

- Fang, P.N. Research on Percutaneous Puncture Path Planning and Remote Control for Minimally Invasive Surgery. Master’s Thesis, Tianjin University, Tianjin, China, 2020.

- Duan, X.G.; Wen, H.; He, R. Advances and Key Techniques of Percutaneous Puncture Robots for Thorax and Abdomen. Robot 2021, 43, 567–584.

- Duan, X.G.; Chen, Y.; Xu, M.; Liang, P. Liver Tumor Microwave Ablation Surgery Robot. Robot. Tech. Appl. 2011, 4, 33–37.

- Zheng, H.Y. Study of Ultrasound-guided Robot for Percutaneous Microwave Ablation of Liver Cancer. Master’s Thesis, Beijing University of Chemical Technology, Beijing, China, 2014.

- Wu, H. Study on Information Collection and Control Technology of Robot for Liver Cancer Puncture Ablation. Master’s Thesis, South China University of Technology, Guangzhou, China, 2020.

- Khanna, O.; Beasley, R.; Franco, D.; DiMaio, S. The path to surgical robotics in neurosurgery. Oper. Neurosurg. 2021, 20, 514–520.

- Jacofsky, D.J.; Allen, M. Robotics in arthroplasty: A comprehensive review. J. Arthroplast. 2016, 31, 2353–2363.

- Herrell, S.D.; Kwartowitz, D.M.; Milhoua, P.M.; Galloway, R.L. Toward image guided robotic surgery: System validation. J. Urol. 2009, 181, 783–790.

- Wang, P.Q. Summary of research on navigation and control technology for surgical robots. China Sci. Technol. Panor. Mag. 2017, 57–59.

- Crowther, M. Phase 4 research: What happens when the rubber meets the road? Hematology. Am. Soc. Hematol. Educ. Program. 2013, 2013, 15–18.

- Su, H. Design Method and Space Mapping of Minimally Invasive Surgical Robot Image System. Ph.D. Thesis, Tianjin University, Tianjin, China, 2016.

- Sun, Q.H. Methodology Based on Information Interaction Framework for Minimally Invasive Surgical Robot Design. Master’s Thesis, Harbin Institute of Technology, Harbin, China, 2020.

- Wang, R. The Research of Interaction Design of Tele-Surgery Guidance and Teaching System. Master’s Thesis, East China University of Science and Technology, Shanghai, China, 2015.

- O’Toole, M.D.; Bouazza-Marouf, K.; Kerr, D.; Gooroochurn, M.; Vloeberghs, M. A methodology for design and appraisal of surgical robotic systems. Robotica 2010, 28, 297–310.

- Culjat, M.O.; Bisley, J.W.; King, C.H. Tactile feedback in surgical robotics. In Surgical Robotics: Systems Applications and Visions; Springer: Berlin/Heidelberg, Germany, 2011; pp. 449–468.

- Zhang, Y.; Chen, X.; Liu, R.; Liu, B. Advances in interactive robotic system for minimally invasive surgery. Beijing Biomed. Eng. 2014, 33, 650–654+657.

- Li, W.G.; Cui, J. Robot-assisted surgery: History, current status and future prospects. Chin. J. Mod. Med. 2012, 22, 45–50.

- Abdelaal, A.E.; Mathur, P.; Salcudean, S.E. Robotics In Vivo: A Perspective on Human–Robot Interaction in Surgical Robotics. Annu. Rev. Control Robot. Auton. Syst. 2020, 3, 221–242.

- Ji, Z.P. Development of minimally invasive surgery robot. Mod. Manuf. Eng. 2017, 444, 149+157–161.

- Taylor, R.H.; Menciassi, A.; Fichtinger, G.; Fiorini, P.; Dario, P. Medical robotics and computer-integrated surgery. In Springer Handbook of Robotics; Springer: Cham, Switzerland, 2016; pp. 1657–1684.

- Piao, M.B.; Fu, Y.L.; Wang, S.G. Development of surgical assistant robots and analysis of key technologies. Mach. Des. Manuf. 2008, 1, 174–176.

- Li, Z.; Wang, G.Z.; Ling, H.; Zhang, G.K.; Li, W.; Zhou, Z.R.; Wang, S.X.; Zhu, S.H. Evaluation of Master/Slave Mapping Proportion of Minimally Invasive Surgery Robot Based on Intraoperative Trajectory. Chin. J. Mech. Eng. 2018, 54, 69–75.

- Norman, D.A. Emotional Design: Why We Love (or Hate) Everyday Things; Basic Books: New York, NY, USA, 2004.

- Guo, Y.; Yang, Y.; Feng, M. Review on development status and key technologies of surgical robots. In Proceedings of the 2020 IEEE International Conference on Mechatronics and Automation (ICMA), Beijing, China, 13–16 October 2020; pp. 105–110.

- Xie, P.; Wang, L.N. Overview of patents for intelligent human-machine interaction technology of surgical robots. Electron. Compon. Inf. Technol. 2021, 5, 107–108.

- Trejos, A.L.; Patel, R.V.; Malthaner, R.A.; Schlachta, C.M. Development of force-based metrics for skills assessment in minimally invasive surgery. Surg. Endosc. 2014, 28, 2106–2119.

- Cowley, G. Introducing “Robodoc”. A robot finds his calling - in the operating room. Newsweek 1992, 120, 86.

- Kalan, S.; Chauhan, S.; Coelho, R.F. History of robotic surgery. J. Robot. Surg. 2010, 4, 141–147.

- Vaida, C.; Andras, I.; Birlescu, I. Preliminary control design of a single-incision laparoscopic surgery robotic system. In Proceedings of the 2021 25th International Conference on System Theory, Control and Computing (ICSTCC), Iasi, Romania, 20–23 October 2021; pp. 384–389.

- Liao, Y.B. Research on The Control Method of Robot in Ophthalmic Microsurgical. Master’s Thesis, Harbin Institute of Technology, Harbin, China, 2020.

- Dagnino, G.; Georgilas, I.; Köhler, P. Navigation system for robot-assisted intra-articular lower-limb fracture surgery. Int. J. Comput. Assist. Radiol. Surg. 2016, 11, 1831–1843.

- Georgilas, I.; Dagnino, G.; Tarassoli, P. Robot-assisted fracture surgery: Surgical requirements and system design. Ann. Biomed. Eng. 2018, 46, 1637–1649.

- Pianka, F.; Baumhauer, M.; Stein, D. Liver tissue sparing resection using a novel planning tool. Langenbecks Arch. Surg. 2011, 396, 201–208.

- Zeng, N.; Tao, H.; Fang, C. Individualized preoperative planning using three-dimensional modeling for Bismuth and Corlette type III hilar cholangiocarcinoma. World J. Surg. Oncol. 2016, 14, 44.

- Roberti, A.; Piccinelli, N.; Falezza, F. A time-of-flight stereoscopic endoscope for anatomical 3D reconstruction. In Proceedings of the 2021 International Symposium on Medical Robotics (ISMR), Atlanta, GA, USA, 17–19 November 2021; pp. 1–7.

- Fang, C.; An, J.; Bruno, A. Consensus recommendations of three-dimensional visualization for diagnosis and management of liver diseases. Hepatol. Int. 2020, 14, 437–453.

- Lane, T. A short history of robotic surgery. Ann. R. Coll. Surg. Engl. 2018, 100, 5–7.

- Morrell, A.L.G.; Morrell-Junior, A.C.; Morrell, A.G. The history of robotic surgery and its evolution: When illusion becomes reality. Rev. Col. Bras. Cir. 2021, 48, e20202798.

- Kim, J.; Lee, Y.J.; Ko, S.Y. Compact camera assistant robot for minimally invasive surgery: KaLAR. In Proceedings of the 2004 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Sendai, Japan, 28 September–2 October 2004; pp. 2587–2592.

- Tang, M. Research on Monocular Reconstruction and Voice Control for Robot-Assisted Minimally Invasive Surgery. Master’s Thesis, Harbin Institute of Technology, Harbin, China, 2020.

- Elazzazi, M.; Jawad, L.; Hilfi, M. A Natural Language Interface for an Autonomous Camera Control System on the da Vinci Surgical Robot. Robotics 2022, 11, 40.

- Zinchenko, K.; Wu, C.Y.; Song, K.T. A study on speech recognition control for a surgical robot. IEEE Trans. Ind. Inform. 2016, 13, 607–615.

- Li, Z.; Chiu, P.W.Y. Robotic endoscopy. Visc. Med. 2018, 34, 45–51.

- Li, S.; Zhang, J.; Xue, L. Attention-aware robotic laparoscope for human-robot cooperative surgery. In Proceedings of the 2013 IEEE International Conference on Robotics and Biomimetics (ROBIO), Shenzhen, China, 12–14 December 2013; pp. 792–797.

- Guo, J.; Liu, Y.; Qiu, Q. A novel robotic guidance system with eye-gaze tracking control for needle-based interventions. IEEE Trans. Cogn. Dev. Syst. 2019, 13, 179–188.

- Shi, L.; Copot, C.; Vanlanduit, S. Application of visual servoing and eye tracking glass in human robot interaction: A case study. In Proceedings of the 2019 23rd International Conference on System Theory, Control and Computing (ICSTCC), Sinaia, Romania, 9–11 October 2019; pp. 515–520.

- Li, P.; Hou, X.; Duan, X. Appearance-based gaze estimator for natural interaction control of surgical robots. IEEE Access 2019, 7, 25095–25110.

- Cao, Y.; Miura, S.; Kobayashi, Y. Pupil variation applied to the eye tracking control of an endoscopic manipulator. IEEE Robot. Autom. Lett. 2016, 1, 531–538.

- Fujii, K.; Gras, G.; Salerno, A. Gaze gesture based human robot interaction for laparoscopic surgery. Med. Image Anal. 2018, 44, 196–214.

- Tostado, P.M.; Abbott, W.W.; Faisal, A.A. 3D gaze cursor: Continuous calibration and end-point grasp control of robotic actuators. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 3295–3300.

- Xin, H.; Zelek, J.S.; Carnahan, H. Laparoscopic surgery, perceptual limitations and force: A review. In Proceedings of the First Canadian Student Conference on Biomedical Computing, Kingston, ON, Canada, 25–26 January 2006.

- Alkatout, I.; Salehiniya, H.; Allahqoli, L. Assessment of the Versius robotic surgical system in minimal access surgery: A systematic review. J. Clin. Med. 2022, 11, 3754.

- deBeche-Adams, T.; Eubanks, W.S.; de la Fuente, S.G. Early experience with the Senhance®-laparoscopic/robotic platform in the US. J. Robot. Surg. 2019, 13, 357–359.

- Kaštelan, Ž.; Knežević, N.; Hudolin, T.; Kuliš, T.; Penezić, L.; Goluža, E.; Gidaro, S.; Ćorušić, A. Extraperitoneal radical prostatectomy with the Senhance Surgical System robotic platform. Croat. Med. J. 2019, 60, 556–559.

- Millan, B.; Nagpal, S.; Ding, M. A scoping review of emerging and established surgical robotic platforms with applications in urologic surgery. Société Int. D’urologie J. 2021, 2, 300–310.

- Eltaib, M.E.H.; Hewit, J.R. Tactile sensing technology for minimal access surgery—A review. Mechatronics 2003, 13, 1163–1177.

- Amirabdollahian, F.; Livatino, S.; Vahedi, B. Prevalence of haptic feedback in robot-mediated surgery: A systematic review of literature. J. Robot. Surg. 2018, 12, 11–25.

- Wottawa, C.R.; Genovese, B.; Nowroozi, B.N. Evaluating tactile feedback in robotic surgery for potential clinical application using an animal model. Surg. Endosc. 2016, 30, 3198–3209.

- Lim, S.C.; Lee, H.K.; Park, J. Role of combined tactile and kinesthetic feedback in minimally invasive surgery. Int. J. Med. Robot. 2015, 11, 360–374.

- Jin, X.; Guo, S.; Guo, J. Development of a tactile sensing robot-assisted system for vascular interventional surgery. IEEE Sens. J. 2021, 21, 12284–12294.

- Park, Y.J.; Lee, E.S.; Choi, S.B. A Cylindrical Grip Type of Tactile Device Using Magneto-Responsive Materials Integrated with Surgical Robot Console: Design and Analysis. Sensors 2022, 22, 1085.

- Kim, S.; Kim, P.; Park, C.Y. A new tactile device using magneto-rheological sponge cells for medical applications: Experimental investigation. Sens. Actuators A Phys. 2016, 239, 61–69.

- Martin, J.L.; Norris, B.J.; Murphy, E. Medical device development: The challenge for ergonomics. Appl. Ergon. 2008, 39, 271–283.

- Sánchez, A.; Poignet, P.; Dombre, E. A design framework for surgical robots: Example of the Araknes robot controller. Robot. Auton. Syst. 2014, 62, 1342–1352.

- Dombre, E.; Poignet, P.; Pierrot, F. Design of medical robots. Med. Robot. 2013, 141–176.

- DeFranco, J.; Kassab, M.; Laplante, P. The nonfunctional requirement focus in medical device software: A systematic mapping study and taxonomy. Innov. Syst. Softw. Eng. 2017, 13, 81–100.

- McHugh, M.; Cawley, O.; McCaffery, F. An agile v-model for medical device software development to overcome the challenges with plan-driven software development lifecycles. In Proceedings of the 2013 5th International Workshop on Software Engineering in Health Care (SEHC), San Francisco, CA, USA, 20–21 May 2013; pp. 12–19.

- Korb, W.; Kornfeld, M.; Birkfellner, W. Risk analysis and safety assessment in surgical robotics: A case study on a biopsy robot. Minim. Invasive Ther. Allied Technol. 2005, 14, 23–31.

- Li, W.Z. Research on Assessment Method of Interface Design of Minimally Invasive Grasping Forceps. Ph.D. Thesis, Central South University, Changsha, China, 2014.

- Fei, B.; Ng, W.S.; Chauhan, S.; Kwoh, C.K. The safety issues of medical robotics. Reliab. Eng. Syst. Saf. 2001, 73, 183–192.

- Gildersleeve, M.J. Mutual enlightenment: Augmenting human factors research in surgical robotics. IEEE Pulse 2013, 4, 26–31.

- Ballester, P.; Jain, Y.; Haylett, K.R. Comparison of task performance of robotic camera holders EndoAssist and Aesop. Int. Congr. Ser. 2001, 1230, 1100–1103.

- Zhou, Y.; Wang, C.; Zhu, L. Study on Operation Proficiency of Surgery Robot User. In Proceedings of the 2016 6th International Conference on Machinery, Materials, Environment, Biotechnology and Computer, Tianjin, China, 11–12 June 2016; pp. 966–971.

- Hou, F.; Wang, X.P.; Zhao, Y. Preliminary study on image scale of medical instruments in human-computer interface design. Beijing Biomed. Eng. 2008, 27, 413–415.

- Hagedorn, T.J.; Grosse, I.R.; Krishnamurty, S. A concept ideation framework for medical device design. J. Biomed. Inform. 2015, 55, 218–230.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).