Attention-Based Hybrid Deep Learning Network for Human Activity Recognition Using WiFi Channel State Information

Abstract

1. Introduction

- Development of a novel deep learning (DL) framework that enables human activity recognition (HAR) from WiFi channel state information (CSI) measurements, eliminating the need for manual feature extraction.

- Introduction of a hybrid DL network, CNN-GRU-AttNet, that combines convolutional neural network (CNN) and gated recurrent unit (GRU) layers to automatically extract spatial and temporal features, leading to highly accurate HAR results.

- Integration of an attention mechanism into the CNN-GRU-AttNet network, allowing for the prioritization of important features and time steps, thereby enhancing recognition performance.

- Evaluation of the proposed approach through a series of experiments on the CSI-HAR and StanWiFi datasets, in which it achieves higher accuracy than the baseline and previously published models it is compared with.
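The attention contribution above weights time steps by their importance. As a minimal sketch, assuming an additive (tanh) scoring function with hypothetical weight names `W`, `b`, and `u`, attention pooling over a sequence of GRU hidden states can be written in NumPy as follows. The exact attention formulation used in CNN-GRU-AttNet is not given in this excerpt (the reference list includes Luong et al.'s attention paper, whose scoring functions differ in detail), and the random inputs stand in for real GRU outputs.

```python
import numpy as np

def softmax(x):
    # Subtract the max before exponentiating for numerical stability.
    e = np.exp(x - np.max(x))
    return e / e.sum()

def attention_pool(H, W, b, u):
    """Collapse hidden states H (T x d) into one context vector of length d.

    Hypothetical additive attention: score_t = u . tanh(W^T h_t + b).
    """
    scores = np.tanh(H @ W + b) @ u   # shape (T,): one score per time step
    alpha = softmax(scores)           # attention weights, non-negative, sum to 1
    context = alpha @ H               # weighted sum of hidden states, shape (d,)
    return context, alpha

rng = np.random.default_rng(0)
T, d, a = 10, 8, 16                   # time steps, GRU units, attention size (illustrative)
H = rng.standard_normal((T, d))       # stand-in for GRU outputs
W = rng.standard_normal((d, a)) * 0.1
b = np.zeros(a)
u = rng.standard_normal(a)

context, alpha = attention_pool(H, W, b, u)
print(context.shape, alpha.shape, round(float(alpha.sum()), 6))
```

Because the weights sum to one, the context vector is a convex combination of the hidden states, so time steps with larger scores contribute more to the representation passed on to the classifier.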

2. Related Works

2.1. CSI-Based HAR

2.2. DL for HAR

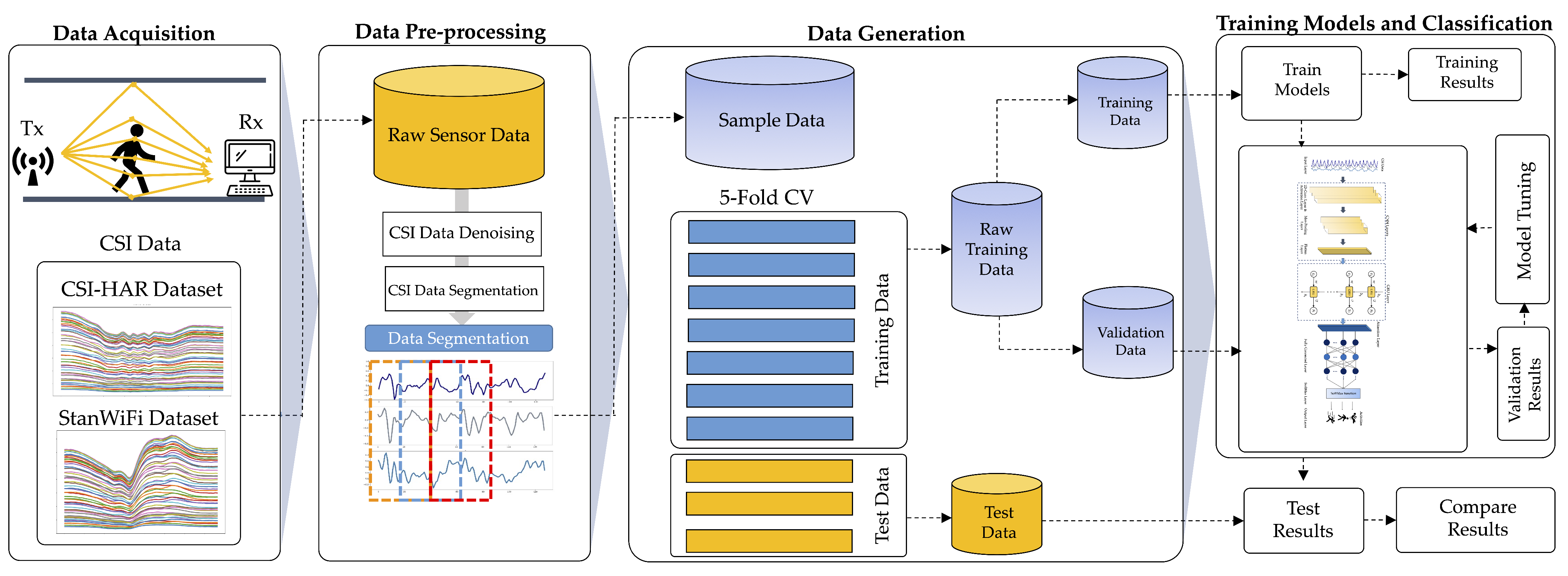

3. Proposed Methodology

3.1. Data Acquisition

3.1.1. CSI-HAR Dataset

3.1.2. StanWiFi Dataset

3.2. Data Pre-Processing

3.2.1. Data Denoising

3.2.2. Segmentation

3.3. Recognition Model

3.4. Hyperparameter and Training

3.5. Network Training and Evaluation Metrics

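- True positive (TP): the number of samples of a given activity class that are correctly classified as that class;

- False positive (FP): the number of samples of other classes that are incorrectly classified as the given class;

- False negative (FN): the number of samples of the given class that are incorrectly classified as another class;

- True negative (TN): the number of samples of other classes that are not classified as the given class.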
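From these four counts, accuracy is the fraction of all samples classified correctly, precision is TP/(TP + FP), recall is TP/(TP + FN), and the F1-score is the harmonic mean of precision and recall. The sketch below derives the per-class counts from a confusion matrix and averages the metrics over classes. It assumes macro averaging (the averaging scheme is not stated in this excerpt) and uses a hypothetical 3-class matrix rather than data from the paper.

```python
import numpy as np

def per_class_counts(cm):
    """Return TP, FP, FN, TN arrays (one entry per class) from a confusion matrix.

    Assumed convention: cm[i, j] counts samples of true class i predicted as class j.
    """
    cm = np.asarray(cm)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp   # predicted as class c but belonging to another class
    fn = cm.sum(axis=1) - tp   # belonging to class c but predicted as another class
    tn = cm.sum() - tp - fp - fn
    return tp, fp, fn, tn

def macro_metrics(cm):
    """Overall accuracy plus macro-averaged precision, recall, and F1-score.

    Note: precision is undefined (division by zero) for a class that is never predicted.
    """
    tp, fp, fn, _ = per_class_counts(cm)
    accuracy = tp.sum() / np.asarray(cm).sum()
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    # Macro averaging: unweighted mean over activity classes.
    return accuracy, precision.mean(), recall.mean(), f1.mean()

# Hypothetical 3-class confusion matrix (not data from the paper).
cm = [[8, 1, 1],
      [0, 9, 1],
      [0, 0, 10]]
acc, prec, rec, f1 = macro_metrics(cm)
print(f"accuracy={acc:.3f} precision={prec:.3f} recall={rec:.3f} f1={f1:.3f}")
```

With class imbalance, macro averaging gives every activity equal weight, so a poorly recognized minority class lowers precision, recall, and F1 more than it lowers overall accuracy.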

4. Experiments and Findings

4.1. Experimental Setting

- We used NumPy and Pandas to load, manipulate, and analyze the sensor data efficiently.

- We used Matplotlib and Seaborn to present and visualize the results of data exploration and model evaluation.

- We used the scikit-learn library for data sampling and generation.

- The DL models were instantiated and trained using the TensorFlow, Keras, and TensorBoard frameworks.

4.2. Experimental Findings on CSI-HAR Dataset

4.3. Experimental Findings on StanWiFi Dataset

5. Discussion

5.1. Performance Comparison

5.2. Impact of the Attention Mechanism

5.3. Impact of the PCA Denoising Method

5.4. Performance Analysis for Different Subjects

5.5. Limitations of the Proposed Method

6. Conclusions for Future Research

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- [1] Hnoohom, N.; Mekruksavanich, S.; Jitpattanakul, A. An Efficient ResNetSE Architecture for Smoking Activity Recognition from Smartwatch. Intell. Autom. Soft Comput. 2023, 35, 1245–1259.

- [2] Thanarajan, T.; Alotaibi, Y.; Rajendran, S.; Nagappan, K. Improved wolf swarm optimization with deep-learning-based movement analysis and self-regulated human activity recognition. AIMS Math. 2023, 8, 12520–12539.

- [3] Mekruksavanich, S.; Jitpattanakul, A. RNN-based deep learning for physical activity recognition using smartwatch sensors: A case study of simple and complex activity recognition. Math. Biosci. Eng. 2022, 19, 5671–5698.

- [4] Jalal, A.; Kim, Y.H.; Kim, Y.J.; Kamal, S.; Kim, D. Robust human activity recognition from depth video using spatiotemporal multi-fused features. Pattern Recognit. 2017, 61, 295–308.

- [5] Sharma, V.; Gupta, M.; Pandey, A.K.; Mishra, D.; Kumar, A. A Review of Deep Learning-based Human Activity Recognition on Benchmark Video Datasets. Appl. Artif. Intell. 2022, 36, 2093705.

- [6] Vrskova, R.; Kamencay, P.; Hudec, R.; Sykora, P. A New Deep-Learning Method for Human Activity Recognition. Sensors 2023, 23, 2816.

- [7] Shoaib, M.; Bosch, S.; Incel, O.D.; Scholten, H.; Havinga, P.J.M. Complex Human Activity Recognition Using Smartphone and Wrist-Worn Motion Sensors. Sensors 2016, 16, 426.

- [8] Reyes-Ortiz, J.L.; Oneto, L.; Samà, A.; Parra, X.; Anguita, D. Transition-Aware Human Activity Recognition Using Smartphones. Neurocomputing 2016, 171, 754–767.

- [9] Mekruksavanich, S.; Hnoohom, N.; Jitpattanakul, A. A Hybrid Deep Residual Network for Efficient Transitional Activity Recognition Based on Wearable Sensors. Appl. Sci. 2022, 12, 4988.

- [10] Yan, H.; Zhang, Y.; Wang, Y.; Xu, K. WiAct: A Passive WiFi-Based Human Activity Recognition System. IEEE Sensors J. 2020, 20, 296–305.

- [11] Liu, F.; Cui, Y.; Masouros, C.; Xu, J.; Han, T.X.; Eldar, Y.C.; Buzzi, S. Integrated Sensing and Communications: Toward Dual-Functional Wireless Networks for 6G and Beyond. IEEE J. Sel. Areas Commun. 2022, 40, 1728–1767.

- [12] Wang, W.; Liu, A.X.; Shahzad, M.; Ling, K.; Lu, S. Device-Free Human Activity Recognition Using Commercial WiFi Devices. IEEE J. Sel. Areas Commun. 2017, 35, 1118–1131.

- [13] Sigg, S.; Scholz, M.; Shi, S.; Ji, Y.; Beigl, M. RF-Sensing of Activities from Non-Cooperative Subjects in Device-Free Recognition Systems Using Ambient and Local Signals. IEEE Trans. Mob. Comput. 2014, 13, 907–920.

- [14] Muaaz, M.; Chelli, A.; Pätzold, M. WiHAR: From Wi-Fi Channel State Information to Unobtrusive Human Activity Recognition. In Proceedings of the 2020 IEEE 91st Vehicular Technology Conference (VTC2020-Spring), Antwerp, Belgium, 25–28 May 2020; pp. 1–7.

- [15] Cheng, X.; Huang, B. CSI-Based Human Continuous Activity Recognition Using GMM–HMM. IEEE Sensors J. 2022, 22, 18709–18717.

- [16] Dang, X.; Cao, Y.; Hao, Z.; Liu, Y. WiGId: Indoor Group Identification with CSI-Based Random Forest. Sensors 2020, 20, 4607.

- [17] Alsaify, B.A.; Almazari, M.M.; Alazrai, R.; Alouneh, S.; Daoud, M.I. A CSI-Based Multi-Environment Human Activity Recognition Framework. Appl. Sci. 2022, 12, 930.

- [18] Moghaddam, M.G.; Shirehjini, A.A.N.; Shirmohammadi, S. A WiFi-based System for Recognizing Fine-grained Multiple-Subject Human Activities. In Proceedings of the 2022 IEEE International Instrumentation and Measurement Technology Conference (I2MTC), Ottawa, ON, Canada, 16–19 May 2022; pp. 1–6.

- [19] Yousefi, S.; Narui, H.; Dayal, S.; Ermon, S.; Valaee, S. A Survey on Behavior Recognition Using WiFi Channel State Information. IEEE Commun. Mag. 2017, 55, 98–104.

- [20] Chen, Z.; Zhang, L.; Jiang, C.; Cao, Z.; Cui, W. WiFi CSI Based Passive Human Activity Recognition Using Attention Based BLSTM. IEEE Trans. Mob. Comput. 2019, 18, 2714–2724.

- [21] Abdelnasser, H.; Youssef, M.; Harras, K.A. WiGest: A ubiquitous WiFi-based gesture recognition system. In Proceedings of the 2015 IEEE Conference on Computer Communications (INFOCOM), Hong Kong, China, 26 April–1 May 2015; pp. 1472–1480.

- [22] Gu, Y.; Quan, L.; Ren, F. WiFi-assisted human activity recognition. In Proceedings of the 2014 IEEE Asia Pacific Conference on Wireless and Mobile, Bali, Indonesia, 28–30 August 2014; pp. 60–65.

- [23] Yang, Z.; Zhou, Z.; Liu, Y. From RSSI to CSI: Indoor Localization via Channel Response. ACM Comput. Surv. 2013, 46, 1–32.

- [24] Zhang, D.; Wang, H.; Wu, D. Toward Centimeter-Scale Human Activity Sensing with Wi-Fi Signals. Computer 2017, 50, 48–57.

- [25] Wang, Y.; Liu, J.; Chen, Y.; Gruteser, M.; Yang, J.; Liu, H. E-Eyes: Device-Free Location-Oriented Activity Identification Using Fine-Grained WiFi Signatures. In Proceedings of the 20th Annual International Conference on Mobile Computing and Networking (MobiCom ’14), New York, NY, USA, 7–11 September 2014; pp. 617–628.

- [26] Wang, F.; Panev, S.; Dai, Z.; Han, J.; Huang, D. Can WiFi Estimate Person Pose? arXiv 2019, arXiv:1904.00277.

- [27] Moshiri, P.F.; Shahbazian, R.; Nabati, M.; Ghorashi, S.A. A CSI-Based Human Activity Recognition Using Deep Learning. Sensors 2021, 21, 7225.

- [28] Chahoushi, M.; Nabati, M.; Asvadi, R.; Ghorashi, S.A. CSI-Based Human Activity Recognition Using Multi-Input Multi-Output Autoencoder and Fine-Tuning. Sensors 2023, 23, 3591.

- [29] Elbayad, M.; Besacier, L.; Verbeek, J. Pervasive Attention: 2D Convolutional Neural Networks for Sequence-to-Sequence Prediction. arXiv 2018, arXiv:1808.03867.

- [30] Schuster, M.; Paliwal, K. Bidirectional recurrent neural networks. IEEE Trans. Signal Process. 1997, 45, 2673–2681.

- [31] Zhang, J.; Wu, F.; Wei, B.; Zhang, Q.; Huang, H.; Shah, S.W.; Cheng, J. Data Augmentation and Dense-LSTM for Human Activity Recognition Using WiFi Signal. IEEE Internet Things J. 2021, 8, 4628–4641.

- [32] Shang, S.; Luo, Q.; Zhao, J.; Xue, R.; Sun, W.; Bao, N. LSTM-CNN network for human activity recognition using WiFi CSI data. J. Phys. Conf. Ser. 2021, 1883, 012139.

- [33] Yadav, S.K.; Sai, S.; Gundewar, A.; Rathore, H.; Tiwari, K.; Pandey, H.M.; Mathur, M. CSITime: Privacy-preserving human activity recognition using WiFi channel state information. Neural Netw. 2022, 146, 11–21.

- [34] Wang, W.; Liu, A.X.; Shahzad, M.; Ling, K.; Lu, S. Understanding and Modeling of WiFi Signal Based Human Activity Recognition. In Proceedings of the 21st Annual International Conference on Mobile Computing and Networking (MobiCom ’15), New York, NY, USA, 7–11 September 2015; pp. 65–76.

- [35] Kingma, D.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, 7–8 May 2015.

- [36] Stone, M. Cross-Validatory Choice and Assessment of Statistical Predictions. J. R. Stat. Soc. Ser. B (Methodol.) 1974, 36, 111–147.

- [37] Luong, T.; Pham, H.; Manning, C.D. Effective Approaches to Attention-based Neural Machine Translation. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015; pp. 1412–1421.

| Year | Classifier Model | Dataset | Physical Activities | Accuracy |
|---|---|---|---|---|
| 2019 | 1D-CNN [26] | Private | six casual activities | 88.13% |
| 2017 | LSTM [19] | StanWiFi | lie down; fall; walk; run; sit down; stand up | 90.50% |
| 2019 | ABLSTM [20] | StanWiFi | lie down; fall; walk; run; sit down; stand up | 97.30% |
| | | Private | empty; jump; pick up; run; sit down; wave hand; walk make phone call; check wristwatch; walk normal and fast; run; jump; lie down; play guitar and piano; play basketball | 90.00% |
| 2020 | Dense-LSTM [31] | Private | | |
| 2021 | LSTM-CNN [32] | Private | stand; sit; falling; standing up; stepping | 94.14% |
| 2021 | 2D-CNN [27] | CSI-HAR | lie down; fall; bend; run; sit down; stand up; walk | 95.00% |
| 2022 | CSITime [33] | StanWiFi | lie down; fall; walk; run; sit down; stand up | 98.00% |
| 2023 | MIMI-AE [28] | CSI-HAR | lie down; fall; bend; run; sit down; stand up; walk | 94.49% |

| Dataset | No. of Participants (Age Range) | Collection Tools | Bandwidth and Number of Subcarriers | Activities | No. of Samples |
|---|---|---|---|---|---|
| CSI-HAR | 3 (25 to 70 yrs) | Raspberry Pi 4B; Nexmon CSI Tool | 40 MHz; 52 subcarriers | Lie down | 405 |
| | | | | Fall | 437 |
| | | | | Bend | 415 |
| | | | | Run | 449 |
| | | | | Sit down | 413 |
| | | | | Stand up | 348 |
| | | | | Walk | 398 |
| StanWiFi | 6 (unidentified) | Intel 5300 NIC | 20 MHz; 30 subcarriers | Lie down | 657 |
| | | | | Fall | 443 |
| | | | | Run | 1209 |
| | | | | Sit down | 400 |
| | | | | Stand up | 304 |
| | | | | Walk | 1465 |
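The per-class sample counts in the dataset table can be summarized to compare class balance. The short sketch below, using only the counts copied from the table, computes each dataset's total and the ratio between its largest and smallest class; it shows that StanWiFi is markedly more imbalanced than CSI-HAR (Walk has about 4.8 times as many samples as Stand up), a useful caveat when reading accuracy on its own.

```python
# Per-class sample counts copied from the dataset table above.
COUNTS = {
    "CSI-HAR": {"Lie down": 405, "Fall": 437, "Bend": 415, "Run": 449,
                "Sit down": 413, "Stand up": 348, "Walk": 398},
    "StanWiFi": {"Lie down": 657, "Fall": 443, "Run": 1209,
                 "Sit down": 400, "Stand up": 304, "Walk": 1465},
}

def class_shares(counts):
    """Return the total sample count and each class's fraction of that total."""
    total = sum(counts.values())
    return total, {name: n / total for name, n in counts.items()}

for dataset, counts in COUNTS.items():
    total, shares = class_shares(counts)
    largest = max(shares, key=shares.get)
    smallest = min(shares, key=shares.get)
    ratio = counts[largest] / counts[smallest]
    print(f"{dataset}: {total} samples; largest class {largest} ({shares[largest]:.1%}), "
          f"smallest {smallest} ({shares[smallest]:.1%}), ratio {ratio:.1f}:1")
```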

| Layer Name | Kernel Size | Kernel Number | Padding | Stride |
|---|---|---|---|---|
| Conv1D-1 | 5 | 64 | 2 | 4 |
| Maxpooling-1 | 2 | None | 0 | 1 |
| Conv1D-2 | 7 | 64 | 2 | 1 |
| Maxpooling-2 | 2 | None | 0 | 1 |
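The layer table fixes the kernel size, padding, and stride of each convolution and pooling stage, which determines how the temporal length of a CSI window shrinks before the recurrent layers. Assuming the standard output-length formula floor((L + 2p − k) / s) + 1 for every row, the sketch below traces the length through the table; the input length of 500 time steps is a hypothetical placeholder, since this excerpt does not state the window size.

```python
# (name, kernel size, padding, stride) for each row of the layer table above.
LAYERS = [
    ("Conv1D-1", 5, 2, 4),
    ("Maxpooling-1", 2, 0, 1),
    ("Conv1D-2", 7, 2, 1),
    ("Maxpooling-2", 2, 0, 1),
]

def output_length(length, kernel, padding, stride):
    """Length of a 1D convolution or pooling output along the time axis."""
    return (length + 2 * padding - kernel) // stride + 1

def trace_lengths(input_length):
    """Apply every layer in order and record the sequence length after each one."""
    lengths = []
    length = input_length
    for name, kernel, padding, stride in LAYERS:
        length = output_length(length, kernel, padding, stride)
        lengths.append((name, length))
    return lengths

# Hypothetical input window of 500 CSI time steps (not stated in this excerpt).
for name, length in trace_lengths(500):
    print(f"{name}: {length}")
```

In Keras, the `padding` argument of `Conv1D` accepts only `"valid"`, `"same"`, or `"causal"`, so an explicit padding of 2 would typically be realized with a `ZeroPadding1D(2)` layer placed before a `Conv1D` that uses `padding="valid"`.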

| Model | Accuracy (Mean ± Std) | Precision (Mean ± Std) | Recall (Mean ± Std) | F1-Score (Mean ± Std) |
|---|---|---|---|---|
| CNN | 95.67% (±1.57%) | 95.97% (±1.46%) | 95.76% (±1.51%) | 95.66% (±1.60%) |
| LSTM | 84.12% (±1.22%) | 84.56% (±1.14%) | 84.17% (±1.24%) | 84.10% (±1.18%) |
| BiLSTM | 90.44% (±0.86%) | 90.49% (±0.86%) | 90.38% (±0.84%) | 90.34% (±0.88%) |
| GRU | 89.21% (±2.86%) | 89.14% (±2.92%) | 89.12% (±2.87%) | 89.06% (±2.89%) |
| BiGRU | 95.39% (±0.92%) | 95.38% (±0.94%) | 95.37% (±0.96%) | 95.31% (±0.95%) |
| CNN-GRU-AttNet | 99.62% (±0.26%) | 99.61% (±0.26%) | 99.61% (±0.27%) | 99.61% (±0.26%) |

| Model | Accuracy (Mean ± Std) | Precision (Mean ± Std) | Recall (Mean ± Std) | F1-Score (Mean ± Std) |
|---|---|---|---|---|
| CNN | 89.08% (±4.61%) | 87.52% (±5.47%) | 89.49% (±3.48%) | 87.55% (±4.74%) |
| LSTM | 93.95% (±2.15%) | 91.32% (±2.66%) | 94.80% (±1.64%) | 92.75% (±2.25%) |
| BiLSTM | 94.73% (±1.73%) | 92.25% (±2.15%) | 94.74% (±1.18%) | 93.18% (±1.77%) |
| GRU | 94.84% (±2.52%) | 92.68% (±3.44%) | 94.84% (±2.32%) | 93.35% (±3.12%) |
| BiGRU | 95.73% (±2.64%) | 94.70% (±2.39%) | 95.02% (±3.78%) | 94.62% (±3.38%) |
| CNN-GRU-AttNet | 98.66% (±0.26%) | 98.43% (±0.29%) | 97.88% (±0.59%) | 98.14% (±0.42%) |

| Dataset | Classifier | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|---|
| CSI-HAR | 2D-CNN [27] | 95.00% | - | - | - |
| | MIMI-AE [28] | 94.49% | - | - | - |
| | CNN-GRU-AttNet | 99.62% | 99.61% | 99.61% | 99.61% |
| StanWiFi | LSTM [19] | 90.50% | - | - | - |
| | ABLSTM [20] | 97.30% | - | - | - |
| | CSITime [33] | 98.00% | - | - | - |
| | CNN-GRU-AttNet | 98.66% | 98.43% | 97.88% | 98.14% |

| Dataset | Classifier | Accuracy | Precision | Recall | F1-Score |
|---|---|---|---|---|---|
| CSI-HAR | CNN-GRU-AttNet using CSI data without the PCA denoising method | 98.19% | 98.27% | 98.17% | 98.17% |
| | CNN-GRU-AttNet using denoised CSI data with the PCA denoising method | 99.62% | 99.61% | 99.61% | 99.61% |
| StanWiFi | CNN-GRU-AttNet using CSI data without the PCA denoising method | 97.66% | 97.13% | 97.00% | 97.04% |
| | CNN-GRU-AttNet using denoised CSI data with the PCA denoising method | 98.66% | 98.43% | 97.88% | 98.14% |
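The table above compares CNN-GRU-AttNet with and without PCA denoising. As a minimal sketch, assuming denoising means projecting a CSI window (time steps × subcarriers) onto its top-k principal components across subcarriers and reconstructing it (the exact procedure of Section 3.2.1 is not recoverable from this excerpt), the idea can be shown on synthetic data. The signal model, noise level, and k = 1 are hypothetical choices; only the 52-subcarrier width comes from the CSI-HAR row of the dataset table.

```python
import numpy as np

def pca_denoise(csi, k):
    """Reconstruct a (time x subcarrier) CSI amplitude matrix from its top-k principal components."""
    mean = csi.mean(axis=0, keepdims=True)      # per-subcarrier mean over time
    centered = csi - mean
    # Rows of vt are principal directions across subcarriers, ordered by explained variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    top = vt[:k]                                # shape (k, S)
    scores = centered @ top.T                   # projection onto the top-k components, (T, k)
    return scores @ top + mean                  # back to (T, S)

rng = np.random.default_rng(42)
T, S = 200, 52                                  # time steps; 52 subcarriers as in CSI-HAR
t = np.linspace(0, 4 * np.pi, T)
# Synthetic low-rank "motion" pattern shared by all subcarriers, plus white noise.
clean = np.outer(np.sin(t), rng.uniform(0.5, 1.5, S)) + 10.0
noisy = clean + 0.3 * rng.standard_normal((T, S))

denoised = pca_denoise(noisy, k=1)
err_before = np.abs(noisy - clean).mean()
err_after = np.abs(denoised - clean).mean()
print(f"mean absolute error before: {err_before:.3f}, after: {err_after:.3f}")
```

The reduction works here because body movement modulates many subcarriers in a correlated way, concentrating the signal in a few components, whereas independent noise is spread evenly over all of them; real CSI may need more than one component.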

| Subject | Activity | Precision | Recall | F1-Score |
|---|---|---|---|---|
| Subject 1 (an adult) | Lie down | 82.4% | 100.0% | 90.3% |
| | Fall | 100.0% | 96.6% | 98.2% |
| | Bend | 86.2% | 100.0% | 92.6% |
| | Run | 92.1% | 100.0% | 95.9% |
| | Sit down | 100.0% | 76.9% | 87.0% |
| | Stand up | 100.0% | 87.0% | 93.0% |
| | Walk | 100.0% | 89.3% | 94.3% |
| Subject 2 (a middle-aged person) | Lie down | 100.0% | 96.3% | 98.1% |
| | Fall | 100.0% | 100.0% | 100.0% |
| | Bend | 100.0% | 100.0% | 100.0% |
| | Run | 100.0% | 100.0% | 100.0% |
| | Sit down | 96.6% | 96.6% | 96.6% |
| | Stand up | 100.0% | 100.0% | 100.0% |
| | Walk | 96.7% | 100.0% | 98.3% |
| Subject 3 (an elderly person) | Lie down | 100.0% | 100.0% | 100.0% |
| | Fall | 100.0% | 93.3% | 96.6% |
| | Bend | 88.9% | 100.0% | 94.1% |
| | Run | 100.0% | 95.5% | 97.7% |
| | Sit down | 100.0% | 92.9% | 96.3% |
| | Stand up | 92.6% | 100.0% | 96.2% |
| | Walk | 100.0% | 100.0% | 100.0% |
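The F1-score column of the per-subject table can be checked against the two columns before it: F1 is the harmonic mean of precision and recall, 2PR / (P + R). Recomputing it from the Subject 1 rows reproduces every reported F1 value to within 0.1 percentage point (the small gaps come from the inputs being rounded to one decimal place), which indicates that the first metric column holds precision values. The row values below are copied from the table; the helper name `f1_score` is illustrative.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (inputs and output in percent)."""
    return 2 * precision * recall / (precision + recall)

# (activity, first metric column, recall, reported F1) for Subject 1, copied from the table above.
SUBJECT_1 = [
    ("Lie down", 82.4, 100.0, 90.3),
    ("Fall", 100.0, 96.6, 98.2),
    ("Bend", 86.2, 100.0, 92.6),
    ("Run", 92.1, 100.0, 95.9),
    ("Sit down", 100.0, 76.9, 87.0),
    ("Stand up", 100.0, 87.0, 93.0),
    ("Walk", 100.0, 89.3, 94.3),
]

max_gap = 0.0
for activity, p, r, reported in SUBJECT_1:
    recomputed = f1_score(p, r)
    max_gap = max(max_gap, abs(recomputed - reported))
    print(f"{activity:9s} recomputed F1 = {recomputed:.2f}%  reported = {reported:.1f}%")
print(f"largest gap: {max_gap:.2f} percentage points")
```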

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Mekruksavanich, S.; Phaphan, W.; Hnoohom, N.; Jitpattanakul, A. Attention-Based Hybrid Deep Learning Network for Human Activity Recognition Using WiFi Channel State Information. Appl. Sci. 2023, 13, 8884. https://doi.org/10.3390/app13158884
