Swin–MRDB: Pan-Sharpening Model Based on the Swin Transformer and Multi-Scale CNN

Abstract

1. Introduction

- A pan-sharpening network with three feature extraction branches was designed to extract and combine valuable information from the various input branches.

- The Swin transformer feature extraction module was designed to extract global features from panchromatic and hyperspectral images.

- Multi-scale residuals and dense blocks were added to the network to extract multi-scale local features from the hyperspectral images.

- The residual fusion module was used to reconstruct the retrieved features in order to decrease feature loss during fusion.

2. Methods

2.1. Existing Methods

| Algorithm | Technology | Algorithm Type | Reference |
|---|---|---|---|
| PCA | Band replacement | Traditional method | [20] |
| GS | Band replacement | Traditional method | [21] |
| IHS | Spatial transformation | Traditional method | [22] |
| PNN | Two-stage network | Deep learning | [23] |
| TFNET | Two-stream network | Deep learning | [24] |
| MSDCNN | Two-stream network | Deep learning | [25] |
| SRPPNN | Characteristic injection network | Deep learning | [26] |
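
To make the "traditional method" rows more concrete, the following is a minimal sketch of a generalized (fast) IHS fusion in NumPy. It assumes equal band weights for the intensity component and an input that has already been upsampled to the panchromatic grid; the function name `fast_ihs_fusion` and the random test data are illustrative only, and the variant evaluated in the cited reference may differ.

```python
import numpy as np


def fast_ihs_fusion(ms_up: np.ndarray, pan: np.ndarray) -> np.ndarray:
    """Generalized IHS fusion (assumed equal band weights).

    ms_up: upsampled multi-band image, shape (H, W, B)
    pan:   panchromatic image, shape (H, W)
    """
    # Intensity component: plain average over the spectral bands.
    intensity = ms_up.mean(axis=2)
    # Spatial detail present in the pan image but not in the intensity.
    detail = pan - intensity
    # Inject the same detail into every band.
    return ms_up + detail[..., None]


# Illustrative data only (not from the paper).
rng = np.random.default_rng(0)
ms_up = rng.random((8, 8, 4))
pan = rng.random((8, 8))
fused = fast_ihs_fusion(ms_up, pan)
```

With equal weights, the band average of the fused image equals the panchromatic image, which explains both the sharp spatial detail and the spectral distortion typical of this family of methods.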

2.2. The Proposed Methods

2.2.1. Swin Transformer Feature Extraction Branch

Swin Transformer Algorithm
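
As a rough illustration of the windowing idea behind the Swin transformer, the sketch below partitions a feature map into non-overlapping windows and applies the cyclic shift used for shifted-window attention. It covers only the partitioning step: the attention computation and the attention mask for shifted windows are omitted, and the function names and the 8 × 8 toy input are assumptions made for this example.

```python
import numpy as np


def window_partition(x: np.ndarray, window_size: int) -> np.ndarray:
    """Split an (H, W, C) map into windows of shape (ws, ws, C).

    H and W are assumed to be multiples of window_size.
    Returns an array of shape (num_windows, ws, ws, C).
    """
    h, w, c = x.shape
    x = x.reshape(h // window_size, window_size, w // window_size, window_size, c)
    # Bring the two window-index axes together, then flatten them.
    return x.transpose(0, 2, 1, 3, 4).reshape(-1, window_size, window_size, c)


def shifted_window_partition(x: np.ndarray, window_size: int) -> np.ndarray:
    """Cyclically shift the map by half a window, then partition it."""
    shift = window_size // 2
    shifted = np.roll(x, shift=(-shift, -shift), axis=(0, 1))
    return window_partition(shifted, window_size)


# Toy 8 x 8 single-channel feature map whose values encode their position.
feat = np.arange(8 * 8).reshape(8, 8, 1)
windows = window_partition(feat, 4)
shifted = shifted_window_partition(feat, 4)
```

Self-attention is then computed inside each window; alternating regular and shifted partitions lets information cross window borders while keeping the cost linear in the number of pixels.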

Swin Transformer Extraction Branches

2.2.2. Multi-Scale Residuals and Dense Blocks

2.2.3. Residual Feature Fusion Block

2.2.4. Loss Function

2.2.5. Algorithm Overview

| Algorithm 1: Swin–MRDB | |
|---|---|
| Input: hyperspectral training set Htrain, panchromatic image training set Ptrain, hyperspectral test set Htest, panchromatic image test set Ptest, ground truth image G, number of iterations M. | |
| Output: objective evaluation indicator Vbest. | |
| 1 | % Data preparation |
| 2 | Vbest ← 0 |
| 3 | Read epoch value M |
| 4 | For m = 1, …, M do |
| 5 | Randomly obtain image data H, P from Htrain and Ptrain. |
| 6 | % Feature extraction stage |
| 7 | |
| 8 | |
| 9 | |
| 10 | % Feature fusion stage |
| 11 | |
| 12 | % Parameter calculation stage |
| 13 | |
| 14 | If V > Vbest then |
| 15 | Vbest ← V |
| 16 | End |
| 17 | Return Vbest |
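
The control flow of Algorithm 1 can be sketched as a plain Python loop. The contents of the feature extraction, fusion, and parameter calculation steps are not given in the listing, so they are represented here by two hypothetical callables, `train_step` and `evaluate`; the function name `run_algorithm` and the toy inputs are likewise assumptions for illustration.

```python
import random
from typing import Callable, Sequence


def run_algorithm(
    h_train: Sequence,
    p_train: Sequence,
    m_epochs: int,
    train_step: Callable,   # hypothetical stand-in for the extraction and fusion stages
    evaluate: Callable,     # hypothetical stand-in for the parameter calculation stage
    seed: int = 0,
) -> float:
    """Track the best evaluation indicator over M iterations."""
    rng = random.Random(seed)
    v_best = 0.0                                  # step 2
    for _ in range(m_epochs):                     # step 4
        idx = rng.randrange(len(h_train))         # step 5: random paired sample
        h, p = h_train[idx], p_train[idx]
        train_step(h, p)                          # extraction and fusion stages
        v = evaluate()                            # parameter calculation stage
        if v > v_best:                            # steps 14-15
            v_best = v
    return v_best                                 # step 17


# Toy usage with made-up scores, only to exercise the loop.
scores = iter([0.2, 0.9, 0.5])
calls = []
best = run_algorithm(
    h_train=["h0", "h1"],
    p_train=["p0", "p1"],
    m_epochs=3,
    train_step=lambda h, p: calls.append((h, p)),
    evaluate=lambda: next(scores),
)
```

The comparison `V > Vbest` with an initial value of 0 suits an indicator where larger is better, such as CC; for SAM, RMSE, or ERGAS the comparison and the initial value would need to be reversed.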

3. Experiments

3.1. Datasets

- (1) Pavia dataset [36]: The Pavia University data are part of the hyperspectral imagery acquired over the city of Pavia, Italy, in 2003 by the German airborne Reflective Optics System Imaging Spectrometer (ROSIS). The imager records 115 contiguous bands in the wavelength range of 0.43–0.86 μm with a spatial resolution of 1.3 m. Twelve of these bands are removed due to noise, so the remaining 103 spectral bands are generally used. The image size is 610 × 340, a total of 207,400 pixels, but most of these are background pixels, and only 42,776 pixels contain features. These pixels cover nine types of features, including trees, asphalt roads, bricks, and meadows. We partition HS into patches of size 40 × 40 × 103 and Pan into patches of size 160 × 160 × 1 to build the training and testing sets for the network model.

- (2) Botswana dataset [37]: The Botswana dataset was acquired by the Hyperion sensor on the NASA EO-1 satellite over the Okavango Delta, Botswana, in May 2001, with an image size of 1476 × 256. The sensor covers the 400–2500 nm wavelength range with a spatial resolution of 30 m. After removal of the uncalibrated and noisy bands from the 242 recorded, 145 bands are used for training. We partition HS into patches of size 30 × 30 × 145 and Pan into patches of size 120 × 120 × 1 to build the training and testing sets for the network model.

- (3) Chikusei dataset [38]: The Chikusei dataset was captured using the Headwall Hyperspec-VNIR-C sensor over Chikusei, Ibaraki, Japan. The data contain 128 bands in the range of 363–1018 nm, with a size of 2517 × 2335 and a spatial resolution of 2.5 m. The data were made public by Dr. Naoto Yokoya and Prof. Akira Iwasaki of the University of Tokyo. We partition HS into patches of size 64 × 64 × 128 and Pan into patches of size 256 × 256 × 1 to build the training and testing sets for the network model.
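
In all three datasets the Pan patch is four times the HS patch in each spatial dimension (160/40, 120/30, 256/64). The sketch below cuts co-registered patch pairs under that ratio. It assumes non-overlapping tiles and a Pan image exactly four times the HS size; how the low-resolution HS input is produced and whether patches overlap are not stated here, so `paired_patches` and the zero-filled arrays are purely illustrative.

```python
import numpy as np


def paired_patches(hs: np.ndarray, pan: np.ndarray, hs_size: int, scale: int = 4):
    """Cut non-overlapping, spatially aligned HS / Pan patch pairs.

    hs:  (H, W, B) hyperspectral cube
    pan: (H * scale, W * scale, 1) panchromatic image
    """
    pan_size = hs_size * scale
    rows, cols = hs.shape[0] // hs_size, hs.shape[1] // hs_size
    pairs = []
    for r in range(rows):
        for c in range(cols):
            hs_patch = hs[r * hs_size:(r + 1) * hs_size,
                          c * hs_size:(c + 1) * hs_size, :]
            pan_patch = pan[r * pan_size:(r + 1) * pan_size,
                            c * pan_size:(c + 1) * pan_size, :]
            pairs.append((hs_patch, pan_patch))
    return pairs


# Illustrative arrays with the Pavia patch geometry (103 bands, 40 x 40 HS patches).
hs = np.zeros((80, 120, 103), dtype=np.float32)
pan = np.zeros((320, 480, 1), dtype=np.float32)
pairs = paired_patches(hs, pan, hs_size=40)
```

Each pair then has shapes 40 × 40 × 103 and 160 × 160 × 1, matching the Pavia setting; changing `hs_size` to 30 or 64 (and the band count) gives the Botswana and Chikusei geometries.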

3.2. Evaluation Parameters
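
The result tables report four indicators: CC, SAM, RMSE, and ERGAS. The following sketch gives commonly used definitions of these indicators in NumPy. The exact normalization used for the reported values (data range, SAM in degrees or radians, resolution ratio in ERGAS) is not specified here, so the choices below, including the helper name `_flatten`, SAM in degrees, and `ratio=4`, are assumptions.

```python
import numpy as np


def _flatten(img: np.ndarray) -> np.ndarray:
    """Reshape an (H, W, B) cube to (H*W, B) in float64."""
    return img.reshape(-1, img.shape[-1]).astype(np.float64)


def cc(ref: np.ndarray, fused: np.ndarray) -> float:
    """Correlation coefficient, averaged over bands (higher is better)."""
    r, f = _flatten(ref), _flatten(fused)
    r = r - r.mean(axis=0)
    f = f - f.mean(axis=0)
    num = (r * f).sum(axis=0)
    den = np.sqrt((r ** 2).sum(axis=0) * (f ** 2).sum(axis=0))
    return float(np.mean(num / den))


def sam(ref: np.ndarray, fused: np.ndarray, eps: float = 1e-12) -> float:
    """Spectral angle mapper in degrees, averaged over pixels (lower is better)."""
    r, f = _flatten(ref), _flatten(fused)
    cos = (r * f).sum(axis=1) / (
        np.linalg.norm(r, axis=1) * np.linalg.norm(f, axis=1) + eps
    )
    return float(np.degrees(np.arccos(np.clip(cos, -1.0, 1.0))).mean())


def rmse(ref: np.ndarray, fused: np.ndarray) -> float:
    """Root mean square error over all pixels and bands (lower is better)."""
    diff = ref.astype(np.float64) - fused.astype(np.float64)
    return float(np.sqrt(np.mean(diff ** 2)))


def ergas(ref: np.ndarray, fused: np.ndarray, ratio: int = 4) -> float:
    """ERGAS with an assumed Pan/HS resolution ratio (lower is better)."""
    r, f = _flatten(ref), _flatten(fused)
    rmse_k = np.sqrt(((r - f) ** 2).mean(axis=0))   # per-band RMSE
    mean_k = r.mean(axis=0)                         # per-band reference mean
    return float(100.0 / ratio * np.sqrt(np.mean((rmse_k / mean_k) ** 2)))


# Illustrative check on synthetic data: a constant offset of 0.1.
rng = np.random.default_rng(0)
ref = rng.random((16, 16, 5)) + 0.5
fused = ref + 0.1
```

On this synthetic pair, CC stays at 1 because a constant offset does not change correlation, while RMSE equals the offset. SAM is insensitive to a per-pixel scaling of the spectrum, which is why it is usually reported alongside the magnitude-based indicators.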

3.3. Training Setup

3.4. Results

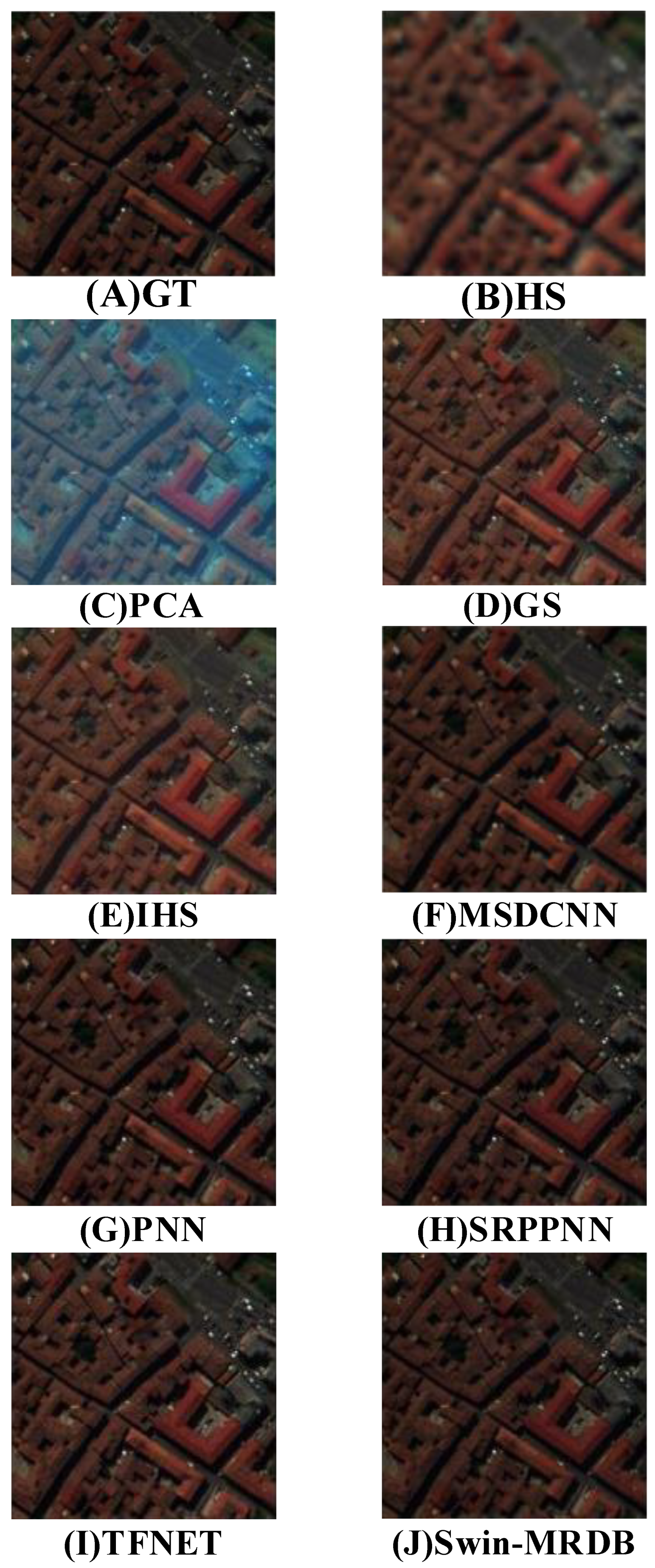

3.4.1. Results for the Pavia Dataset

3.4.2. Results for the Botswana Dataset

3.4.3. Results for the Chikusei Dataset

3.5. Ablation Experiments

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Marghany, M. Remote Sensing and Image Processing in Mineralogy; CRC Press: Boca Raton, FL, USA, 2021; ISBN 978-1-00-303377-6.

- Maccone, L.; Ren, C. Quantum Radar. Phys. Rev. Lett. 2020, 124, 200503.

- Ghassemian, H. A Review of Remote Sensing Image Fusion Methods. Inf. Fusion 2016, 32, 75–89.

- Li, J.; Hong, D.; Gao, L.; Yao, J.; Zheng, K.; Zhang, B.; Chanussot, J. Deep Learning in Multimodal Remote Sensing Data Fusion: A Comprehensive Review. Int. J. Appl. Earth Obs. Geoinf. 2022, 112, 102926.

- Kwarteng, P.; Chavez, A. Extracting Spectral Contrast in Landsat Thematic Mapper Image Data Using Selective Principal Component Analysis. In Proceedings of the 6th Thematic Conference on Remote Sensing for Exploration Geology, London, UK, 11–15 September 1988.

- Shahdoosti, H.R.; Ghassemian, H. Combining the Spectral PCA and Spatial PCA Fusion Methods by an Optimal Filter. Inf. Fusion 2016, 27, 150–160.

- Laben, C.A.; Brower, B.V. Process for Enhancing the Spatial Resolution of Multispectral Imagery Using Pan-Sharpening. U.S. Patent No. 6,011,875, 4 January 2000.

- Chavez, P.; Sides, S.; Anderson, J. Comparison of Three Different Methods to Merge Multiresolution and Multispectral Data: Landsat TM and SPOT Panchromatic. Photogramm. Eng. Remote Sens. 1991, 57, 295–303.

- Huang, P.S.; Tu, T.-M. A New Look at IHS-like Image Fusion Methods (Vol 2, Pg 177, 2001). Inf. Fusion 2007, 8, 217–218.

- Rahmani, S.; Strait, M.; Merkurjev, D.; Moeller, M.; Wittman, T. An Adaptive IHS Pan-Sharpening Method. IEEE Geosci. Remote Sens. Lett. 2010, 7, 746–750.

- Choi, J.; Yu, K.; Kim, Y. A New Adaptive Component-Substitution-Based Satellite Image Fusion by Using Partial Replacement. IEEE Trans. Geosci. Remote Sens. 2011, 49, 295–309.

- Aiazzi, B.; Alparone, L.; Baronti, S.; Garzelli, A.; Selva, M. MTF-Tailored Multiscale Fusion of High-Resolution MS and Pan Imagery. Photogramm. Eng. Remote Sens. 2006, 72, 591–596.

- Vivone, G.; Restaino, R.; Chanussot, J. Full Scale Regression-Based Injection Coefficients for Panchromatic Sharpening. IEEE Trans. Image Process. 2018, 27, 3418–3431.

- Khan, M.M.; Chanussot, J.; Condat, L.; Montanvert, A. Indusion: Fusion of Multispectral and Panchromatic Images Using the Induction Scaling Technique. IEEE Geosci. Remote Sens. Lett. 2008, 5, 98–102.

- Imani, M. Adaptive Signal Representation and Multi-Scale Decomposition for Panchromatic and Multispectral Image Fusion. Future Gener. Comput. Syst. Int. J. Escience 2019, 99, 410–424.

- Li, S.; Yang, B. A New Pan-Sharpening Method Using a Compressed Sensing Technique. IEEE Trans. Geosci. Remote Sens. 2011, 49, 738–746.

- Yin, H. Sparse Representation Based Pansharpening with Details Injection Model. Signal Process. 2015, 113, 218–227.

- Jian, L.; Wu, S.; Chen, L.; Vivone, G.; Rayhana, R.; Zhang, D. Multi-Scale and Multi-Stream Fusion Network for Pansharpening. Remote Sens. 2023, 15, 1666.

- Liu, N.; Li, W.; Sun, X.; Tao, R.; Chanussot, J. Remote Sensing Image Fusion With Task-Inspired Multiscale Nonlocal-Attention Network. IEEE Geosci. Remote Sens. Lett. 2023, 20, 1–5.

- Iftene, M.; Arabi, M.E.A.; Karoui, M.S. Transfering Super Resolution Convolutional Neural Network for Remote Sensing Data Sharpening. In Proceedings of the 2018 9th Workshop on Hyperspectral Image and Signal Processing: Evolution in Remote Sensing (WHISPERS), Amsterdam, The Netherlands, 23–26 September 2018.

- Yang, J.; Fu, X.; Hu, Y.; Huang, Y.; Ding, X.; Paisley, J. PanNet: A Deep Network Architecture for Pan-Sharpening. In Proceedings of the 2017 IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 1753–1761.

- Peng, J.; Liu, L.; Wang, J.; Zhang, E.; Zhu, X.; Zhang, Y.; Feng, J.; Jiao, L. PSMD-Net: A Novel Pan-Sharpening Method Based on a Multiscale Dense Network. IEEE Trans. Geosci. Remote Sens. 2021, 59, 4957–4971.

- Zheng, Y.; Li, J.; Li, Y.; Cao, K.; Wang, K. Deep Residual Learning for Boosting the Accuracy of Hyperspectral Pansharpening. IEEE Geosci. Remote Sens. Lett. 2020, 17, 1435–1439.

- Aiazzi, B.; Baronti, S.; Selva, M. Improving Component Substitution Pansharpening through Multivariate Regression of MS plus Pan Data. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3230–3239.

- Masi, G.; Cozzolino, D.; Verdoliva, L.; Scarpa, G. Pansharpening by Convolutional Neural Networks. Remote Sens. 2016, 8, 594.

- Liu, X.; Liu, Q.; Wang, Y. Remote Sensing Image Fusion Based on Two-Stream Fusion Network. Inf. Fusion 2020, 55, 1–15.

- Yuan, Q.; Wei, Y.; Meng, X.; Shen, H.; Zhang, L. A Multiscale and Multidepth Convolutional Neural Network for Remote Sensing Imagery Pan-Sharpening. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 978–989.

- Cai, J.; Huang, B. Super-Resolution-Guided Progressive Pansharpening Based on a Deep Convolutional Neural Network. IEEE Trans. Geosci. Remote Sens. 2021, 59, 5206–5220.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention Is All You Need. In Proceedings of the Advances in Neural Information Processing Systems 30 (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017; Volume 30.

- Han, K.; Wang, Y.; Chen, H.; Chen, X.; Guo, J.; Liu, Z.; Tang, Y.; Xiao, A.; Xu, C.; Xu, Y.; et al. A Survey on Vision Transformer. IEEE Trans. Pattern Anal. Mach. Intell. 2023, 45, 87–110.

- Liu, Z.; Lin, Y.; Cao, Y.; Hu, H.; Wei, Y.; Zhang, Z.; Lin, S.; Guo, B. Swin Transformer: Hierarchical Vision Transformer Using Shifted Windows. In Proceedings of the 2021 IEEE/CVF International Conference on Computer Vision (ICCV 2021), Montreal, QC, Canada, 10–17 October 2021; pp. 9992–10002.

- Guan, P.; Lam, E.Y. Multistage Dual-Attention Guided Fusion Network for Hyperspectral Pansharpening. IEEE Trans. Geosci. Remote Sens. 2022, 60, 1–14.

- Wald, L.; Ranchin, T.; Mangolini, M. Fusion of Satellite Images of Different Spatial Resolutions: Assessing the Quality of Resulting Images. Photogramm. Eng. Remote Sens. 1997, 63, 691–699.

- Plaza, A.; Benediktsson, J.A.; Boardman, J.W.; Brazile, J.; Bruzzone, L.; Camps-Valls, G.; Chanussot, J.; Fauvel, M.; Gamba, P.; Gualtieri, A.; et al. Recent Advances in Techniques for Hyperspectral Image Processing. Remote Sens. Environ. 2009, 113, S110–S122.

- Ungar, S.; Pearlman, J.; Mendenhall, J.; Reuter, D. Overview of the Earth Observing One (EO-1) Mission. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1149–1159.

- Yokoya, N.; Iwasaki, A. Airborne Hyperspectral Data over Chikusei; Technical report SAL-2016-05-27; Space Application Laboratory, The University of Tokyo: Tokyo, Japan, 2016.

- Alparone, L.; Wald, L.; Chanussot, J.; Thomas, C.; Gamba, P.; Bruce, L.M. Comparison of Pansharpening Algorithms: Outcome of the 2006 GRS-S Data-Fusion Contest. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3012–3021.

- Yuhas, R.H.; Goetz, A.F.H. Discrimination among Semi-Arid Landscape Endmembers Using the Spectral Angle Mapper (SAM) Algorithm. In Proceedings of the Summaries of the Third Annual JPL Airborne Geoscience Workshop, Pasadena, CA, USA, 7 June 1992.

- Karunasingha, D.S.K. Root Mean Square Error or Mean Absolute Error? Use Their Ratio as Well. Inf. Sci. 2022, 585, 609–629.

- Wald, L. Data Fusion. Definitions and Architectures: Fusion of Images of Different Spatial Resolutions. In Proceedings of the Fusion of Earth Data: Merging Point Measurements, Raster Maps, and Remotely Sensed Images, Washington, DC, USA, 11 October 2002.

- Nan, Y.; Zhang, H.; Zeng, Y.; Zheng, J.; Ge, Y. Intelligent Detection of Multi-Class Pitaya Fruits in Target Picking Row Based on WGB-YOLO Network. Comput. Electron. Agric. 2023, 208.

- Zhou, J.; Zhang, Y.; Wang, J. RDE-YOLOv7: An Improved Model Based on YOLOv7 for Better Performance in Detecting Dragon Fruits. Agronomy 2023, 13, 1042.

- Li, A.; Zhao, Y.; Zheng, Z. Novel Recursive BiFPN Combining with Swin Transformer for Wildland Fire Smoke Detection. Forests 2022, 13, 2032.

| Experimental Environment | Version |
|---|---|
| Deep learning framework | PyTorch 1.13.1 |
| Programming language | Python 3.8 |
| Operating system | Windows 11 |
| GPU | RTX 3090 |
| CPU | Intel(R) Xeon(R) Gold 6330 CPU |

| Models | CC | SAM | RMSE | ERGAS |
|---|---|---|---|---|
| PCA [24] | 0.651 | 16.445 | 6.432 | 9.71 |
| GS [25] | 0.964 | 8.924 | 4.432 | 7.56 |
| IHS [26] | 0.468 | 8.994 | 4.681 | 7.74 |
| PNN [27] | 0.978 | 5.132 | 1.522 | 3.96 |
| TFNET [28] | 0.980 | 4.797 | 1.388 | 3.26 |
| MSDCNN [29] | 0.980 | 4.867 | 1.361 | 3.12 |
| SRPPNN [30] | 0.979 | 5.085 | 1.452 | 3.85 |
| Ours | 0.984 | 4.394 | 1.180 | 2.64 |

| Models | CC | SAM | RMSE | ERGAS |
|---|---|---|---|---|
| PCA [20] | 0.800 | 2.717 | 5.92 | 7.74 |
| GS [21] | 0.958 | 3.251 | 9.08 | 13.55 |
| IHS [22] | 0.754 | 1.839 | 6.19 | 8.17 |
| PNN [23] | 0.961 | 1.814 | 1.278 | 2.25 |
| TFNET [24] | 0.962 | 1.727 | 1.226 | 2.12 |
| MSDCNN [25] | 0.941 | 2.350 | 1.949 | 2.88 |
| SRPPNN [26] | 0.960 | 1.819 | 1.322 | 2.16 |
| Ours | 0.977 | 1.483 | 1.074 | 1.93 |

| Models | CC | SAM | RMSE | ERGAS |
|---|---|---|---|---|
| PCA [20] | 0.548 | 26.513 | 8.28 | 18.52 |
| GS [21] | 0.658 | 26.178 | 6.71 | 13.41 |
| IHS [22] | 0.672 | 26.566 | 6.75 | 13.64 |
| PNN [23] | 0.970 | 2.778 | 1.523 | 4.41 |
| TFNET [24] | 0.978 | 2.252 | 1.224 | 4.11 |
| MSDCNN [25] | 0.964 | 3.094 | 1.704 | 5.41 |
| SRPPNN [26] | 0.977 | 2.341 | 1.292 | 3.98 |
| Ours | 0.981 | 2.219 | 1.223 | 3.85 |

| Model | RMDB-pan | RMDB-MS | CC | SAM | RMSE | ERGAS | | |
|---|---|---|---|---|---|---|---|---|
| Swin–MRDB | √ | × | √ | √ | 0.977 | 1.483 | 1.074 | 1.93 |
| | × | √ | √ | √ | 0.892 | 2.461 | 1.942 | 3.38 |
| | √ | × | √ | × | 0.965 | 1.533 | 1.214 | 2.19 |
| | √ | × | × | √ | 0.962 | 1.634 | 1.245 | 2.25 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Rong, Z.; Jiang, X.; Huang, L.; Zhou, H. Swin–MRDB: Pan-Sharpening Model Based on the Swin Transformer and Multi-Scale CNN. Appl. Sci. 2023, 13, 9022. https://doi.org/10.3390/app13159022