Marine Vessel Classification and Multivariate Trajectories Forecasting Using Metaheuristics-Optimized eXtreme Gradient Boosting and Recurrent Neural Networks

Abstract

1. Introduction

- The introduction of a robust framework for tackling pressing issues in maritime vessel identification and tracking;

- The proposal of a robust XGBoost-based mechanism for marine vessel identification and classification;

- The proposal of a time series-based approach that leverages LSTM networks for vessel trajectory forecasting;

- The introduction of a boosted PSO (BPSO) designed specifically for the hyperparameter tuning of XGBoost and LSTM;

- The interpretation of the best-performing classification model using explainable AI techniques, to better understand feature importance in vessel classification.

2. Background

2.1. Extreme Gradient Boosting Algorithm

2.2. Long Short-Term Memory Model

Hyperparameters of LSTM

2.3. Metaheuristic Methods and Related Works

2.4. Shapley Additive Explanations

3. Introduced Modified Metaheuristics

3.1. PSO
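As a point of reference, the canonical PSO update (inertia term plus cognitive and social attraction) can be sketched as below. This is a minimal illustration under stated assumptions, not the boosted variant proposed in this work: the function name `pso`, the coefficient values `w`, `c1`, `c2`, the swarm size, and the toy `sphere` objective are all hypothetical choices made for the example. In a tuning setting, the objective would instead train a model with the candidate hyperparameters and return its validation error.

```python
import random


def pso(objective, bounds, n_particles=20, n_iter=100,
        w=0.7, c1=1.5, c2=1.5, seed=0):
    """Minimise `objective` over box `bounds` with canonical PSO (illustrative)."""
    rng = random.Random(seed)
    dim = len(bounds)
    # Random initial positions inside the bounds, zero initial velocities.
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_val = [objective(p) for p in pos]
    g = min(range(n_particles), key=pbest_val.__getitem__)
    gbest, gbest_val = pbest[g][:], pbest_val[g]

    for _ in range(n_iter):
        for i in range(n_particles):
            for d, (lo, hi) in enumerate(bounds):
                r1, r2 = rng.random(), rng.random()
                # Velocity: inertia + pull to personal best + pull to global best.
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                # Move and clamp to the search box.
                pos[i][d] = min(hi, max(lo, pos[i][d] + vel[i][d]))
            val = objective(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val


def sphere(x):
    """Toy objective standing in for a model's validation error."""
    return sum(v * v for v in x)


best, best_val = pso(sphere, [(-5.0, 5.0), (-5.0, 5.0)])
```

The fixed seed only makes the sketch reproducible; any comparison between optimizers would need repeated independent runs.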

3.2. Modified PSO Approach

3.2.1. Chaotic Elite Learning

3.2.2. Lévy Flight
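One common way to draw Lévy-distributed step lengths in metaheuristics is Mantegna's algorithm, sketched below. Whether the modified PSO uses exactly this generator is an assumption; the function names `levy_sigma`, `levy_step`, and `levy_perturb`, the stability index `beta = 1.5`, and the step `scale` are hypothetical and chosen only for illustration.

```python
import math
import random


def levy_sigma(beta):
    """Standard deviation of the numerator Gaussian in Mantegna's algorithm."""
    num = math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
    den = math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2)
    return (num / den) ** (1 / beta)


def levy_step(beta=1.5, rng=random):
    """Draw one heavy-tailed step: u / |v|^(1/beta), with u, v Gaussian."""
    u = rng.gauss(0.0, levy_sigma(beta))
    v = rng.gauss(0.0, 1.0)
    while v == 0.0:  # guard against division by zero (practically never hit)
        v = rng.gauss(0.0, 1.0)
    return u / abs(v) ** (1 / beta)


def levy_perturb(position, bounds, scale=0.01, beta=1.5, rng=random):
    """Perturb each coordinate by a scaled Levy step and clamp to bounds."""
    return [min(hi, max(lo, x + scale * (hi - lo) * levy_step(beta, rng)))
            for x, (lo, hi) in zip(position, bounds)]


rng = random.Random(42)
steps = [levy_step(1.5, rng) for _ in range(1000)]
moved = levy_perturb([0.5, 5.0], [(0.0, 1.0), (1.0, 10.0)], rng=rng)
```

Most draws are small, with occasional very large jumps; that heavy tail is what makes Lévy steps useful for escaping local optima.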

3.3. Modified PSO Pseudo-Code

Algorithm 1. Pseudo-code of the BPSO metaheuristics

4. Experimental Environment and Preliminaries

4.1. Datasets and Preprocessing

4.2. Experimental Setup

Metrics Used for Validation and Comparative Analysis

5. Experimental Outcomes, Comparative Analysis, and Discussion

5.1. Experimental Observations and Comparative Analysis

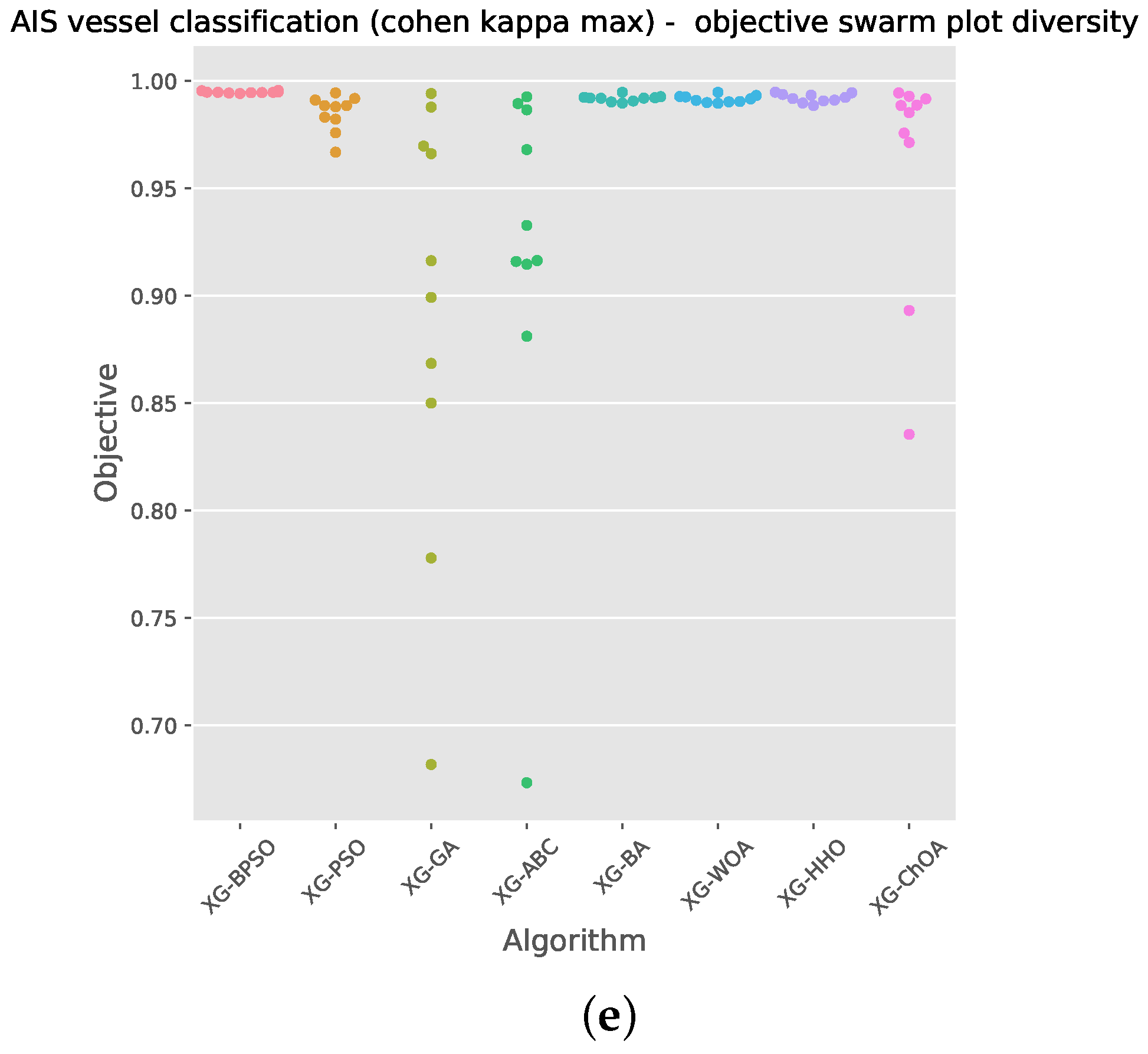

5.2. Experiment 1: Marine Vessel Classification

5.3. Experiment 2: Marine Trajectory Forecasting

5.4. Statistical Evaluation

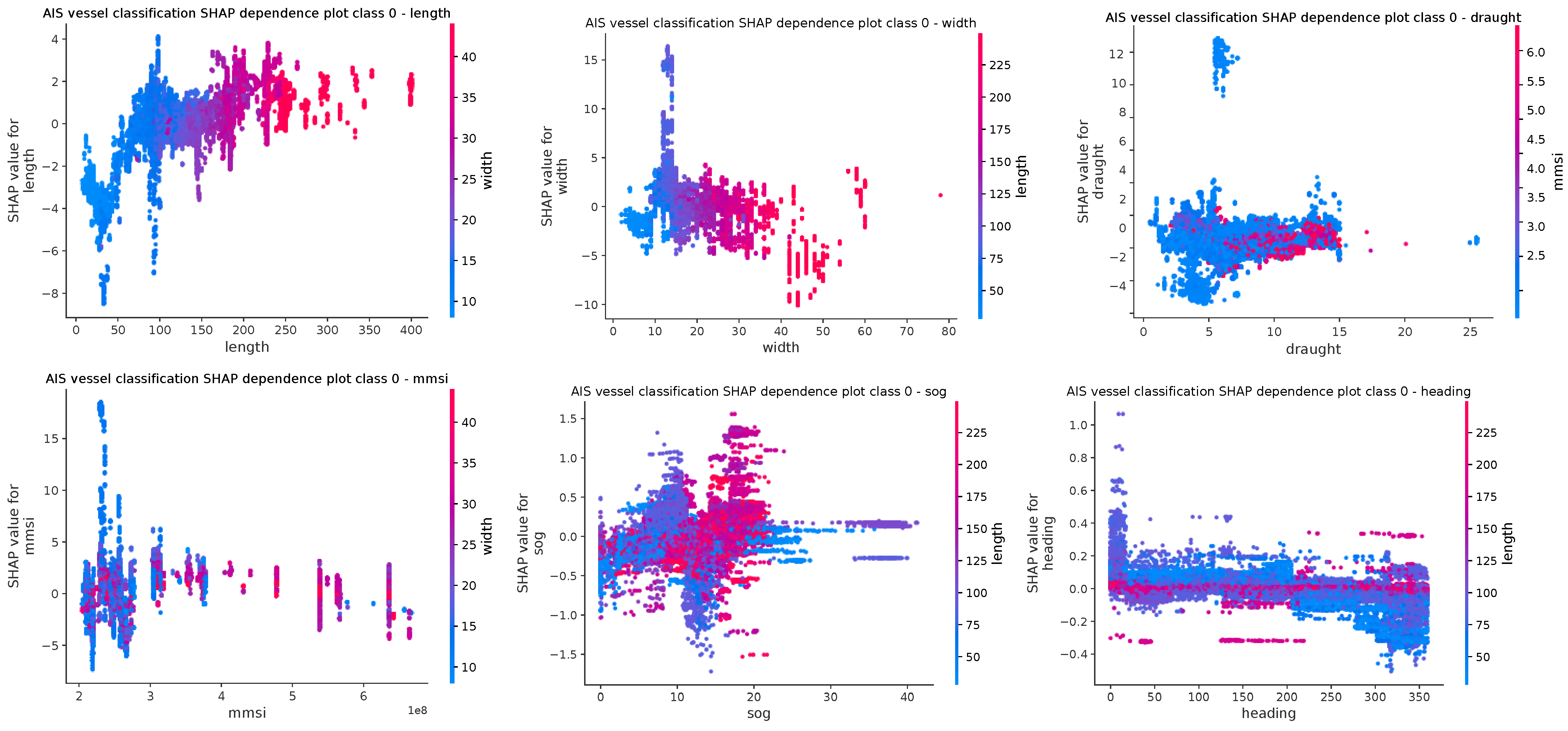

5.5. Top-Performing Model Outcome Interpretation

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Method | Best | Worst | Mean | Median | Std | Var |
|---|---|---|---|---|---|---|
| XG-BPSO | 0.002789 | 0.003470 | 0.003100 | 0.003130 | 0.000202 | |
| XG-PSO | 0.003096 | 0.005375 | 0.004010 | 0.003929 | 0.000739 | |
| XG-GA | 0.004354 | 0.015614 | 0.007037 | 0.005511 | 0.003554 | |
| XG-ABC | 0.004898 | 0.007586 | 0.005736 | 0.005477 | 0.000817 | |
| XG-BA | 0.003368 | 0.005171 | 0.004116 | 0.004184 | 0.000559 | |
| XG-WOA | 0.003198 | 0.004762 | 0.004065 | 0.004133 | 0.000463 | |
| XG-HHO | 0.003674 | 0.007076 | 0.004915 | 0.004286 | 0.001205 | |
| XG-ChOA | 0.003062 | 0.009899 | 0.004720 | 0.004133 | 0.002032 | |

| Method | Best | Worst | Mean | Median | Std | Var |
|---|---|---|---|---|---|---|
| XG-BPSO | 0.995391 | 0.994267 | 0.994878 | 0.994829 | 0.000335 | |
| XG-PSO | 0.994886 | 0.991114 | 0.993374 | 0.993507 | 0.001223 | |
| XG-GA | 0.992803 | 0.974130 | 0.988359 | 0.990888 | 0.005892 | |
| XG-ABC | 0.991899 | 0.987456 | 0.990516 | 0.990946 | 0.001351 | |
| XG-BA | 0.994435 | 0.991452 | 0.993197 | 0.993085 | 0.000925 | |
| XG-WOA | 0.994717 | 0.992126 | 0.993282 | 0.993169 | 0.000766 | |
| XG-HHO | 0.993928 | 0.988296 | 0.991873 | 0.992917 | 0.001995 | |
| XG-ChOA | 0.994942 | 0.983617 | 0.992197 | 0.993169 | 0.003366 | |

| Class | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| Cargo | 0.998406 | 0.999527 | 0.998966 | 16921 |
| Dredging | 0.987097 | 0.995662 | 0.991361 | 461 |
| Fishing | 1.000000 | 0.994460 | 0.997222 | 1083 |
| HSC | 0.985207 | 0.994003 | 0.989599 | 335 |
| Law enforcement | 1.000000 | 1.000000 | 1.000000 | 136 |
| Military | 0.987085 | 0.988909 | 0.987996 | 541 |
| Passenger | 0.998011 | 0.995370 | 0.996689 | 1512 |
| Pilot | 1.000000 | 0.996139 | 0.998066 | 259 |
| Pleasure | 1.000000 | 1.000000 | 1.000000 | 7 |
| Port tender | 1.000000 | 1.000000 | 1.000000 | 5 |
| Reserved | 0.937500 | 0.849057 | 0.891089 | 53 |
| SAR | 1.000000 | 0.933333 | 0.965517 | 45 |
| Sailing | 0.900000 | 0.642857 | 0.750000 | 14 |
| Tanker | 0.998580 | 0.997730 | 0.998155 | 7050 |
| Towing | 0.880000 | 0.904110 | 0.891892 | 73 |
| Towing long/wide | 0.875000 | 0.980000 | 0.924528 | 50 |
| Tug | 0.995272 | 0.988263 | 0.991755 | 852 |
| accuracy | 0.997211 | 0.997211 | 0.997211 | 0.9972106 |
| macro avg | 0.973068 | 0.956438 | 0.963108 | 29397 |
| weighted avg | 0.997223 | 0.997212 | 0.997194 | 29397 |
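The summary rows of the report above follow from the per-class rows: the F1-score is the harmonic mean of precision and recall, the macro average weights every class equally, and the weighted average weights each class by its support. The short check below recomputes a few of these from the tabulated values. It is a sketch for illustration only; the variable and function names are hypothetical, and small differences in the last decimal come from the table's rounding.

```python
# (precision, recall, support) per class, copied from the table above.
rows = {
    "Cargo": (0.998406, 0.999527, 16921),
    "Dredging": (0.987097, 0.995662, 461),
    "Fishing": (1.000000, 0.994460, 1083),
    "HSC": (0.985207, 0.994003, 335),
    "Law enforcement": (1.000000, 1.000000, 136),
    "Military": (0.987085, 0.988909, 541),
    "Passenger": (0.998011, 0.995370, 1512),
    "Pilot": (1.000000, 0.996139, 259),
    "Pleasure": (1.000000, 1.000000, 7),
    "Port tender": (1.000000, 1.000000, 5),
    "Reserved": (0.937500, 0.849057, 53),
    "SAR": (1.000000, 0.933333, 45),
    "Sailing": (0.900000, 0.642857, 14),
    "Tanker": (0.998580, 0.997730, 7050),
    "Towing": (0.880000, 0.904110, 73),
    "Towing long/wide": (0.875000, 0.980000, 50),
    "Tug": (0.995272, 0.988263, 852),
}


def f1(precision, recall):
    """Harmonic mean of precision and recall."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0


total_support = sum(s for _, _, s in rows.values())

# Macro average: unweighted mean over classes.
macro_precision = sum(p for p, _, _ in rows.values()) / len(rows)

# Weighted average: mean weighted by class support. For recall this
# equals overall accuracy (correct predictions / all samples).
weighted_recall = sum(r * s for _, r, s in rows.values()) / total_support

cargo_f1 = f1(*rows["Cargo"][:2])
sailing_f1 = f1(*rows["Sailing"][:2])

print(f"macro precision  {macro_precision:.6f}")
print(f"weighted recall  {weighted_recall:.6f}")
print(f"Cargo F1 {cargo_f1:.6f}, Sailing F1 {sailing_f1:.6f}")
```

The gap between the macro and weighted rows reflects class imbalance: rare classes such as Sailing (14 samples) pull the macro average down while barely affecting the weighted one.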

| Method | Learning Rate | Min Child Weight | Subsample | Colsample by Tree | Max Depth | Gamma |
|---|---|---|---|---|---|---|
| XG-BPSO | 0.593383 | 1.000000 | 0.984577 | 1.000000 | 10 | 0.000000 |
| XG-PSO | 0.716738 | 2.148387 | 1.000000 | 0.931689 | 10 | 0.239401 |
| XG-GA | 0.741573 | 1.000000 | 0.944507 | 0.685084 | 9 | 0.382662 |
| XG-ABC | 0.709378 | 1.860859 | 0.684326 | 0.710326 | 10 | 0.000000 |
| XG-BA | 0.724748 | 3.134685 | 1.000000 | 1.000000 | 10 | 0.800000 |
| XG-WOA | 0.770394 | 3.170319 | 1.000000 | 1.000000 | 10 | 0.279516 |
| XG-HHO | 0.688403 | 2.552526 | 1.000000 | 1.000000 | 10 | 0.751155 |
| XG-ChOA | 0.642902 | 1.146574 | 1.000000 | 0.931855 | 10 | 0.637534 |

| Method | Best | Worst | Mean | Median | Std | Var |
|---|---|---|---|---|---|---|
| XG-BPSO | 0.995561 | 0.993814 | 0.994878 | 0.994970 | 0.000531 | |
| XG-PSO | 0.994379 | 0.990388 | 0.993022 | 0.993561 | 0.001328 | |
| XG-GA | 0.994098 | 0.982394 | 0.990003 | 0.990381 | 0.003349 | |
| XG-ABC | 0.992582 | 0.983179 | 0.989497 | 0.990521 | 0.002917 | |
| XG-BA | 0.994660 | 0.990496 | 0.993149 | 0.993396 | 0.001194 | |
| XG-WOA | 0.994660 | 0.989709 | 0.993029 | 0.993369 | 0.001442 | |
| XG-HHO | 0.994715 | 0.991679 | 0.993612 | 0.993901 | 0.000850 | |
| XG-ChOA | 0.994379 | 0.988417 | 0.992685 | 0.993227 | 0.001796 | |

| Method | Best | Worst | Mean | Median | Std | Var |
|---|---|---|---|---|---|---|
| XG-BPSO | 0.002687 | 0.003742 | 0.003100 | 0.003045 | 0.000321 | |
| XG-PSO | 0.003402 | 0.005817 | 0.004222 | 0.003895 | 0.000803 | |
| XG-GA | 0.003572 | 0.010647 | 0.006047 | 0.005817 | 0.002024 | |
| XG-ABC | 0.004490 | 0.010171 | 0.006353 | 0.005732 | 0.001763 | |
| XG-BA | 0.003232 | 0.005749 | 0.004146 | 0.003997 | 0.000721 | |
| XG-WOA | 0.003232 | 0.006225 | 0.004218 | 0.004014 | 0.000871 | |
| XG-HHO | 0.003198 | 0.005035 | 0.003865 | 0.003691 | 0.000514 | |
| XG-ChOA | 0.003402 | 0.007008 | 0.004426 | 0.004099 | 0.001086 | |

| Class | Precision | Recall | F1-Score | Support |
|---|---|---|---|---|
| Cargo | 0.998818 | 0.999114 | 0.998966 | 16921 |
| Dredging | 0.984979 | 0.995662 | 0.990291 | 461 |
| Fishing | 1.000000 | 0.995383 | 0.997686 | 1083 |
| HSC | 0.979412 | 0.994030 | 0.986667 | 335 |
| Law enforcement | 1.000000 | 0.985294 | 0.992593 | 136 |
| Military | 0.992647 | 0.998152 | 0.995392 | 541 |
| Passenger | 0.999336 | 0.996032 | 0.997681 | 1512 |
| Pilot | 0.992337 | 1.000000 | 0.996154 | 259 |
| Pleasure | 1.000000 | 1.000000 | 1.000000 | 7 |
| Port tender | 1.000000 | 1.000000 | 1.000000 | 5 |
| Reserved | 0.921569 | 0.886792 | 0.903846 | 53 |
| SAR | 1.000000 | 0.888889 | 0.941176 | 45 |
| Sailing | 1.000000 | 0.714286 | 0.833333 | 14 |
| Tanker | 0.997732 | 0.998298 | 0.998015 | 7050 |
| Towing | 0.942857 | 0.904110 | 0.923077 | 73 |
| Towing long/wide | 0.842105 | 0.960000 | 0.897196 | 50 |
| Tug | 0.994097 | 0.988263 | 0.991171 | 852 |
| accuracy | 0.997313 | 0.997313 | 0.997313 | 0.997313 |
| macro avg | 0.979170 | 0.959077 | 0.967250 | 29397 |
| weighted avg | 0.997346 | 0.997313 | 0.997303 | 29397 |

| Method | Learning Rate | Min Child Weight | Subsample | Colsample by Tree | Max Depth | Gamma |
|---|---|---|---|---|---|---|
| XG-BPSO | 0.828630 | 2.737313 | 1.000000 | 0.868831 | 10 | 0.071057 |
| XG-PSO | 0.744281 | 3.435928 | 1.000000 | 0.872910 | 10 | 0.785282 |
| XG-GA | 0.730659 | 3.499590 | 1.000000 | 0.872590 | 10 | 0.256810 |
| XG-ABC | 0.681233 | 1.000000 | 1.000000 | 0.903165 | 9 | 0.550955 |
| XG-BA | 0.900000 | 6.501873 | 1.000000 | 0.871536 | 10 | 0.000000 |
| XG-WOA | 0.813991 | 3.414476 | 1.000000 | 0.860721 | 10 | 0.800000 |
| XG-HHO | 0.707112 | 2.440472 | 1.000000 | 1.000000 | 10 | 0.260687 |
| XG-ChOA | 0.734595 | 1.990239 | 1.000000 | 0.874517 | 10 | 0.684537 |

| Method | Best | Worst | Mean | Median | Std | Var |
|---|---|---|---|---|---|---|
| LSTM-BPSO | | | | | | |
| LSTM-PSO | | | | | | |
| LSTM-GA | | | | | | |
| LSTM-ABC | | | | | | |
| LSTM-BA | | | | | | |
| LSTM-WOA | | | | | | |
| LSTM-HHO | | | | | | |
| LSTM-ChOA | | | | | | |

| Method | R2 | MAE | MSE | RMSE | IoA | EDE |
|---|---|---|---|---|---|---|
| LSTM-BPSO | 0.997921 | 0.007313 | 0.000098 | 0.009985 | 0.999769 | 0.012385 |
| LSTM-PSO | 0.997912 | 0.006937 | 0.000099 | 0.009987 | 0.999774 | 0.011048 |
| LSTM-GA | 0.997311 | 0.008302 | 0.000128 | 0.011299 | 0.999708 | 0.013484 |
| LSTM-ABC | 0.997361 | 0.008045 | 0.000126 | 0.011216 | 0.999711 | 0.012139 |
| LSTM-BA | 0.997554 | 0.007366 | 0.000117 | 0.010794 | 0.999735 | 0.011591 |
| LSTM-WOA | 0.997002 | 0.009676 | 0.000142 | 0.011930 | 0.999672 | 0.015390 |
| LSTM-HHO | 0.997090 | 0.009045 | 0.000139 | 0.011795 | 0.999679 | 0.013913 |
| LSTM-ChOA | 0.997654 | 0.008605 | 0.000111 | 0.010547 | 0.999744 | 0.013513 |
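For readers less familiar with the regression metrics in the table above, the sketch below computes R2, MAE, MSE, RMSE, and IoA for a single target variable using their standard textbook definitions. It is an illustrative sketch, not the evaluation code behind the reported values: the function name `regression_metrics` and the toy data are hypothetical, IoA is assumed to be Willmott's index of agreement, and EDE is left out because its exact definition cannot be confirmed from the table alone.

```python
import math


def regression_metrics(observed, predicted):
    """Standard single-variable regression metrics (illustrative)."""
    n = len(observed)
    mean_obs = sum(observed) / n
    errors = [p - o for o, p in zip(observed, predicted)]

    sse = sum(e * e for e in errors)            # sum of squared errors
    mse = sse / n
    mae = sum(abs(e) for e in errors) / n

    # R2: share of the observed variance explained by the predictions.
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    r2 = 1.0 - sse / ss_tot

    # Willmott's index of agreement: 1 means perfect agreement.
    ioa_den = sum((abs(p - mean_obs) + abs(o - mean_obs)) ** 2
                  for o, p in zip(observed, predicted))
    ioa = 1.0 - sse / ioa_den

    return {"R2": r2, "MAE": mae, "MSE": mse,
            "RMSE": math.sqrt(mse), "IoA": ioa}


# Toy data: two exact predictions and one that is off by 1.
metrics = regression_metrics([1.0, 2.0, 3.0], [1.0, 2.0, 4.0])
```

For a multivariate forecast, these quantities are typically computed per variable and then aggregated, which is one reason an aggregated RMSE need not equal the square root of an aggregated MSE.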

| Method | Learning Rate | Dropout | Epochs | Layers | Neurons Layer 1 | Neurons Layer 2 |
|---|---|---|---|---|---|---|
| LSTM-BPSO | 0.000656 | 0.005000 | 50 | 1 | 27 | / |
| LSTM-PSO | 0.000125 | 0.020000 | 40 | 1 | 31 | / |
| LSTM-GA | 0.006729 | 0.060654 | 39 | 1 | 22 | / |
| LSTM-ABC | 0.004661 | 0.105701 | 44 | 2 | 16 | 24 |
| LSTM-BA | 0.000086 | 0.005000 | 40 | 1 | 32 | / |
| LSTM-WOA | 0.003918 | 0.154873 | 34 | 1 | 27 | / |
| LSTM-HHO | 0.001845 | 0.093174 | 41 | 1 | 26 | / |
| LSTM-ChOA | 0.002802 | 0.144773 | 46 | 1 | 20 | / |

| Problem | BPSO | PSO | GA | ABC | BA | WOA | HHO | ChOA |
|---|---|---|---|---|---|---|---|---|
| Vessel error | 0.032 | 0.041 | 0.046 | 0.018 | 0.021 | 0.033 | 0.035 | 0.040 |
| Vessel kappa | 0.043 | 0.015 | 0.044 | 0.031 | 0.032 | 0.038 | 0.021 | 0.035 |
| Trajectory | 0.011 | 0.003 | 0.015 | 0.009 | 0.019 | 0.006 | 0.010 | 0.013 |

| Problem/p-Values | PSO | GA | ABC | BA | WOA | HHO | ChOA |
|---|---|---|---|---|---|---|---|
| Vessel error | 0.042 | 0.003 | 0.018 | 0.037 | 0.04 | 0.027 | 0.031 |
| Vessel kappa | 0.043 | 0.027 | 0.023 | 0.045 | 0.044 | 0.047 | 0.036 |
| Trajectory | 0.045 | 0.007 | 0.041 | 0.054 | 0.024 | 0.044 | 0.032 |
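The p-values in the table above can be screened against a significance threshold as sketched below. Two assumptions are made here and should be checked against the statistical evaluation section: that each column holds the p-value of a pairwise comparison between BPSO and the named optimizer, and that the threshold is the conventional 0.05. The names `ALPHA`, `p_values`, and `not_significant` are hypothetical.

```python
ALPHA = 0.05  # assumed significance threshold (conventional choice)

# p-values copied from the table above, keyed by problem and optimizer.
p_values = {
    "Vessel error": {"PSO": 0.042, "GA": 0.003, "ABC": 0.018, "BA": 0.037,
                     "WOA": 0.04, "HHO": 0.027, "ChOA": 0.031},
    "Vessel kappa": {"PSO": 0.043, "GA": 0.027, "ABC": 0.023, "BA": 0.045,
                     "WOA": 0.044, "HHO": 0.047, "ChOA": 0.036},
    "Trajectory": {"PSO": 0.045, "GA": 0.007, "ABC": 0.041, "BA": 0.054,
                   "WOA": 0.024, "HHO": 0.044, "ChOA": 0.032},
}

# Comparisons where the difference is NOT significant at the chosen level.
not_significant = [
    (problem, method)
    for problem, row in p_values.items()
    for method, p in row.items()
    if p >= ALPHA
]

for problem, method in not_significant:
    print(f"{problem}: BPSO vs {method} not significant at alpha={ALPHA}")
```

Under these assumptions, every comparison falls below the threshold except BA on the trajectory problem (p = 0.054), so that single pairing should be reported as not statistically significant.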

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Petrovic, A.; Damaševičius, R.; Jovanovic, L.; Toskovic, A.; Simic, V.; Bacanin, N.; Zivkovic, M.; Spalević, P. Marine Vessel Classification and Multivariate Trajectories Forecasting Using Metaheuristics-Optimized eXtreme Gradient Boosting and Recurrent Neural Networks. Appl. Sci. 2023, 13, 9181. https://doi.org/10.3390/app13169181
