Enhancing User Engagement in Shared Autonomous Vehicles: An Innovative Gesture-Based Windshield Interaction System

Abstract

1. Introduction

2. Materials and Methods

2.1. Graphical User Interface

- Conducting stylistic research on existing interface systems in cars to establish the UI’s tone of voice, finalize the interface’s color scheme, and select appropriate icons [15];

- Defining and organizing valuable information for the user during the driving experience, ensuring it aligns with the service provided;

- Simultaneously working on GUI design and implementing the haptic gesture system to ensure interface alignment and consistent user experience;

- Iteratively testing design proposals in a virtual environment to simulate and assess the effectiveness of the interface on a HUD.

- The left side is dedicated to the driving information area, including speed and driving mode details;

- Navigation Info is positioned in the middle to reassure the user by displaying the route and road situation, including an interactive map and traffic updates. A 3D representation of the vehicle is projected onto the interface, allowing the user to proactively monitor the car’s state in the external environment;

- The third area on the right is dedicated to the navigation menu, which has been limited to five main items (navigation, calls, music, documents, points of interest) to accommodate finger interaction.

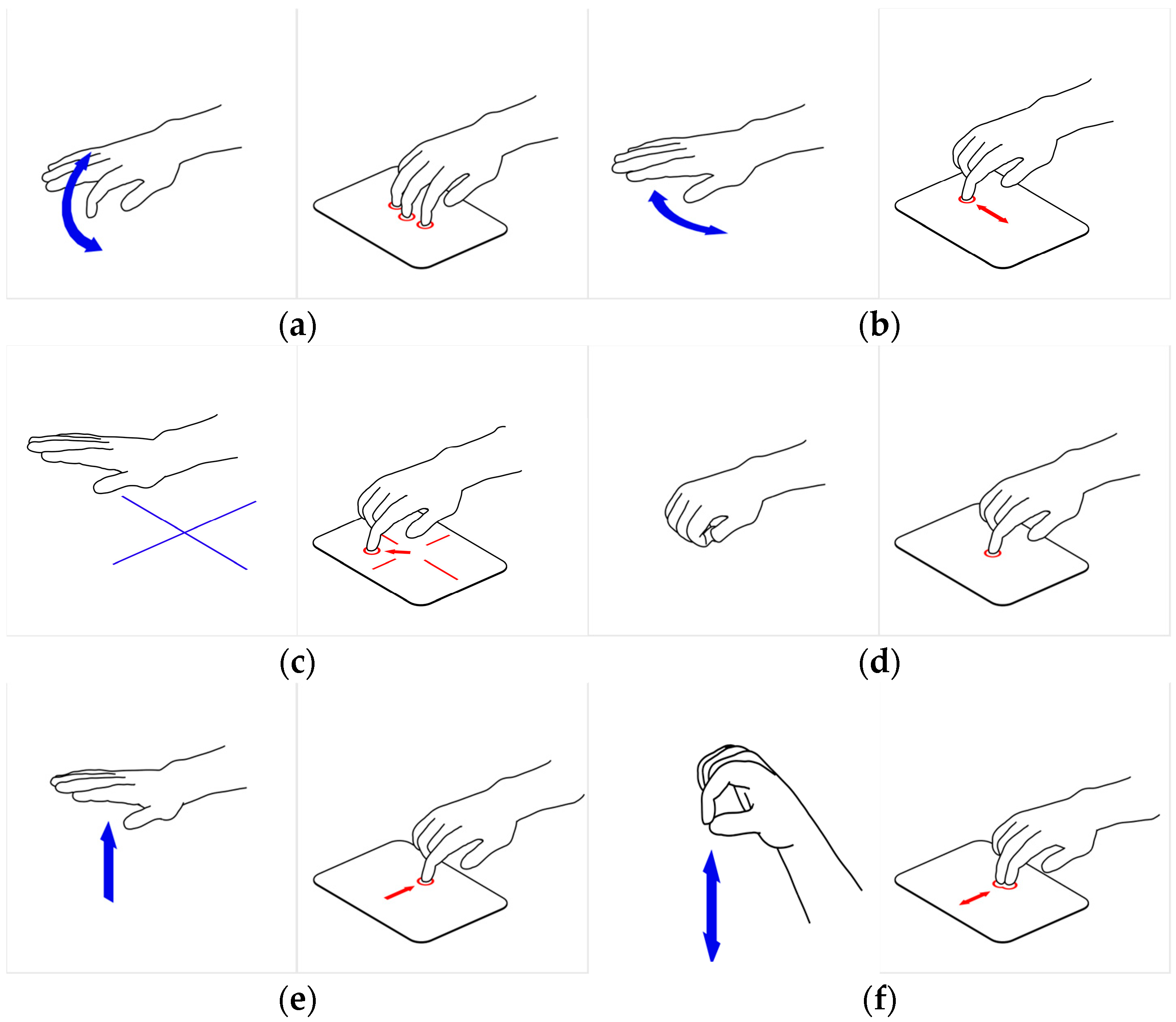

2.2. Interaction Devices and Gestures

- Finger interaction: By keeping the palm and fingers parallel to the floor, the users can activate a specific menu by bending a single finger. For the trackpad, because it is impossible to determine which finger is performing the interaction, a different method has been developed: main sections are activated according to the number of fingers positioned on the trackpad;

- Swipe interaction: The hand movement in 3D space from right to left is detected and interpreted as a direction; this movement is used to swipe between different menu elements on the windscreen. On the trackpad, the same interaction is performed in 2D space;

- Grid interaction: The information on the windshield is selected by moving the hand through a virtual grid positioned parallel to the floor and perpendicular to the windscreen. The interaction is the same for the trackpad, but fingers should swipe in the direction of the element to select it;

- Confirm: To confirm the selected menu elements, the users should perform a grab gesture by bending all the fingers from an open position to a closed one (“fist”). For the trackpad, they only need to touch and release the surface of the pad with a finger;

- Back: To return from a menu to a previous one, the user should rapidly swipe up, returning to the initial position. For the trackpad, they should perform a quick swipe with their fingers from the center of the pad to the bottom part;

- Volume: To modify the music volume, the users should bring two fingers together (“pinch”) and then move their hand along the axis perpendicular to the floor to turn the volume up or down. For the trackpad, they should put two fingers on the pad and then drag them up or down to increase or decrease the volume.
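The interpretation steps described in the list above can be illustrated with a minimal sketch. It is not the authors' implementation: the swipe threshold, the normalized coordinate ranges, the function names, and the order in which finger counts map to menu sections are all assumptions made for illustration (the source lists the five menu items but does not state which finger count opens which one).

```python
from enum import Enum
from typing import Optional, Tuple


class Direction(Enum):
    LEFT = "left"
    RIGHT = "right"
    UP = "up"
    DOWN = "down"


# Hypothetical threshold: minimum travel (normalized units) to count as a swipe.
MIN_SWIPE_DISTANCE = 0.2

# Assumed ordering of the five menu items named in the text.
MENU_SECTIONS = ["navigation", "calls", "music", "documents", "points of interest"]


def swipe_direction(dx: float, dy: float) -> Optional[Direction]:
    """Classify a 2D displacement as a swipe direction, or None if too short."""
    if max(abs(dx), abs(dy)) < MIN_SWIPE_DISTANCE:
        return None
    # The dominant axis decides whether the swipe is horizontal or vertical.
    if abs(dx) >= abs(dy):
        return Direction.RIGHT if dx > 0 else Direction.LEFT
    return Direction.UP if dy > 0 else Direction.DOWN


def section_for_finger_count(count: int) -> Optional[str]:
    """Map the number of fingers resting on the trackpad to a menu section."""
    if 1 <= count <= len(MENU_SECTIONS):
        return MENU_SECTIONS[count - 1]
    return None


def grid_cell(x: float, y: float, columns: int, rows: int) -> Tuple[int, int]:
    """Map a normalized position (0..1 per axis) to a (column, row) grid cell."""
    col = min(int(x * columns), columns - 1)
    row = min(int(y * rows), rows - 1)
    return max(col, 0), max(row, 0)
```

Under these assumptions, the trackpad "Back" gesture would correspond to `swipe_direction` returning `Direction.DOWN`, and the grid interaction to looking up the element stored at the cell returned by `grid_cell`.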

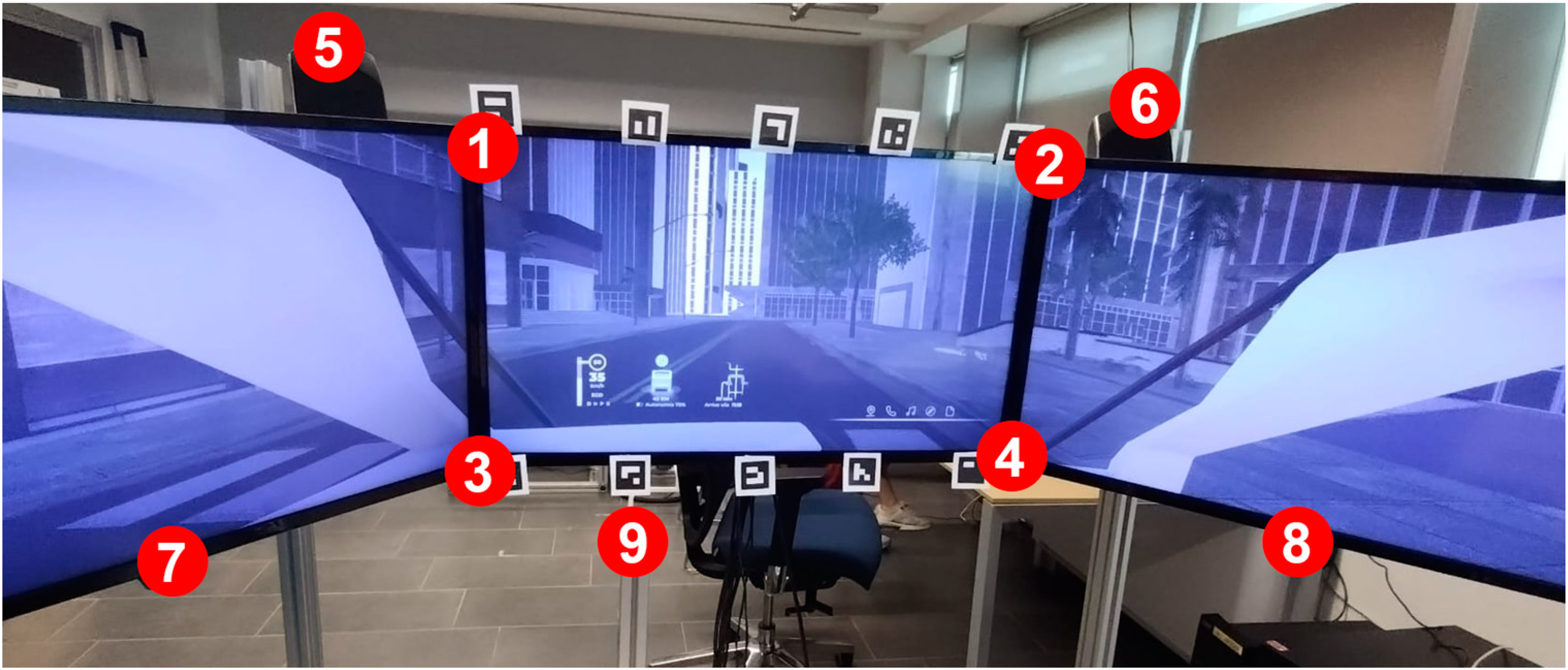

2.3. Driving Simulator

3. Test Campaign

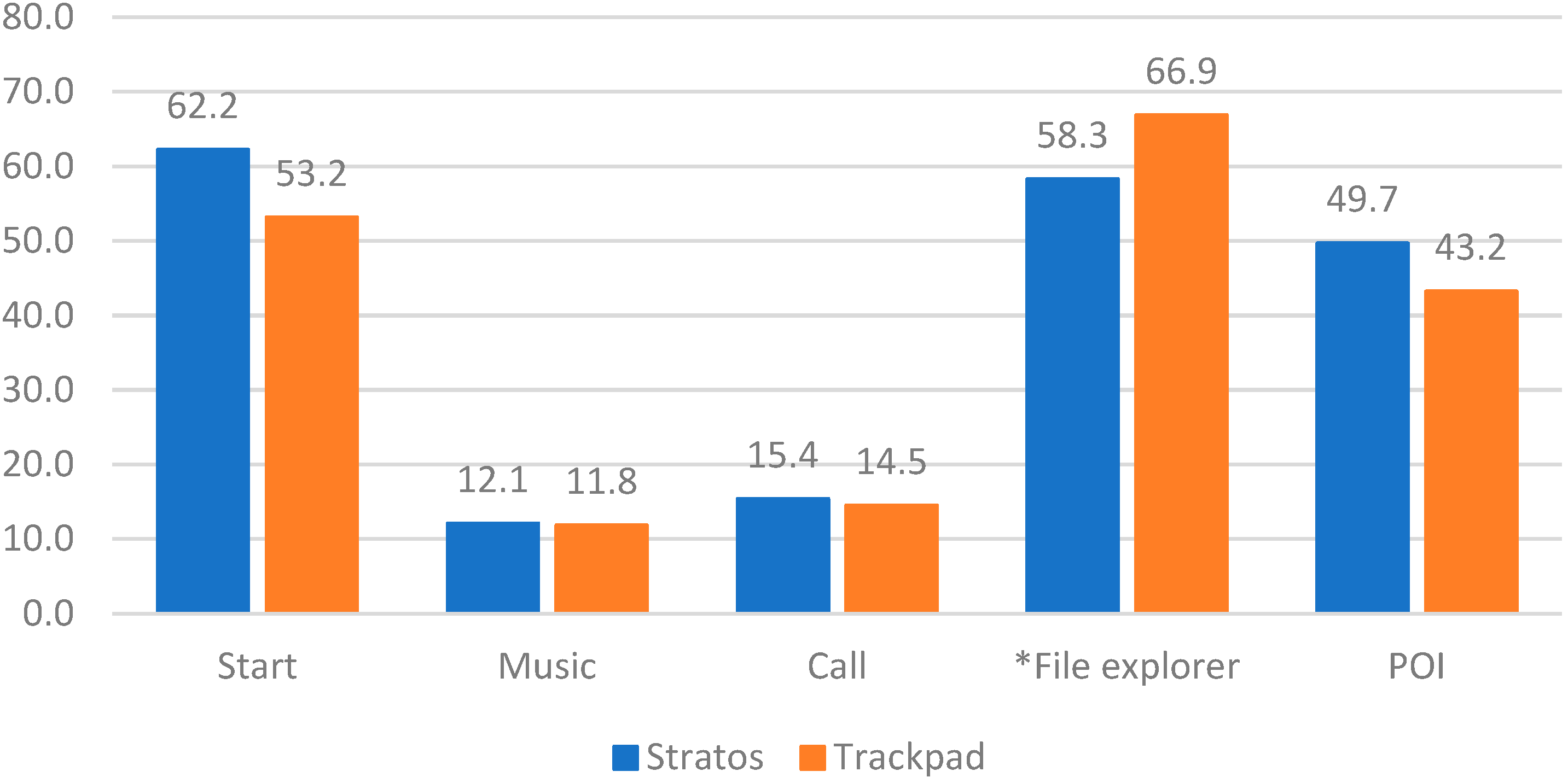

- Start: Set the car’s destination and confirm the trip by starting the car; the interactions used were finger interaction, swipe, and confirm;

- Music: Select a song using finger interaction, swipe, and confirm, and then adjust the music volume with the pinch gesture;

- Call: Take a call and close it with two swipe interactions;

- File Explorer: Browse the file menu to find, open, and skim a presentation file using the finger interaction, swipe, grid, and confirm;

- POI: Select a Point Of Interest from the specific menu using the finger gesture, the grid interaction, and the confirm gesture.

- The participants were introduced to the research and its objectives. They were then requested to complete an anonymous form, which included general details such as age range and nationality and specific information on their driving practices and familiarity with the interaction mode;

- The subjects performed warmup activities to become familiar with the system. During the warmup, the users used a blank scenario with only the GUI and were guided by a moderator on controlling the interface. Once all the gestures had been explained, the users had five more minutes to explore the system freely and gain confidence, as proposed in similar studies [20,21];

- The subjects were asked to wear the eye-tracking device; then, its calibration was performed;

- The actual test then started; the subjects were asked to follow the instructions given by a pre-recorded neutral voice, played only once at the beginning of the task. Eye-tracking data, time, and errors were monitored during the test;

- After completing all the tasks, the participants were instructed to complete the two questionnaires (Raw NASA-TLX and AttrakDiff). Afterward, a short and spontaneous discussion was held to debrief.

Participants

4. Test Results and Insights

4.1. Task Success and Time

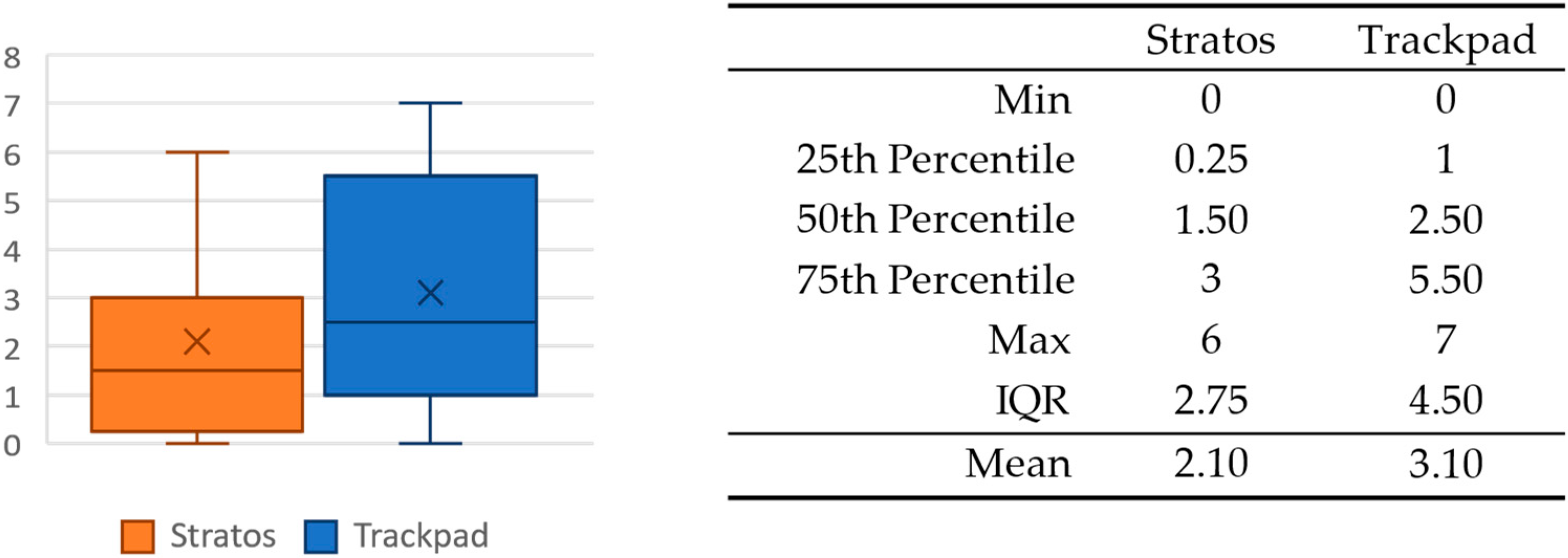

4.2. Number of Distractions Caused by the Interface

4.3. Perceived Physical and Cognitive Load

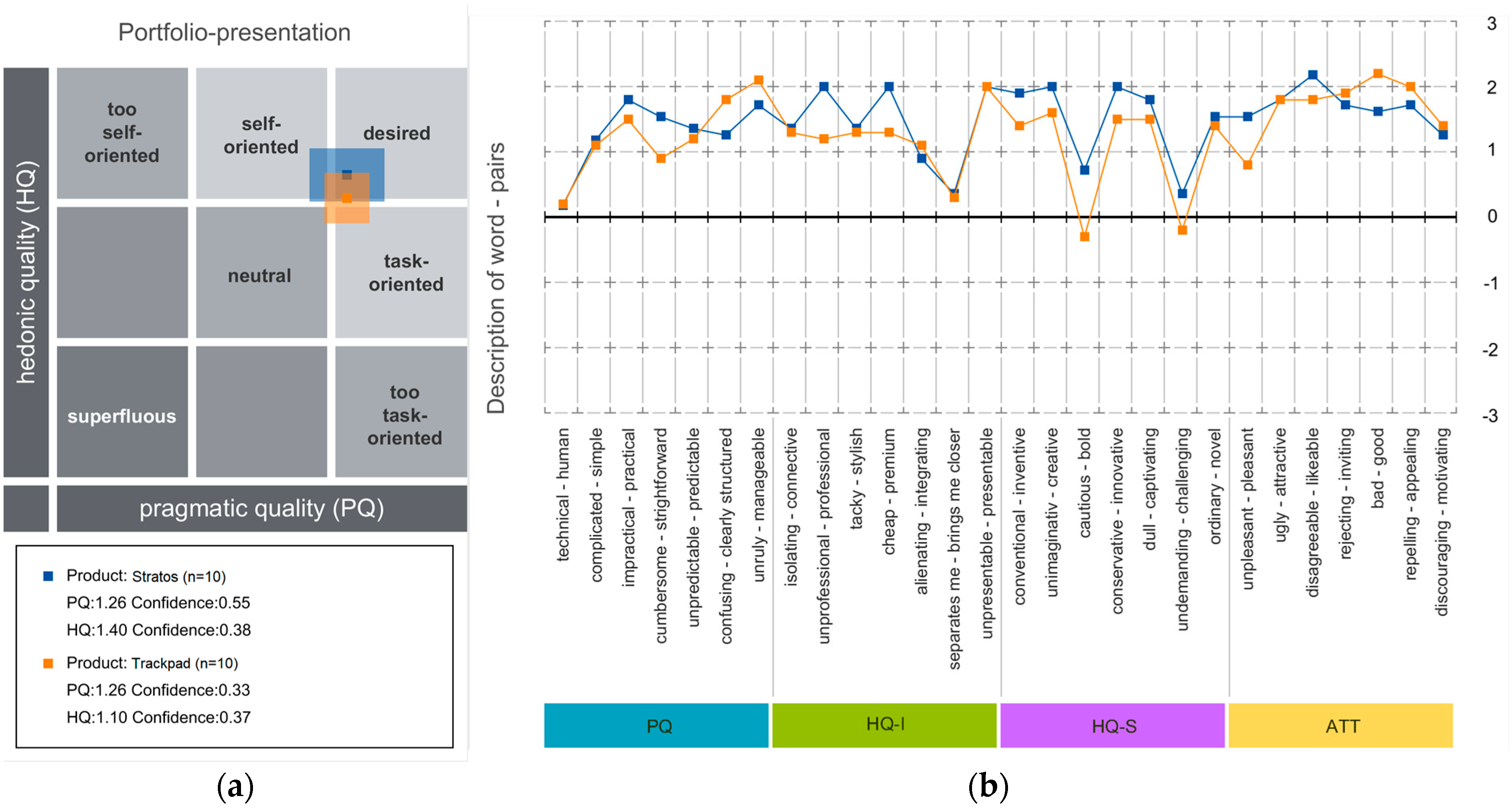

4.4. Attractiveness

4.5. Post-Test Insights: Unstructured Interviews

- Lack of feedback: Users requested more precise feedback from the interface as they often had a perception gap regarding whether they had acted correctly. Particularly for gestures, users emphasized the need for more timely feedback, such as sound or visual references, to help them be more efficient during interaction with the interface;

- UI/UX issues: Several users needed clarification on the menu icons, mistaking the POI icon for the destination icon. Inconsistencies in the interface, such as visual indicators and transparency effects for scrolling, also confused users and disrupted their familiarity with the interface;

- Responsiveness and complexity of gestures: Certain gestures were easier to execute than others. The “grid interaction” proved to be the most challenging and unnatural to master compared to the “swipe interaction”, which is more intuitive and aligns with users’ familiarity with digital devices. On the other hand, the “pinch interaction” for music control received positive feedback, mainly due to the immediate feedback that follows the gesture;

- Hand position and finger usage: Some users needed clarification on whether they had to maintain a specific hand position for the device to detect their hand or if they could relax. Additionally, prolonged haptic feedback was found to be bothersome by some users. Moreover, gestures that required all five fingers posed an accessibility challenge.

- Appreciation for the look and feel: Users found the interface unobtrusive, taking up minimal space and conveniently placed on the windshield, allowing them to focus on the road without distraction. They also appreciated the minimalist design, color scheme, and overall sense of calm it provided;

- Perception of innovation: The proposed interaction system received positive feedback from users, particularly for the gestures that were implemented. The novelty effect was also apparent with the trackpad, even though it is a more commonly used device;

- Haptic feedback: Users appreciated the haptic feedback, which often guided them during gesture performance, providing a sense of touch and confirming that they had successfully executed the requested actions.

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Nichol, R.J. Airline Head-Up Display Systems: Human Factors Considerations. Int. J. Econ. Manag. Sci. 2015, 4, 248.

- Tonnis, M.; Lange, C.; Klinker, G. Visual Longitudinal and Lateral Driving Assistance in the Head-Up Display of Cars. In Proceedings of the 2007 6th IEEE and ACM International Symposium on Mixed and Augmented Reality, Nara, Japan, 13–16 November 2007; pp. 91–94.

- Halin, A.; Verly, J.G.; Van Droogenbroeck, M. Survey and Synthesis of State of the Art in Driver Monitoring. Sensors 2021, 21, 5558.

- Guo, H.; Zhao, F.; Wang, W.; Jiang, X. Analyzing Drivers’ Attitude towards HUD System Using a Stated Preference Survey. Adv. Mech. Eng. 2015, 6, 380647.

- Panasonic Drives You. Available online: https://na.panasonic.com/us/news/panasonic-automotive-brings-expansive-artificial-intelligence-enhanced-situational-awareness-driver (accessed on 14 July 2023).

- Stecher, M.; Michel, B.; Zimmermann, A. The Benefit of Touchless Gesture Control: An Empirical Evaluation of Commercial Vehicle-Related Use Cases. In Advances in Human Aspects of Transportation. AHFE 2017. Advances in Intelligent Systems and Computing; Springer: Cham, Switzerland, 2018; pp. 383–394.

- Survey on 3D Hand Gesture Recognition|IEEE Journals & Magazine|IEEE Xplore. Available online: https://ieeexplore.ieee.org/document/7208833 (accessed on 23 May 2023).

- Häuslschmid, R.; Osterwald, S.; Lang, M.; Butz, A. Augmenting the Driver’s View with Peripheral Information on a Windshield Display. In Proceedings of the 20th International Conference on Intelligent User Interfaces, Atlanta, GA, USA, 29 March–1 April 2015.

- Ohn-Bar, E.; Tran, C.; Trivedi, M. Hand Gesture-Based Visual User Interface for Infotainment. In Proceedings of the 4th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Portsmouth, UK, 17–19 October 2012; Association for Computing Machinery: New York, NY, USA, 2012; pp. 111–115.

- May, K.; Gable, T.; Walker, B. A Multimodal Air Gesture Interface for In Vehicle Menu Navigation. In Proceedings of the Adjunct Proceedings of the 6th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Seattle, WA, USA, 17–19 September 2014; pp. 1–6.

- Ferscha, A.; Riener, A. Pervasive Adaptation in Car Crowds. In Mobile Wireless Middleware, Operating Systems, and Applications-Workshops. MOBILWARE 2009. Lecture Notes of the Institute for Computer Sciences, Social Informatics and Telecommunications Engineering; Springer: Berlin/Heidelberg, Germany, 2009; Volume 12, pp. 111–117.

- Brand, D.; Büchele, K.; Meschtscherjakov, A. Pointing at the HUD: Gesture Interaction Using a Leap Motion. In Proceedings of the Adjunct Proceedings of the 8th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Ann Arbor, MI, USA, 24–26 October 2016; pp. 167–172.

- Lindgren, T. Experiencing Electric Vehicles: The Car as a Digital Platform. In Proceedings of the 55th Hawaii International Conference on System Sciences, Hawaii, HI, USA, 4–7 January 2022.

- Deng, J.; Wu, X.; Wang, F.; Li, S.; Wang, H. Analysis and Classification of Vehicle-Road Collaboration Application Scenarios. Procedia Comput. Sci. 2022, 208, 111–117.

- Liu, A.; Tan, H. Research on the Trend of Automotive User Experience. In Proceedings of the Cross-Cultural Design. Product and Service Design, Mobility and Automotive Design, Cities, Urban Areas, and Intelligent Environments Design, Virtual, 26 June–1 July 2022; Rau, P.-L.P., Ed.; Springer International Publishing: Cham, Switzerland, 2022; pp. 180–201.

- Haptics|Ultraleap. Available online: https://www.ultraleap.com/haptics/ (accessed on 23 May 2023).

- Idrive|Interaction between Driver, Road Infrastructure, Vehicle, and Environment. Available online: https://www.idrive.polimi.it/ (accessed on 27 June 2023).

- Unity Real-Time Development Platform|3D, 2D, VR & AR Engine. Available online: https://unity.com (accessed on 27 June 2023).

- Pupil Core—Open Source Eye Tracking Platform—Pupil Labs. Available online: https://pupil-labs.com/products/core/ (accessed on 26 July 2023).

- Trojaniello, D.; Cristiano, A.; Sanna, A.; Musteata, S. Evaluating Real-Time Hand Gesture Recognition for Automotive Applications in Elderly Population: Cognitive Load, User Experience and Usability Degree. In Proceedings of the Third International Conference on Informatics and Assistive Technologies for Health-Care, Medical Support and Wellbeing HEALTHINFO 2018, Nice, France, 14–18 October 2018; pp. 36–41.

- Szczerba, J.; Hersberger, R.; Mathieu, R. A Wearable Vibrotactile Display for Automotive Route Guidance: Evaluating Usability, Workload, Performance and Preference. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2015, 59, 1027–1031.

- Said, S.; Gozdzik, M.; Roche, T.R.; Braun, J.; Rössler, J.; Kaserer, A.; Spahn, D.R.; Nöthiger, C.B.; Tscholl, D.W. Validation of the Raw National Aeronautics and Space Administration Task Load Index (NASA-TLX) Questionnaire to Assess Perceived Workload in Patient Monitoring Tasks: Pooled Analysis Study Using Mixed Models. J. Med. Internet Res. 2020, 22, e19472.

- Hassenzahl, M. Mit Dem AttrakDiff Die Attraktivität Interaktiver Produkte Messen. In Proceedings of the Usability Professionals UP04, Stuttgart, Germany, 2004; pp. 96–102.

- Rümelin, S.; Butz, A. How to Make Large Touch Screens Usable While Driving. In Proceedings of the 5th International Conference on Automotive User Interfaces and Interactive Vehicular Applications, Eindhoven, The Netherlands, 28–30 October 2013; Association for Computing Machinery: New York, NY, USA, 2013; pp. 48–55.

| Characteristic | Value | Stratos (n) | Stratos (%) | Trackpad (n) | Trackpad (%) | Total (n) | Total (%) |
|---|---|---|---|---|---|---|---|
| Sample size | | 10 | - | 10 | - | 20 | - |
| Gender | Female | 4 | 40% | 3 | 30% | 7 | 35% |
| | Male | 6 | 60% | 7 | 70% | 13 | 65% |
| Dominant hand | Right | 9 | 90% | 9 | 90% | 18 | 90% |
| | Left | 1 | 10% | - | - | 1 | 5% |
| | Both | - | - | 1 | 10% | 1 | 5% |
| Has a car driving license? | Yes | 10 | 100% | 10 | 100% | 20 | 100% |
| | No | - | - | - | - | - | - |
| How often do you drive? | Daily | 1 | 10% | 6 | 60% | 7 | 35% |
| | Weekly | 5 | 50% | 2 | 20% | 7 | 35% |
| | Monthly | - | - | 1 | 10% | 1 | 5% |
| | Sometime | 4 | 40% | 1 | 10% | 5 | 25% |
| Have you already used this type of interface? | Yes | 3 | 30% | 9 | 90% | 12 | 60% |
| | No | 7 | 70% | 1 | 10% | 8 | 40% |

| Input Modality | Task | Positive (n) | Positive (%) | Negative (n) | Negative (%) |
|---|---|---|---|---|---|
| Stratos | Start | 9 | 90% | 1 | 10% |
| | Music | 10 | 100% | - | - |
| | Call | 10 | 100% | - | - |
| | File Explorer | 8 | 80% | 2 * | 20% |
| | POI | 10 | 100% | - | - |
| Trackpad | Start | 6 | 60% | 4 | 40% |
| | Music | 10 | 100% | - | - |
| | Call | 10 | 100% | - | - |
| | File Explorer | 10 | 100% | - | - |
| | POI | 10 | 100% | - | - |

| Task | W | p |
|---|---|---|
| Start | 52.5 | 0.821 |
| Music | 45.5 | 0.762 |
| Call | 52.5 | 0.880 |
| * File explorer | 29.5 | 0.374 |
| POI | 49.0 | 0.971 |
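The table does not name the test behind the W and p columns. A W statistic comparing two independent groups of 10 participants is consistent with a Wilcoxon rank-sum (Mann-Whitney) test, so the sketch below assumes that test; this is an assumption, not something the source confirms. It shows only how such a statistic is computed from two samples, using the Mann-Whitney form (the first group's rank sum minus its minimum possible value). The function name is hypothetical, and p-value computation is omitted.

```python
from typing import Sequence


def rank_sum_w(group_a: Sequence[float], group_b: Sequence[float]) -> float:
    """Return the rank-sum statistic of group_a against group_b.

    Tied values receive the average of the ranks they span. The result is
    the rank sum of group_a minus its minimum possible value, so it ranges
    from 0 to len(group_a) * len(group_b).
    """
    values = list(group_a) + list(group_b)
    # Sort (value, original index) pairs so ranks can be written back in place.
    pooled = sorted((value, idx) for idx, value in enumerate(values))
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        # Extend j over the run of values tied with pooled[i].
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        average_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[pooled[k][1]] = average_rank
        i = j + 1
    n_a = len(group_a)
    rank_sum_a = sum(ranks[:n_a])
    return rank_sum_a - n_a * (n_a + 1) / 2
```

Under this assumption, with two groups of 10 the statistic ranges from 0 to 100, and values near 50 indicate heavily overlapping groups.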

| NASA-TLX Subscale | W | p |
|---|---|---|
| Mental Demand | 70 | 0.138 |
| Physical Demand | 64 | 0.291 |
| Temporal Demand | 38 | 0.378 |
| Performance | 51.5 | 0.939 |
| Effort | 66 | 0.237 |
| Frustration | 73.5 | 0.080 |
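For context on the subscales listed above: the Raw NASA-TLX variant drops the pairwise weighting step of the original instrument and summarizes workload as the unweighted mean of the six subscale ratings. The sketch below illustrates that calculation; the function name and the 0 to 100 rating scale used in the example are assumptions, and the values shown are not data from this study.

```python
from statistics import mean
from typing import Mapping

SUBSCALES = (
    "Mental Demand",
    "Physical Demand",
    "Temporal Demand",
    "Performance",
    "Effort",
    "Frustration",
)


def raw_tlx(ratings: Mapping[str, float]) -> float:
    """Return the Raw NASA-TLX score: the unweighted mean of the six subscales."""
    missing = [name for name in SUBSCALES if name not in ratings]
    if missing:
        raise ValueError(f"missing subscale ratings: {missing}")
    return mean(ratings[name] for name in SUBSCALES)


# Hypothetical ratings for one participant on an assumed 0-100 scale.
example = {
    "Mental Demand": 10,
    "Physical Demand": 20,
    "Temporal Demand": 30,
    "Performance": 40,
    "Effort": 50,
    "Frustration": 60,
}
overall = raw_tlx(example)
```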

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Bellani, P.; Picardi, A.; Caruso, F.; Gaetani, F.; Brevi, F.; Arquilla, V.; Caruso, G. Enhancing User Engagement in Shared Autonomous Vehicles: An Innovative Gesture-Based Windshield Interaction System. Appl. Sci. 2023, 13, 9901. https://doi.org/10.3390/app13179901
