A Lightweight Reconstruction Model via a Neural Network for a Video Super-Resolution Model

Abstract

1. Introduction

2. Materials and Methods

2.1. Recurrent Neural Network (RNN)

2.2. Recursive Residual Network (RRN)

2.3. Depth-Separable Convolution

2.4. Network Design

2.5. Image Quality Evaluation

3. Results

3.1. Datasets

3.1.1. Vimeo-90k

3.1.2. Vid4

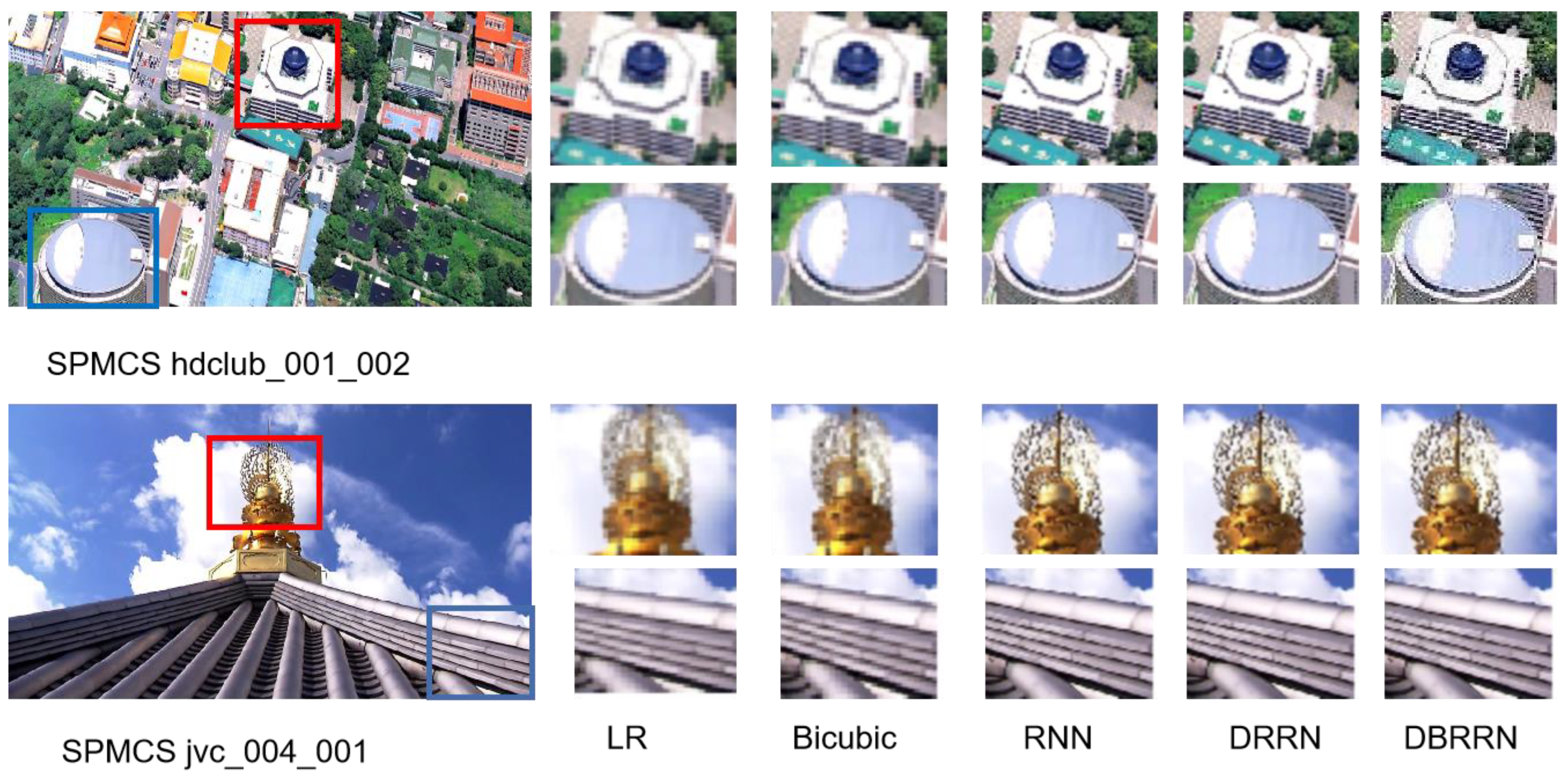

3.1.3. SPMCS

3.1.4. UDM10

3.2. Experimental Settings and Training Procedures

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Method | RNN | DRRN | DBRRN |
|---|---|---|---|
| Input Frames | recurrent | recurrent | recurrent |
| Param. [M] | 7.204 | 2.93 | 2.94 |
| FLOPs [GMAC] | 193 | 108 | 120 |
| Runtime [ms] | 45 | 30 | 32 |
| Vid4 (Y) | 27.69 | 26.78 | 27.01 |
| SPMCS (Y) | 29.89 | 28.89 | 29.10 |
| UDM10 (Y) | 30.33 | 29.55 | 30.01 |
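
The efficiency rows of the table above are easier to compare as relative savings against the RNN baseline. The following is a minimal sketch of that arithmetic, using only the values reported in the table; the names `TABLE`, `relative_reduction`, and `reductions_vs_rnn` are illustrative and do not come from the paper.

```python
# Efficiency figures copied from the comparison table above.
TABLE = {
    "Param. [M]": {"RNN": 7.204, "DRRN": 2.93, "DBRRN": 2.94},
    "FLOPs [GMAC]": {"RNN": 193, "DRRN": 108, "DBRRN": 120},
    "Runtime [ms]": {"RNN": 45, "DRRN": 30, "DBRRN": 32},
}


def relative_reduction(baseline, value):
    """Return the fraction by which `value` is smaller than `baseline`."""
    return (baseline - value) / baseline


def reductions_vs_rnn(table):
    """For every metric, compute each method's saving relative to RNN."""
    result = {}
    for metric, row in table.items():
        base = row["RNN"]
        result[metric] = {
            method: relative_reduction(base, value)
            for method, value in row.items()
            if method != "RNN"
        }
    return result


if __name__ == "__main__":
    for metric, row in reductions_vs_rnn(TABLE).items():
        for method, fraction in row.items():
            print(f"{metric:13s} {method:6s} {fraction:6.1%} lower than RNN")
```

Read this way, DBRRN uses roughly 59% fewer parameters than RNN, while its Vid4 (Y) score is 0.68 lower (27.01 versus 27.69).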

| Metric | car | hdclub | hitachi_isee | hk | jvc |
|---|---|---|---|---|---|
| SSIM | 0.80 | 0.58 | 0.69 | 0.80 | 0.82 |
| PSNR | 28.05 | 21.12 | 22.19 | 28.32 | 27.02 |
| Time | 0.0475 | 0.0467 | 0.048 | 0.0475 | 0.053 |
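
For readers unfamiliar with the PSNR row, the sketch below shows the standard definition, PSNR = 10·log10(peak² / MSE). It is not the authors' evaluation code: it assumes single-channel frames stored as nested lists of 8-bit values (peak 255), and the function name `psnr` is hypothetical.

```python
import math


def psnr(reference, estimate, peak=255.0):
    """PSNR in dB between two equally sized 2-D frames (lists of rows).

    Assumes 8-bit pixel values by default (peak = 255); this is an
    illustrative assumption, not a setting taken from the paper.
    """
    if len(reference) != len(estimate):
        raise ValueError("frames must have the same number of rows")
    squared_error = 0.0
    count = 0
    for ref_row, est_row in zip(reference, estimate):
        if len(ref_row) != len(est_row):
            raise ValueError("rows must have the same length")
        for r, e in zip(ref_row, est_row):
            squared_error += (r - e) ** 2
            count += 1
    if count == 0:
        raise ValueError("frames must not be empty")
    mse = squared_error / count
    if mse == 0:
        return math.inf  # identical frames: PSNR is unbounded
    return 10.0 * math.log10(peak ** 2 / mse)
```

A higher value means the reconstructed frame is closer to the reference; identical frames give an infinite PSNR because the mean squared error is zero.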

| Score | RNN | DRRN | DBRRN |
|---|---|---|---|
| car | 2.6 | 2.6 | 2.7 |
| hdclub | 2.0 | 2.0 | 2.2 |
| hitachi_isee | 2.4 | 2.5 | 2.5 |
| hk | 2.6 | 2.6 | 2.7 |
| jvc | 2.7 | 2.7 | 2.8 |
| Average | 2.46 | 2.48 | 2.58 |
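
The Average row can be checked directly from the per-clip scores. The sketch below recomputes it from the values in the table above; the names `SCORES` and `average_scores` are illustrative only.

```python
from statistics import mean

# Per-clip scores copied from the table above.
SCORES = {
    "car": {"RNN": 2.6, "DRRN": 2.6, "DBRRN": 2.7},
    "hdclub": {"RNN": 2.0, "DRRN": 2.0, "DBRRN": 2.2},
    "hitachi_isee": {"RNN": 2.4, "DRRN": 2.5, "DBRRN": 2.5},
    "hk": {"RNN": 2.6, "DRRN": 2.6, "DBRRN": 2.7},
    "jvc": {"RNN": 2.7, "DRRN": 2.7, "DBRRN": 2.8},
}


def average_scores(scores):
    """Average each method's score over all clips, rounded to two decimals."""
    methods = list(next(iter(scores.values())).keys())
    return {
        method: round(mean(row[method] for row in scores.values()), 2)
        for method in methods
    }


if __name__ == "__main__":
    for method, avg in average_scores(SCORES).items():
        print(f"{method}: {avg:.2f}")
```

The recomputed means match the reported Average row (2.46, 2.48, 2.58).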

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Tang, X.; Xu, Y.; Ouyang, F.; Zhu, L. A Lightweight Reconstruction Model via a Neural Network for a Video Super-Resolution Model. Appl. Sci. 2023, 13, 10165. https://doi.org/10.3390/app131810165