A Low-Brightness Image Enhancement Algorithm Based on Multi-Scale Fusion

Abstract

1. Introduction

2. Related Work

3. Proposed Method

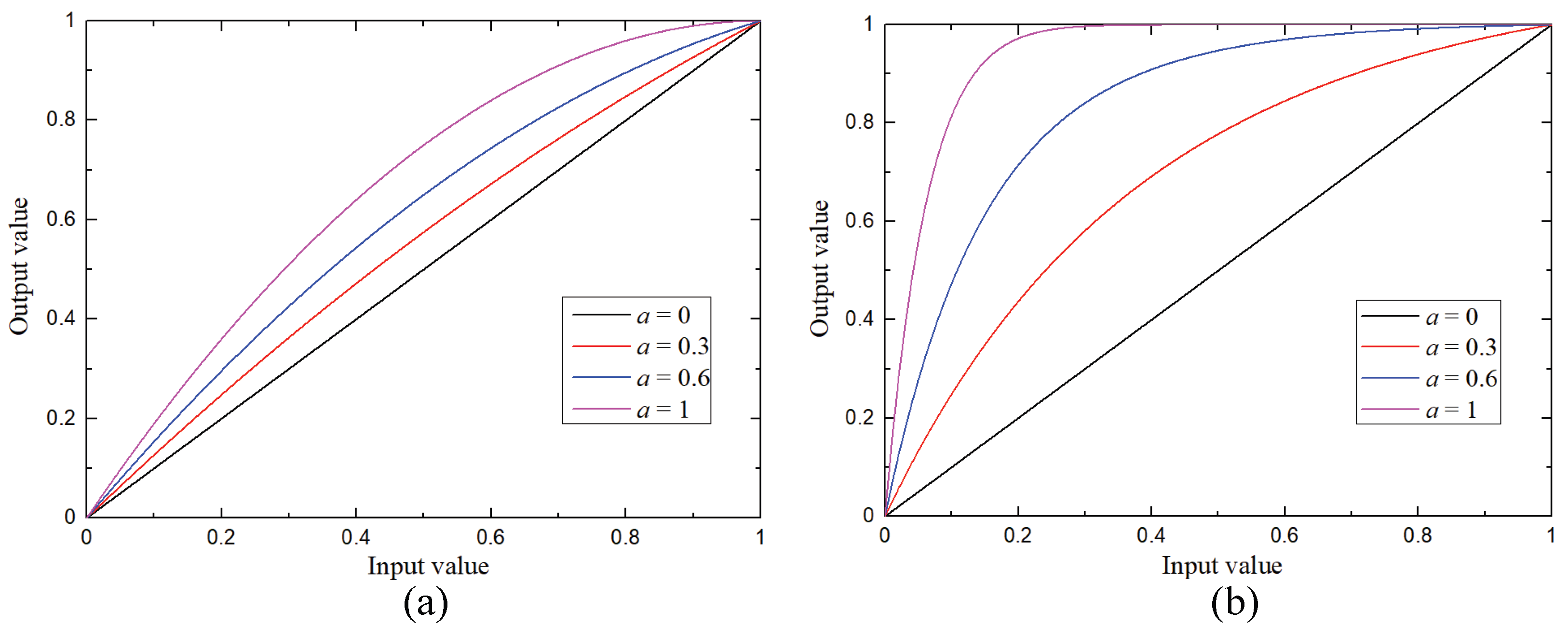

3.1. Brightness Transformation Function

3.2. Weights Definition

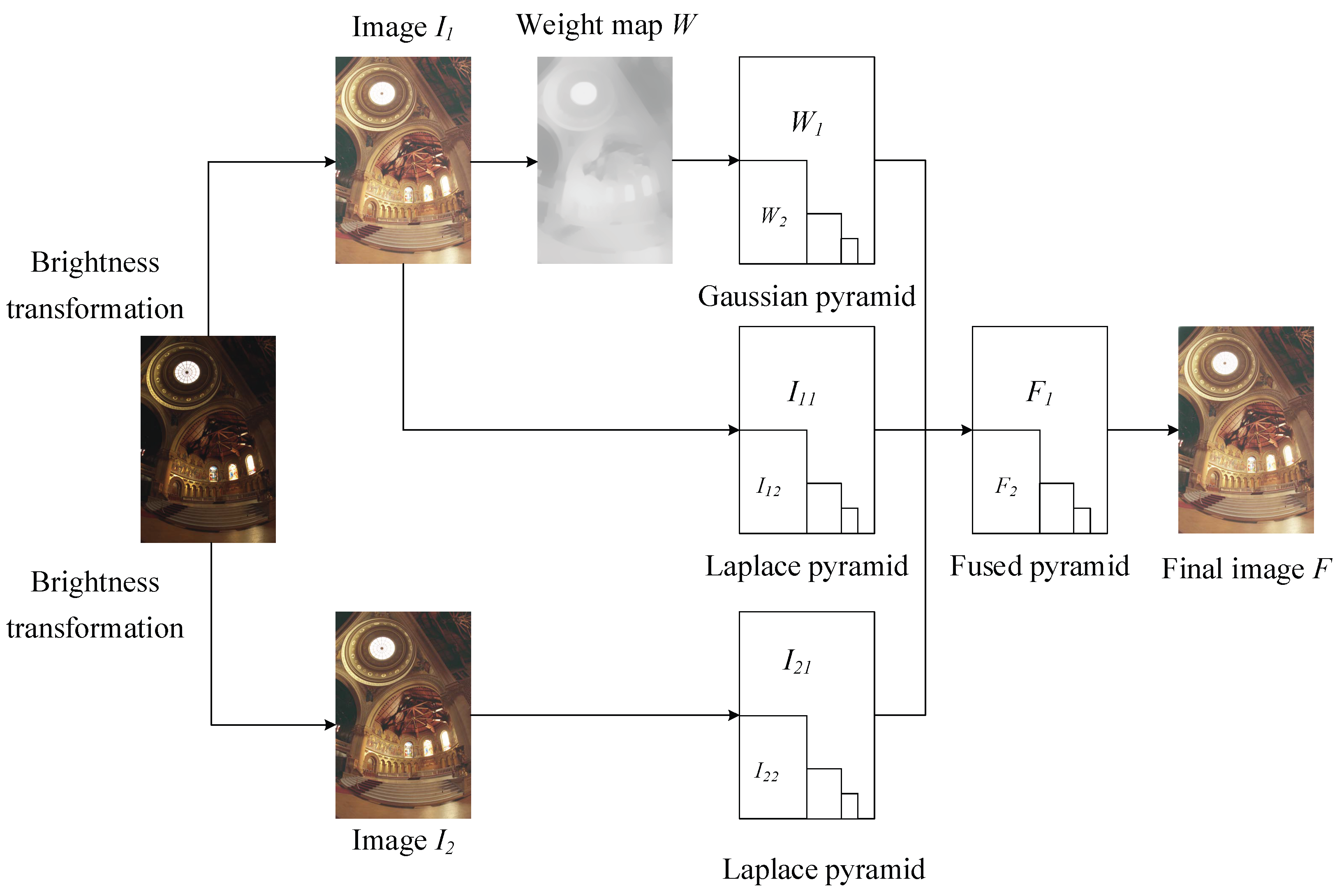

3.3. Pyramid Fusion

| Algorithm 1: The proposed algorithm |
|---|
| Input: Source image I, parameter a, parameter n |
| Output: The result after fusion |
| 1: Calculate , by Equation (5); |
| 2: Decompose , into Laplacian pyramids; |
| 3: Take image as the source image for calculating the weight map W; |
| 4: Decompose W into a Gaussian pyramid; |
| 5: for each , and do |
| 6: Calculate by Equation (13); |
| 7: end for |
| 8: Use Laplacian pyramid reconstruction to obtain the final fused image . |
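
Steps 2, 4, 6 and 8 of Algorithm 1 follow the standard multi-scale fusion pattern: each input is decomposed into a Laplacian pyramid, its weight map into a Gaussian pyramid, the pyramids are blended level by level, and the fused pyramid is collapsed back into an image. The NumPy sketch below illustrates only that pattern, under stated assumptions: the inputs and weight maps are placeholders for the outputs of Equation (5) and Section 3.2 (the brightness transformation and its parameters a and n are not modelled); Equation (13) is assumed to be the usual per-level weighted sum; and the 5-tap binomial kernel, the number of levels and all function names (`gaussian_pyramid`, `laplacian_pyramid`, `reconstruct`, `fuse`) are hypothetical choices, not the authors' implementation. Images are single-channel float arrays; colour images would be processed per channel.

```python
import numpy as np

# Hypothetical 5-tap binomial kernel (Burt-Adelson style); the paper's kernel is not stated here.
_KERNEL = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0


def _blur(img: np.ndarray) -> np.ndarray:
    """Separable 5x5 binomial blur with reflect padding; output has the input's shape."""
    h, w = img.shape
    pad = np.pad(img, 2, mode="reflect")
    tmp = sum(_KERNEL[i] * pad[:, i:i + w] for i in range(5))   # horizontal pass
    return sum(_KERNEL[i] * tmp[i:i + h, :] for i in range(5))  # vertical pass


def _down(img: np.ndarray) -> np.ndarray:
    """Blur, then keep every second row and column."""
    return _blur(img)[::2, ::2]


def _up(img: np.ndarray, shape: tuple) -> np.ndarray:
    """Zero-insertion upsampling to `shape`, then blur; x4 compensates for the inserted zeros."""
    up = np.zeros(shape, dtype=np.float64)
    up[::2, ::2] = img
    return 4.0 * _blur(up)


def gaussian_pyramid(img: np.ndarray, levels: int) -> list:
    pyr = [np.asarray(img, dtype=np.float64)]
    for _ in range(levels - 1):
        pyr.append(_down(pyr[-1]))
    return pyr


def laplacian_pyramid(img: np.ndarray, levels: int) -> list:
    g = gaussian_pyramid(img, levels)
    lap = [g[i] - _up(g[i + 1], g[i].shape) for i in range(levels - 1)]
    lap.append(g[-1])  # the coarsest level stores the low-pass residual
    return lap


def reconstruct(lap: list) -> np.ndarray:
    """Collapse a Laplacian pyramid from coarse to fine (step 8)."""
    img = lap[-1]
    for level in reversed(lap[:-1]):
        img = level + _up(img, level.shape)
    return img


def fuse(images: list, weights: list, levels: int = 4) -> np.ndarray:
    """Per-level weighted sum of Laplacian pyramids using Gaussian pyramids of the weights."""
    w = np.stack([np.asarray(x, dtype=np.float64) for x in weights]) + 1e-12
    w /= w.sum(axis=0, keepdims=True)  # weights sum to 1 at every pixel
    fused = None
    for img, wk in zip(images, w):
        lp = laplacian_pyramid(img, levels)        # step 2
        gp = gaussian_pyramid(wk, levels)          # step 4
        terms = [l * g for l, g in zip(lp, gp)]    # steps 5-7 (assumed form of Eq. (13))
        fused = terms if fused is None else [f + t for f, t in zip(fused, terms)]
    return reconstruct(fused)                      # step 8


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    a, b = rng.random((64, 64)), rng.random((64, 64))  # stand-ins for the Eq. (5) outputs
    W = rng.random((64, 64))                           # stand-in for the weight map W
    print(fuse([a, b], [W, 1.0 - W]).shape)            # (64, 64)
```

Because both pyramids are linear and the normalised weights sum to one at every pixel, fusing an image with itself returns that image unchanged, which is a convenient sanity check for any implementation of step 8.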

4. Experiment and Analysis

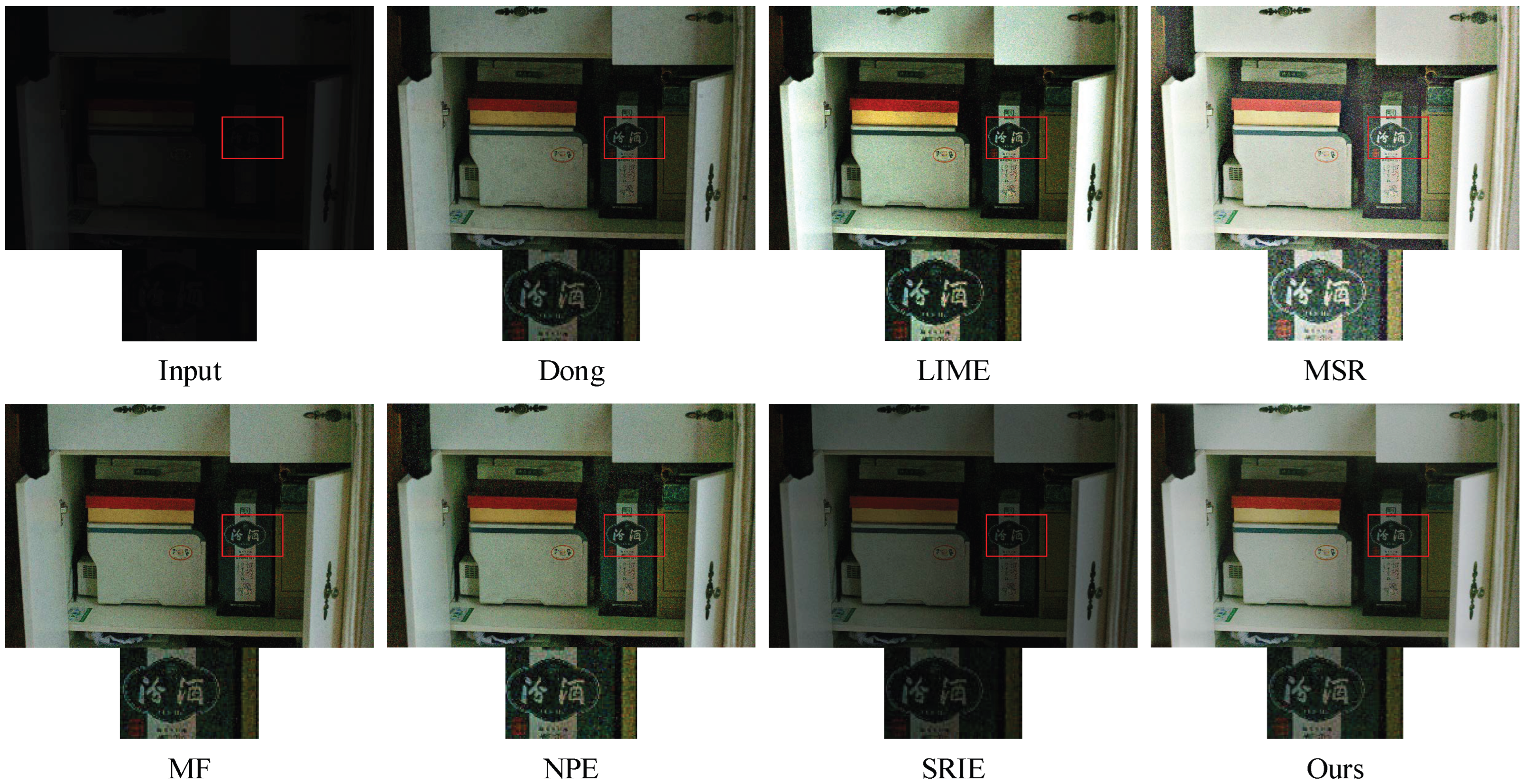

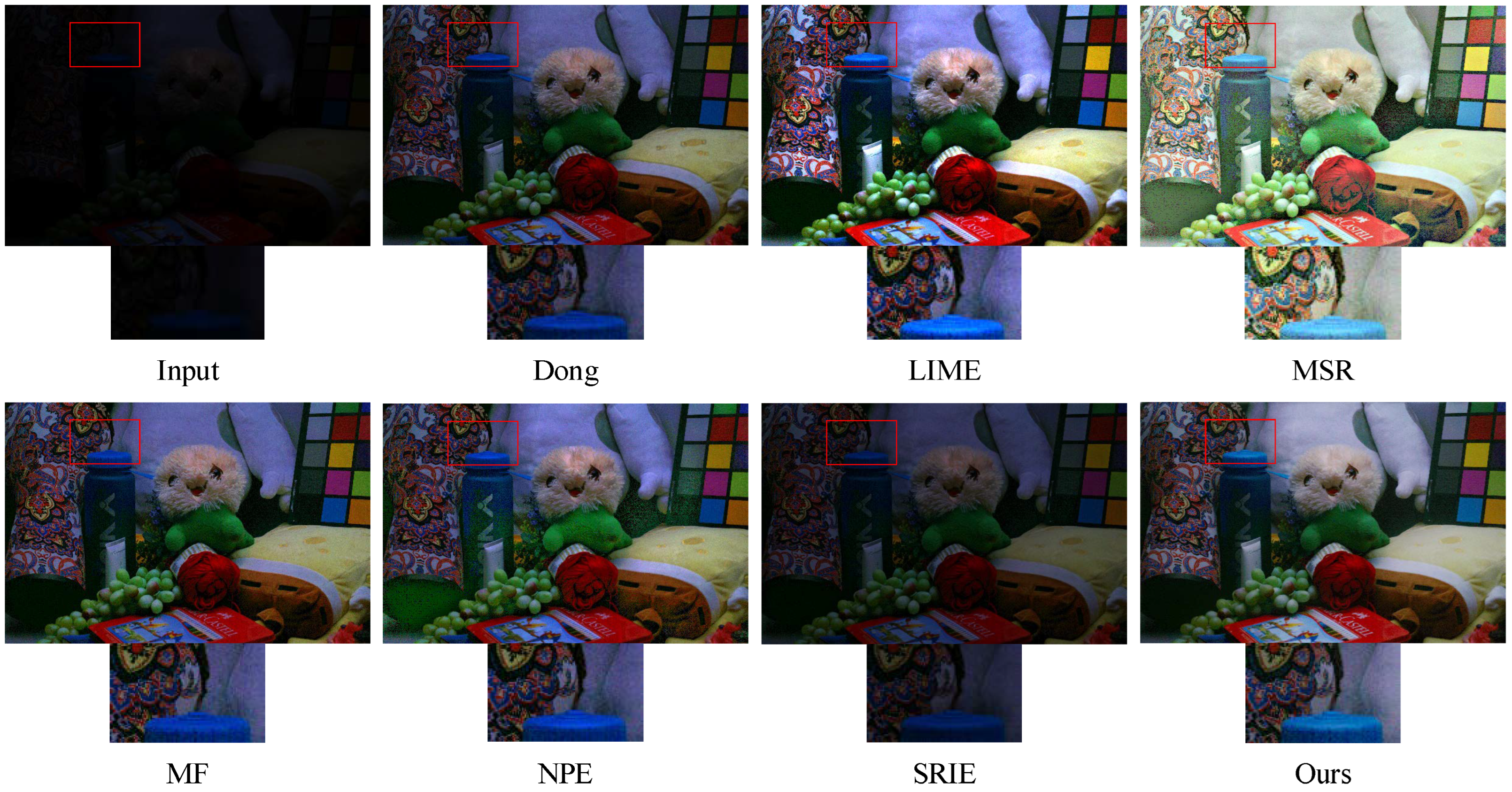

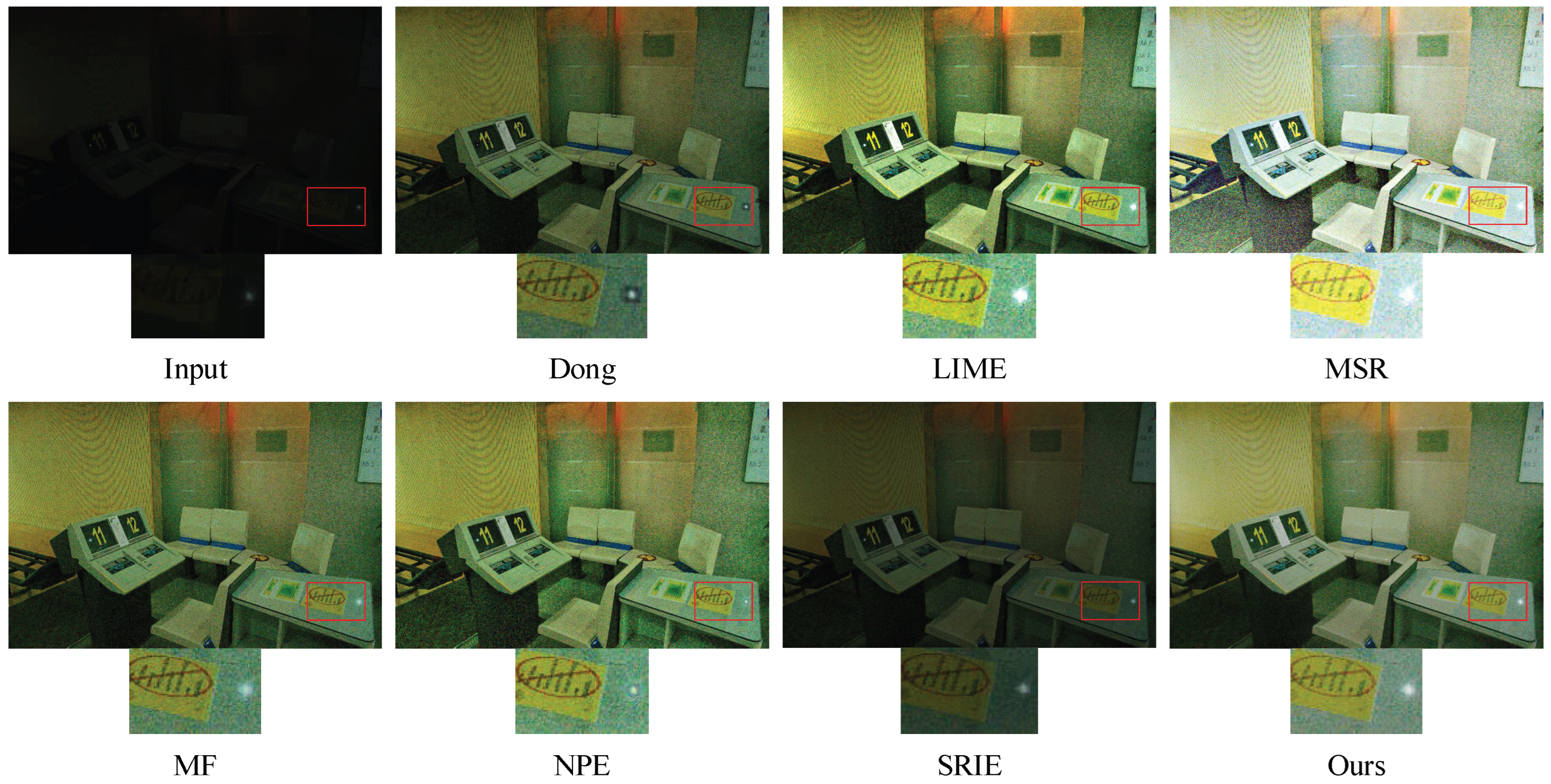

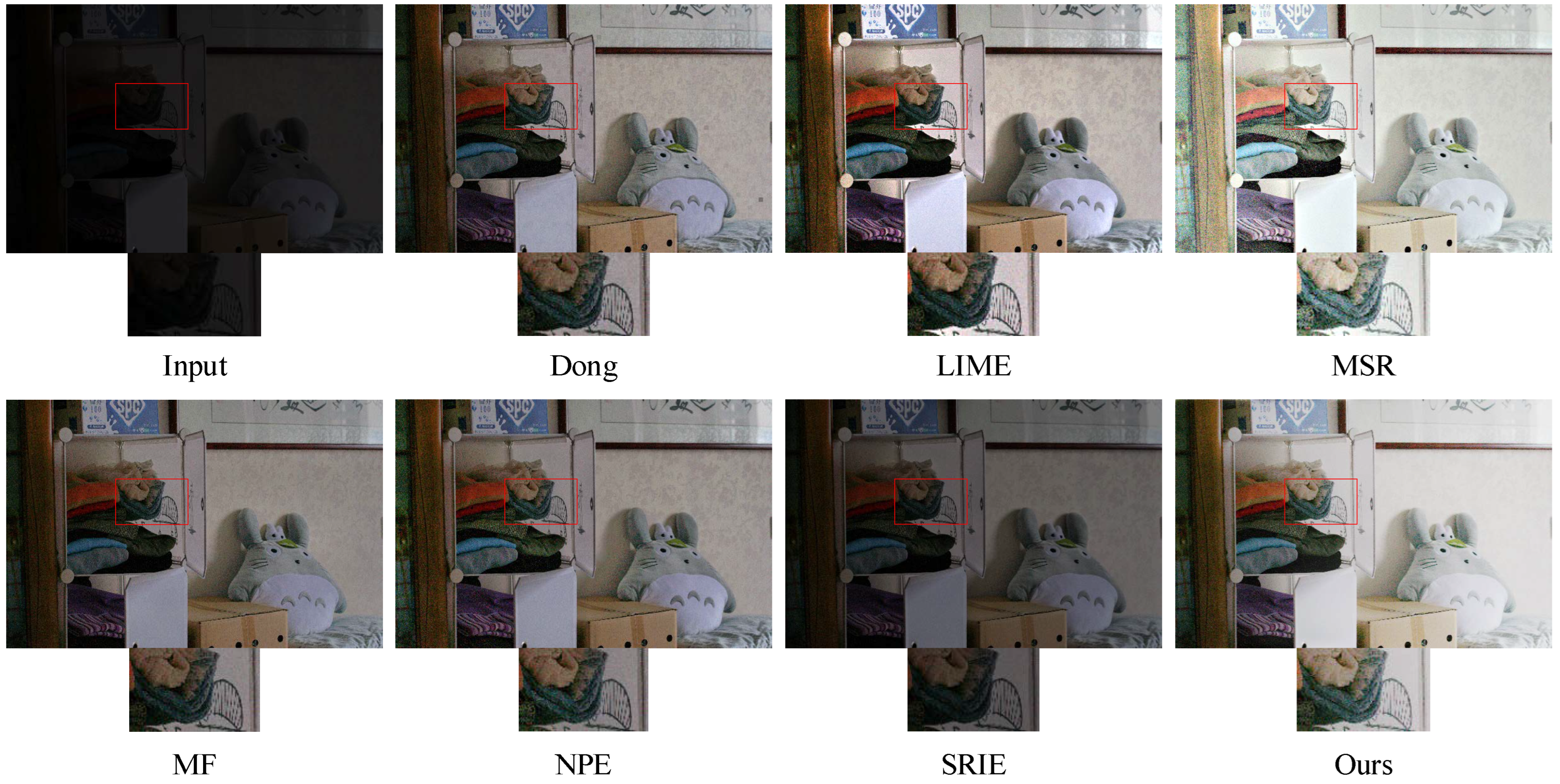

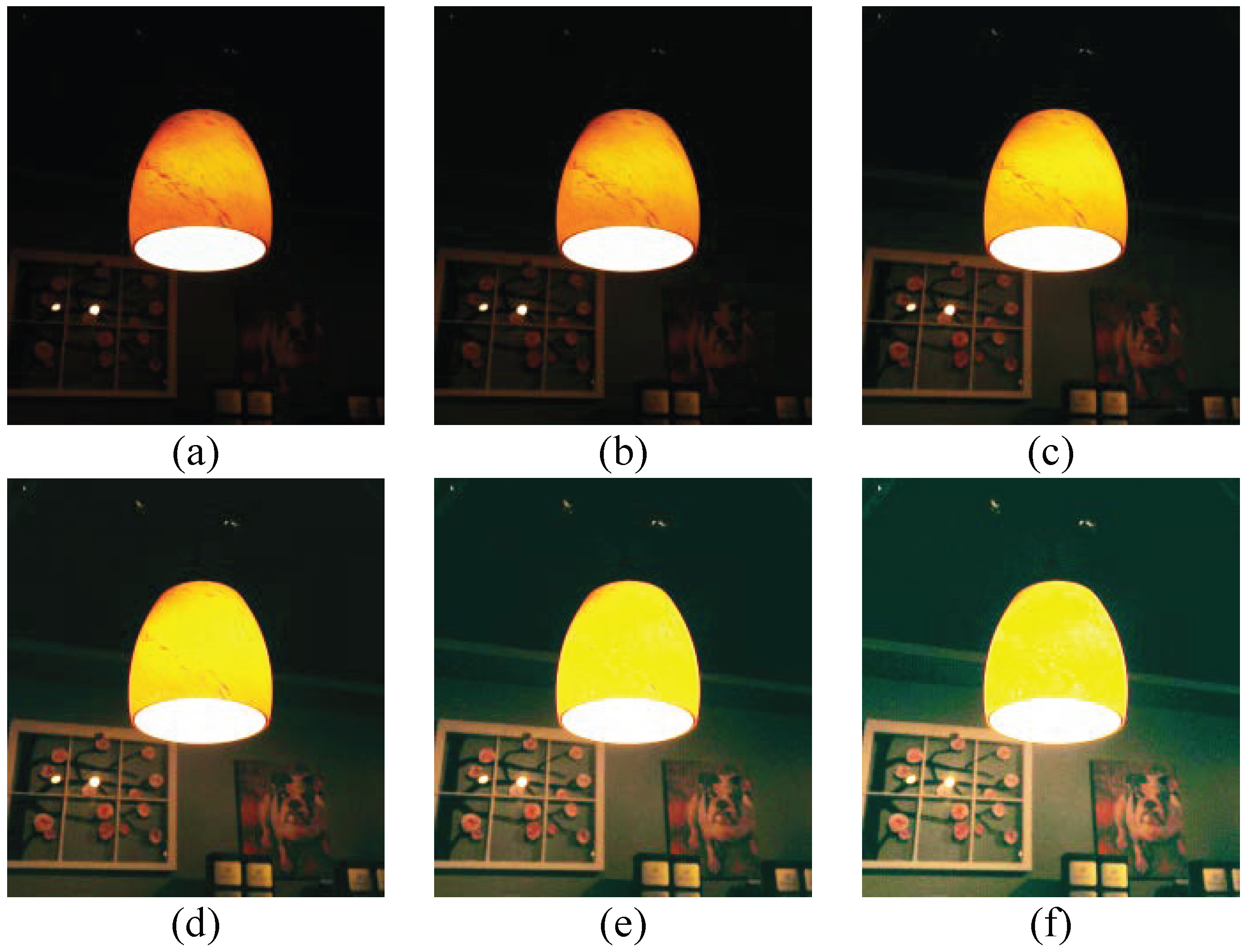

4.1. Subjective Evaluation of Experimental Results

4.2. Objective Assessment of Image Quality

4.2.1. Lightness Order Error (LOE)
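
LOE, introduced by Wang et al. [15], measures how well an enhancement preserves the relative order of lightness between pixels. The lightness L(x) is taken as the maximum of the RGB channels; RD(x) counts the pixels y for which the order of L(x) and L(y) differs between the input and the enhanced image; LOE is the mean of RD(x) over all pixels, so lower is better. Because the pairwise comparison costs O(N²) in the number of pixels, implementations usually downsample first. The sketch below assumes nearest-neighbour downsampling to a shorter side of 50 pixels; that size and the function names are illustrative assumptions, not settings taken from this paper.

```python
import numpy as np


def _downsample(img: np.ndarray, short_side: int) -> np.ndarray:
    """Nearest-neighbour subsampling so the shorter side is at most `short_side` pixels
    (assumed downsampling scheme)."""
    h, w = img.shape[:2]
    r = min(1.0, short_side / min(h, w))
    nh, nw = max(1, round(h * r)), max(1, round(w * r))
    rows = (np.arange(nh) * h) // nh
    cols = (np.arange(nw) * w) // nw
    return img[np.ix_(rows, cols)]


def _lightness(img: np.ndarray) -> np.ndarray:
    """L(x): maximum over colour channels for RGB input, the image itself for grey input."""
    return img.max(axis=2) if img.ndim == 3 else img


def loe(original, enhanced, short_side: int = 50) -> float:
    L = _lightness(_downsample(np.asarray(original, dtype=np.float64), short_side)).ravel()
    Le = _lightness(_downsample(np.asarray(enhanced, dtype=np.float64), short_side)).ravel()
    # U(a, b) = 1 if a >= b else 0, evaluated for every ordered pair of pixels (x, y).
    U = L[:, None] >= L[None, :]
    Ue = Le[:, None] >= Le[None, :]
    # RD(x) counts the pixels y whose order relative to x flipped after enhancement.
    rd = np.logical_xor(U, Ue).sum(axis=1)
    return float(rd.mean())
```

An order-preserving transform such as `2 * x + 0.1` gives an LOE of 0, while inverting a 40 × 40 image with distinct values (`1.0 - x`) reverses every pair and gives the maximum, N − 1 = 1599.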

4.2.2. Visual Information Fidelity
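
Visual information fidelity (VIF), proposed by Sheikh and Bovik [28], scores a processed image by the ratio of the information it preserves about the reference to the information contained in the reference itself. The original formulation works in the wavelet domain; the NumPy sketch below instead follows the multi-scale pixel-domain variant (often called VIFp) that is widely used as a cheaper approximation, with four scales, Gaussian windows of size 2^(5−s)+1 (standard deviation one fifth of the window size) and a noise variance σ_n² = 2. Which variant and settings this paper used is not stated here, so treat the function name `vifp` and all parameters as assumptions. Inputs are single-channel arrays in the 0–255 range and should be comfortably larger than the 33-pixel window at the finest scale; 128 × 128 or more is a safe choice.

```python
import numpy as np


def _gauss1d(n: int, sd: float) -> np.ndarray:
    """Normalised 1-D Gaussian; its outer product is the n x n Gaussian window."""
    x = np.arange(n) - (n - 1) / 2.0
    g = np.exp(-(x ** 2) / (2.0 * sd ** 2))
    return g / g.sum()


def _filter_valid(img: np.ndarray, g: np.ndarray) -> np.ndarray:
    """Separable 'valid' correlation with the window outer(g, g) (no padding)."""
    n = len(g)
    h, w = img.shape
    tmp = sum(g[i] * img[:, i:w - n + 1 + i] for i in range(n))
    return sum(g[i] * tmp[i:h - n + 1 + i, :] for i in range(n))


def vifp(ref, dist, sigma_nsq: float = 2.0) -> float:
    ref = np.asarray(ref, dtype=np.float64)
    dist = np.asarray(dist, dtype=np.float64)
    eps = 1e-10
    num = den = 0.0
    for scale in range(1, 5):
        n = 2 ** (5 - scale) + 1                         # window sizes 33, 17, 9, 5
        g = _gauss1d(n, n / 5.0)
        if scale > 1:                                    # low-pass, then subsample by 2
            ref = _filter_valid(ref, g)[::2, ::2]
            dist = _filter_valid(dist, g)[::2, ::2]
        mu1, mu2 = _filter_valid(ref, g), _filter_valid(dist, g)
        s1 = _filter_valid(ref * ref, g) - mu1 * mu1     # local variance of the reference
        s2 = _filter_valid(dist * dist, g) - mu2 * mu2   # local variance of the test image
        s12 = _filter_valid(ref * dist, g) - mu1 * mu2   # local covariance
        gain = s12 / (s1 + eps)                          # gain of the distortion channel
        sv = s2 - gain * s12                             # additive-noise variance of the channel
        m = s1 < eps
        gain[m], sv[m], s1[m] = 0.0, s2[m], 0.0
        m = s2 < eps
        gain[m], sv[m] = 0.0, 0.0
        m = gain < 0
        sv[m], gain[m] = s2[m], 0.0
        sv[sv <= eps] = eps
        num += np.sum(np.log10(1.0 + gain ** 2 * s1 / (sv + sigma_nsq)))
        den += np.sum(np.log10(1.0 + s1 / sigma_nsq))
    return float(num / den)
```

Comparing an image with itself gives a score of 1 and additive noise pulls the score below 1, whereas a pure contrast stretch such as `1.5 * ref` scores above 1; VIF is known to reward contrast enhancement in this way.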

4.2.3. Natural Image Quality Evaluator

5. Discussion

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Chouhan, R.; Biswas, P.K.; Jha, R.K. Enhancement of low-contrast images by internal noise-induced Fourier coefficient rooting. Signal Image Video Process. 2015, 9, 255–263.

2. Zhang, S.; Lan, X.; Yao, H.; Zhou, H.; Tao, D.; Li, X. A biologically inspired appearance model for robust visual tracking. IEEE Trans. Neural Netw. Learn. Syst. 2016, 28, 2357–2370.

3. Lan, X.; Zhang, S.; Yuen, P.C.; Chellappa, R. Learning common and feature-specific patterns: A novel multiple-sparse-representation-based tracker. IEEE Trans. Image Process. 2017, 27, 2022–2037.

4. Tang, H.; Zhu, H.; Tao, H.; Xie, C. An Improved Algorithm for Low-Light Image Enhancement Based on RetinexNet. Appl. Sci. 2022, 12, 7268.

5. Si, W.; Xiong, J.; Huang, Y.; Jiang, X.; Hu, D. Quality Assessment of Fruits and Vegetables Based on Spatially Resolved Spectroscopy: A Review. Foods 2022, 11, 1198.

6. Xu, Y. A fusion-based approach of deep learning and edge-cutting algorithms for identification and color recognition of traffic lights. Intell. Transp. Infrastruct. 2023, 2, liad007.

7. Wang, D.; Xu, C.; Feng, B.; Hu, Y.; Tan, W.; An, Z.; Han, J.; Qian, K.; Fang, Q. Multi-Exposure Image Fusion Based on Weighted Average Adaptive Factor and Local Detail Enhancement. Appl. Sci. 2022, 12, 5868.

8. Hoang, T.; Pan, B.; Nguyen, D.; Wang, Z. Generic gamma correction for accuracy enhancement in fringe-projection profilometry. Opt. Lett. 2010, 35, 1992–1994.

9. Pan, X.; Li, C.; Pan, Z.; Yan, J.; Tang, S.; Yin, X. Low-Light Image Enhancement Method Based on Retinex Theory by Improving Illumination Map. Appl. Sci. 2022, 12, 5257.

10. Brainard, D.H.; Wandell, B.A. Analysis of the retinex theory of color vision. JOSA A 1986, 3, 1651–1661.

11. Jobson, D.J.; Rahman, Z.u.; Woodell, G.A. A multiscale retinex for bridging the gap between color images and the human observation of scenes. IEEE Trans. Image Process. 1997, 6, 965–976.

12. Petro, A.B.; Sbert, C.; Morel, J.M. Multiscale retinex. Image Process. Line 2014, 4, 71–88.

13. Dong, X.; Pang, Y.; Wen, J. Fast efficient algorithm for enhancement of low lighting video. In ACM SIGGRAPH 2010 Posters; ACM Digital Library: New York, NY, USA, 2010; p. 1.

14. Guo, X.; Li, Y.; Ling, H. LIME: Low-light image enhancement via illumination map estimation. IEEE Trans. Image Process. 2016, 26, 982–993.

15. Wang, S.; Zheng, J.; Hu, H.M.; Li, B. Naturalness preserved enhancement algorithm for non-uniform illumination images. IEEE Trans. Image Process. 2013, 22, 3538–3548.

16. Fu, X.; Zeng, D.; Huang, Y.; Zhang, X.P.; Ding, X. A weighted variational model for simultaneous reflectance and illumination estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2782–2790.

17. Fu, X.; Zeng, D.; Huang, Y.; Liao, Y.; Ding, X.; Paisley, J. A fusion-based enhancing method for weakly illuminated images. Signal Process. 2016, 129, 82–96.

18. Agrawal, S.; Panda, R.; Mishro, P.K.; Abraham, A. A novel joint histogram equalization based image contrast enhancement. J. King Saud Univ.-Comput. Inf. Sci. 2022, 34, 1172–1182.

19. Park, S.; Yu, S.; Kim, M.; Park, K.; Paik, J. Dual autoencoder network for retinex-based low-light image enhancement. IEEE Access 2018, 6, 22084–22093.

20. Wei, C.; Wang, W.; Yang, W.; Liu, J. Deep retinex decomposition for low-light enhancement. arXiv 2018, arXiv:1808.04560.

21. Chen, C.; Chen, Q.; Xu, J.; Koltun, V. Learning to see in the dark. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 3291–3300.

22. Hai, J.; Xuan, Z.; Yang, R.; Hao, Y.; Zou, F.; Lin, F.; Han, S. R2rnet: Low-light image enhancement via real-low to real-normal network. J. Vis. Commun. Image Represent. 2023, 90, 103712.

23. Fan, S.; Liang, W.; Ding, D.; Yu, H. LACN: A lightweight attention-guided ConvNeXt network for low-light image enhancement. Eng. Appl. Artif. Intell. 2023, 117, 105632.

24. Han, X.; Lv, T.; Song, X.; Nie, T.; Liang, H.; He, B.; Kuijper, A. An adaptive two-scale image fusion of visible and infrared images. IEEE Access 2019, 7, 56341–56352.

25. Ying, Z.; Li, G.; Ren, Y.; Wang, R.; Wang, W. A new low-light image enhancement algorithm using camera response model. In Proceedings of the IEEE International Conference on Computer Vision Workshops, Venice, Italy, 22–29 October 2017; pp. 3015–3022.

26. Ying, Z.; Li, G.; Gao, W. A bio-inspired multi-exposure fusion framework for low-light image enhancement. arXiv 2017, arXiv:1711.00591.

27. Shao, L.; Zhen, X.; Tao, D.; Li, X. Spatio-temporal Laplacian pyramid coding for action recognition. IEEE Trans. Cybern. 2013, 44, 817–827.

28. Sheikh, H.R.; Bovik, A.C. Image information and visual quality. IEEE Trans. Image Process. 2006, 15, 430–444.

29. Mittal, A.; Soundararajan, R.; Bovik, A.C. Making a “completely blind” image quality analyzer. IEEE Signal Process. Lett. 2012, 20, 209–212.

30. Lee, C.; Lee, C.; Kim, C.S. Contrast enhancement based on layered difference representation of 2D histograms. IEEE Trans. Image Process. 2013, 22, 5372–5384.

| Input | Dong | LIME | MSR | MF | NPE | SRIE | Ours |
|---|---|---|---|---|---|---|---|
| bookshelf | 961.8 | 1063.3 | 1942.6 | 563.3 | 810.3 | 608.2 | 358.2 |
| cupboard | 306.9 | 431.4 | 930.6 | 368.4 | 678.3 | 348.9 | 361.9 |
| doll | 449.9 | 645.1 | 1534.8 | 397.2 | 800.5 | 476.8 | 365.5 |
| classroom | 470.6 | 687.7 | 1636.5 | 598.1 | 1120.9 | 539.7 | 410.3 |
| swimming pool | 1244.6 | 538.2 | 1373.6 | 684.5 | 1875.2 | 1140.9 | 295.5 |
| hall | 805.6 | 622.1 | 1638.9 | 658.9 | 1698.9 | 801.1 | 280.7 |
| gym | 625.8 | 846.9 | 1742.8 | 533.3 | 1014.2 | 666.2 | 392.1 |
| wardrobe | 574.3 | 1211.5 | 2477.9 | 613.3 | 554.2 | 429.5 | 455.2 |

| Input | Dong | LIME | MSR | MF | NPE | SRIE | Ours |
|---|---|---|---|---|---|---|---|
| bookshelf | 9.734 | 18.763 | 16.214 | 7.199 | 8.374 | 4.908 | 11.533 |
| cupboard | 35.375 | 82.298 | 89.709 | 42.794 | 54.187 | 14.187 | 63.57 |
| doll | 41.627 | 146.493 | 165.138 | 59.936 | 63.301 | 16.742 | 66.871 |
| classroom | 42.835 | 125.786 | 137.805 | 55.984 | 85.473 | 16.337 | 95.942 |
| swimming pool | 9.256 | 23.655 | 19.558 | 9.109 | 13.393 | 4.373 | 15.948 |
| hall | 8.537 | 18.114 | 14.541 | 8.858 | 9.876 | 4.361 | 10.375 |
| gym | 23.621 | 84.685 | 70.673 | 30.145 | 48.797 | 9.566 | 54.606 |
| wardrobe | 22.204 | 42.362 | 36.703 | 16.675 | 22.063 | 9.233 | 31.02 |

| Input | Dong | LIME | MSR | MF | NPE | SRIE | Ours |
|---|---|---|---|---|---|---|---|
| bookshelf | 8.331 | 9.002 | 8.059 | 8.704 | 9.018 | 7.769 | 7.081 |
| cupboard | 11.372 | 10.927 | 10.998 | 12.932 | 11.566 | 10.224 | 10.128 |
| doll | 7.653 | 8.085 | 8.536 | 8.389 | 8.106 | 7.741 | 7.588 |
| classroom | 10.714 | 11.547 | 10.322 | 11.485 | 11.485 | 10.771 | 10.153 |
| swimming pool | 9.913 | 9.247 | 8.864 | 9.585 | 9.264 | 8.501 | 8.438 |
| hall | 10.703 | 11.119 | 9.788 | 11.919 | 10.272 | 9.922 | 9.673 |
| gym | 8.885 | 8.509 | 8.232 | 9.843 | 8.314 | 7.652 | 7.145 |
| wardrobe | 6.965 | 7.412 | 6.762 | 7.805 | 7.209 | 6.423 | 5.782 |

| Method | DICM | LIME | VV | Avg. |
|---|---|---|---|---|
| Dong [13] | 4.313 | 2.601 | 3.597 | 3.504 |
| LIME [14] | 3.421 | 2.636 | 3.561 | 3.206 |
| MSR [12] | 3.346 | 2.452 | 3.629 | 3.142 |
| MF [17] | 3.506 | 2.825 | 3.234 | 3.188 |
| NPE [15] | 3.277 | 2.562 | 3.191 | 3.024 |
| SRIE [16] | 3.556 | 2.541 | 3.454 | 3.183 |
| Ours | 3.121 | 2.235 | 3.152 | 2.836 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhang, E.; Guo, L.; Guo, J.; Yan, S.; Li, X.; Kong, L. A Low-Brightness Image Enhancement Algorithm Based on Multi-Scale Fusion. Appl. Sci. 2023, 13, 10230. https://doi.org/10.3390/app131810230

Zhang E, Guo L, Guo J, Yan S, Li X, Kong L. A Low-Brightness Image Enhancement Algorithm Based on Multi-Scale Fusion. Applied Sciences. 2023; 13(18):10230. https://doi.org/10.3390/app131810230

Chicago/Turabian Style: Zhang, Enqi, Lihong Guo, Junda Guo, Shufeng Yan, Xiangyang Li, and Lingsheng Kong. 2023. "A Low-Brightness Image Enhancement Algorithm Based on Multi-Scale Fusion" Applied Sciences 13, no. 18: 10230. https://doi.org/10.3390/app131810230

APA Style: Zhang, E., Guo, L., Guo, J., Yan, S., Li, X., & Kong, L. (2023). A Low-Brightness Image Enhancement Algorithm Based on Multi-Scale Fusion. Applied Sciences, 13(18), 10230. https://doi.org/10.3390/app131810230