Immersive Phobia Therapy through Adaptive Virtual Reality and Biofeedback

Abstract

1. Introduction

2. Phobia Statistics and Therapy Methods

2.1. Commercial Virtual Reality Exposure Therapy Systems

2.2. Virtual Reality Exposure Therapy Systems Developed in the Academic Context

3. The PhoVR System

3.1. VR and Biophysical Acquisition Devices

3.2. Description of the Virtual Environments

3.2.1. Tutorial Scene

- Checkpoint—the type of task in which the user moves to a marker point. Upon successful completion of the task, a confirmation sound is heard.

- Photo—in this task, the user has to take a picture of an object or animal, which differs from scene to scene: in one scene it is a statue, in another a seagull, etc. When the user reaches the area around the target, the screen partially changes its appearance and the object is outlined. If the angle between the camera’s viewing direction and the direction toward the object is not small enough, the outline is yellow; the user has to look at the object to turn the outline green. The picture is taken by pressing the controller’s Trigger button.

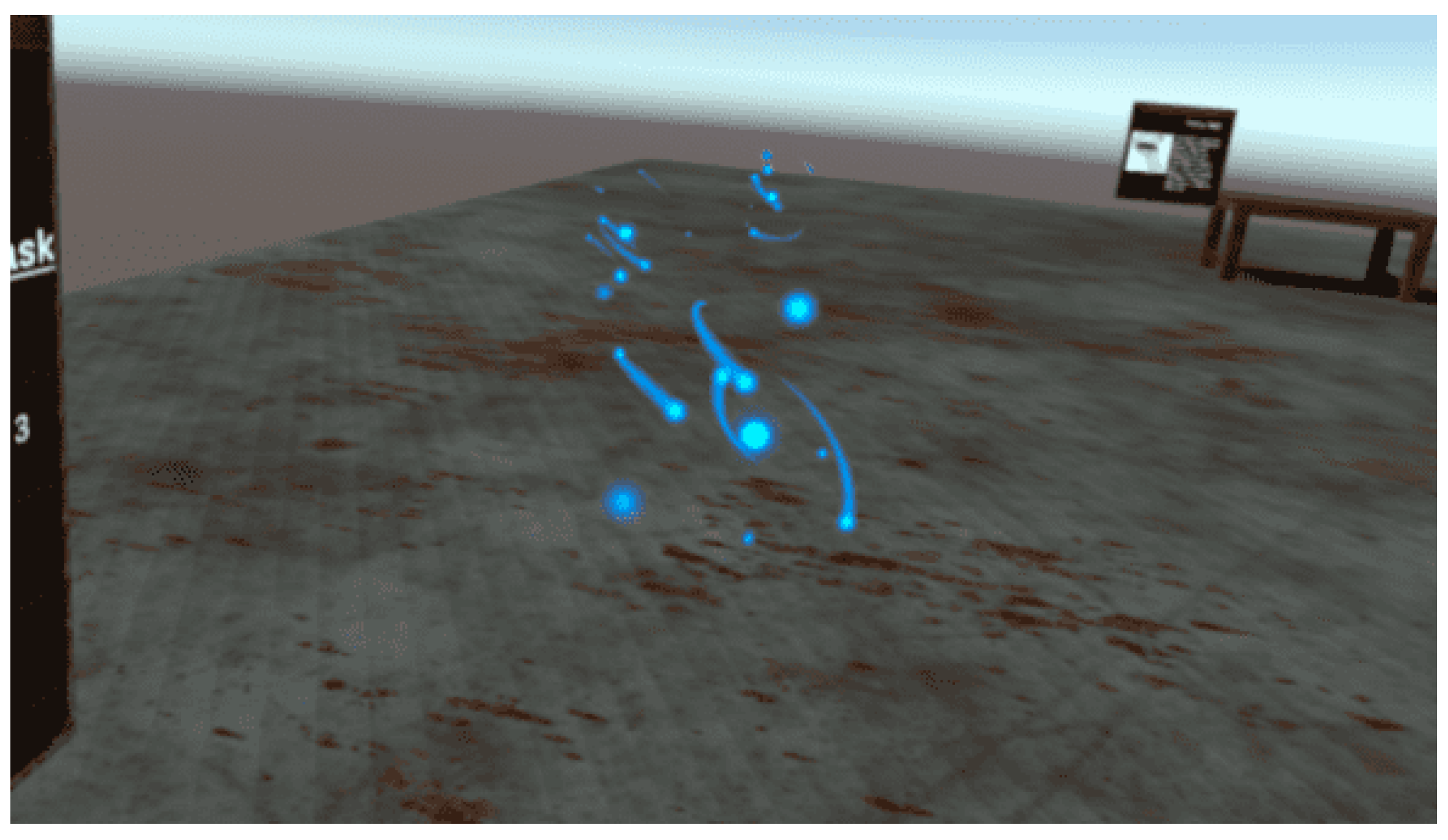

- Collection—the type of task in which the user collects three or more objects of the same type. The collectibles are blue particles. To collect them, the player needs to move toward the particles in VR so that a collision occurs between them and the player’s body (Figure 1).

- Pickup—in this task, the user has to pick up various objects from the scene. The objects differ from scene to scene: in one scene the object is a candle, in another a bottle, etc. To put an item in the inventory, the user points their right hand at the item and presses the controller’s Grip button. If the hand is pointed correctly, the controller vibrates and a semi-transparent circle appears at the base of the object (Figure 2).
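The outline rule of the Photo task reduces to an angle test between the camera’s forward vector and the vector from the camera to the target. The sketch below is a minimal Python illustration, not the application’s Unity code; the function names and the 10° threshold are hypothetical, since the text only says the angle must be “small enough”.

```python
import math
from typing import Optional, Sequence

def angle_between_deg(a: Sequence[float], b: Sequence[float]) -> float:
    """Angle in degrees between two 3D vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.hypot(*a) * math.hypot(*b)
    # Clamp to [-1, 1] so rounding error never pushes acos out of its domain.
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))

def photo_outline_color(
    camera_pos: Sequence[float],
    camera_forward: Sequence[float],
    target_pos: Sequence[float],
    in_target_area: bool,
    max_angle_deg: float = 10.0,  # hypothetical "small enough" threshold
) -> Optional[str]:
    """None outside the target area; otherwise 'green' if aimed at the target, else 'yellow'."""
    if not in_target_area:
        return None
    to_target = [t - c for t, c in zip(target_pos, camera_pos)]
    angle = angle_between_deg(camera_forward, to_target)
    return "green" if angle <= max_angle_deg else "yellow"

# Camera at the origin looking along +z.
print(photo_outline_color((0, 0, 0), (0, 0, 1), (0.5, 0, 5), True))  # about 5.7 degrees off-axis
print(photo_outline_color((0, 0, 0), (0, 0, 1), (5, 0, 5), True))    # 45 degrees off-axis
```

Using the direction to the target rather than its raw position keeps the test independent of how far away the statue or seagull is.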

- How easy was the task sequence?

- How easy was it to control your feelings?

- How enjoyable was the task sequence?

- Joystick—by moving the sticks up and down or right and left, the player can move around the scene;

- Joystick press—opens the Main Menu where various aspects of the application such as graphic quality, sound volume, movement speed, active scene, etc., can be modified;

- A—rotates the user to the left;

- B—rotates the user to the right;

- Trigger—used for selecting or confirming actions (menu/quests);

- Grip—used in the task of picking up an object.

3.2.2. Virtual Environments for Acrophobia Therapy

- Walking on a metal platform (Figure 4);

- Walking on the edge of the main ridge;

- Descent route, along the river.

- Level 1—the player will walk on the terrace, at a height of 66 m, equivalent to the 17th floor;

- Level 2—height of 108 m, equivalent to the 28th floor;

- Level 3—the last level is the roof of the building, located at a height of 131 m, equivalent to the 35th floor.

3.2.3. Virtual Environments for Claustrophobia Therapy

3.2.4. Virtual Environment for Fear of Public Speaking Therapy

- A module for setting up the scene (Figure 15);

- A module for running the scene;

- Immersing the user within the previously chosen and configured scene;

- Speaking freely or presenting a topic within the VR application;

- Monitoring immersion factors;

- Real-time calculation of the degree of attention of each person in the virtual audience;

- Numerical display and color indicators of the attention degree of each person in the virtual audience;

- Animations corresponding to the calculated degree of attention.

- In-session analysis;

- Post-session analysis.

- Small room (interview room)—maximum 4 seats;

- Medium-sized room (lecture hall)—maximum 27 seats;

- Large room (amphitheater)—maximum 124 seats (Figure 16).

- Empty room (0%);

- Small audience size (25%);

- Average audience size (50%);

- Large audience size (75%);

- Full room (100%).
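Combining a room’s maximum capacity with the selected audience size gives the number of seats to fill. A minimal sketch, assuming fractional seats are rounded half up (the text does not say how they are handled) and using hypothetical names:

```python
# Capacities and audience levels as listed in the text; names are hypothetical.
ROOM_CAPACITY = {"small": 4, "medium": 27, "large": 124}
AUDIENCE_LEVELS = (0, 25, 50, 75, 100)  # percent of seats occupied

def occupied_seats(capacity: int, percent: int) -> int:
    """Number of seats to fill, rounding half up (an assumption)."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    # Integer arithmetic avoids float error and Python's round-half-to-even.
    return (capacity * percent + 50) // 100

for room, capacity in ROOM_CAPACITY.items():
    print(room, [occupied_seats(capacity, p) for p in AUDIENCE_LEVELS])
```

With this rule the medium room at 25% seats 7 of its 27 places; a floor-based rule would seat 6, so the rounding choice matters for the smaller rooms.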

- Placing the user within a chosen and previously configured scene;

- Speaking freely or presenting a topic within the VR exposure scenario (Figure 17);

- During the presentation, the system monitors the factors that contribute to the audience’s interest, calculates the degree of attention (cumulative score) for each virtual person, displays this degree numerically and through color indicators, and performs animations of the virtual audience corresponding to their degree of attention.

- Position relative to the audience—calculated by measuring the distance between the presenter and each person in the audience;

- Hand movement—the total length of the hands’ movement trajectories over a defined time interval (for example, the last 10 s);

- Gaze direction—the direction in which the presenter is looking affects each audience member’s factors according to how far that person is from the center of the presenter’s field of view. This applies only to audience members located within the angle of attention, which is centered on the presenter’s gaze direction in the virtual environment and has a preset aperture (for example, 60 degrees). The attention factor is calculated from the angle between the presenter’s gaze direction and the direction of the audience member (the segment connecting the presenter’s position to the person’s position) (Figure 18).

- Voice volume—during the session, the decibel level recorded by the microphone is measured, and depending on a chosen parameter, the presenter has to adjust the voice volume so that everyone in the audience can hear what the presenter is saying. In addition, the presenter’s score will be adversely affected if there are too large or too frequent fluctuations in the voice volume.

- Speech rate—the number of words the presenter speaks is measured throughout the session and averaged to show the number of words per minute. This is calculated based on the number of words generated using the speech-to-text algorithm (conversion performed using Microsoft Azure Services [37]).

- Pauses between words—during the session, the duration of time when the presenter is not speaking, i.e., no sound is recorded by the microphone, is measured. The degrees of fluency and monotony are calculated based on various empirical rules.
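Several of these factors can be computed directly from tracked positions and microphone levels. The following Python sketch is illustrative only: the per-frame dB input, the -40 dB silence threshold, the linear gaze falloff, and the use of the standard deviation as the fluctuation measure are assumptions rather than PhoVR’s published rules, and the 60° aperture is treated as the full cone angle (30° on either side of the gaze). All function names are hypothetical.

```python
import math
import statistics
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]

def hand_path_length(samples: List[Tuple[float, Vec3]], window_s: float = 10.0) -> float:
    """Trajectory length of one hand over the last `window_s` seconds.

    `samples` are (timestamp_s, position) pairs sorted by time.
    """
    if len(samples) < 2:
        return 0.0
    t_end = samples[-1][0]
    recent = [p for t, p in samples if t >= t_end - window_s]
    return sum(math.dist(a, b) for a, b in zip(recent, recent[1:]))

def gaze_attention(presenter: Vec3, gaze_dir: Vec3, person: Vec3,
                   aperture_deg: float = 60.0) -> float:
    """Attention factor in [0, 1]: 1 at the gaze center, 0 outside the aperture (linear falloff assumed)."""
    to_person = [p - q for p, q in zip(person, presenter)]
    dot = sum(a * b for a, b in zip(gaze_dir, to_person))
    norm = math.hypot(*gaze_dir) * math.hypot(*to_person)
    angle = math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))
    half = aperture_deg / 2.0
    return 0.0 if angle > half else 1.0 - angle / half

def words_per_minute(word_count: int, duration_s: float) -> float:
    """Average speech rate from a speech-to-text word count."""
    return word_count / (duration_s / 60.0)

def volume_fluctuation(levels_db: Sequence[float]) -> float:
    """Population standard deviation of per-frame loudness (assumed fluctuation metric)."""
    return statistics.pstdev(levels_db)

def pause_time(levels_db: Sequence[float], frame_s: float, silence_db: float = -40.0) -> float:
    """Seconds during which the microphone level stays below a silence threshold."""
    return frame_s * sum(1 for level in levels_db if level < silence_db)
```

For the total hand movement, `hand_path_length` would be called once per hand and the two lengths added.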

- Various animations attributed to the people in the audience;

- Numerical values and color codes (optionally displayed and can be activated by pressing a button on the controller).
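How the factor scores are combined into the cumulative attention degree is not specified, so the sketch below simply assumes a weighted average of factor scores normalized to [0, 1] and maps it to a color and an animation state. The weights, the 40/70 thresholds, and the intermediate “Neutral” state are hypothetical; only the “Interested” and “Not interested” states are mentioned in the text.

```python
from typing import Dict, Tuple

# Hypothetical weights; the combination rule is not published.
DEFAULT_WEIGHTS: Dict[str, float] = {
    "position": 0.2, "hand_movement": 0.15, "gaze": 0.25,
    "voice_volume": 0.15, "speech_rate": 0.15, "pauses": 0.1,
}

def attention_score(factors: Dict[str, float],
                    weights: Dict[str, float] = DEFAULT_WEIGHTS) -> float:
    """Weighted average of factor scores (clamped to [0, 1]) as a percentage; missing factors count as 0."""
    total_w = sum(weights.values())
    s = sum(w * min(1.0, max(0.0, factors.get(name, 0.0))) for name, w in weights.items())
    return 100.0 * (s / total_w)

def indicator(score: float, low: float = 40.0, high: float = 70.0) -> Tuple[str, str]:
    """Map a score to (color, animation state); thresholds are assumptions."""
    if score >= high:
        return "green", "Interested"
    if score >= low:
        return "yellow", "Neutral"
    return "red", "Not interested"

score = attention_score({"gaze": 0.9, "position": 0.8, "speech_rate": 0.6})
print(round(score, 1), indicator(score))
```

Keeping the score and the display mapping separate makes it easy to recalibrate the thresholds without touching the factor computations.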

- Plots for evaluating all the factors mentioned during the session;

- Comparative data with previous sessions in which the user participated (history/evolution).

3.3. Technologies and Performance Evaluation

- Unity 3D [38]—a graphics engine with support for virtual reality;

- SteamVR plugin [39]—an extension for Unity 3D;

- Adobe Fuse [40]—used for building the 3D models of the audience;

- Adobe Mixamo [41]—an application used for adding animations to 3D models. With Adobe Mixamo, we rendered the various states of people in the audience, such as “Interested” or “Not interested”;

- Microsoft Azure Speech to Text [42]—a cloud service that transcribes the voice recorded with the microphone into text. The text is subsequently analyzed to obtain statistics regarding speech rate and pauses between words.

- Processor—Qualcomm Snapdragon XR2 (7 nm);

- Memory—6 GB LPDDR4X;

- Video card—Adreno 650 (integrated in the XR2);

- Storage—256 GB;

- Display refresh rate—72 Hz, 90 Hz, 120 Hz;

- Resolution—1832 × 1920 (per eye).

- Processor—AMD Ryzen 7 3700X, 3.6 GHz;

- Memory—32 GB—DDR4 3200 MHz;

- Video card—NVIDIA GeForce GTX 1060, 6 GB.

3.4. Biofeedback Integrated in the Virtual Environment

3.5. Signaling Difficult Moments

- For people with severe forms of phobia, the intervention of a real therapist is preferred, because the therapist has the knowledge to intervene adequately in case of an excessive response from the patient and to orchestrate the exposure therapy according to the patient’s emotional state. The virtual companion is effective both for patients with severe phobias (treated in the clinic) and for users with mild phobias (treated autonomously at home). It provides simple, general advice (it reminds the user of generic or therapist-selected relaxation methods) and offers supporting elements when the user may need them.

- The virtual companion will have a beneficial psychological effect, removing the sense of isolation felt by some users in virtual environments, distracting from the phobic stimulus if necessary, and encouraging the user in difficult moments or congratulating them on achievements.

- The virtual companion is an extremely attractive concept for young users, who are familiar with this kind of companion from video games. In Figure 21, we present two virtual companions—an eagle and a fox.

3.6. Dynamic Adaptation of the Virtual Environment

3.7. The Control Panel

- Administration by using a therapist account;

- Patient management;

- Patient profile configuration (including clinical profile);

- Recording therapy sessions and saving them in the cloud;

- Displaying patients’ therapy history (Figure 23);

- Visualization of the results of therapy sessions with the possibility to add comments/notes/scores/information about their progress (Figure 24);

- Visualization of patient progress (easy-to-interpret graphs);

- Session recordings’ playback (Figure 27).

- The possibility to watch in real time what the patient sees in VR;

- Visualization of biometric data and statistics;

- Watching the execution of tasks for each session level;

- The possibility of recording special sessions only with biometric data monitoring without using the virtual environments (videos/images the therapist selects that can be viewed by the patient on the HMD).

- Administration by using a therapist account, which was tested by generating a therapist account and through authentication;

- Patient management through the possibility of adding and deleting patients;

- Patient profile configuration (including clinical profile) through the possibility of modifying the personal and clinical data of a patient;

- Proper communication with the PhoVR hardware devices by checking the WebSocket connection with the two systems (Oculus Quest 2 and HMD connected to PC) (Figure 28);

- Monitoring patient therapy sessions (real-time communication) using a live view from the VR device and biometric data visualization in real time (Figure 29);

- Recording therapy sessions and saving their data in the cloud, visualizing a patient’s session history;

- Viewing a therapy session with the possibility of adding comments/grades/scores/information about its progress;

- Displaying recordings of the therapy sessions.
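The connection check with the two VR systems could be implemented by recording when each device last sent a message over its WebSocket. The class below is a hypothetical, standard-library-only sketch of that bookkeeping (the 5 s timeout and the device labels are assumptions); it does not open sockets itself, and the transport layer would call `heartbeat` on every received message.

```python
import time
from typing import Callable, Dict, Optional

class ConnectionMonitor:
    """Tracks the last message time per device (hypothetical design)."""

    def __init__(self, timeout_s: float = 5.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.timeout_s = timeout_s
        self.clock = clock  # injectable so the logic can be tested without waiting
        self.last_seen: Dict[str, float] = {}

    def heartbeat(self, device: str) -> None:
        """Record that a message (e.g. a WebSocket ping/pong) arrived from `device`."""
        self.last_seen[device] = self.clock()

    def is_connected(self, device: str) -> bool:
        seen: Optional[float] = self.last_seen.get(device)
        return seen is not None and self.clock() - seen <= self.timeout_s

    def status(self) -> Dict[str, bool]:
        """Connection state of every device seen so far."""
        return {device: self.is_connected(device) for device in self.last_seen}
```

A monotonic clock is used so that wall-clock adjustments on the therapist’s machine cannot mark a live device as disconnected.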

4. Testing the PhoVR System

- To evaluate the scenarios’ level of realism and their therapeutic efficiency;

- To evaluate the facilities provided to the psychotherapists for patient and therapy session management;

- To obtain suggestions and comments for future system improvement.

4.1. The Testing Procedure

- The control panel for patient and therapy session management;

- The presentation of the VR equipment and a training session on how to install and use it;

- The presentation of the biometric data acquisition device and a training session on how to use it;

- A brief presentation of the testing scenarios;

- The testing procedure with psychotherapy specialists consisted of three main stages: testing the VR system for acrophobia and claustrophobia therapy; testing the public speaking training system; and system evaluations.

- Filling in the STAI questionnaire;

- The presentation of the VR equipment;

- The presentation of the biometric data acquisition device;

- A short training session on how to navigate through the scenes and perform the required tasks;

- A brief presentation of the testing scenarios.

- A test of the VR system for acrophobia and claustrophobia therapy;

- A test of the public speaking therapy system;

- System evaluations by filling in the evaluation questionnaire.

- The results obtained for the EDA-T signal were MTraining = 8.97 (±6.23) µS; MCity = 8.53 (±6.86) µS; MBridge = 11.52 (±6.76) µS; MTunnel = 11.70 (±6.77) µS; and MLabyrinth = 8.10 (±2.89) µS. A one-way (unifactorial) analysis of variance revealed a significant difference among these values, F(5, 1871) = 16.72, p < 0.001. Post hoc Bonferroni-corrected tests were used to compare all pairs of virtual environments. Significant mean differences (MDs) were found between training and bridge (MD = −2.55 µS, p < 0.001), training and tunnel (MD = −2.72 µS, p < 0.001), city and bridge (MD = −2.99 µS, p < 0.001), city and tunnel (MD = −3.17 µS, p < 0.001), bridge and labyrinth (MD = +3.42 µS, p = 0.002), and tunnel and labyrinth (MD = +3.60 µS, p < 0.001).

- The results obtained for the HR signal were MTraining = 83.52 (±10.56) bpm; MCity = 85.39 (±9.78) bpm; MBridge = 82.73 (±9.77) bpm; MTunnel = 82.90 (±10.38) bpm; and MLabyrinth = 83.78 (±5.36) bpm. A one-way (unifactorial) analysis of variance revealed a significant difference among these values, F(5, 1871) = 4.81, p < 0.001. Post hoc Bonferroni-corrected tests were used to compare all pairs of virtual environments. Significant mean differences (MDs) were found between training and city (MD = −1.87 bpm, p = 0.019), city and bridge (MD = +2.66 bpm, p = 0.017), and city and tunnel (MD = +2.49 bpm, p = 0.002).
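The statistics above follow a standard pattern: a one-way ANOVA across environments, followed by pairwise comparisons whose p-values are Bonferroni-adjusted. The sketch below implements the F statistic and the Bonferroni adjustment from scratch (it does not compute p-values, which require the F and t distributions) and recomputes the mean differences from the reported EDA-T means. Function names are illustrative.

```python
from itertools import combinations
from typing import Dict, List, Sequence, Tuple

def one_way_anova_f(groups: Sequence[Sequence[float]]) -> Tuple[float, int, int]:
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    values = [x for g in groups for x in g]
    n, k = len(values), len(groups)
    grand_mean = sum(values) / n
    means = [sum(g) / len(g) for g in groups]
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    df_b, df_w = k - 1, n - k
    return (ss_between / df_b) / (ss_within / df_w), df_b, df_w

def bonferroni(p_values: List[float]) -> List[float]:
    """Bonferroni-adjusted p-values: multiply by the number of tests, cap at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]

def pairwise_mean_differences(means: Dict[str, float]) -> Dict[Tuple[str, str], float]:
    """MD = M_a - M_b for every unordered pair, in insertion order."""
    return {(a, b): means[a] - means[b] for a, b in combinations(means, 2)}

# Reported EDA-T means (µS).
eda_means = {"Training": 8.97, "City": 8.53, "Bridge": 11.52, "Tunnel": 11.70, "Labyrinth": 8.10}
for pair, md in pairwise_mean_differences(eda_means).items():
    print(pair, round(md, 2))
```

Because the reported means are rounded to two decimals, recomputed differences can deviate by 0.01 from the published MDs (training vs. tunnel gives −2.73 rather than −2.72).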

4.2. Results

- Most subjects (16 people) had both anxiety as a trait and anxiety as a state;

- One subject with a high score (58) on the T-Anxiety scale (anxiety as a trait) had an average score (40) on the S-Anxiety scale (anxiety as a state), which can be interpreted as good self-control;

- One subject with an average score on the T-Anxiety scale (38) had a high score (50) on the S-Anxiety scale, which indicates that some situations can have a strong emotional impact on this individual;

- One subject had average scores on both scales, which indicates a balance in terms of anxiety.
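The four interpretations above combine a trait (T-Anxiety) level and a state (S-Anxiety) level. STAI scale scores range from 20 to 80; the cutoff separating “average” from “high” is not given, so the sketch assumes 45, which is consistent with the scores quoted (40 and 38 average, 50 and 58 high). It illustrates the decision logic only and is not a clinical scoring rule; the function names are hypothetical.

```python
def stai_level(score: int, high_cutoff: int = 45) -> str:
    """Classify one STAI scale score (20-80); the cutoff is hypothetical."""
    if not 20 <= score <= 80:
        raise ValueError("STAI scale scores range from 20 to 80")
    return "high" if score >= high_cutoff else "average"

def interpret(trait: int, state: int, high_cutoff: int = 45) -> str:
    """Combine T-Anxiety and S-Anxiety levels as described in the text."""
    t = stai_level(trait, high_cutoff)
    s = stai_level(state, high_cutoff)
    if t == "high" and s == "average":
        return "high trait, average state: possible good self-control"
    if t == "average" and s == "high":
        return "average trait, high state: strong situational impact"
    if t == "average" and s == "average":
        return "balanced anxiety"
    return "elevated trait and state anxiety"

print(interpret(58, 40))
print(interpret(38, 50))
```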

- Comments from each session should be made available in a more centralized way at the end of the patient file;

- An analysis of the patient’s progress;

- A preconfiguration module for psychologists with different levels depending on the progress of the patient;

- Advanced search;

- Accessing past appointments.

- More phobias could be addressed;

- Familiarization with the system may take some time;

- The difficulty of performing certain movements;

- The feeling of dizziness caused by the VR equipment.

- Addressing multiple phobias;

- Working on the feeling of realism;

- Greater freedom of movement with the left hand, perhaps with the body as well;

- A longer virtual environment with more dispersed tasks;

- Using real images from a natural environment;

- Improvement from a visual qualitative point of view.

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Conflicts of Interest

References

- UN News. Global Perspective Human Stories. Available online: https://news.un.org/en/story/2022/06/1120682 (accessed on 15 July 2023).

- World Health Organization. Mental Disorder. Available online: https://www.who.int/news-room/fact-sheets/detail/mental-disorders (accessed on 15 July 2023).

- Mental Health and COVID-19: Early Evidence of the Pandemic’s Impact: Scientific Brief. Available online: https://www.who.int/publications/i/item/WHO-2019-nCoV-Sci_Brief-Mental_health-2022.1 (accessed on 15 July 2023).

- Thakur, N. MonkeyPox2022Tweets: A large-scale Twitter dataset on the 2022 Monkeypox outbreak, findings from analysis of Tweets, and open research questions. Infect. Dis. Rep. 2022, 14, 855–883.

- Araf, Y.; Maliha, S.T.; Zhai, J.; Zheng, C. Marburg virus outbreak in 2022: A public health concern. Lancet Microb. 2023, 4, e9.

- List of Phobias: Common Phobias from A to Z. Available online: https://www.verywellmind.com/list-of-phobias-2795453 (accessed on 15 July 2023).

- Phobia Statistics and Surprising Facts About Our Biggest Fears. Available online: https://www.fearof.net/phobia-statistics-and-surprising-facts-about-our-biggest-fears/ (accessed on 15 July 2023).

- Curtiss, J.E.; Levine, D.S.; Ander, I.; Baker, A.W. Cognitive-behavioral treatments for anxiety and stress-related disorders. Focus 2021, 19, 184–189.

- Opriş, D.; Pintea, S.; García-Palacios, A.; Botella, C.; Szamosközi, Ş.; David, D. Virtual reality exposure therapy in anxiety disorders: A quantitative meta-analysis: Virtual Reality Exposure Therapy. Depress. Anxiety 2012, 29, 85–93.

- Rothbaum, B.O.; Hodges, L.F.; Kooper, R.; Opdyke, D.; Williford, J.S.; North, M. Effectiveness of computer-generated (virtual reality) graded exposure in the treatment of acrophobia. Am. J. Psychiatry 1995, 152, 626–628.

- C2Care. Available online: https://www.c2.care/en/ (accessed on 15 July 2023).

- Amelia. Available online: https://ameliavirtualcare.com (accessed on 15 July 2023).

- Virtual Reality Medical Center. Available online: https://vrphobia.com/ (accessed on 15 July 2023).

- Virtually Better. Available online: https://www.virtuallybetter.com/ (accessed on 15 July 2023).

- XRHealth. Available online: https://www.xr.health/ (accessed on 15 July 2023).

- Schafer, P.; Koller, M.; Diemer, J.; Meixner, G. Development and evaluation of a virtual reality-system with integrated tracking of extremities under the aspect of Acrophobia. In Proceedings of the 2015 SAI Intelligent Systems Conference (IntelliSys), London, UK, 10–11 November 2015.

- Coelho, C.M.; Silva, C.F.; Santos, J.A.; Tichon, J.; Wallis, G. Virtual and Real Environments for Acrophobia Desensitisation. PsychNology J. 2008, 6, 203.

- Emmelkamp, P.M.G.; Krijn, M.; Hulsbosch, A.M.; de Vries, S.; Schuemie, M.J.; van der Mast, C.A.P.G. Virtual reality treatment versus exposure in vivo: A comparative evaluation in acrophobia. Behav. Res. Ther. 2002, 40, 509–516.

- Abdullah, M.; Ahmed, Z. An effective virtual reality based remedy for acrophobia. Int. J. Adv. Comput. Sci. Appl. IJACSA 2018, 9, 162–167.

- Donker, T.; van Klaveren, C.; Cornelisz, I.; Kok, R.N.; van Gelder, J.-L. Analysis of usage data from a self-guided app-based virtual reality Cognitive Behavior Therapy for acrophobia: A randomized controlled trial. J. Clin. Med. 2020, 9, 1614.

- Maron, P.; Powell, V.; Powell, W. Differential effect of neutral and fear-stimulus virtual reality exposure on physiological indicators of anxiety in acrophobia. In Proceedings of the International Conference on Disability, Virtual Reality and Assistive Technology, Los Angeles, CA, USA, 20–22 September 2016; pp. 149–155.

- Gromer, D.; Madeira, O.; Gast, P.; Nehfischer, M.; Jost, M.; Müller, M.; Pauli, P. Height simulation in a virtual reality CAVE system: Validity of fear responses and effects of an immersion manipulation. Front. Hum. Neurosci. 2018, 12, 372.

- Levy, F.; Leboucher, P.; Rautureau, G.; Jouvent, R. E-virtual reality exposure therapy in acrophobia: A pilot study. J. Telemed. Telecare 2016, 22, 215–220.

- Chardonnet, J.-R.; Di Loreto, C.; Ryard, J.; Housseau, A. A virtual reality simulator to detect acrophobia in work-at-height situations. In Proceedings of the 2018 IEEE Conference on Virtual Reality and 3D User Interfaces (VR), Tuebingen/Reutlingen, Germany, 18–22 March 2018.

- Slater, M.; Pertaub, D.-P.; Barker, C.; Clark, D.M. An experimental study on fear of public speaking using a virtual environment. Cyberpsychol. Behav. 2006, 9, 627–633.

- Pertaub, D.P.; Slater, M.; Barker, C. An experiment on fear of public speaking in virtual reality. Stud. Health Technol. Inform. 2001, 81, 372–378.

- DIVE. Available online: https://www.ri.se/sv?refdom=sics.se (accessed on 9 September 2023).

- North, M.M.; North, S.M.; Coble, J.R. Virtual reality therapy: An effective treatment for the fear of public speaking. Int. J. Virtual Real. Multimed. Publ. Prof. 1998, 3, 318–320.

- Stupar-Rutenfrans, S.; Ketelaars, L.E.H.; van Gisbergen, M.S. Beat the fear of public speaking: Mobile 360° video virtual reality exposure training in home environment reduces public speaking anxiety. Cyberpsychol. Behav. Soc. Netw. 2017, 20, 624–633.

- Nazligul, M.D.; Yilmaz, M.; Gulec, U.; Gozcu, M.A.; O’Connor, R.V.; Clarke, P.M. Overcoming public speaking anxiety of software engineers using virtual reality exposure therapy. Commun. Comput. Inf. Sci. 2017, 191–202.

- Bubel, M.; Jiang, R.; Lee, C.H.; Shi, W.; Tse, A. AwareMe: Addressing fear of public speech through awareness. In Proceedings of the 2016 CHI Conference Extended Abstracts on Human Factors in Computing Systems, San Jose, CA, USA, 7–12 May 2016; ACM: New York, NY, USA.

- Koller, M.; Schafer, P.; Lochner, D.; Meixner, G. Rich interactions in virtual reality exposure therapy: A pilot-study evaluating a system for presentation training. In Proceedings of the 2019 IEEE International Conference on Healthcare Informatics (ICHI), Xi’an, China, 10–13 June 2019.

- Oculus Quest 2. Available online: https://www.oculus.com/experiences/quest/ (accessed on 15 July 2023).

- HTC Vive. Available online: https://www.vive.com/us/ (accessed on 15 July 2023).

- Valve Index. Available online: https://www.valvesoftware.com/ro/index (accessed on 15 July 2023).

- BITalino. Available online: https://www.pluxbiosignals.com/collections/bitalino (accessed on 15 July 2023).

- Microsoft Azure. Available online: https://azure.microsoft.com/ (accessed on 15 July 2023).

- Unity 3D. Available online: https://unity.com/ (accessed on 15 July 2023).

- Steam VR Plugin for Unity. Available online: https://assetstore.unity.com/packages/tools/integration/steamvr-plugin-32647 (accessed on 15 July 2023).

- Adobe Fuse. Available online: https://www.adobe.com/wam/fuse.html (accessed on 15 July 2023).

- Adobe Mixamo. Available online: https://www.mixamo.com/#/ (accessed on 15 July 2023).

- Microsoft Azure Speech to Text. Available online: https://azure.microsoft.com/en-us/products/cognitive-services/speech-to-text (accessed on 15 July 2023).

- Bălan, O.; Cristea, Ș.; Moise, G.; Petrescu, L.; Ivașcu, S.; Moldoveanu, A.; Leordeanu, M. Ether—An assistive virtual agent for acrophobia therapy in virtual reality. In Proceedings of the HCI International 2020—Late Breaking Papers: Virtual and Augmented Reality, Copenhagen, Denmark, 19–24 July 2020; pp. 12–25.

- Tudose, C.; Odubasteanu, C. Object-relational mapping using JPA, hibernate and spring data JPA. In Proceedings of the 2021 23rd International Conference on Control Systems and Computer Science (CSCS), Bucharest, Romania, 26–28 May 2021.

- Bonteanu, A.M.; Tudose, C. Multi-platform Performance Analysis for CRUD Operations in Relational Databases from Java Programs Using Hibernate. In Proceedings of the International Conference on Big Data Intelligence and Computing (DataCom 2022), Denarau Island, Fiji, 8–10 December 2022; Springer Nature: Singapore, 2022; pp. 275–288.

- Anghel, I.I.; Calin, R.S.; Nedelea, M.L.; Stanica, I.C.; Tudose, C.; Boiangiu, C.A. Software development methodologies: A comparative analysis. UPB Sci. Bull. 2022, 83, 3.

- Julian, L.J. Measures of anxiety: State-trait anxiety inventory (STAI), beck anxiety inventory (BAI), and hospital anxiety and depression scale-anxiety (HADS-A). Arthritis Care Res. 2011, 63, 467–472.

- Gilbert, D.G.; Robinson, J.H.; Chamberlin, C.L.; Spielberger, C.D. Effects of smoking/nicotine on anxiety, heart rate, and lateralization of EEG during a stressful movie. Psychophysiology 1989, 26, 311–320.

- Bouchard, S.; Ivers, H.; Gauthier, J.G.; Pelletier, M.-H.; Savard, J. Psychometric properties of the French version of the State-Trait Anxiety Inventory (form Y) adapted for older adults. La Rev. Can. Vieil. Can. J. Aging 1998, 17, 440–453.

| Scene | Oculus Quest 2, minimum (fps) | Oculus Quest 2, average (fps) | Desktop system, minimum (fps) | Desktop system, average (fps) |
|---|---|---|---|---|
| Main menu | 60 | 72 | 74 | 119 |
| Tutorial scene | 55 | 60 | 71 | 120 |
| Acrophobia—natural environment | 30 | 35 | 60 | 86 |
| Acrophobia—urban environment | 35 | 40 | 68 | 90 |
| Claustrophobia—tunnel | 57 | 60 | 74 | 114 |
| Claustrophobia—cave | 58 | 60 | 70 | 105 |
| Interview scenario | - | - | 87 | 115 |
| Classroom scenario | - | - | 98 | 117 |
| Amphitheater scenario | - | - | 64 | 91 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Moldoveanu, A.; Mitruț, O.; Jinga, N.; Petrescu, C.; Moldoveanu, F.; Asavei, V.; Anghel, A.M.; Petrescu, L. Immersive Phobia Therapy through Adaptive Virtual Reality and Biofeedback. Appl. Sci. 2023, 13, 10365. https://doi.org/10.3390/app131810365

Moldoveanu A, Mitruț O, Jinga N, Petrescu C, Moldoveanu F, Asavei V, Anghel AM, Petrescu L. Immersive Phobia Therapy through Adaptive Virtual Reality and Biofeedback. Applied Sciences. 2023; 13(18):10365. https://doi.org/10.3390/app131810365

Chicago/Turabian style: Moldoveanu, Alin, Oana Mitruț, Nicolae Jinga, Cătălin Petrescu, Florica Moldoveanu, Victor Asavei, Ana Magdalena Anghel, and Livia Petrescu. 2023. "Immersive Phobia Therapy through Adaptive Virtual Reality and Biofeedback" Applied Sciences 13, no. 18: 10365. https://doi.org/10.3390/app131810365

APA style: Moldoveanu, A., Mitruț, O., Jinga, N., Petrescu, C., Moldoveanu, F., Asavei, V., Anghel, A. M., & Petrescu, L. (2023). Immersive Phobia Therapy through Adaptive Virtual Reality and Biofeedback. Applied Sciences, 13(18), 10365. https://doi.org/10.3390/app131810365