Visual Image Dehazing Using Polarimetric Atmospheric Light Estimation

Abstract

1. Introduction

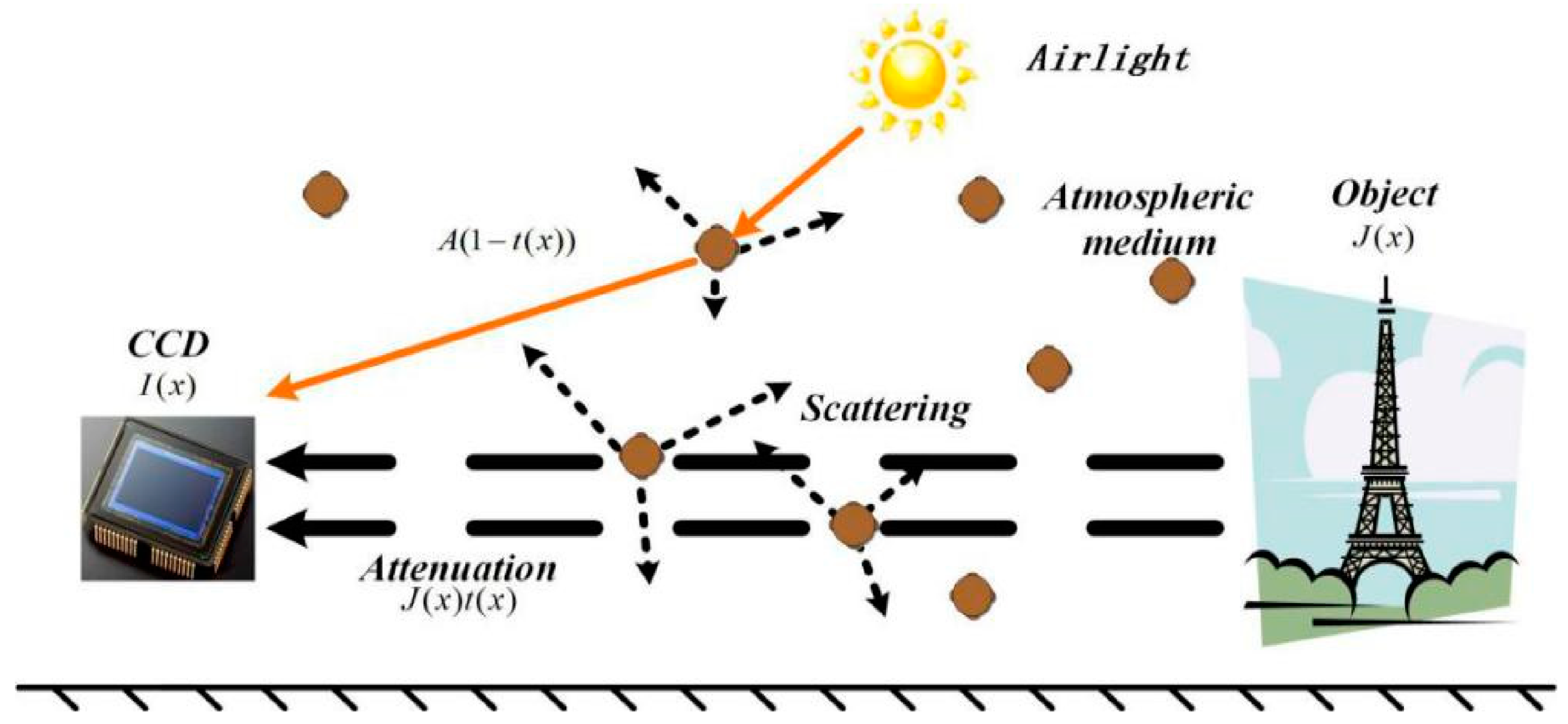

2. Dark Channel Dehazing Theory (DCP)

3. Atmospheric Light Estimation

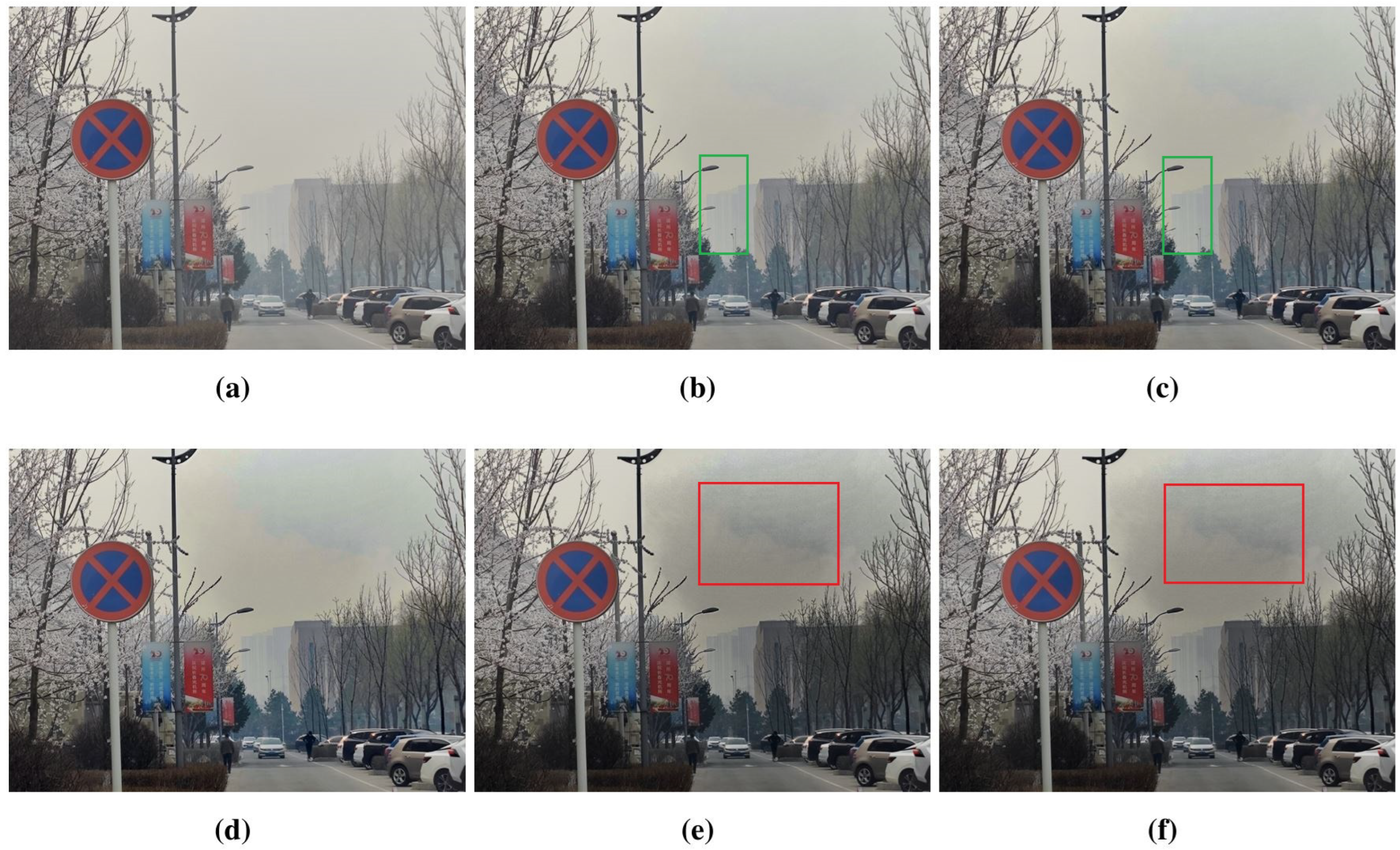

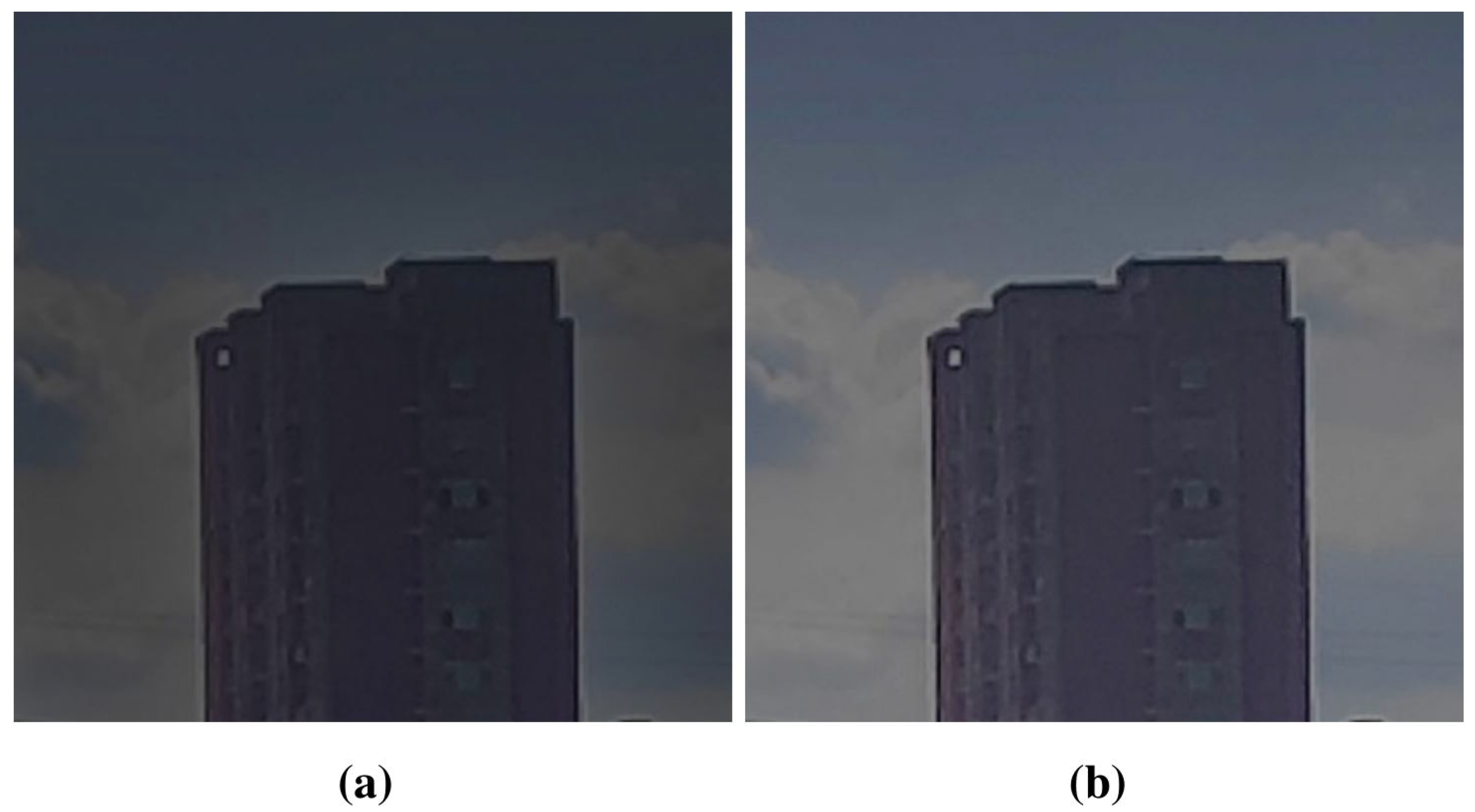

3.1. Influence of Atmospheric Light Intensity

3.2. Polarization State Estimation Model

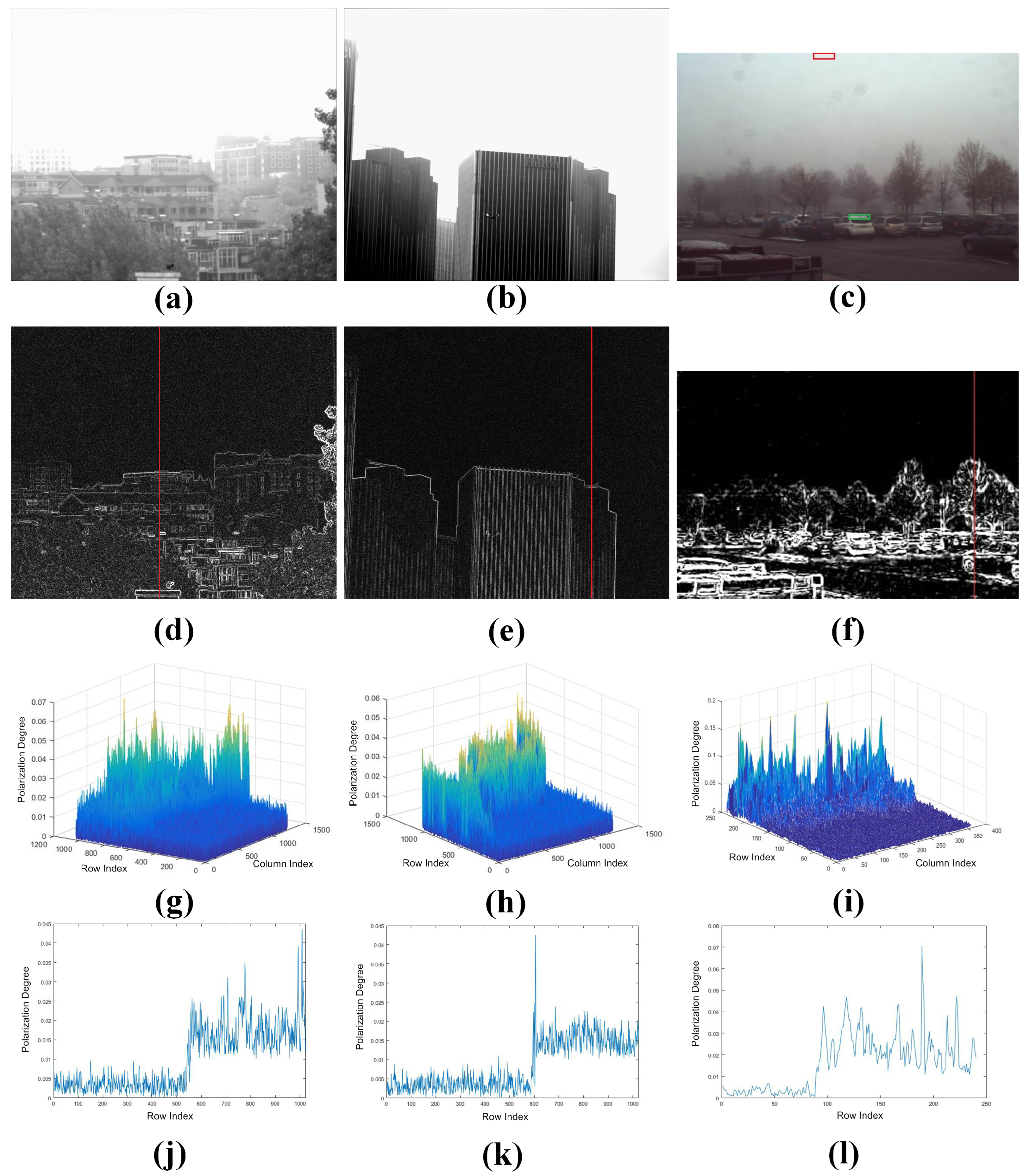

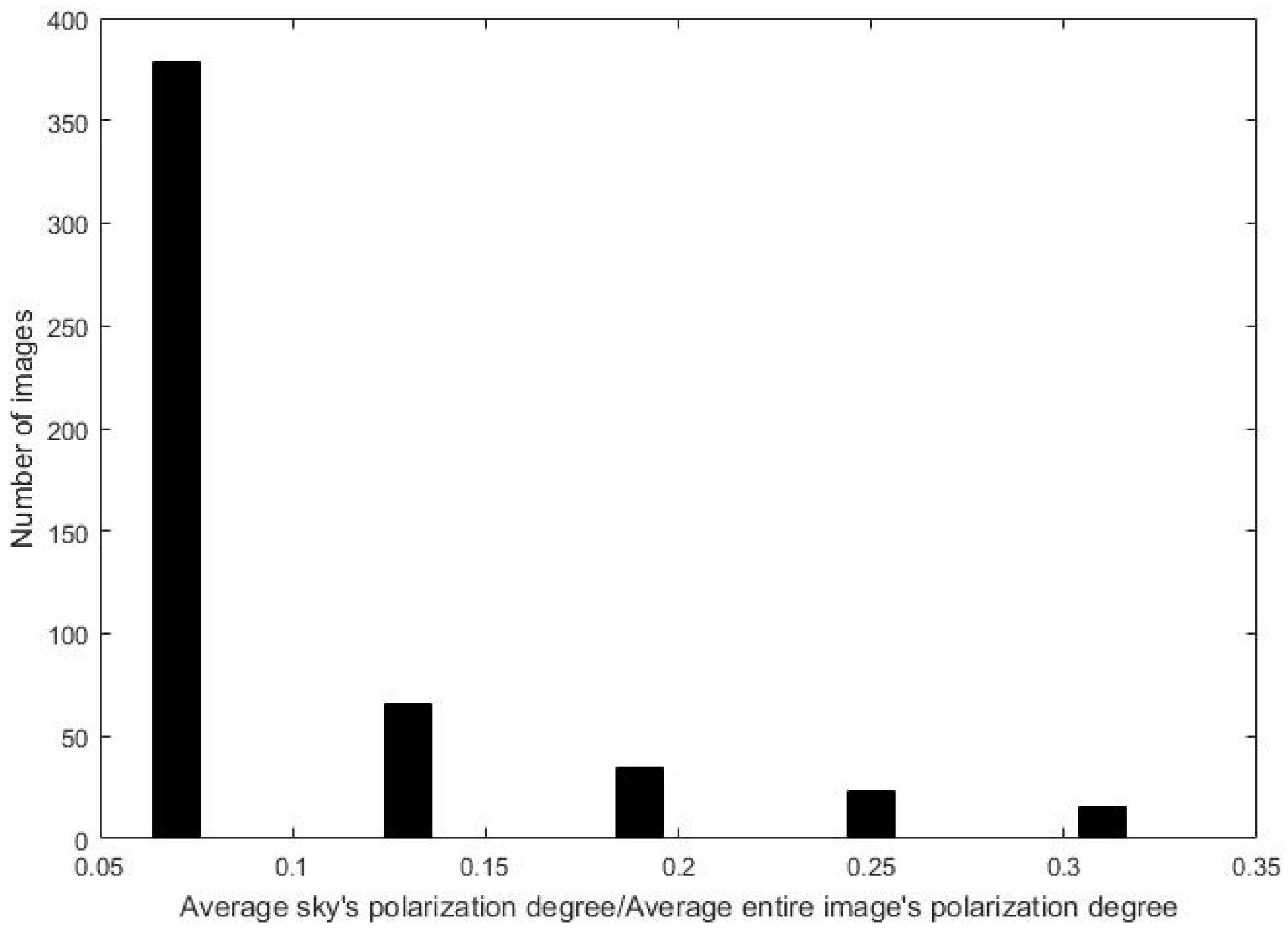

3.3. Polarization Analysis of Natural Scenes

3.4. Polarimetric Degree-Based Atmospheric Light Estimation

4. Polarimetric Dark Channel Dehazing System

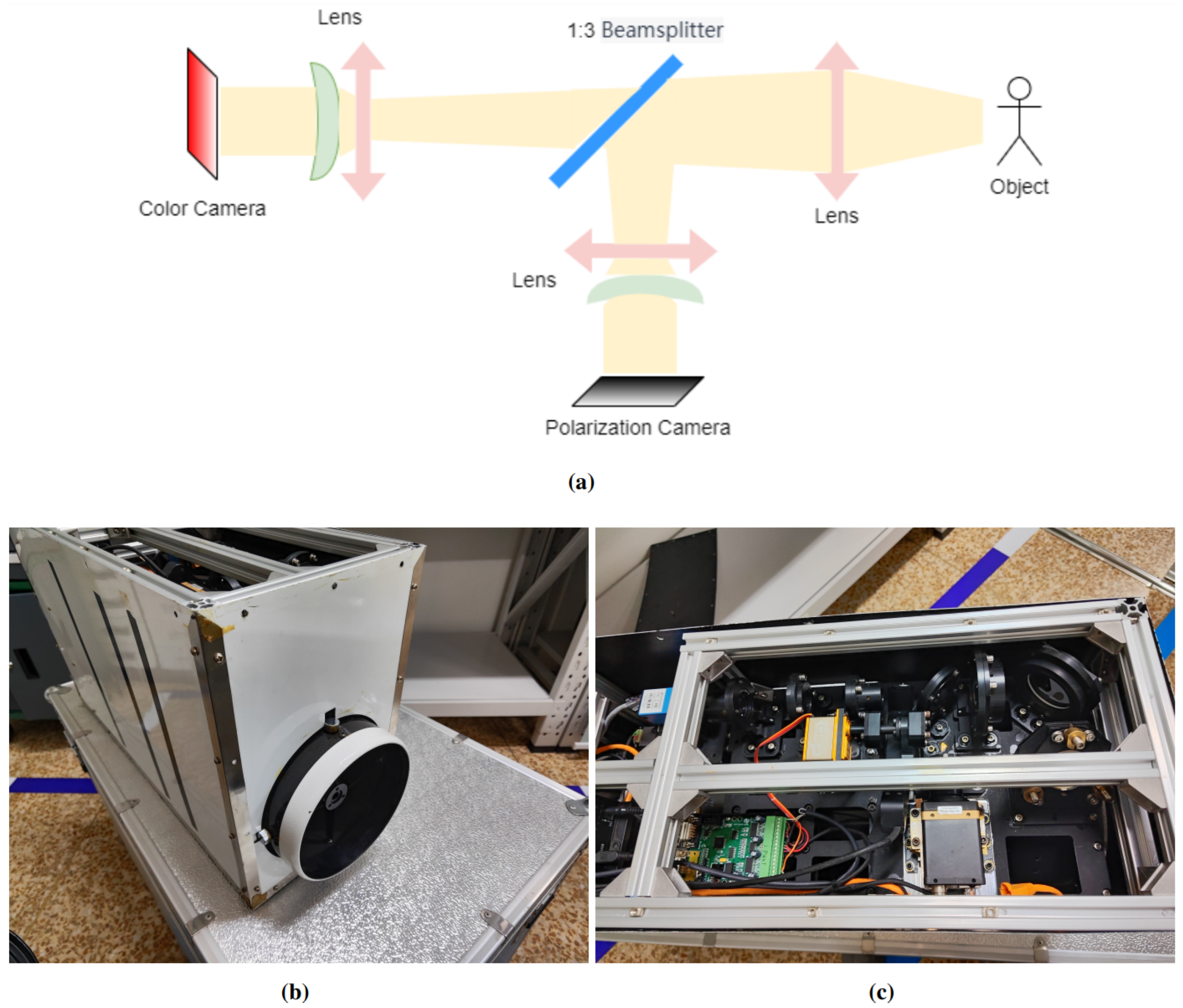

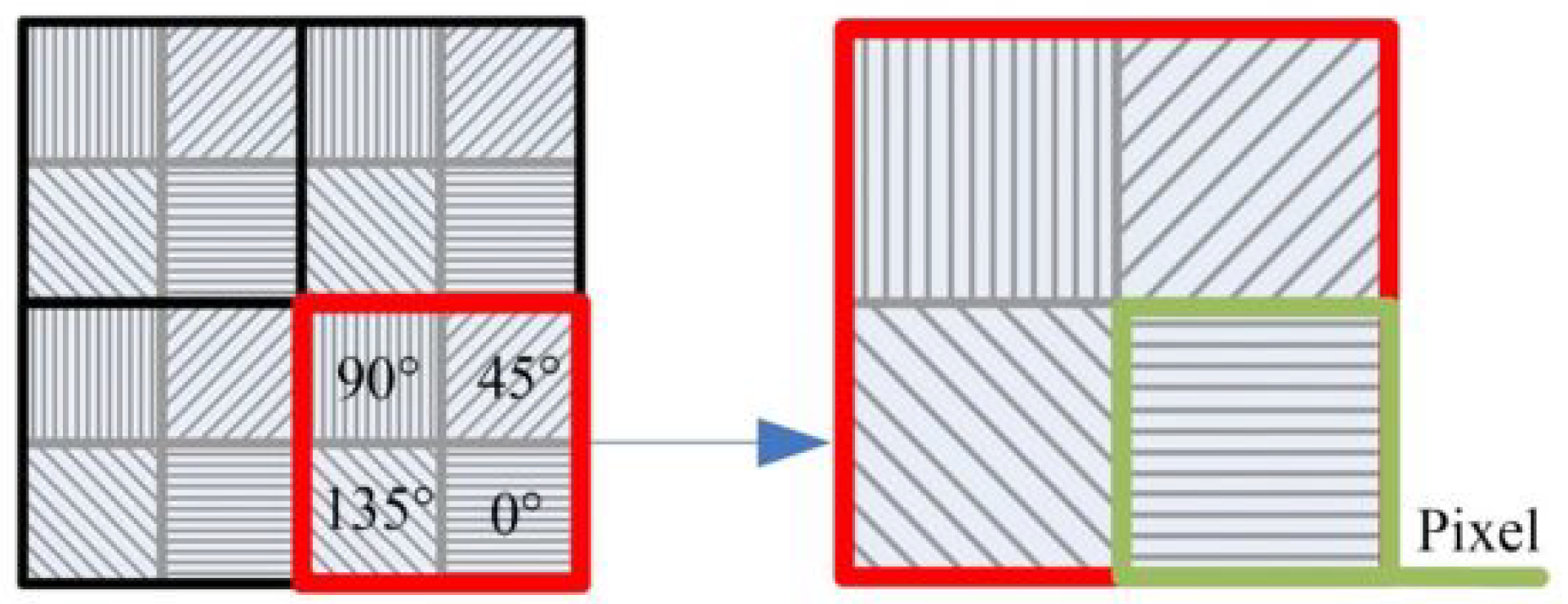

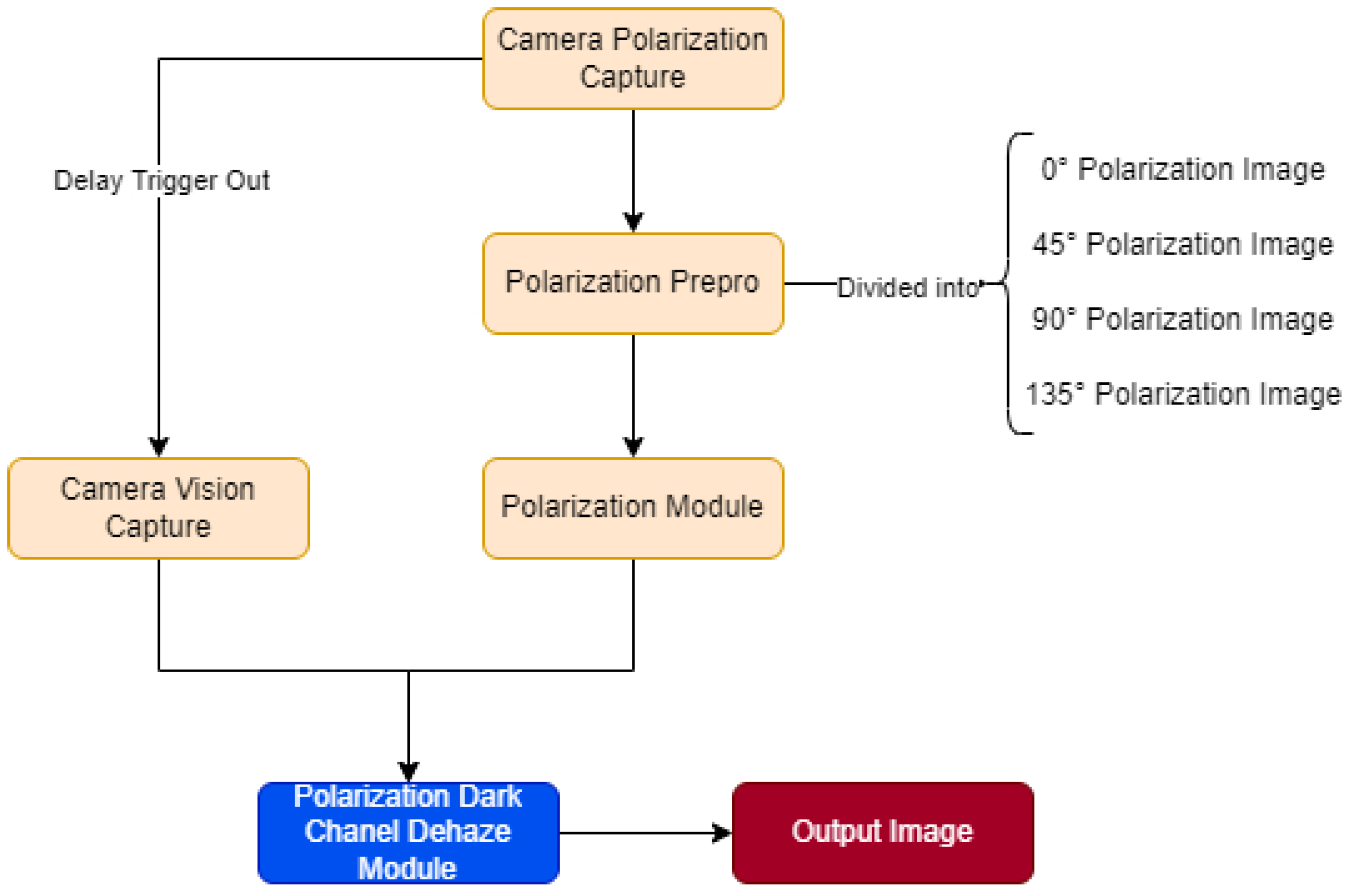

4.1. System Components and Workflow

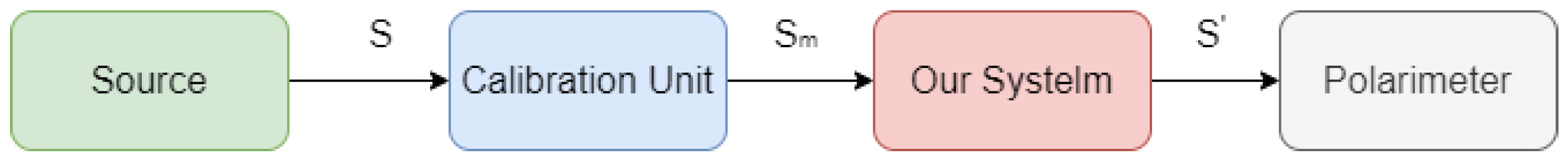

4.2. Polarization Calibration

5. Results and Discussion

5.1. Experimental Environment

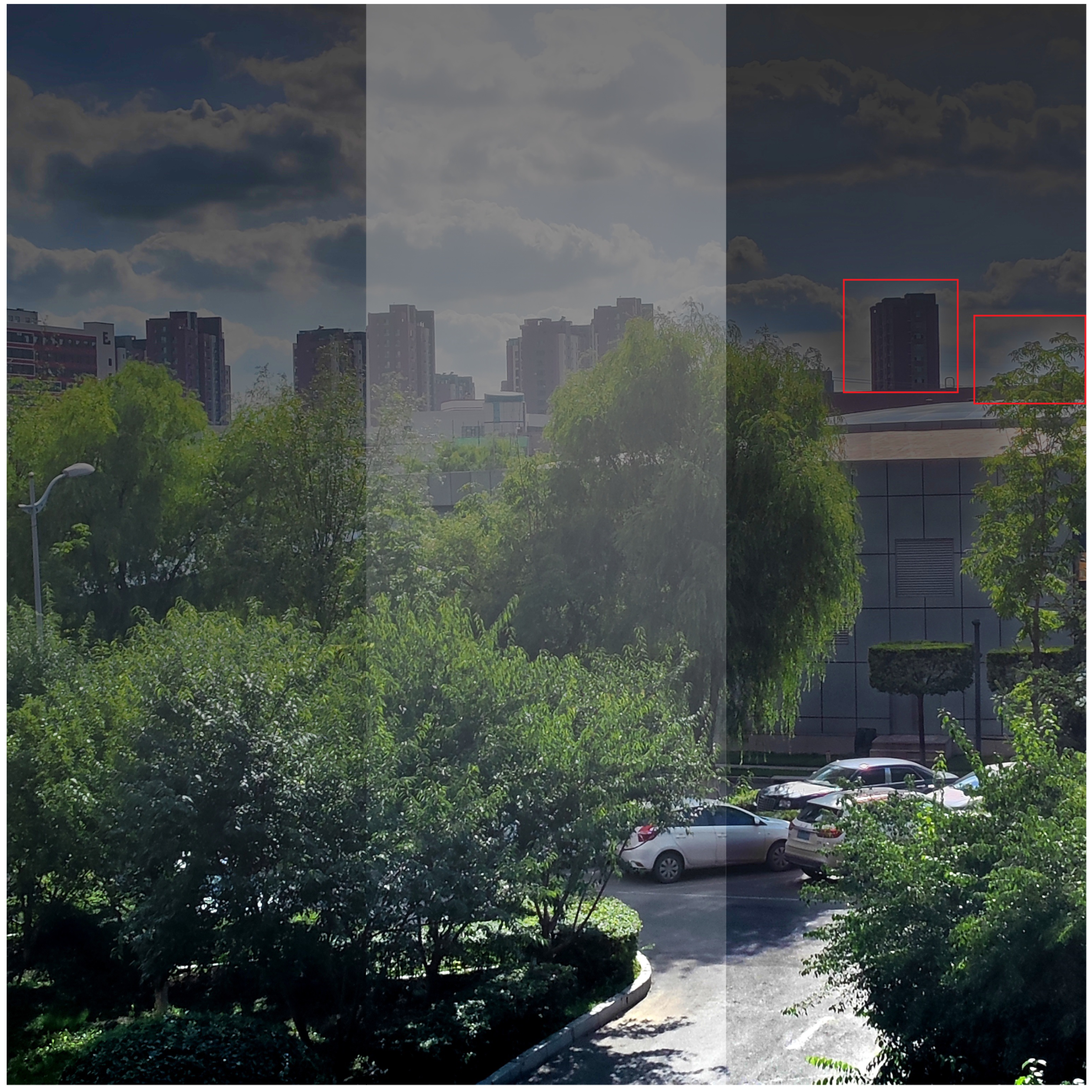

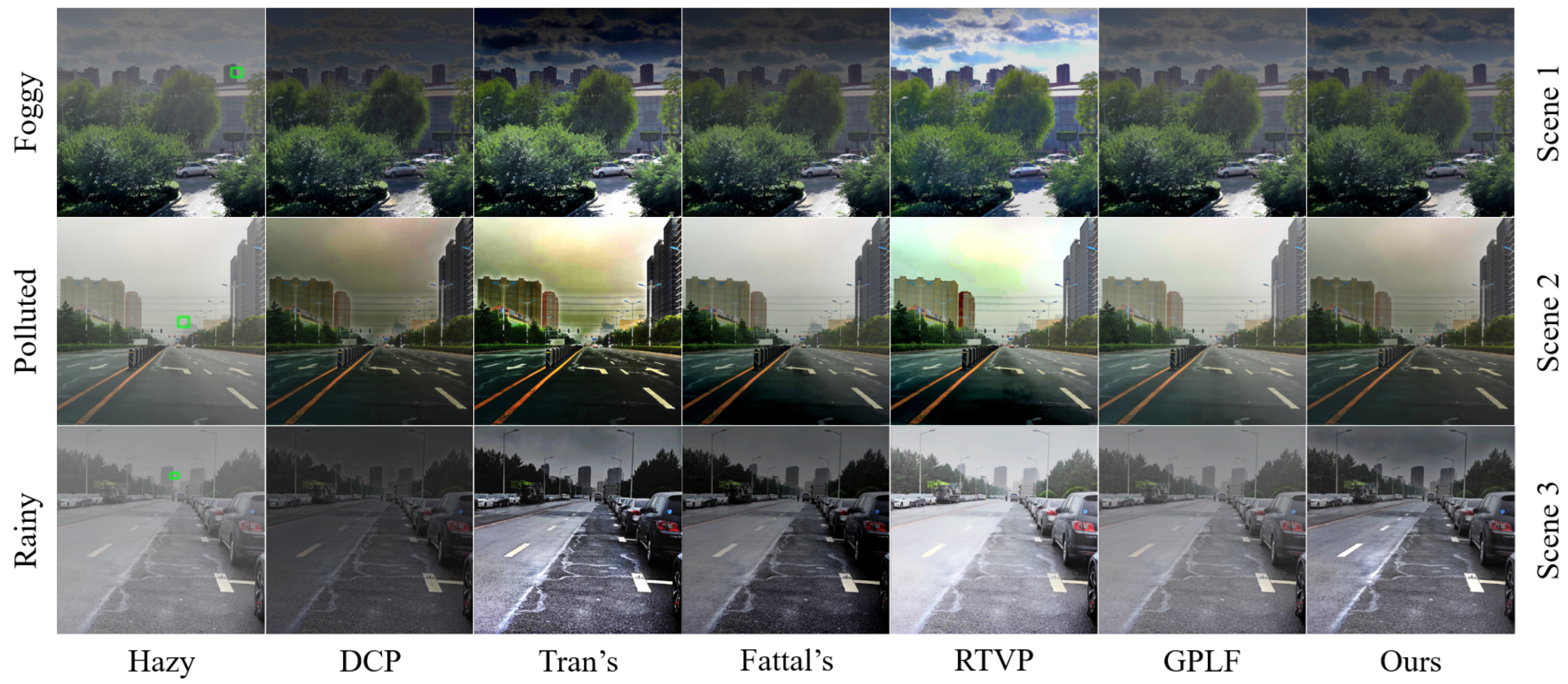

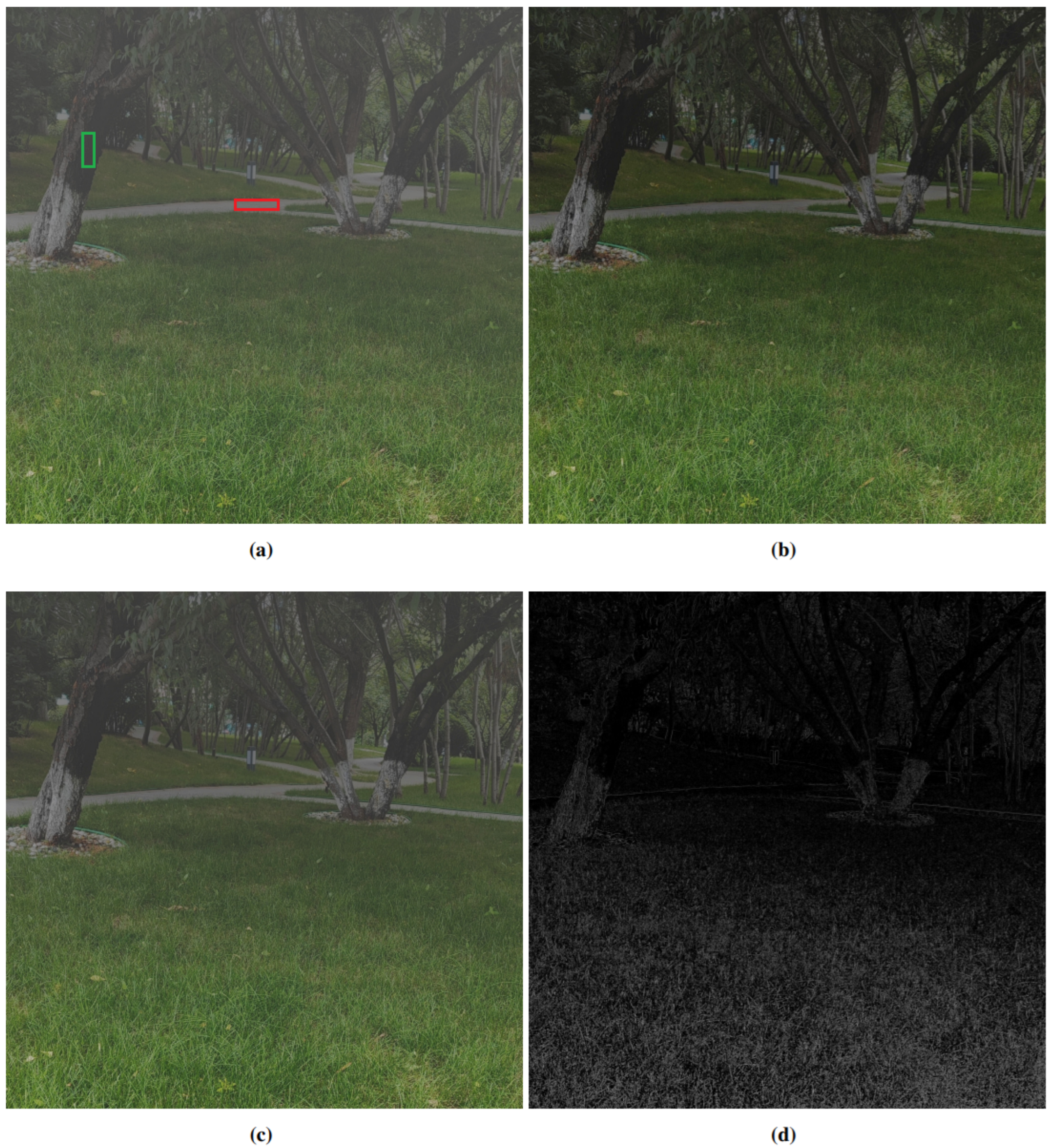

5.2. Experimental Results

5.3. Limitations of the Proposed Method

6. Conclusions and Future Work

6.1. Achievements and Contributions

6.2. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| DCP | Dark Channel Prior |
| DOP | Degree of Polarization |
| PSNR | Peak Signal-to-Noise Ratio |
| SSIM | Structural Similarity Index |

References

- Xie, C.; Nishizawa, T.; Sugimoto, N.; Matsui, I.; Wang, Z. Characteristics of aerosol optical properties in pollution and Asian dust episodes over Beijing, China. Appl. Opt. 2008, 47, 4945–4951.

- Edner, H.; Ragnarson, P.; Spännare, S.; Svanberg, S. Differential optical absorption spectroscopy (DOAS) system for urban atmospheric pollution monitoring. Appl. Opt. 1993, 32, 327–333.

- Xu, F.; Lv, Z.; Lou, X.; Zhang, Y.; Zhang, Z. Nitrogen dioxide monitoring using a blue LED. Appl. Opt. 2008, 47, 5337–5340.

- Yi, W.; Liu, H.; Wang, P.; Fu, M.; Tan, J.; Li, X. Reconstruction of target image from inhomogeneous degradations through backscattering medium images using self-calibration. Opt. Express 2017, 25, 7392–7401.

- Yang, G.; Yang, H.; Yu, S.; Wang, J.; Nie, Z. A Multi-Scale Dehazing Network with Dark Channel Priors. Sensors 2023, 23, 5980.

- He, K.; Sun, J.; Tang, X. Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 2011, 33, 2341–2353.

- Li, B.; Peng, X.; Wang, Z.; Xu, J.; Feng, D. AOD-Net: All-in-One Dehazing Network. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22 October 2017.

- Ren, W.; Liu, S.; Zhang, H.; Pan, J.; Cao, X.; Yang, M.H. Single Image Dehazing via Multi-scale Convolutional Neural Networks. In Computer Vision—ECCV 2016; Leibe, B., Matas, J., Sebe, N., Welling, M., Eds.; Springer International Publishing: Berlin/Heidelberg, Germany, 2016; Volume 9906.

- Chen, Z.; Wang, Y.; Yang, Y.; Liu, D. PSD: Principled Synthetic-to-Real Dehazing Guided by Physical Priors. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Nashville, TN, USA, 20 June 2021.

- Schechner, Y.; Narasimhan, S.; Nayar, S. Polarization-based vision through haze. Appl. Opt. 2003, 42, 511–525.

- Schechner, Y.; Narasimhan, S.; Nayar, S. Instant Dehazing of Images Using Polarization. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), Kauai, HI, USA, 8–14 December 2001.

- Liang, J.; Ju, H.; Ren, L.; Yang, L.; Liang, R. Generalized Polarimetric Dehazing Method Based on Low-Pass Filtering in Frequency Domain. Sensors 2020, 20, 1729.

- Shen, L.; Zhao, Y.; Peng, Q.; Chan, J.; Kong, S. An Iterative Image Dehazing Method With Polarization. IEEE Trans. Multimed. 2019, 21, 1093–1107.

- Liang, Z.; Ding, X.; Mi, Z.; Wang, Y.; Fu, X. Effective Polarization-Based Image Dehazing With Regularization Constraint. IEEE Geosci. Remote. Sens. Lett. 2022, 19, 1–5.

- Wang, X.; Ouyang, J.; Wei, W.; Liu, F.; Zhang, G. Real-Time Vision through Haze Based on Polarization Imaging. Appl. Sci. 2019, 9, 142.

- Huang, F.; Ke, C.; Wu, X.; Wang, S.; Wu, J.; Wang, X. Polarization dehazing method based on spatial frequency division and fusion for a far-field and dense hazy image. Appl. Opt. 2021, 60, 9319–9332.

- Frank, C.; Wim, D.; Klamer, S. Infrared polarization measurements and modelling applied to surface laid anti-personnel landmines. Opt. Eng. 2002, 41, 1021–1032.

- Aron, Y.; Gronau, Y. Polarization in the LWIR: A method to improve target aquisition. Infrared Technol. Appl. XXXI 2005, 5783, 653–661.

- McCartney, E. Scattering Phenomena: Optics of the Atmosphere. Scattering by Molecules and Particles; Wiley: New York, USA, December 1976; pp. 1084–1085.

- Liu, K.; He, L.; Ma, S.; Gao, S.; Bi, D. A Sensor Image Dehazing Algorithm Based on Feature Learning. Sensors 2018, 18, 2606.

- Sadjadi, F. Invariants of polarization transformations. Appl. Opt. 2007, 46, 2914–2921.

- Giménez, Y.; Lapray, P.; Foulonneau, A.; Bigue, L. Calibration algorithms for polarization filter array camera: Survey and evaluation. J. Electron. Imaging 2020, 29, 041011.

- Wu, J.; Song, W.; Guo, C.; Ye, X.; Huang, F. Image dehazing based on polarization optimization and atmosphere light correction. Opt. Precis. Eng. 2023, 31, 1827–1840.

- Wang, Z.; Bovik, A.; Sheikh, H.; Simoncelli, E. Image quality assessment: From error visibility to structural similarity. IEEE Trans. Image Process. 2004, 13, 600–612.

- Tran, R. Visibility in Bad Weather from a Single Image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Anchorage, AK, USA, 23–28 June 2008.

- Fattal, R. Single image dehazing. ACM Trans. Graph. 2008, 27, 1–9.

| Image | Hazy Image | DCP | Proposed |
|---|---|---|---|
| Figure 10a | 0.1918 | 0.2605 | 0.3486 |
| Figure 13a | 0.0853 | 0.1959 | 0.2515 |
| Figure 13b | 0.0391 | 0.0811 | 0.0791 |
| Figure 14b | 0.1683 | 0.3006 | 0.3154 |

| Scene | Evaluation Method | Hazy | DCP | Tran’s | Fattal’s | RTVP | GLPF | Proposed |
|---|---|---|---|---|---|---|---|---|
| Scene 1 | Entropy | 7.2522 | 7.7512 | 7.8001 | 7.8011 | 7.8472 | 7.7801 | 7.9495 |
| Scene 1 | Contrast | 0.1918 | 0.2605 | 0.3704 | 0.3401 | 0.7401 | 0.2751 | 0.3486 |
| Scene 1 | SSIM | - | 0.8024 | 0.7725 | 0.8137 | 0.7714 | 0.8554 | 0.8987 |
| Scene 2 | Entropy | 6.8675 | 7.6453 | 7.8739 | 7.8429 | 7.8781 | 7.2325 | 7.8885 |
| Scene 2 | Contrast | 0.0391 | 0.0811 | 0.0947 | 0.0651 | 0.0798 | 0.0464 | 0.0791 |
| Scene 2 | SSIM | - | 0.6716 | 0.6357 | 0.7145 | 0.6681 | 0.8052 | 0.7768 |
| Scene 3 | Entropy | 7.8913 | 7.9469 | 7.8739 | 7.9478 | 7.9485 | 7.9256 | 7.9509 |
| Scene 3 | Contrast | 0.0853 | 0.1959 | 0.2654 | 0.2135 | 0.1978 | 0.1214 | 0.2515 |
| Scene 3 | SSIM | - | 0.6161 | 0.6362 | 0.6478 | 0.6604 | 0.8151 | 0.7998 |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, S.; Li, Y.; Li, H.; Wang, B.; Wu, Y.; Zhang, Z. Visual Image Dehazing Using Polarimetric Atmospheric Light Estimation. Appl. Sci. 2023, 13, 10909. https://doi.org/10.3390/app131910909
