Pix2Pix and Deep Neural Network-Based Deep Learning Technology for Predicting Vortical Flow Fields and Aerodynamic Performance of Airfoils

Abstract

1. Introduction

2. Methods

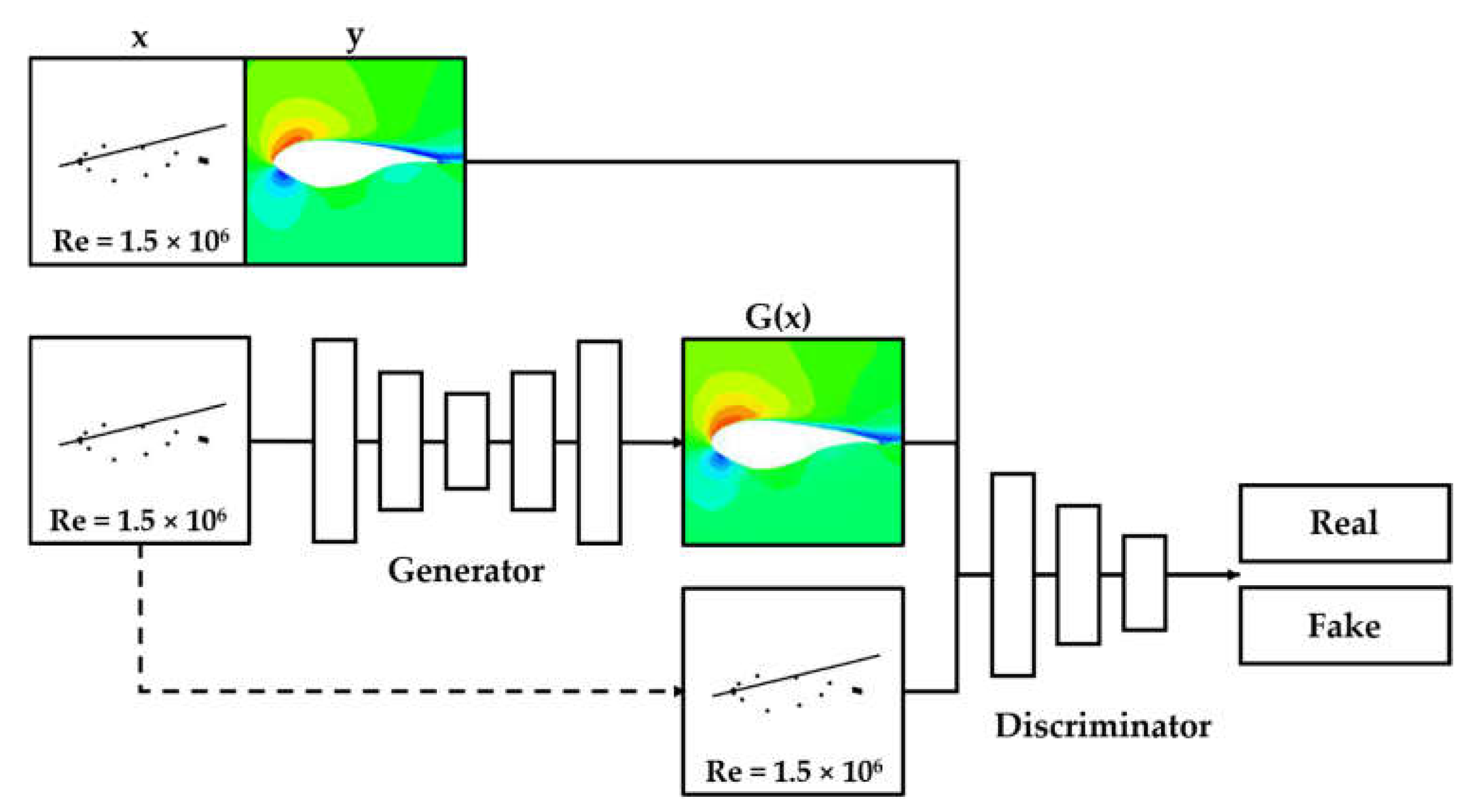

2.1. Generative Adversarial Network (GAN)

2.2. Conditional Generative Adversarial Network (cGAN)

2.3. Image-to-Image Translation with Conditional Adversarial Net (Pix2Pix)

2.4. Deep Neural Network (DNN)

2.5. Prediction of Airfoil Flow Field and Aerodynamic Performance Using Pix2Pix and the DNN

2.6. Dataset

3. Results

3.1. Implementation Details

3.2. Prediction of the Flow Fields of Airfoils with Different Shapes with Pix2Pix

3.3. Prediction of the Flow Fields of Airfoils with Different Shapes, Angles of Attack, and Reynolds Numbers with Pix2Pix

3.4. Prediction of the Aerodynamic Performance of Airfoils with Different Shapes, Angles of Attack, and Reynolds Numbers with the DNN

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Dataset | Condition | Value |
|---|---|---|
| Dataset 1 | Airfoil | DU 00-W2-401 |
| | | DU 00-W2-350 |
| | | DU 97-W-300 |
| | | DU 91-W2-250 |
| | | DU 93-W-210 |
| | Re | 1.5 × 10⁶ |
| | AOA | 10° |
| | Total number of data points | 606 |
| Dataset 2 | Airfoil | DU 00-W2-401 |
| | | DU 00-W2-350 |
| | | DU 97-W-300 |
| | | DU 91-W2-250 |
| | | DU 93-W-210 |
| | Re | 0.5 × 10⁶, 1.5 × 10⁶, 3.0 × 10⁶ |
| | AOA | |
| | Total number of data points | 12,405 |
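The dataset conditions in the table can be written down as a small configuration structure, which is convenient when the same conditions have to be passed to data-loading or training scripts. The following is a minimal sketch, not the authors' code: the names `DatasetSpec`, `DATASET_1`, `DATASET_2`, and `describe` are hypothetical, and the angle-of-attack range for Dataset 2 is left as `None` because the table does not list it.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Airfoil names as listed in the table (shared by both datasets).
AIRFOILS: Tuple[str, ...] = (
    "DU 00-W2-401",
    "DU 00-W2-350",
    "DU 97-W-300",
    "DU 91-W2-250",
    "DU 93-W-210",
)


@dataclass(frozen=True)
class DatasetSpec:
    """Hypothetical container for one row group of the dataset table."""
    name: str
    airfoils: Tuple[str, ...]
    reynolds_numbers: Tuple[float, ...]
    # None means the value is not stated in the table.
    angles_of_attack_deg: Optional[Tuple[float, ...]]
    n_samples: int


DATASET_1 = DatasetSpec("Dataset 1", AIRFOILS, (1.5e6,), (10.0,), 606)
DATASET_2 = DatasetSpec("Dataset 2", AIRFOILS, (0.5e6, 1.5e6, 3.0e6), None, 12_405)


def describe(spec: DatasetSpec) -> str:
    """Return a one-line summary of the conditions in a dataset."""
    re_text = ", ".join(f"{re:.1e}" for re in spec.reynolds_numbers)
    if spec.angles_of_attack_deg is None:
        aoa_text = "not listed"
    else:
        aoa_text = ", ".join(f"{a:g} deg" for a in spec.angles_of_attack_deg)
    return (
        f"{spec.name}: {len(spec.airfoils)} airfoils, Re = {re_text}, "
        f"AOA = {aoa_text}, {spec.n_samples} samples"
    )


if __name__ == "__main__":
    for spec in (DATASET_1, DATASET_2):
        print(describe(spec))
```

Keeping the unknown angle-of-attack entry explicit as `None` avoids silently assuming a range that the table does not state.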


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Song, H.-S.; Mugabi, J.; Jeong, J.-H. Pix2Pix and Deep Neural Network-Based Deep Learning Technology for Predicting Vortical Flow Fields and Aerodynamic Performance of Airfoils. Appl. Sci. 2023, 13, 1019. https://doi.org/10.3390/app13021019
