1. Introduction

The Leap Motion Controller (LMC) is a device widely used in HMI (human–machine interaction) systems and applications. It is capable of highly accurate hand and finger tracking; therefore, it allows the design and implementation of sophisticated gesture-based interaction systems.

Many researchers have used the Leap Motion Controller to interact with robotic systems via gestures [1–29].

Some works focused specifically on interaction with professional robotic manipulators [1,2,3,4,5,10,13,14,15]. Other works implemented grasping or the finger movement of grippers and hand-like end effectors [3,6,11,14,15,23,25,28]. Some researchers used the LMC to control simulations via hand gestures [30,31]; others interacted with wheeled robots [8,32], drones [22], and humanoid robots [18]. Some works focused solely on hand gesture recognition [33,34,35,36,37].

All of the above proves the Leap Motion Controller is widely used in human–robot interaction research. Several papers have evaluated the accuracy and precision of the LMC [

38,

39,

40,

41,

42]. All these works focused only on certain areas within the workspace of the Leap Motion. Weichart et al. tested within a workspace box of 200 × 200 × 200 mm [

38]. Guna et al. tested within a workspace subspace of 600 × 600 × 400 mm (with 400 mm being the height) [

40]. Valentini et al. tested fingertip detection on 3 planes in three different heights—200, 400, and 600 mm; therefore, they worked in a workspace subspace of 240 × 240 × 600 mm (with 600 mm being the height) [

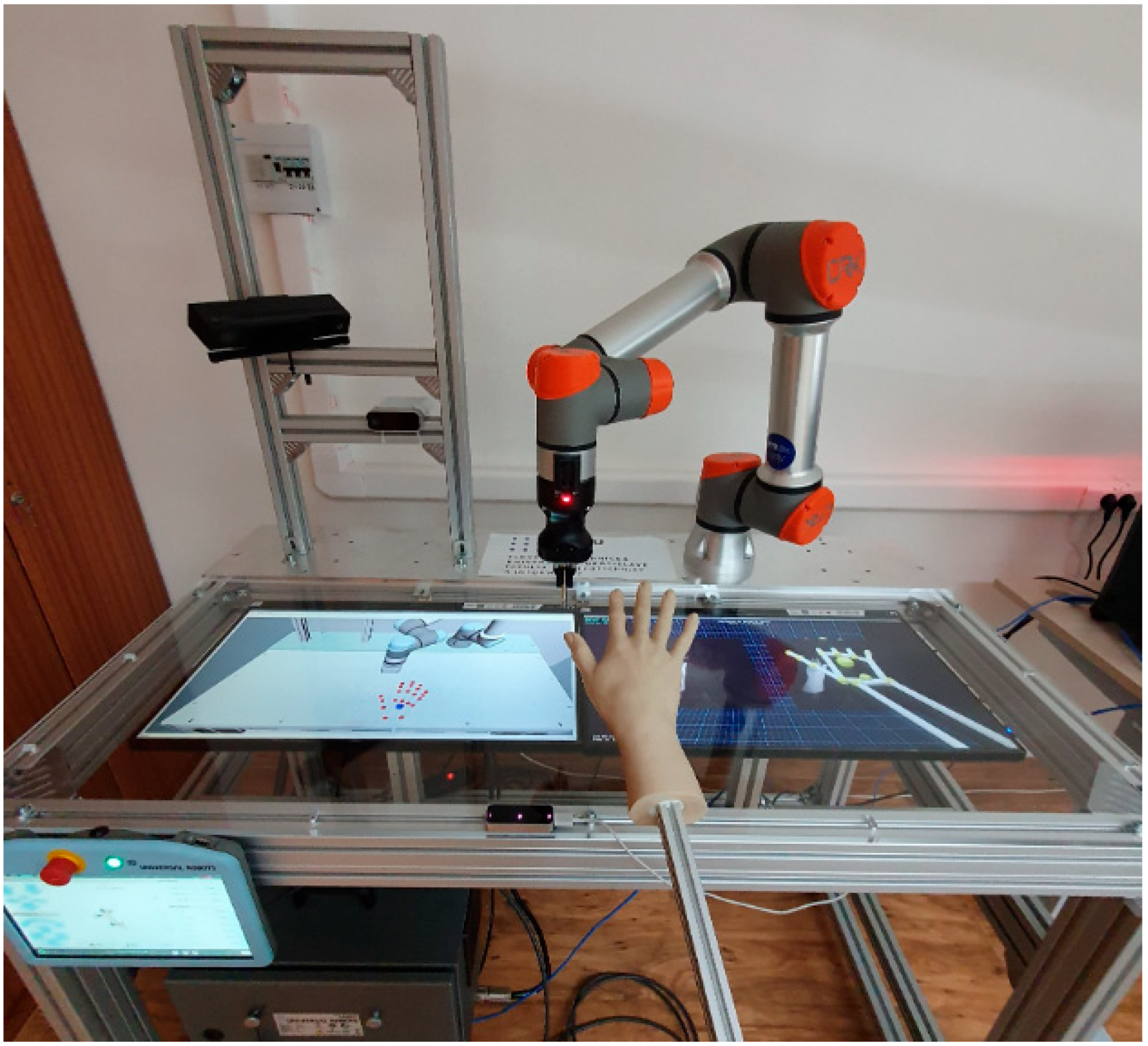

41]. Vysocký et al. focused on the target height position of 250 mm. The aim of this study and main contribution to the field is the determination of the full workspace of the LMC in real conditions, which has not been done before. This information is very useful in designing HMI applications; in our applied research, we use it for the optimalization of HRI (human–robot interaction) interfaces and methods (

Figure 1).

2. Related Work

In this section, we describe several key HRI applications in detail to show how the LMC is currently used in robotics research.

Zhang et al. designed an HRI interface that combined marker-less tracking with a wearable-based approach, allowing a human operator to interact via continuous movements of the hand. Marker-less tracking was performed using the LMC, and wearable-based tracking using a wireless wristwatch. Two Kalman filters were used to suppress measurement noise [1].
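To illustrate the kind of noise suppression mentioned above, the following is a minimal sketch of a scalar Kalman filter applied to one coordinate of a tracked hand position. It is a generic textbook random-walk (constant-position) filter written for illustration, not the filter design used by Zhang et al. [1]; the class name `ScalarKalman` and the noise parameters `q` and `r` are hypothetical and would have to be tuned to the actual sensor noise.

```python
from dataclasses import dataclass


@dataclass
class ScalarKalman:
    """Constant-position (random-walk) Kalman filter for one coordinate, in mm.

    Hypothetical illustration, not the filter from the cited work.
    q: process-noise variance, i.e. how far the true position may drift per frame
    r: measurement-noise variance of the sensor reading
    x: current position estimate; p: variance of that estimate
    """

    q: float
    r: float
    x: float = 0.0
    p: float = 1.0

    def update(self, z: float) -> float:
        """Fuse one new measurement z and return the filtered estimate."""
        # Predict: the position is assumed unchanged, but its uncertainty grows by q.
        self.p += self.q
        # Correct: the Kalman gain k weighs the measurement against the prediction.
        k = self.p / (self.p + self.r)
        self.x += k * (z - self.x)
        self.p *= 1.0 - k
        return self.x


if __name__ == "__main__":
    # Hypothetical noisy palm-height readings (mm) scattered around 250 mm.
    readings = [251.2, 248.9, 250.7, 249.5, 250.3, 249.8]
    f = ScalarKalman(q=0.01, r=1.0, x=readings[0], p=1.0)
    for z in readings[1:]:
        print(round(f.update(z), 2))
```

Running one such filter per axis (x, y, z) gives a simple smoother for a palm position; a larger `r` relative to `q` smooths more strongly at the cost of added lag.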

Zhou et al. presented intuitive grasping control of their proprietary 13-DOF humanoid soft robotic hand, BCL-13. They used the LMC to obtain the joint angles of the operator's hand, which were then mapped onto the robotic hand through a dedicated filter. In this way, all 13 degrees of freedom can be controlled by the operator's hand [6].

Chen et al. presented a method for the remote manipulation of a mobile manipulator via the operator's gestures. A small mobile robot was equipped with a 4-DOF robot arm to grasp objects. The operator used one hand to control both the motion of the mobile robot and the posture of the robotic arm. The LMC was used to gather information on the operator's hands. Two filters were employed to estimate the position and posture of the human hand and to reduce the inherent noise of the sensor: a Kalman filter estimated the position, and a particle filter estimated the orientation of the operator's hand. The effectiveness of the proposed human–robot interface was verified experimentally in a laboratory. The authors reported that the system can be used for robot teleoperation by a non-professional operator [7].

Kobayashi et al. proposed a robot–human handover with a “hand/arm” robotic manipulator using the Universal Robot Hand II as its end effector. The authors stated that in a robot–human handover, the robot must not only avoid hurting the human receiver but also avoid disturbing the operator's activity. Therefore, in the developed HRI method, the “hand/arm” robot used the LMC to measure the position and posture of the human hand for a smooth handover, and the handover motion was executed according to the position and posture of the operator's hand [14].

Yu et al. proposed a gesture-based telemanipulation scheme to control the NAO humanoid robot. Using the LMC, the NAO robot was telemanipulated to accomplish locomotion, dexterous manipulation, and composite tasks [18].

Sarkar et al. presented their implementation of drone control via simple hand gestures. They used the LMC and the Parrot AR DRONE 2.0, an off-the-shelf quadrotor with an on-board Wi-Fi system. The AR DRONE was connected to the ground station via Wi-Fi, and the LMC was connected to the ground station via USB. The LMC detected the hand gestures, which were sent to the ground station. The ground station ran ROS (Robot Operating System) on Linux [22].

Devine et al. presented experiments exploring the use of an optical tracking device as input for the remote control of dual-arm robots. They proposed the Leap Motion Controller as an alternative to joysticks, which should allow more intuitive 6-DOF control, with one hand controlling each robot arm. They affixed two end effectors to the Baxter research robot: a standard electric gripper and an AR10 robotic hand. The standard electric gripper was controlled via pinch gestures, whereas all five fingers of the AR10 robotic hand, including the thumb, were controlled using finger movements detected by the LMC. The authors performed only simple tests; the results indicated that the standard electric gripper had a higher success rate in lifting standard objects. The main issue was the lack of any touch or force feedback for the operator. However, users performed better as they became more comfortable with the system [24].

Tian et al. presented a real-time virtual grasping algorithm to model interactions with virtual objects. Their approach was designed for multi-fingered hands and made no assumptions about the motion of the user's hand or the virtual objects. Given a model of the virtual hand, they used machine learning and particle swarm optimization to automatically pre-compute stable grasp configurations for the object. The learning pre-computation step was accelerated using GPU parallelization. At runtime, they relied on the pre-computed stable grasp configurations, together with dynamics/non-penetration constraints and motion-planning techniques, to compute plausible-looking grasps. In practice, their real-time algorithm could perform virtual grasping operations in less than 20 ms for complex virtual objects. They implemented their grasping algorithm with an Oculus Rift HMD and the Leap Motion Controller and evaluated its performance on different tasks involving grabbing virtual objects and placing them at arbitrary locations [31].

3. The Leap Motion Controller

The LMC uses two 640 × 240-pixel near-infrared cameras spaced 4 cm apart, which capture light emitted by three LEDs at a wavelength of 850 nm. The cameras typically operate at 120 Hz. Depth data are obtained by applying dedicated algorithms to the raw data, which consist of infrared brightness values and calibration data [43]. The LMC provides human hand tracking, with a specific focus on the position and orientation of the fingers.
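The 4 cm camera spacing also helps explain why tracking precision is expected to degrade with distance. In an idealized rectified stereo pair, depth follows Z = f·B/d (focal length f in pixels, baseline B, disparity d in pixels), so a fixed disparity error Δd produces a depth error of roughly Z²·Δd/(f·B). The sketch below assumes this textbook pinhole model only. The LMC's actual algorithms are not described here, its wide-angle lenses deviate from a pinhole camera, and the focal length and disparity error used are hypothetical values, not published LMC parameters.

```python
BASELINE_MM = 40.0  # camera spacing quoted in the text (4 cm)


def depth_from_disparity(disparity_px: float, focal_px: float,
                         baseline_mm: float = BASELINE_MM) -> float:
    """Idealized rectified-stereo depth Z = f * B / d, in mm (pinhole assumption)."""
    if disparity_px <= 0.0:
        raise ValueError("disparity must be positive")
    return focal_px * baseline_mm / disparity_px


def depth_error(depth_mm: float, focal_px: float, disparity_err_px: float = 0.5,
                baseline_mm: float = BASELINE_MM) -> float:
    """First-order depth uncertainty dZ = Z**2 * dd / (f * B), in mm."""
    return depth_mm ** 2 * disparity_err_px / (focal_px * baseline_mm)


if __name__ == "__main__":
    FOCAL_PX = 400.0  # hypothetical focal length, not a published LMC value
    for z in (200.0, 400.0, 600.0, 800.0):
        print(f"Z = {z:.0f} mm -> dZ ~ {depth_error(z, FOCAL_PX):.2f} mm")
```

Under this model, doubling the distance quadruples the depth uncertainty, which is consistent in tendency (not in magnitude) with the loss of precision far from the sensor.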

The controller, depicted in Figure 2, can track hands within a workspace that, according to the datasheet, extends up to 60 cm (24 in) preferred and up to 80 cm maximum. The purpose of this paper is to verify and discuss this claim. The typical field of view is declared to be 140 × 120°, as illustrated in Figure 3. The controller outputs grayscale images captured by the IR cameras and a skeletal representation of the human hand. The LMC can differentiate between 27 distinct hand elements.

4. Materials

In order to simulate a human hand, we had an exact replica made of the hand of the human operator participating in our research project. The replica was made by a professional sculptor and is depicted in Figure 4 and Figure 5 (5a: picture of the replica; 5b: dimensions of the replica). First, a mold was made of the right hand with straightened fingers. The fingers were spread apart so that each one would be clearly visible and detectable. The mold was then filled with a material with matte reflective properties to simulate real skin. The final model was fastened to an aluminum profile rod, so it could easily be fixed to a holder to perform experiments.

The main advantage (and at the same time a necessity) of using such a hand replica in experiments is that it does not shake. A human operator is unable to hold the hand and fingers straight without shaking, so it would be impossible to separate the repeatability of the investigated sensor from the shaking of the hand. Using an exact professional replica of a real human operator's hand ensures that only the parameters of the Leap Motion Controller are examined and determined.

5. Methods

In our experiments, the replica of the operator's hand was mounted on a stand and moved to arbitrary positions within the proclaimed workspace of the Leap Motion Controller and beyond it, as far as tracking allowed. The goal was to determine the boundaries of the actual workspace under real-world conditions and to measure the precision (repeatability) of tracking within it. The hand was always pushed as far as possible from the center of the measured region, and tracking was checked at all times. A position was included if the hand could be tracked there under at least some conditions: tracking in the farthest corners of the workspace sometimes stopped working but could be re-established in other attempts. In such cases, the hand model was repeatedly moved close above the sensor and then back to approximately the same location to acquire data. The Leap Motion Controller was more likely to regain and keep tracking when the replica was moved smoothly from the close positions to the most distant ones. In each position, 500 samples were acquired for each tracked hand/finger joint and stored for evaluation.

We evaluated the measured data from several perspectives:

Is the measurement within the proclaimed workspace (140 × 120° field of view)?

Is the measurement within the recommended distance from the LMC (60 cm)?

Is the measurement within the maximum distance (80 cm), or beyond it?

How reliable is the tracking: what is the precision/repeatability?
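The first three criteria can be expressed as a small classification routine. The sketch below illustrates the criteria under stated assumptions and is not the evaluation code used in this study. It models the datasheet field of view as a pyramid opening upward from the sensor origin, assumes that the 140° angle spans the left–right (x) axis and the 120° angle the front–back (y) axis, and uses the axis convention of the figures in this paper (z is the height above the LMC, in mm). The names `classify` and `WorkspaceCheck` are hypothetical.

```python
import math
from dataclasses import dataclass

# Datasheet values quoted in Section 3.
FOV_X_DEG = 140.0     # assumed to span the left-right (x) axis
FOV_Y_DEG = 120.0     # assumed to span the front-back (y) axis
PREFERRED_MM = 600.0  # preferred tracking distance
MAXIMUM_MM = 800.0    # maximum tracking distance


@dataclass
class WorkspaceCheck:
    distance_mm: float      # Euclidean distance from the LMC origin
    in_fov: bool            # inside the assumed 140 x 120 degree pyramid
    within_preferred: bool  # distance <= 60 cm
    within_maximum: bool    # distance <= 80 cm


def classify(x: float, y: float, z: float) -> WorkspaceCheck:
    """Classify a measured position (mm, origin at the LMC, z = height above it)."""
    distance = math.sqrt(x * x + y * y + z * z)
    if z <= 0.0:
        in_fov = False  # level with or below the sensor: outside the upward-facing pyramid
    else:
        # Angle from the vertical axis, measured separately in the x-z and y-z planes.
        angle_x = math.degrees(math.atan2(abs(x), z))
        angle_y = math.degrees(math.atan2(abs(y), z))
        in_fov = angle_x <= FOV_X_DEG / 2.0 and angle_y <= FOV_Y_DEG / 2.0
    return WorkspaceCheck(
        distance_mm=distance,
        in_fov=in_fov,
        within_preferred=distance <= PREFERRED_MM,
        within_maximum=distance <= MAXIMUM_MM,
    )
```

For example, `classify(600, 0, 200)` reports a point outside the field of view (about 71.6° from vertical in the x–z plane, beyond the assumed 70° half-angle) and beyond the preferred 60 cm distance (about 632 mm), but within the 80 cm maximum.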

In all of the following figures, the workspace proclaimed by the datasheet is drawn in green, and all values are in millimeters. Each measurement is displayed as a ball representing the average of the standard deviations of all detected hand/finger joints at that position: the higher the value, the bigger the ball. In most figures, these balls are projected into 2D for a clearer view; the circle/ball size corresponds exactly to the measured average standard deviation. Outliers (9 in total) were removed, as they would have occupied too much space and cluttered the figures.
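The per-position value visualized by the balls can be sketched as follows. The text does not specify how the x, y, and z deviations of a joint are combined, whether the sample or population standard deviation is used, or how outliers were identified. This sketch therefore assumes sample standard deviations combined by the Euclidean norm and performs no outlier removal; the functions `joint_spread` and `position_score` and the joint names are hypothetical.

```python
import statistics
from typing import Dict, Sequence, Tuple

Point = Tuple[float, float, float]  # one sample of a joint position, in mm


def joint_spread(samples: Sequence[Point]) -> float:
    """Repeatability of one joint across repeated samples, in mm.

    Assumption: per-axis sample standard deviations combined by the Euclidean norm.
    Needs at least two samples per joint (500 were taken per position).
    """
    xs, ys, zs = zip(*samples)
    sx, sy, sz = (statistics.stdev(axis) for axis in (xs, ys, zs))
    return (sx ** 2 + sy ** 2 + sz ** 2) ** 0.5


def position_score(joints: Dict[str, Sequence[Point]]) -> float:
    """Average joint spread over all detected joints for one hand position."""
    return statistics.mean(joint_spread(samples) for samples in joints.values())
```

A position whose joints are all perfectly stable scores 0 mm; the score grows with jitter in any joint, matching the "bigger ball, less precise" reading of the figures.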

5.1. Measurements up to 60 cm above the LMC

Figure 6 shows a top–bottom view of the measured data up to a Euclidean distance of 60 cm from the sensor. The data show that the tracking range along the x axis is smaller on the right side (positive x values) and that tracking there is less reliable.

This is most likely because the hand model is a right hand: the thumb is stretched further out than the pinky finger, so finger joints can still be seen further away to the left (negative x values).

Figure 7 shows a front–back view of the measured data; all measurements are within the proclaimed workspace. The same phenomenon as in Figure 6 can be clearly observed, and again, the most likely cause is that when the right hand moves to the far right, the pinky and ring fingers are no longer visible, resulting in poor tracking precision.

Figure 8 depicts the side view, in which negative y values are closer to the operator. The hand can be tracked further in the opposite (positive y) direction, probably because the wrist and forearm are more visible when the hand is extended this way.

Figure 9 shows an overall view of the presented measurement range.

5.2. Measurements up to 80 cm above the LMC

As Figures 10–13 show, in the range of 60 to 80 cm, all measurements are still within the proclaimed workspace. Furthermore, on the far right side (positive x axis), tracking performs better than below 60 cm, because both sides of the palm (thumb and pinky finger) are visible to the LMC at this height.

5.3. Measurements higher than 80 cm above the LMC

Figures 14 and 15 display all performed measurements, with outliers removed. It is clear that above 60 cm, the full proclaimed workspace in terms of the field of view is not fully trackable.

6. Discussion

In this paper, we evaluated the workspace of the Leap Motion Controller. This sensor is widely used in HMI, and several studies have focused on its precision and accuracy; however, none of them evaluated its workspace to its full extent (80 cm and beyond). To obtain data that are as realistic as possible, we used an exact replica of a human operator's hand crafted by a professional sculptor.

The results show that the proclaimed typical field of view of 140 × 120° is usable only up to a Euclidean distance of 60 cm from the origin of the LMC's coordinate system. The front view visualizations show that the tracking range on the x axis (left–right movement) depends on which hand is used and on how far the operator's fingers are stretched. In the range up to 60 cm, a right hand with a stretched thumb is tracked more easily on the negative side of the x axis (movement to the left). A left-hand replica would very likely behave in the same way with the sides switched; in the range of 60–80 cm, however, this asymmetry is no longer present. The side view results clearly show that hands are tracked more easily when the sensor sees the forearm; if the forearm is not visible, the tracking range is smaller. Our measurements also show that the LMC is capable of tracking hands even beyond the maximum range of 80 cm, and in some cases up to 100 cm.

According to our measurements, the most precise results are obtained when the Leap Motion Controller is used within the following ranges:

Left–right: X ∈ [−20 cm, +20 cm]

Front–back: Y ∈ [−20 cm, +20 cm]

Top–bottom: Z ∈ [+5 cm, +60 cm]

For applications where high precision is necessary, the interface can be designed in such a way that it warns the operator when her or his hand is outside this range.
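A warning of this kind could be implemented with a simple bounds check on the palm position. The sketch below encodes the recommended ranges above in millimeters and returns a message naming the axes that are out of range. The function name `range_warning` is hypothetical, and the palm position is assumed to be expressed in the same axes as the figures (z is the height above the LMC), which may require remapping from whatever coordinate frame the tracking software reports.

```python
from typing import Optional

# Recommended ranges from the Discussion, converted to mm (figure axes: z = height).
RECOMMENDED_MM = {
    "x": (-200.0, 200.0),  # left-right
    "y": (-200.0, 200.0),  # front-back
    "z": (50.0, 600.0),    # height above the LMC
}


def range_warning(x: float, y: float, z: float) -> Optional[str]:
    """Return a warning if the palm lies outside the recommended box, else None."""
    outside = [
        axis
        for axis, value in (("x", x), ("y", y), ("z", z))
        if not RECOMMENDED_MM[axis][0] <= value <= RECOMMENDED_MM[axis][1]
    ]
    if not outside:
        return None
    return "Hand outside recommended tracking range on axis: " + ", ".join(outside)
```

Calling this once per tracking frame and showing the message whenever it is not `None` keeps the check independent of the rest of the interface; the bounds can be tightened for a specific application.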

In our approach, we use only a stretched palm gesture perpendicular to the plane in which the LMC is positioned. The limitations of our work are therefore the use of a single gesture and a single hand size, that of an adult male 185 cm tall. We evaluate only static positions (to determine the size of the LMC's workspace) and do not evaluate motion tracking; this could be accomplished by fixing the hand replica to a robot's end effector and repeating the same motions. In the future, rotations and possibly other gestures and hand sizes (male, female, child) could be used to gather more information about the tracking behavior of the LMC.

Author Contributions

Conceptualization, M.T.; methodology, M.T.; software, M.T.; validation, M.D.; formal analysis, M.T. and M.D.; investigation, M.T.; resources, M.T.; data curation, M.D.; writing—original draft preparation, M.T.; writing—review and editing, M.T. and M.D.; visualization, M.D.; supervision, M.T.; project administration, M.T.; funding acquisition, M.D., J.R. and F.D. All authors have read and agreed to the published version of the manuscript.

Funding

This article was written thanks to the generous support under the Operational Program Integrated Infrastructure for the project “Research and development of the applicability of autonomous flying vehicles in the fight against the pandemic caused by COVID-19”, Project no. 313011ATR9, co-financed by the European Regional Development Fund. Further funding was provided by KEGA 028STU-4/2022 and APVV-21-0352.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Zhang, P.; Li, B.; Du, G.; Liu, X. A Wearable-Based and Markerless Human-Manipulator Interface with Feedback Mechanism and Kalman Filters. Int. J. Adv. Robot. Syst. 2015, 12, 164.

- Zhang, P.; Liu, X.; Du, G.; Liang, B.; Wang, X. A markerless human-manipulators interface using multi-sensors. Ind. Rob. 2015, 42, 544–553.

- Chen, C.; Chen, L.; Zhou, X.; Yan, W. Controlling a robot using leap motion. In Proceedings of the 2017 2nd International Conference on Robotics and Automation Engineering (ICRAE), Shanghai, China, 29–31 December 2017.

- Hu, T.J.; Zhu, X.J.; Wang, X.Q.; Wang, T.S.; Li, J.F.; Qian, W.P. Human stochastic closed-loop behavior for master-slave teleoperation using multi-leap-motion sensor. Sci. China Technol. Sci. 2017, 60, 374–384.

- Mendes, N.; Ferrer, J.; Vitorino, J.; Safeea, M.; Neto, P. Human Behavior and Hand Gesture Classification for Smart Human-robot Interaction. Procedia Manuf. 2017, 11, 91–98.

- Zhou, J.; Chen, X.; Chang, U.; Pan, J.; Wang, W.; Wang, Z. Intuitive Control of Humanoid Soft-Robotic Hand BCL-13. In Proceedings of the IEEE-RAS International Conference on Humanoid Robots, Beijing, China, 6–9 November 2018.

- Chen, M.; Liu, C.; Du, G. A human–robot interface for mobile manipulator. Intell. Serv. Robot. 2018, 111, 269–278.

- Valner, R.; Kruusamäe, K.; Pryor, M. TeMoto: Intuitive multi-range telerobotic system with natural gestural and verbal instruction interface. Robotics 2018, 7, 9.

- Ponmani, K.; Sridharan, S. Human–robot interaction using three-dimensional gestures. In Lecture Notes in Electrical Engineering; Springer: Singapore, 2018; Volume 492.

- Tang, G.; Webb, P. The Design and Evaluation of an Ergonomic Contactless Gesture Control System for Industrial Robots. J. Robot. 2018, 2018, 9791286.

- Zhang, X.; Zhang, R.; Chen, L.; Zheng, X. Natural Gesture Control of a Delta Robot Using Leap Motion. J. Phys. Conf. Ser. 2019, 1187, 032042.

- Hentout, A.; Aouache, M.; Maoudj, A.; Akli, I. Human–robot interaction in industrial collaborative robotics: A literature review of the decade 2008–2017. Adv. Robot. 2019, 33, 764–799.

- Liu, Y.; Zhang, Y.; Fu, B.; Yang, R. Predictive control for robot arm teleoperation. In Proceedings of the 39th Annual Conference of the IEEE Industrial Electronics Society, Vienna, Austria, 10–13 November 2013.

- Kobayashi, F.; Okamoto, K.; Kojima, F. Robot-human handover based on position and posture of human hand. In Proceedings of the 2014 Joint 7th International Conference on Soft Computing and Intelligent Systems, SCIS 2014 and 15th International Symposium on Advanced Intelligent Systems (ISIS), Kitakyushu, Japan, 3–6 December 2014.

- Bassily, D.; Georgoulas, C.; Güttler, J.; Linner, T.; Bock, T. Intuitive and adaptive robotic arm manipulation using the leap motion controller. In Proceedings of the Joint Conference of ISR 2014—45th International Symposium on Robotics and Robotik 2014—8th German Conference on Robotics, ISR/ROBOTIK, Munich, Germany, 2–3 June 2014.

- Liu, Y.K.; Zhang, Y.M. Toward Welding Robot with Human Knowledge: A Remotely-Controlled Approach. IEEE Trans. Autom. Sci. Eng. 2015, 12, 769–774.

- Du, G.; Zhang, P. A Markerless Human-Robot Interface Using Particle Filter and Kalman Filter for Dual Robots. IEEE Trans. Ind. Electron. 2015, 62, 2257–2264.

- Yu, N.; Xu, C.; Wang, K.; Yang, Z.; Liu, J. Gesture-based telemanipulation of a humanoid robot for home service tasks. In Proceedings of the 2015 IEEE International Conference on Cyber Technology in Automation, Control and Intelligent Systems, IEEE-CYBER, Shenyang, China, 8–12 June 2015.

- Du, G.; Zhang, P.; Liu, X. Markerless Human-Manipulator Interface Using Leap Motion with Interval Kalman Filter and Improved Particle Filter. IEEE Trans. Ind. Inform. 2016, 12, 694–704.

- Lu, W.; Tong, Z.; Chu, J. Dynamic hand gesture recognition with leap motion controller. IEEE Signal Process. Lett. 2016, 23, 1188–1192.

- Pititeeraphab, Y.; Choitkunnan, P.; Thongpance, N.; Kullathum, K.; Pintavirooj, C. Robot-arm control system using LEAP motion controller. In Proceedings of the BME-HUST 2016—3rd International Conference on Biomedical Engineering, Hanoi, Vietnam, 5–6 October 2016.

- Sarkar, A.; Patel, K.A.; Ram, R.K.G.; Capoor, G.K. Gesture control of drone using a motion controller. In Proceedings of the 2016 International Conference on Industrial Informatics and Computer Systems (CIICS), Sharjah, United Arab Emirates, 13–15 March 2016.

- Gunawardane, P.D.S.H.; Medagedara, N.T.; Madusanka, B.G.D.A.; Wijesinghe, S. The development of a Gesture Controlled Soft Robot gripping mechanism. In Proceedings of the 2016 IEEE International Conference on Information and Automation for Sustainability: Interoperable Sustainable Smart Systems for Next Generation (ICIAfS), Galle, Sri Lanka, 16–19 December 2016.

- Devine, S.; Rafferty, K.; Ferguson, S. Real time robotic arm control using hand gestures with multiple end effectors. In Proceedings of the 2016 UKACC International Conference on Control, UKACC Control, Belfast, UK, 31 August–2 September 2016.

- Li, C.; Fahmy, A.; Sienz, J. Development of a neural network-based control system for the DLR-HIT II robot hand using leap motion. IEEE Access 2019, 7, 136914–136923.

- Devaraja, R.R.; Maskeliūnas, R.; Damaševičius, R. Design and evaluation of anthropomorphic robotic hand for object grasping and shape recognition. Computers 2021, 10, 1.

- Rudd, G.; Daly, L.; Cuckov, F. Intuitive gesture-based control system with collision avoidance for robotic manipulators. Ind. Rob. 2020, 47, 243–251.

- Koenig, A.; Rodriguez, Y.; Baena, F.; Secoli, R. Gesture-based teleoperated grasping for educational robotics. In Proceedings of the 2021 30th IEEE International Conference on Robot and Human Interactive Communication (RO-MAN), Vancouver, BC, Canada, 8–12 August 2021.

- Korayem, M.H.; Madihi, M.A.; Vahidifar, V. Controlling surgical robot arm using leap motion controller with Kalman filter. Meas. J. Int. Meas. Confed. 2021, 178, 109372.

- Chen, S.; Ma, H.; Yang, C.; Fu, M. Hand gesture based robot control system using leap motion. In Proceedings of the Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics), Portsmouth, UK, 24–27 August 2015; Volume 9244.

- Tian, H.; Wang, C.; Manocha, D.; Zhang, X. Realtime Hand-Object Interaction Using Learned Grasp Space for Virtual Environments. IEEE Trans. Vis. Comput. Graph. 2019, 25, 2623–2635.

- Artal-Sevil, J.S.; Montañés, J.L. Development of a robotic arm and implementation of a control strategy for gesture recognition through Leap Motion device. In Proceedings of the 2016 Technologies Applied to Electronics Teaching (TAEE), Seville, Spain, 22–24 June 2016.

- Zeng, W.; Wang, C.; Wang, Q. Hand gesture recognition using Leap Motion via deterministic learning. Multimed. Tools Appl. 2018, 77, 28185–28206.

- Li, B.; Zhang, C.; Han, C.; Bai, B. Gesture Recognition Based on Kinect v2 and Leap Motion Data Fusion. Int. J. Pattern Recognit. Artif. Intell. 2019, 33, 1955005.

- Kumar, P.; Saini, R.; Roy, P.P.; Dogra, D.P. Study of Text Segmentation and Recognition Using Leap Motion Sensor. IEEE Sens. J. 2017, 17, 1293–1301.

- Avola, D.; Bernardi, M.; Cinque, L.; Foresti, G.L.; Massaroni, C. Exploiting Recurrent Neural Networks and Leap Motion Controller for the Recognition of Sign Language and Semaphoric Hand Gestures. IEEE Trans. Multimed. 2019, 21, 234–245.

- Li, H.; Wu, L.; Wang, H.; Han, C.; Quan, W.; Zhao, J. Hand Gesture Recognition Enhancement Based on Spatial Fuzzy Matching in Leap Motion. IEEE Trans. Ind. Inform. 2020, 16, 1885–1894.

- Weichert, F.; Bachmann, D.; Rudak, B.; Fisseler, D. Analysis of the accuracy and robustness of the Leap Motion Controller. Sensors 2013, 13, 6380–6393.

- Bachmann, D.; Weichert, F.; Rinkenauer, G. Evaluation of the leap motion controller as a new contact-free pointing device. Sensors 2014, 15, 214–233.

- Guna, J.; Jakus, G.; Pogačnik, M.; Tomažič, S.; Sodnik, J. An analysis of the precision and reliability of the leap motion sensor and its suitability for static and dynamic tracking. Sensors 2014, 14, 3702–3720.

- Valentini, P.P.; Pezzuti, E. Accuracy in fingertip tracking using Leap Motion Controller for interactive virtual applications. Int. J. Interact. Des. Manuf. 2017, 11, 641–650.

- Vysocký, A.; Grushko, S.; Oščádal, P.; Kot, T.; Babjak, J.; Jánoš, R.; Sukop, M.; Bobovský, Z. Analysis of precision and stability of hand tracking with leap motion sensor. Sensors 2020, 20, 4088.

- Guzsvinecz, T.; Szucs, V.; Sik-Lanyi, C. Suitability of the kinect sensor and leap motion controller—A literature review. Sensors 2019, 19, 1072.

Figure 1.

HRI workplace including the Leap Motion Controller.

Figure 2.

The Leap Motion Controller.

Figure 3.

The approximate shape of the LMC workspace.

Figure 4.

The process of making a hand replica by a professional sculptor.

Figure 5.

(a) Final replica of the operator’s right hand. (b) Dimensions of the replica.

Figure 6.

Up to 60 cm: top–bottom view. The x and y axes are in mm.

Figure 7.

Up to 60 cm: front–back view. The x and z axes are in mm.

Figure 8.

Up to 60 cm: side view. The z and y axes are in mm.

Figure 9.

Up to 60 cm: overall view. The x, y, and z axes are in mm.

Figure 10.

Up to 80 cm: top–bottom view. The x and y axes are in mm.

Figure 11.

Up to 80 cm: front–back view. The x and z axes are in mm.

Figure 12.

Up to 80 cm: side view. The z and y axes are in mm.

Figure 13.

Up to 80 cm: overall view. The x, y, and z axes are in mm.

Figure 14.

All measurements: (A) top–bottom view; (B) front view. The x, y, and z axes are in mm.

Figure 15.

All measurements: (A) side view; (B) overall view. The x, y, and z axes are in mm.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).