Full-Field Mode Shape Identification Based on Subpixel Edge Detection and Tracking

Abstract

1. Introduction

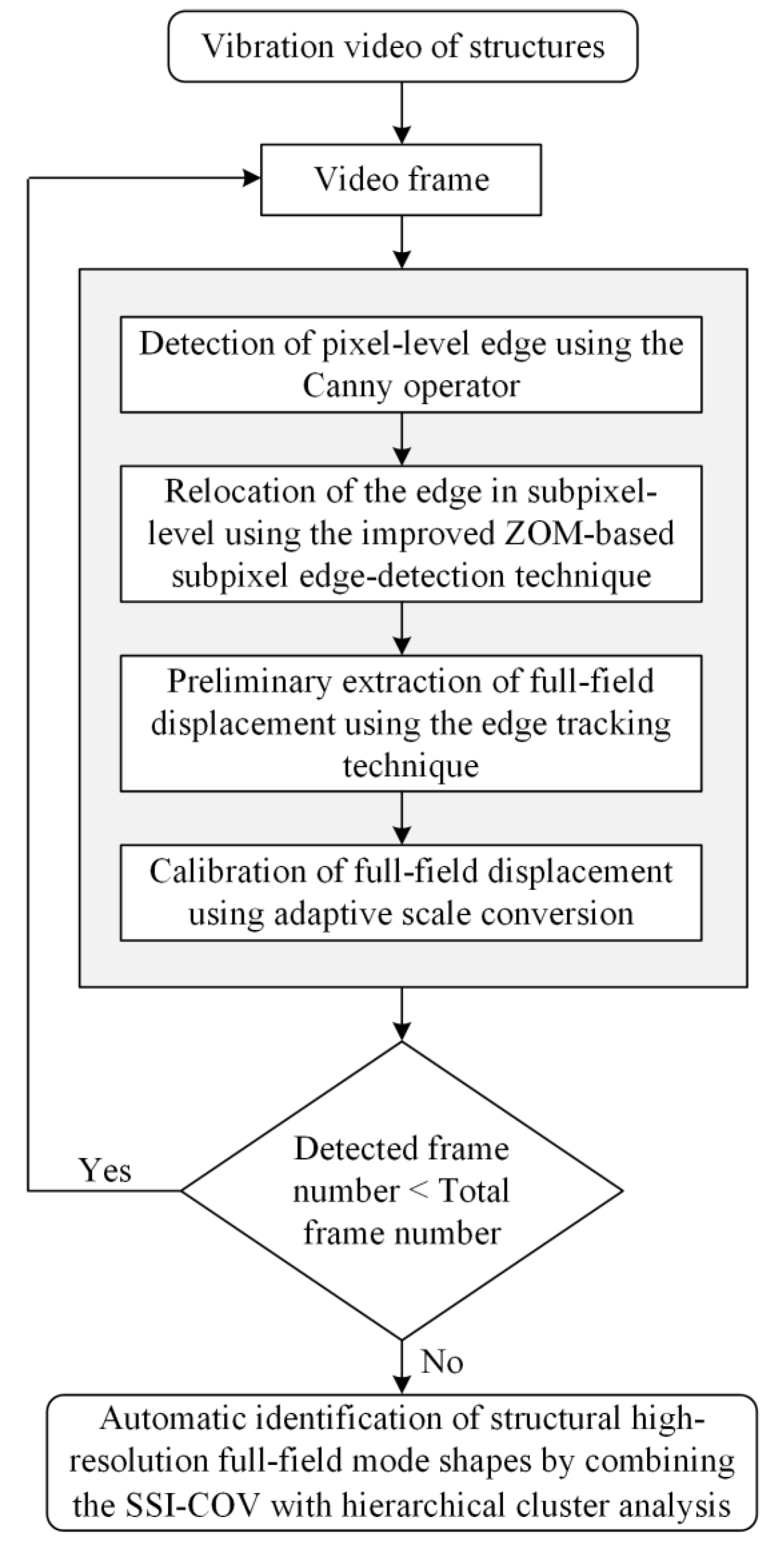

2. Theory and Methodology

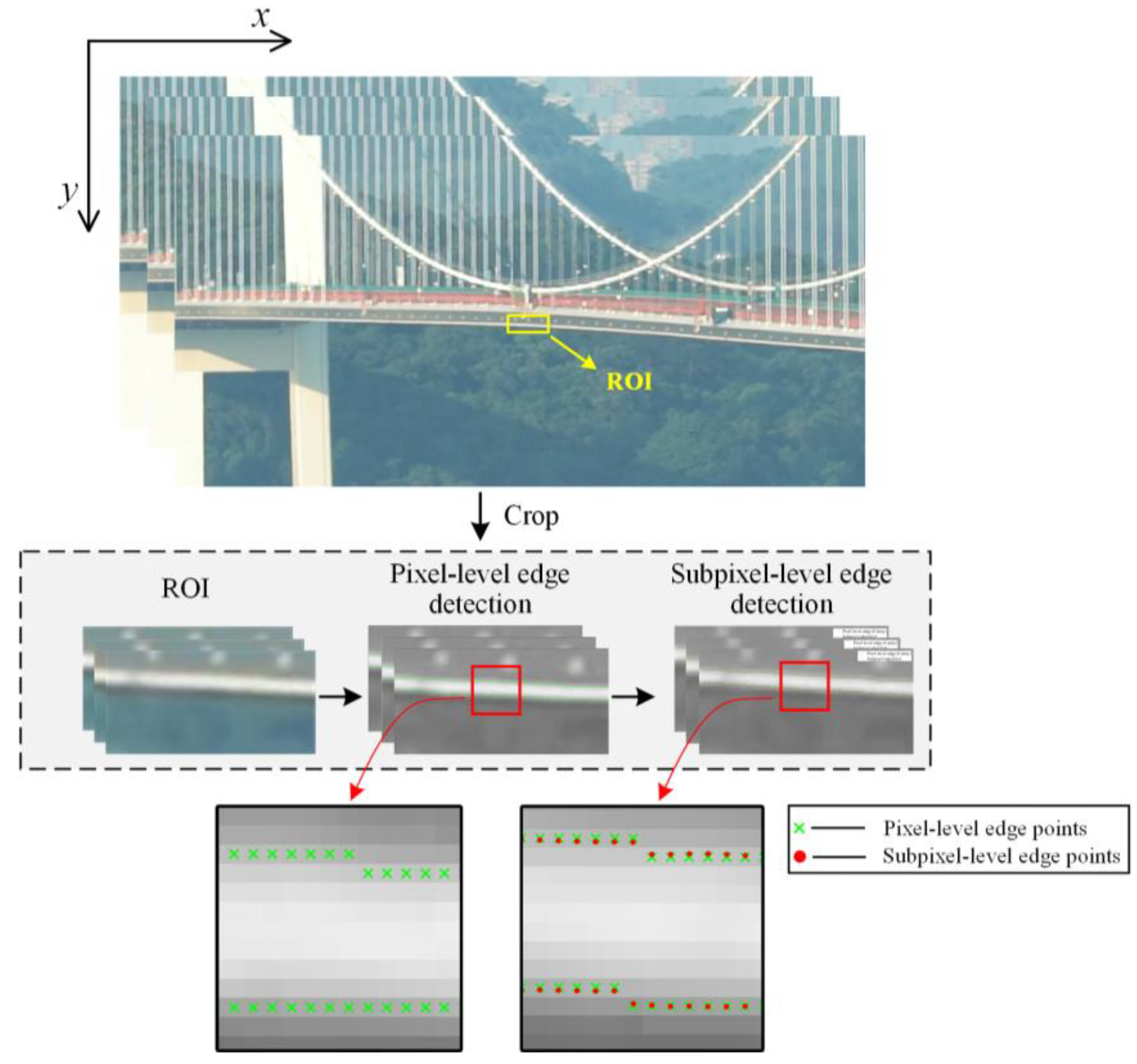

2.1. Detection of Pixel-Level Edge

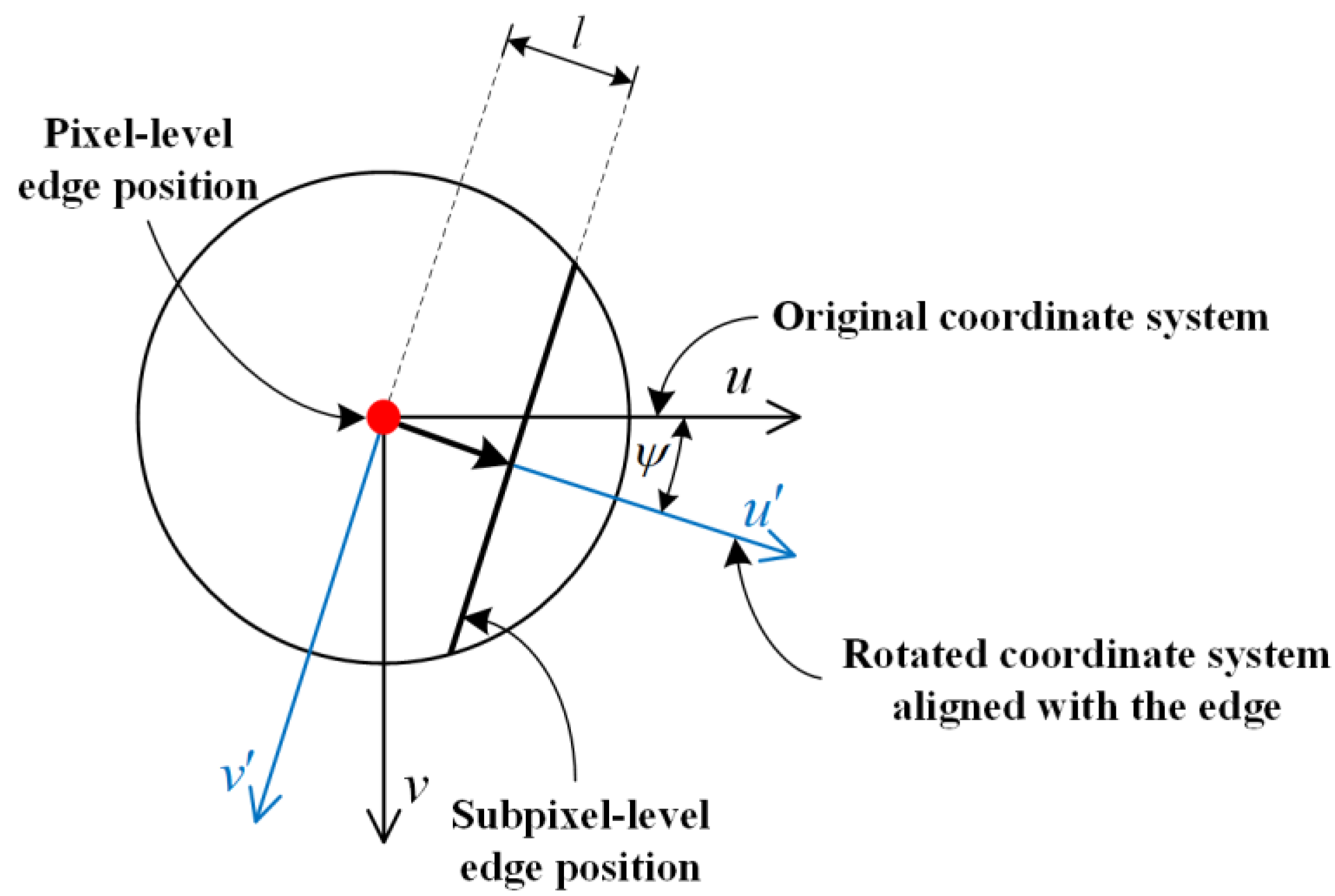

2.2. Relocation of the Edge in Subpixel-Level

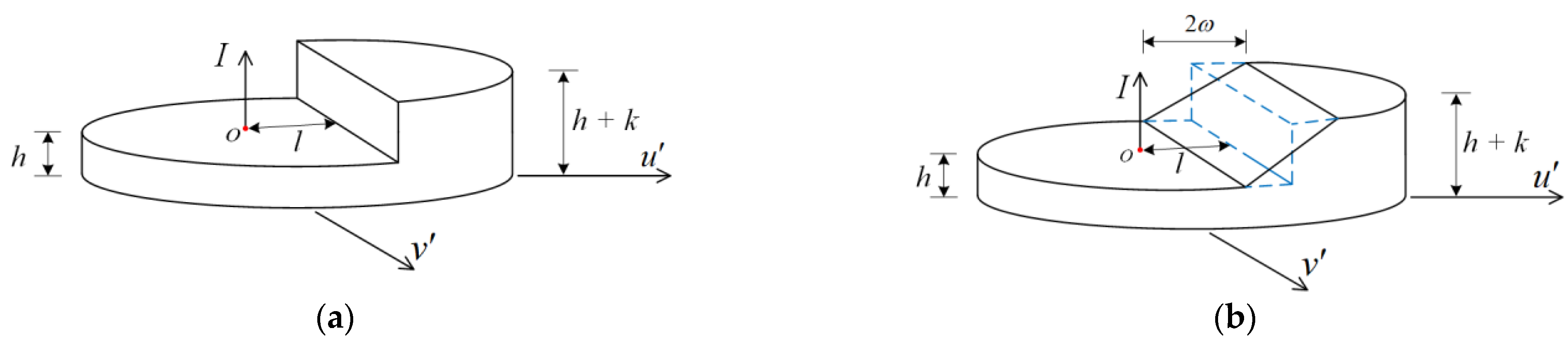

2.2.1. Improvement of the Edge Model

2.2.2. ZOM-Based Edge Location Calculation

2.3. Extraction of Full-Field Displacement Time History

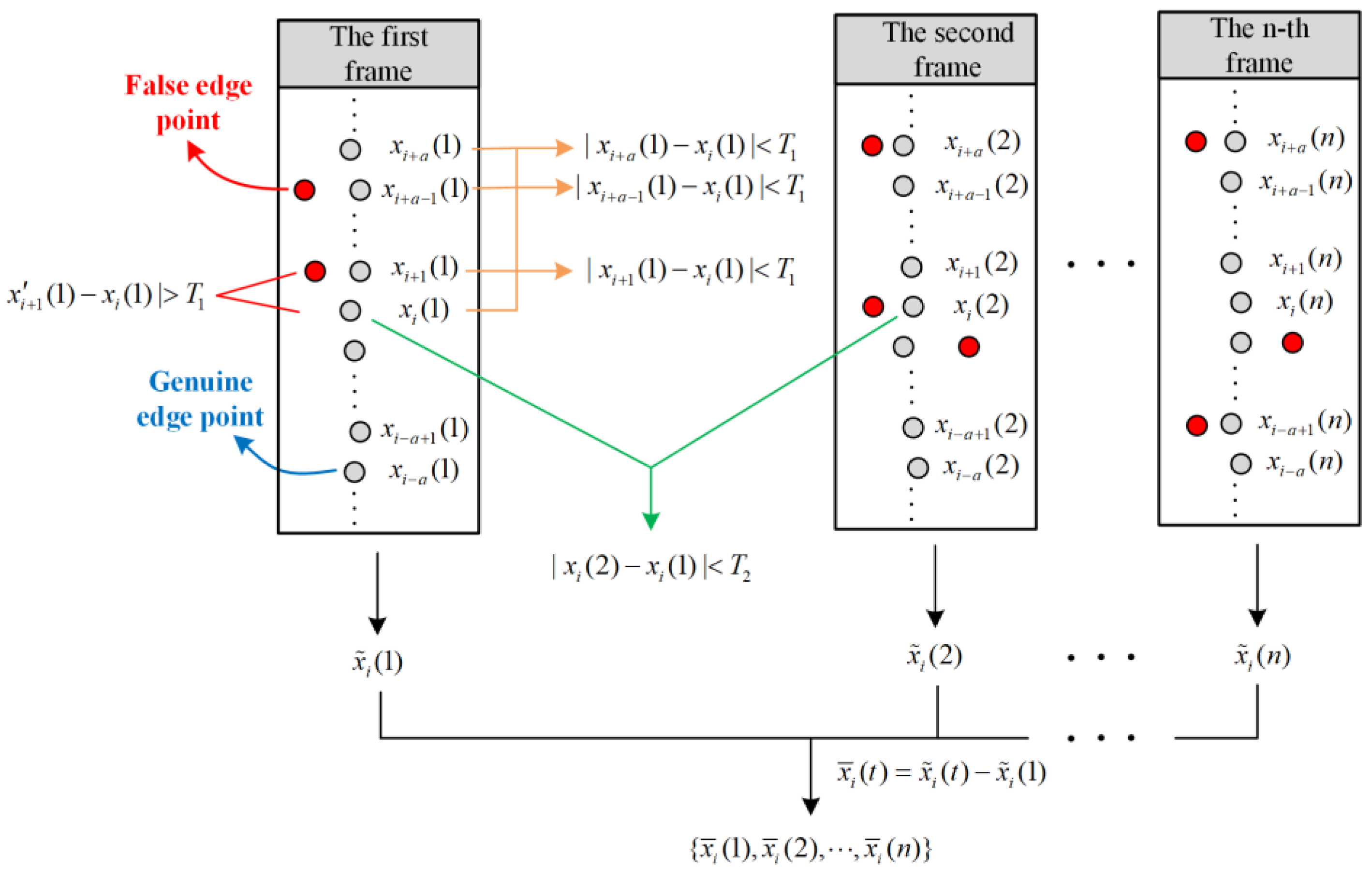

2.3.1. Displacement Extraction Based on Edge Tracking

2.3.2. Displacement Calibration Based on Adaptive Scale Conversion

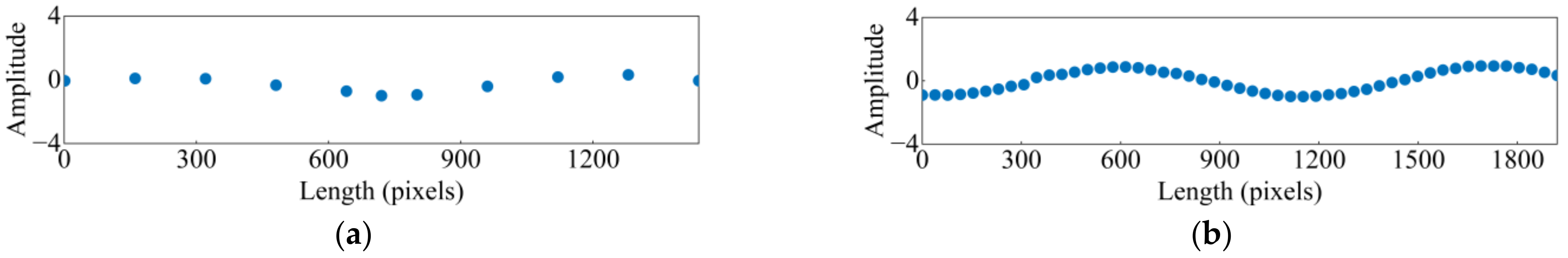

2.4. Identification of Full-Field Mode Shapes

2.4.1. Extraction of Modal Parameters Using SSI-COV

2.4.2. Automatic Identification of Physical Modes Using Hierarchical Cluster Analysis

3. Verification with Cantilever Beam Vibration

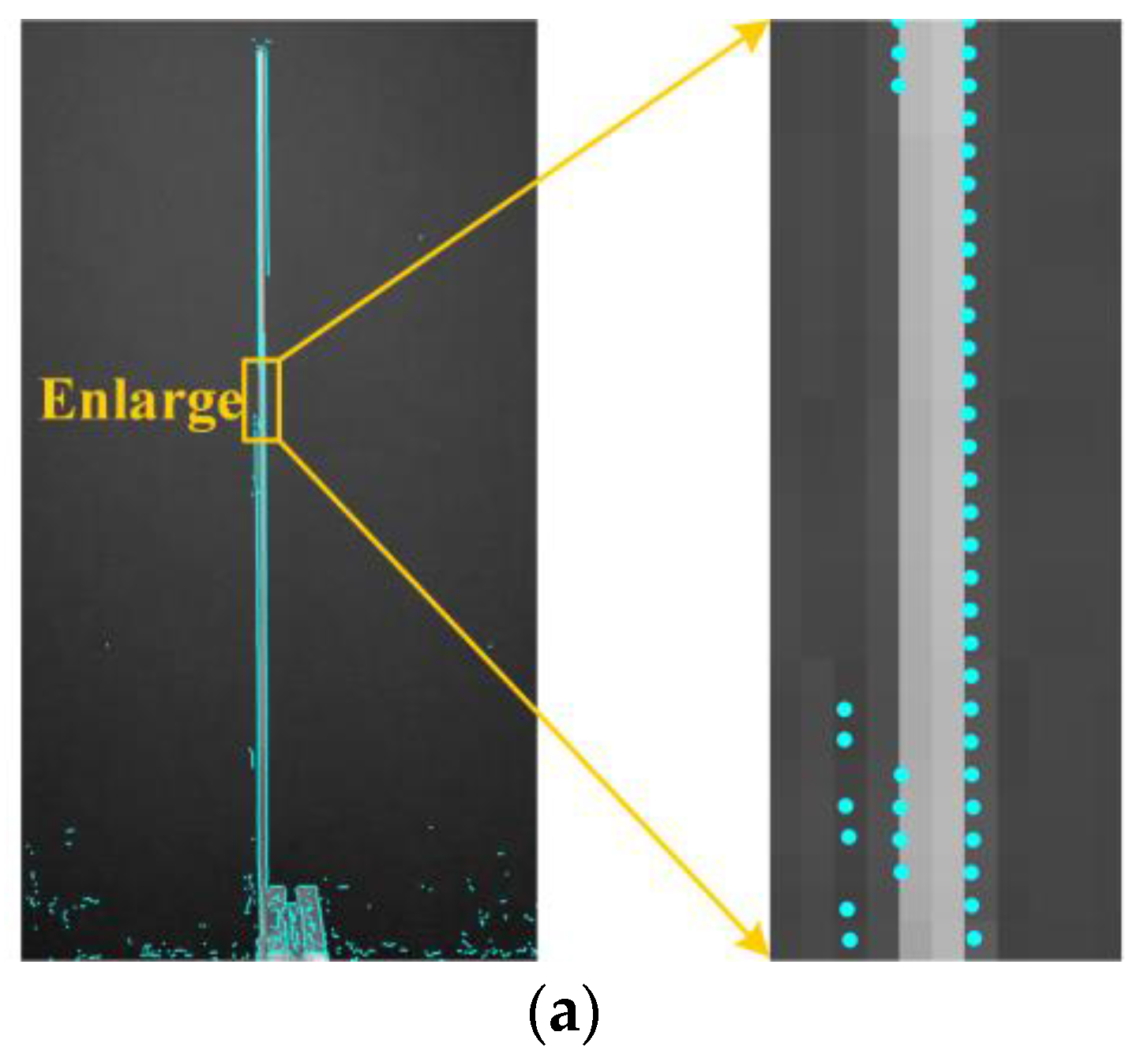

3.1. Results of Edges by Different Detection Algorithms

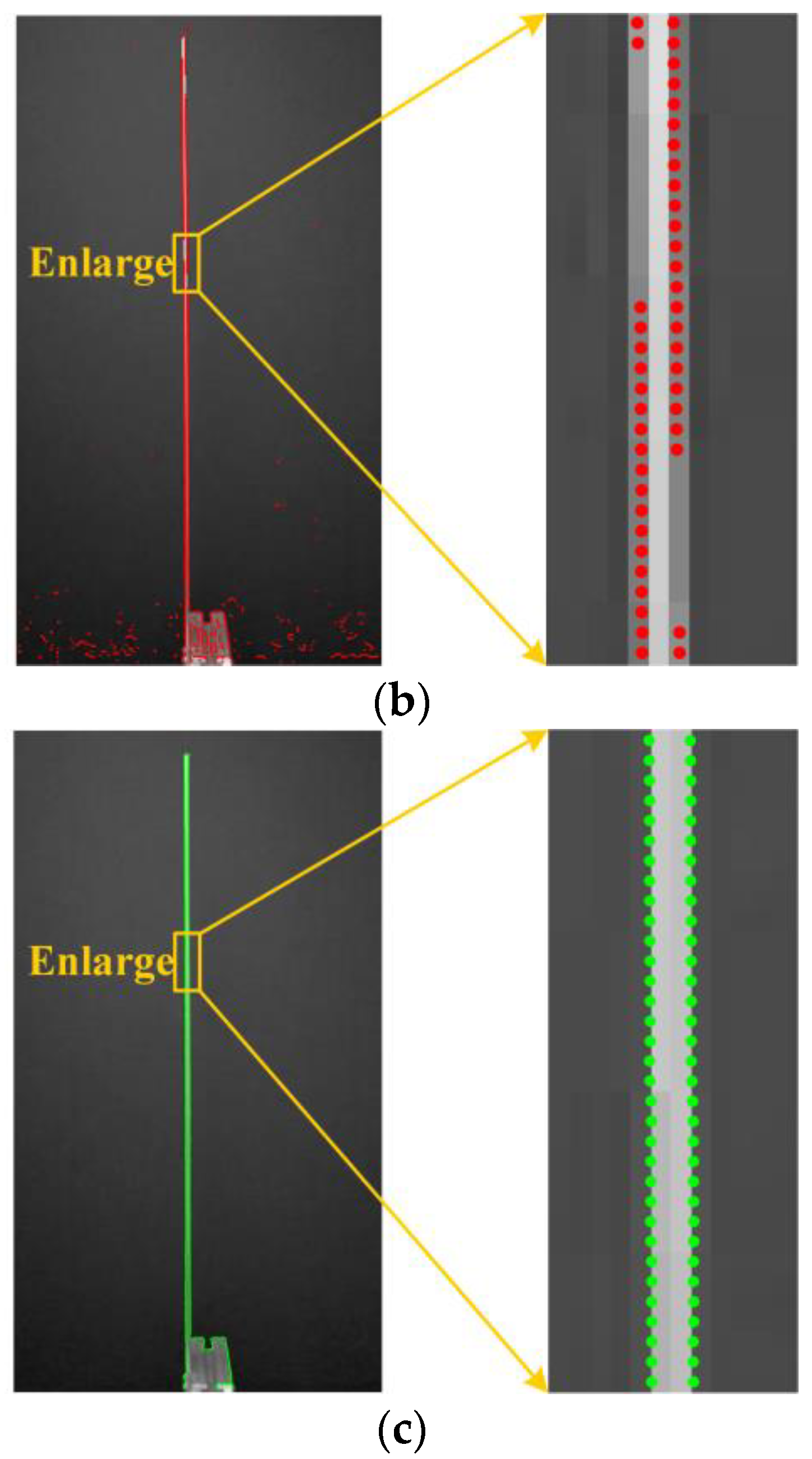

3.2. Results of Displacement Time History

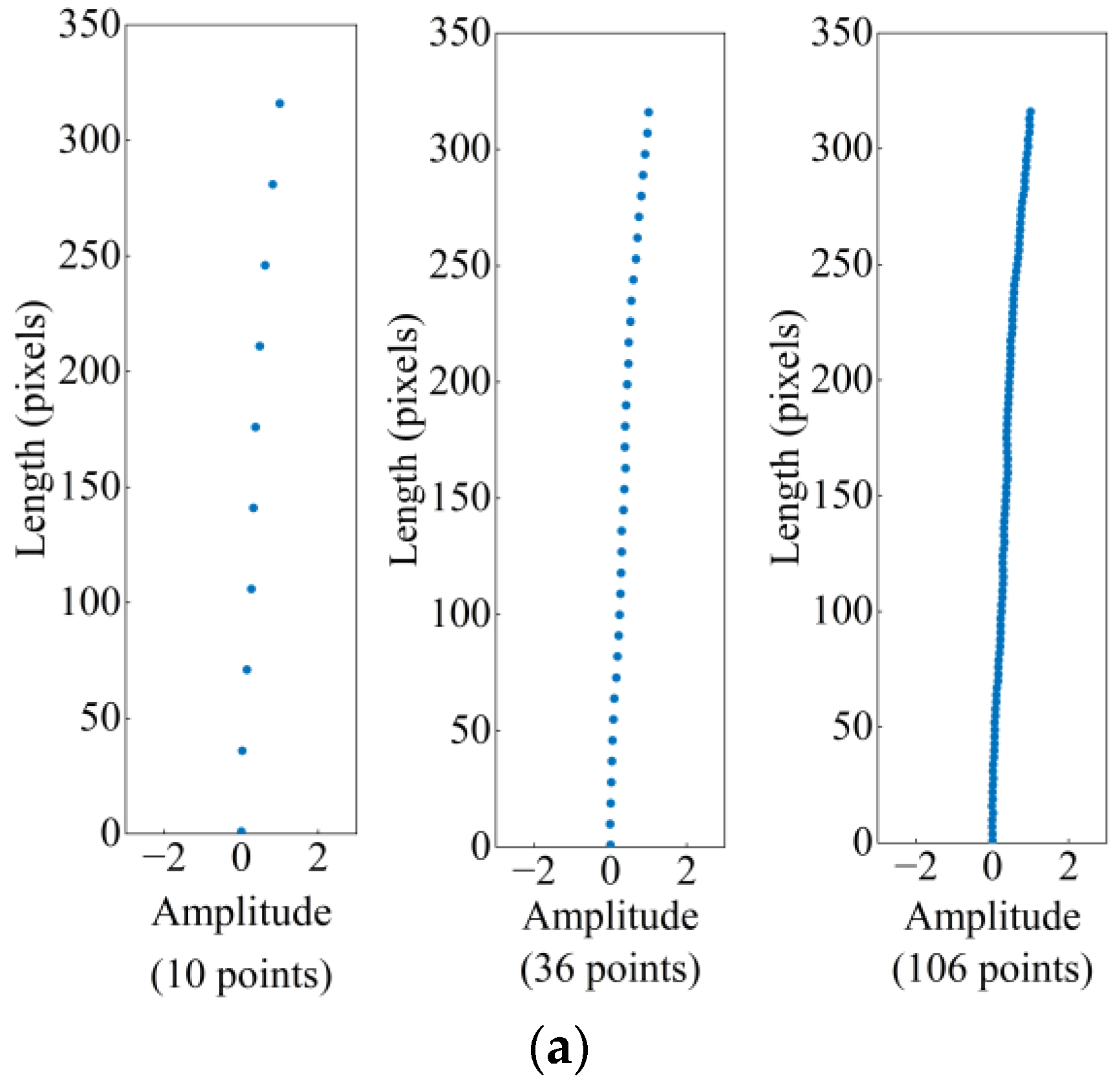

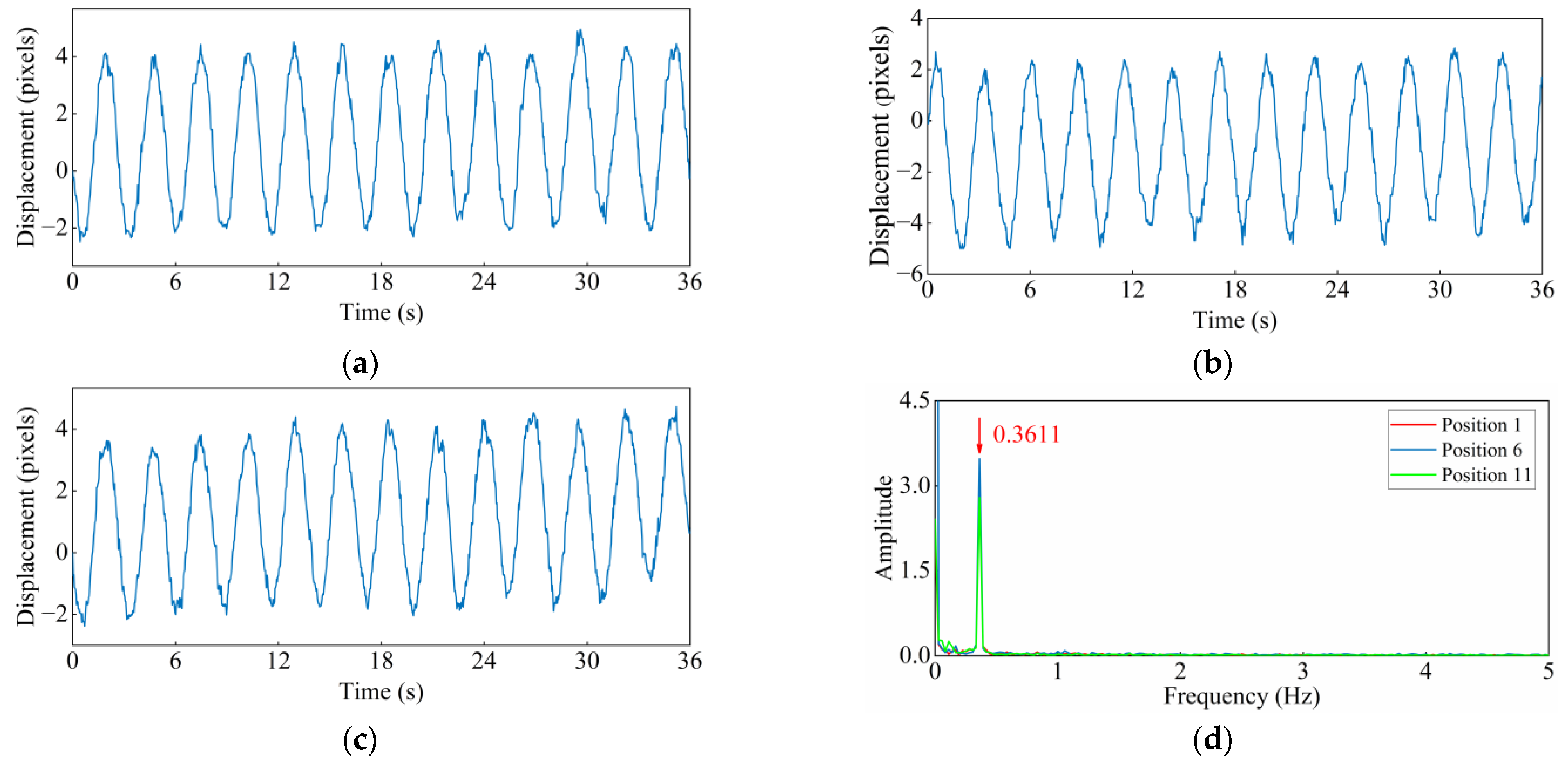

3.3. Results of Frequencies and Full-Field Mode Shapes

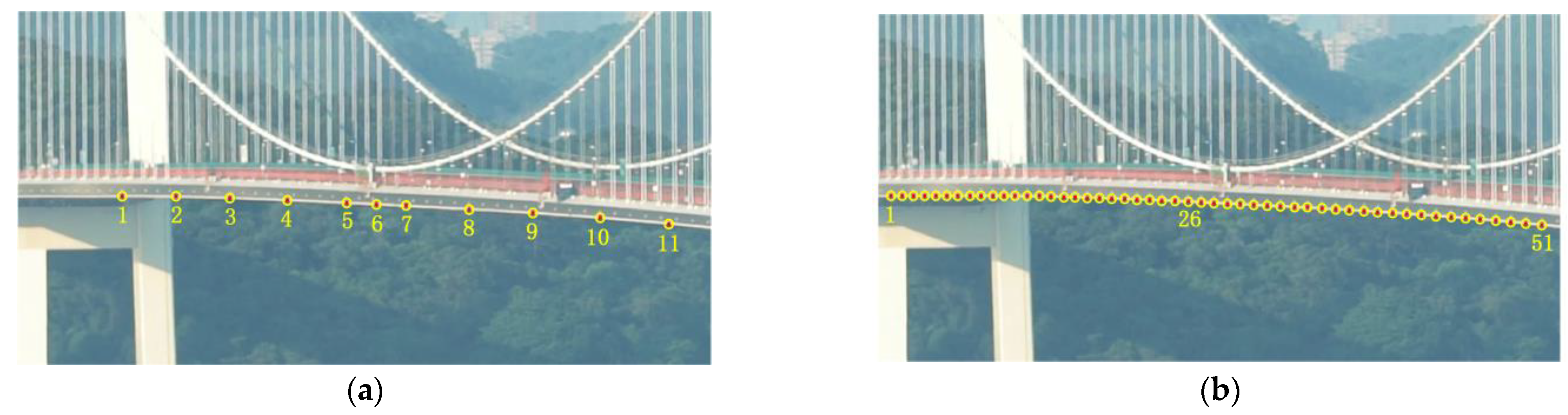

4. Application on a Real Bridge

5. Conclusions

- (1) The proposed method uses the Canny operator to locate the rough pixel-level edge and then applies the ZOM-based algorithm to relocate the edge at the subpixel level. The detected edge is complete and close to the true edge, outperforming the edge detection methods in the literature.

- (2) The methods in the literature can identify only the first two bending vibration frequencies of the cantilever beam, whereas the proposed method clearly identifies the first four, with errors of less than 3% relative to the theoretical values.

- (3) The results for the cantilever beam and the Humen Bridge confirm that the proposed method can identify high-resolution full-field mode shapes without installing artificial targets. The resolution of the identified mode shapes increases as more edge points are selected. The high-resolution mode shape identified for the Humen Bridge helps determine its modal characteristics.
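The subpixel relocation step named in conclusion (1) can be illustrated with a minimal sketch. It assumes that ZOM denotes the Zernike orthogonal moment operator of Ghosal and Mehrotra listed in the references, and it uses their ideal step-edge model rather than the improved edge model of Section 2.2.1. A synthetic window stands in for the neighbourhood of a Canny edge pixel. The function names (`zernike_masks`, `subpixel_edge`, `step_window`), the 9 × 9 window, and the 32-fold supersampling are illustrative choices, not values taken from the paper.

```python
import numpy as np


def zernike_masks(n=9, sub=32):
    """Integrate x, y and (2*rho^2 - 1) over each pixel of an n x n window
    mapped onto the unit disk, using sub x sub midpoint samples per pixel."""
    t = (np.arange(n * sub) + 0.5) / (n * sub) * 2.0 - 1.0
    x, y = np.meshgrid(t, t)            # x varies along columns, y along rows
    inside = (x**2 + y**2) <= 1.0       # keep only samples inside the unit disk
    cell = (2.0 / (n * sub)) ** 2       # area of one sample cell

    def pixel_sum(values):
        v = np.where(inside, values, 0.0) * cell
        return v.reshape(n, sub, n, sub).sum(axis=(1, 3))

    return pixel_sum(x), pixel_sum(y), pixel_sum(2.0 * (x**2 + y**2) - 1.0)


def subpixel_edge(window, masks):
    """Return (dx, dy, phi): the edge offset from the window centre in pixels
    and the direction of the edge normal (pointing to the bright side)."""
    mx_mask, my_mask, z20_mask = masks
    n = window.shape[0]
    mx = float((window * mx_mask).sum())   # first-order moment along x
    my = float((window * my_mask).sum())   # first-order moment along y
    z20 = float((window * z20_mask).sum())
    a11 = np.hypot(mx, my)                 # magnitude of the Z11 moment
    phi = np.arctan2(my, mx)
    l = z20 / a11                          # signed distance in unit-disk units
    r = n / 2.0                            # unit disk radius in pixels
    return l * r * np.cos(phi), l * r * np.sin(phi), phi


def step_window(n, offset_px, dark=20.0, bright=220.0):
    """Synthetic vertical step edge located offset_px right of the window
    centre; each pixel holds the area-weighted mean of the two grey levels."""
    left = np.arange(n) - n / 2.0          # left border of each pixel column
    coverage = np.clip(left + 1.0 - offset_px, 0.0, 1.0)
    row = dark + (bright - dark) * coverage
    return np.tile(row, (n, 1))


if __name__ == "__main__":
    masks = zernike_masks(9)
    dx, dy, phi = subpixel_edge(step_window(9, 0.3), masks)
    print(f"dx = {dx:.3f} px, dy = {dy:.3f} px, phi = {np.degrees(phi):.1f} deg")
```

In a full pipeline, one such window would be centred on each pixel-level edge point from the Canny operator and the returned offset added to that pixel's coordinates. Repeating this for every frame yields the edge positions that are tracked to obtain displacements.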

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Seo, J.; Hu, J.W.; Lee, J. Summary review of structural health monitoring applications for highway bridges. J. Perform. Constr. Facil. 2016, 30, 04015072.

- Ou, J.; Li, H. Structural health monitoring in mainland China: Review and future trends. Struct. Health Monit. 2010, 9, 219–231.

- Barbosh, M.; Singh, P.; Sadhu, A. Empirical mode decomposition and its variants: A review with applications in structural health monitoring. Smart Mater. Struct. 2020, 29, 093001.

- Cedolin, L. Stability of Structures: Elastic, Inelastic, Fracture and Damage Theories; World Scientific: Singapore, 2010.

- Xu, X.; Ren, Y.; Huang, Q.; Fan, Z.Y.; Tong, Z.J.; Chang, W.J.; Liu, B. Anomaly detection for large span bridges during operational phase using structural health monitoring data. Smart Mater. Struct. 2020, 29, 045029.

- Zhuang, Y.; Chen, W.; Jin, T.; Chen, B.; Zhang, H.; Zhang, W. A review of computer vision-based structural deformation monitoring in field environments. Sensors 2022, 22, 3789.

- Qian, H.; Wu, Y.; Zhu, R.; Zhang, D.; Jiang, D. Modal identification of ultralow-frequency flexible structures based on digital image correlation method. Appl. Sci. 2021, 12, 185.

- Peroš, J.; Paar, R.; Divić, V.; Kovačić, B. Fusion of laser scans and image data-rgb+d for structural health monitoring of engineering structures. Appl. Sci. 2022, 12, 11763.

- Kong, X.; Luo, K.; Deng, L.; Yi, J.X.; Yin, P.C.; Ji, W. Structural frequency identification based on broad-band phase-based motion magnification and computer vision. China Civ. Eng. J. 2022.

- Zhou, Y.; Cheng, Y.T. Non-contact structural displacement measurement based on digital image correlation method. J. Hunan Univ. 2021, 48, 1–9.

- Li, J.; Kong, X.; Yang, Y.; Yang, Z.; Hu, J. Optical flow based measurement of flow field in wave-structure interaction. Ocean Eng. 2022, 263, 112336.

- Feng, D.; Feng, M.Q.; Ozer, E.; Fukuda, Y. A vision-based sensor for noncontact structural displacement measurement. Sensors 2015, 15, 16557–16575.

- Feng, D.; Feng, M.Q. Experimental validation of cost-effective vision-based structural health monitoring. Mech. Syst. Sig. Process. 2017, 88, 199–211.

- Neri, P.; Paoli, A.; Razionale, A.V.; Santus, C. Low-speed cameras system for 3D-DIC vibration measurements in the kHz range. Mech. Syst. Sig. Process. 2022, 162, 108040.

- Horn, B.K.; Schunck, B.G. Determining optical flow. Artif. Intell. 1981, 17, 185–203.

- Caetano, E.; Silva, S.; Bateira, J. A vision system for vibration monitoring of civil engineering structures. Exp. Tech. 2011, 35, 74–82.

- Lucas, B.D.; Kanade, T. An iterative image registration technique with an application to stereo vision. In DARPA Image Understanding Workshop (IUW ′81); Carnegie Mellon University: Pittsburgh, PA, USA, 1981; Volume 81, pp. 674–679.

- Guo, J. Dynamic displacement measurement of large-scale structures based on the Lucas-Kanade template tracking algorithm. Mech. Syst. Sig. Process. 2016, 66, 425–436.

- Cha, Y.J.; Chen, J.G.; Büyüköztürk, O. Output-only computer vision based damage detection using phase-based optical flow and unscented Kalman filters. Eng. Struct. 2017, 132, 300–313.

- Guo, J.; Wu, X.; Liu, J.; Wei, T.; Yang, X.; Yang, X.; He, B.; Zhang, W. Non-contact vibration sensor using deep learning and image processing. Measurement 2021, 183, 109823.

- Dong, C.Z.; Ye, X.W.; Jin, T. Identification of structural dynamic characteristics based on machine vision technology. Measurement 2018, 126, 405–416.

- Yoon, H.; Elanwar, H.; Choi, H.; Golparvar-Fard, M.; Spencer, B.F., Jr. Target-free approach for vision-based structural system identification using consumer-grade cameras. Struct. Control Health Monit. 2016, 23, 1405–1416.

- Dizaji, M.S.; Harris, D.K.; Alipour, M. Integrating visual sensing and structural identification using 3D-digital image correlation and topology optimization to detect and reconstruct the 3D geometry of structural damage. Struct. Health Monit. 2022, 21, 14759217211073505.

- Poozesh, P.; Sarrafi, A.; Mao, Z.; Avitabile, P.; Niezrecki, C. Feasibility of extracting operating shapes using phase-based motion magnification technique and stereo-photogrammetry. J. Sound Vib. 2017, 407, 350–366.

- Wadhwa, N.; Rubinstein, M.; Durand, F.; Freeman, W.T. Phase-based video motion processing. ACM Trans. Graph. 2013, 32, 1–10.

- Yu, L.; Pan, B. Single-camera high-speed stereo-digital image correlation for full-field vibration measurement. Mech. Syst. Sig. Process. 2017, 94, 374–383.

- Chen, J.G.; Wadhwa, N.; Cha, Y.J.; Durand, F.; Freeman, W.T.; Buyukozturk, O. Modal identification of simple structures with high-speed video using motion magnification. J. Sound Vib. 2015, 345, 58–71.

- Yang, Y.; Dorn, C.; Mancini, T.; Talken, Z.; Kenyon, G.; Farrar, C.; Mascareñas, D. Blind identification of full-field vibration modes from video measurements with phase-based video motion magnification. Mech. Syst. Sig. Process. 2017, 85, 567–590.

- Canny, J. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, PAMI-8, 679–698.

- Ghosal, S.; Mehrotra, R. Orthogonal moment operators for subpixel edge detection. Pattern Recognit. 1993, 26, 295–306.

- Christian, J.A. Accurate planetary limb localization for image-based spacecraft navigation. J. Spacecr. Rocket. 2017, 54, 708–730.

- Magalhaes, F.; Cunha, A.; Caetano, E. Online automatic identification of the modal parameters of a long span arch bridge. Mech. Syst. Sig. Process. 2009, 23, 316–329.

- Roberts, L.G. Machine Perception of Three-dimensional Solids. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 1963.

- Sobel, I.E. Camera Models and Machine Perception; Stanford University: Stanford, CA, USA, 1970.

- Amer, G.M.H.; Abushaala, A.M. Edge detection methods. In Proceedings of the 2015 2nd World Symposium on Web Applications and Networking (IEEE), Sousse, Tunisia, 21–23 March 2015.

- Von, G.R.G.; Randall, G. A sub-pixel edge detector: An implementation of the canny/devernay algorithm. Image Process. Line 2017, 7, 347–372.

- Hermosilla, T.; Bermejo, E.; Balaguer, A.; Ruiz, L.A. Non-linear fourth-order image interpolation for subpixel edge detection and localization. Image Vis. Comput. 2008, 26, 1240–1248.

- Mortari, D.; de Dilectis, F.; Zanetti, R. Position estimation using the image derivative. Aerospace 2015, 2, 435–460.

- Ye, J.; Fu, G.; Poudel, U.P. High-accuracy edge detection with blurred edge model. Image Vis. Comput. 2005, 23, 453–467.

- Lyvers, E.P.; Mitchell, O.R.; Akey, M.L.; Reeves, A.P. Subpixel measurements using a moment-based edge operator. IEEE Trans. Pattern Anal. Mach. Intell. 1989, 11, 1293–1309.

- Qu, Y.; Cui, C.; Chen, S.; Li, J. A fast subpixel edge detection method using Sobel–Zernike moments operator. Image Vis. Comput. 2005, 23, 11–17.

- Tan, J.; Ao, L.; Cui, J.W.; Kang, W.J. Further improvement of edge location accuracy of charge-coupled-device laser autocollimators using orthogonal Fourier-Mellin moments. Opt. Eng. 2007, 46, 057007.

- Bin, T.J.; Lei, A.; Jiwen, C.; Wenjing, K.; Dandan, L. Subpixel edge location based on orthogonal Fourier–Mellin moments. Image Vis. Comput. 2008, 26, 563–569.

- Da, F.; Zhang, H. Sub-pixel edge detection based on an improved moment. Image Vis. Comput. 2010, 28, 1645–1658.

- Trujillo-Pino, A.; Krissian, K.; Alemán-Flores, M.; Santana-Cedrés, D. Accurate subpixel edge location based on partial area effect. Image Vis. Comput. 2013, 31, 72–90.

- Yang, Y.; Dorn, C. Affinity propagation clustering of full-field, high-spatial-dimensional measurements for robust output-only modal identification: A proof-of-concept study. J. Sound Vib. 2020, 483, 115473.

- B767–400ER. The Vibration Video of Humen Bridge in Dongguan During Traffic Suspension. Available online: https://www.bilibili.com/video/BV1Nt4y1178T (accessed on 20 January 2022).

- Zhang, G.; Zhu, L. Test on vibration characteristics of Humen bridge. J. Tongji Univ. 1999, 2, 69–72.

| Mode Number | ① Theoretical Value [46] | ② Proposed Method | Error (%), \|② − ①\|/① |
|---|---|---|---|
| 1 | 7.59 | 7.5 | 1.19 |
| 2 | 47.57 | 47.34 | 0.48 |
| 3 | 133.18 | 133.6 | 0.32 |
| 4 | 219.96 | 226.4 | 2.93 |
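The error column follows directly from the other two columns. The short check below recomputes it from the tabulated values; the variable and function names are illustrative only.

```python
# Values copied from the table: theoretical (column 1) and identified (column 2).
theoretical = [7.59, 47.57, 133.18, 219.96]
identified = [7.5, 47.34, 133.6, 226.4]


def relative_error_percent(reference, estimate):
    """Relative error |estimate - reference| / reference, in percent."""
    return abs(estimate - reference) / reference * 100.0


errors = [
    round(relative_error_percent(ref, est), 2)
    for ref, est in zip(theoretical, identified)
]

for mode, err in enumerate(errors, start=1):
    print(f"mode {mode}: {err:.2f} %")
```

Rounded to two decimals, the computed values match the tabulated errors, and all four stay below the 3% bound quoted in the conclusions.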

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Kong, X.; Yi, J.; Wang, X.; Luo, K.; Hu, J. Full-Field Mode Shape Identification Based on Subpixel Edge Detection and Tracking. Appl. Sci. 2023, 13, 747. https://doi.org/10.3390/app13020747
