Unsupervised Semantic Segmentation Inpainting Network Using a Generative Adversarial Network with Preprocessing

Abstract

1. Introduction

- We introduce a preprocessing method that matches the maxima of the distributions of the predicted free-map and the ground-truth map. This prevents the discriminator from simply learning the difference between the two probability distributions and instead makes it focus on the segmentation inpainting, so that the generator learns how to inpaint the masked region.

- We propose a new cross-entropy total variation loss term that encourages the generator network to smooth the segmentation of nearby static regions. The proposed term is a denoising loss function for binary data that removes the noise caused by the instability of the unsupervised learning process.

2. Related Works

2.1. Image Inpainting

2.2. Supervised Segmentation Inpainting

2.3. Unsupervised Segmentation Inpainting

3. Proposed Method

3.1. Overall Framework

3.2. Preprocessing for Probability Map Distribution Matching
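The distribution-matching preprocessing described in the Introduction can be illustrated with a minimal NumPy sketch. It assumes the ground truth is a one-hot map of shape (C, H, W) and that "matching the maxima" means rescaling it so that each pixel's peak class probability equals a chosen value (the hyperparameter study sweeps this maximum from 1 down to 0.90), with the remaining mass spread evenly over the other classes. The optional noise term reflects an assumed reading of the Gaussian-noise column of the hyperparameter table as zero-mean noise with standard deviation σ. `soften_ground_truth` and its parameters are hypothetical names, not the authors' code.

```python
import numpy as np


def soften_ground_truth(one_hot, max_value=0.97, noise_std=0.01, rng=None):
    """Hypothetical preprocessing sketch (not the authors' code).

    Rescales a one-hot map of shape (C, H, W) so that the winning class has
    probability `max_value`, spreads the remainder uniformly over the other
    classes, optionally adds zero-mean Gaussian noise (assumed reading of the
    noise hyperparameter), and renormalizes each pixel to sum to 1.
    """
    num_classes = one_hot.shape[0]
    if num_classes < 2:
        raise ValueError("expected at least two class channels")
    if rng is None:
        rng = np.random.default_rng()

    off_value = (1.0 - max_value) / (num_classes - 1)
    soft = np.where(one_hot > 0.5, max_value, off_value)

    if noise_std > 0:
        soft = soft + rng.normal(0.0, noise_std, size=soft.shape)

    # Keep probabilities positive, then renormalize over the class axis.
    soft = np.clip(soft, 1e-6, None)
    return soft / soft.sum(axis=0, keepdims=True)


# Usage: a 3-class, 2x2 ground truth in which every pixel belongs to class 0.
gt = np.zeros((3, 2, 2))
gt[0] = 1.0
print(soften_ground_truth(gt, max_value=0.97, noise_std=0.0)[:, 0, 0])
```

The intuition behind this kind of softening is that a generator trained with softmax outputs rarely produces exact 0/1 values, so a discriminator fed raw one-hot targets could separate real from generated maps by value range alone; pulling the ground-truth peak below 1 removes that shortcut.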

3.3. Objective Function
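The cross-entropy total variation term introduced earlier is not spelled out in this section, so the following is only a sketch under a stated assumption: for a binary probability map, the absolute difference used in classic total variation (Rudin et al. [31]) is replaced by the binary cross-entropy between each pixel and its upper and left neighbours. `cross_entropy_tv` is a hypothetical name, and the exact formulation in the paper may differ.

```python
import numpy as np


def cross_entropy_tv(prob, eps=1e-7):
    """Hypothetical cross-entropy total variation for a binary map of shape (H, W).

    Classic anisotropic TV sums |p[i+1, j] - p[i, j]| + |p[i, j+1] - p[i, j]|.
    This sketch swaps each absolute difference for the binary cross-entropy
    between neighbouring pixels (an assumption, not the authors' formula).
    """
    p = np.clip(prob, eps, 1.0 - eps)  # avoid log(0)

    def bce(pred, target):
        return -(target * np.log(pred) + (1.0 - target) * np.log(1.0 - pred))

    vertical = bce(p[1:, :], p[:-1, :])    # each pixel vs. the one above it
    horizontal = bce(p[:, 1:], p[:, :-1])  # each pixel vs. the one to its left
    return vertical.mean() + horizontal.mean()


clean = np.ones((4, 4))                             # uniform, confident region
checker = np.indices((4, 4)).sum(axis=0) % 2 * 1.0  # salt-and-pepper style noise
uncertain = np.full((4, 4), 0.5)                    # smooth but undecided
for name, m in [("clean", clean), ("checker", checker), ("uncertain", uncertain)]:
    print(name, round(float(cross_entropy_tv(m)), 4))
```

Unlike plain total variation, this variant is small only for maps that are both locally constant and close to 0 or 1 (the uniform 0.5 map still costs 2·ln 2), which fits the description of a denoising loss for binary data.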

3.4. Training Networks

3.5. Network Architecture

4. Experiment and Results

4.1. Datasets

4.2. Result on MICC-SRI Dataset

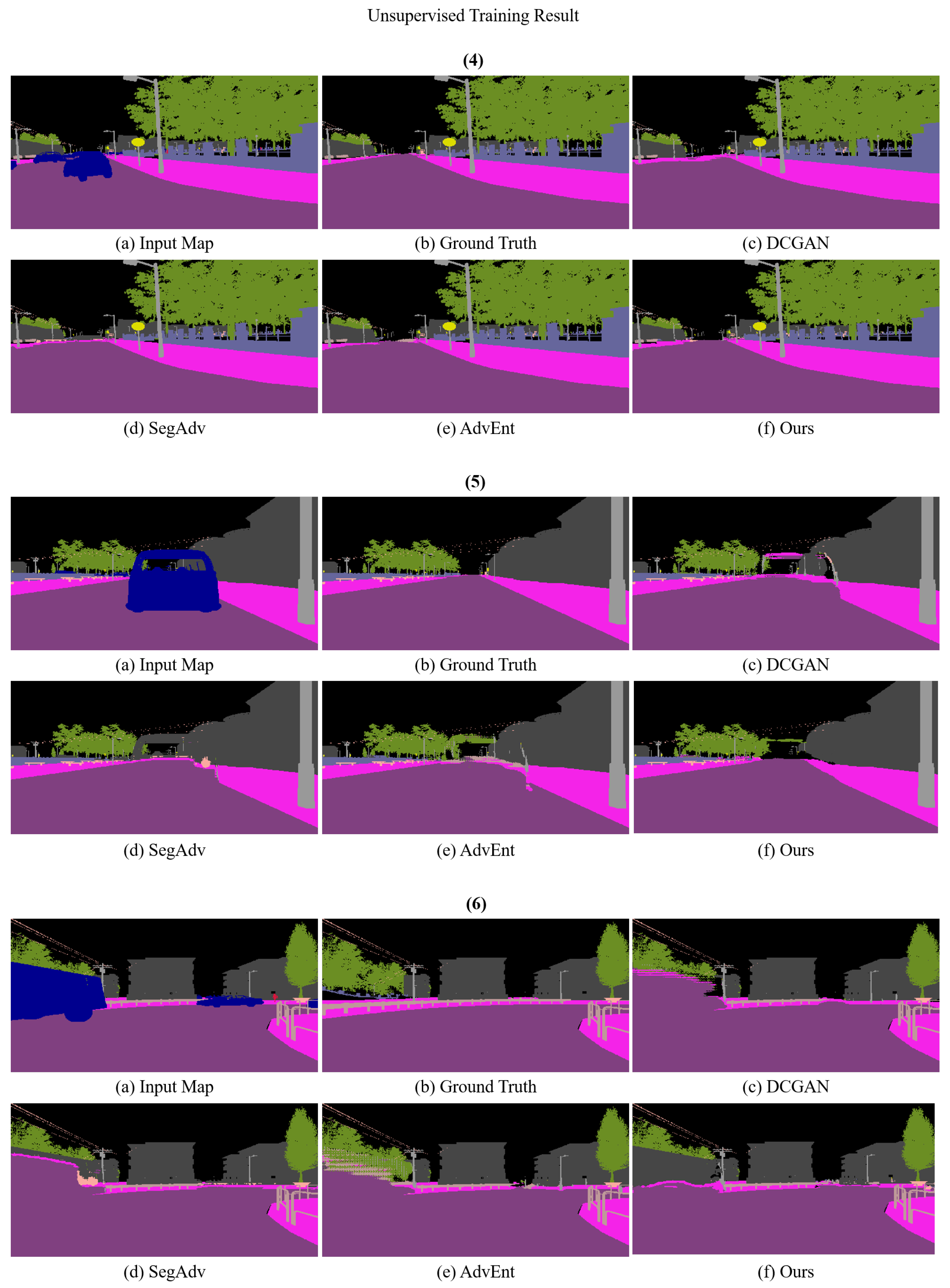

4.3. Result on Cityscapes

4.4. Hyperparameter Study

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Ivanovs, M.; Ozols, K.; Dobrajs, A.; Kadikis, R. Improving semantic segmentation of urban scenes for self-driving cars with synthetic images. Sensors 2022, 22, 2252.

2. Yao, J.; Ramalingam, S.; Taguchi, Y.; Miki, Y.; Urtasun, R. Estimating drivable collision-free space from monocular video. In Proceedings of the 2015 IEEE Winter Conference on Applications of Computer Vision, Waikoloa, HI, USA, 5–9 January 2015; pp. 420–427.

3. Jiménez, F.; Clavijo, M.; Cerrato, A. Perception, positioning and decision-making algorithms adaptation for an autonomous valet parking system based on infrastructure reference points using one single LiDAR. Sensors 2022, 22, 979.

4. Lai, X.; Tian, Z.; Xu, X.; Chen, Y.; Liu, S.; Zhao, H.; Wang, L.; Jia, J. DecoupleNet: Decoupled network for domain adaptive semantic segmentation. In Proceedings of the European Conference on Computer Vision, Tel Aviv, Israel, 23–27 October 2022; Springer: Berlin/Heidelberg, Germany, 2022; pp. 369–387.

5. Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; Springer: Berlin/Heidelberg, Germany, 2015; pp. 234–241.

6. Chen, L.C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. DeepLab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected CRFs. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 40, 834–848.

7. Mehta, S.; Rastegari, M.; Caspi, A.; Shapiro, L.; Hajishirzi, H. ESPNet: Efficient spatial pyramid of dilated convolutions for semantic segmentation. In Proceedings of the European Conference on Computer Vision, Munich, Germany, 8–14 September 2018; pp. 552–568.

8. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Networks; ACM: New York, NY, USA, 2020; Volume 63, pp. 139–144.

9. Berlincioni, L.; Becattini, F.; Galteri, L.; Seidenari, L.; Bimbo, A.D. Road layout understanding by generative adversarial inpainting. In Inpainting and Denoising Challenges; Springer: Berlin/Heidelberg, Germany, 2019; pp. 111–128.

10. Cordts, M.; Omran, M.; Ramos, S.; Rehfeld, T.; Enzweiler, M.; Benenson, R.; Franke, U.; Roth, S.; Schiele, B. The Cityscapes dataset for semantic urban scene understanding. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 3213–3223.

11. Ballester, C.; Bertalmio, M.; Caselles, V.; Sapiro, G.; Verdera, J. Filling-in by joint interpolation of vector fields and gray levels. IEEE Trans. Image Process. 2001, 10, 1200–1211.

12. Barnes, C.; Shechtman, E.; Finkelstein, A.; Goldman, D.B. PatchMatch: A randomized correspondence algorithm for structural image editing. ACM Trans. Graph. 2009, 28, 24.

13. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. Commun. ACM 2017, 60, 84–90.

14. Mao, X.; Shen, C.; Yang, Y.B. Image Restoration Using very Deep Convolutional Encoder-Decoder Networks with Symmetric Skip Connections. 2016, Volume 29. Available online: https://arxiv.org/abs/1603.09056v2 (accessed on 30 December 2022).

15. Pathak, D.; Krahenbuhl, P.; Donahue, J.; Darrell, T.; Efros, A.A. Context encoders: Feature learning by inpainting. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 2536–2544.

16. Iizuka, S.; Simo-Serra, E.; Ishikawa, H. Globally and locally consistent image completion. ACM Trans. Graph. (ToG) 2017, 36, 1–14.

17. Yu, J.; Lin, Z.; Yang, J.; Shen, X.; Lu, X.; Huang, T.S. Generative image inpainting with contextual attention. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 5505–5514.

18. Saharia, C.; Chan, W.; Chang, H.; Lee, C.; Ho, J.; Salimans, T.; Fleet, D.; Norouzi, M. Palette: Image-to-image diffusion models. In Proceedings of the ACM SIGGRAPH 2022 Conference, Vancouver, BC, Canada, 7–11 August 2022; pp. 1–10.

19. Song, Y.; Yang, C.; Shen, Y.; Wang, P.; Huang, Q.; Kuo, C.C.J. SPG-Net: Segmentation prediction and guidance network for image inpainting. In Proceedings of the British Machine Vision Conference, Newcastle, UK, 3–6 September 2018; p. 97. Available online: https://github.com/unizard/AwesomeArxiv/issues/175 (accessed on 30 December 2022).

20. Radford, A.; Metz, L.; Chintala, S. Unsupervised Representation Learning with Deep Convolutional Generative Adversarial Networks. In Proceedings of the International Conference on Learning Representations, San Juan, PR, USA, 2–4 May 2016.

21. Salimans, T.; Goodfellow, I.; Zaremba, W.; Cheung, V.; Radford, A.; Chen, X. Improved techniques for training GANs. In Proceedings of the Advances in Neural Information Processing Systems, Barcelona, Spain, 5–10 December 2016; Volume 29.

22. Wang, T.C.; Liu, M.Y.; Zhu, J.Y.; Tao, A.; Kautz, J.; Catanzaro, B. High-resolution image synthesis and semantic manipulation with conditional GANs. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 8798–8807.

23. Liu, G.; Reda, F.A.; Shih, K.J.; Wang, T.C.; Tao, A.; Catanzaro, B. Image inpainting for irregular holes using partial convolutions. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 85–100.

24. Li, Y.; Liu, S.; Yang, J.; Yang, M.H. Generative face completion. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 3911–3919.

25. Taigman, Y.; Polyak, A.; Wolf, L. In Proceedings of the International Conference on Learning Representations, Toulon, France, 24–26 April 2017.

26. Zhu, J.Y.; Park, T.; Isola, P.; Efros, A.A. Unpaired image-to-image translation using cycle-consistent adversarial networks. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2223–2232.

27. Vu, T.H.; Jain, H.; Bucher, M.; Cord, M.; Pérez, P. ADVENT: Adversarial entropy minimization for domain adaptation in semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 2517–2526.

28. Hoffman, J.; Tzeng, E.; Park, T.; Zhu, J.Y.; Isola, P.; Saenko, K.; Efros, A.; Darrell, T. CyCADA: Cycle-consistent adversarial domain adaptation. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 1989–1998. Available online: https://www.aminer.org/pub/5a260c8617c44a4ba8a326ee/cycada-cycle-consistent-adversarial-domain-adaptation (accessed on 30 December 2022).

29. Luc, P.; Couprie, C.; Chintala, S.; Verbeek, J. Semantic segmentation using adversarial networks. arXiv 2016, arXiv:1611.08408.

30. Hung, W.C.; Tsai, Y.H.; Liou, Y.T.; Lin, Y.Y.; Yang, M.H. Adversarial Learning for Semi-Supervised Semantic Segmentation. In Proceedings of the British Machine Vision Conference, Newcastle, UK, 3–6 September 2018.

31. Rudin, L.I.; Osher, S.; Fatemi, E. Nonlinear total variation based noise removal algorithms. Phys. D Nonlinear Phenom. 1992, 60, 259–268.

32. Hecht-Nielsen, R. Theory of the backpropagation neural network in Neural Networks for Perception; Academic Press: Cambridge, MA, USA, 1992; pp. 65–93.

33. Janocha, K.; Czarnecki, W.M. On loss functions for deep neural networks in classification. arXiv 2017, arXiv:1702.05659.

34. Javanmardi, M.; Sajjadi, M.; Liu, T.; Tasdizen, T. Unsupervised total variation loss for semi-supervised deep learning of semantic segmentation. arXiv 2016, arXiv:1605.01368.

35. Bengio, Y.; Louradour, J.; Collobert, R.; Weston, J. Curriculum learning. In Proceedings of the 26th Annual International Conference on Machine Learning, Montreal, QC, Canada, 14–18 June 2009; pp. 41–48.

36. Wojna, Z.; Ferrari, V.; Guadarrama, S.; Silberman, N.; Chen, L.C.; Fathi, A.; Uijlings, J. The devil is in the decoder: Classification, regression and GANs. Int. J. Comput. Vis. 2019, 127, 1694–1706.

37. Maas, A.L.; Hannun, A.Y.; Ng, A.Y. Rectifier nonlinearities improve neural network acoustic models. In Proceedings of the International Conference on Machine Learning, Atlanta, GA, USA, 16–21 June 2013; Volume 30, p. 3.

38. Ioffe, S.; Szegedy, C. Batch normalization: Accelerating deep network training by reducing internal covariate shift. In Proceedings of the International Conference on Machine Learning, Lille, France, 6–11 July 2015; pp. 448–456.

39. Dosovitskiy, A.; Ros, G.; Codevilla, F.; Lopez, A.; Koltun, V. CARLA: An open urban driving simulator. In Proceedings of the Conference on Robot Learning, Mountain View, CA, USA, 13–15 November 2017; pp. 1–16.

40. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015.

Semantic Segmentation Inpainting Network

| Layer | Kernel Size | Stride | Output Size | Skip Connections |
|---|---|---|---|---|
| Input | | | C × 256 × 256 | |
| Encoder-conv 1-1 | 5 × 5 | 1 × 1 | 64 × 256 × 256 | |
| Encoder-conv 1-2 | 3 × 3 | 1 × 1 | 64 × 256 × 256 | |
| Encoder-conv 1-3 | 3 × 3 | 1 × 1 | 64 × 256 × 256 | Decoder-conv 1-1 |
| Encoder-conv 2-1 | 3 × 3 | 2 × 2 | 128 × 128 × 128 | |
| Encoder-conv 2-2 | 3 × 3 | 1 × 1 | 128 × 128 × 128 | |
| Encoder-conv 2-3 | 3 × 3 | 1 × 1 | 128 × 128 × 128 | Decoder-conv 2-1 |
| Encoder-conv 3-1 | 3 × 3 | 2 × 2 | 256 × 64 × 64 | |
| Encoder-conv 3-2 | 3 × 3 | 1 × 1 | 256 × 64 × 64 | |
| Encoder-conv 3-3 | 3 × 3 | 1 × 1 | 256 × 64 × 64 | Decoder-conv 3-1 |
| Encoder-conv 4-1 | 3 × 3 | 2 × 2 | 512 × 32 × 32 | |
| Encoder-conv 4-2 | 3 × 3 | 1 × 1 | 512 × 32 × 32 | |
| Encoder-conv 4-3 | 3 × 3 | 1 × 1 | 512 × 32 × 32 | |
| Upsampling | | | 512 × 64 × 64 | |
| Decoder-conv 1-1 | 3 × 3 | 1 × 1 | 256 × 64 × 64 | Encoder-conv 1-3 |
| Decoder-conv 1-2 | 3 × 3 | 1 × 1 | 256 × 64 × 64 | |
| Decoder-conv 1-3 | 3 × 3 | 1 × 1 | 256 × 64 × 64 | |
| Upsampling | | | 256 × 128 × 128 | |
| Decoder-conv 2-1 | 3 × 3 | 1 × 1 | 128 × 128 × 128 | Encoder-conv 2-3 |
| Decoder-conv 2-2 | 3 × 3 | 1 × 1 | 128 × 128 × 128 | |
| Decoder-conv 2-3 | 3 × 3 | 1 × 1 | 128 × 128 × 128 | |
| Upsampling | | | 128 × 256 × 256 | |
| Decoder-conv 3-1 | 3 × 3 | 1 × 1 | 64 × 256 × 256 | Encoder-conv 3-3 |
| Decoder-conv 3-2 | 3 × 3 | 1 × 1 | 64 × 256 × 256 | |
| Output | 3 × 3 | 1 × 1 | C × 256 × 256 | |
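The output sizes in the generator table follow from standard convolution arithmetic. The pure-Python sketch below re-derives them, assuming "same"-style padding of `kernel // 2` and 2× upsampling that keeps the channel count; skip-connection concatenation is ignored because it changes only a layer's input channels, not its listed output size. `conv_out`, `GENERATOR`, and `trace_generator` are hypothetical helper names.

```python
def conv_out(size, kernel, stride):
    """Output height/width of a convolution with padding kernel // 2 (assumed)."""
    pad = kernel // 2
    return (size + 2 * pad - kernel) // stride + 1


# (layer name, kernel, stride, output channels); None marks a 2x upsampling step.
GENERATOR = [
    ("Encoder-conv 1-1", 5, 1, 64), ("Encoder-conv 1-2", 3, 1, 64), ("Encoder-conv 1-3", 3, 1, 64),
    ("Encoder-conv 2-1", 3, 2, 128), ("Encoder-conv 2-2", 3, 1, 128), ("Encoder-conv 2-3", 3, 1, 128),
    ("Encoder-conv 3-1", 3, 2, 256), ("Encoder-conv 3-2", 3, 1, 256), ("Encoder-conv 3-3", 3, 1, 256),
    ("Encoder-conv 4-1", 3, 2, 512), ("Encoder-conv 4-2", 3, 1, 512), ("Encoder-conv 4-3", 3, 1, 512),
    ("Upsampling", None, None, None),
    ("Decoder-conv 1-1", 3, 1, 256), ("Decoder-conv 1-2", 3, 1, 256), ("Decoder-conv 1-3", 3, 1, 256),
    ("Upsampling", None, None, None),
    ("Decoder-conv 2-1", 3, 1, 128), ("Decoder-conv 2-2", 3, 1, 128), ("Decoder-conv 2-3", 3, 1, 128),
    ("Upsampling", None, None, None),
    ("Decoder-conv 3-1", 3, 1, 64), ("Decoder-conv 3-2", 3, 1, 64),
]


def trace_generator(num_classes, size=256):
    """Return [(layer, (channels, height, width)), ...] for a C x size x size input."""
    channels, shapes = num_classes, []
    for name, kernel, stride, out_channels in GENERATOR + [("Output", 3, 1, num_classes)]:
        if kernel is None:
            size *= 2  # upsampling doubles height and width, channels unchanged
        else:
            size = conv_out(size, kernel, stride)
            channels = out_channels
        shapes.append((name, (channels, size, size)))
    return shapes


for layer, shape in trace_generator(num_classes=9):
    print(f"{layer:18s} {shape}")
```

Here `num_classes=9` only mirrors the nine classes of the per-class results table; any C works, since only the final layer depends on it.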

Discriminator Network

| Layer | Kernel Size | Stride | Output Size |
|---|---|---|---|
| Input | | | C × 256 × 256 |
| Encoder-conv 1 | 5 × 5 | 2 × 2 | 64 × 128 × 128 |
| Encoder-conv 2 | 5 × 5 | 2 × 2 | 128 × 64 × 64 |
| Encoder-conv 3 | 5 × 5 | 2 × 2 | 256 × 32 × 32 |
| Encoder-conv 4 | 5 × 5 | 2 × 2 | 512 × 16 × 16 |
| Encoder-conv 5 | 5 × 5 | 2 × 2 | 512 × 8 × 8 |
| Encoder-conv 6 | 5 × 5 | 2 × 2 | 512 × 4 × 4 |
| Fully Connected | | | 1024 |
| Output | | | 1 |
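The discriminator sizes can be checked the same way: six 5 × 5, stride-2 convolutions halve a 256 × 256 input six times to 4 × 4, so the fully connected layer receives 512 × 4 × 4 = 8192 features. The sketch below, again assuming padding of `kernel // 2` and one bias per output unit, computes that size and the parameter counts of the fully connected and output layers. `discriminator_head` and `DISC_CHANNELS` are hypothetical names.

```python
def conv_out(size, kernel, stride):
    """Output height/width of a convolution with padding kernel // 2 (assumed)."""
    pad = kernel // 2
    return (size + 2 * pad - kernel) // stride + 1


DISC_CHANNELS = [64, 128, 256, 512, 512, 512]  # Encoder-conv 1..6 from the table


def discriminator_head(size=256, kernel=5, stride=2, fc_units=1024):
    """Spatial size after the convs, flattened features, and FC/output parameter counts."""
    for _ in DISC_CHANNELS:
        size = conv_out(size, kernel, stride)
    flat = DISC_CHANNELS[-1] * size * size  # flattened conv features
    fc_params = flat * fc_units + fc_units  # weights + biases of the 1024-unit layer
    out_params = fc_units * 1 + 1           # weights + bias of the scalar output
    return size, flat, fc_params, out_params


print(discriminator_head())  # (4, 8192, 8389632, 1025)
```

The single 1024-unit layer alone holds roughly 8.4 million parameters, which is worth keeping in mind when comparing model sizes.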

| Setting | Method | Pixel Accuracy | F1 | mIOU | FPS |
|---|---|---|---|---|---|
| Unsupervised | DCGAN [20] | 66.44 | 38.09 | 30.64 | 18.2 |
| | SegAdv [29] | 67.79 | 38.33 | 32.11 | 17.4 |
| | AdvEnt [27] | 73.31 | 52.11 | 42.87 | 17.9 |
| | DCGAN + Our Preprocessing (M1) | 67.95 | 39.10 | 30.72 | 16.7 |
| | AdvEnt + Our Preprocessing (M2) | 73.57 | 51.25 | 41.61 | 16.5 |
| | Ours | | | | 16.1 |
| Supervised | DCGAN [20] | 86.70 | 62.31 | 56.32 | 18.2 |
| | SegAdv [29] | | 62.25 | 57.17 | 17.4 |
| | AdvEnt [27] | 86.39 | 63.02 | 57.53 | 17.9 |
| | Ours | 86.56 | | | 16.1 |
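The table reports pixel accuracy, F1, and mIOU in percent. Because their definitions are not given here, the NumPy sketch below uses the common confusion-matrix formulas; macro-averaging F1 and IoU over the classes that occur is an assumption about the paper's convention, and the function names are hypothetical.

```python
import numpy as np


def confusion_matrix(pred, target, num_classes):
    """Rows index the ground-truth class, columns the predicted class."""
    idx = target.ravel().astype(np.int64) * num_classes + pred.ravel().astype(np.int64)
    counts = np.bincount(idx, minlength=num_classes * num_classes)
    return counts.reshape(num_classes, num_classes)


def segmentation_metrics(cm):
    """Pixel accuracy, macro F1 and mean IoU (all as fractions in [0, 1])."""
    tp = np.diag(cm).astype(float)
    fp = cm.sum(axis=0) - tp  # predicted as the class but belonging elsewhere
    fn = cm.sum(axis=1) - tp  # belonging to the class but predicted elsewhere
    pixel_accuracy = tp.sum() / cm.sum()
    with np.errstate(divide="ignore", invalid="ignore"):
        iou = tp / (tp + fp + fn)
        f1 = 2 * tp / (2 * tp + fp + fn)
    # Classes absent from both prediction and target give NaN and are skipped.
    return pixel_accuracy, np.nanmean(f1), np.nanmean(iou)


pred = np.array([[0, 0], [1, 1]])
target = np.array([[0, 1], [1, 1]])
cm = confusion_matrix(pred, target, num_classes=2)
pa, f1, miou = segmentation_metrics(cm)
print(f"PA={100 * pa:.2f} F1={100 * f1:.2f} mIOU={100 * miou:.2f}")  # PA=75.00 F1=73.33 mIOU=58.33
```

The toy example shows why the three numbers diverge: a single mislabeled pixel costs 25 points of pixel accuracy but hurts the smaller class's IoU much more, which pulls the class-averaged mIOU lower.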

| Method | Sky | Building | Fence | Pole | Road | Sidewalk | Vegetation | Wall | Sign | mIOU |
|---|---|---|---|---|---|---|---|---|---|---|
| DCGAN [20] | 21.2 | 37.5 | 11.1 | 26.0 | 77.8 | 37.4 | 19.4 | 23.4 | 21.6 | 30.6 |
| SegAdv [29] | 2.1 | 34.8 | 3.5 | 29.4 | 76.2 | 29.0 | 26.4 | 25.5 | 52.5 | 31.1 |
| AdvEnt [27] | 48.3 | 44.7 | 9.6 | 30.4 | 78.8 | 31.5 | 42.9 | | | |

| Ours | 40.3 | 32.4 | 42.5 |
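For the DCGAN [20] row, the mIOU value equals the unweighted mean of the nine per-class IoUs. A quick arithmetic check with the values from the table:

```python
# Per-class IoU (%) for DCGAN [20], copied from the per-class table.
dcgan_iou = {
    "Sky": 21.2, "Building": 37.5, "Fence": 11.1, "Pole": 26.0, "Road": 77.8,
    "Sidewalk": 37.4, "Vegetation": 19.4, "Wall": 23.4, "Sign": 21.6,
}

miou = sum(dcgan_iou.values()) / len(dcgan_iou)
print(round(miou, 1))  # 30.6, matching the mIOU column
```

A class-frequency-weighted average would generally give a different number (Road alone would dominate it), so the averaging convention matters when comparing against other papers.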

| Method | Pixel Accuracy | F1 | mIOU |
|---|---|---|---|
| DCGAN [20] | 54.63 | 35.89 | 30.47 |
| Ours | | | |

| Maximum Value | N(m, σ) | Pixel Accuracy |
|---|---|---|
| 1 | 0.01 | 67.32 |
| 0.99 | 0.01 | 72.28 |
| 0.97 | 0.01 | |
| 0.95 | 0.01 | 71.27 |
| 0.90 | 0.01 | 68.78 |
| 0.97 | 0 | 73.62 |
| 0.97 | 0.010 | |
| 0.97 | 0.025 | 74.35 |
| 0.97 | 0.035 | 73.14 |
| 0.97 | 0.050 | 73.47 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ahn, W.-J.; Kim, D.-W.; Kang, T.-K.; Pae, D.-S.; Lim, M.-T. Unsupervised Semantic Segmentation Inpainting Network Using a Generative Adversarial Network with Preprocessing. Appl. Sci. 2023, 13, 781. https://doi.org/10.3390/app13020781

Ahn W-J, Kim D-W, Kang T-K, Pae D-S, Lim M-T. Unsupervised Semantic Segmentation Inpainting Network Using a Generative Adversarial Network with Preprocessing. Applied Sciences. 2023; 13(2):781. https://doi.org/10.3390/app13020781

Chicago/Turabian Style: Ahn, Woo-Jin, Dong-Won Kim, Tae-Koo Kang, Dong-Sung Pae, and Myo-Taeg Lim. 2023. "Unsupervised Semantic Segmentation Inpainting Network Using a Generative Adversarial Network with Preprocessing" Applied Sciences 13, no. 2: 781. https://doi.org/10.3390/app13020781

APA Style: Ahn, W.-J., Kim, D.-W., Kang, T.-K., Pae, D.-S., & Lim, M.-T. (2023). Unsupervised Semantic Segmentation Inpainting Network Using a Generative Adversarial Network with Preprocessing. Applied Sciences, 13(2), 781. https://doi.org/10.3390/app13020781