Depth-Adaptive Deep Neural Network Based on Learning Layer Relevance Weights

Abstract

1. Introduction

1.1. Motivation

1.2. Problem Statement

1.3. Contribution

2. Literature Review

2.1. Dynamic Architectures

- Find the most similar pair of neurons whose cosine distance is minimal and merge them.

- Add a new neuron that minimizes the following cost function:
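The merge step in the list above can be sketched in a few lines of Python. This is a minimal illustration, not the reviewed method's implementation: the function names, the toy weight vectors, and the merge rule (element-wise mean of the two weight vectors) are assumptions made here. The cost function used when adding a neuron is not reproduced in this section, so the sketch covers only the merge.

```python
import math
from itertools import combinations


def cosine_distance(u, v):
    """Return 1 - cos(u, v) for two non-zero weight vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return 1.0 - dot / (norm_u * norm_v)


def merge_most_similar(neurons):
    """Merge the pair of neurons whose cosine distance is minimal.

    `neurons` is a list of incoming-weight vectors, one per neuron.
    Assumption: the merged neuron is the element-wise mean of the pair.
    Returns the new list of neurons and the indices of the merged pair.
    """
    i, j = min(
        combinations(range(len(neurons)), 2),
        key=lambda p: cosine_distance(neurons[p[0]], neurons[p[1]]),
    )
    merged = [(a + b) / 2.0 for a, b in zip(neurons[i], neurons[j])]
    kept = [w for k, w in enumerate(neurons) if k not in (i, j)]
    return kept + [merged], (i, j)


# Toy example: neurons 0 and 2 point in almost the same direction.
weights = [[1.0, 0.0], [0.0, 1.0], [0.9, 0.1]]
new_weights, pair = merge_most_similar(weights)
```

Here `pair` identifies neurons 0 and 2 as the closest pair, and `new_weights` holds the untouched neuron followed by the merged one.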

2.2. Layer Bypassing and Combination

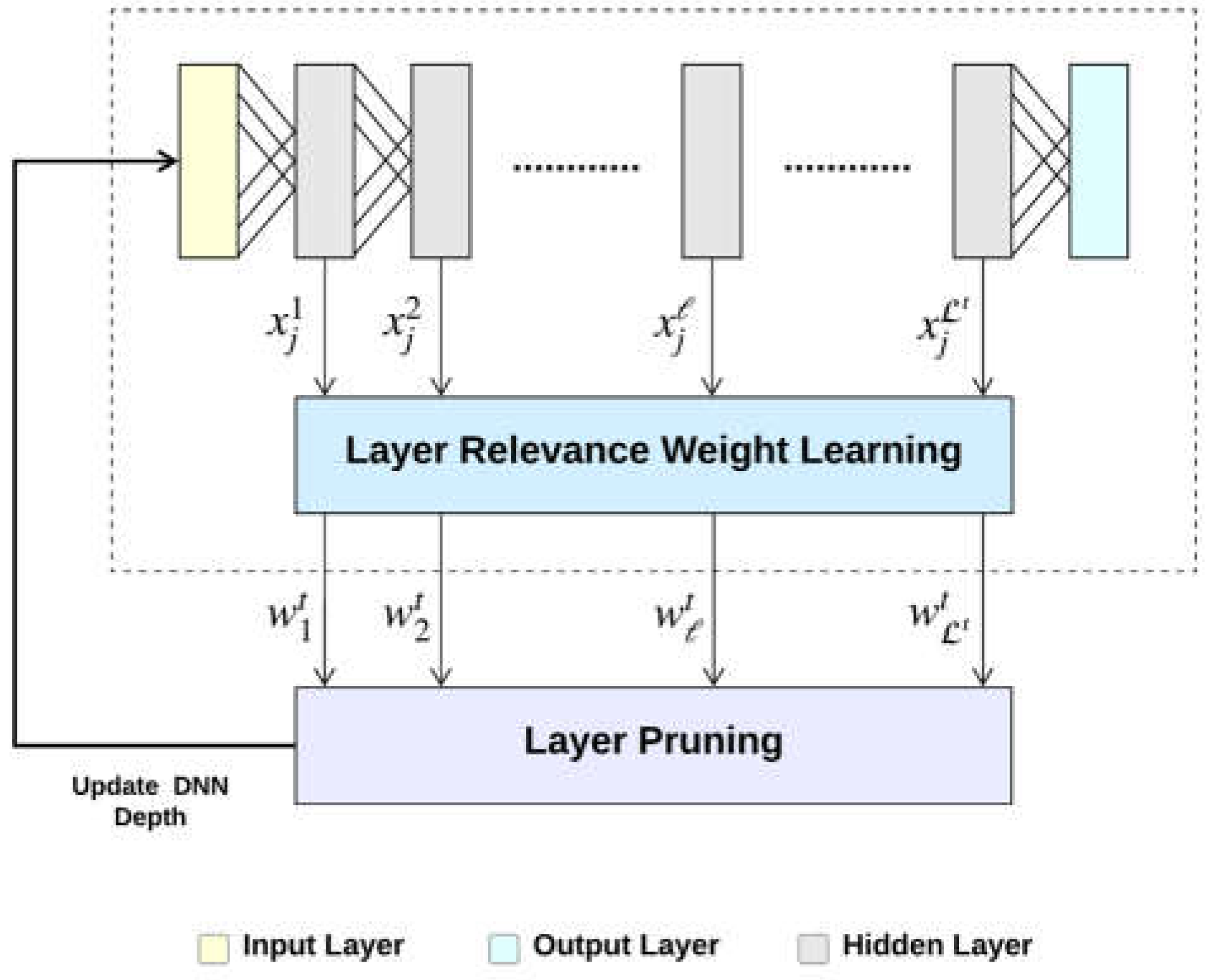

3. Depth-Adaptive Deep Neural Network

3.1. Class-Independent ANNA

3.2. Class-Dependent ANNA

Algorithm 1: Layer Fuzzy Relevance Weight Learning Algorithm

Input:

- the mapping of the input instances that belong to the current batch
- the membership coefficients according to the training set
- the sum of the intra-class distances at the previous batch

Output:

- the learned layer fuzzy relevance weights at the current iteration

Steps:

- Compute the distance for all pairs using (7).
- Compute the intra-class distance for each layer using (14) for the class-independent approach or (19) for the class-dependent approach.
- Compute the fuzzy relevance weight for each layer using (15) for the class-independent approach or (20) for the class-dependent approach.
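The three steps of Algorithm 1 can be illustrated with a short Python sketch for the class-independent case. Equations (7), (14) and (15) are not reproduced in this section, so the sketch substitutes common choices that may differ from the paper's: squared Euclidean distance between instance mappings, an intra-class distance weighted by the product of class memberships, and a weight inversely proportional to the intra-class distance, normalized to sum to 1. The fuzzifier `q`, the guard `eps`, and all function names are hypothetical.

```python
def pairwise_sq_dist(points):
    """Squared Euclidean distance between every pair of mapped instances
    (assumed stand-in for the paper's pairwise distance)."""
    n = len(points)
    return [
        [sum((a - b) ** 2 for a, b in zip(points[i], points[j])) for j in range(n)]
        for i in range(n)
    ]


def intra_class_distance(points, memberships, previous=0.0):
    """Membership-weighted sum of pairwise distances for one layer.

    `memberships[i][c]` is the membership of instance i in class c.
    `previous` carries over the sum accumulated on earlier batches.
    """
    d = pairwise_sq_dist(points)
    n, n_classes = len(points), len(memberships[0])
    total = previous
    for c in range(n_classes):
        for i in range(n):
            for j in range(n):
                total += memberships[i][c] * memberships[j][c] * d[i][j]
    return total


def relevance_weights(intra, q=2.0, eps=1e-12):
    """Assumed weight rule: compact layers (small distance) get large weights."""
    inv = [(1.0 / (d + eps)) ** (1.0 / (q - 1.0)) for d in intra]
    total = sum(inv)
    return [v / total for v in inv]


# Two layers, four instances, two crisp classes (instances 0-1 and 2-3).
memberships = [[1.0, 0.0], [1.0, 0.0], [0.0, 1.0], [0.0, 1.0]]
layer_a = [[0.0], [1.0], [0.0], [1.0]]   # classes overlap in this mapping
layer_b = [[0.0], [0.1], [1.0], [1.1]]   # classes are compact in this mapping
intra = [intra_class_distance(m, memberships) for m in (layer_a, layer_b)]
weights = relevance_weights(intra)
```

Under these assumptions, the layer whose mapping groups same-class instances more tightly (`layer_b`) receives the larger relevance weight.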

3.3. Layer Pruning

Algorithm 2: Layer Pruning Algorithm

Input:

- the learned layers’ fuzzy relevance weights at the current iteration

Output:

- the pruned network with the learned network depth

Steps:

- Prune all layers after
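A pruning step of this kind can be sketched as follows. The cut-off layer is not legible in Algorithm 2 as it appears here, so the sketch assumes, purely for illustration, that the network is truncated after the layer with the largest relevance weight. The function name and the list-of-layers representation are hypothetical.

```python
def prune_layers(layers, weights):
    """Keep layers up to and including the most relevant one.

    Assumption: the cut-off is the layer with the largest relevance weight;
    the source text does not state the cut-off explicitly.
    """
    if len(layers) != len(weights):
        raise ValueError("one relevance weight is required per layer")
    best = max(range(len(weights)), key=weights.__getitem__)
    return layers[: best + 1]


pruned = prune_layers(["fc1", "fc2", "fc3", "fc4"], [0.1, 0.6, 0.2, 0.1])
```

In this toy call the second layer carries the largest weight, so `pruned` keeps only `fc1` and `fc2`.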

Algorithm 3: Depth-Adaptive Deep Neural Network

Input:

- input instances
- the ground truth of the input instances
- initialized deep feedforward neural network with initial depth

Output:

- trained deep feedforward neural network with learned depth

Steps:

- REPEAT
  - Select a batch from the input instances.
  - Train the network.
  - Learn the layer fuzzy relevance weights using Algorithm 1.
  - Prune layers using Algorithm 2.
- UNTIL convergence
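The control flow of Algorithm 3 can be sketched as a loop that takes the training, weight-learning, and pruning steps as callables. This is a skeleton under stated assumptions rather than the authors' implementation: the convergence test (depth unchanged for `max_stable` consecutive batches), the callable signatures, and the toy stand-ins in the example are all hypothetical.

```python
def depth_adaptive_training(batches, layers, train_step, learn_weights, prune,
                            max_stable=3):
    """Train, learn layer relevance weights, and prune, batch by batch.

    Assumed convergence criterion: stop once the depth has stayed the same
    for `max_stable` consecutive batches.
    """
    stable = 0
    for batch in batches:
        train_step(layers, batch)               # update the network parameters
        weights = learn_weights(layers, batch)  # Algorithm 1
        pruned = prune(layers, weights)         # Algorithm 2
        stable = stable + 1 if len(pruned) == len(layers) else 0
        layers = pruned
        if stable >= max_stable:
            break
    return layers


# Toy stand-ins: training is a no-op and the weights always peak at layer 2.
def toy_train(layers, batch):
    pass

def toy_weights(layers, batch):
    return [0.1, 0.6, 0.2, 0.1][: len(layers)]

def toy_prune(layers, weights):
    best = max(range(len(weights)), key=weights.__getitem__)
    return layers[: best + 1]

final_layers = depth_adaptive_training(
    range(10), ["l1", "l2", "l3", "l4"], toy_train, toy_weights, toy_prune
)
```

Passing the three steps as arguments keeps the loop independent of any particular deep learning framework; in practice they would wrap the framework's optimizer step and the two algorithms above.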

4. Experimental Setting

4.1. Datasets

4.2. Experiment Descriptions

4.2.1. Experiment 1: Empirical Investigation of the Effect of the Plain Network Depth on the Model Performance

4.2.2. Experiment 2: Performance Assessment of ANNA Approaches Applied to Plain Networks

4.2.3. Experiment 3: Performance Assessment of ANNA Approaches Applied to ResNet Plain Models

4.2.4. Experiment 4: Performance Comparison of ANNA Approaches with State-of-the-Art Models

4.3. Implementation

5. Results and Discussion

5.1. Experiment 1: Empirical Investigation of the Effect of the Plain Network Depth on the Model Performance

5.2. Experiment 2: Performance Assessment of ANNA Approaches Applied to Plain Networks

5.3. Experiment 3: Performance Assessment of ANNA Approaches Applied to ResNet Plain Models

5.4. Experiment 4: Performance Comparison of ANNA Approaches with State-of-the-Art Models

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Layer Name | Output Size | 50 Hidden Layers |
|---|---|---|
| Conv1 | | |
| Conv2_x | | |
| Output | | |

| Layer Name | Output Size | 18 Layers | 34 Layers |
|---|---|---|---|
| Conv1 | | | |
| Conv2_x | | | |
| Conv3_x | | | |
| Conv4_x | | | |
| Conv5_x | | | |
| Output | | | |

| Model | Accuracy | Error | F1-Score | AUC | Depth |
|---|---|---|---|---|---|
| Plain-18 | 61.04 | 38.96 | 0.55 | 0.85 | 18 layers |
| ResNet-18 | 61.67 | 38.33 | 0.59 | 0.87 | 18 layers |
| Class-Independent ANNA + Plain-18 | 87.82 | 12.18 | 0.87 | 0.98 | 2 layers |
| Class-Dependent ANNA + Plain-18 | 89.9 | 10.1 | 0.9 | 0.99 | 2 layers |
| Plain-34 | 14.48 | 85.52 | 0.07 | 0.5 | 34 layers |
| ResNet-34 | 46.59 | 53.41 | 0.4 | 0.77 | 34 layers |
| Class-Independent ANNA + Plain-34 | 86.18 | 13.82 | 0.86 | 0.98 | 3 layers |
| Class-Dependent ANNA + Plain-34 | 87.97 | 12.03 | 0.88 | 0.98 | 3 layers |
| Class-Independent ANNA + Plain-50 | 87.58 | 12.42 | 0.88 | 0.98 | 2 layers |
| Class-Dependent ANNA + Plain-50 | 86.61 | 13.39 | 0.87 | 0.98 | 2 layers |

| Model | Accuracy | Error | F1-Score | AUC | Depth |
|---|---|---|---|---|---|
| Plain-18 | 44.28 | 55.72 | 0.44 | 0.86 | 18 layers |
| ResNet-18 | 48.2 | 51.8 | 0.47 | 0.88 | 18 layers |
| Class-Independent ANNA + Plain-18 | 56.48 | 43.52 | 0.56 | 0.91 | 1 layer |
| Class-Dependent ANNA + Plain-18 | 56.14 | 43.86 | 0.56 | 0.91 | 1 layer |
| Plain-34 | 26 | 74 | 0.18 | 0.76 | 34 layers |
| ResNet-34 | 49.36 | 50.64 | 0.48 | 0.88 | 34 layers |
| Class-Independent ANNA + Plain-34 | 53.91 | 46.09 | 0.53 | 0.9 | 2 layers |
| Class-Dependent ANNA + Plain-34 | 52.9 | 47.1 | 0.53 | 0.9 | 2 layers |
| Class-Independent ANNA + Plain-50 | 47.71 | 52.29 | 0.45 | 0.87 | 2 layers |
| Class-Dependent ANNA + Plain-50 | 49.2 | 50.8 | 0.47 | 0.88 | 2 layers |

| Model | MNIST Testing Time (s) | CIFAR-10 Testing Time (s) |
|---|---|---|
| Plain-18 | 1.61 | 1.71 |
| ResNet-18 | 1.67 | 1.84 |
| Class-Independent ANNA + Plain-18 | 1.01 | 1.02 |
| Class-Dependent ANNA + Plain-18 | 0.99 | 1.03 |
| Plain-34 | 2.18 | 2.04 |
| ResNet-34 | 2.18 | 1.99 |
| Class-Independent ANNA + Plain-34 | 1.06 | 1.02 |
| Class-Dependent ANNA + Plain-34 | 1.06 | 1.02 |
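To make the testing-time figures above easier to compare, the relative reduction obtained by pruning can be computed directly from the table. The snippet below is a small worked calculation using the MNIST values reported for the plain networks and their class-independent ANNA counterparts; the helper name `relative_reduction` is introduced here for illustration only.

```python
def relative_reduction(baseline, pruned):
    """Percentage reduction of `pruned` with respect to `baseline`."""
    return 100.0 * (baseline - pruned) / baseline


# MNIST testing times in seconds, copied from the table above.
plain_18, anna_plain_18 = 1.61, 1.01
plain_34, anna_plain_34 = 2.18, 1.06

reduction_18 = round(relative_reduction(plain_18, anna_plain_18), 1)  # about 37.3
reduction_34 = round(relative_reduction(plain_34, anna_plain_34), 1)  # about 51.4
```

On these figures, pruning cuts MNIST testing time by roughly 37% for the 18-layer plain network and roughly 51% for the 34-layer one.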

| Model | MNIST Accuracy | MNIST Error | CIFAR-10 Accuracy | CIFAR-10 Error |
|---|---|---|---|---|
| ResNet-18 | 48.2 | 51.8 | 48.2 | 51.8 |
| ResNet-34 | 46.59 | 53.41 | 49.36 | 50.64 |
| AdaNet | 92.45 | 7.55 | 15.7 | 84.3 |
| DenseNet-121 | 24.5 | 75.5 | 49.86 | 50.14 |
| DenseNet-169 | 19.1 | 80.9 | 42.86 | 57.14 |
| A2S2K-Resnet | 40.78 | 59.22 | 31.04 | 68.96 |
| Class-Independent ANNA + Plain-18 | 87.82 | 12.18 | 56.48 | 43.52 |
| Class-Dependent ANNA + Plain-18 | 89.9 | 10.1 | 56.14 | 43.86 |
| Class-Independent ANNA + Plain-34 | 86.18 | 13.82 | 53.91 | 46.09 |
| Class-Dependent ANNA + Plain-34 | 87.97 | 12.03 | 52.9 | 47.1 |
| Class-Independent ANNA + Plain-50 | 87.58 | 12.42 | 47.71 | 52.29 |
| Class-Dependent ANNA + Plain-50 | 86.61 | 13.39 | 49.2 | 50.8 |

| Model | MNIST FLOPs (G) | MNIST Training Time (s) | MNIST Testing Time (s) | CIFAR-10 FLOPs (G) | CIFAR-10 Training Time (s) | CIFAR-10 Testing Time (s) |
|---|---|---|---|---|---|---|
| ResNet-18 | 0.0713 | 23.89 | 1.67 | 0.162 | 22.07 | 1.84 |
| ResNet-34 | 0.147 | 37.88 | 2.18 | 0.326 | 34.2 | 1.99 |
| AdaNet | 0.00953 | 12.3 | 5.92 | 0.00761 | 11.58 | 5.63 |
| DenseNet-121 | 0.102 | 57.5 | 4.12 | 0.117 | 50.43 | 4.16 |
| DenseNet-169 | 0.124 | 80.55 | 5.93 | 0.139 | 71.58 | 5.99 |
| A2S2K-Resnet | 0.0984 | 15.09 | 1.17 | 0.1312 | 16.41 | 1.53 |
| Class-Independent ANNA + Plain-18 | 0.00666 | 292.26 | 1.01 | 0.00695 | 201.94 | 1.02 |
| Class-Dependent ANNA + Plain-18 | 0.00666 | 263.29 | 0.99 | 0.00695 | 194.09 | 1.03 |
| Class-Independent ANNA + Plain-34 | 0.0114 | 523.73 | 1.06 | 0.0129 | 357 | 1.02 |
| Class-Dependent ANNA + Plain-34 | 0.0114 | 412.88 | 1.06 | 0.0129 | 366.15 | 1.02 |
| Class-Independent ANNA + Plain-50 | 0.0155 | 659.91 | 1.0 | 0.0214 | 556.11 | 1.01 |
| Class-Dependent ANNA + Plain-50 | 0.0155 | 664.83 | 1.01 | 0.0214 | 554.56 | 1.02 |


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Alturki, A.; Bchir, O.; Ben Ismail, M.M. Depth-Adaptive Deep Neural Network Based on Learning Layer Relevance Weights. Appl. Sci. 2023, 13, 398. https://doi.org/10.3390/app13010398